2.5D Deep Learning and Machine Learning for Discriminative DLBCL and IDC with Radiomics on PET/CT

Abstract

1. Introduction

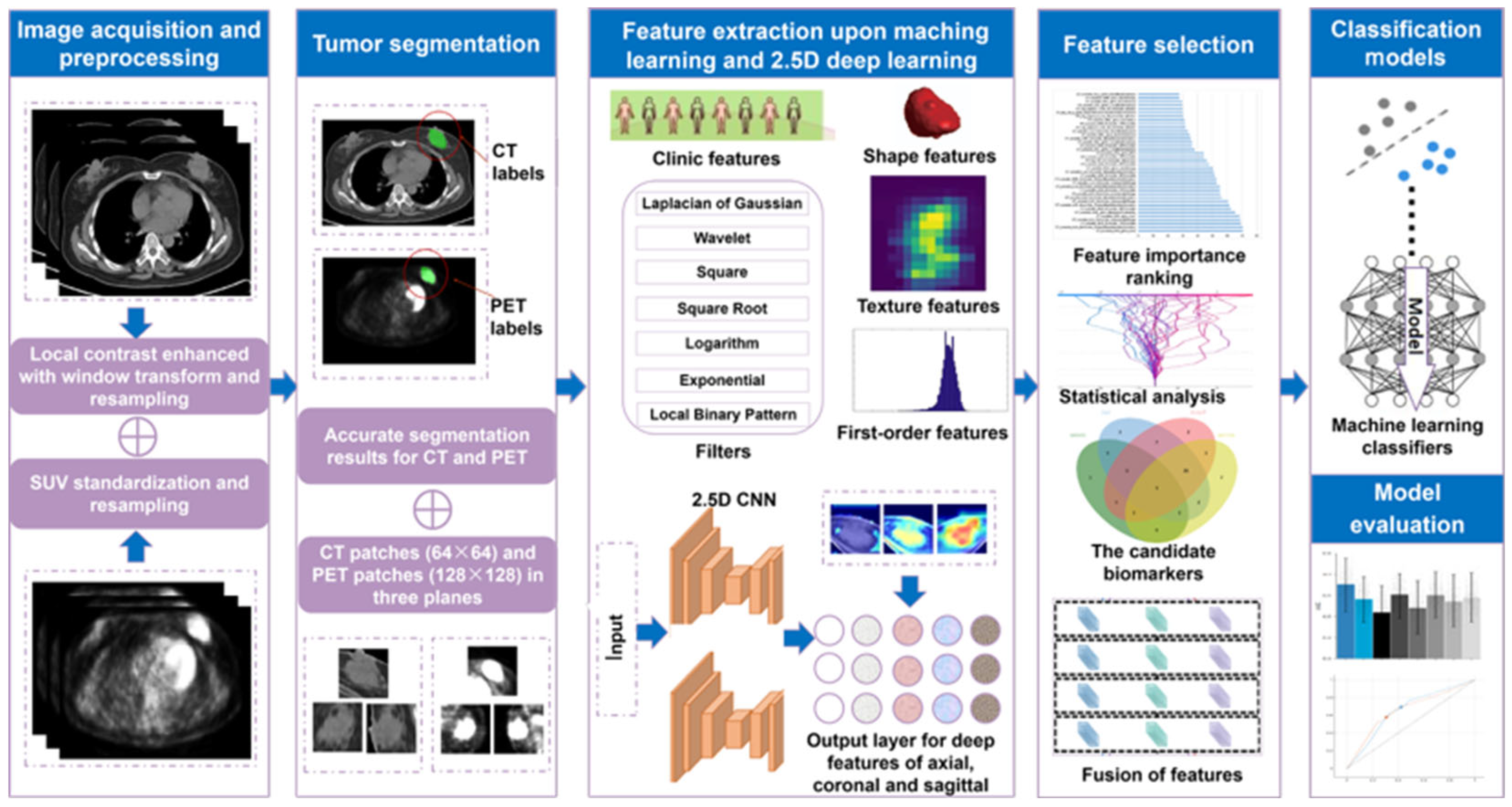

2. Materials and Methods

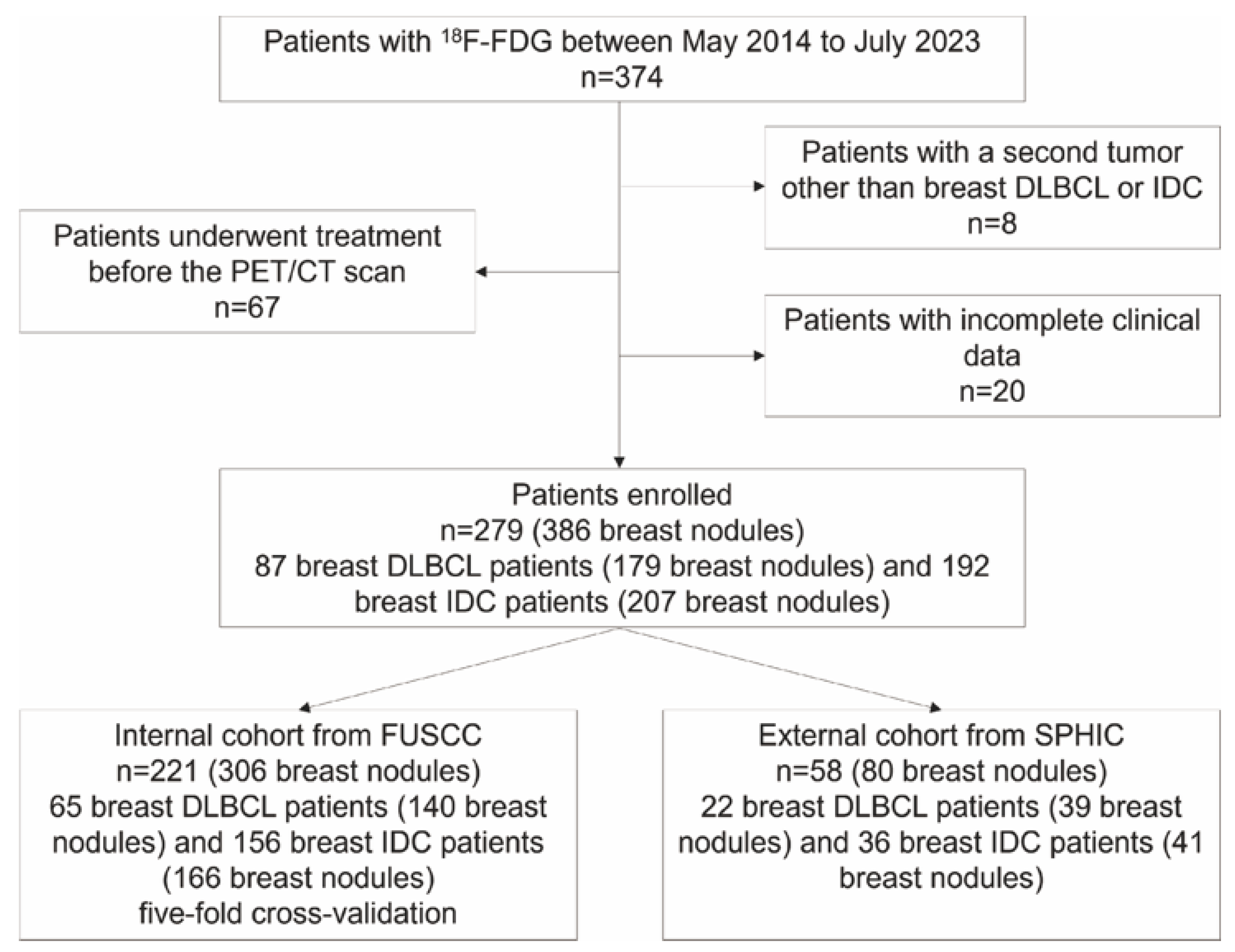

2.1. Patient Selection

2.2. Acquisitions and Preprocessing

2.3. Tumor Segmentation

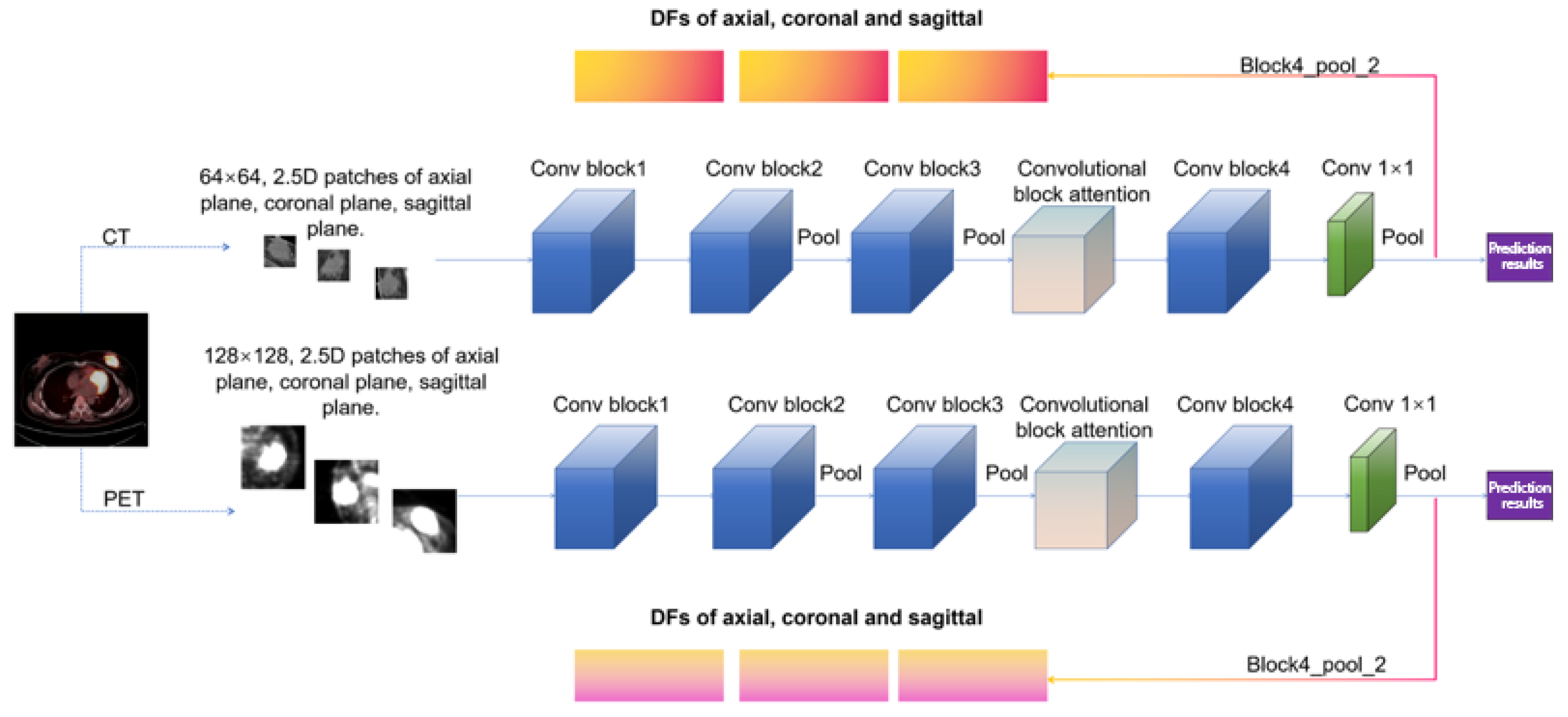

2.4. Feature Extraction

2.5. Feature Selection

2.6. Model Development and Evaluation

2.7. Model Interpretation

2.8. Statistical Analysis

3. Results

3.1. Study Population

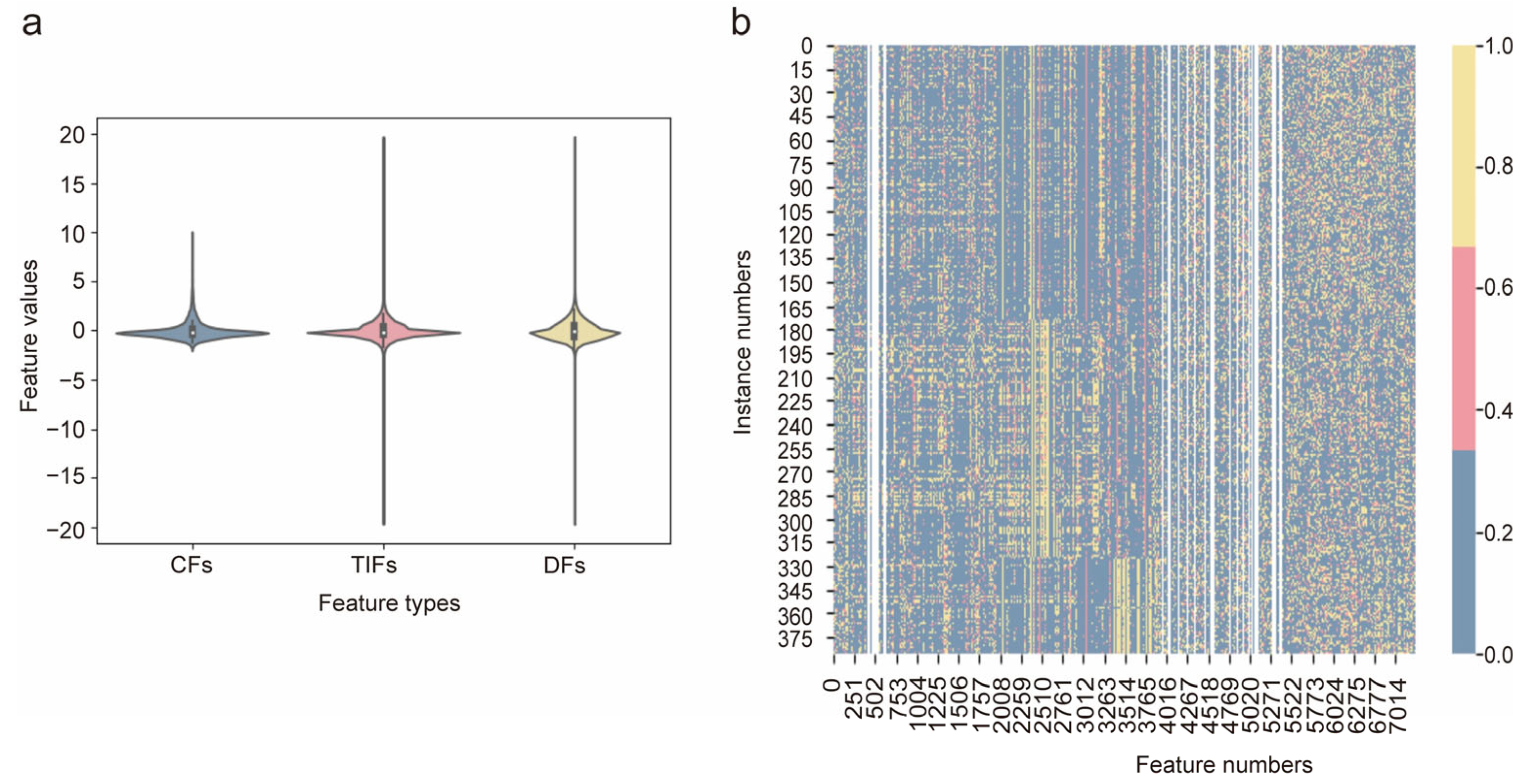

3.2. Feature Analysis

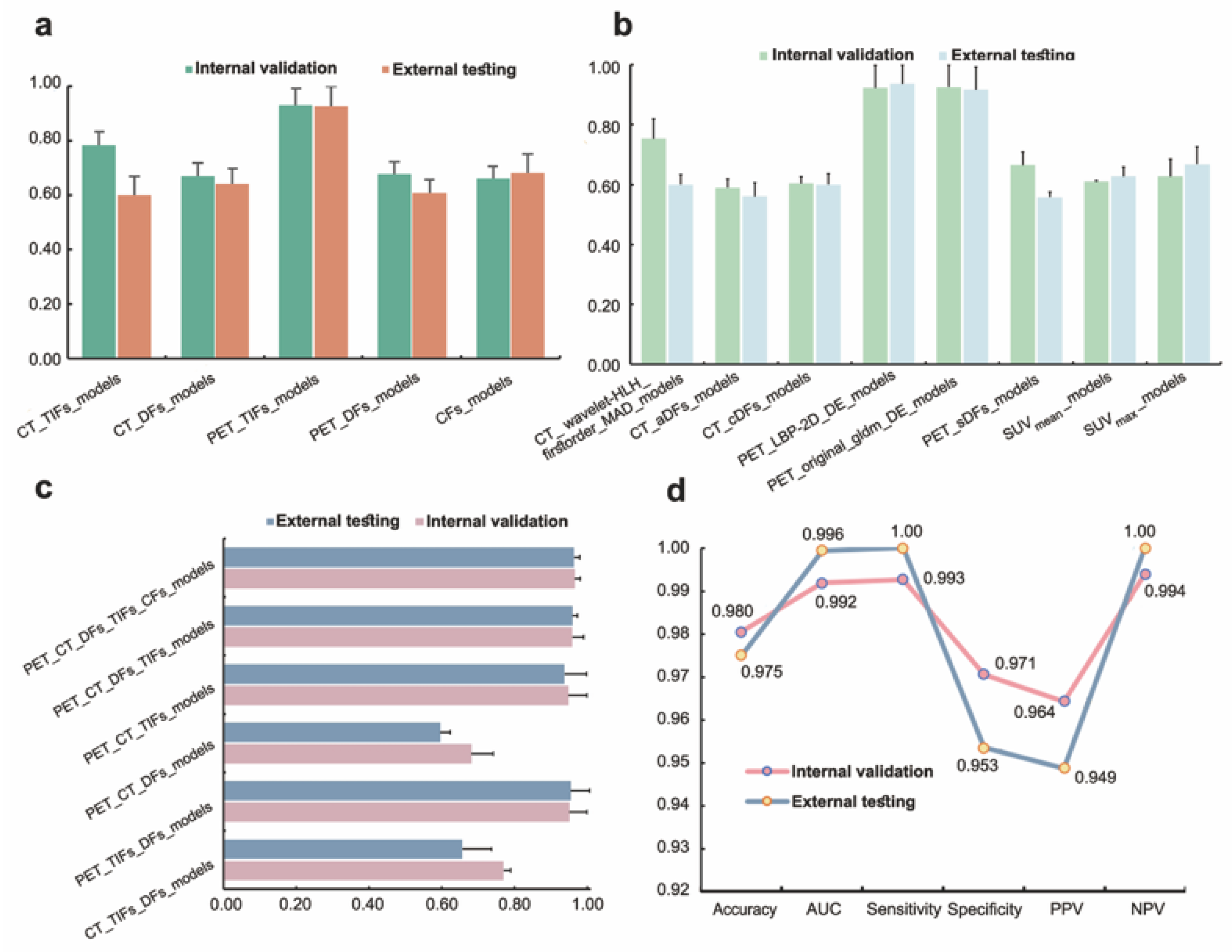

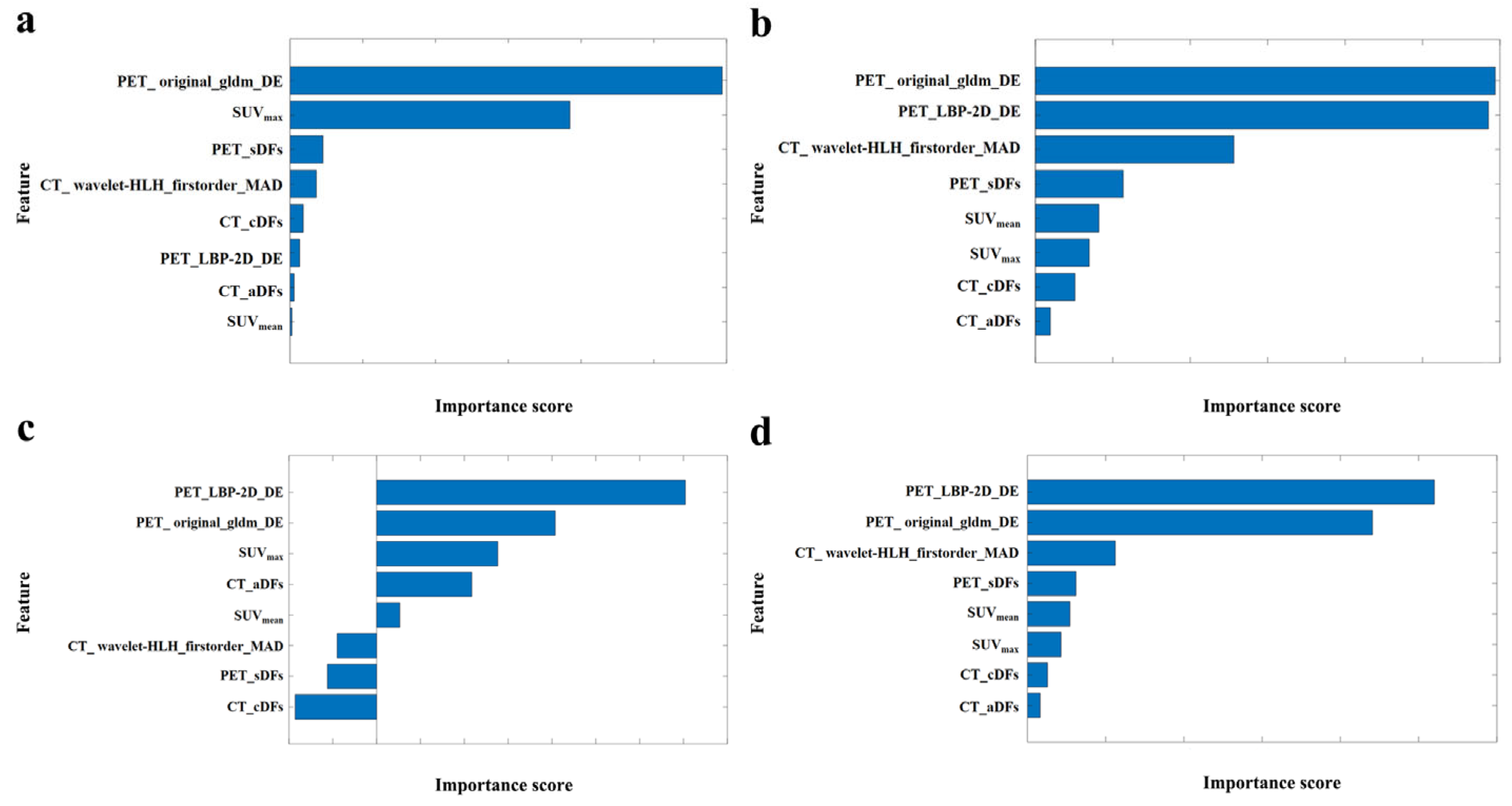

3.3. Model Performance

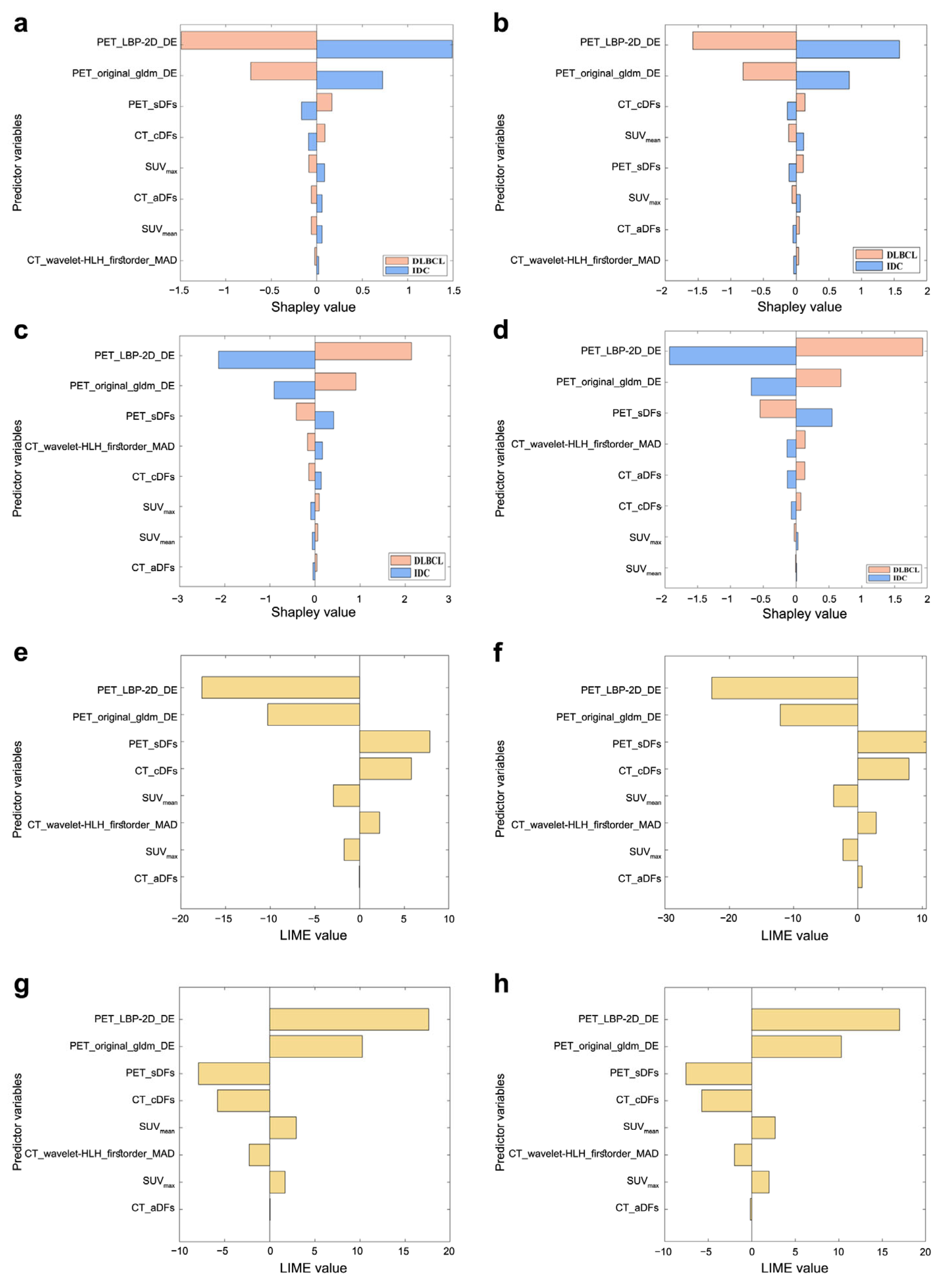

3.4. Model Interpretation

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| 18F-FDG | 18F-fluorodeoxyglucose |
| AI | Artificial intelligence |
| AUC | Area under the receiver operating characteristic curve |
| CFs | Clinical features |
| CI | Confidence interval |
| DFs | 2.5D deep features |
| DLBCL | Diffuse large B-cell lymphoma |
| FUSCC | Fudan University Shanghai Cancer Center |
| IDC | Invasive ductal carcinoma |
| LIME | Local interpretable model-agnostic explanations |
| ML | Machine learning |
| MRI | Magnetic resonance imaging |
| MTV | Metabolic tumor volume |
| NPV | Negative predictive value |
| PET/CT | Positron emission tomography/computed tomography |
| PPV | Positive predictive value |
| ROC | Receiver operating characteristic |
| SHAP | Shapley additive explanations |
| SPHIC | Shanghai Proton and Heavy Ion Center |
| SUV | Standardized uptake value |
| TIFs | Traditional image features |
| TLG | Total lesion glycolysis |

References

1. Rezkallah, E.; Elsaify, A.; Tin, S.M.; Dey, D.; Elsaify, W.M. Breast lymphoma: General review. Breast Dis. 2023, 42, 197–205.

2. Sakhri, S.; Aloui, M.; Bouhani, M.; Bouaziz, H.; Kamoun, S.; Slimene, M.; Ben Dhieb, T. Primary breast lymphoma: A case series and review of the literature. J. Med. Case Rep. 2023, 17, 290.

3. McDonald, E.S.; Clark, A.S.; Tchou, J.; Zhang, P.; Freedman, G.M. Clinical diagnosis and management of breast cancer. J. Nucl. Med. 2016, 57, 9S–16S.

4. Nicholson, B.T.; Bhatti, R.M.; Glassman, L. Extranodal lymphoma of the breast. Radiol. Clin. 2016, 54, 711–726.

5. Sarhangi, N.; Hajjari, S.; Heydari, S.F.; Ganjizadeh, M.; Rouhollah, F.; Hasanzad, M. Breast cancer in the era of precision medicine. Mol. Biol. Rep. 2022, 49, 10023–10037.

6. Breese, R.O.; Friend, K. Thirteen-Centimeter Breast Lymphoma. Am. Surg. 2022, 88, 1891–1892.

7. Ou, X.; Zhang, J.; Wang, J.; Pang, F.; Wang, Y.; Wei, X.; Ma, X. Radiomics based on 18F-FDG PET/CT could differentiate breast carcinoma from breast lymphoma using machine-learning approach: A preliminary study. Cancer Med. 2020, 9, 496–506.

8. Yoneyama, K.; Nakagawa, M.; Hara, A. Primary lymphoma of the breast: A case report and review of the literature. Radiol. Case Rep. 2021, 16, 55–61.

9. Solanki, M.; Visscher, D. Pathology of breast cancer in the last half century. Hum. Pathol. 2020, 95, 137–148.

10. Tagliafico, A.S.; Piana, M.; Schenone, D.; Lai, R.; Massone, A.M.; Houssami, N. Overview of radiomics in breast cancer diagnosis and prognostication. Breast 2020, 49, 74–80.

11. Chow, J.C.L. Recent Advances in Biomedical Imaging for Cancer Diagnosis and Therapy. In Multimodal Biomedical Imaging Techniques; Kalarikkal, N., Bhadrapriya, B.C., Anne Bose, B., Padmanabhan, P., Thomas, S., Vadakke Matham, M., Eds.; Springer Nature Singapore: Singapore, 2025; pp. 147–180.

12. Chow, J.C.L. Quantum Computing and Machine Learning in Medical Decision-Making: A Comprehensive Review. Algorithms 2025, 18, 156.

13. Boubnovski Martell, M.; Linton-Reid, K.; Hindocha, S.; Chen, M.; Moreno, P.; Álvarez-Benito, M.; Salvatierra, Á.; Lee, R.; Posma, J.M.; Calzado, M.A. Deep representation learning of tissue metabolome and computed tomography annotates NSCLC classification and prognosis. NPJ Precis. Oncol. 2024, 8, 28.

14. Zhong, Y.; She, Y.; Deng, J.; Chen, S.; Wang, T.; Yang, M.; Ma, M.; Song, Y.; Qi, H.; Wang, Y. Deep learning for prediction of N2 metastasis and survival for clinical stage I non–small cell lung cancer. Radiology 2022, 302, 200–211.

15. Tong, W.-J.; Wu, S.-H.; Cheng, M.-Q.; Huang, H.; Liang, J.-Y.; Li, C.-Q.; Guo, H.-L.; He, D.-N.; Liu, Y.-H.; Xiao, H. Integration of artificial intelligence decision aids to reduce workload and enhance efficiency in thyroid nodule management. JAMA Netw. Open 2023, 6, e2313674.

16. Zheng, D.; He, X.; Jing, J. Overview of artificial intelligence in breast cancer medical imaging. J. Clin. Med. 2023, 12, 419.

17. Arya, S.S.; Dias, S.B.; Jelinek, H.F.; Hadjileontiadis, L.J.; Pappa, A.-M. The convergence of traditional and digital biomarkers through AI-assisted biosensing: A new era in translational diagnostics? Biosens. Bioelectron. 2023, 235, 115387.

18. Bera, K.; Braman, N.; Gupta, A.; Velcheti, V.; Madabhushi, A. Predicting cancer outcomes with radiomics and artificial intelligence in radiology. Nat. Rev. Clin. Oncol. 2022, 19, 132–146.

19. Booth, T.C.; Williams, M.; Luis, A.; Cardoso, J.; Ashkan, K.; Shuaib, H. Machine learning and glioma imaging biomarkers. Clin. Radiol. 2020, 75, 20–32.

20. Prelaj, A.; Miskovic, V.; Zanitti, M.; Trovo, F.; Genova, C.; Viscardi, G.; Rebuzzi, S.; Mazzeo, L.; Provenzano, L.; Kosta, S. Artificial intelligence for predictive biomarker discovery in immuno-oncology: A systematic review. Ann. Oncol. 2023, 35, 29–65.

21. Ma, W.; Guo, X.; Liu, L.; Qi, L.; Liu, P.; Zhu, Y.; Jian, X.; Xu, G.; Wang, X.; Lu, H. Magnetic resonance imaging semantic and quantitative features analyses: An additional diagnostic tool for breast phyllodes tumors. Am. J. Transl. Res. 2020, 12, 2083.

22. Vanguri, R.S.; Luo, J.; Aukerman, A.T.; Egger, J.V.; Fong, C.J.; Horvat, N.; Pagano, A.; Araujo-Filho, J.d.A.B.; Geneslaw, L.; Rizvi, H. Multimodal integration of radiology, pathology and genomics for prediction of response to PD-(L)1 blockade in patients with non-small cell lung cancer. Nat. Cancer 2022, 3, 1151–1164.

23. Challa, K.; Paysan, D.; Leiser, D.; Sauder, N.; Weber, D.C.; Shivashankar, G. Imaging and AI based chromatin biomarkers for diagnosis and therapy evaluation from liquid biopsies. NPJ Precis. Oncol. 2023, 7, 135.

24. Wang, S.; Yu, H.; Gan, Y.; Wu, Z.; Li, E.; Li, X.; Cao, J.; Zhu, Y.; Wang, L.; Deng, H. Mining whole-lung information by artificial intelligence for predicting EGFR genotype and targeted therapy response in lung cancer: A multicohort study. Lancet Digit. Health 2022, 4, e309–e319.

25. Chen, W.; Liu, F.; Wang, R.; Qi, M.; Zhang, J.; Liu, X.; Song, S. End-to-end deep learning radiomics: Development and validation of a novel attention-based aggregate convolutional neural network to distinguish breast diffuse large B-cell lymphoma from breast invasive ductal carcinoma. Quant. Imaging Med. Surg. 2023, 13, 6598.

26. Hatt, M.; Tixier, F.; Pierce, L.; Kinahan, P.E.; Le Rest, C.C.; Visvikis, D. Characterization of PET/CT images using texture analysis: The past, the present… any future? Eur. J. Nucl. Med. Mol. Imaging 2017, 44, 151–165.

27. Eertink, J.J.; van de Brug, T.; Wiegers, S.E.; Zwezerijnen, G.J.; Pfaehler, E.; Lugtenburg, P.J.; van der Holt, B.; de Vet, H.C.; Hoekstra, O.S.; Boellaard, R. 18F-FDG PET/CT baseline radiomics features improve the prediction of treatment outcome in diffuse large B-cell lymphoma patients. Blood 2020, 136, 27–28.

28. Liu, Q.; Sun, D.; Li, N.; Kim, J.; Feng, D.; Huang, G.; Wang, L.; Song, S. Predicting EGFR mutation subtypes in lung adenocarcinoma using 18F-FDG PET/CT radiomic features. Transl. Lung Cancer Res. 2020, 9, 549.

29. Chen, Q.; Zhang, L.; Mo, X.; You, J.; Chen, L.; Fang, J.; Wang, F.; Jin, Z.; Zhang, B.; Zhang, S. Current status and quality of radiomic studies for predicting immunotherapy response and outcome in patients with non-small cell lung cancer: A systematic review and meta-analysis. Eur. J. Nucl. Med. Mol. Imaging 2021, 49, 345–360.

30. Van Griethuysen, J.J.; Fedorov, A.; Parmar, C.; Hosny, A.; Aucoin, N.; Narayan, V.; Beets-Tan, R.G.; Fillion-Robin, J.-C.; Pieper, S.; Aerts, H.J. Computational radiomics system to decode the radiographic phenotype. Cancer Res. 2017, 77, e104–e107.

31. Zwanenburg, A.; Vallières, M.; Abdalah, M.A.; Aerts, H.J.; Andrearczyk, V.; Apte, A.; Ashrafinia, S.; Bakas, S.; Beukinga, R.J.; Boellaard, R. The image biomarker standardization initiative: Standardized quantitative radiomics for high-throughput image-based phenotyping. Radiology 2020, 295, 328–338.

32. Luo, P.; Zhang, R.; Ren, J.; Peng, Z.; Li, J. Switchable normalization for learning-to-normalize deep representation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 712–728.

33. Lambin, P.; Rios-Velazquez, E.; Leijenaar, R.; Carvalho, S.; Van Stiphout, R.G.; Granton, P.; Zegers, C.M.; Gillies, R.; Boellard, R.; Dekker, A. Radiomics: Extracting more information from medical images using advanced feature analysis. Eur. J. Cancer 2012, 48, 441–446.

34. Hancer, E.; Xue, B.; Zhang, M. Differential evolution for filter feature selection based on information theory and feature ranking. Knowl.-Based Syst. 2018, 140, 103–119.

35. Peng, H.; Long, F.; Ding, C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1226–1238.

36. Ziegel, E.R. The Elements of Statistical Learning; Springer: Berlin/Heidelberg, Germany, 2003.

37. Shur, J.D.; Doran, S.J.; Kumar, S.; Ap Dafydd, D.; Downey, K.; O’Connor, J.P.; Papanikolaou, N.; Messiou, C.; Koh, D.-M.; Orton, M.R. Radiomics in oncology: A practical guide. Radiographics 2021, 41, 1717–1732.

38. Riley, R.D.; Ensor, J.; Snell, K.I.; Harrell, F.E.; Martin, G.P.; Reitsma, J.B.; Moons, K.G.; Collins, G.; Van Smeden, M. Calculating the sample size required for developing a clinical prediction model. BMJ 2020, 368, m441.

39. Feng, K.; Zhao, S.; Shang, Q.; Liu, J.; Yang, C.; Ren, F.; Wang, X.; Wang, X. An overview of the correlation between IPI and prognosis in primary breast lymphoma. Am. J. Cancer Res. 2023, 13, 245.

40. Quinn, C.; Maguire, A.; Rakha, E. Pitfalls in breast pathology. Histopathology 2023, 82, 140–161.

41. Obuchowski, N.A.; Bullen, J. Quantitative imaging biomarkers: Effect of sample size and bias on confidence interval coverage. Stat. Methods Med. Res. 2018, 27, 3139–3150.

42. Raunig, D.L.; McShane, L.M.; Pennello, G.; Gatsonis, C.; Carson, P.L.; Voyvodic, J.T.; Wahl, R.L.; Kurland, B.F.; Schwarz, A.J.; Gönen, M. Quantitative imaging biomarkers: A review of statistical methods for technical performance assessment. Stat. Methods Med. Res. 2015, 24, 27–67.

43. Sollini, M.; Bandera, F.; Kirienko, M. Quantitative imaging biomarkers in nuclear medicine: From SUV to image mining studies. Highlights from annals of nuclear medicine 2018. Eur. J. Nucl. Med. Mol. Imaging 2019, 46, 2737–2745.

44. Chen, W.; Hou, X.; Hu, Y.; Huang, G.; Ye, X.; Nie, S. A deep learning-and CT image-based prognostic model for the prediction of survival in non-small cell lung cancer. Med. Phys. 2021, 48, 7946–7958.

45. Ou, X.; Wang, J.; Zhou, R.; Zhu, S.; Pang, F.; Zhou, Y.; Tian, R.; Ma, X. Ability of 18F-FDG PET/CT radiomic features to distinguish breast carcinoma from breast lymphoma. Contrast Media Mol. Imaging 2019, 2019, 4507694.

46. Bao, D.; Zhao, Y.; Liu, Z.; Zhong, H.; Geng, Y.; Lin, M.; Li, L.; Zhao, X.; Luo, D. Prognostic and predictive value of radiomics features at MRI in nasopharyngeal carcinoma. Discov. Oncol. 2021, 12, 1–13.

47. Bicci, E.; Calamandrei, L.; Di Finizio, A.; Pietragalla, M.; Paolucci, S.; Busoni, S.; Mungai, F.; Nardi, C.; Bonasera, L.; Miele, V. Predicting Response to Exclusive Combined Radio-Chemotherapy in Naso-Oropharyngeal Cancer: The Role of Texture Analysis. Diagnostics 2024, 14, 1036.

48. Wang, X.; Gong, G.; Sun, Q.; Meng, X. Prediction of pCR based on clinical-radiomic model in patients with locally advanced ESCC treated with neoadjuvant immunotherapy plus chemoradiotherapy. Front. Oncol. 2024, 14, 1350914.

49. Su, G.-H.; Xiao, Y.; You, C.; Zheng, R.-C.; Zhao, S.; Sun, S.-Y.; Zhou, J.-Y.; Lin, L.-Y.; Wang, H.; Shao, Z.-M. Radiogenomic-based multiomic analysis reveals imaging intratumor heterogeneity phenotypes and therapeutic targets. Sci. Adv. 2023, 9, eadf0837.

| Characteristics | Total (n = 279) | Internal Cohort (n = 221) | External Cohort (n = 58) | p Value |
|---|---|---|---|---|
| Sex | | | | 0.467 |
| Female | 277 (99.3) | 219 (99.1) | 58 (100.0) | |
| Male | 2 (0.7) | 2 (0.9) | 0 (0.0) | |
| Age (year) | 51.39 ± 11.80 | 51.58 ± 12.24 | 51.57 ± 11.47 | 0.995 |
| Height (m) | 1.59 ± 0.05 | 1.59 ± 0.05 | 1.58 ± 0.05 | 0.428 |
| Weight (kg) | 59.05 ± 8.70 | 59.59 ± 8.95 | 57.00 ± 7.46 | 0.044 |
| BMI (kg/m²) | 23.27 ± 3.22 | 23.44 ± 3.28 | 22.62 ± 2.95 | 0.085 |
| Stage | | | | 0.281 |
| I | 42 (15.1) | 29 (13.1) | 13 (22.4) | |
| II | 108 (38.7) | 90 (40.7) | 18 (31.0) | |
| III | 45 (16.1) | 35 (15.8) | 10 (17.2) | |
| IV | 84 (30.1) | 67 (30.3) | 17 (29.3) | |

| Models | Dataset | AUC | Accuracy (%) | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) |
|---|---|---|---|---|---|---|---|
| Ou et al. (CT combined with PT predictive variables) [45] | Internal set | 0.85 | 76.2 | 86.1 | 66.7 | – | – |
| | External set | – | – | – | – | – | – |
| Ou et al. (PETa) [7] | Internal set | 0.81 | 80.8 | 80.6 | 84.2 | – | – |
| | External set | – | – | – | – | – | – |
| Chen et al. (AACNN_E) [25] | Internal set | 0.89 | 83.0 | 80.9 | 85.0 | 84.8 | 81.2 |
| | External set | 0.79 | 71.6 | 61.4 | 84.7 | 84.0 | 62.6 |
| PT_TDC_SVM | Internal set | 0.99 | 98.0 | 99.3 | 97.1 | 96.4 | 99.4 |
| | External set | 0.99 | 97.5 | 100.0 | 95.4 | 94.9 | 100.0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, F.; Chen, W.; Zhang, J.; Zou, J.; Gu, B.; Yang, H.; Hu, S.; Liu, X.; Song, S. 2.5D Deep Learning and Machine Learning for Discriminative DLBCL and IDC with Radiomics on PET/CT. Bioengineering 2025, 12, 873. https://doi.org/10.3390/bioengineering12080873
