1. Introduction

Esophageal carcinoma (EC) is the eighth most common cancer globally and the sixth leading cause of cancer-related death [1]. Esophageal squamous cell carcinoma (ESCC) is the predominant subtype, making up over 90% of EC cases in high-risk regions like China [2,3]. Neoadjuvant therapy (NAT), followed by surgery, is the standard treatment for resectable locally advanced ESCC [4,5]. Lymph node metastasis (LNM) after neoadjuvant chemotherapy (NACT) or chemoradiotherapy (NACRT) significantly influences ESCC prognosis and guides personalized perioperative therapy [6,7,8]. Thus, accurately identifying lymph node (LN) involvement after NAT is critical.

In clinical practice, computed tomography (CT) is the standard method for diagnosing LNM in ESCC patients receiving NAT [9]. A common diagnostic criterion is a lymph node short-axis diameter over 10 mm, yet only 8.0–37.5% of metastatic nodes in esophageal carcinoma meet this threshold [10]. CT detects metastatic nodes at a rate of 57.14%, significantly lower than the pathological rate of 87.6%, resulting in less-than-ideal accuracy, specificity, and sensitivity [11]. Although factors like the tumor size, depth of tumor invasion, histological type, and neutrophil–lymphocyte ratio have been linked to LNM [12], their reliability remains controversial.

Radiomics can quantitatively describe tissue heterogeneity, objectively capturing characteristics not visually discernible by extracting quantitative features from medical images with high throughput [13]. Recent studies have demonstrated the potential of radiomics and deep learning for predicting LNM in esophageal cancer. A systematic review by Ma et al. integrated the data from nine studies involving 719 patients and found that radiomic models utilizing CT, PET, and MRI achieved a sensitivity of 72% and a specificity of 76% (AUC = 0.74) for predicting LNM in ESCC patients [14]. Studies show that combining radiomic features with clinical risk factors enhances the accuracy of predicting LNM in esophageal cancer compared to using either alone [15].

However, prior research has primarily concentrated on patients eligible for direct surgical intervention, and there has been no exploration of radiomic analysis for predicting LNM status at the time of surgery in ESCC patients who have undergone neoadjuvant therapy. Additionally, traditional radiomic analysis generally considers the tumor as a single entity, often neglecting the phenotypic differences that exist within its subregions [16]. The habitat approach, which segments tumors into distinct subregions by clustering voxels with similar imaging features, has demonstrated potential for more effectively capturing and characterizing intratumoral heterogeneity [17,18]. Furthermore, 2.5D deep learning methods, which leverage adjacent slices to extract localized 3D information at lower computational cost than full 3D approaches, have shown promising results in medical image classification [19,20] but have not been applied to predict LNM in esophageal cancer.

This study aims to develop and validate a CT-based predictive model for pathologically confirmed LNM status at the time of surgery after NAT in patients with locally advanced ESCC, facilitating personalized treatment decisions and prognostic assessment. To ensure reliability and applicability, we employ a focused approach comparing three key modeling strategies: habitat-based radiomic analysis, 2.5D deep learning with multi-instance learning integration, and combined models incorporating clinicoradiological factors.

2. Materials and Methods

2.1. Patients and Study Design

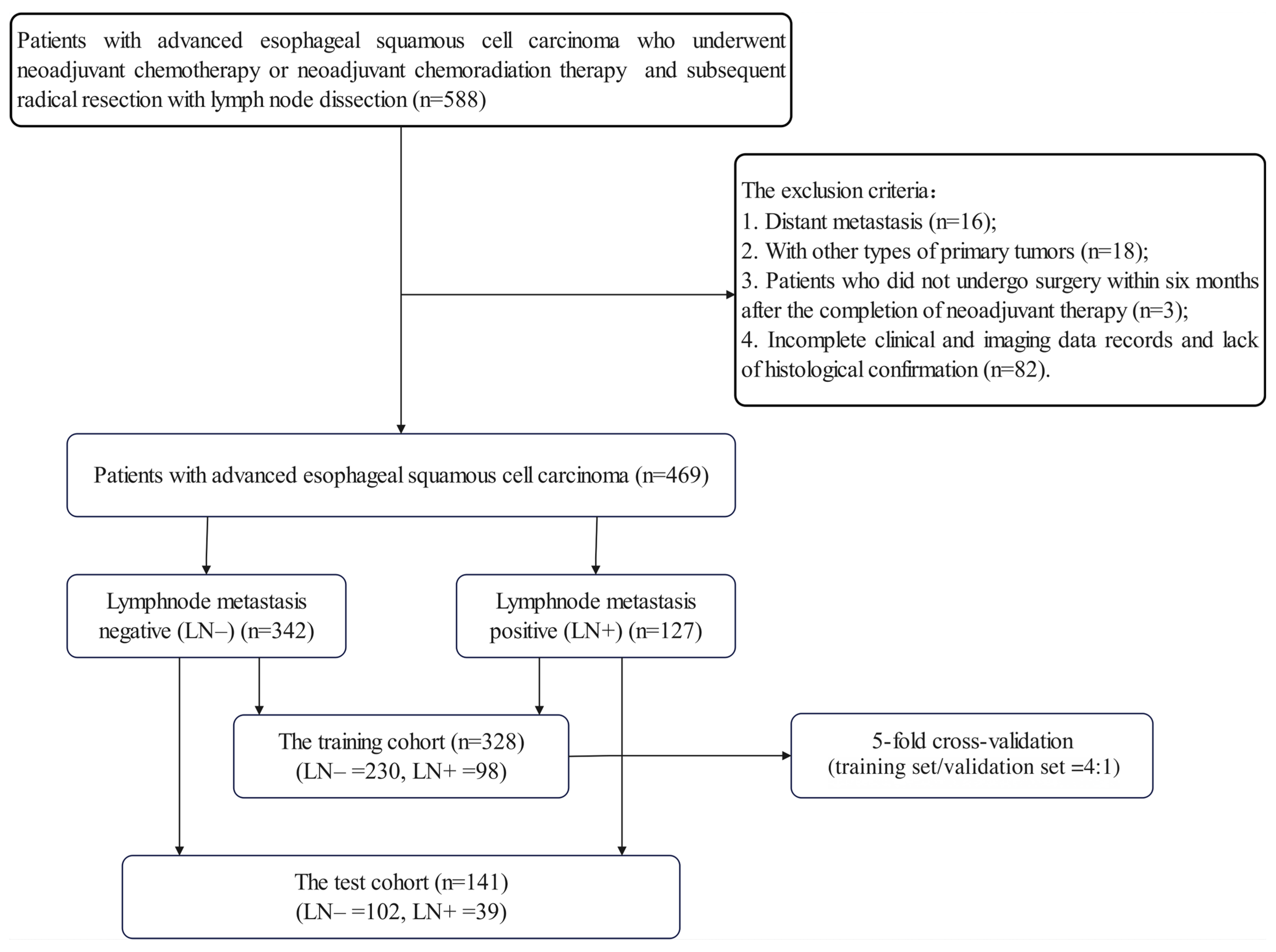

The retrospective study was ethically reviewed and received approval from the Institutional Review Board (IRB) of Sun Yat-sen University Cancer Center (No. B2021-335-01), and the requirement for informed consent was waived. A total of 469 patients with advanced ESCC who underwent NAT between March 2010 and June 2021 were identified from the institutional database. The inclusion criteria were as follows: (1) patients who underwent NACT or NACRT followed by radical resection with lymph node (LN) dissection; (2) contrast-enhanced CT examinations prior to NAT; (3) histologically confirmed ESCC; (4) detailed pathology records of LNs. The exclusion criteria were as follows: (1) distant metastasis at initial diagnosis; (2) presence of other types of primary tumors; (3) patients who did not undergo surgery within six months of the completion of NAT; (4) incomplete clinical and imaging data records and lack of histological confirmation.

All the enrolled patients were randomly divided into a training cohort (n = 328) and a test cohort (n = 141) at a ratio of 7:3. To ensure robust model training and hyperparameter optimization, we employed 5-fold cross-validation within the training cohort for all the modeling approaches. The Grid-Search algorithm was utilized to identify the optimal hyperparameters and optimize the algorithms. The final model performance was evaluated on the independent test set. All the enrolled patients underwent radical esophagectomy within six months of the completion of NAT. The clinical endpoint of this study was the pathologically confirmed presence of lymph node metastasis (LNM) at the time of surgery. The patients were categorized into LNM (LN+) or non-LNM (LN−) groups based on the postoperative pathology results.
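
The cohort split and the cross-validation folds described above can be illustrated with a short sketch. It is not the study's code: the random seed, the absence of stratification, and the helper names `split_train_test` and `k_fold_indices` are assumptions made for illustration, and the grid search itself is omitted.

```python
import numpy as np

def split_train_test(n_patients, train_fraction=0.7, seed=0):
    """Randomly split patient indices into training and test cohorts."""
    rng = np.random.default_rng(seed)  # seed is an assumption, not from the study
    order = rng.permutation(n_patients)
    n_train = round(n_patients * train_fraction)
    return order[:n_train], order[n_train:]

def k_fold_indices(indices, k=5):
    """Yield (fit, validate) index arrays for k-fold cross-validation."""
    folds = np.array_split(indices, k)
    for i in range(k):
        validate = folds[i]
        fit = np.concatenate([folds[j] for j in range(k) if j != i])
        yield fit, validate

# 469 patients at a 7:3 ratio give cohorts of 328 and 141 patients.
train_idx, test_idx = split_train_test(469)
fold_sizes = [len(validate) for _, validate in k_fold_indices(train_idx)]
```

In such a setup, every hyperparameter combination in the grid would be scored on the five validation folds, and only the chosen configuration would be evaluated once on the held-out test indices.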

Details of patient enrollment are shown in

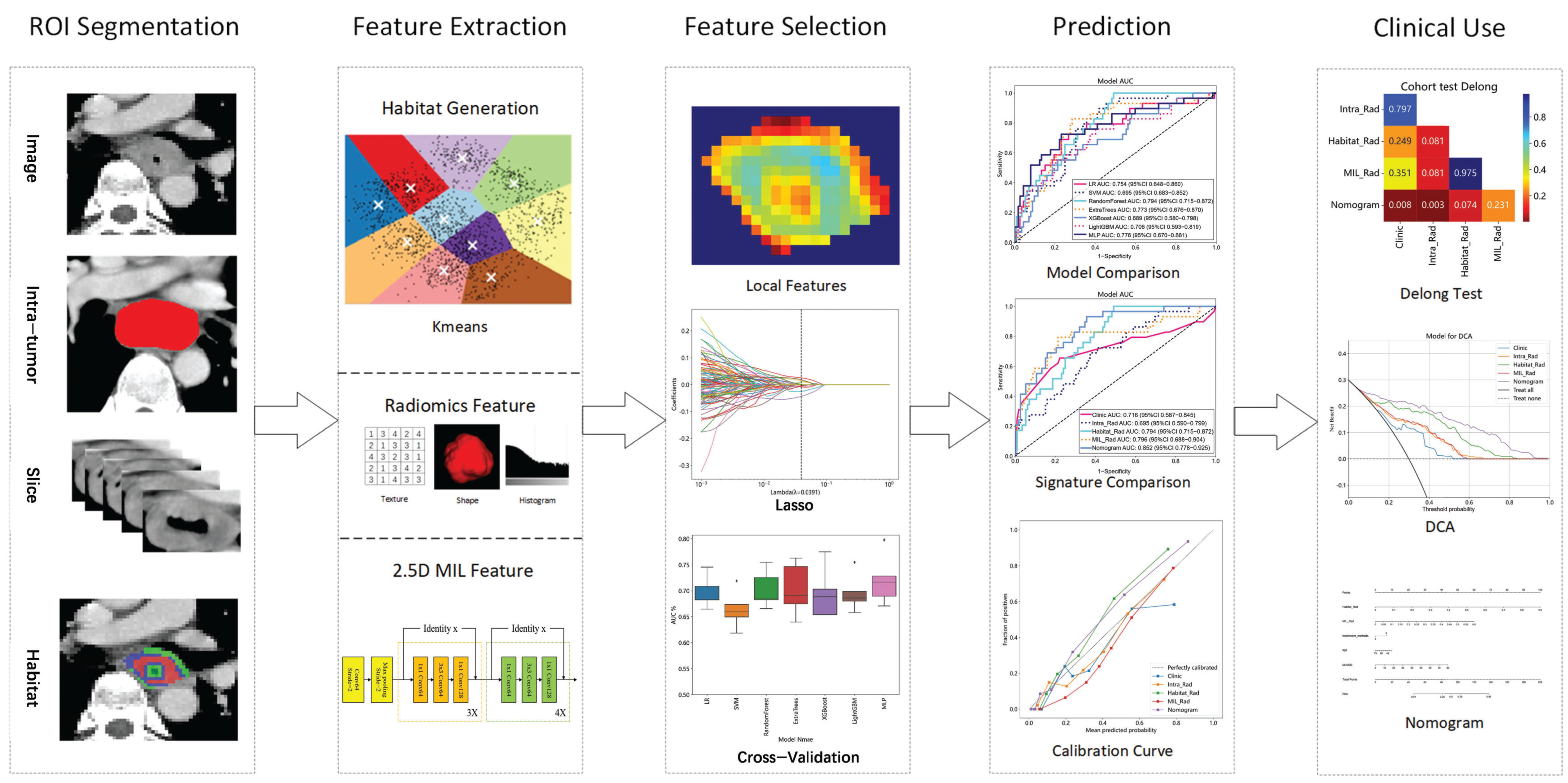

Figure A1, and the overall workflow of this study is illustrated in

Figure 1.

2.2. Neoadjuvant Regimens and Clinicoradiological Data

All the subjects in this study received a standardized and comprehensive neoadjuvant treatment (NAT) regimen, which included either neoadjuvant chemoradiotherapy or neoadjuvant chemotherapy alone, consistent with the National Comprehensive Cancer Network (NCCN) guidelines from 2010 to 2021 (Appendix A.1). Each patient underwent radical esophagectomy within six months of completing the NAT; the specific surgical procedures are outlined in Appendix A.2.

Clinical factors, including gender, age, smoking and drinking history, treatment method for NAT, clinical T (cT) and clinical N (cN) stages based on the 8th edition of the American Joint Committee on Cancer TNM staging system [21], and key CT features based on the radiologists’ diagnosis (e.g., primary tumor maximum diameter, enhancement pattern, and lymph node characteristics such as maximum short-axis diameter, fusion, extracapsular invasion, and necrosis) were extracted from medical records. These clinical factors and CT features are collectively termed clinicoradiological features. All the patients were categorized by postoperative pathology into LNM (LN+) or non-LNM (LN−) groups.

2.3. Image Acquisition and Preprocessing and Tumor Segmentation

All the patients underwent contrast-enhanced CT examination within 2 weeks prior to neoadjuvant therapy using our hospital’s CT scanning systems (Discovery CT750 HD, GE Healthcare; Aquilion TSX-101A, Toshiba; SOMATOM Force, Siemens Healthineers; Brilliance iCT, Philips; uCT780, United Imaging Healthcare). The scanning coverage ranged from the thoracic inlet to the costophrenic angle, with the lower edge positioned at 2–7 cm. Following a routine non-enhanced CT scan, contrast-enhanced CT scanning commenced 25 s after administering 1.0 mL/kg of a non-ionic iodine contrast agent intravenously at a rate of 3.0 mL/s via a high-pressure auto-injector. The CT parameters were as follows: a peak voltage of 120 kVp, a tube current of 100–300 mA, a field of view (FOV) of 400–500 mm, a slice thickness of 5 mm, slice spacing of 5 mm, and a matrix of 512 × 512. The raw data were reconstructed at a slice thickness of either 1.0 or 1.25 mm.

Two experienced radiologists with over 10 years of esophageal tumor diagnostic experience independently utilized ITK-Snap software (version 4.0) to delineate the tumor boundaries and create the region of interest (ROI) in a blinded manner. Intra- and inter-observer reproducibility were evaluated using the intraclass correlation coefficient (ICC) to ensure that the selected features were not influenced by segmentation uncertainties.
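
As an illustration of the reproducibility check, the sketch below computes one common form of the ICC for a single feature. The text does not state which ICC form was used, so the choice of ICC(2,1) (two-way random effects, absolute agreement, single rater) is an assumption, as are the function name `icc_2_1` and the example values.

```python
import numpy as np

def icc_2_1(ratings):
    """ICC(2,1) for one feature; ratings has shape (n_subjects, k_raters)."""
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    # Sums of squares for subjects (rows), raters (columns), and the residual.
    ss_rows = k * np.sum((x.mean(axis=1) - grand) ** 2)
    ss_cols = n * np.sum((x.mean(axis=0) - grand) ** 2)
    ss_err = np.sum((x - grand) ** 2) - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )

# Hypothetical values of one feature from two segmentations of five patients.
feature = [[10.1, 10.3], [12.0, 11.8], [9.5, 9.6], [14.2, 14.0], [11.1, 11.3]]
is_robust = icc_2_1(feature) >= 0.85  # 0.85 is the cutoff given in Section 2.4.3
```

The same function covers both the test–retest case (columns are the two sessions of one reader) and the inter-rater case (columns are the two readers).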

Our study employed various essential techniques to address significant challenges in medical image analysis. The CT pixel values were restricted to a range of −125 to 225 HU (Hounsfield Units) to standardize the dataset and mitigate the influence of extremes. For the feature extraction, we applied absolute resampling with a fixed bin width of 5 HU, resulting in a total of 70 bins across the intensity range. This approach ensures consistent quantization across all the patients regardless of intensity distribution differences.
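
The intensity windowing and fixed-width discretization can be sketched as follows. This is a minimal illustration under stated assumptions: the function name `clip_and_discretize` is hypothetical, and the handling of the upper window edge is a choice made here rather than something the text specifies. The window spans 225 − (−125) = 350 HU, so a 5 HU bin width gives 350 / 5 = 70 bins.

```python
import numpy as np

HU_MIN, HU_MAX, BIN_WIDTH = -125, 225, 5

def clip_and_discretize(hu_values):
    """Clip HU values to the window and map them to fixed-width bin indices."""
    clipped = np.clip(np.asarray(hu_values, dtype=float), HU_MIN, HU_MAX)
    bins = np.floor((clipped - HU_MIN) / BIN_WIDTH).astype(int)
    n_bins = (HU_MAX - HU_MIN) // BIN_WIDTH
    # A value exactly at the upper edge would open an extra bin,
    # so it is folded into the last one (an assumption of this sketch).
    return np.minimum(bins, n_bins - 1), n_bins

bins, n_bins = clip_and_discretize([-1000, -125, 0, 225, 3000])
```

Because the bin edges are fixed in HU rather than derived from each patient's intensity range, the same bin index corresponds to the same attenuation interval in every patient.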

For spatial normalization, we employed fixed-resolution resampling to address voxel spacing inconsistencies in different ROIs. This absolute resampling approach, rather than relative resampling, was chosen to ensure standardized spatial resolution for all ROIs regardless of the original acquisition parameters, achieving uniform voxel spacing of 1 mm × 1 mm × 1 mm across all the images.

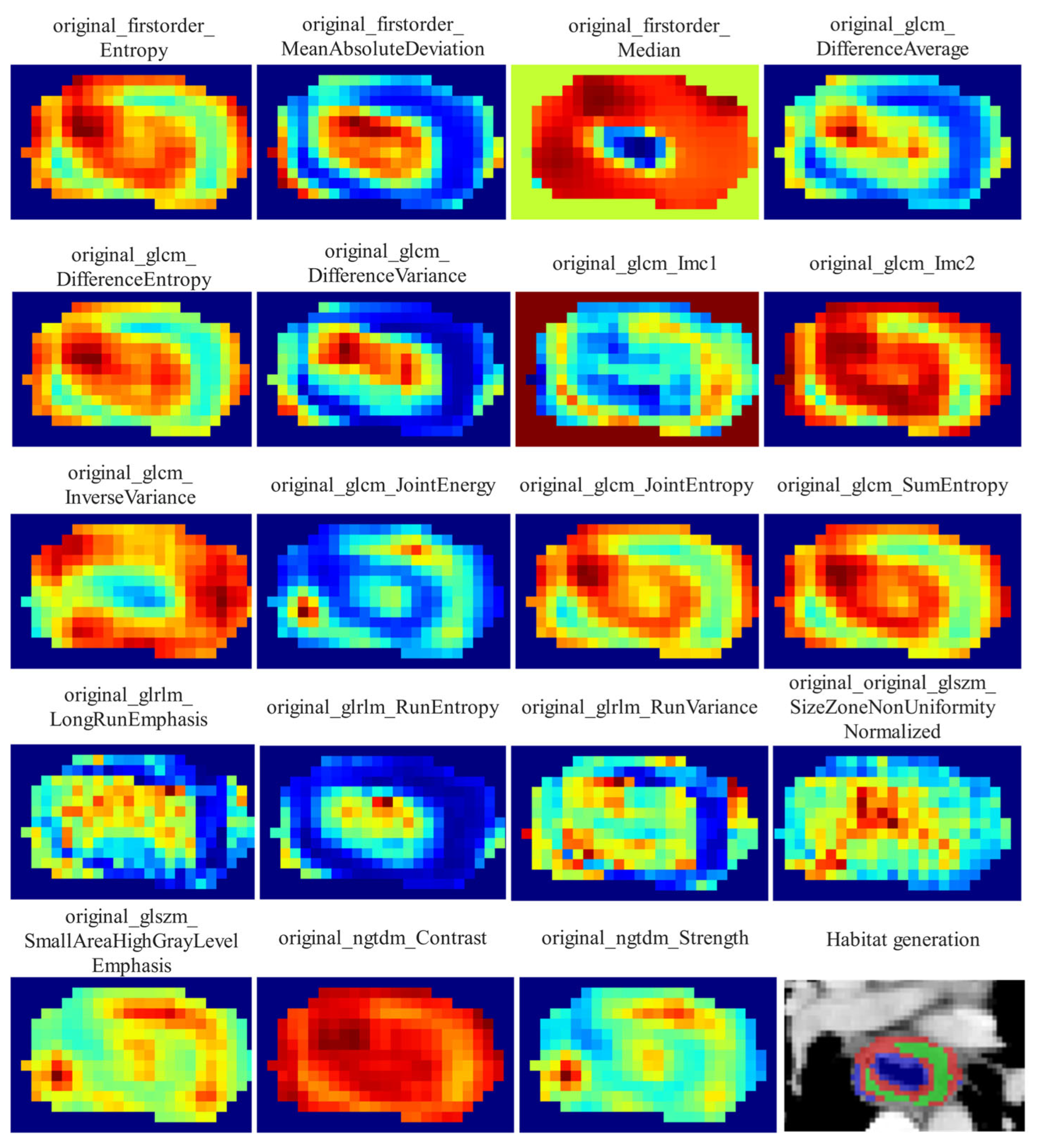

2.4. Habitat-Based Radiomics Procedure

2.4.1. Delineation of Habitat Subregions

Local features, such as local entropy and energy values, were extracted from each voxel within the ROIs using the OKT-gen_roi_rad_features tool. These features were amalgamated to form feature vectors encapsulating various attributes of each voxel’s characteristics. To calculate the local features for each voxel, a 3 × 3 × 3 sliding window was employed, enabling the extraction of a 19-dimensional feature vector per voxel. These feature vectors were then subjected to K-means clustering to identify subregions within the tumor. Voxels exhibiting similar characteristics were grouped together, with each voxel assigned to one of the resulting clusters and spatially mapped as a habitat within the original image. A pre-determined three-cluster configuration was adopted, informed by existing habitat-related studies to prevent excessive parameter tuning [22]. Details of the habitat generation process and the specific features used are illustrated in Figure 2.
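
The clustering step can be sketched as a plain K-means (Lloyd's algorithm) over per-voxel feature vectors. This is not the OKT tool used in the study. The farthest-first initialization, the iteration limit, and the names `kmeans_habitats` and `habitat_map` are assumptions made to keep the example deterministic and self-contained.

```python
import numpy as np

def kmeans_habitats(voxel_features, n_clusters=3, n_iter=100, seed=0):
    """Cluster per-voxel feature vectors of shape (n_voxels, n_features)."""
    x = np.asarray(voxel_features, dtype=float)
    rng = np.random.default_rng(seed)
    # Farthest-first initialization: start from a random voxel, then repeatedly
    # add the voxel farthest from the centers chosen so far.
    centers = [x[rng.integers(len(x))]]
    for _ in range(n_clusters - 1):
        d = np.min([((x - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(x[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        # Squared Euclidean distance from every voxel to every center.
        dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        updated = np.array([
            x[labels == c].mean(axis=0) if np.any(labels == c) else centers[c]
            for c in range(n_clusters)
        ])
        if np.allclose(updated, centers):
            break
        centers = updated
    return labels

def habitat_map(mask, labels):
    """Write habitat labels (1..k) back into a volume shaped like the ROI mask."""
    out = np.zeros(np.shape(mask), dtype=int)
    # Assumes the feature rows follow the row-major order of the mask voxels.
    out[np.asarray(mask).astype(bool)] = np.asarray(labels) + 1
    return out
```

In practice the features would usually be standardized before clustering so that no single local feature dominates the distance; whether the study did so is not stated here.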

2.4.2. Feature Extraction

From each tumor subregion, a total of 1834 handcrafted radiomic features were extracted from portal venous-phase CT images and categorized into geometry (14 features), intensity (360 features), and texture (1460 features) categories. The geometry features encompassed the three-dimensional shape characteristics of the tumor, while the intensity features described the statistical distribution of voxel intensities within the tumor using first-order analysis. The texture features captured patterns and spatial distributions of intensities using second- and higher-order analysis. Various methods, including the gray-level co-occurrence matrix (GLCM), gray-level dependence matrix (GLDM), gray-level run length matrix (GLRLM), gray-level size zone matrix (GLSZM), and neighborhood gray-tone difference matrix (NGTDM), were utilized to extract the texture features. Since the clustering algorithm employed was unsupervised, it was not guaranteed that each subregion had the same label after clustering. To resolve this issue, we calculated the mean of the features for each subregion to represent the final attributes. For each patient, 1834 radiomic features were also extracted from the entire tumor ROI for comparison. The feature extraction process was carried out using an in-house program implemented in Pyradiomics 3.0.1 (http://pyradiomics.readthedocs.io, accessed on 11 May 2024).

2.4.3. Feature Selection

To assess the robustness of the extracted image features from the ROIs, we conducted test–retest and inter-rater analyses to ensure that the selected features were not influenced by segmentation uncertainties. The test–retest analysis involved one radiologist segmenting each of 30 randomly selected patients twice, two months apart, while the inter-rater analysis required two radiologists to independently segment the ROIs for a separate set of 30 randomly selected patients. The features extracted from the segmented regions were assessed using the intraclass correlation coefficient (ICC), with those exhibiting an ICC ≥ 0.85 considered robust against segmentation uncertainties. After initial screening using the ICC, all the features were standardized using Z-scores so that each feature had zero mean and unit variance. Subsequently, the p-values for all the imaging features were calculated using a t-test, retaining only radiomic features with a p-value < 0.05. Highly repeatable features were further analyzed using Pearson’s correlation coefficient to identify strongly correlated features. In cases where the correlation coefficient between any two features exceeded 0.9, only one feature was retained. To preserve the maximum feature representation ability, we implemented a greedy recursive deletion strategy to filter the features, removing the feature with the highest redundancy from the current set at each step. The final set of features used to create the radiomic signature was selected through the least absolute shrinkage and selection operator (LASSO) regression model. LASSO regression shrinks regression coefficients towards zero, effectively setting many irrelevant features’ coefficients to zero, based on the regularization weight λ. To identify the optimal λ for the LASSO regression, the 10-fold cross-validation approach was employed. The λ yielding the smallest mean squared error (MSE) between the predicted and actual LNM across the validations was chosen to select the final features.
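
The correlation-based filtering step can be illustrated with a greedy deletion loop. The text does not define how redundancy was scored, so the rule used here (the number of partners above the threshold, with the mean absolute correlation as tie-breaker) is an assumption, as is the function name `drop_correlated`. Constant features, for which Pearson's r is undefined, are assumed to have been removed beforehand.

```python
import numpy as np

def drop_correlated(features, threshold=0.9):
    """Greedily drop features until no pair has |Pearson r| above threshold.

    features: array of shape (n_samples, n_features).
    Returns the column indices that are kept, in ascending order.
    """
    corr = np.abs(np.corrcoef(np.asarray(features, dtype=float), rowvar=False))
    np.fill_diagonal(corr, 0.0)
    keep = list(range(corr.shape[0]))
    while len(keep) > 1:
        sub = corr[np.ix_(keep, keep)]
        if sub.max() <= threshold:
            break
        # Redundancy score: count of over-threshold partners; the mean absolute
        # correlation (always below 1) only breaks ties between equal counts.
        score = (sub > threshold).sum(axis=1) + sub.mean(axis=1)
        keep.pop(int(score.argmax()))
    return keep
```

Removing the most redundant feature first tends to resolve several correlated pairs at once, which is why a greedy scheme can retain more features than dropping one member of every offending pair.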

2.4.4. Development of Two Handcrafted Radiomic Signatures

In this study, we compared the performance of different approaches to tumor region analysis for lymph node metastasis (LNM) prediction: analyzing the tumor region as a whole (Intra) and assessing tumor habitat (Habitat). For the intra radiomic signature (Intra_Rad), we applied LASSO feature selection followed by various machine learning methods to derive the radiomic signature. Specifically, we utilized widely adopted machine learning models to construct our risk model: logistic regression (LR) and Support Vector Machines (SVMs) as classical classifiers; Random Forest, Extra Trees, Extreme Gradient Boosting (XGBoost), and Light Gradient Boosting Machine (LightGBM) as tree-based ensembles; and a Multi-Layer Perceptron as a neural network. In contrast, the habitat signature (Habitat_Rad) was developed through unsupervised clustering algorithms, which limited our ability to ascertain that clusters sharing the same centers represented similar physical meanings. To address this issue, we computed the mean values of the features. Furthermore, due to the unsupervised nature of the clustering, the feature selection process for the habitat signature did not incorporate the ICC evaluation; however, all the other configurations were aligned with those of the Intra models.

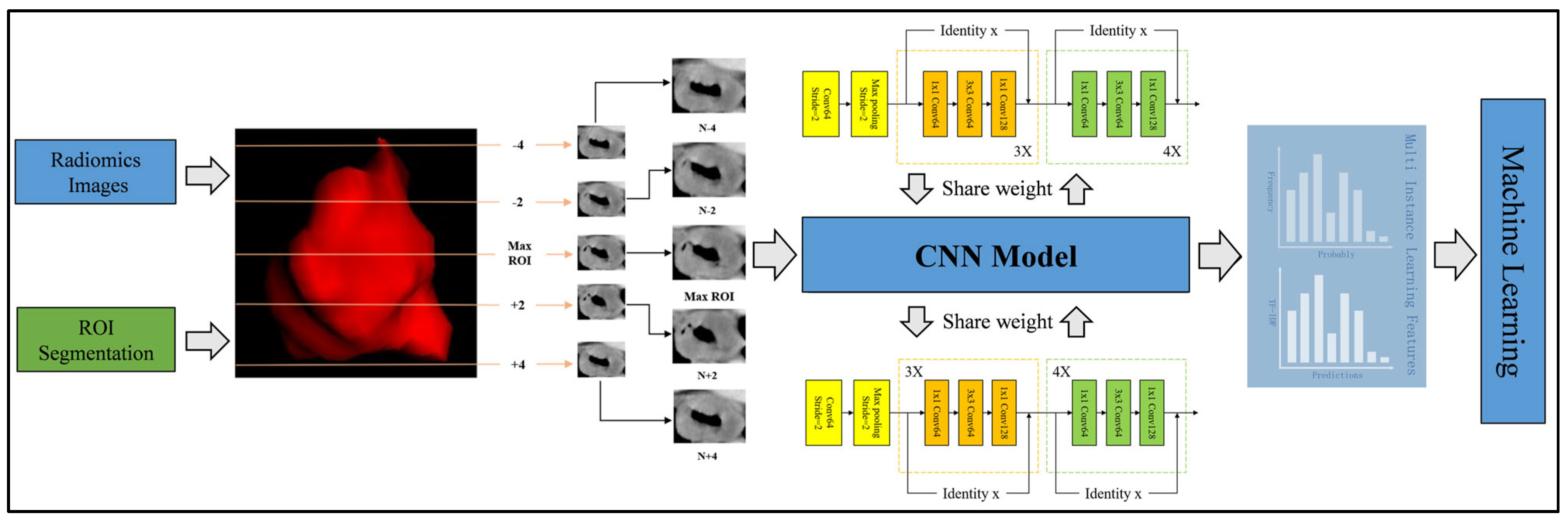

2.5. 2.5D Deep Learning Procedure

2.5.1. 2.5D Data Generation

To balance the advantages of 2D and 3D approaches, we employed a 2.5D methodology that incorporates spatial context while maintaining computational efficiency. For each patient, we first identified the CT slice with the largest cross-sectional area of the tumor ROI. Instead of using only immediately adjacent slices, we extracted this central slice together with the slices located two and four layers above and below it (i.e., at positions ±2 and ±4 slices from the central slice). This resulted in a stack of five slices per patient: the central slice, the two slices at ±2 layers, and the two slices at ±4 layers. By introducing this interval-based selection, we partially preserved 3D structural information while reducing data redundancy and computational demand. This process was implemented using OKT-crop_max_roi with the surrounds parameter set to +2, +4, −2, and −4.
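
The slice selection can be sketched as follows. This is an illustration rather than the OKT-crop_max_roi implementation: the function name `select_25d_slices` is hypothetical, and clamping offsets that fall outside the volume is an assumption, since the text does not say how tumors near the first or last slice were handled.

```python
import numpy as np

def select_25d_slices(mask, offsets=(-4, -2, 0, 2, 4)):
    """Return the indices of the slices forming the 2.5D stack.

    mask: binary ROI volume of shape (n_slices, height, width).
    """
    mask = np.asarray(mask).astype(bool)
    # ROI area per slice, then the slice where it is largest.
    areas = mask.reshape(mask.shape[0], -1).sum(axis=1)
    center = int(areas.argmax())
    last = mask.shape[0] - 1
    # Clamp to the volume so that five indices are always returned.
    return [min(max(center + off, 0), last) for off in offsets]

# Toy volume: the ROI is largest on slice 5.
mask = np.zeros((10, 4, 4), dtype=int)
mask[3:8, 1:3, 1:3] = 1
mask[5] = 1
stack = select_25d_slices(mask)
```

Each returned index identifies one 2D image that is passed to the network as a separate instance.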

2.5.2. Model Training

All the generated 2.5D data were incorporated into a transfer learning framework. Instead of merging the slices into a single planar image, each slice was independently processed by convolutional neural network (CNN) models, specifically DenseNet201, ResNet50, and VGG19, all of which were pre-trained on the ImageNet Large Scale Visual Recognition Challenge 2012 (ILSVRC2012) dataset. Prior to inputting into the networks, each 2D slice underwent preprocessing that involved normalizing the gray values to the range [−1, 1] using min–max normalization and resizing to 224 × 224 pixels via nearest-neighbor interpolation, in order to meet the input requirements of the pre-trained models. Stochastic Gradient Descent (SGD) was employed as the optimizer, and sigmoid cross-entropy was utilized as the loss function. Due to the limited size of the image dataset, particular care was taken in selecting an appropriate learning rate to improve model generalization. In this study, we adopted the cosine decay learning rate schedule. The specific learning rate settings used in our experiments are detailed in Appendix A.3.
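
Two of the preprocessing and training details above have simple closed forms, sketched below. The single-cycle form of the cosine schedule without warm-up, the function names, and the example learning rate of 0.01 are assumptions; the settings actually used are those in Appendix A.3.

```python
import math

def scale_to_unit_range(values):
    """Min-max normalize gray values to the range [-1, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant image: map everything to 0
    return [2.0 * (v - lo) / (hi - lo) - 1.0 for v in values]

def cosine_decay_lr(step, total_steps, lr_max, lr_min=0.0):
    """Learning rate at `step` under a single-cycle cosine decay schedule."""
    progress = min(max(step / total_steps, 0.0), 1.0)
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))

# Hypothetical schedule: start at 0.01 and decay to 0 over 100 steps.
schedule = [cosine_decay_lr(s, 100, 0.01) for s in range(101)]
```

The schedule falls slowly at first, fastest around the midpoint, and slowly again near the end, which avoids abrupt drops in the step size when fine-tuning pre-trained weights on a small dataset.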

The model training was performed on a workstation equipped with a Windows 10 operating system, an Intel Core i9-14900KF processor, 96 GB of DDR5 RAM, and an NVIDIA (Santa Clara, CA, USA) GeForce RTX 4090 GPU with 24 GB of VRAM. Under this hardware configuration, the training of each model required approximately 2 h.

2.5.3. Multi-Instance Learning

To address the predictions from the deep learning models, we introduced two fusion methods for multi-instance learning (MIL), akin to those in pathological image analysis [23]. The first method, the predict likelihood histogram (PLH), utilizes 2.5D deep learning models to generate predictive probabilities and labels for cross-sectional areas of 2.5D images. By expanding the use of PLH channels, we created a histogram of probability distributions that accurately represented the image features, offering a detailed image portrayal. The second method, bag of words (BoW), segments the full image into slices to extract probabilities and predictions from each, combining 2.5D and multi-model results to yield 3 × 5 predictive outcomes (3 models, 5 slices) per sample. Mirroring the BoW approach from textual analysis, we treated these predictive outcomes as features similar to word frequencies in text, utilizing TF-IDF (Term Frequency–Inverse Document Frequency) for the feature characterization. By integrating the feature representations from the PLH and BoW, we forged a comprehensive feature set from MIL, merging various information sources for an adept depiction of image characteristics. The process of multi-instance learning feature fusion is detailed in Appendix A.4. As shown in Figure A4, the feature selection method and process after fusion are consistent with the feature selection steps of habitat-based radiomics.

Figure 3 visually depicts the comprehensive workflow of the 2.5D deep learning and multiple instance learning process.
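
One way to turn the 3 × 5 slice-level probabilities of each patient into PLH and BoW features is sketched below. The exact construction is given in Appendix A.4 and may differ. The number of histogram bins, the mapping of probabilities to "words" by binning, the smoothed IDF formula, and the function names are all assumptions, and the example probabilities are random placeholders rather than model outputs.

```python
import numpy as np

def plh_features(probs, n_bins=10):
    """Predict likelihood histogram: normalized histogram of instance probabilities."""
    counts, _ = np.histogram(np.ravel(probs), bins=n_bins, range=(0.0, 1.0))
    return counts / counts.sum()

def bow_tfidf_features(all_probs, n_words=10):
    """Bag of words over instance probabilities, weighted by smoothed TF-IDF.

    all_probs: array of shape (n_patients, n_instances) with values in [0, 1].
    Each probability becomes a 'word', namely the index of the bin it falls in.
    """
    p = np.asarray(all_probs, dtype=float)
    words = np.minimum((p * n_words).astype(int), n_words - 1)
    # Term frequency: share of a patient's instances that map to each word.
    tf = np.stack([np.bincount(w, minlength=n_words) for w in words]) / p.shape[1]
    # Inverse document frequency across patients, smoothed to avoid division by zero.
    doc_freq = (tf > 0).sum(axis=0)
    idf = np.log((1 + p.shape[0]) / (1 + doc_freq)) + 1.0
    return tf * idf

# Four hypothetical patients, each with 3 models x 5 slices = 15 probabilities.
rng = np.random.default_rng(0)
probs = rng.random((4, 15))
fused = np.hstack([np.stack([plh_features(p) for p in probs]),
                   bow_tfidf_features(probs)])
```

The fused matrix has one row per patient and is what a downstream feature selection and classifier would consume.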

2.5.4. Construction of a Multi-Instance Learning Radiomic Signature

The selected features from MIL were then inputted into machine learning algorithms akin to those used for radiomic feature modeling to construct the MIL radiomic signature (MIL_Rad). During the model training process, we similarly employed 5-fold cross-validation within the training set, combined with Grid-Search for hyperparameter optimization.

2.6. Building a Clinicoradiological Signature and Nomogram

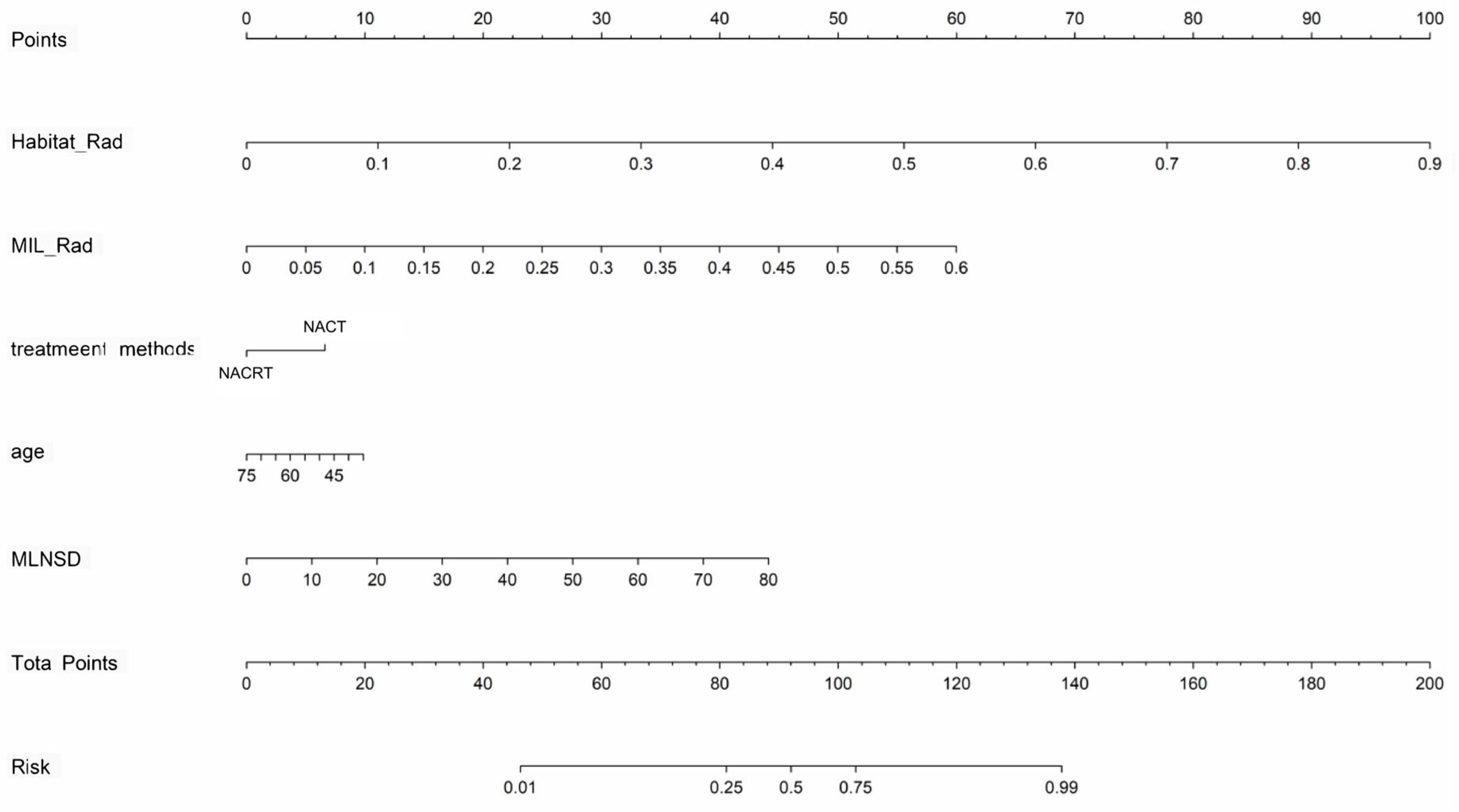

In the training cohort, significant clinical and radiologic predictors were identified via univariate and multivariate logistic regression, with odds ratios (ORs) and 95% confidence intervals (CIs) calculated. A clinicoradiological signature was developed as a baseline. A nomogram was then created by combining the Habitat_Rad and MIL_Rad signatures with independent predictors to further improve performance.
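
For readers less familiar with how a logistic model yields odds ratios and nomogram predictions, the sketch below shows the two calculations. It assumes Wald-type confidence intervals; the function names and any coefficients passed to them are hypothetical and are not results of this study.

```python
import math

def odds_ratio_ci(beta, se, z=1.96):
    """Odds ratio and Wald 95% CI from a logistic coefficient and its standard error."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)

def predicted_probability(intercept, coefs, values):
    """Probability from a logistic model, the quantity a nomogram reads off graphically."""
    linear = intercept + sum(b * x for b, x in zip(coefs, values))
    return 1.0 / (1.0 + math.exp(-linear))
```

A nomogram assigns points to each predictor in proportion to its coefficient times its value, so the total points map monotonically onto this predicted probability.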

2.7. Model Performance Assessment and Interpretability

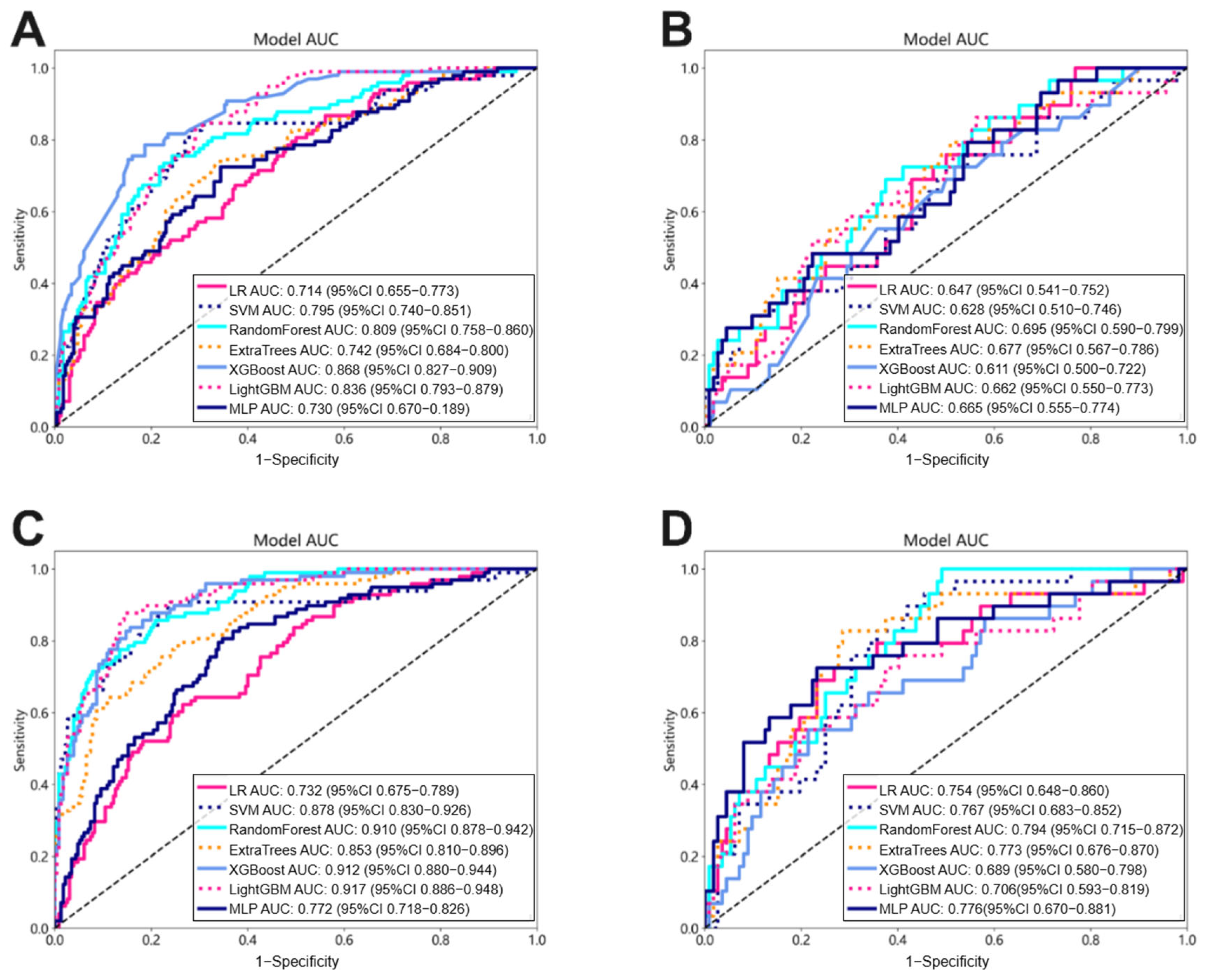

The performance of each model in predicting LNM was evaluated by calculating the area under the curve (AUC) of the receiver operating characteristic (ROC) curve. Furthermore, the corresponding metrics—accuracy, sensitivity, specificity, positive predictive value (PPV), and negative predictive value (NPV)—were computed. Calibration of all models was assessed in both the training and test groups using calibration curves derived from 1000 resampling bootstraps, as well as the Hosmer–Lemeshow goodness-of-fit test. Decision curve analysis (DCA) was conducted to estimate the clinical utility of each model by quantifying the net benefit across various threshold probabilities.
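
The discrimination metrics and the net benefit used in decision curve analysis can be written out directly. The sketch below is illustrative: the 0.5 classification cutoff and the function names are assumptions, labels are coded 1 for LN+ and 0 for LN−, and both classes are assumed to be present among the true labels and the predictions.

```python
def confusion_metrics(y_true, probs, threshold=0.5):
    """Accuracy, sensitivity, specificity, PPV, and NPV at a probability cutoff."""
    pairs = [(t, int(p >= threshold)) for t, p in zip(y_true, probs)]
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }

def auc_rank(y_true, scores):
    """AUC as the chance that a positive outscores a negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def net_benefit(y_true, probs, threshold):
    """Net benefit at one threshold probability, as plotted in a decision curve."""
    n = len(y_true)
    tp = sum(1 for t, p in zip(y_true, probs) if t == 1 and p >= threshold)
    fp = sum(1 for t, p in zip(y_true, probs) if t == 0 and p >= threshold)
    return tp / n - fp / n * threshold / (1.0 - threshold)
```

A decision curve is obtained by evaluating `net_benefit` over a range of thresholds and comparing the result with the treat-all and treat-none strategies.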

2.8. Statistics

The statistical analyses were conducted using SPSS (version 26.0, IBM) and Python (version 3.8; http://www.python.org, accessed on 11 May 2024). The continuous variables were compared using the Student’s t-test or the Mann–Whitney U-test, while the categorical variables were analyzed with the chi-square test or Fisher’s exact test, as appropriate. The areas under the curve (AUCs) for different models were compared using the DeLong test. Univariate and multivariate logistic regression analyses were performed to identify independent predictors of lymph node metastasis (LNM), as described in Section 2.6. A two-tailed p-value of less than 0.05 was considered statistically significant.

4. Discussion

In this study, we developed several predictive models using pre-treatment contrast-enhanced CT images and clinicoradiological factors to assess the status of lymph node metastasis (LNM) at the time of surgery in patients with esophageal squamous cell carcinoma (ESCC) following neoadjuvant therapy (NAT). Among these, the combined nomogram model, integrating a tumor-habitat-based radiomic signature, a multiple instance learning (MIL)-based signature derived from 2.5D deep learning models, and independent clinicoradiological risk factors, exhibited superior performance.

NAT for locally advanced ESCC can significantly reduce tumor staging before surgery and increase the rate of complete resection [24,25]. LNM status is a crucial prognostic factor in esophageal cancer and plays a significant role in determining personalized perioperative treatment strategies [26]. To address the limitations of current radiological methods in preoperative assessment of LNM [27,28], our study developed and validated a series of models, including those based on clinicoradiological factors, handcrafted radiomic features, 2.5D deep learning, and combined approaches.

In terms of clinicoradiological factors, univariable and multivariable analyses identified two clinical characteristics (age and treatment method) and one peripheral LN radiographic feature (maximum lymph node short diameter) as independent risk factors, which aligns with previous studies’ findings [29,30,31]. The risk factor-based models demonstrated inadequate discriminative capabilities, with the top model constructed by LightGBM achieving an AUC of 0.738 on the training dataset and 0.716 on the test set, indicating limited effectiveness in predicting LNM.

Our study demonstrated that tumor-habitat-based radiomics (Habitat_Rad) significantly outperformed whole-tumor-based approaches (Intra_Rad) in predicting LNM in ESCC. This aligns with previous findings showing similar superiority in intrahepatic cholangiocarcinoma [32], breast cancer [33], and cervical cancer [34]. Tumor subregions, characterized by distinct tissue structures and functional properties [35], arise from heterogeneity in the vasculature, metabolism, and gene expression during tumor progression [36,37]. CT imaging reveals these subregions through variations in density, morphology, and texture [38], reflecting biological features such as necrosis, hemorrhage, calcification, and cellular proliferation. Therefore, exploring the relationships between imaging features of tumor subregions and LNM is crucial for improving tumor diagnosis, optimizing treatment strategies, and enhancing prognostic evaluations.

A single slice only provides information in the transverse plane, which means 3D anatomical information is lost during the training process, resulting in unreliable outcomes. A study on the performance of multi-organ cancer classification based on 2D and 3D image features in radiomic analysis shows that in several aspects, including LNM prediction, 3D image features provide predictive performance that is superior to or equal to 2D image features [39]. However, 3D deep convolutional neural networks (DCNNs) often require significantly more parameters to train, and limited data and high computational cost typically hinder their performance. Therefore, we proposed a 2.5D method that represents the 3D data as a small set of 2D images, combining the slice with the largest tumor cross-section with two slices above and two slices below it, sampled at two-slice intervals, and then training 2D DCNNs on these slices. The 2.5D model captures more contextual information and is more effective than a pure 2D model. At the same time, it requires less computation than a 3D model, offering a balanced solution for performance and efficiency. To address the unsatisfactory performance of individual 2.5D deep learning (DL) models, we employed the multiple instance learning (MIL) method for model fusion, which led to more comprehensive representation. The resulting MIL-based signature demonstrated significantly improved performance compared to single 2.5D models.

In our study, the combined nomogram showed superior performance with AUC values of 0.929 in the training cohort and 0.852 in the test cohort. Tan et al. constructed a nomogram integrating radiomic features with CT-reported LN status for LNM prediction in resectable ESCC patients, achieving AUC values of 0.758 and 0.773 in the training and test sets, significantly outperforming traditional size criteria (AUCs of 0.661 and 0.586, respectively) [40]. Wu et al.’s multi-level CT radiomic model, designed to preoperatively forecast LNM in ESCC, exhibited AUCs of 0.875 in the training cohort and 0.874 in the internal validation cohort. By incorporating clinical variables alongside handcrafted, computer vision (CV), and deep learning signatures, this model outperformed Model 1 (clinical predictors and handcrafted signature) and Model 2 (clinical predictors plus handcrafted and CV signatures) [41]. These studies highlight the crucial role of radiomics in capturing tumor heterogeneity of ESCC and indicate the performance boost from combining multiple radiomic signatures.

Our study encountered several limitations. Firstly, although our model performs well on both the training and testing sets, it still faces the risk of overfitting and limited generalizability. In response to concerns about generalizability with a single train–test split, we implemented 5-fold cross-validation during the model development, which improved the robustness of our findings. However, we acknowledge that external validation with independent cohorts from different institutions remains necessary to fully establish the generalizability of our model. Secondly, the reproducibility of habitat division is a challenge. Unsupervised learning methods based on K-means clustering may introduce variability due to differences in equipment, scanning parameters, and image preprocessing steps. We have attempted to alleviate this issue through strict image standardization and feature selection processes, but future research should explore more robust habitat identification algorithms and standardization procedures. Thirdly, due to the relatively small dataset, our study primarily focused on binary classification predictions (presence/absence of LNM) without distinguishing LNM patterns in different regions (neck, chest, and abdomen), which is crucial for individualized treatment planning. Future studies should consider developing multi-class prediction models to assess the risk of lymph node metastasis in specific anatomical regions. Finally, the ethical issues of AI-assisted clinical decision-making systems cannot be ignored. AI-based predictions may lead to over-reliance or misinterpretation, especially when clinicians find it difficult to explain the model’s decision logic. We emphasize that the model proposed in this study should serve as an auxiliary tool for clinical decision-making rather than a replacement for clinical judgment. Future research and applications should prioritize model transparency, interpretability, and continuous monitoring of patient outcomes.