Abstract

Advancements in bioelectronic sensors and actuators have paved the way for real-time monitoring and control of the progression of wound healing. Real-time monitoring allows for precise adjustment of treatment strategies to align them with an individual’s unique biological response. However, due to the complexities of human–drug interactions and a lack of predictive models, it is challenging to determine how one should adjust drug dosage to achieve the desired biological response. This work proposes an adaptive closed-loop control framework that integrates deep learning, optimal control, and reinforcement learning to update treatment strategies in real time, with the goal of accelerating wound closure. The proposed approach eliminates the need for mathematical modeling of complex nonlinear wound-healing dynamics. We demonstrate the convergence of the controller via an in silico experimental setup, where the proposed approach successfully accelerated the wound-healing process by 17.71%. Finally, we share the experimental setup and results of an in vivo implementation to highlight the translational potential of our work. Our data-driven model suggests that the treatment strategy, as determined by our deep reinforcement learning algorithm, results in an accelerated onset of inflammation and subsequent transition to proliferation in a porcine wound model.

1. Introduction

Wound healing is a dynamic and continuous process that involves nonlinear interactions across different cell types (platelets, neutrophils, macrophages, myofibroblasts, fibroblasts, keratinocytes, and others) and bio-molecules (blood coagulation factors, pro- and anti-inflammatory cytokines, polymers, and enzymes of the extracellular matrix) [1]. These nonlinear processes can be modulated by different treatments administrated to the wound, which thus influence the healing process. In this context, personalized precision treatments have emerged as a vital research area in modern health care, driven by recent advancements in artificial intelligence [2,3,4]. The need for precision treatment arises from the fact that patients often exhibit varied responses to the same medication, a phenomenon that arises from both molecular differences between individuals and variations within the same patient over time [5]. Personalized treatment aims to identify the most effective drug types, dosages, and timings of administration for each patient’s unique responses, leveraging experimental data and statistical analysis [6].

In this work, we explore two treatment modalities: electric field (EF) therapy and fluoxetine (Flx), both of which have demonstrated efficacy in accelerating wound healing. Endogenous electric fields facilitate healing by promoting the migration of epidermal stem cells, which are vital for tissue repair [7]. Previous studies have shown that fluoxetine, when administered either systemically or topically, enhances wound healing in both diabetic and non-diabetic rodent models [8]. Moreover, fluoxetine has been found to inhibit pathogen growth, reduce biofilm formation, and limit the spread of infection in rodents [9]. More recent work confirms that fluoxetine suppresses inflammation and, as a consequence, can delay healing if applied too early [10,11,12]. Given this prior knowledge, our high-level strategy is prescriptive in that we initiate treatment with EF and then switch to fluoxetine as soon as sufficient levels of inflammation are detected. However, determining the optimal strength of the electric field and the optimal fluoxetine dosage presents a significant challenge, particularly when accounting for the nonlinear dynamics of cell migration induced by electric fields, drug metabolism, and the biological mechanisms targeted by the medication.

While mathematical models can help inform optimal treatment strategies [10,13], the complexity of biological systems and individual variability can undermine the reliability of model-based controllers. Even with accurate models, the inherent nonlinearity complicates the task of ensuring the optimality and safety of prescribed treatment strategies for wound care. Conversely, the study of linear systems is well established, providing scalable methods for designing, analyzing, controlling, and optimizing such systems [14,15]. Thus, we aim to learn a mapping from a nonlinear system to a linear one. Establishing a reliable mapping from nonlinear systems to linear models remains challenging. The Koopman operator theory, as discussed in foundational works [16,17,18], offers a promising approach by allowing a nonlinear system to be represented as an infinite-dimensional linear system. Yet, the optimization of this framework typically operates within functional space, which can be impractical in real-world applications and does not account for control inputs in nonlinear systems. Recent advances have sought to generalize Koopman operator theory to the control of nonlinear systems [19,20,21,22]. These extensions provide finite-dimensional function approximations that can facilitate the tractable control of nonlinear systems. This motivated us to design a deep neural network-based algorithm to learn a mapping from an unknown nonlinear system to its linear representation, an operation akin to the objectives of the Koopman operator. Our work builds upon previous research [21,22,23]. The major difference is that our approach addresses overfitting during training and does not rely on prior knowledge of a control–affine matrix. This work is coupled with optimal control and deep reinforcement learning (DRL) to optimize treatment strategies.

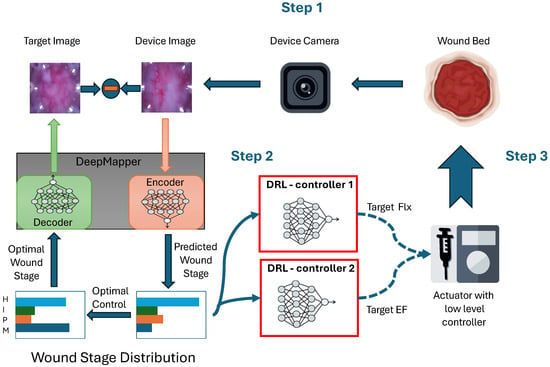

The main contribution of this paper is an adaptive closed-loop control framework using deep learning, optimal control, and reinforcement learning to enhance wound healing, as schematized in Figure 1. At the heart of the algorithm is DeepMapper, which provides an invertible mapping between a nonlinear state and a linear state. In this case, the nonlinear system is the temporal evolution of wound images, which are mapped to a linear system with four states representing the four canonical stages of wound healing: hemostasis (H), inflammation (I), proliferation (P), and maturation (M). DeepMapper also generates a linear ordinary differential equation that models the temporal evolution of these four wound-healing stages. The linear model is used to derive an optimal control law and the corresponding target nonlinear state (target image) to guide the deep reinforcement learning algorithm.0 That is, the error between the device and target image is used to update the deep reinforcement learning algorithm and adjust the applied EF strength or dosage of fluoxetine. The response of the wound to the treatment is captured in the next image, and the cycle repeats. This framework eliminates the need for mathematical modeling of nonlinear dynamics or the mechanism by which a treatment affects the wound-healing processes.

Figure 1.

The DRL-based closed-loop control pipeline for accelerating wound healing involves three steps. First, a wound image captured by a camera is fed into DeepMapper, which predicts the wound stage. The following four canonical wound stages are represented: hemostasis (H), inflammation (I), proliferation (P), and maturation (M). Second, the deep reinforcement learning (DRL) agents generate treatment strategies. Third, an electric field or a dose of fluoxetine is delivered to the wound through an actuator.

We generalized the DeepMapper method from [23], eliminating the need for a control–affine matrix. Our results demonstrate its ability to learn a linear representation of nonlinear wound-healing dynamics from a time-series of images monitoring wound closure in vivo. By leveraging optimal control and the decoder mechanism within DeepMapper, we derived an optimal reference signal that the DRL agent used to generate real-time treatment strategies. This approach not only improved the accuracy of the linear representation for modeling wound dynamics under optimal treatments but also enhanced the efficiency of the reinforcement learning agent. We validated the efficacy of our approach with experimental data. Simulations of nonlinear healing dynamics with adaptive interventions showed that the proposed method accelerated wound healing by 17.71% compared to the normal healing process. Additionally, the proposed framework has successfully been implemented in in vivo porcine experiments, demonstrating our method’s potential for translation to clinical settings [24]. The proposed framework showcases the significant potential to expedite wound healing by effectively integrating optimal control and data-driven methods. By leveraging advanced algorithms that adapt in real time to changing conditions, this system offers a more accurate and reliable means of promoting faster recovery that lacks the limitations of conventional models.

The remainder of the paper is organized as follows. Section 2 presents an overview of the closed-loop control framework and then the details of the design of the deep learning-based algorithm used to find the linear representation of nonlinear wound-healing dynamics, as well as a DRL-based algorithm for accelerating wound healing. Implementation details, simulation results, and a detailed discussion of these results are given in Section 3 and Section 4. Finally, in Section 5, we present conclusions and future research directions.

2. Materials and Methods

The control and optimization of wound-healing dynamics, particularly when one is devising the most effective treatment strategy, is challenging due to their inherently nonlinear nature. Previous work [21,22,23] has focused on finding linear representations of nonlinear systems from which optimal control can be derived. However, these approaches often require detailed knowledge of the control effects on the learned linear systems, such as a control–affine matrix, as in [23]. In practice, obtaining this information is often difficult, making it challenging to translate the learned linear system into actionable treatment parameters such as drug dosage or the strength of the electric field.

To address this gap, we employ a leader–follower strategy that is commonly used in robotic control by leveraging a decoder alongside a deep reinforcement learning (DRL) controller. This is made possible by DeepMapper, which both learns a linear representation of the nonlinear system and uses it to compute an optimal control strategy, bridging the gap between theoretical models and practical treatment parameters.

2.1. DeepMapper: Linearization of Nonlinear Wound-Healing Dynamics

Consider a nonlinear state-space model in discrete time, as follows:

The state space is a closed subset of , and the input (or action) space is finite, with cardinality , where . We may have state-dependent constraints, so that for each . there is a set for which is constrained to for each k.

Assume that there exists one unique equilibrium , which represents the full closure of the wound. This paper concerns finding an optimal control strategy such that the time from any initial state x to an equilibrium is minimized.

As discussed in [10], the function F can be an unmanageable nonlinear function that defines different cell transitions during wound healing. The nonlinear state x associated with wound healing as surveyed in the literature may include variables such as pH, temperature [25,26,27,28,29,30], or visual representations captured through images of the wound [31]. We assume that x can be measured by some sensor, but F is unknown to the control algorithm.

Solving for the optimal control policy is often difficult, particularly when the dynamics evolve nonlinearly. Given that extensive research and literature has been dedicated to the study of linear systems, encompassing scalable design, analysis, control, and optimization [14,32], we propose the utilization of a deep learning approach to model a linear system that best approximates the behavior of the underlying nonlinear system to guide control efforts. This builds on our previous work [23] to create a more generalized approach. The major differences are the following: 1. in this work, we do not assume knowledge of a control–affine matrix; 2. we formulate the problem in discrete time, thus removing the need to calculate the Jacobian matrix of a deep neural network and improving the efficiency of the algorithm.

Note that (1) defines a control–affine system. As discussed in [10,21], the decoupling of the states and inputs allows us to find a transformation of the states alone, as follows:

where is the state that evolves subject to nonlinear dynamics, is a function that maps the nonlinear state x to linear state z, and evolves linearly, as follows:

where , contains values that control the velocities of transitions from H (homeostasis) to I (inflammation), from I to P (proliferation), and from P to M (maturation).

Assume that ; with initial condition , there exists one unique equilibrium in the linear dynamics: , which represents a fully healed wound (100% maturation). Without loss of generality, we assume that . Thus, minimizing the time taken from any initial state x to an equilibrium is equivalent to minimizing the following:

with and .

The optimal-control problem for the linear dynamics (3) can be solved via the discrete-time algebraic Riccati equation, giving the optimal control law as follows:

which is referred to as the Linear Quadratic Regulator (LQR) [32].

The optimal control law in (4) informs only the transition rates between wound-healing stages required to minimize the time needed for maturation to reach 100%. However, it does not give any information on what treatments should be applied to the wound, such as what drug dosage should be used. Thus, we need a mechanism that can map the linear state z to the nonlinear state x to inform the real treatment u. In this paper, we adopted the updated DeepMapper architecture proposed in [33], where we employed three types of deep neural networks to parameterize the transformation function h, an inverse transformation function , and the two matrices and in (3).

In a system similar to that in [33], there are three high-level requirements for the neural networks, corresponding to the three types of loss function used in training:

1. Intrinsic coordinates that are useful for reconstruction. We seek to identify a few intrinsic coordinates where the dynamics evolve, along with the inverse so that the state x may be recovered. The reconstruction accuracy of the auto-encoder is achieved by minimizing the loss, as follows:

with as the mean-squared error, averaging over dimension then number of examples.

2. Prediction of future states. The intrinsic coordinates must enable prediction of future states. Specifically, we identified linear dynamics in the matrix . This corresponded to minimizing the loss, as follows:

3. Linear dynamics. To discover the mapping function h, we learned the linear dynamics A on the intrinsic coordinates, i.e., . Linear dynamics were achieved by minimizing the following loss, as below:

The final loss function is a weighted sum of , , and , as follows:

where with .

In biological systems, once a wound is created, the natural healing dynamics remain relatively stable over time, while the external environment affecting the wound can change frequently. This necessitates the design of a model where updates at a slower rate than ; we should also consider capturing the temporal relationship of the measurements and the delayed biological responses to changes in external conditions or treatments. Thus, is updated every N samples and is updated through all the samples within a window of size N through the attention mechanism detailed in [33].

Note that minimizing the loss L in (5) gives only an estimation of the linear representation of the nonlinear wound-healing dynamics through which an optimal control law can be derived. To derive the real optimal treatment strategy , in this paper, we propose the use of a DRL agent to track the reference signal incurred by (4) whenever it is available and penalize the DRL agent when it is not. We show that the control law learned by this DRL agent outperforms direct optimization over the nonlinear system without a learned mapping, achieving faster convergence and more stability.

For the in silico experiments, DeepMapper was trained on an augmented dataset originating from 256 wound images from mice. For in vivo experiments, the model was further trained on a collection of wound images from five in vivo experiments conducted on porcine models. Each of these porcine experiments contains a time series of images, with six images taken every 2 h for 3 days. Although this yielded approximately 900 images in total, we performed a downsampling step with each set of six images from each time point. This gave us a set of around 150 porcine images. For data augmentation, we conducted random rotation and added Gaussian noise.

2.2. Design of the Reinforcement Learning Algorithm

In this section, we introduce the use of a DRL algorithm to explore possible treatment policies such that when the optimal control input (4) is accessible, the DRL algorithm should be able to exploit its acquired knowledge to generate a policy that drives the linear state towards the one generated through optimal control. The exploration and exploitation of the DRL algorithm do not require knowledge of either nonlinear or linear dynamics, and thus, this approach not only alleviates the burden of mathematical interpretation in real-world treatment scenarios but significantly expedites the healing process. To realize this, we formulated the wound-healing dynamics as a Markov decision process (MDP) problem and subsequently solved it using the Advantage Actor Critic (A2C) algorithm [34].

Consider an MDP defined by , where represents the state space, represents the input/action space, P represents the transition-probability matrix, r represents the reward function, and represents the discount factor. In MDP, an autonomous agent makes sequential discrete-time decisions as time passes. Generally speaking, the MDP problem conforms to the decision-making process of physicians in wound care. Based on the state , the agent selects action at time k, then observes the next state and receives the reward . To collect more state information for wound management, the agent can perform state observation more frequently; for example, it may perform a state observation every hour and select an action every 20 min [10,31]. The state transits to the next state following the transition-probability matrix , which represents the dynamics of the operating environment. The transition-probability matrix satisfies the Markovian (or memoryless) property, since a transition to the next state depends only on the current state and action , rather than on a historical series of states and actions. The agent learns the optimal policy , which maps to optimal actions over trial-and-error interactions with the environment. Nevertheless, the transition-probability matrix and the probability distribution of the reward function are generally unknown in reality.

This article designed the actions, states, and rewards based on the characteristics of wound healing. The action in the algorithm indicates the EF (electric field) intensity or the dosage of the drug. The state x is an image of the wound. The reward r of the algorithm is the exponential of negative Euclidean distance between the next wound image and the image generated by the linear state, as follows:

where and is a hyperparameter that controls the magnitude of the reward.

2.3. Description of the Advantage Actor-Critic (A2C) Algorithm

This section introduces the A2C algorithm, a reinforcement learning optimization method based on the actor–critic (AC) framework [35]. The A2C algorithm differs from value-based algorithms in that it focuses on policy optimization. It combines two neural networks and employs an advantage function to assess action quality, enhancing the agent’s learning stability. The advantage function reduces variability in action selection, leading to more stable learning. Unlike the AC algorithm, the A2C algorithm incorporates an advantage function to stabilize the policy.

In the A2C algorithm, the actor selects actions based on the environment, then, using feedback from the critic, adjusts its action-selection probability. After the actor completes an action, it returns the new state and value to the critic, which computes the error and updates the action probabilities for the actor. This cycle continues until the termination condition is met.

The key formulas of the A2C algorithm are as follows. We represent the value of action u in a given state x as . This paper focuses on the execution of u when in state s and measures the reward of the current action based on the obtained results. represents the value of the subsequent state. The relationship between functions Q and V is described by the Bellman equation, as follows:

Denote the advantage A at state and as follows:

The advantage demonstrates the linear relationship between Q and V. This equation quantifies the advantages of an action compared with the average value in state x, promoting u balanced advantage value across the entire strategy. If the advantage value is less than 0, this action is inferior to the average and is not a good choice.

The gradient of the actor-network parameter update is calculated as follows:

where is the objective function of the actor network and is the probability of action output by the policy network in state x. in the formula represents the expected value when an action is selected according to the policy . We update the actor-network parameters through (9).

We insert (7) into (9) and obtain the following:

All decision values are calculated in a neural network, making the results more stable.

The steps for obtaining optimal solutions are summarized below in Algorithm 1. The optimal parameters for the neural networks are obtained using the Polyak–Ruppert averaging method, as follows: [36]:

where N denotes the total number of updates in the parameters and the interval with is known as the burn-in period; estimates from this period are abandoned to reduce the impact of transients in the early stages of training.

| Algorithm 1: Closed-loop control of wound healing with A2C. |

| Initialize DeepMapper; Optimize DeepMapper with historical wound images; Initialize actor and critic networks; while termination criteria not met do  |

| end |

2.4. Experiments in a Porcine Model

Here, we provide an overview of the in vivo experimental methods used to generate the results presented in this paper. Further details can be found in [24].

All swine experiments were conducted under the protocol approved by the University of California Davis (UC Davis) Institutional Animal Care and Use Committee (IACUC). Yorkshire mixed-breed female pigs between 70–80 lbs, approximately 4–5 months old, were used. The pigs were acclimated and trained for harness use with positive reinforcement (food treats) 7–10 days prior to the surgery (PMID: 35024682). Overnight fasting was applied with water available ad libitum before the surgery and the device change.

On the surgery day, the pigs were induced under general anesthesia, intubated endotracheally, and maintained under anesthesia using masked inhalation of isoflurane (1–5%). The back skin was clipped, depilated and prepared before being wounded. Six to eight round, full-thickness wounds (20 mm in diameter) were created on each pig. Device treatments (control or experimental devices) were administered to the cranial wounds, and the standard of care (no device, and the wounds sealed with Conformant 2 wound veil, Optifoam bolsters, and Tegaderm film dressings) was applied to the caudal wounds. The wounded area was covered with cushion foam sheets and a compression dressing/pig jacket to protect the skin of the back. A fentanyl patch as analgesic was applied for 72 h for post-operative pain management. Cycles of capturing photographs of the wounds were initiated when the device was connected to the power bank immediately after the surgery. The image data was acquired every 2 h and transmitted wirelessly to a laptop via a local Wi-Fi network for 24 h.

The in vivo experiment was conducted for 22 days, with daily wound examination and changes of the power banks to restart the device cycles. The devices were replaced on day 3 and removed on day 7 under anesthesia. Wound dressings were replaced every 3–4 days. At the endpoint of the experiment, the animals were euthanized, the devices were retrieved, and the wound tissue was harvested and bisected for histology analysis, as presented in [24].

3. Results

Wound-healing studies in humans have been limited by a scarcity of relevant in vivo and in vitro experimental models and the questionable practice of serial biopsies of human wounds, which are painful and may cause infection or scarring. Mouse models have an advantage in that mice are small, easy to handle, and relatively cheap. However, a high degree of phenotypic variability in skin exists between animal species, and the impact of these differences on wound healing has been recognized since at least 1915 [37]. Therefore, an accurate model of human wound healing should use an animal with skin with similar characteristics.

Porcine models have emerged as promising models in which to study wound healing, with over 1500 publications on the psychophysiology of various wound types. An advantage of using pigs is that they are anatomically and physiologically similar to humans and have been used to study many other diseases [38], such as diabetes, cardiovascular diseases, and infections. Similarities between pig and human skin also make pigs an appropriate model for the study of cutaneous wound healing. Like humans, they have a relatively thick epidermis, distinct rete pegs, dermal papillae, and dense elastic fibers in the dermis [39,40].

In this paper, we conducted one numerical study using mouse data and one experimental study in vivo with a porcine model.

3.1. Simulation Using Mouse Data

The mouse-image dataset used in this work was created as described in [41], and from that dataset, we generated circular-wound-only crops. The dataset includes wounds from eight mice (four from one cohort and four from another distinct cohort) that were imaged daily over sixteen days. Each mouse had two wounds, one on the left side and one on the right side. This process resulted in a total dataset of 256 images (8 mice × 2 wounds × 16 days). We split the dataset into training (224 images) and testing (32 images) subsets.

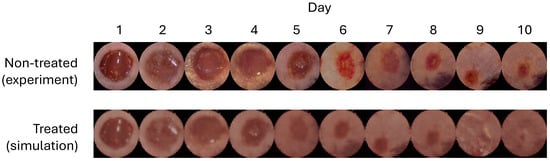

An example trajectory of testing images is shown in Figure 2(top). The natural healing process of mouse wounds typically spans approximately 10 to 11 days. In our study, we employed and , as detected by the DeepMapper model. The objective was to determine whether the proposed algorithm could accelerate the wound-healing process in mice.

Figure 2.

Trajectories of wound images. (Top): A sample trajectory of mouse-wound images in the testing dataset. It takes around 10 to 11 days for the mouse wound to heal. (Bottom): A sample trajectory of generated mouse-wound images using the control policy given by the DRL agents. On day 9, there were no obvious wound regions in the generated images.

Since no treatment was applied to the mouse wounds during data collection, remained unchanged. However, the components and in were modulated to new values, denoted as and , respectively. This modulation was achieved via a predefined function that simulated the effects of electrical fields and fluoxetine, as described by the following equations:

The goal was to optimize the control input such that the cumulative time in which the wound state is minimized. Here, represents the state of maturation, the last stage in wound healing.

To achieve this end, we trained DRL agents to derive the control policy using the reward function defined in (6) and the values of and predicted by DeepMapper from training images. The DRL agents were tested every 50 training episodes on the testing images, with 100 independent runs conducted using 100 different random seeds.

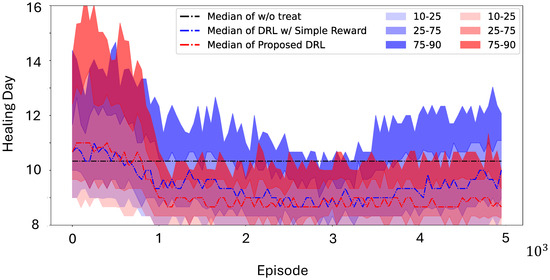

The results are presented in Figure 3 and show that the control policy derived by the DRL agents reduced the wound healing time to approximately 8.5 days, compared to 10.33 days for normal healing (17.71% faster). Moreover, the generated wound images in Figure 2(bottom) showed no visible wound regions by day 9, further validating the efficacy of the proposed method. Additionally, we compared this control policy with a policy optimized using a simpler reward function that directly targeted minimizing wound-healing time without employing the leader–follower tracking strategy proposed in this paper, as follows:

The blue curves in Figure 3 illustrate that this alternative approach not only converged more slowly than the proposed method but also exhibited higher variance. These findings highlight the effectiveness and robustness of the proposed DRL-based control strategy in expediting the wound-healing process.

Figure 3.

Learning curves of DRL agents over the mouse dataset with a predefined function of treatment effect, shown by percentile. Without any treatments, it would take 10.33 days for one mouse wound to heal. Our proposed method, shown in red curves, results a treatment policy that is more stable than the DRL agents implemented without the leader–follower strategy.

3.2. In Vivo Application

This work was further integrated into a 22 day in vivo porcine experiment in [24]. A total of six to ten wounds, each 20 mm in diameter, were created on the dorsum of each pig. These wounds were interfaced with a bioelectronic device with eight treatment-delivery channels: four channels were designed for fluoxetine delivery, and the rest were designed for the application of an electric field. Both the fluoxetine dosage and the intensity of the electric field were controlled via electric currents in the corresponding channels. The dosage of fluoxetine delivered from each channel was controlled by the current applied. The dosage can be calculated as follows:

where is the pump efficiency, is the Faraday constant, and g denotes the molecular weight of fluoxetine. In this application, we set , 96,485.3321 and g/mol. The wounds were also interfaced with a camera that took real-time images of the wound; based on these images, the proposed algorithm predicted wound stages and the DRL agent made decisions on the currents to be applied to control the dosage or intensity.

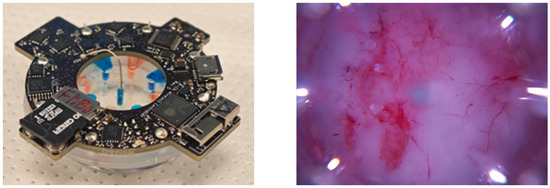

The real-time imaging system in [42,43] captured wound images every 2 h throughout the experiment. We fine-tuned DeepMapper to work with the device images. The wireless bioelectronic device and an image of a wound acquired by the device camera are shown in Figure 4. Note that the device images are substantially different than those taken with a standard camera, as they include wound-edge information and a different color spectrum. To preprocess the images, we subtracted [108.16, 61.49, 55.44] from the pixel values of the RGB channels in the wound image to reduce redness and downsampled the image to a specified shape (H: 128, W: 128, 3). The downsampling method we used is called the Lanczos algorithm [44]. Finally, we normalized pixel values to have zero mean and range from 0 to 1. The processed wound images were passed into DeepMapper for prediction of the wound’s healing stage and linear dynamics, based on which optimal reference signals were calculated via (4) and DeepMapper’s decoder.

Figure 4.

An image of EF+Flx combo actuators used in the in vivo experiments to deliver EF and fluoxetine (shown on the (left)) and a sample wound image captured by the device camera (shown on the (right)).

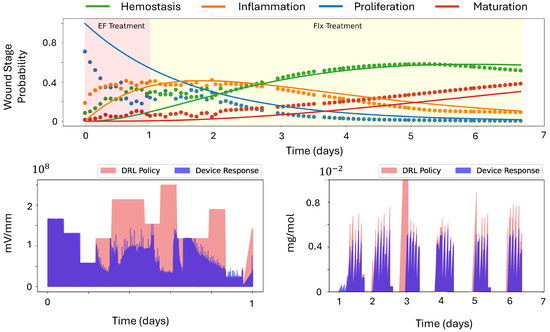

Figure 5 shows the wound-stage prediction and solutions to predicted linear dynamics. In the ideal case, predicted wound stage and the solution to the linear dynamics would align with each other. However, since DeepMapper was pretrained on historical wound images, its prior knowledge about wound evolution tended to dominate early predictions, leading to deviations from the actual linear evolution. For instance, we observed a mean squared error (MSE) of approximately 69.15% in the first-day predictions. As more data were collected over time, the dotted curves in Figure 5 increasingly aligned with the linear-dynamics solutions, with the MSE reducing to 3.71% by the end of the first day.

Figure 5.

Plots of first 7-day wound-stage predictions, with the solution to the learned linear dynamics and treatment policies generated by the DRL agents across the 22-day porcine experiment. (Top): Wound-stage (dotted curves) predictions and solutions to predicted linear dynamics (solid curves). (Bottom Left): Intensity of electric field. (Bottom Right): Fluoxetine delivery.

Two DRL agents then used the wound images to make dynamic adjustments to the currents, optimizing either fluoxetine dosage or electric-field intensity so that the cumulative reward (6) was maximized. In our experiment, only one DRL agent was active at a time, meaning that when DRL agent for the electric field was active, all other channels associated with fluoxetine delivery were shut off. Initially, we used the agent controlling the electric field; once the predicted probability of inflammation reached approximately 40% and started to decrease, we switched to the DRL agent for fluoxetine delivery.

On day 0, the wounds were created. These wounds were categorized into two groups: one consisting of wounds with both an actuator and a camera and subjected to DRL treatment, and one consisting of camera-only control wounds. Devices were applied to the wounds while the pigs were under anesthesia. The devices operated in closed-loop controlled-delivery mode for 22 h each day. Power banks were replaced daily during feeding. On day 3, the devices were replaced with new ones. The DRL agent for application of the electric field was initiated on day 0, and the switch to the DRL agent for fluoxetine was made in the middle of day 1, with fluoxetine treatment continuing until day 7. The strategies used to determine the applied intensity of electric field and dosage of fluoxetine are illustrated in Figure 5.

On day 7, the devices were removed and replaced with standard-of-care treatments, and the experiment continued until harvest on day 22. At that time, the animal was sacrificed and tissue samples were collected for analysis.

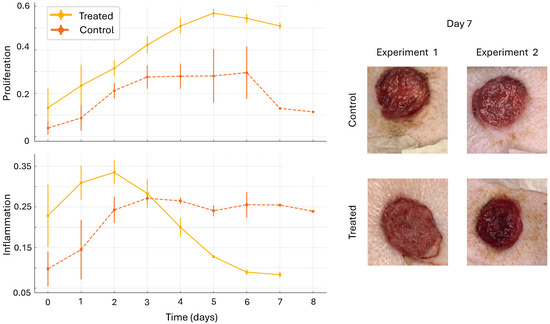

Figure 6 shows the comparison of control wounds with DRL-treated wounds. The results presented are from two experiments. In each experiment, there were two control wounds and two treated wounds. Multiple images were taken each day, and results were averaged across all wounds and experiments for days 1–7. Images from Day 7 are shown for visual comparison and validation of the model’s predictions. The bright red, shiny surface of the control wounds is an indicator of inflammation, while the softened edge of the treated wound serves as an indicator of proliferation. Thus, the DRL-based action supported the accelerated onset of inflammation and transition to proliferation. We note that the DRL algorithm resulted in a steady and constant delivery of fluoxetine. Previous work suggests that this is an optimal strategy and that it is more effective than bolus dosing [11,12]. This result suggests that the proposed algorithm provided a treatment strategy that effectively accelerated the healing process.

Figure 6.

Comparison of control wounds with DRL-treated wounds. The results presented are from two experiments. In each experiment, there were two control wounds and two treated wounds. Multiple images were taken each day, and results were averaged across all wounds and experiments for each day. Missing data points are omitted. Error bars indicate one standard deviation. Images from Day 7 are shown. The bright red and shiny surface of the control wounds is an indicator of inflammation, while the softened edge of the treated wound serves as an indicator of proliferation. Thus, the DRL-based action supported the accelerated onset of inflammation and transition to proliferation.

4. Discussion

The advent of new biotechnologies has introduced an array of sensors for biological systems, addressing a critical need in medicine to integrate these sensors into closed-loop control systems. However, the inherent complexity of biological processes poses a challenge for formulating precise mathematical models. Consequently, there is a growing demand for control algorithms that can operate effectively without relying on exact models. Although sensors provide valuable insights, their measurements offer only a partial view of the underlying dynamics of real biological systems.

Wound healing exemplifies a nonlinear biological process in which different cell types play distinct roles at various stages. In our study, we assumed the availability of a sensor capable of reflecting wound stages, as suggested in [31], with the sensor data being approximately modeled by a linear system of ordinary differential equations (ODEs). Despite the convenience of using linear models, discrepancies between sensor measurements and the actual biological processes involved in wound healing reveal the limitations of linear systems for capturing the underlying nonlinear dynamics. Nevertheless, we demonstrate that nonlinear systems can be effectively monitored and controlled using observations derived from linear approximations. This approach shows promise for a wide range of nonlinear biological processes where sensors exist, but accurate mathematical models are difficult to construct.

It is also important to note that more advanced deep reinforcement learning (DRL) algorithms can further enhance control strategies and improve sampling efficiency. For instance, in large state and action spaces, Actor-Critic (A2C) methods may suffer from slow convergence and susceptibility to local minima. More advanced approaches, such as Asynchronous Advantage Actor-Critic (A3C) [34], can leverage parallelism to mitigate these issues. However, these algorithms still struggle with convergence due to the nonconvex nature of their objective functions. The integration of convex optimization techniques, as explored in [45], holds potential for overcoming this limitation.

The in vivo experimental results presented in this paper, specifically the acceleration of wound healing, were measured using predictions from DeepMapper. While our findings suggest significant differences between treated and control wounds, further studies are required. Additional experiments and data collection are necessary to fine-tune the deep learning models used in the proposed approach. Expanding the dataset, particularly to include more images of treated wounds (many of the images were from control experiments) would help strengthen the model’s accuracy and generalizability. Moreover, biological analysis of the wounds by experts is needed to substantiate the results. However, due to the prolonged nature of the wound-healing process, these experiments can be both time-consuming and expensive.

Our primary objective is to propose an adaptive learning framework that combines deep learning, optimal control, and deep reinforcement learning to accelerate wound healing. Future work will focus on designing more efficient DRL algorithms tailored to these goals.

5. Conclusions

In this paper, we propose an adaptive closed-loop control framework for a nonlinear dynamical system. The controller integrates deep learning, optimal control, and reinforcement learning, aiming to accelerate nonlinear biological processes such as wound healing without the need for mathematical modeling. We have demonstrated that the proposed method converges to a treatment strategy that improves wound-healing time with no mechanistic model of wound healing or knowledge about the mechanism by which the drug or EF affects biological processes.

In the future, we aim to enhance the algorithm’s performance by reducing the action space and minimizing the selection of prohibited actions, which requires integrating insight into the biology. Additionally, we will explore the application of alternative reinforcement learning algorithms to achieve significant enhancements [34,45]. Before these approaches can be considered for use in clinical practice, future work must also include safety guarantees that are wrapped around the reinforcement learning algorithm. The cost of the device is also of concern. One advantage is that there is a minimal need to monitor the device, since it has the potential to communicate wirelessly with a handheld device. For example, for the in vivo experiments, the activity of the device and acquired images can be visualized and processed in real time.

Author Contributions

Conceptualization, F.L., M.R., R.I., M.T. (Mircea Teodorescu) and M.G.; methodology, F.L., K.Z., P.B., H.L., N.A., W.S.H., M.R., M.T. (Mircea Teodorescu) and M.G.; software, F.L., P.B. and N.R.; validation, F.L., R.I., M.R. and M.G.; formal analysis, F.L.; investigation, F.L., P.B., H.L., N.R., N.A., W.S.H., M.T. (Maryam Tebyani), K.S., C.F. and M.B.; resources, R.I., M.T. (Mircea Teodorescu), M.R. and M.G.; data curation, M.B., F.L., P.B., N.A., C.F. and W.S.H.; writing—original draft preparation, F.L., M.G. and K.Z.; writing—review and editing, all; visualization, F.L. and P.B.; supervision, M.G., R.I., M.T. (Mircea Teodorescu) and M.R.; project administration, M.R. and R.I.; funding acquisition, M.R. All authors have read and agreed to the published version of the manuscript.

Funding

This study was funded by the Office of Naval Research (ONR) under Grant Number N00014-23-1-2729 and by the Defense Advanced Research Projects Agency (DARPA) and the Advanced Research Projects Agency for Health (ARPA-H) through Cooperative Agreement D20AC00003 awarded by the US Department of the Interior (DOI), Interior Business Center. The content of the information does not necessarily reflect the position or the policy of the government, and no official endorsement should be inferred. The porcine wound images were captured by Da Vinci Services.

Institutional Review Board Statement

Here we present animal studies that were conducted as part of studies published in other papers. The procedure for swine care and the wounding surgery were derived from the guidelines of the Association for Assessment and Accreditation of Laboratory Animal Care and the NIH Guide for the Care and Use of Laboratory Animals, reviewed, and approved by the UC Davis Campus Veterinary Services, and the UC Davis Institutional Animal Care and Use Committee.

Data Availability Statement

All data is to be made publicly available through other referenced papers. The model and algorithm will be made available through GitHub upon publication (https://github.com/Fan-Lu/EnhancingWoundHealingUsingDRL).

Acknowledgments

The authors would like to acknowledge all lab members from the research groups of Gomez, Rolandi, Teodorescu, Isseroff, and Zao, who were critical to realizing the large collaborative endeavor that this work is a part of.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Portou, M.; Baker, D.; Abraham, D.; Tsui, J. The innate immune system, toll-like receptors and dermal wound healing: A review. Vasc. Pharmacol. 2015, 71, 31–36. [Google Scholar] [CrossRef]

- Zlobina, K.; Jafari, M.; Rolandi, M.; Gomez, M. The role of machine learning in advancing precision medicine with feedback control. Cell Rep. Phys. Sci. 2022, 3, 101149. [Google Scholar] [CrossRef]

- Dias, R.; Torkamani, A. Artificial intelligence in clinical and genomic diagnostics. Genome Med. 2019, 11, 70. [Google Scholar] [CrossRef]

- Krittanawong, C.; Zhang, H.; Wang, Z.; Aydar, M.; Kitai, T. Artificial Intelligence in Precision Cardiovascular Medicine. J. Am. Coll. Cardiol. 2017, 69, 2657–2664. [Google Scholar] [CrossRef]

- Roden, D.M.; George, A.L., Jr. The genetic basis of variability in drug responses. Nat. Rev. Drug Discov. 2002, 1, 37–44. [Google Scholar] [CrossRef]

- Bielinski, S.J.; Olson, J.E.; Pathak, J.; Weinshilboum, R.M.; Wang, L.; Lyke, K.J.; Ryu, E.; Targonski, P.V.; Van Norstrand, M.D.; Hathcock, M.A.; et al. Preemptive genotyping for personalized medicine: Design of the right drug, right dose, right time—Using genomic data to individualize treatment protocol. In Proceedings of the Mayo Clinic Proceedings; Elsevier: Amsterdam, The Netherlands, 2014; Volume 89, pp. 25–33. [Google Scholar]

- Zhao, M. Electrical fields in wound healing—An overriding signal that directs cell migration. In Proceedings of the Seminars in Cell & Developmental Biology; Elsevier: Amsterdam, The Netherlands, 2009; Volume 20, pp. 674–682. [Google Scholar]

- Farahani, R.M.Z.; Sadr, K.; Rad, J.S.; Mesgari, M. Fluoxetine enhances cutaneous wound healing in chronically stressed Wistar rats. Adv. Ski. Wound Care 2007, 20, 157–165. [Google Scholar] [CrossRef]

- Yoon, D.J.; Nguyen, C.; Bagood, M.D.; Fregoso, D.R.; Yang, H.y.; Lopez, A.I.M.; Crawford, R.W.; Tran, J.; Isseroff, R.R. Topical fluoxetine as a potential nonantibiotic adjunctive therapy for infected wounds. J. Investig. Dermatol. 2021, 141, 1608–1612. [Google Scholar] [CrossRef]

- Zlobina, K.; Xue, J.; Gomez, M. Effective spatio-temporal regimes for wound treatment by way of macrophage polarization: A mathematical model. Front. Appl. Math. Stat. 2022, 8, 791064. [Google Scholar] [CrossRef]

- Li, H.; Asefifeyzabadi, N.; Schorger, K.; Baniya, P.; Yang, H.y.; Tebyani, M.; Barbee, A.; Hee, W.S.; Gallegos, A.; Zhu, K.; et al. Remote-Controlled Wireless Bioelectronics for Fluoxetine Therapy to Promote Wound Healing in a Porcine Model. Adv. Mater. Technol. 2024, 70039. [Google Scholar] [CrossRef]

- Gallegos, A.; Li, H.; Yang, H.y.; Villa-Martinez, G.; Bazzi, I.; Sathyanarayanan, S.; Asefifeyzabadi, N.; Baniya, P.; Hee, W.S.; Siadat, M.; et al. Fluoxetine Delivery for Wound Treatment Through an Integrated Bioelectronic Device–Pharmacokinetic Parameters and Safety Profile in Swine. bioRxiv 2025. [Google Scholar] [CrossRef]

- Gholami, B.; Haddad, W.M.; Bailey, J.M.; Tannenbaum, A.R. Optimal drug dosing control for intensive care unit sedation by using a hybrid deterministic–stochastic pharmacokinetic and pharmacodynamic model. Optim. Control Appl. Methods 2013, 34, 547–561. [Google Scholar] [CrossRef]

- Van Overschee, P.; De Moor, B. Subspace Identification for Linear Systems: Theory—Implementation—Applications; Springer Science & Business Media: Heidelberg, Germany, 2012. [Google Scholar]

- Paraskevopoulos, P.N. Modern Control Engineering; CRC Press: Boca Raton, FL, USA, 2017. [Google Scholar]

- Koopman, B.O. Hamiltonian systems and transformation in Hilbert space. Proc. Natl. Acad. Sci. USA 1931, 17, 315–318. [Google Scholar] [CrossRef]

- Mezić, I.; Banaszuk, A. Comparison of systems with complex behavior. Phys. Nonlinear Phenom. 2004, 197, 101–133. [Google Scholar] [CrossRef]

- Mezić, I. Spectral properties of dynamical systems, model reduction and decompositions. Nonlinear Dyn. 2005, 41, 309–325. [Google Scholar] [CrossRef]

- Proctor, J.L.; Brunton, S.L.; Kutz, J.N. Generalizing Koopman theory to allow for inputs and control. Siam J. Appl. Dyn. Syst. 2018, 17, 909–930. [Google Scholar] [CrossRef]

- Lian, Y.; Wang, R.; Jones, C.N. Koopman based data-driven predictive control. arXiv 2021, arXiv:2102.05122. [Google Scholar]

- Kaiser, E.; Kutz, J.N.; Brunton, S.L. Data-driven discovery of Koopman eigenfunctions for control. Mach. Learn. Sci. Technol. 2021, 2, 035023. [Google Scholar] [CrossRef]

- Ahmed, A.; del Rio-Chanona, E.A.; Mercangöz, M. Learning Linear Representations of Nonlinear Dynamics Using Deep Learning. IFAC-PapersOnLine 2022, 55, 162–169. [Google Scholar] [CrossRef]

- Lu, F.; Zlobina, K.; Rondoni, N.A.; Teymoori, S.; Gomez, M. Enhancing wound healing through deep reinforcement learning for optimal therapeutics. R. Soc. Open Sci. 2024, 11, 240228. [Google Scholar] [CrossRef]

- Li, H.; Yang, H.y.; Lu, F.; Hee, W.S.; Asefifeyzabadi, N.; Baniya, P.; Gallegos, A.; Schorger, K.; Zhu, K.; Recendez, C.; et al. Real-time diagnostics and personalized wound therapy powered by AI and bioelectronics. medRxiv 2025. [Google Scholar] [CrossRef]

- Melai, B.; Salvo, P.; Calisi, N.; Moni, L.; Bonini, A.; Paoletti, C. A graphene oxide pH sensor for wound monitoring. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1898–1901. [Google Scholar]

- Seo, H.; Lim, H.; Lim, T.; Seo, K.; Yang, J.; Kang, Y.; Han, S.; Ju, S.; Jeong, S. Facile and cost-effective fabrication of wearable alpha-naphtholphthalein-based halochromic sensor for wound pH monitoring. Nanotechnology 2024, 35, 245502. [Google Scholar] [CrossRef]

- Schreml, S.; Meier, R.; Weiß, K.; Cattani, J.; Flittner, D.; Gehmert, S.; Wolfbeis, O.; Landthaler, M.; Babilas, P. A sprayable luminescent pH sensor and its use for wound imaging in vivo. Exp. Dermatol. 2012, 21, 951–953. [Google Scholar] [CrossRef]

- Mirani, B.; Hadisi, Z.; Pagan, E.; Dabiri, S.; van Rijt, A.; Almutairi, L.; Noshadi, I.; Armstrong, D.; Akbari, M. Smart Dual-Sensor Wound Dressing for Monitoring Cutaneous Wounds. Adv. Healthc. Mater. 2023, 12, e2203233. [Google Scholar] [CrossRef]

- Zheng, X.; Yang, Z.; Sutarlie, L.; Thangaveloo, M.; Yu, Y.; Salleh, N.; Chin, J.; Xiong, Z.; Becker, D.; Loh, X.; et al. Battery-free and AI-enabled multiplexed sensor patches for wound monitoring. Sci. Adv. 2023, 9, eadg6670. [Google Scholar] [CrossRef]

- Tang, N.; Zheng, Y.; Jiang, X.; Zhou, C.; Jin, H.; Jin, K.; Wu, W.; Haick, H. Wearable Sensors and Systems for Wound Healing-Related pH and Temperature Detection. Micromachines 2021, 12, 430. [Google Scholar] [CrossRef]

- Carrión, H.; Jafari, M.; Yang, H.Y.; Isseroff, R.R.; Rolandi, M.; Gomez, M.; Norouzi, N. HealNet-Self-Supervised Acute Wound Heal-Stage Classification. In Proceedings of the International Workshop on Machine Learning in Medical Imaging; Springer: Heidelberg, Germany, 2022; pp. 446–455. [Google Scholar]

- Ogata, K. Modern Control Engineering, 5th ed.; Pearson: Tokyo, Japan, 2010. [Google Scholar]

- Lu, F.; Zlobina, K.; Osorio, S.; Yang, H.Y.; Nava, A.; Bagood, M.D.; Rolandi, M.; Isseroff, R.R.; Gomez, M. DeepMapper: Attention-based autoencoder for system identification in wound healing and stage prediction. bioRxiv 2024. [Google Scholar]

- Mnih, V.; Badia, A.P.; Mirza, M.; Graves, A.; Lillicrap, T.; Harley, T.; Silver, D.; Kavukcuoglu, K. Asynchronous methods for deep reinforcement learning. Proc. Int. Conf. Mach. Learn. PMLR 2016, 48, 1928–1937. [Google Scholar]

- Sutton, R.S.; McAllester, D.; Singh, S.; Mansour, Y. Policy gradient methods for reinforcement learning with function approximation. Adv. Neural Inf. Process. Syst. 1999, 12, 1057–1063. [Google Scholar]

- Polyak, B.T.; Juditsky, A.B. Acceleration of stochastic approximation by averaging. Siam J. Control Optim. 1992, 30, 838–855. [Google Scholar] [CrossRef]

- Spain, K.C. The relation between the structure of the epidermis of the rat and the guinea pig, and the proliferative power of normal and regenerating epithelial cells of the same species. J. Exp. Med. 1915, 21, 193–202. [Google Scholar] [CrossRef]

- Sullivan, T.P.; Eaglstein, W.H.; Davis, S.C.; Mertz, P. The pig as a model for human wound healing. Wound Repair Regen. 2001, 9, 66–76. [Google Scholar] [CrossRef]

- Lindblad, W.J. Considerations for selecting the correct animal model for dermal wound-healing studies. J. Biomater. Sci. Polym. Ed. 2008, 19, 1087–1096. [Google Scholar] [CrossRef]

- William Montagna, P.D.; Yen, J.S. The skin of the domestic pig. J. Investig. Dermatol. 1963, 42, 11–21. [Google Scholar]

- Carrión, H.; Jafari, M.; Bagood, M.D.; Yang, H.y.; Isseroff, R.R.; Gomez, M. Automatic wound detection and size estimation using deep learning algorithms. Plos Comput. Biol. 2022, 18, e1009852. [Google Scholar] [CrossRef]

- Hee, W.S.; Tebyani, M.; Baniya, P.; Franco, C.; Keller, G.; Lu, F.; Li, H.; Asefifeyzabadi, N.; Yang, H.y.; Villa-Martinez, G.; et al. Design and validation of a wearable imaging system for automated wound monitoring in porcine model. bioRxiv 2024. [Google Scholar] [CrossRef]

- Baniya, P.; Tebyani, M.; Hee, W.S.; Li, H.; Asefifeyzabadi, N.; Yang, H.y.; Zhu, K.; Keller, G.; Franco, C.; Schorger, K.; et al. Wireless bioelectronic device for wound healing. bioRxiv 2024. [Google Scholar]

- Cullum, J.K.; Willoughby, R.A. Lanczos Algorithms for Large Symmetric Eigenvalue Computations: Vol. I: Theory; SIAM: Philadelphia, PA, USA, 2002. [Google Scholar]

- Lu, F.; Meyn, S. Convex Q Learning in a Stochastic Environment: Extended Version. arXiv 2023, arXiv:2309.05105. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).