Identification and Patient Benefit Evaluation of Machine Learning Models for Predicting 90-Day Mortality After Endovascular Thrombectomy Based on Routinely Ready Clinical Information

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Population

2.2. Study Variables and Missing Data

2.3. Statistical Analysis

2.4. Model Development

3. Results

3.1. Study Population

3.2. Model Development

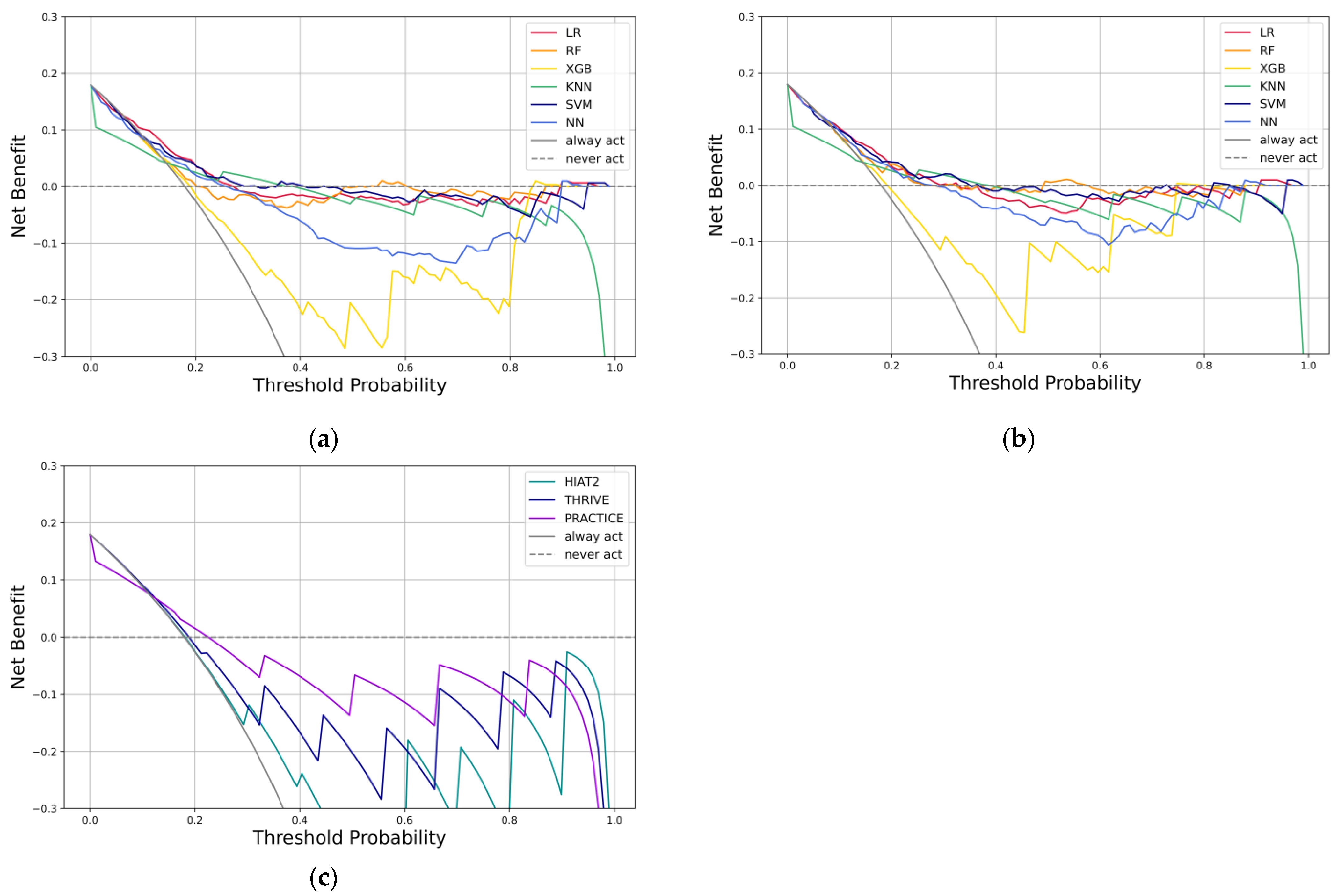

3.3. Model Performance

3.4. Explanatory Analysis

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AIS | Acute ischemic stroke |
| EVT | Endovascular thrombectomy |
| LVO | Large vessel occlusion |
| ML | Machine learning |
| HIAT | Houston Intra-Arterial recanalization |
| THRIVE | Totaled Health Risks In Vascular Events |
| PLAN | Preadmission comorbidities, Level of consciousness, Age, and Neurologic deficit |
| PREMISE | Predicting Early Mortality of Ischemic Stroke |
| TOAST | Trial of Org 10172 in Acute Stroke Treatment |
| PRACTICE | Predicting 90-day mortality of AIS with MT |
| NIHSS | National Institutes of Health Stroke Scale |
| ASPECTS | Alberta Stroke Program Early CT Score |
| LR | Logistic regression |
| PYNEH | Pamela Youde Nethersole Eastern Hospital |
| NCCT | Non-contrast computed tomography |
| CTA | Computed tomography angiography |
| IVT | Intravenous thrombolysis |
| AI | Artificial intelligence |
| MICE | Multiple Imputation by Chained Equations |
| AUC | Area under the receiver operating characteristic curve |
| AUPRC | Area under the precision-recall curve |
| MCC | Matthews correlation coefficient |
| RF | Random forest |
| XGB | Extreme gradient boosting |
| KNN | K-nearest neighbor |
| SVM | Support vector machine |
| NN | Neural network |
| SMOTE | Synthetic Minority Oversampling Technique |
| SHAP | Shapley Additive Explanations |
| IQR | Interquartile range |
| SD | Standard deviation |
| ICA | Internal carotid artery |
| BA | Basilar artery |
| CI | Confidence interval |
| PPV | Positive predictive value |
| NPV | Negative predictive value |
| AHA/ASA | American Heart Association/American Stroke Association |

| Variables | Overall (n = 151) | 90-Day Mortality (n = 39) | No 90-Day Mortality (n = 112) | p-Value |
|---|---|---|---|---|
| Age, years, median (IQR) | 78 (69–85) | 84 (77.5–87.5) | 75 (68.75–83) | <0.001 |
| Male, n (%) | 57 (37.7) | 14 (35.9) | 43 (38.4) | 0.782 |
| Baseline NIHSS, median (IQR) | 23 (18.5–27) | 25 (20–28) | 23 (18–27) | 0.038 |
| Admission SBP, mmHg, mean (SD) | 156.73 (27.92) | 165.51 (28.39) | 153.67 (27.23) | 0.022 |
| Admission blood glucose level, median (IQR) | 7.2 (6.2–8.9) | 7.7 (6.2–9.25) | 7.2 (6.175–8.75) | 0.494 |
| History of previous stroke, n (%) | 34 (22.5) | 9 (23.1) | 25 (22.3) | 0.923 |
| Atrial fibrillation, n (%) | 78 (51.7) | 22 (56.4) | 56 (50.0) | 0.490 |
| Hypertension, n (%) | 102 (67.5) | 27 (69.2) | 75 (67.0) | 0.795 |
| Diabetes mellitus, n (%) | 37 (24.5) | 8 (20.5) | 29 (25.9) | 0.501 |
| ASPECTS, median (IQR) | 8 (6–9) | 7 (5–8) | 8 (6–9) | 0.002 |
| ICA/BA occlusion, n (%) | 57 (37.7) | 20 (51.3) | 37 (33.0) | 0.043 |
| Bridging IVT, n (%) | 72 (47.7) | 20 (51.3) | 52 (46.4) | 0.601 |
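The p-values for the categorical rows can be checked from the counts alone. The sketch below assumes they come from Pearson's chi-squared test without continuity correction, which the table itself does not state; the function name `chi2_2x2` is illustrative only. Applied to the ICA/BA occlusion row (20 of 39 versus 37 of 112), it gives a p-value close to the tabulated 0.043.

```python
import math


def chi2_2x2(a, b, c, d):
    """Pearson chi-squared test (1 degree of freedom, no continuity
    correction) for the 2x2 table [[a, b], [c, d]].

    Assumption: the source does not name the test behind its p-values.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # For 1 degree of freedom the upper-tail probability is erfc(sqrt(x / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# ICA/BA occlusion row: counts with the feature and group sizes from the table.
died_with, died_total = 20, 39
survived_with, survived_total = 37, 112

chi2, p = chi2_2x2(
    died_with, died_total - died_with,
    survived_with, survived_total - survived_with,
)
print(f"chi2 = {chi2:.3f}, p = {p:.3f}")
```

The same function can be applied to the other n (%) rows; small cell counts may call for Fisher's exact test instead, which is not shown here.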

| Algorithm | AUC (95% CI) | AUPRC | Balanced Accuracy | F1 Score | MCC | Brier Score |
|---|---|---|---|---|---|---|
| LR (model I) | 0.691 (0.637–0.742) | 0.387 | 0.600 | 0.340 | 0.234 | 0.150 |
| RF (model I) | 0.643 (0.587–0.696) | 0.327 | 0.596 | 0.329 | 0.253 | 0.156 |
| XGB (model I) | 0.606 (0.550–0.661) | 0.299 | 0.594 | 0.344 | 0.147 | 0.245 |
| KNN (model I) | 0.603 (0.546–0.657) | 0.328 | 0.558 | 0.244 | 0.161 | 0.163 |
| SVM (model I) | 0.668 (0.613–0.720) | 0.368 | 0.606 | 0.352 | 0.257 | 0.146 |
| NN (model I) | 0.643 (0.588–0.697) | 0.344 | 0.568 | 0.299 | 0.125 | 0.187 |
| LR (model II) | 0.712 (0.658–0.761) | 0.417 | 0.609 | 0.358 | 0.228 | 0.149 |
| RF (model II) | 0.689 (0.634–0.740) | 0.365 | 0.616 | 0.372 | 0.301 | 0.145 |
| XGB (model II) | 0.640 (0.584–0.694) | 0.339 | 0.608 | 0.358 | 0.181 | 0.215 |
| KNN (model II) | 0.603 (0.547–0.658) | 0.337 | 0.554 | 0.238 | 0.145 | 0.163 |
| SVM (model II) | 0.705 (0.651–0.755) | 0.421 | 0.618 | 0.375 | 0.270 | 0.143 |
| NN (model II) | 0.702 (0.648–0.752) | 0.425 | 0.652 | 0.418 | 0.270 | 0.167 |
| HIAT2 | 0.717 (0.664–0.766) | 0.402 | 0.704 | 0.474 | 0.340 | 0.292 |
| THRIVE | 0.688 (0.634–0.739) | 0.351 | 0.589 | 0.331 | 0.158 | 0.236 |
| PRACTICE | 0.611 (0.554–0.665) | 0.273 | 0.581 | 0.324 | 0.137 | 0.194 |
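For readers less familiar with the threshold-based columns above, the following is a minimal sketch of how balanced accuracy, F1 score, MCC, and the Brier score are conventionally defined. It uses the standard textbook formulas, not the authors' code, and the counts and probabilities in the example are hypothetical values chosen for easy arithmetic, not study data.

```python
import math


def confusion_metrics(tp, fp, fn, tn):
    """Balanced accuracy, F1 score, and MCC from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # recall on the positive (death) class
    specificity = tn / (tn + fp)   # recall on the negative class
    precision = tp / (tp + fp)     # positive predictive value
    balanced_accuracy = (sensitivity + specificity) / 2
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return {"balanced_accuracy": balanced_accuracy, "f1": f1, "mcc": mcc}


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


# Hypothetical counts and probabilities, for illustration only.
metrics = confusion_metrics(tp=40, fp=10, fn=20, tn=30)
print({name: round(value, 3) for name, value in metrics.items()})
print(round(brier_score([1, 0, 0, 1], [0.8, 0.2, 0.4, 0.6]), 3))
```

Balanced accuracy, F1, and MCC depend on the chosen classification threshold, whereas the Brier score is computed directly from predicted probabilities, so lower values indicate better probabilistic accuracy.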

| | HIAT2—0 | HIAT2—1 | Total | p-Value |
|---|---|---|---|---|
| SVM—0 | 191 | 43 | 234 (91.4%) | |
| SVM—1 | 5 | 17 | 22 (8.6%) | |
| Total | 196 (76.6%) | 60 (23.4%) | 256 | p < 0.0001 |
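The cross-tabulation above pairs the SVM and HIAT2 classifications of the same 256 cases, so the two methods disagree in 43 + 5 = 48 of them. The table does not say which test produced the reported p-value. As a sketch under the assumption that a paired test such as McNemar's is appropriate, the discordant counts can be evaluated as follows; the function names are illustrative.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value from the discordant counts b and c.

    Under the null hypothesis, each discordant pair falls in either
    off-diagonal cell with probability 0.5 (binomial test).
    """
    n = b + c
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def mcnemar_chi2(b, c):
    """McNemar chi-squared statistic without continuity correction."""
    return (b - c) ** 2 / (b + c)


# Discordant cells from the table: SVM-0/HIAT2-1 = 43, SVM-1/HIAT2-0 = 5.
b, c = 43, 5
print(f"chi2 = {mcnemar_chi2(b, c):.2f}, exact p = {mcnemar_exact(b, c):.2e}")
```

Under this assumption the result falls below 0.0001, in line with the tabulated value, although a chi-squared test of independence on the same table would also be significant and cannot be ruled out as the authors' choice.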

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ng, A.T.H.; Chan, L.W.C. Identification and Patient Benefit Evaluation of Machine Learning Models for Predicting 90-Day Mortality After Endovascular Thrombectomy Based on Routinely Ready Clinical Information. Bioengineering 2025, 12, 468. https://doi.org/10.3390/bioengineering12050468

Ng ATH, Chan LWC. Identification and Patient Benefit Evaluation of Machine Learning Models for Predicting 90-Day Mortality After Endovascular Thrombectomy Based on Routinely Ready Clinical Information. Bioengineering. 2025; 12(5):468. https://doi.org/10.3390/bioengineering12050468

Chicago/Turabian StyleNg, Andrew Tik Ho, and Lawrence Wing Chi Chan. 2025. "Identification and Patient Benefit Evaluation of Machine Learning Models for Predicting 90-Day Mortality After Endovascular Thrombectomy Based on Routinely Ready Clinical Information" Bioengineering 12, no. 5: 468. https://doi.org/10.3390/bioengineering12050468

APA StyleNg, A. T. H., & Chan, L. W. C. (2025). Identification and Patient Benefit Evaluation of Machine Learning Models for Predicting 90-Day Mortality After Endovascular Thrombectomy Based on Routinely Ready Clinical Information. Bioengineering, 12(5), 468. https://doi.org/10.3390/bioengineering12050468