Bringing Precision to Pediatric Care: Explainable AI in Predicting No-Show Trends Before and During the COVID-19 Pandemic

Abstract

1. Introduction

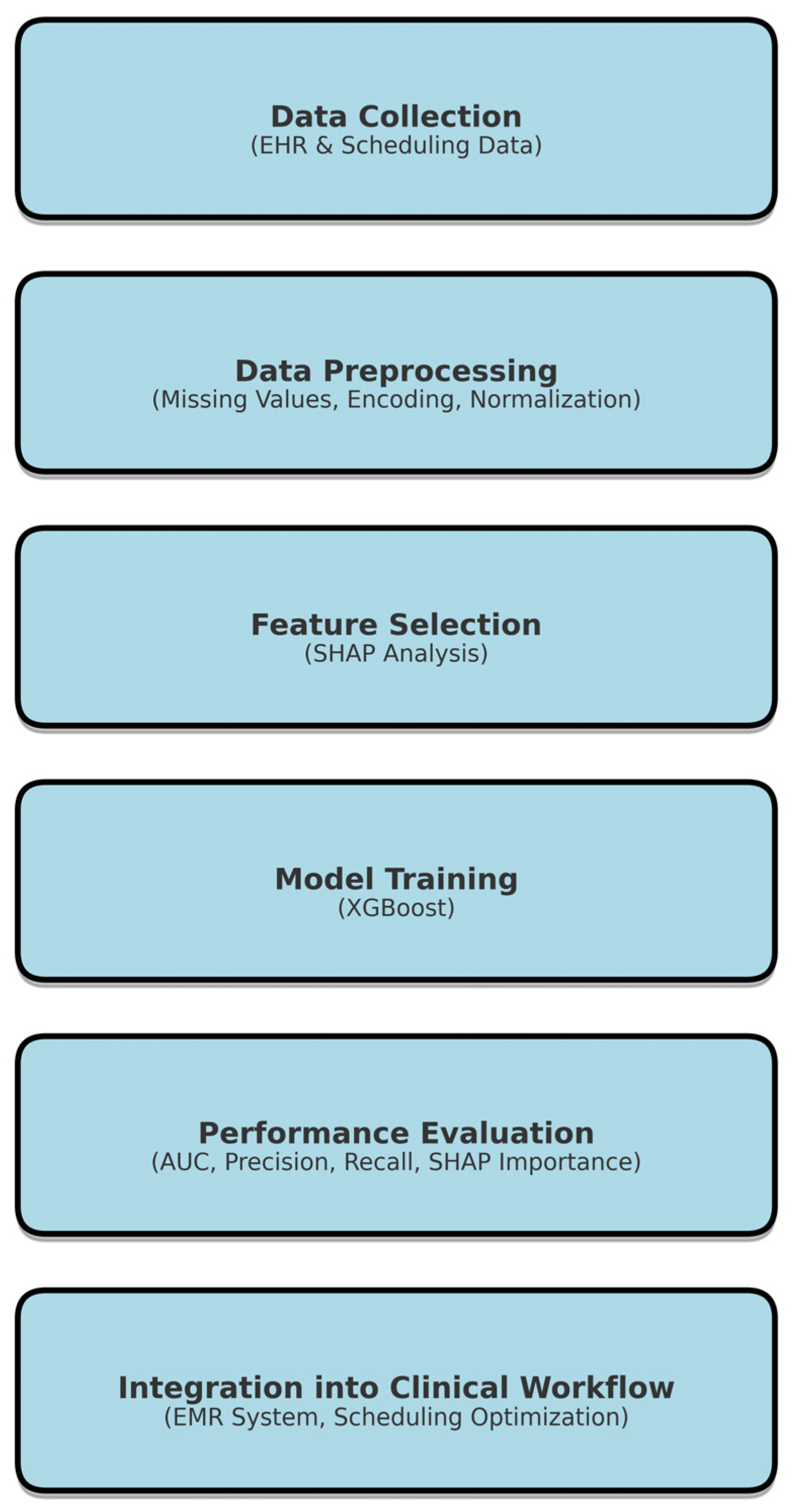

2. Methods

2.1. Ethical Approval

2.2. Study Population and Data Preprocessing

2.3. Machine Learning Algorithm Development

2.4. Performance Evaluation Metrics

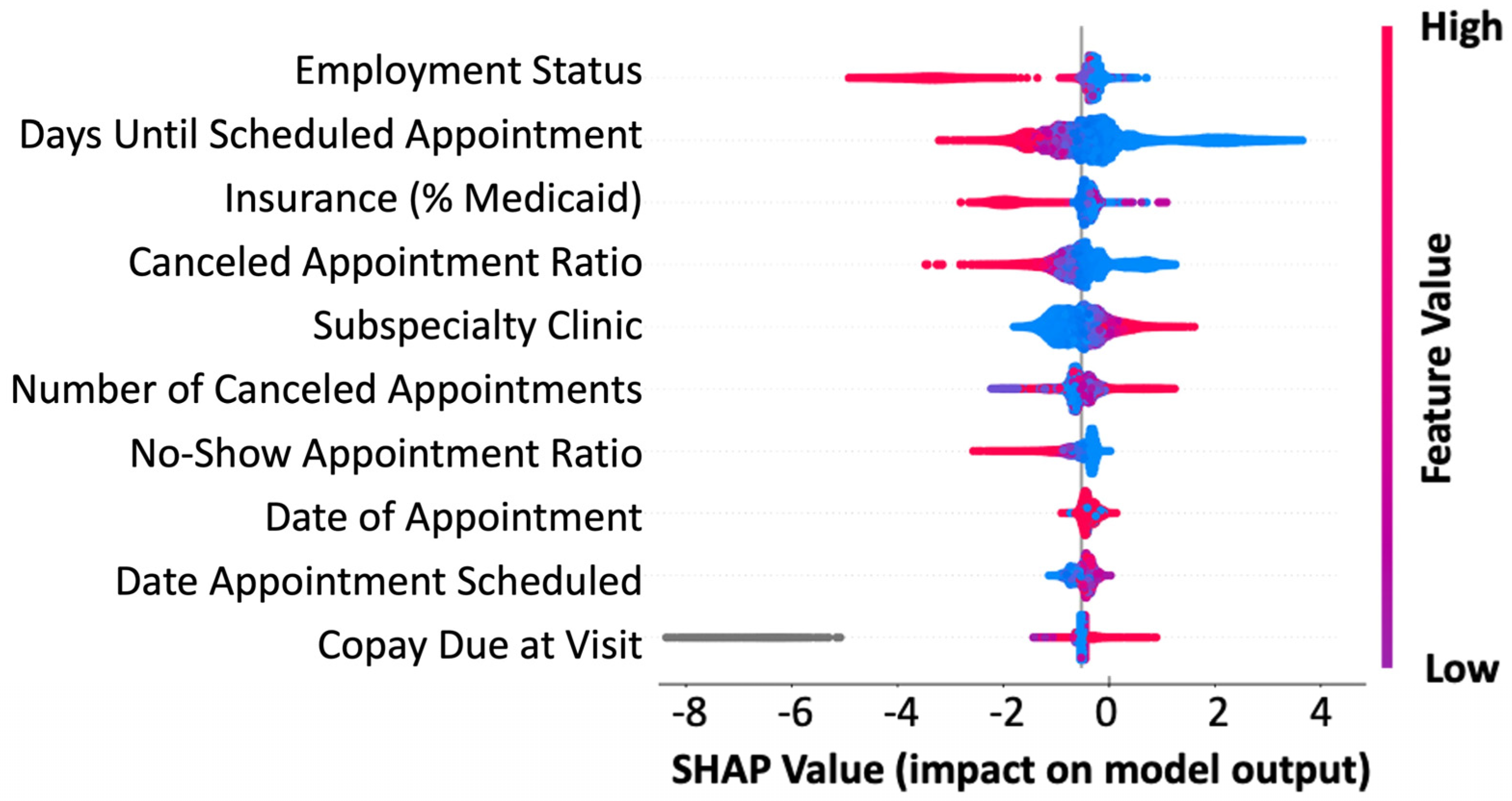

2.5. SHAP Feature Analysis

2.6. Intellectual Property/Data Availability

2.7. Statistics

3. Results

3.1. Baseline Characteristics and Influence of Variables on No-Show Rates

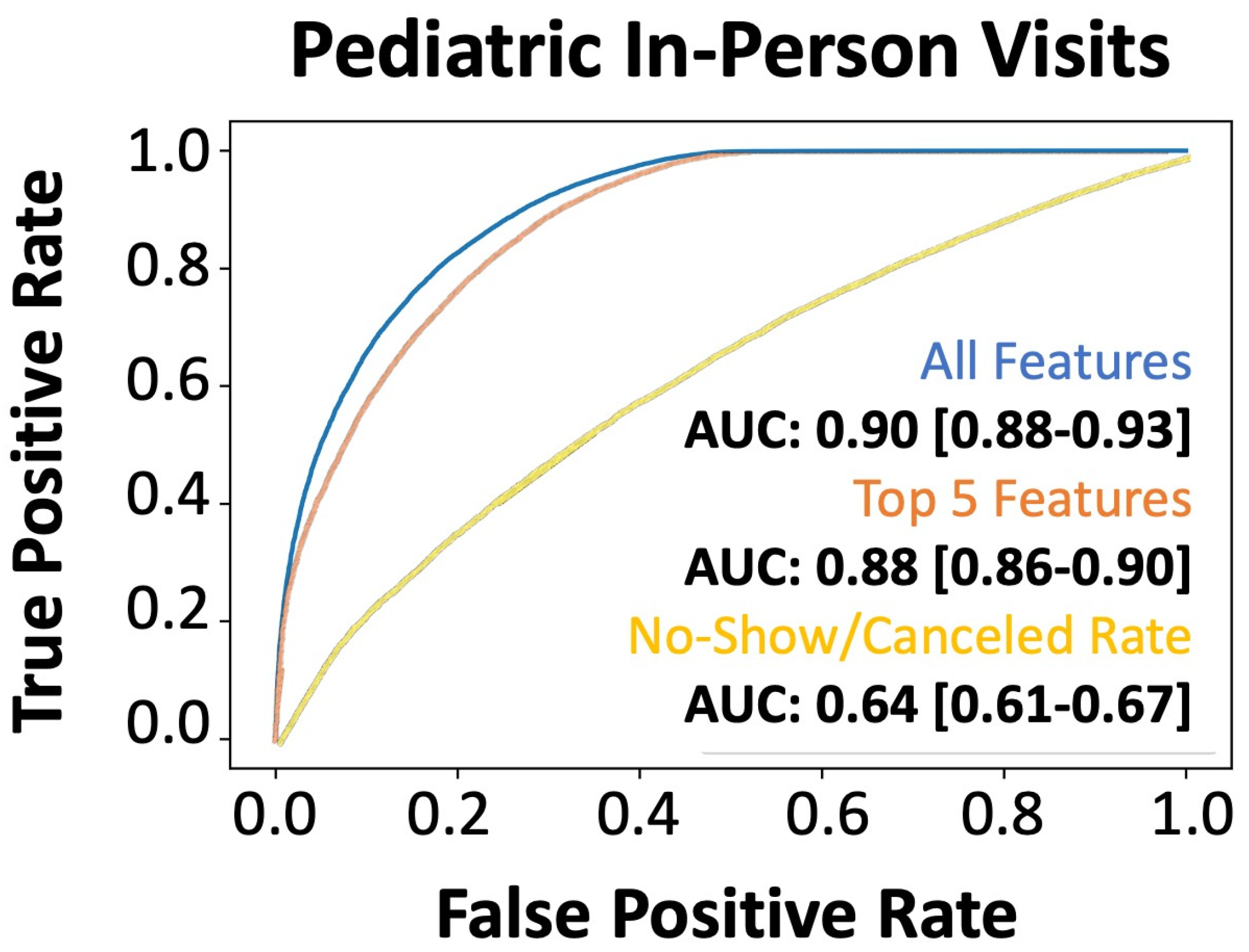

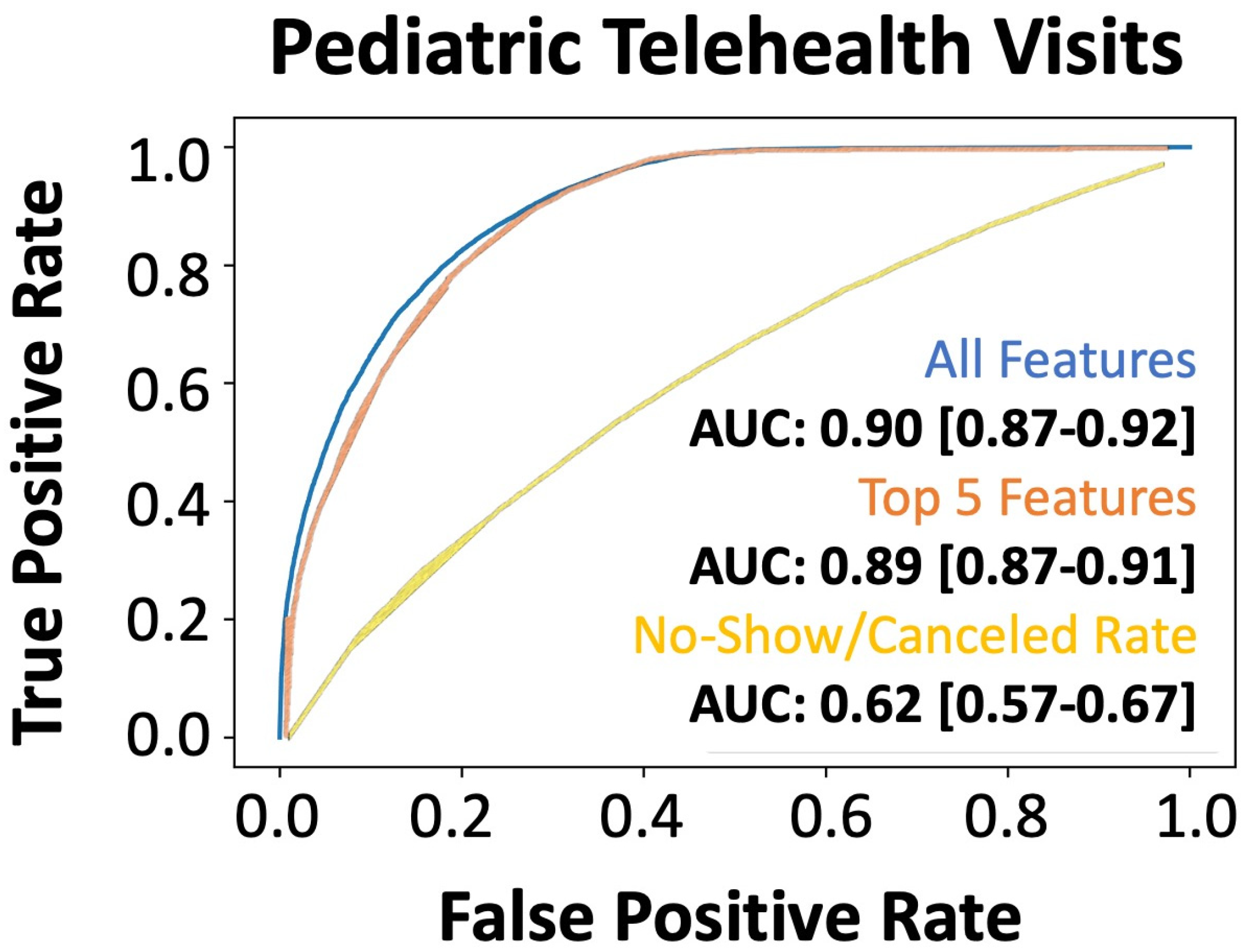

3.2. Performance of Model Predictions of No-Shows

3.3. Evaluation of Model Generalizability and Adaptability to Unknown Healthcare Needs

4. Discussion

5. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameter | Appointment Completed [95% CI] (N = 116,442) | Appointment Not Completed [95% CI] (N = 93,202) | p-Value | Cohen (Effect Size) |
|---|---|---|---|---|
| Patient’s Age (years) | 11.56 [11.49–11.62] | 11.27 [11.2–11.33] | <0.001 | 0.0267 |
| Gender (% Male) | 61,478 (52.80%) | 48,409 (51.94%) | <0.001 | 0.0172 |
| Race/Ethnicity (% Caucasian) | 108,668 (93.32%) | 86,103 (92.38%) | <0.001 | 0.0377 |
| English Fluency (% Fluent) | 114,644 (98.46%) | 91,307 (97.97%) | <0.001 | 0.0397 |
| Department | | | | |
| Allergy/Immunology | 12,811 (11.00%) | 9643 (10.35%) | <0.001 | 0.021 |
| Cardiology | 18,532 (15.92%) | 10,872 (11.66%) | <0.001 | 0.1162 * |
| Craniofacial Surgery | 694 (0.60%) | 564 (0.61%) | 0.7879 | 0.0012 |
| Cardiothoracic Surgery | 1881 (1.62%) | 1239 (1.33%) | <0.001 | 0.0227 |
| Dermatology | 1550 (1.33%) | 1187 (1.27%) | 0.2486 | 0.005 |
| Endocrinology | 12,739 (10.94%) | 9290 (9.97%) | <0.001 | 0.0312 |
| Gastroenterology | 10,864 (9.33%) | 11,661 (12.51%) | <0.001 | 0.1094 * |
| Hematology/Oncology | 7350 (6.31%) | 3342 (3.59%) | <0.001 | 0.1121 * |
| Infectious Diseases | 1125 (0.97%) | 920 (0.99%) | 0.6276 | 0.0021 |
| Neurology | 11,847 (10.17%) | 13,610 (14.60%) | <0.001 | 0.1465 * |
| Neonatology | 1329 (1.14%) | 1366 (1.47%) | <0.001 | 0.0305 |
| Neurosurgery | 4119 (3.54%) | 3665 (3.93%) | <0.001 | 0.0214 |
| Nephrology | 6152 (5.28%) | 6946 (7.45%) | <0.001 | 0.097 |
| Orthopedics | 13,942 (11.97%) | 8209 (8.81%) | <0.001 | 0.0975 |
| Plastic Surgery | 304 (0.26%) | 225 (0.24%) | 0.3725 | 0.0039 |
| Pulmonology | 1812 (1.56%) | 2425 (2.60%) | <0.001 | 0.0845 |
| Sports Medicine | 298 (0.26%) | 153 (0.16%) | <0.001 | 0.0182 |
| Surgery (General) | 3071 (2.64%) | 1823 (1.96%) | <0.001 | 0.0425 |
| Urology | 17,474 (15.01%) | 19,321 (20.73%) | <0.001 | 0.1603 * |
| Other Departments | 395 (0.34%) | 351 (0.38%) | 0.1533 | 0.0064 |
| Days Until Scheduled Appointment | 48.06 [47.68–48.44] | 82.28 [81.74–82.82] | <0.001 | 0.5180 * |
| Appointment Length | 25.05 [24.98–25.11] | 26.45 [26.38–26.52] | <0.001 | 0.1251 * |
| Referral Required | 16,837 (14.46%) | 14,858 (15.94%) | <0.001 | 0.0421 |
| Overbooked Time Slot | 12,302 (10.56%) | 5602 (6.01%) | <0.001 | 0.1482 * |
| Same Day Appointment | 9926 (8.52%) | 829 (0.89%) | <0.001 | 0.2734 * |
| Canceled Appointment Ratio | 20.40% [20.29–20.51%] | 29.07% [28.93–29.22%] | <0.001 | 0.4544 * |
| No-Show Appointment Ratio | 3.65% [3.62–3.69%] | 5.12% [5.07–5.17%] | <0.001 | 0.2249 * |
| Guardian Gender (% Male) | 14,325 (22.98%) | 7380 (19.86%) | <0.001 | 0.0742 |
| Employment Status | | | | |
| Full-Time | 58,784 (50.48%) | 31,562 (33.86%) | <0.001 | 0.3324 * |
| Part-Time | 1814 (1.56%) | 4758 (5.11%) | <0.001 | 0.0669 |
| Student | 32,678 (28.06%) | 977 (1.05%) | <0.001 | 0.0412 |
| Unemployed | 7903 (6.79%) | 23,121 (24.81%) | <0.001 | 0.0725 |
| Insurance (% Medicaid) | 50,497 (43.37%) | 35,233 (37.80%) | <0.001 | 0.1123 * |
| Copay Due at Visit | 8.07 [7.98–8.16] | 6.29 [6.2–6.38] | <0.001 | 0.1146 * |
| Maximum Temperature | 65.23 [65.12–65.33] | 63.94 [63.82–64.06] | <0.001 | 0.0699 |
| Minimum Temperature | 47.41 [47.31–47.51] | 46.35 [46.24–46.47] | <0.001 | 0.0615 |
| Temperature | 56.19 [56.09–56.29] | 55.03 [54.92–55.15] | <0.001 | 0.0673 |
| Wind Chill | 27.32 [27.21–27.43] | 25.96 [25.83–26.09] | <0.001 | 0.1008 * |
| Precipitation | 0.13 [0.13–0.13] | 0.13 [0.13–0.13] | 0.0235 | 0.01 |
| Wind Speed | 10.38 [10.36–10.4] | 10.42 [10.4–10.44] | 0.0057 | 0.0121 |
| Cloud Cover | 52.53 [52.35–52.72] | 53.79 [53.59–54] | <0.001 | 0.0395 |
| Relative Humidity | 67.26 [67.19–67.34] | 67.45 [67.37–67.53] | 0.0011 | 0.0142 |
| Weather: Clear | 29,065 (24.96%) | 22,124 (23.74%) | <0.001 | 0.0283 |
| Weather: Rain | 56,191 (48.26%) | 45,990 (49.34%) | <0.001 | 0.0218 |
| Weather: Overcast | 35,816 (30.76%) | 30,136 (32.33%) | <0.001 | 0.0341 |
| Weather: Partially Cloudy | 51,561 (44.28%) | 40,942 (43.93%) | 0.1066 | 0.0071 |
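The p-values for the categorical rows of this table can be approximately reproduced from the counts alone. The sketch below assumes a pooled, two-sided two-proportion z-test; the test the authors actually used is not stated in this extract, and the function name `two_proportion_p_value` is illustrative rather than taken from the paper.

```python
import math

# Group sizes from the table header.
N_COMPLETED = 116_442
N_NOT_COMPLETED = 93_202


def two_proportion_p_value(x1: int, n1: int, x2: int, n2: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (assumed test)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)) for a standard normal.
    return math.erfc(abs(z) / math.sqrt(2))


# Counts copied from the Craniofacial Surgery and Dermatology rows.
p_craniofacial = two_proportion_p_value(694, N_COMPLETED, 564, N_NOT_COMPLETED)
p_dermatology = two_proportion_p_value(1550, N_COMPLETED, 1187, N_NOT_COMPLETED)
print(f"Craniofacial Surgery: p = {p_craniofacial:.4f}")
print(f"Dermatology:          p = {p_dermatology:.4f}")
```

Under this assumption, both values fall close to the tabulated 0.7879 and 0.2486, which suggests (but does not confirm) that the categorical comparisons used a test of this kind.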

| | Precision | Recall | F1-Score |
|---|---|---|---|
| All Features | | | |
| Negative Class | 0.83 | 0.75 | 0.79 |
| Positive Class | 0.82 | 0.88 | 0.85 |
| Accuracy | 0.82 | | |
| Top 5 Features | | | |
| Negative Class | 0.78 | 0.74 | 0.76 |
| Positive Class | 0.81 | 0.84 | 0.82 |
| Accuracy | 0.80 | | |
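As a consistency check on the metrics above, the F1-score can be recomputed as the harmonic mean of precision and recall. The following is a minimal sketch using only the rounded values from the table; the helper name `f1_score` and the dictionary layout are illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) copied from the table above.
reported = {
    ("All Features", "Negative Class"): (0.83, 0.75, 0.79),
    ("All Features", "Positive Class"): (0.82, 0.88, 0.85),
    ("Top 5 Features", "Negative Class"): (0.78, 0.74, 0.76),
    ("Top 5 Features", "Positive Class"): (0.81, 0.84, 0.82),
}

# Recompute F1 from the rounded precision/recall and compare to the reported value.
recomputed = {key: round(f1_score(p, r), 2) for key, (p, r, _) in reported.items()}
for key, (_, _, f1) in reported.items():
    status = "matches" if recomputed[key] == f1 else "differs"
    print(f"{key[0]:>14} | {key[1]}: recomputed {recomputed[key]:.2f} ({status})")
```

All four recomputed values agree with the reported F1-scores to two decimal places, so the table is internally consistent at the precision shown.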

| Racial/Ethnic Group | 2015–2018 (# of Patients and Total Percent) | 2019 (# of Patients and ROC AUC) | 2020 (# of Patients and ROC AUC) |
|---|---|---|---|
| Caucasian | 177,375 (93%) | 67,559 (AUC: 0.89) | 47,495 (AUC: 0.89) |
| Black | 5644 (3.0%) | 1999 (AUC: 0.84) | 1466 (AUC: 0.83) |
| Two or More Races | 4570 (2.4%) | 1933 (AUC: 0.75) | 1408 (AUC: 0.83) |
| Hispanic/Latino | 2386 (1.2%) | 1095 (AUC: 0.79) | 731 (AUC: 0.81) |
| Asian American | 1073 (0.6%) | 568 (AUC: 0.83) | 372 (AUC: 0.85) |
| Native American | 204 (0.1%) | 69 (AUC: 0.84) | 84 (AUC: 0.78) |
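Per-group ROC AUC values like those above can be obtained by splitting predictions by group and scoring each subset separately. The sketch below is one way to do this in plain Python, using the pairwise (rank-based) definition of AUC. The records are hypothetical and are not study data, the function names are illustrative, and label 1 simply stands for whichever class the study treats as positive.

```python
from collections import defaultdict


def roc_auc(labels, scores):
    """ROC AUC as the chance that a positive outranks a negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def auc_by_group(records):
    """Compute ROC AUC separately for each group in (group, label, score) records."""
    grouped = defaultdict(lambda: ([], []))
    for group, label, score in records:
        grouped[group][0].append(label)
        grouped[group][1].append(score)
    return {group: roc_auc(ys, ss) for group, (ys, ss) in grouped.items()}


# Hypothetical (group, true label, predicted score) records for illustration only.
records = [
    ("Group A", 0, 0.10), ("Group A", 0, 0.40),
    ("Group A", 1, 0.35), ("Group A", 1, 0.80),
    ("Group B", 0, 0.20), ("Group B", 1, 0.90),
    ("Group B", 1, 0.20), ("Group B", 0, 0.05),
]
subgroup_auc = auc_by_group(records)
for group, auc in subgroup_auc.items():
    print(f"{group}: AUC = {auc:.3f}")
```

The pairwise loop is quadratic in group size, so for cohorts as large as those in the table a sort-based or library implementation would be the practical choice. Small groups, such as the Native American cohort (69 and 84 patients), will also yield noisier AUC estimates than large ones.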

| Rank | Feature | Feature Importance (F Score) | Influence on No-Shows |
|---|---|---|---|
| 1 | Employment Status | 438 | Full-time employment leads to fewer no-shows |
| 2 | Days Until Scheduled Appointment | 105 | Longer wait times increase no-show likelihood |
| 3 | Insurance (% Medicaid) | 87 | Socioeconomic status is a barrier to receiving care |
| 4 | Canceled Appointment Ratio | 86 | Higher previous cancellations correlate with no-shows |
| 5 | Department or Medical Specialty | 52 | Higher no-show rates in hematology, neurology, and gastroenterology |

| Approach | Description |
|---|---|
| Optimized Double Booking | |
| Strategic Overbooking Optimization | Targets high-risk no-show patients for overbooking slots, pairing them with low-risk patients to reduce wasted provider time. |
| Risk-Based Appointment Reallocation | Dynamically reallocates appointments based on no-show risk, placing moderate-risk patients in flexible buffer slots. |
| Mixed Scheduling Approach | Distributes patient groups (high, moderate, low risk) throughout clinic schedules to balance efficiency and minimize provider downtime. |
| Integration with the EMR | |
| Automated No-Show Risk Score Generation | Generates no-show probabilities using patient history and real-time factors at the time of appointment booking. |
| Intelligent Scheduling Decision Engine | Integrates with EMR systems to suggest optimal scheduling decisions based on predicted no-show likelihood. |
| Adaptive Rebooking and Waitlist Management | Proactively engages high no-show risk patients with reminders or offers earlier slots to waitlist patients to maximize efficiency. |
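The strategic overbooking row describes pairing high-risk and low-risk patients in a shared slot. One possible reading of that idea is sketched below. The risk thresholds (0.7 and 0.3), the `Appointment` class, and the greedy highest-with-lowest matching are all assumptions made for illustration; the table does not specify how pairing would be implemented.

```python
from dataclasses import dataclass


@dataclass
class Appointment:
    patient_id: str
    no_show_risk: float  # predicted probability of a no-show, in [0, 1]


def pair_for_overbooking(appointments, high_risk=0.7, low_risk=0.3):
    """Pair each high-risk appointment with a low-risk one for a shared slot.

    Thresholds are hypothetical. The highest-risk patient is matched with the
    lowest-risk patient first. Returns (pairs, unpaired appointments).
    """
    high = sorted(
        (a for a in appointments if a.no_show_risk >= high_risk),
        key=lambda a: a.no_show_risk,
        reverse=True,
    )
    low = sorted(
        (a for a in appointments if a.no_show_risk <= low_risk),
        key=lambda a: a.no_show_risk,
    )
    pairs = list(zip(high, low))  # zip stops at the shorter list
    paired_ids = {a.patient_id for pair in pairs for a in pair}
    unpaired = [a for a in appointments if a.patient_id not in paired_ids]
    return pairs, unpaired


# Hypothetical risk scores, not study data.
schedule = [
    Appointment("P1", 0.85),
    Appointment("P2", 0.10),
    Appointment("P3", 0.75),
    Appointment("P4", 0.25),
    Appointment("P5", 0.50),
]
pairs, unpaired = pair_for_overbooking(schedule)
for high, low in pairs:
    print(f"Shared slot: {high.patient_id} (risk {high.no_show_risk}) + {low.patient_id} (risk {low.no_show_risk})")
print("Single slots:", [a.patient_id for a in unpaired])
```

In this sketch, moderate-risk patients are left in single slots, which loosely mirrors the buffer-slot idea in the reallocation row. Any real deployment would also need to weigh the equity concerns that risk-based overbooking raises.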

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hathaway, Q.A.; Yanamala, N.; Narumanchi, T.; Narumanchi, J. Bringing Precision to Pediatric Care: Explainable AI in Predicting No-Show Trends Before and During the COVID-19 Pandemic. Bioengineering 2025, 12, 227. https://doi.org/10.3390/bioengineering12030227
