Abstract

Histopathological image classification using computational methods such as fine-tuned convolutional neural networks (CNNs) has gained significant attention in recent years. Graph neural networks (GNNs) have also emerged as strong alternatives, often employing CNNs or vision transformers (ViTs) as node feature extractors. However, as these models are usually pre-trained on relatively small-scale natural image datasets, their performance in histopathology tasks can be limited. The introduction of foundation models trained on large-scale histopathological data now enables more effective feature extraction for GNNs. In this work, we integrate recently developed foundation models as feature extractors within a lightweight GNN and compare their performance with standard fine-tuned CNN and ViT models. Furthermore, we explore a prediction fusion approach that combines the outputs of the best-performing GNN and fine-tuned model to evaluate the benefits of complementary representations. Results demonstrate that GNNs utilizing foundation model features outperform those trained with CNN or ViT features and achieve performance comparable to standard fine-tuned CNN and ViT models. The highest overall performance is obtained with the proposed prediction fusion strategy. Evaluated on three publicly available datasets, the best fusion achieved F1-scores of 98.04%, 96.51%, and 98.28%, and balanced accuracies of 98.03%, 96.50%, and 97.50% on PanNuke, BACH, and BreakHis, respectively.

1. Introduction

Histopathology plays an important role in cancer diagnosis, prognosis, and treatment. Traditionally, histopathologists examine the microscopic structure of tissues using light microscopy to identify malignant features. The introduction of whole-slide digital scanners has transformed the field by enabling the generation of high-resolution digital slides, which in turn created opportunities for artificial intelligence-based methods [1]. Automatic histological image analysis has become a critical tool in medical research and diagnostics, allowing for detailed examination of tissue structures at a microscopic level [2]. Image classification, a fundamental task in computational pathology, aims to assign diagnostic labels to these images or their subregions, such as distinguishing malignant from benign tissues [3]. Machine learning tools have emerged as powerful methods for various analysis tasks, such as regression, registration, and classification [4,5]. For histological image classification, various automated approaches have been proposed, ranging from classical machine learning to modern deep learning methods [6]. Convolutional neural networks (CNNs) [7], vision transformer (ViT)-based architectures [8], fused CNN–ViT models [9], and multimodal deep learning [10] are examples of such approaches. In addition, graph neural networks (GNNs) have been considered as an alternative for medical and histological image classification tasks [11,12].

To apply GNNs to histology image classification, the first step is to construct a graph representation of the image, followed by the design of a graph-based architecture for classification [13]. Graph construction involves defining nodes, their embeddings, and the edges that connect them. Nodes can represent different histological entities, such as cell nuclei, image patches, or segmented regions (e.g., glands or tumors), while node embeddings are typically derived from features extracted by CNNs or ViTs [11]. Edges are generally established using spatial proximity or similarity measures to capture meaningful relationships between nodes [13]. Once the graph is constructed, GNN architectures can be employed for classification. Common building blocks include graph convolutional blocks [14], graph attention networks (GAT) [15], and GraphSAGE [16], which can be combined with pooling layers and fully connected or linear layers to perform the final classification. These models can be trained using standard loss functions such as categorical cross-entropy. More details and an overview of current trends in applying GNNs to medical and histological image analysis can be found in recent review articles [11,17].

In this study, we focus on graph node feature extraction. Many feature extraction methods have been proposed in the literature. Classical machine learning approaches typically rely on handcrafted or engineered features such as morphological and textural descriptors [18,19]. Due to the redundancy and computational cost of such features, several feature selection and dimensionality reduction techniques have also been proposed [20,21]. However, since extracting meaningful and informative features heavily depends on the specific application and dataset, handcrafted features may not optimally capture clinically relevant image characteristics, particularly in challenging cases [22].

With the advent of deep learning, and in particular of standard pre-trained CNNs, this domain has been revolutionized. CNN-based methods have become dominant due to their ability to automatically learn hierarchical and discriminative feature representations from images [23]. Pre-trained CNN architectures such as EfficientNet (V1 and V2) [24,25] and ResNet [26] have been widely used as feature extractors in both medical and non-medical image analysis tasks, including within GNN frameworks [27,28]. In addition to CNNs, Vision Transformer (ViT) models have also recently been explored as graph node feature extractors [11,29]. Owing to their ability to model long-range dependencies and capture global context through self-attention mechanisms, ViTs can provide highly informative features and, in some cases, outperform CNN-based features in specific image analysis tasks, particularly on large-scale datasets. While both CNNs and ViTs have demonstrated strong performance in many medical imaging tasks, they are usually pre-trained on natural image datasets (e.g., ImageNet [30]). The domain shift between natural and medical images can limit their ability to capture subtle, domain-specific histological patterns, which in turn may constrain their deployment in practical clinical settings [31]. Although various data augmentation and normalization strategies, such as color jittering and deterministic or non-deterministic stain normalization, have been proposed to mitigate this issue [32,33], the domain shift and generalization challenges remain largely unsolved.

Recent advancements in pathology foundation models, specifically developed for histological image analysis, offer a promising alternative by providing robust and generalizable feature representations that can be adapted to specific tasks with minimal fine-tuning. Trained on large and diverse histology datasets, these models capture rich semantic and structural information, making them well-suited for feature extraction in complex histology domains. While they have been exploited for many downstream tasks in computational pathology, such as nuclei segmentation [34,35], histology image retrieval [28,36], and histology image segmentation [37,38], these foundation models can also be integrated into GNN frameworks to represent graph node features more effectively.

In this work, we leverage three recently developed pathology foundation models (CONCH [39], UNI [38], and UNI2 [38,40]) within a lightweight GNN as graph node feature extractors for histological image classification. We evaluate their classification performance against well-known pre-trained CNNs and ViT models (VGG19 [41], DenseNet201 [42], EfficientNetV2S [25], and ViT-Base [43]) on three publicly available benchmark datasets: PanNuke [44], BACH [45], and BreakHis [46]. Furthermore, we fuse the predictions of the best-performing GNN trained with foundation model features with those of the best-performing standard fine-tuned model to further improve classification performance.

In summary, the main contributions of this study can be listed as follows:

- We utilize pathology foundation models within a lightweight GNN for histological image classification.

- We compare the results of the proposed approach with standard fine-tuned CNN and ViT models, as well as GNNs using CNN and ViT features as node embeddings.

- We propose a fusion approach based on weighted averaging that combines the results from the best GNN models with the best standard fine-tuned models to further improve classification performance.

- We make our implementation publicly available to ensure reproducibility and facilitate future research.

The remainder of this manuscript is organized as follows: Section 2 describes the datasets used in this study, the baseline CNN, ViT, and pathology foundation models, the architecture of the developed lightweight GNN model, the evaluation metrics, the fusion strategy, and the implementation details. Section 3 presents and discusses the experimental results, and Section 4 concludes the work.

2. Materials and Methods

We propose a lightweight GNN-based model that leverages foundation model features as graph node representations to perform classification on histological images. We compare the performance of our proposed method with a number of baseline fine-tuned CNN and ViT models, as well as baseline GNN models that utilize standard node features extracted from either CNN-based or ViT-based pre-trained models.

2.1. Datasets

To conduct experiments, we use three datasets: PanNuke [44], BACH [45], and BreakHis [46]. All datasets contain samples extracted from whole-slide hematoxylin and eosin (H&E)-stained images. A brief description of each dataset is provided below, and further details can be found in the respective original studies.

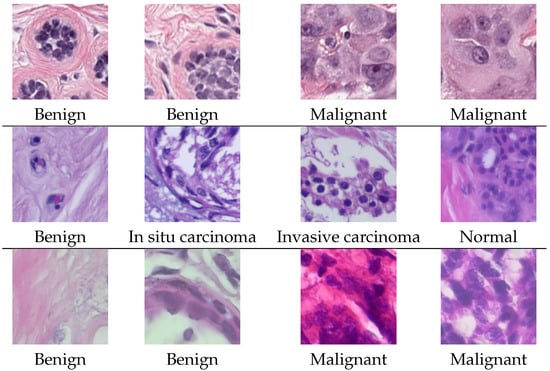

The PanNuke dataset contains 7901 image patches, each with a size of 256 × 256 pixels, captured at 40× magnification, and extracted from 19 different organs. The original dataset provides nuclei instance segmentation and classification masks with five types of nuclei, namely neoplastic tumor cells, inflammatory cells, connective tissue cells, dead cells, and epithelial cells. Since our objective is to perform a classification task, we construct a binary classification dataset by labeling samples from a subset of the PanNuke dataset as either malignant or benign, considering only images that contain at least one cell. Following the procedure proposed in [47], this results in a dataset comprising 2873 malignant and 3367 benign images.

The second dataset used in this study is the BACH dataset. It consists of 400 image patches of breast cancer tissue. Each image has a size of 2048 × 1536 pixels and was captured at 20× magnification. The images are labeled into four distinct classes, namely benign, in situ carcinoma, invasive carcinoma, and normal, with 100 images in each class.

The third and final dataset used in this study is the BreakHis dataset, which contains breast histological images acquired at different magnification factors and divided into two classes: malignant and benign. The dataset comprises a total of 7909 images. However, in this work, we used only the images acquired at a magnification factor of 400× (corresponding to a 40× objective lens), which is a commonly used magnification in routine pathology. This subset includes 338 benign images and 982 malignant images. All images are provided at a fixed size of 700 × 460 pixels.

Example images from each dataset are shown in Figure 1.

Figure 1.

Example images from the PanNuke dataset (first row), the BACH dataset (second row) and the BreakHis dataset (third row).

2.2. Baseline Fine-Tuned Models

To establish a baseline for comparison, we use four pre-trained models in this study, namely VGG19 [41], DenseNet201 [42], EfficientNetV2S [25], and ViT-Base models [43].

VGG is one of the most well-known CNN architectures and has been widely applied to both medical and non-medical image classification tasks [48]. It primarily consists of convolutional layers, max-pooling layers, and fully connected layers. Several versions of the model exist, varying in depth. In this study, we use the pre-trained VGG19 variant. The model was pre-trained on the ImageNet dataset, which contains 1.2 million natural images across 1000 classes.

Another widely used CNN model employed in this study as a baseline is DenseNet [42]. In addition to standard building blocks such as convolutional layers, DenseNet uses dense connectivity: within each dense block, every layer receives the concatenated feature maps of all preceding layers as input. This design improves gradient flow, encourages feature reuse, and enhances model performance. Similar to VGG19, DenseNet has multiple depth variants. In this work, we use DenseNet201, pre-trained on the ImageNet dataset.

The third CNN baseline model used in this study is EfficientNetV2S. EfficientNetV2 is the second generation of the EfficientNet family [24], designed to improve training speed, parameter efficiency, and overall performance. It achieves this by combining mobile inverted bottleneck (MB) convolutional blocks with Fused-MB convolutional blocks, along with techniques such as a non-uniform scaling strategy and progressive learning. In this work, we use the EfficientNetV2S variant, which is the fastest model in the EfficientNetV2 family and is also pre-trained on the ImageNet dataset.

Besides these CNN models, we also employ a ViT-based model. In contrast to CNNs, which process images using localized convolutional filters, a ViT model represents images as flattened patch embeddings (e.g., patches of size 16 × 16 pixels) and processes them through a transformer encoder that applies self-attention to capture global relationships among all patches. For classification tasks, the transformer encoder is typically followed by a multi-layer perceptron (MLP) head to generate the final predictions. Similar to CNNs, ViTs come in different architectures such as ViT-Tiny and ViT-Small; in this work, we employ the commonly used ViT-Base (ViT-B/16) model pre-trained on the ImageNet dataset.

Although several other pre-trained CNN and ViT models exist, we explicitly selected VGG19 and DenseNet201 as they are well-established benchmark models in numerous classification studies involving datasets of various sizes, different numbers of classes, and varying levels of complexity [49,50,51]. EfficientNetV2-S, on the other hand, is a more recently developed model that offers excellent classification performance while remaining computationally efficient [24,52]. We also included ViT-Base as a representative transformer-based model, as it provides superior performance compared to smaller variants such as ViT-Tiny and ViT-Small, while being less computationally expensive than larger models such as ViT-Large and ViT-Huge [43].

To adapt these models for the histological image classification tasks on our employed datasets, we replaced the original fully connected layers with a single linear layer, where the number of output nodes corresponds to the number of classes in each dataset (two for PanNuke and BreakHis, and four for BACH). The input images were resized to a fixed resolution of 224 × 224 pixels and normalized using the mean and standard deviation of the ImageNet dataset. In addition, standard data augmentation techniques, including random horizontal and vertical flipping as well as random rotations, were applied during fine-tuning.

2.3. GNN Model

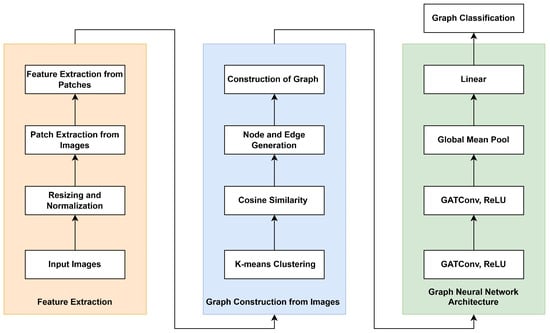

In this work, we develop a lightweight GNN as illustrated in Figure 2. The proposed method consists of three main components, namely feature extraction from image patches (orange section), graph construction (blue section), and the GNN architecture (green section).

Figure 2.

General workflow of the proposed approach.

2.3.1. Feature Extraction

The first step prior to feature extraction is resizing all images to a fixed size of 224 × 224 pixels and normalizing them using the mean and standard deviation of the ImageNet dataset. Non-overlapping patches are then extracted from the input images. These patches are subsequently passed to the pre-trained models, and the resulting embeddings are used for graph construction. For pre-trained models, we employ the same CNNs and ViT-Base as baseline models, along with three pathology foundation models: CONCH [39], UNI [38], and UNI-2 [38,40]. As fine-tuning these models significantly increases computational cost, all foundation models are used in their original pre-trained form without further domain adaptation or fine-tuning, similar to a number of other studies that also employ these models in a frozen form [28,53].
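A minimal NumPy sketch of the normalization and non-overlapping patch extraction step follows. The helper name `extract_patches` is hypothetical, and the 32 × 32 patch size for the CNNs is an assumption inferred from the 49 patches per 224 × 224 image mentioned in Section 2.5 (a 7 × 7 grid); the 16 × 16 and 14 × 14 sizes correspond to the encoder patch sizes of ViT-Base, CONCH, UNI, and UNI-2.

```python
import numpy as np

IMAGENET_MEAN = np.array([0.485, 0.456, 0.406])
IMAGENET_STD = np.array([0.229, 0.224, 0.225])


def extract_patches(image: np.ndarray, patch: int) -> np.ndarray:
    """Split an (H, W, 3) image into non-overlapping patch x patch tiles.

    Returns an array of shape (num_patches, patch, patch, 3) in row-major order.
    """
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by the patch size")
    tiles = image.reshape(h // patch, patch, w // patch, patch, c)
    return tiles.transpose(0, 2, 1, 3, 4).reshape(-1, patch, patch, c)


# Stand-in for a resized 224 x 224 RGB image in [0, 1], normalized per channel.
rng = np.random.default_rng(0)
img = (rng.random((224, 224, 3)) - IMAGENET_MEAN) / IMAGENET_STD

for name, p in [("CNNs (assumed 32 px)", 32), ("ViT-B/16, CONCH, UNI", 16), ("UNI-2", 14)]:
    print(name, extract_patches(img, p).shape[0])  # 49, 196, and 256 patches
```

The patch counts follow directly from the arithmetic (224/32)² = 49, (224/16)² = 196, and (224/14)² = 256.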

Although several medical and non-medical foundation models have been developed and proposed in recent years, we selected pathology-specific foundation models that have demonstrated excellent performance in previous studies. For instance, the UNI and CONCH models have shown superior performance compared to a number of other foundation models such as MedCLIP, BioMedCLIP, and Virchow in [28]. Similarly, UNI has also outperformed other pathology foundation models such as CTransPath and Phikon in [54]. UNI-2 represents an improved version of UNI, trained on a larger and more diverse dataset, and has exhibited better performance than the original UNI model [55]. A brief description of the selected foundation models is provided below.

CONCH is a vision–language pathology foundation model trained on 1.17 million histological image–text pairs using the CLIP paradigm [56], employing a vision encoder (ViT-B/16) and a GPT-style text encoder. UNI is a vision foundation model trained on more than 100 million histological image patches using the DINOv2 self-supervised learning approach [57]. Image embeddings are extracted from the UNI encoder, which is based on the ViT-L/16 architecture. We also utilize UNI-2, an extension of the original UNI model. UNI-2 is pre-trained on more than 200 million histological images using a ViT-H/14 vision encoder, making it one of the largest foundation models available for computational pathology tasks. Unlike CONCH and UNI, which extract 16 × 16 image patches, UNI-2 uses 14 × 14 patches due to its different vision encoder architecture.

The patch size, the number of extracted patches, and the size of the extracted features from each model (both standard and foundation models) are reported in the third, fourth, and fifth columns of Table 1, respectively. It should be noted that the standard CNN models require larger patches because they downsample their inputs by a total factor of 32; patches that are too small therefore collapse to a single spatial position, or cannot be processed at all, by these pre-trained networks.

Table 1.

Image, patch, and clustering sizes for the algorithm. Den.201: DenseNet201, Eff.V2S: EfficientNetV2S, ViT-B: Vision Transformer-Base.

2.3.2. Graph Construction

The extracted features from image patches serve as the basis for graph construction. As the main focus of this study is to investigate the effect of integrating pathology foundation model features with a GNN, rather than developing or using advanced and computationally expensive graph construction methods, we design a lightweight GNN, as illustrated in Figure 2. For ViT-Base and pathology foundation models, we apply the k-means clustering algorithm to partition the features into a predefined number of clusters, set by default to 100 to keep the model lightweight. For clustering, we use a fixed random seed to ensure reproducibility. The resulting cluster centroids, which capture both the spatial and semantic characteristics of the image patches, are designated as the nodes of the graph. The input and output data sizes of the clustering algorithm across different models are summarized in the fifth and sixth columns of Table 1.
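The clustering step can be sketched with a plain NumPy implementation of Lloyd's k-means. This is an illustrative stand-in rather than the implementation used in the study (which the text does not name; scikit-learn's `KMeans` with a fixed `random_state` would be a common choice); the function name, the seed value 42, and the iteration cap are assumptions.

```python
import numpy as np


def kmeans_centroids(features: np.ndarray, k: int, n_iter: int = 100, seed: int = 42) -> np.ndarray:
    """Lloyd's k-means with a fixed seed; returns (k, d) centroids used as graph nodes."""
    n = features.shape[0]
    if k > n:
        raise ValueError("k cannot exceed the number of patches")
    rng = np.random.default_rng(seed)  # fixed seed for reproducibility
    centroids = features[rng.choice(n, size=k, replace=False)].copy()
    for _ in range(n_iter):
        # Squared Euclidean distance of every patch feature to every centroid: (n, k).
        d2 = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = d2.argmin(axis=1)
        new = centroids.copy()
        for j in range(k):
            members = features[labels == j]
            if len(members):  # keep the previous centroid if a cluster becomes empty
                new[j] = members.mean(axis=0)
        if np.allclose(new, centroids):  # converged
            break
        centroids = new
    return centroids
```

With 196 patch embeddings and `k=100`, the function returns a `(100, d)` node feature matrix. When `k` equals the number of patches (as with the 49 CNN patches), every patch becomes its own cluster, so the nodes are simply the patch features.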

To establish connectivity between the nodes, we compute a cosine similarity matrix over all pairs of cluster centroids, quantifying their pairwise similarity. Edges are then defined based on this matrix. Specifically, for each pair of distinct clusters, if their similarity exceeds an empirically derived threshold of 0.6, bidirectional edges are added to the edge list. This lightweight graph construction strategy effectively condenses high-dimensional patch features into a compact and structured representation.
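The edge rule can be written compactly in NumPy. The sketch below returns a `(2, E)` index array in the layout used by PyTorch Geometric's `edge_index`; the function name is hypothetical, and centroids with zero norm are assumed not to occur.

```python
import numpy as np


def build_edges(centroids: np.ndarray, threshold: float = 0.6) -> np.ndarray:
    """Connect distinct centroids whose cosine similarity exceeds the threshold.

    Returns a (2, E) integer array containing both directions of every edge.
    """
    unit = centroids / np.linalg.norm(centroids, axis=1, keepdims=True)
    sim = unit @ unit.T  # (k, k) cosine similarity matrix
    # Upper triangle above the diagonal: each unordered pair of distinct nodes once.
    src, dst = np.nonzero(np.triu(sim > threshold, k=1))
    return np.concatenate([np.stack([src, dst]), np.stack([dst, src])], axis=1)
```

For three centroids `[1, 0]`, `[0.9, 0.1]`, and `[0, 1]`, only the first two have a cosine similarity above 0.6 (about 0.99), so the result is the pair of directed edges 0→1 and 1→0.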

2.3.3. GNN Architecture

We utilize the graph attention network (GAT) [15] as the core building block to operate on the graph representations derived in the previous stage. GAT introduces an attention mechanism that enables nodes to weigh the importance of their neighbors and allows the network to focus on the most relevant connections in the graph. Our designed GNN model consists of two GATConv layers. These layers aggregate information from neighboring nodes based on learned attention weights, updating node representations in a way that preserves both feature and structural information. The first GATConv layer has four attention heads, each producing eight features, resulting in a combined output of 32 features per node, followed by a ReLU layer. The second GATConv layer is also equipped with four attention heads, each generating four output features per head, leading to a 16-dimensional representation per node, again followed by a ReLU layer. The output of the second ReLU layer is connected to a global mean pooling and a linear layer that maps the graph representation to the number of classes (two for the PanNuke and BreakHis datasets, and four for the BACH dataset). The linear projection enables the model to perform the final classification task by predicting the class label associated with each image.
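To make the layer dimensions concrete, the following NumPy sketch implements the forward pass of the described architecture with random weights: two multi-head attention layers (4 × 8 = 32 and 4 × 4 = 16 features per node), ReLU activations, global mean pooling, and a linear classifier. It is a didactic stand-in, not the trained model: GATConv layers such as those in PyTorch Geometric also include bias terms and are trained by backpropagation, both of which are omitted here, and all function and parameter names are hypothetical.

```python
import numpy as np


def gat_layer(h, adj, W, a_src, a_dst, slope=0.2):
    """One multi-head graph attention layer with concatenated heads (no bias).

    h: (N, F_in) node features; adj: (N, N) bool, adj[i, j] True if j sends to i
    (self-loops included); W: (H, F_in, F_out); a_src, a_dst: (H, F_out).
    """
    heads = []
    for k in range(W.shape[0]):
        z = h @ W[k]                                            # (N, F_out)
        e = (z @ a_dst[k])[:, None] + (z @ a_src[k])[None, :]   # raw scores e[i, j]
        e = np.where(e > 0, e, slope * e)                       # LeakyReLU
        e = np.where(adj, e, -np.inf)                           # mask non-neighbours
        alpha = np.exp(e - e.max(axis=1, keepdims=True))
        alpha /= alpha.sum(axis=1, keepdims=True)               # softmax over neighbours
        heads.append(alpha @ z)                                 # attention-weighted sum
    return np.concatenate(heads, axis=1)                        # (N, H * F_out)


def relu(x):
    return np.maximum(x, 0.0)


def init_params(in_dim, num_classes, rng):
    shapes = {"W1": (4, in_dim, 8), "a1s": (4, 8), "a1d": (4, 8),
              "W2": (4, 32, 4), "a2s": (4, 4), "a2d": (4, 4),
              "Wout": (16, num_classes)}
    return {name: rng.normal(0.0, 0.1, s) for name, s in shapes.items()}


def forward(x, edge_index, p):
    n = x.shape[0]
    adj = np.eye(n, dtype=bool)               # self-loops, added by GATConv by default
    adj[edge_index[1], edge_index[0]] = True  # messages flow source -> target
    h = relu(gat_layer(x, adj, p["W1"], p["a1s"], p["a1d"]))  # (N, 32)
    h = relu(gat_layer(h, adj, p["W2"], p["a2s"], p["a2d"]))  # (N, 16)
    g = h.mean(axis=0)                        # global mean pooling -> (16,)
    return g @ p["Wout"]                      # class logits
```

Applied to a graph of 100 centroid nodes with `num_classes=4`, `forward` returns a vector of four logits, one per BACH class.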

It should be noted that our implementation choices, such as using two GATConv layers and applying a mean pooling layer to average the feature vectors of all nodes in the graph, were inspired by previous CNN- and GNN-based studies [58,59,60].

2.4. Evaluation

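To evaluate the performance of the models for both binary and multi-class histological image classification, we employ two well-known evaluation metrics, namely the F1-score (macro-averaged for multi-class tasks) and balanced accuracy. The F1-score is defined as

$$F1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}},$$

where precision and recall are given by

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN},$$

and $TP$, $FP$, and $FN$ represent true positives, false positives, and false negatives, respectively. For multi-class datasets, the macro F1-score is computed as

$$F1_{\text{macro}} = \frac{1}{C} \sum_{c=1}^{C} F1_c,$$

where $C$ represents the number of classes and $F1_c$ is the F1-score of class $c$.

Balanced accuracy is computed as

$$\text{Balanced Accuracy} = \frac{1}{C} \sum_{c=1}^{C} \frac{TP_c}{TP_c + FN_c},$$

where again $C$, $TP_c$, and $FN_c$ represent the number of classes and the true positives and false negatives of class $c$, respectively.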
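The definitions above can be checked with a short NumPy sketch that derives per-class TP, FP, and FN from a confusion matrix: macro F1 averages the per-class F1-scores, and balanced accuracy averages the per-class recalls. The function names are hypothetical, and a class with an empty denominator is assigned a score of 0 by assumption. For binary tasks this sketch returns the macro average over both classes, which can differ from an F1-score computed for the positive class only.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    cm = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)  # rows: true class, columns: predicted class
    return cm


def macro_f1_and_balanced_accuracy(y_true, y_pred, num_classes):
    cm = confusion_matrix(np.asarray(y_true), np.asarray(y_pred), num_classes)
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as c but belonging to another class
    fn = cm.sum(axis=1) - tp  # belonging to c but predicted as another class
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)
    return f1.mean(), recall.mean()  # (macro F1, balanced accuracy)
```

For `y_true = [0, 0, 0, 1]` and `y_pred = [0, 0, 1, 1]`, the per-class F1-scores are 0.8 and 2/3 and the per-class recalls are 2/3 and 1, giving a macro F1 of about 0.733 and a balanced accuracy of about 0.833.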

We report the mean and standard deviation of these metrics over 5-fold cross-validation. In addition to these two metrics, we also provide further evaluation metrics, including precision, recall, sensitivity, specificity, area under the receiver operating characteristic curve (AUC), and the Matthews correlation coefficient (MCC), as suggested in [61]. These metrics are reported for our best results and are available as Supplementary Materials in our GitHub repository (Tables S1–S3).

2.5. Fusion and Implementation Details

To perform fusion, we used weighted averaging as follows:

$$\hat{y}_{\text{fused}} = w \, \hat{y}_A + (1 - w) \, \hat{y}_B,$$

where $\hat{y}_{\text{fused}}$ denotes the fused prediction obtained by ensembling, $\hat{y}_A$ and $\hat{y}_B$ represent the predictions of Model A and Model B, respectively, and $w$ is the weighting factor assigned to Model A (with $1 - w$ assigned to Model B). We empirically selected the optimal $w$ within the range using a step size of .
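A sketch of the weighted averaging and a grid search over $w$ follows. The grid (0 to 1 in steps of 0.1), the use of balanced accuracy as the selection criterion, and all function names are assumptions for illustration; to avoid optimistic bias, $w$ would normally be selected on validation data rather than on the test fold.

```python
import numpy as np


def fuse(p_a: np.ndarray, p_b: np.ndarray, w: float) -> np.ndarray:
    """Weighted average of two models' class-probability arrays of shape (n, C)."""
    return w * p_a + (1.0 - w) * p_b


def balanced_accuracy(y_true, y_pred, num_classes):
    # Mean of per-class recalls over the classes present in y_true.
    recalls = [np.mean(y_pred[y_true == c] == c)
               for c in range(num_classes) if np.any(y_true == c)]
    return float(np.mean(recalls))


def select_weight(p_a, p_b, y_true, grid=np.linspace(0.0, 1.0, 11)):
    """Return the grid value of w (assumed grid) that maximizes balanced accuracy."""
    num_classes = p_a.shape[1]
    scores = [balanced_accuracy(y_true, fuse(p_a, p_b, w).argmax(axis=1), num_classes)
              for w in grid]
    best = int(np.argmax(scores))  # first best w if several tie
    return float(grid[best]), scores[best]
```

Because $\hat{y}_A$ and $\hat{y}_B$ are combined before the argmax, two models that err on different samples can produce a fused prediction that is correct on samples where either model alone is wrong.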

For the implementation of the standard baseline models as well as graph construction, we use the PyTorch (version 2.5.1) deep learning framework. To extract features from the CONCH, UNI, and UNI2 foundation models, we follow the code and instructions provided on the respective GitHub pages at https://github.com/mahmoodlab/UNI (accessed on 3 December 2025) and https://github.com/mahmoodlab/CONCH (accessed on 3 December 2025).

All experiments are conducted using 5-fold cross-validation, and the results are reported as the average and standard deviation across the folds. For the standard baseline models, we used the Adam optimizer with a learning rate of , trained for 300 epochs with a batch size of 32, and fixed the random seed for reproducibility. The graph-based models were likewise optimized with Adam and a learning rate of , and were also trained for 300 epochs with a batch size of 32 using the same fixed random seed. The cross-entropy loss function was used in all experiments.

As noted earlier, we used a fixed number of 100 clusters for the GNN-based experiments employing ViT, CONCH, UNI, and UNI2 feature extractors. Alternative numbers of clusters were also evaluated, but the results did not vary substantially (see Section 3). For the GNN-based experiments with VGG19, EfficientNetV2S, and DenseNet201, we used 49 clusters, corresponding to the number of generated patches, since k-means cannot produce more clusters than there are input patches (see the fifth column in Table 1).

Experiments were run on a single workstation equipped with an Intel Xeon(R) Gold 6326 CPU running at 2.90 GHz, 47 GB of RAM, and an NVIDIA A40-16Q GPU with 16 GB of available memory. The code developed to generate and reproduce the results is publicly available in our GitHub repository at https://github.com/nematollahsaeidi/his_img_GNN_classification (accessed on 3 December 2025).

3. Results & Discussion

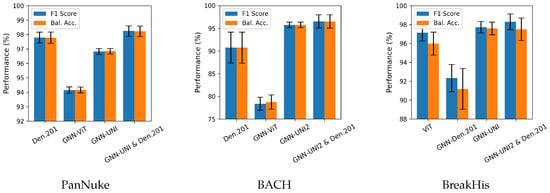

The classification results from our experiments are presented in Table 2, Table 3 and Table 4 for the PanNuke, BACH, and BreakHis datasets, respectively. The tables are divided into four parts: the first part shows the performance of fine-tuning standard models; the second part reports the performance of GNN models with standard feature extraction using pre-trained CNNs or ViT; the third part presents the performance of GNNs with pathology foundation model feature extraction; and the fourth part shows the performance of prediction fusion between the best GNN models (GNN-UNI or GNN-UNI2) and the overall best-performing standard CNN models (EfficientNetV2S and DenseNet201).

Table 2.

Classification performance on the PanNuke dataset. Bal. Acc.: Balanced Accuracy, Den.201: DenseNet201, Eff.V2S: EfficientNetV2S, ViT: Vision Transformer-Base. The bold results indicate the best performance for each metric.

Table 3.

Classification performance on the BACH dataset. Bal. Acc.: Balanced Accuracy, Den.201: DenseNet201, Eff.V2S: EfficientNetV2S, ViT: Vision Transformer-Base. The bold results indicate the best performance for each metric.

Table 4.

Classification performance on the BreakHis dataset. Bal. Acc.: Balanced Accuracy, Den.201: DenseNet201, Eff.V2S: EfficientNetV2S, ViT: Vision Transformer-Base. The bold results indicate the best performance for each metric.

As shown in the tables, DenseNet201 and EfficientNetV2-S achieve the best overall performance among the standard fine-tuned models. For GNNs trained with features from standard models, GNN-ViT outperforms GNN-VGG19, GNN-DenseNet201, and GNN-EfficientNetV2-S by a considerable margin, with the exception of the BreakHis dataset, where GNN-DenseNet201 delivers slightly better performance than GNN-ViT. Nevertheless, the fine-tuned CNN and ViT models consistently outperform GNNs trained on pre-trained CNN or ViT features.

In contrast, GNNs trained with foundation model features outperform the baseline GNNs in most cases. Among the evaluated foundation models, CONCH yields the lowest overall performance, while UNI and UNI-2 achieve competitive results.

More specifically, UNI and UNI2 perform strongly on both the multi-organ (PanNuke) and single-organ (BACH and BreakHis) datasets. While CONCH performs comparably to the UNI family on the PanNuke and BreakHis datasets, its performance drops notably on BACH. This may be related to the much larger original image size in BACH (2048 × 1536 pixels) relative to the other datasets: since all images are resized to 224 × 224 pixels before feature extraction, BACH images undergo far stronger downsampling, which suggests that CONCH may be more sensitive to image resolution.

Fusion strategies combining the best foundation models (UNI or UNI-2) with the top-performing fine-tuned models (EfficientNetV2-S and DenseNet201) yield slight performance improvements, highlighting the potential of leveraging complementary information through model fusion. Among the fused models, the combination of GNN-UNI2 and DenseNet201 achieves the best overall performance.

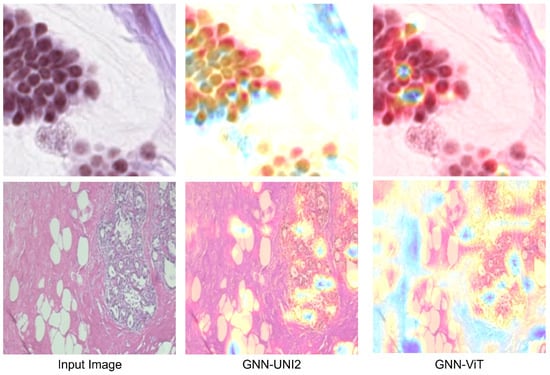

For better visualization, bar charts of the best-performing methods from each part of the results tables are shown in Figure 3. Additionally, in Figure 4, we present the attention heatmaps of example images using the Grad-CAM method [62] for the GNN-UNI2 (the best overall GNN model with foundation model features) and GNN-ViT models (the best overall GNN model with standard model features). In both cases, GNN-UNI2 correctly classified the images, whereas GNN-ViT produced incorrect predictions. As illustrated, GNN-UNI2 focuses more strongly on clinically relevant regions of the tissue compared to GNN-ViT.

Figure 4.

Attention heatmaps generated using the Grad-CAM method for two example images are shown for the GNN-UNI2 and GNN-ViT models. In both cases, GNN-UNI2 correctly classified the images, whereas GNN-ViT produced incorrect predictions. As illustrated, GNN-UNI2 focuses more strongly on clinically relevant regions of the tissue compared to GNN-ViT.

We also calculated 95% confidence intervals for the best GNN-based models using critical values from Student's t distribution and report them in Table 5. GNN-UNI2 outperforms GNN-ViT on the BACH and BreakHis datasets, with non-overlapping 95% confidence intervals, whereas on PanNuke the intervals overlap, indicating comparable performance.
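A minimal sketch of a two-sided 95% t-interval for the mean of five cross-validation fold scores follows, assuming the standard form $\bar{x} \pm t_{0.975,\,n-1}\, s/\sqrt{n}$ with $n = 5$; the exact computation behind Table 5 is not spelled out in the text. The critical value $t_{0.975,4} \approx 2.7764$ is hard-coded to keep the example dependency-free (`scipy.stats.t.ppf(0.975, df=4)` returns the same value), and the function name and example fold scores are hypothetical.

```python
import math
import statistics

T_975_DF4 = 2.7764  # two-sided 95% critical value of Student's t with 4 degrees of freedom


def t_confidence_interval(fold_scores):
    """95% CI for the mean of 5 cross-validation fold scores (t distribution, df = 4)."""
    n = len(fold_scores)
    if n != 5:
        raise ValueError("the hard-coded critical value assumes 5 folds")
    mean = statistics.mean(fold_scores)
    sd = statistics.stdev(fold_scores)  # sample standard deviation (n - 1 denominator)
    half_width = T_975_DF4 * sd / math.sqrt(n)
    return mean - half_width, mean + half_width
```

For hypothetical fold scores of 0.90, 0.92, 0.94, 0.96, and 0.98, the interval is roughly 0.94 ± 0.039. Non-overlapping intervals are a conservative indication of a difference; a paired test across folds would compare two models more directly.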

Table 5.

Performance of the best GNN-based approaches using UNI-2 foundation model features and ViT features with 95% confidence intervals (t distribution). Values are reported as mean ± standard deviation using 5-fold cross-validation.

Besides the main results presented in the previous tables, we conduct a number of ablation studies. First, we evaluate the impact of the number of clusters on classification performance. This analysis is performed using the best GNN model (GNN-UNI2) with different numbers of clusters (10, 30, 50, and 100) on the PanNuke dataset. As the results in Table 6 indicate, varying the number of clusters does not drastically affect performance; however, the empirically chosen value of 100 clusters yields slightly improved results.

Table 6.

Classification performance of the GNN-UNI2 model with varying numbers of clusters on the PanNuke dataset. Bal. Acc.: Balanced Accuracy. The bold results indicate the best performance for each metric.

We also investigate the effect of the similarity threshold value on classification performance, and the results based on the GNN-UNI2 model are reported in Table 7. As the results show, the chosen threshold value of 0.6 delivers superior performance compared to other tested thresholds.

Table 7.

Classification performance of the GNN-UNI2 model with different similarity threshold values on the PanNuke dataset. Bal. Acc.: Balanced Accuracy. The bold results indicate the best performance for each metric.

To assess the performance of the weighted averaging strategy, we compare it with other approaches, namely simple averaging and meta-learning using logistic regression and a two-layer neural network, and report the results in Table 8. As shown in the table, the weighted averaging method achieves slightly superior performance while being computationally less expensive compared to the two meta-learners.
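For comparison, a logistic-regression meta-learner (stacking) for the binary case can be sketched in NumPy as below. It takes the two base models' positive-class probabilities as input features and is fitted by plain gradient descent on the mean log-loss; the learning rate, number of epochs, and function names are hypothetical, and this is not necessarily how the meta-learners in Table 8 were configured. In practice, such a meta-learner is fitted on held-out (e.g., out-of-fold) predictions so that it does not learn from predictions the base models made on their own training data.

```python
import numpy as np


def fit_logistic_stacker(p_a, p_b, y, lr=0.5, epochs=2000):
    """Fit logistic regression on two base models' positive-class probabilities.

    p_a, p_b: (n,) probabilities of class 1; y: (n,) labels in {0, 1}.
    Returns the weight vector [w_a, w_b, bias].
    """
    X = np.column_stack([p_a, p_b, np.ones_like(p_a)])  # two inputs plus a bias column
    w = np.zeros(X.shape[1])
    for _ in range(epochs):
        prob = 1.0 / (1.0 + np.exp(-X @ w))      # sigmoid
        w -= lr * X.T @ (prob - y) / len(y)      # gradient step on the mean log-loss
    return w


def predict_stacker(w, p_a, p_b):
    X = np.column_stack([p_a, p_b, np.ones_like(p_a)])
    return (X @ w > 0).astype(int)  # logit > 0 corresponds to probability > 0.5
```

Compared with weighted averaging, which has a single parameter $w$, the meta-learner fits three parameters and requires an additional training loop, which is consistent with the higher computational cost noted above.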

Table 8.

Classification performance of different ensembling approaches for GNN-UNI and DenseNet201 on the PanNuke dataset. The bold results indicate the best performance for each metric.

To estimate the computational cost, we measure both the training and testing times of different models, as well as GPU memory consumption. The results for the BreakHis dataset are reported in Table 9 and Table 10 as representative examples. As shown in Table 9, the training time of GNN-based approaches is considerably lower than that of fine-tuning methods; however, fine-tuned models achieve faster inference. In the case of GNN models, most of the training and testing time is consumed by graph construction rather than by the GNN model training or inference itself. In fact, when excluding the graph construction time, GNN models are significantly faster than fine-tuned models during inference.

Table 9.

Comparison of training and testing times across models on the BreakHis dataset. The reported values correspond to the average training time per cross-validation fold (in seconds) and the average testing time per image (in milliseconds).

Table 10.

Comparison of GPU memory consumption across models on the BreakHis dataset. The reported values are the GPU memory used during graph construction, during GNN/CNN/ViT training, and in total (all in MB).

A similar pattern holds for GPU memory consumption. As Table 10 shows, training GNN-based models requires much less memory than fine-tuning standard CNN or ViT models, although graph construction itself consumes a substantial amount of GPU memory.

Despite the promising results reported in this study, several limitations should be acknowledged.

First, although three well-established benchmark datasets were used for training and evaluation, using larger-scale datasets or extending the approach to whole-slide image classification would allow for a more comprehensive assessment.

Second, only a specific set of foundation and baseline models was considered. Future work could investigate a wider range of both medical and non-medical foundation models, as well as additional baseline architectures. The models included in this study were deliberately chosen due to their strong performance in previous computational pathology and computer vision tasks [28,38,39] and because the baselines are widely adopted in several related studies for medical image classification [45,63].

Third, prediction fusion was limited to a weighted averaging strategy. Alternative ensemble methods may provide further improvements in classification performance and should be explored in subsequent work.

Fourth, the datasets used in this study capture only the static characteristics of the sampled tissue. Future work could apply the proposed method to datasets with longitudinal information, enabling the analysis of tumor region dynamics over time [64].

Finally, the main computational bottleneck of GNN-based approaches lies in graph construction rather than in training or inference. Future research should therefore explore strategies such as batch-based parallel computation for preprocessing and graph generation to reduce runtime.
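One way to make graph generation batch-based is to split the pairwise similarity computation into row blocks. The blocks are independent of each other and can therefore be dispatched in parallel. The sketch below is illustrative only and rests on these assumptions:

- Hypothetically, edges connect nodes whose cosine similarity reaches the threshold.
- All vectors are normalized once up front.
- Row blocks are handed to a `concurrent.futures.ThreadPoolExecutor`.

In pure Python, the global interpreter lock limits the speed-up from threads. The blocking pays off when each block becomes one matrix product in a numerical library or on a GPU. In that setting, it also bounds peak memory to a block of `block_size` by `n` similarities instead of the full `n` by `n` matrix. The function names are hypothetical.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence, Tuple

Edge = Tuple[int, int]


def _unit(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length (zero vectors stay zero)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0.0 else [0.0] * len(v)


def _block_edges(unit: List[List[float]], start: int, stop: int,
                 threshold: float) -> List[Edge]:
    """Edges (i, j) with start <= i < stop and j > i whose cosine similarity
    (the dot product of unit vectors) is >= threshold."""
    edges = []
    for i in range(start, stop):
        for j in range(i + 1, len(unit)):
            if sum(a * b for a, b in zip(unit[i], unit[j])) >= threshold:
                edges.append((i, j))
    return edges


def blocked_threshold_edges(features: List[Sequence[float]],
                            threshold: float = 0.6,
                            block_size: int = 256,
                            max_workers: int = 4) -> List[Edge]:
    """Build the thresholded similarity graph block by block; blocks are
    independent, so a pool of worker threads processes them."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    unit = [_unit(v) for v in features]  # normalize once, reuse in every block
    starts = range(0, len(unit), block_size)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        parts = pool.map(
            lambda s: _block_edges(unit, s, min(s + block_size, len(unit)), threshold),
            starts,
        )
        return [edge for part in parts for edge in part]  # map() keeps block order
```

`Executor.map` returns results in submission order. The resulting edge list is therefore the same for any block size, so the block size can be tuned to the available memory without changing the graph.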

4. Conclusions

Deep learning has transformed image analysis, yet most feature extractors used in computational pathology, such as standard CNNs and ViTs, are still pre-trained on natural images. This domain mismatch limits their ability to capture the fine-grained morphological cues that drive clinical decision-making. Pathology-specific foundation models are an important step forward because they are trained on large and diverse histological datasets and therefore provide features that are better aligned with the domain. Our work builds on this emerging trend by examining how these specialized representations behave within graph-based learning pipelines.

We systematically investigated the integration of foundation model features with graph neural networks for histological image classification. Using three benchmark datasets, we demonstrated that pathology-specific foundation models enhance GNN performance compared to conventional CNN- or ViT-based feature extractors, while also providing competitive results against standard fine-tuned baseline models. Furthermore, we showed that fusing predictions from foundation model feature-based GNNs and fine-tuned CNNs yields slight performance gains, underscoring the complementary nature of these representations. Although graph construction introduces computational overhead, our analysis showed that the proposed pipelines are more efficient than purely fine-tuned approaches when only model training and inference times are considered.

Looking ahead, this line of work offers several promising extensions. Future work can focus on applying this framework to larger and more diverse datasets, evaluating additional baseline and foundation models, extending it to whole-slide-level prediction, exploring more advanced ensemble strategies, and further optimizing graph construction. Overall, our findings highlight the promise of combining foundation model representations with graph-based learning to advance the state of computational pathology.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/bioengineering12121332/s1, Table S1: Classification performance on the PanNuke dataset based on F1-score, balanced accuracy, precision, recall, sensitivity, specificity, area under the receiver operating characteristic curve (AUC), and Matthews Correlation Coefficient (MCC); Table S2: Classification performance on the BACH dataset based on F1-score, balanced accuracy, precision, recall, sensitivity, specificity, area under the receiver operating characteristic curve (AUC), and Matthews Correlation Coefficient (MCC); Table S3: Classification performance on the BreakHis dataset based on F1-score, balanced accuracy, precision, recall, sensitivity, specificity, area under the receiver operating characteristic curve (AUC), and Matthews Correlation Coefficient (MCC).

Author Contributions

Conceptualization, N.S. and A.M.; methodology, N.S. and A.M.; software, N.S. and N.T.; validation, N.S., N.T. and A.M.; investigation, N.S. and A.M.; writing—original draft, N.S. and A.M.; writing—review and editing, N.S., N.T., R.W. and A.M.; project administration, R.W. and A.M.; funding acquisition, N.S., R.W. and A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the OEAD Ernst Mach Grant (reference number: MPC-2024-01396).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this study are publicly available from previously published papers [44,45,46]. The code developed for this study is available on GitHub, with the corresponding link provided in the manuscript.

Acknowledgments

We would like to thank the reviewers for their valuable feedback, which helped us improve the manuscript.

Conflicts of Interest

The authors declare no conflicts of interest related to this work.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| GNN | Graph Neural Network |
| CNN | Convolutional Neural Network |
| ViT | Vision Transformer |
| GAT | Graph Attention Network |
| MB | Mobile Inverted Bottleneck |
| MLP | Multi-Layer Perceptron |
| Bal. Acc. | Balanced Accuracy |
| Den.201 | DenseNet201 |
| Eff.V2S | EfficientNetV2S |

References

1. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; Van Der Laak, J.A.; Van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

2. Gurcan, M.N.; Boucheron, L.; Can, A.; Madabhushi, A.; Rajpoot, N.; Yener, B. Histopathological Image Analysis: A Review. IEEE Rev. Biomed. Eng. 2009, 2, 147–171.

3. Komura, D.; Ishikawa, S. Machine Learning Methods for Histopathological Image Analysis. Comput. Struct. Biotechnol. J. 2018, 16, 34–42.

4. Wang, L.; Shen, L.; Yi, J.; Yang, X.; Peng, Y.; Ding, J.; Tian, Y.; Yan, S. Prediction model of dynamic fracture toughness of nickel-based alloys: Combination of data-driven and multi-scale modelling. Eur. J. Mech.-A/Solids 2026, 116, 105892.

5. Mao, Z.; Suzuki, S.; Nabae, H.; Miyagawa, S.; Suzumori, K.; Maeda, S. Machine learning-enhanced soft robotic system inspired by rectal functions to investigate fecal incontinence. Bio-Des. Manuf. 2025, 8, 482–494.

6. Bahadir, C.D.; Omar, M.; Rosenthal, J.; Marchionni, L.; Liechty, B.; Pisapia, D.J.; Sabuncu, M.R. Artificial intelligence applications in histopathology. Nat. Rev. Electr. Eng. 2024, 1, 93–108.

7. Yan, T.; Chen, G.; Zhang, H.; Wang, G.; Yan, Z.; Li, Y.; Xu, S.; Zhou, Q.; Shi, R.; Tian, Z.; et al. Convolutional neural network with parallel convolution scale attention module and ResCBAM for breast histology image classification. Heliyon 2024, 10, e30889.

8. Karuppasamy, A. Recent ViT based models for Breast Cancer Histopathology Image Classification. In Proceedings of the 14th International Conference on Computing Communication and Networking Technologies, Delhi, India, 6–8 July 2023; pp. 1–5.

9. Kanadath, A.; Jothi, J.A.A.; Urolagin, S. CViTS-Net: A CNN-ViT Network with Skip Connections for Histopathology Image Classification. IEEE Access 2024, 12, 117627–117649.

10. Mallya, M.; Hamarneh, G. Deep Multimodal Guidance for Medical Image Classification. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2022, Singapore, 18–22 September 2022; Wang, L., Dou, Q., Fletcher, P.T., Speidel, S., Li, S., Eds.; Springer: Cham, Switzerland, 2022; pp. 298–308.

11. Brussee, S.; Buzzanca, G.; Schrader, A.M.; Kers, J. Graph neural networks in histopathology: Emerging trends and future directions. Med. Image Anal. 2025, 101, 103444.

12. Tepe, E.; Bilgin, G. Classification of Tissue Types in Histology Images Using Graph Convolutional Networks. In Proceedings of the 2022 10th International Symposium on Digital Forensics and Security (ISDFS), Istanbul, Turkey, 6–7 June 2022; pp. 1–3.

13. Saeidi, N.; Karshenas, H.; Shoushtarian, B.; Hatamikia, S.; Woitek, R.; Mahbod, A. Leveraging Medical Foundation Model Features in Graph Neural Network-Based Retrieval of Breast Histopathology Images. arXiv 2024, arXiv:2405.04211.

14. Zhang, S.; Tong, H.; Xu, J.; Maciejewski, R. Graph convolutional networks: A comprehensive review. Comput. Soc. Netw. 2019, 6, 11.

15. Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

16. Hamilton, W.; Ying, Z.; Leskovec, J. Inductive representation learning on large graphs. Adv. Neural Inf. Process. Syst. 2017, 30, 1024–1034.

17. Zhang, L.; Zhao, Y.; Che, T.; Li, S.; Wang, X. Graph neural networks for image-guided disease diagnosis: A review. iRADIOLOGY 2023, 1, 151–166.

18. Georgiou, T.; Liu, Y.; Chen, W.; Lew, M. A survey of traditional and deep learning-based feature descriptors for high dimensional data in computer vision. Int. J. Multimed. Inf. Retr. 2020, 9, 135–170.

19. del Toro, O.J.; Otálora, S.; Andersson, M.; Eurén, K.; Hedlund, M.; Rousson, M.; Müller, H.; Atzori, M. Chapter 10—Analysis of Histopathology Images: From Traditional Machine Learning to Deep Learning. In Biomedical Texture Analysis; Depeursinge, A., Al-Kadi, O.S., Mitchell, J., Eds.; The Elsevier and MICCAI Society Book Series; Academic Press: Cambridge, MA, USA, 2017; pp. 281–314.

20. Xu, J.L.; Esquerre, C.; Sun, D.W. Methods for performing dimensionality reduction in hyperspectral image classification. J. Near Infrared Spectrosc. 2018, 26, 61–75.

21. Bolón-Canedo, V.; Remeseiro, B. Feature selection in image analysis: A survey. Artif. Intell. Rev. 2020, 53, 2905–2931.

22. Khan, K.; Awang, S.; Talab, M.A.; Kahtan, H. A comprehensive review of machine learning and deep learning techniques for intraclass variability breast cancer recognition. Frankl. Open 2025, 11, 100296.

23. Srinidhi, C.L.; Ciga, O.; Martel, A.L. Deep Neural Network Models for Computational Histopathology: A Survey. Med. Image Anal. 2021, 67, 101813.

24. Tan, M.; Le, Q. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; Chaudhuri, K., Salakhutdinov, R., Eds.; PMLR: Cambridge, MA, USA, 2019; Volume 97, pp. 6105–6114.

25. Tan, M.; Le, Q. EfficientNetV2: Smaller Models and Faster Training. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Cambridge, MA, USA, 2021; Volume 139, pp. 10096–10106.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 770–778.

27. Rehan, A.; Mukhtar, A.; Zaidi, H.; Ul Abidin, Z.; Tariq Toor, W. Hybrid Deep Learning for Blood Cancer Detection: A CNN-GCN Approach for Enhanced Diagnostic Accuracy. In Proceedings of the 2025 International Conference on Emerging Technologies in Electronics, Computing, and Communication (ICETECC), Jamshoro, Pakistan, 23–25 April 2025; pp. 1–6.

28. Mahbod, A.; Saeidi, N.; Hatamikia, S.; Woitek, R. Evaluating pre-trained convolutional neural networks and foundation models as feature extractors for content-based medical image retrieval. Eng. Appl. Artif. Intell. 2025, 150, 110571.

29. Min, E.; Chen, R.; Bian, Y.; Xu, T.; Zhao, K.; Huang, W.; Zhao, P.; Huang, J.; Ananiadou, S.; Rong, Y. Transformer for graphs: An overview from architecture perspective. arXiv 2022, arXiv:2202.08455.

30. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

31. Tellez, D.; Litjens, G.; Bándi, P.; Bulten, W.; Bokhorst, J.M.; Ciompi, F.; van der Laak, J. Quantifying the effects of data augmentation and stain color normalization in convolutional neural networks for computational pathology. Med. Image Anal. 2019, 58, 101544.

32. Faryna, K.; van der Laak, J.; Litjens, G. Automatic data augmentation to improve generalization of deep learning in H&E stained histopathology. Comput. Biol. Med. 2024, 170, 108018.

33. Mahbod, A.; Dorffner, G.; Ellinger, I.; Woitek, R.; Hatamikia, S. Improving generalization capability of deep learning-based nuclei instance segmentation by non-deterministic train time and deterministic test time stain normalization. Comput. Struct. Biotechnol. J. 2024, 23, 669–678.

34. Adjadj, B.; Bannier, P.A.; Horent, G.; Mandela, S.; Lyon, A.; Schutte, K.; Marteau, U.; Gaury, V.; Dumont, L.; Mathieu, T.; et al. Towards Comprehensive Cellular Characterisation of H&E slides. arXiv 2025, arXiv:2508.09926.

35. Hörst, F.; Rempe, M.; Becker, H.; Heine, L.; Keyl, J.; Kleesiek, J. Cellvit++: Energy-efficient and adaptive cell segmentation and classification using foundation models. arXiv 2025, arXiv:2501.05269.

36. Wang, X.; Du, Y.; Yang, S.; Zhang, J.; Wang, M.; Zhang, J.; Yang, W.; Huang, J.; Han, X. RetCCL: Clustering-guided contrastive learning for whole-slide image retrieval. Med. Image Anal. 2023, 83, 102645.

37. Griebel, T.; Archit, A.; Pape, C. Segment anything for histopathology. arXiv 2025, arXiv:2502.00408.

38. Chen, R.J.; Ding, T.; Lu, M.Y.; Williamson, D.F.K.; Jaume, G.; Song, A.H.; Chen, B.; Zhang, A.; Shao, D.; Shaban, M.; et al. Towards a general-purpose foundation model for computational pathology. Nat. Med. 2024, 30, 850–862.

39. Lu, M.Y.; Chen, B.; Williamson, D.F.K.; Chen, R.J.; Liang, I.; Ding, T.; Jaume, G.; Odintsov, I.; Le, L.P.; Gerber, G.; et al. A visual-language foundation model for computational pathology. Nat. Med. 2024, 30, 863–874.

40. Chen, R.J.; Ding, T.; Lu, M.Y.; Williamson, D.F.K.; Jaume, G.; Song, A.H.; Chen, B.; Zhang, A.; Shao, D.; Shaban, M.; et al. UNI: Universal Pathology Foundation Model. 2023. Available online: https://github.com/mahmoodlab/UNI (accessed on 27 August 2025).

41. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

42. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

43. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

44. Gamper, J.; Alemi Koohbanani, N.; Benet, K.; Khuram, A.; Rajpoot, N. PanNuke: An Open Pan-Cancer Histology Dataset for Nuclei Instance Segmentation and Classification. In Proceedings of the Digital Pathology; Reyes-Aldasoro, C.C., Janowczyk, A., Veta, M., Bankhead, P., Sirinukunwattana, K., Eds.; Springer: Cham, Switzerland, 2019; pp. 11–19.

45. Aresta, G.; Araújo, T.; Kwok, S.; Chennamsetty, S.S.; Safwan, M.; Alex, V.; Marami, B.; Prastawa, M.; Chan, M.; Donovan, M.; et al. BACH: Grand challenge on breast cancer histology images. Med. Image Anal. 2019, 56, 122–139.

46. Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A Dataset for Breast Cancer Histopathological Image Classification. IEEE Trans. Biomed. Eng. 2016, 63, 1455–1462.

47. Huang, Z.; Bianchi, F.; Yuksekgonul, M.; Montine, T.J.; Zou, J. A visual–language foundation model for pathology image analysis using medical Twitter. Nat. Med. 2023, 29, 2307–2316.

48. Triyadi, A.B.; Bustamam, A.; Anki, P. Deep Learning in Image Classification using VGG-19 and Residual Networks for Cataract Detection. In Proceedings of the 2nd International Conference on Information Technology and Education, Malang, Indonesia, 22 January 2022; pp. 293–297.

49. Chen, C.; Mat Isa, N.A.; Liu, X. A review of convolutional neural network based methods for medical image classification. Comput. Biol. Med. 2025, 185, 109507.

50. Mohammed, F.A.; Tune, K.K.; Assefa, B.G.; Jett, M.; Muhie, S. Medical Image Classifications Using Convolutional Neural Networks: A Survey of Current Methods and Statistical Modeling of the Literature. Mach. Learn. Knowl. Extr. 2024, 6, 699–735.

51. Mahbod, A.; Schaefer, G.; Wang, C.; Ecker, R.; Dorffner, G.; Ellinger, I. Investigating and Exploiting Image Resolution for Transfer Learning-based Skin Lesion Classification. In Proceedings of the International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; pp. 4047–4053.

52. Khullar, V.; Ahuja, R.; Solanki, V.; Chaudhary, A. Real Fake Image Classification using Explainable EfficientNetV2S: A Comparative Analysis. In Proceedings of the International Conference on Electrical Electronics and Computing Technologies, Greater Noida, India, 29–31 August 2024; Volume 1, pp. 1–5.

53. Ganz, J.; Ammeling, J.; Rosbach, E.; Lausser, L.; Bertram, C.A.; Breininger, K.; Aubreville, M. Is Self-supervision Enough? In Proceedings of the Bildverarbeitung für die Medizin; Palm, C., Breininger, K., Deserno, T., Handels, H., Maier, A., Maier-Hein, K.H., Tolxdorff, T.M., Eds.; Springer: Wiesbaden, Germany, 2025; pp. 63–68.

54. Lee, J.; Lim, J.; Byeon, K.; Kwak, J.T. Benchmarking pathology foundation models: Adaptation strategies and scenarios. Comput. Biol. Med. 2025, 190, 110031.

55. Vadori, V.; Peruffo, A.; Graïc, J.M.; Finos, L.; Grisan, E. Mind the gap: Evaluating patch embeddings from general-purpose and histopathology foundation models for cell segmentation and classification. arXiv 2025, arXiv:2502.02471.

56. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning, Virtual, 18–24 July 2021; Meila, M., Zhang, T., Eds.; PMLR: Cambridge, MA, USA, 2021; Volume 139, pp. 8748–8763.

57. Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A.; et al. DINOv2: Learning Robust Visual Features without Supervision. arXiv 2023, arXiv:2304.07193.

58. Lei, C.; Wang, H.; Lei, J. Si-gat: A method based on improved graph attention network for sonar image classification. arXiv 2022, arXiv:2211.15133.

59. Dai, Y.; Sharoff, S.; de Kamps, M. GATology for Linguistics: What Syntactic Dependencies It Knows. arXiv 2023, arXiv:2305.13403.

60. Singh, P.K.; Susan, S. EfficientNetB0-CatBoost: Deep Learning with Categorical Boosting for Food Image Recognition. Cureus J. 2025, 2, es44389-025-03791-2.

61. Mookkandi, K.; Nath, M.K.; Dash, S.S.; Mishra, M.; Blange, R. A Robust Lightweight Vision Transformer for Classification of Crop Diseases. AgriEngineering 2025, 7, 268.

62. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22 October 2017; pp. 618–626.

63. Handa, P.; Mahbod, A.; Schwarzhans, F.; Woitek, R.; Goel, N.; Dhir, M.; Chhabra, D.; Jha, S.; Sharma, P.; Thakur, V.; et al. Capsule vision 2024 challenge: Multi-class abnormality classification for video capsule endoscopy. arXiv 2024, arXiv:2408.04940.

64. Meyer, P.G.; Cherstvy, A.G.; Seckler, H.; Hering, R.; Blaum, N.; Jeltsch, F.; Metzler, R. Directedeness, correlations, and daily cycles in springbok motion: From data via stochastic models to movement prediction. Phys. Rev. Res. 2023, 5, 043129.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).