CoviSwin: A Deep Vision Transformer for Automatic Segmentation of COVID-19 CT Scans

Abstract

1. Introduction

- Transformer-based model utilizing a Swin variant: We design a segmentation framework that employs the Swin Transformer V2 Large, hereafter denoted SwinV2-L, with approximately 197 million parameters, as the encoder component. The large capacity, hierarchical attention structure, and shifted window mechanism of SwinV2 enable multi-scale feature extraction, improving the model’s ability to capture both fine-grained and global contextual information within medical images.

- Decoder with attention-gated refinement and deep supervision: The decoder incorporates three key enhancements: (i) attention gates within the upsampling/deconvolution blocks to selectively emphasize task-relevant features, (ii) a residual connection in the final decoder block to stabilize gradient flow and preserve fine structural details, and (iii) an auxiliary output head (deep supervision) attached at an intermediate decoder stage. The residual path provides identity “highways” that mitigate vanishing gradients in deep U-shaped encoder–decoder networks and Transformer blocks, while the auxiliary head shortens the backpropagation path and supplies stronger supervisory signals to earlier layers. Together, these components may enhance feature refinement, improve training stability and convergence, and potentially yield more accurate segmentation masks.

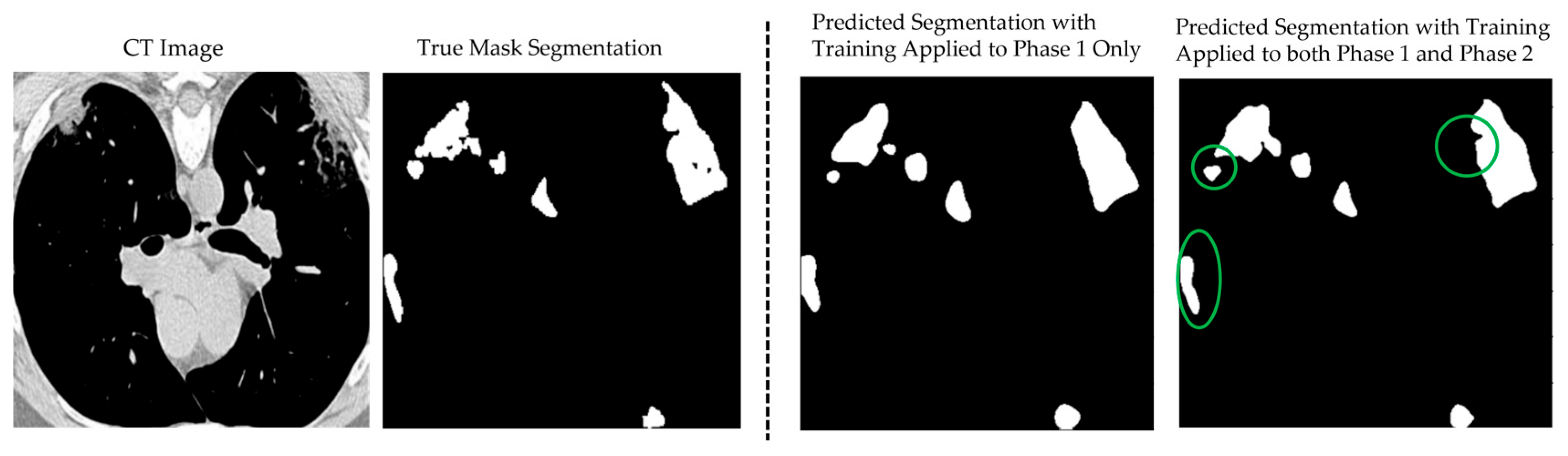

- Two-phase training mechanism: We employ a two-phase training strategy designed to improve both optimization stability and training efficiency. During the first phase, the encoder is kept frozen while the model is trained on a large pseudo-labeled dataset [8]. This initialization step serves to steer the decoder’s parameters away from random initialization and towards more promising regions of the solution space. In the second phase, we selectively unfreeze parts of the encoder and jointly fine-tune the entire model on the target dataset. This progression enables more effective transfer of knowledge from the pseudo-label pretraining stage, accelerates convergence, and could yield a more robust set of optimized parameters.
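The freeze/unfreeze schedule described in the last contribution can be sketched without any deep-learning framework. The snippet below is only an illustration: the `Param` class, the `configure_phase` and `count` helpers, the parameter names, and the toy parameter counts are all hypothetical, and the choice to unfreeze the last encoder stage in phase 2 is an assumption, since the text only says that parts of the encoder are unfrozen.

```python
from dataclasses import dataclass


@dataclass
class Param:
    name: str              # hypothetical naming, e.g. "encoder.stage4.weight"
    numel: int             # number of scalar weights in this tensor
    trainable: bool = True


def configure_phase(params, phase, unfreeze_prefixes=()):
    """Set the trainable flag of every parameter for a training phase.

    Phase 1: all encoder parameters are frozen; only the decoder learns.
    Phase 2: encoder parameters whose name starts with one of
             `unfreeze_prefixes` are unfrozen; the rest stay frozen.
    """
    prefixes = tuple(unfreeze_prefixes)
    for p in params:
        if not p.name.startswith("encoder."):
            p.trainable = True                      # decoder and heads always train
        elif phase == 1:
            p.trainable = False                     # frozen encoder
        else:
            p.trainable = p.name.startswith(prefixes)
    return params


def count(params):
    """Return (trainable, frozen) parameter totals."""
    trainable = sum(p.numel for p in params if p.trainable)
    frozen = sum(p.numel for p in params if not p.trainable)
    return trainable, frozen


# Toy model with made-up sizes, for illustration only.
model = [
    Param("encoder.stage1.weight", 100),
    Param("encoder.stage4.weight", 400),
    Param("decoder.up3.weight", 50),
]

print(count(configure_phase(model, phase=1)))   # (50, 500)
print(count(configure_phase(model, phase=2,
                            unfreeze_prefixes=["encoder.stage4."])))  # (450, 100)
```

In a real framework the same idea is usually expressed by toggling a per-tensor gradient flag and rebuilding the optimizer over the trainable subset before phase 2 starts.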

2. Related Work

3. Materials and Methods

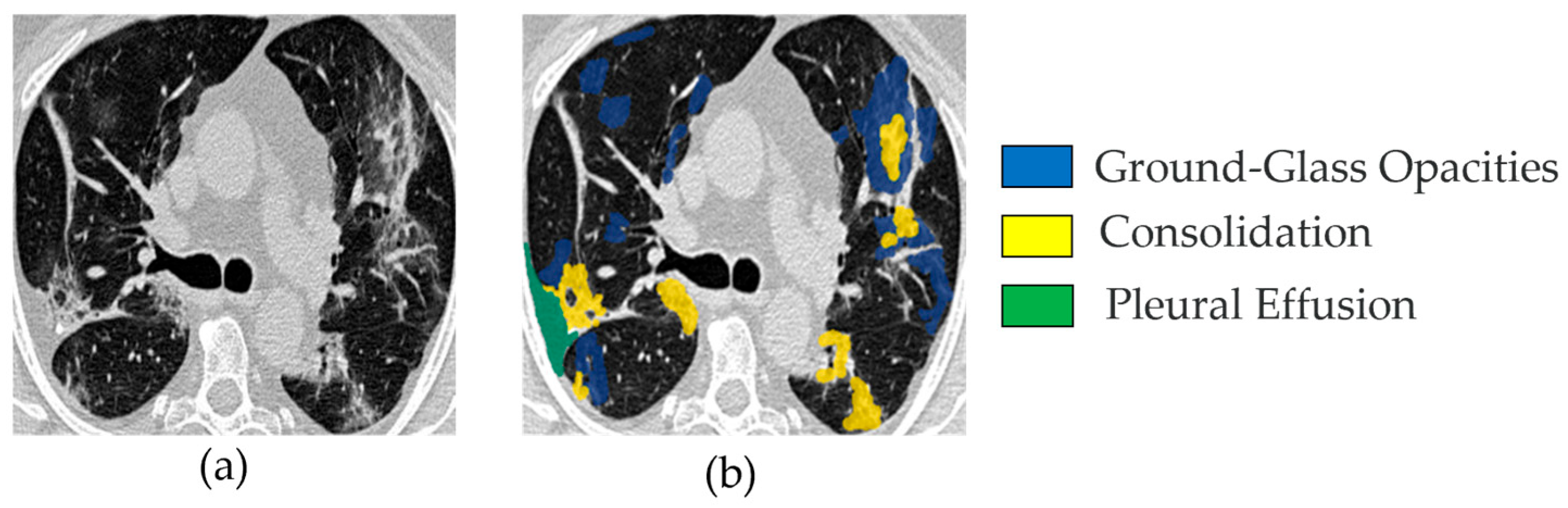

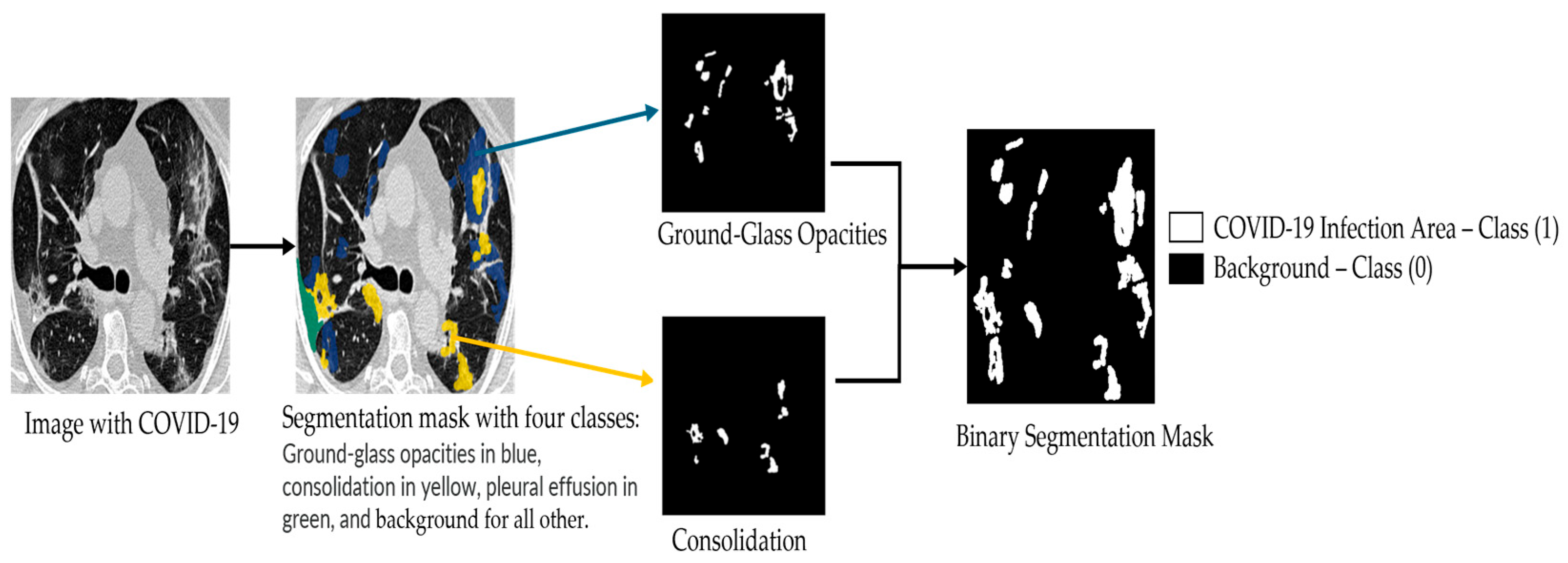

3.1. Dataset Description

Data Augmentation

3.2. Model Description

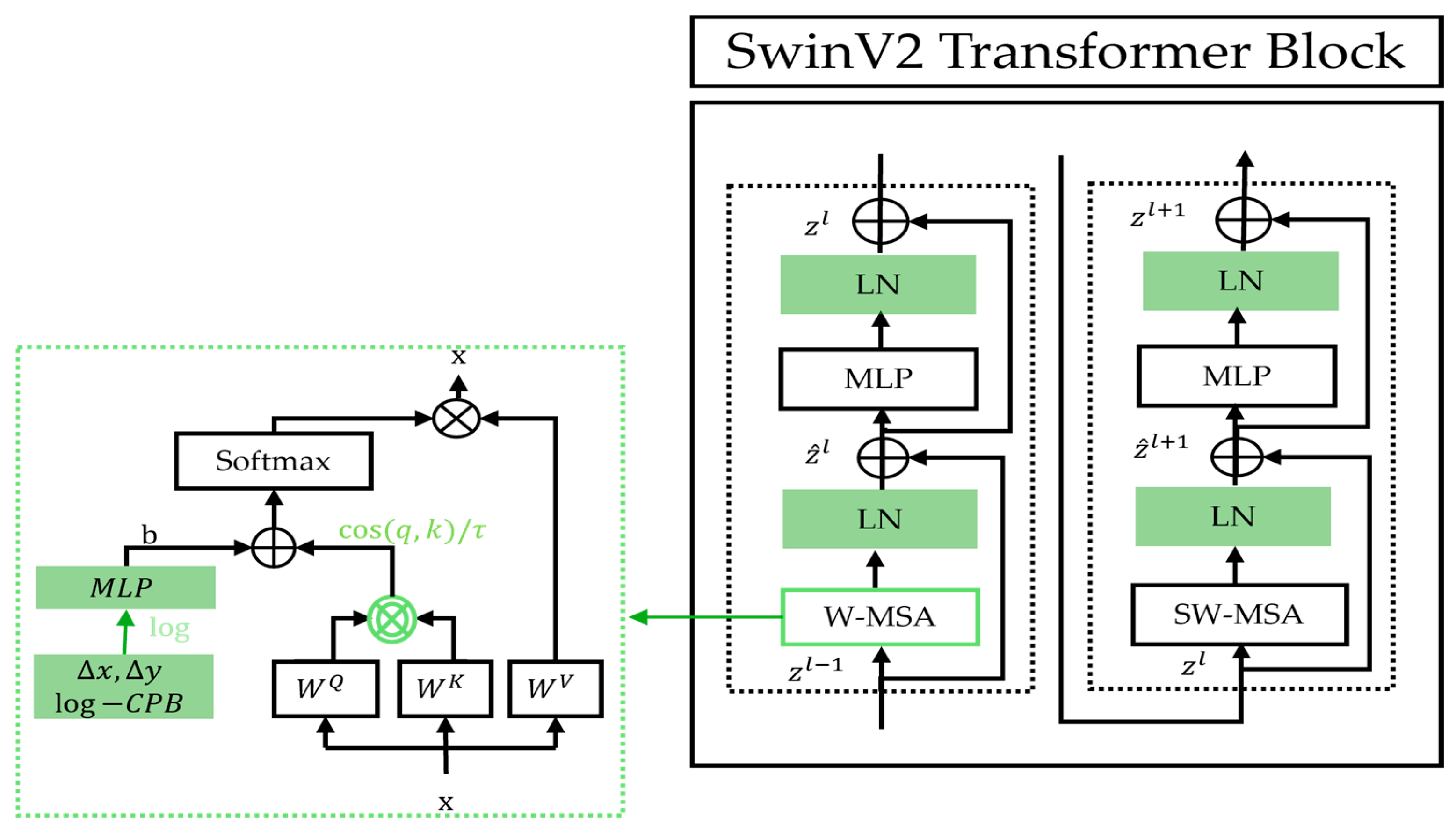

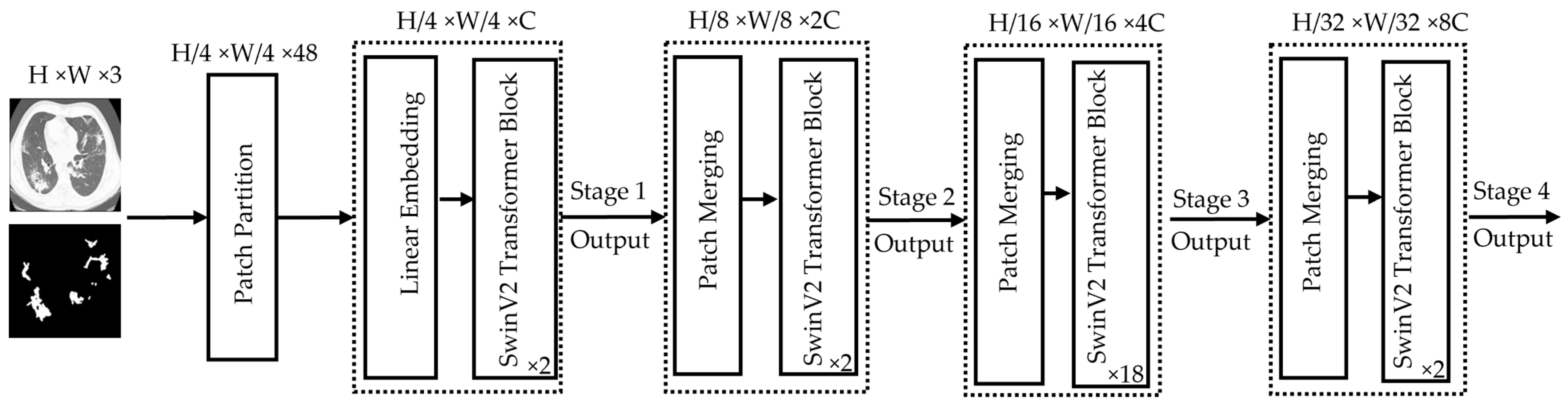

3.2.1. Swin Transformer

3.2.2. CoviSwin Architecture

- ReLU provides non-saturating positive responses that maintain stronger gradients than sigmoid/tanh, improving optimization stability and convergence [28];

- IN reduces per-instance covariate shift and stabilizes feature statistics across varying scanners and patients, which is advantageous in medical imaging, where batch sizes are typically small to moderate [29].
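To make the two points above concrete, here is a minimal sketch of ReLU followed by instance normalization on a single channel of a single sample. It is a plain-Python illustration, not the layer used in the model: the function names are hypothetical, the learnable scale and shift that normalization layers usually carry are omitted, and the two toy inputs merely stand in for the same anatomy acquired with different intensity scaling.

```python
import math


def relu(values):
    """Keep positive activations unchanged and clamp negatives to zero."""
    return [max(0.0, v) for v in values]


def instance_norm(channel, eps=1e-5):
    """Normalize one channel of one sample to zero mean and unit variance.

    `channel` is a flat list holding the H*W activations of that channel.
    Statistics come from this sample alone, so the result does not depend
    on the batch size or on the other samples in the batch.
    """
    n = len(channel)
    mean = sum(channel) / n
    var = sum((v - mean) ** 2 for v in channel) / n   # biased variance
    return [(v - mean) / math.sqrt(var + eps) for v in channel]


# Same pattern, different intensity offset and gain (e.g. two scanners).
scan_a = [1.0, 2.0, 3.0, 4.0]
scan_b = [10.0 * v + 100.0 for v in scan_a]

norm_a = instance_norm(relu(scan_a))
norm_b = instance_norm(relu(scan_b))

# After normalization the two channels are almost identical.
print(max(abs(a - b) for a, b in zip(norm_a, norm_b)) < 1e-4)  # True
```

The affine intensity change between the two inputs is removed by the per-instance statistics, which is the behavior the second bullet relies on.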

3.3. Experimental Setup

- Recall (Sensitivity): Sensitivity is the fraction of truly infected pixels that the model labels as infected, TP/(TP + FN). A higher value indicates that fewer COVID-19-infected lung regions are missed.

- Dice Similarity Coefficient: The DSC measures the overlap between the predicted segmentation mask and the ground truth, 2TP/(2TP + FP + FN). A higher score indicates closer agreement between the predicted and actual infected regions.

- Specificity: Specificity is the fraction of non-infected pixels that the model labels as non-infected, TN/(TN + FP). A higher value indicates that less healthy lung tissue is mistaken for infection.
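The three metrics can be computed from pixel-wise confusion counts (TP, FP, FN, TN). The sketch below assumes binary masks flattened to lists of 0/1 labels; the function name and the conventions for empty denominators are illustrative choices, not taken from the paper.

```python
def segmentation_metrics(pred, truth):
    """Compute recall, Dice, and specificity for two binary masks.

    `pred` and `truth` are equal-length flat sequences of 0/1 pixel labels,
    where 1 marks an infected pixel.
    """
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    tn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 0)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)

    # Conventions for empty denominators are an assumption of this sketch.
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    dice = 2 * tp / (2 * tp + fp + fn) if 2 * tp + fp + fn else 1.0
    return {"recall": recall, "dice": dice, "specificity": specificity}


pred = [1, 1, 0, 0, 1, 0, 0, 0]
truth = [1, 0, 0, 0, 1, 1, 0, 0]
# Here TP = 2, FP = 1, FN = 1, TN = 4,
# so recall = 2/3, Dice = 4/6 = 2/3, and specificity = 4/5.
print(segmentation_metrics(pred, truth))
```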

4. Results and Discussion

4.1. Design of a Two-Phase Training Strategy for Segmentation Enhancement

4.2. Design of a Hybrid Loss Function for Enhanced Segmentation Accuracy

- The Dice Loss is commonly used in segmentation tasks to maximize the overlap between predicted and ground truth regions. It is especially effective for handling class imbalance by emphasizing under-represented areas with fewer positive pixels. Studies have shown its superiority in segmenting regions with high class imbalance [37,38,39]. This loss function is defined as follows:

  $$\mathcal{L}_{\mathrm{Dice}} = 1 - \frac{2\sum_{i=1}^{N} p_i\, g_i + \epsilon}{\sum_{i=1}^{N} p_i + \sum_{i=1}^{N} g_i + \epsilon}$$

  where $N$ is the number of pixels in each patch, $p_i$ is the model’s predicted probability for pixel $i$, $g_i$ is the ground truth label for pixel $i$, and $\epsilon$ is a small constant added to avoid division by zero.

- The Intersection over Union (IoU) Loss works by maximizing the intersection and minimizing the union of the predicted and actual regions. It directly enhances spatial agreement and is particularly robust for segmenting objects with clear boundaries [40]. However, the IoU Loss can be less sensitive to smoothness, which is sometimes necessary for more gradual transitions in medical images [41,42]. This loss function is formulated in the following way:
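Both losses can be sketched in a few lines, assuming the common soft formulations: Dice loss as one minus twice the soft intersection over the sum of predicted and true foreground mass, and IoU loss as one minus the soft intersection over the soft union. The function names, the placement of the smoothing constant `eps` in both numerator and denominator, and the toy inputs are assumptions of this sketch, not details confirmed by the paper.

```python
def soft_dice_loss(p, g, eps=1e-6):
    """Soft Dice loss for predicted probabilities `p` and binary labels `g`.

    Both arguments are flat, equal-length sequences over the pixels of a patch.
    """
    intersection = sum(pi * gi for pi, gi in zip(p, g))
    return 1.0 - (2.0 * intersection + eps) / (sum(p) + sum(g) + eps)


def soft_iou_loss(p, g, eps=1e-6):
    """Soft IoU (Jaccard) loss: one minus soft intersection over soft union."""
    intersection = sum(pi * gi for pi, gi in zip(p, g))
    union = sum(p) + sum(g) - intersection
    return 1.0 - (intersection + eps) / (union + eps)


probs = [0.9, 0.8, 0.1, 0.0]   # toy predicted probabilities
labels = [1, 1, 0, 0]          # toy ground truth

# Intersection = 1.7, sum(p) = 1.8, sum(g) = 2.0, union = 2.1
print(round(soft_dice_loss(probs, labels), 4))  # 0.1053
print(round(soft_iou_loss(probs, labels), 4))   # 0.1905
```

For the same prediction the IoU loss is never smaller than the Dice loss, so the two terms penalize partial overlap with different strength, which is one reason they are often combined in a hybrid objective.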

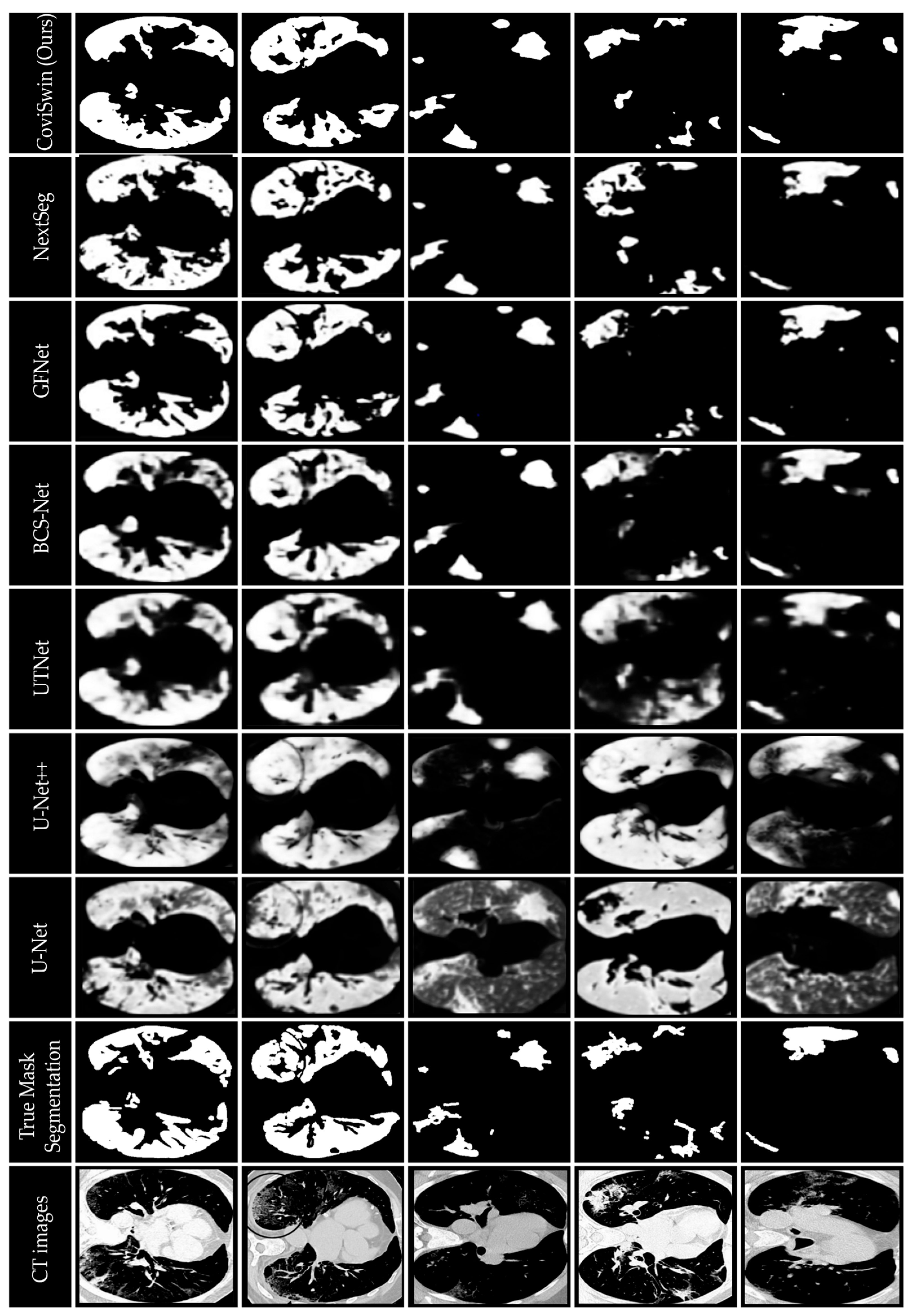

4.3. Comparisons with State-of-the-Art Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AMOS | Abdominal Multi-Organ Segmentation |
| ADNI | Alzheimer’s Disease Neuroimaging Initiative |
| AG | Attention Gate |
| BCE | Binary Cross-Entropy |
| CXR | Chest X-Ray |
| CGS | Collaborative Generalist and Specialists |
| CT | Computed Tomography |
| CBAM | Convolutional Block Attention Module |
| CNNs | Convolutional Neural Networks |
| COVID-19 | “CO” stands for corona, “VI” stands for virus, “D” stands for disease, and “19” stands for 2019 |
| DSC | Dice Similarity Coefficient |
| FN | False Negative |
| FP | False Positive |
| GELU | Gaussian Error Linear Unit |
| GPU | Graphics Processing Unit |
| HVTs | Hybrid Vision Transformers |
| IN | Instance Normalization |
| IoU | Intersection over Union |
| KiTS | Kidney Tumor Segmentation |
| LN | Layer Normalization |
| LTM | Learnable Token Merging |
| MRI | Magnetic Resonance Imaging |
| MTAN | Mean Teacher Attention N-Net |
| MSA | Multi-head Self-Attention |
| MLP | Multi-Layer Perceptron |
| NL | Normalization Layer |
| PSNR | Peak Signal-to-Noise Ratio |
| RAM | Random Access Memory |
| ReLU | Rectified Linear Unit |
| RPB | Relative Position Bias |
| RCB | Residual Convolutional Block |
| SARS | Severe Acute Respiratory Syndrome |
| SARS-CoV-2 | Severe Acute Respiratory Syndrome Coronavirus 2 |
| SD | Standard Deviation |
| SSIM | Structural Similarity Index |
| Swin | Shifted Window Transformer |
| SwinV2 | Shifted Window Transformer Version 2 |
| SW-MSA | Shifted Window Multi-Head Self-Attention |
| TN | True Negative |
| TP | True Positive |
| US | Ultrasound |
| ViTs | Vision Transformers |
| W-MSA | Window-based Multi-Head Self-Attention |
| WHO | World Health Organization |

Appendix A

| Run Number | Sensitivity (Recall) | Dice Similarity Coefficient (DSC) | Specificity |
|---|---|---|---|
| 1 | 0.797 | 0.773 | 0.955 |
| 2 | 0.787 | 0.782 | 0.961 |
| 3 | 0.772 | 0.779 | 0.968 |
| 4 | 0.786 | 0.791 | 0.969 |
| 5 | 0.783 | 0.781 | 0.963 |
| 6 | 0.782 | 0.782 | 0.964 |
| 7 | 0.792 | 0.782 | 0.962 |
| 8 | 0.797 | 0.773 | 0.955 |
| 9 | 0.789 | 0.772 | 0.958 |
| 10 | 0.817 | 0.791 | 0.965 |
| Mean | 0.790 | 0.781 | 0.962 |
| Standard Deviation (SD) | 0.011988884 | 0.006785606 | 0.004876246 |
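The summary rows of this table can be recomputed from the ten runs with the Python standard library. The values below are copied from the table; using the sample standard deviation (n − 1 in the denominator) is an assumption of this sketch, one that appears to agree with the reported SD row.

```python
from statistics import mean, stdev

# Per-run results copied from the Appendix A table.
runs = {
    "recall": [0.797, 0.787, 0.772, 0.786, 0.783,
               0.782, 0.792, 0.797, 0.789, 0.817],
    "dsc": [0.773, 0.782, 0.779, 0.791, 0.781,
            0.782, 0.782, 0.773, 0.772, 0.791],
    "specificity": [0.955, 0.961, 0.968, 0.969, 0.963,
                    0.964, 0.962, 0.955, 0.958, 0.965],
}

for name, values in runs.items():
    # stdev() is the sample standard deviation (divides by n - 1).
    print(f"{name}: mean = {mean(values):.3f}, SD = {stdev(values):.4f}")
```

Rounded to four decimals, these are the mean ± SD figures that summarize the ten runs.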

References

1. World Health Organization. Naming the coronavirus disease (COVID-19) and the virus that causes it. Braz. J. Implantol. Health Sci. 2020, 2, 3. Available online: https://bjihs.emnuvens.com.br/bjihs/article/view/173 (accessed on 24 March 2020).
2. Oulefki, A.; Agaian, S.; Trongtirakul, T.; Laouar, A.K. Automatic COVID-19 lung infected region segmentation and measurement using CT-scans images. Pattern Recognit. 2021, 114, 107747.
3. Youssef, B.B.; Ismail, M.M.B.; Bchir, O. Detecting Insults on Social Network Platforms Using a Deep Learning Transformer-Based Model. In Integrating Machine Learning Into HPC-Based Simulations and Analytics; IGI Global: Hershey, PA, USA, 2025; pp. 1–36.
4. Li, J.; Chen, J.; Tang, Y.; Wang, C.; Landman, B.A.; Zhou, S.K. Transforming medical imaging with Transformers? A comparative review of key properties, current progresses, and future perspectives. Med. Image Anal. 2023, 85, 102762.
5. Alsenan, A.; Ben Youssef, B.; Alhichri, H. MobileUNetV3—A Combined UNet and MobileNetV3 Architecture for Spinal Cord Gray Matter Segmentation. Electronics 2022, 11, 2388.
6. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.
7. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. arXiv 2021, arXiv:2105.05537.
8. Fan, D.-P.; Zhou, T.; Ji, G.-P.; Zhou, Y.; Chen, G.; Fu, H.; Shen, J.; Shao, L. Inf-Net: Automatic COVID-19 Lung Infection Segmentation From CT Images. IEEE Trans. Med. Imaging 2020, 39, 2626–2637.
9. Lasloum, T.; Youssef, B.B.; Alhichri, H. Domain Adaptation for Performance Enhancement of Deep Learning Models for Remote Sensing Scenes Classification. In Integrating Machine Learning into HPC-Based Simulations and Analytics; IGI Global: Hershey, PA, USA, 2025; pp. 61–88.
10. Wang, Y.; Li, Z.; Qi, L.; Yu, Q.; Shi, Y.; Gao, Y. Balancing Multi-Target Semi-Supervised Medical Image Segmentation with Collaborative Generalist and Specialists. IEEE Trans. Med. Imaging 2025, 44, 3025–3037.
11. Sun, P.; Yang, S.; Guan, H.; Mo, T.; Yu, B.; Chen, Z. MTAN: A semi-supervised learning model for kidney tumor segmentation. J. X-Ray Sci. Technol. 2023, 31, 1295–1313.
12. Shamshad, F.; Khan, S.; Zamir, S.W.; Khan, M.H.; Hayat, M.; Khan, F.S.; Fu, H. Transformers in medical imaging: A survey. Med. Image Anal. 2023, 88, 102802.
13. Parvaiz, A.; Khalid, M.A.; Zafar, R.; Ameer, H.; Ali, M.; Fraz, M.M. Vision Transformers in medical computer vision—A contemplative retrospection. Eng. Appl. Artif. Intell. 2023, 122, 106126.
14. You, C.; Zhao, R.; Liu, F.; Dong, S.; Chinchali, S.; Topcu, U.; Staib, L.; Duncan, J.S. Class-Aware Adversarial Transformers for Medical Image Segmentation. Adv. Neural Inf. Process. Syst. 2022, 35, 29582–29596.
15. Hu, Z.; Li, Y.; Wang, Z.; Zhang, S.; Hou, W. Conv-Swinformer: Integration of CNN and shift window attention for Alzheimer’s disease classification. Comput. Biol. Med. 2023, 164, 107304.
16. Park, N.; Kim, S. How Do Vision Transformers Work? arXiv 2022, arXiv:2202.06709.
17. Huang, J.; Fang, Y.; Wu, Y.; Wu, H.; Gao, Z.; Li, Y.; Del Ser, J.; Xia, J.; Yang, G. Swin transformer for fast MRI. Neurocomputing 2022, 493, 281–304.
18. Ghali, R.; Akhloufi, M.A. Vision Transformers for Lung Segmentation on CXR Images. Sn Comput. Sci. 2023, 4, 414.
19. Fan, C.-M.; Liu, T.-J.; Liu, K.-H. SUNet: Swin Transformer UNet for Image Denoising. In Proceedings of the 2022 IEEE International Symposium on Circuits and Systems (ISCAS), Austin, TX, USA, 27 May–1 June 2022; pp. 2333–2337.
20. Jiang, Y.; Zhang, Y.; Lin, X.; Dong, J.; Cheng, T.; Liang, J. SwinBTS: A Method for 3D Multimodal Brain Tumor Segmentation Using Swin Transformer. Brain Sci. 2022, 12, 797.
21. Ji, Y.; Bai, H.; Ge, C.; Yang, J.; Zhu, Y.; Zhang, R.; Li, Z.; Zhanng, L.; Ma, W.; Wan, X.; et al. AMOS: A Large-Scale Abdominal Multi-Organ Benchmark for Versatile Medical Image Segmentation. arXiv 2022, arXiv:2206.08023.
22. Wang, Y.; Yang, Y. Efficient Visual Transformer by Learnable Token Merging. arXiv 2024, arXiv:2407.15219.
23. Song, W.; Wang, X.; Guo, Y.; Li, S.; Xia, B.; Hao, A. CenterFormer: A Novel Cluster Center Enhanced Transformer for Unconstrained Dental Plaque Segmentation. Trans. Multimed. 2024, 26, 10965–10978.
24. COVID-19. Medical Segmentation. Available online: http://medicalsegmentation.com/covid19/ (accessed on 18 March 2025).
25. Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 19–24 June 2022; pp. 1170–1179.
26. Peng, J.; Li, Y.; Liu, C.; Gao, X. The Circular U-Net with Attention Gate for Image Splicing Forgery Detection. Electronics 2023, 12, 1451.
27. Huang, K.-W.; Yang, Y.-R.; Huang, Z.-H.; Liu, Y.-Y.; Lee, S.-H. Retinal Vascular Image Segmentation Using Improved UNet Based on Residual Module. Bioengineering 2023, 10, 722.
28. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1026–1034.
29. Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Stoyanov, D., Taylor, Z., Carneiro, G., Syeda-Mahmood, T., Martel, A., Maier-Hein, L., Tavares, J.M.R.S., Bradley, A., Papa, J.P., Belagiannis, V., et al., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 3–11.
30. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. Available online: https://openaccess.thecvf.com/content_cvpr_2016/papers/He_Deep_Residual_Learning_CVPR_2016_paper.pdf (accessed on 27 October 2025).
31. Abuqaddom, I.; Mahafzah, B.A.; Faris, H. Oriented stochastic loss descent algorithm to train very deep multi-layer neural networks without vanishing gradients. Knowl.-Based Syst. 2021, 230, 107391.
32. Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International conference on Machine Learning (ICML), Haifa, Israel, 21–24 June 2010; pp. 807–814. Available online: https://www.cs.toronto.edu/~hinton/absps/reluICML.pdf (accessed on 27 October 2025).
33. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer Normalization. arXiv 2016, arXiv:1607.06450. Available online: http://arxiv.org/abs/1607.06450 (accessed on 27 October 2025).
34. Bazi, Y.; Al Rahhal, M.M.; Alhichri, H.; Alajlan, N. Simple Yet Effective Fine-Tuning of Deep CNNs Using an Auxiliary Classification Loss for Remote Sensing Scene Classification. Remote Sens. 2019, 11, 2908.
35. Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding deep learning (still) requires rethinking generalization. Commun. ACM 2021, 64, 107–115.
36. Panja, A.; Kuiry, S.; Das, A.; Nasipuri, M.; Das, N. COVID-CT-H-UNet: A novel COVID-19 CT segmentation network based on attention mechanism and Bi-category Hybrid loss. arXiv 2024, arXiv:2403.10880.
37. Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Cardoso, M.J. Generalised Dice Overlap as a Deep Learning Loss Function for Highly Unbalanced Segmentations. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Cardoso, M.J., Arbel, T., Carneiro, G., Syeda-Mahmood, T., Tavares, J.M.R.S., Moradi, M., Bradley, A., Greenspan, H., Papa, J.P., Madabhushi, A., et al., Eds.; Springer: Cham, Switzerland; Québec City, QC, Canada, 2017; Volume 10553, pp. 240–248.
38. Eelbode, T.; Bertels, J.; Berman, M.; Vandermeulen, D.; Maes, F.; Bisschops, R.; Blaschko, M.B. Optimization for Medical Image Segmentation: Theory and Practice When Evaluating With Dice Score or Jaccard Index. IEEE Trans. Med. Imaging 2020, 39, 3679–3690.
39. Wang, Z.; Popordanoska, T.; Bertels, J.; Lemmens, R.; Blaschko, M.B. Dice Semimetric Losses: Optimizing the Dice Score with Soft Labels. arXiv 2024, arXiv:2303.16296.
40. Milletari, F.; Navab, N.; Ahmadi, S.-A. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.
41. El Jurdi, R.; Petitjean, C.; Honeine, P.; Cheplygina, V.; Abdallah, F. High-level prior-based loss functions for medical image segmentation: A survey. Comput. Vis. Image Underst. 2021, 210, 103248.
42. Sun, F.; Luo, Z.; Li, S. Boundary Difference Over Union Loss For Medical Image Segmentation. arXiv 2023, arXiv:2308.00220.
43. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI); Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F., Eds.; Springer International Publishing: Munich, Germany, 2015; pp. 234–241.
44. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; McDonagh, S.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999.
45. Ibtehaz, N.; Kihara, D. ACC-UNet: A Completely Convolutional UNet Model for the 2020s. arXiv 2023, arXiv:2308.13680.
46. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.
47. Gao, Y.; Zhou, M.; Metaxas, D.N. UTNet: A Hybrid Transformer Architecture for Medical Image Segmentation. arXiv 2021, arXiv:2107.00781.
48. Dong, A.; Wang, R.; Zhang, X.; Liu, J. NextSeg: Automatic COVID-19 Lung Infection Segmentation from CT Images Based on Next-ViT. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 30 June 2024; pp. 1–8.
49. Liu, Q.; Dou, Q.; Yu, L.; Heng, P.A. MS-Net: Multi-Site Network for Improving Prostate Segmentation with Heterogeneous MRI Data. IEEE Trans. Med. Imaging 2020, 39, 2713–2724.
50. Cong, R.; Yang, H.; Jiang, Q.; Gao, W.; Li, H.; Wang, C.; Zhao, Y.; Kwong, S. BCS-Net: Boundary, Context, and Semantic for Automatic COVID-19 Lung Infection Segmentation from CT Images. IEEE Trans. Instrum. Meas. 2022, 71, 1–11.
51. Fan, C.; Zeng, Z.; Xiao, L.; Qu, X. GFNet: Automatic Segmentation of COVID-19 Lung Infection Regions Using CT Images Based on Boundary Features. Pattern Recognit. 2022, 132, 108963.

| Year, Ref. | Deep Learning Model | Description | Reported Results |
|---|---|---|---|
| 2022, [14] | Class-Aware Adversarial Transformers (CATformer and CASTformer) | Transformer models for segmenting medical structures such as tumors or organs. | Average Dice = 82.17 and 82.55 |
| 2022, [20] | SwinBTS | 3D Swin Transformer model for brain tumor segmentation using multi-modal MRI datasets (BraTS). | Average Dice = 81.15 |
| 2022, [21] | Swin Transformer | Swin Transformer model for multi-organ segmentation (AMOS) of abdominal CT images. | Mean Dice = 84.91 (in-distribution) and 87.20 (out-of-distribution) |
| 2023, [18] | ARSeg, MedT, TransUNet, TransM, and UNeXt | ViTs for lung segmentation of COVID-19 using CXR images. | F1 Score = 95.36, 97.40, 96.80, 97.47, and 96.26 |
| 2023, [15] | Swin Transformer with CNN | CNNs and Swin Transformer combined to classify Alzheimer’s Disease Neuroimaging Initiative (ADNI) data using brain imaging. | Accuracy = 82.09%; Sensitivity = 86.96% |
| 2024, [22] | Transformers with Learnable Token Merging (LTM) | ViT-based models that learnably merge semantically similar tokens, evaluated on the ImageNet-1K benchmark. | Top-1 Accuracy = 83.64% (LTM-Swin-B after 50 epochs) |
| 2024, [23] | CenterFormer Model | Cluster center enhanced Transformer for dental plaque segmentation on the dental plaque dataset. | Pixel Accuracy = 76.81% |

| Type of Swin Transformer | Number of Channels (Stage 1) | Number of Layers per Stage | Number of Parameters (in Millions) | Image Resolutions |
|---|---|---|---|---|
| Swin-T | 96 | (2,2,6,2) | 28 | 224 × 224 |
| Swin-S | 96 | (2,2,18,2) | 50 | 224 × 224 |
| Swin-B | 128 | (2,2,18,2) | 88 | 224 × 224/384 × 384 |
| Swin-L | 192 | (2,2,18,2) | 197 | 224 × 224/384 × 384 |
| SwinV2-T/S/B | 96/96/128 | (2,2,6,2)/(2,2,18,2)/(2,2,18,2) | 28/50/88 | 256 × 256 |
| SwinV2-L | 192 | (2,2,18,2) | 197 | 256 × 256/384 × 384 |
| SwinV2-H | 352 | (2,2,18,2) | 658 | 256 × 256/512 × 512 |
| SwinV2-G | 512 | (2,2,42,4) | 3000 | 1024 × 1024 |

| Part | Block | Layers | Input Shape | Output Shape |
|---|---|---|---|---|
| Encoder | Patch Embedding | Conv2D | [1, 3, 384, 384] | [1, 192, 96, 96] |
| | | LayerNorm | [1, 192, 96, 96] | [1, 192, 96, 96] |
| | SwinV2_Stage1 | | [1, 192, 96, 96] | [1, 384, 48, 48] |
| | SwinV2_Stage2 | | [1, 384, 48, 48] | [1, 768, 24, 24] |
| | SwinV2_Stage3 | | [1, 768, 24, 24] | [1, 1536, 12, 12] |
| | SwinV2_Stage4 | LayerNorm | [1, 1536, 12, 12] | [1, 1536, 12, 12] |
| Decoder | UpsampleAttnBlock3 | Upsample + Conv + ReLU + IN | [1, 1536, 12, 12] | [1, 768, 24, 24] |
| | | AttentionGate_3 + CBAM | [1, 768, 24, 24] | [1, 768, 24, 24] |
| | UpsampleAttnBlock2 | Upsample + Conv + ReLU + IN | [1, 768, 24, 24] | [1, 384, 48, 48] |
| | | AttentionGate_2 + CBAM | [1, 384, 48, 48] | [1, 384, 48, 48] |
| | UpsampleAttnBlock1 | Upsample + Conv + ReLU + IN | [1, 384, 48, 48] | [1, 192, 96, 96] |
| | | AttentionGate_1 + CBAM | [1, 192, 96, 96] | [1, 192, 96, 96] |
| | UpsampleBlock0 | Upsample + Conv + ReLU + IN | [1, 192, 96, 96] | [1, 64, 192, 192] |
| | UpsampleBlock192to384_ | Upsample + Conv + ReLU + IN | [1, 64, 192, 192] | [1, 32, 384, 384] |
| | Auxiliary Head | Conv2D | [1, 64, 192, 192] | [1, 2, 192, 192] |
| | Boundary Head | Conv2D | [1, 32, 384, 384] | [1, 2, 384, 384] |
| | Output Conv | Conv2D + ResidualBlock | [1, 32, 384, 384] | [1, 2, 384, 384] |
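The encoder and attention-decoder shapes in this table follow a simple rule that can be checked with a few lines of arithmetic: the patch embedding divides the 384 × 384 input by the patch size, each of the first three stages ends by halving the resolution and doubling the channels, and each attention decoder block reverses one such step. The helper names below are hypothetical, and the patch size of 4 is inferred from the 384 → 96 reduction in the table rather than stated in the text.

```python
def encoder_shapes(image_size=384, patch_size=4, embed_dim=192, num_merges=3):
    """Return (channels, height, width) after patch embedding and after each
    stage that ends with a downsampling (patch-merging) step."""
    side = image_size // patch_size
    channels = embed_dim
    shapes = [(channels, side, side)]
    for _ in range(num_merges):
        channels, side = channels * 2, side // 2   # double channels, halve size
        shapes.append((channels, side, side))
    return shapes


def decoder_shapes(bottleneck, num_blocks=3):
    """Return the output shape of each upsampling block that mirrors the encoder."""
    channels, side, _ = bottleneck
    shapes = []
    for _ in range(num_blocks):
        channels, side = channels // 2, side * 2   # halve channels, double size
        shapes.append((channels, side, side))
    return shapes


enc = encoder_shapes()
dec = decoder_shapes(enc[-1])
print(enc)  # [(192, 96, 96), (384, 48, 48), (768, 24, 24), (1536, 12, 12)]
print(dec)  # [(768, 24, 24), (384, 48, 48), (192, 96, 96)]
```

Each decoder output has the same shape as the encoder feature map one level up, which is the usual precondition for combining the two in a U-shaped design. The last two upsampling blocks (to 64 and 32 channels) do not follow the halving rule and are taken as listed in the table.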

| Phase | Trainable Parameters | Frozen Parameters | Training Time (20 Epochs) | Notes |
|---|---|---|---|---|
| Phase 1 (Frozen Encoder) | 21.96 M | 195.2 M | 80.76 min (1.35 h) | Only the decoder is updated |
| Phase 2 (Partially Unfrozen Encoder) | 36.16 M | 181.0 M | 11.53 min (0.19 h) | The decoder and part of the encoder are updated |

| Phase | Recall | DSC | Specificity |
|---|---|---|---|
| Phase 1 | 0.746 | 0.736 | 0.960 |
| Phase 2 | 0.772 | 0.779 | 0.968 |

| Phase | Loss Function | Recall | DSC | Specificity |
|---|---|---|---|---|
| Phase 1 | | 0.785 | 0.742 | 0.953 |
| | | 0.77 | 0.739 | 0.95 |
| | | 0.78 | 0.721 | 0.95 |
| | | 0.665 | 0.726 | 0.973 |
| | | 0.687 | 0.717 | 0.965 |
| | | 0.787 | 0.742 | 0.944 |
| | | 0.763 | 0.734 | 0.951 |

| Phase | Loss Function | Recall | DSC | Specificity |
|---|---|---|---|---|
| Phase 2 | | 0.817 | 0.791 | 0.965 |
| | | 0.78 | 0.775 | 0.964 |

| Model [Ref.], Year | Sensitivity | DSC | Specificity |
|---|---|---|---|
| U-Net [18], 2023 | 0.653 | 0.632 | 0.917 |
| U-Net++ [29], 2018 | 0.638 | 0.623 | 0.935 |
| Inf-Net [8], 2020 | 0.692 | 0.682 | 0.944 |
| Semi-Inf-Net [8], 2020 | 0.725 | 0.739 | 0.96 |
| MSNet [49], 2020 | 0.718 | 0.773 | 0.954 |
| Atten-Unet [44], 2018 | 0.673 | 0.65 | 0.924 |
| UTNet [47], 2021 | 0.694 | 0.695 | 0.932 |
| TransUnet [46], 2021 | 0.722 | 0.712 | 0.953 |
| BCS-Net [50], 2022 | 0.709 | 0.763 | 0.946 |
| Swin-Unet [7], 2021 | 0.713 | 0.689 | 0.938 |
| GFNet [51], 2022 | 0.735 | 0.754 | 0.957 |
| ACC-Unet [45], 2023 | 0.724 | 0.768 | 0.949 |
| NextSeg [48], 2024 | 0.731 | 0.782 | 0.962 |
| CoviSwin (Ours), 2025 (Mean ± SD) | 0.790 ± 0.0120 | 0.781 ± 0.0068 | 0.962 ± 0.0049 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alsenan, A.; Ben Youssef, B.; Alhichri, H.S. CoviSwin: A Deep Vision Transformer for Automatic Segmentation of COVID-19 CT Scans. Bioengineering 2025, 12, 1227. https://doi.org/10.3390/bioengineering12111227
