AI-Based Facial Emotion Analysis for Early and Differential Diagnosis of Dementia

Abstract

1. Introduction

- To explore the automatic detection of both MCI and overt dementia employing elicited facial emotion features extracted from video recordings;

- To assess the capability of the proposed system to discriminate AD from other forms of cognitive impairment. To the best of our knowledge, this is the first study to propose an automated method to differentiate between diverse etiologies of dementia based on facial emotion analysis.

2. Materials and Methods

2.1. Collected Data

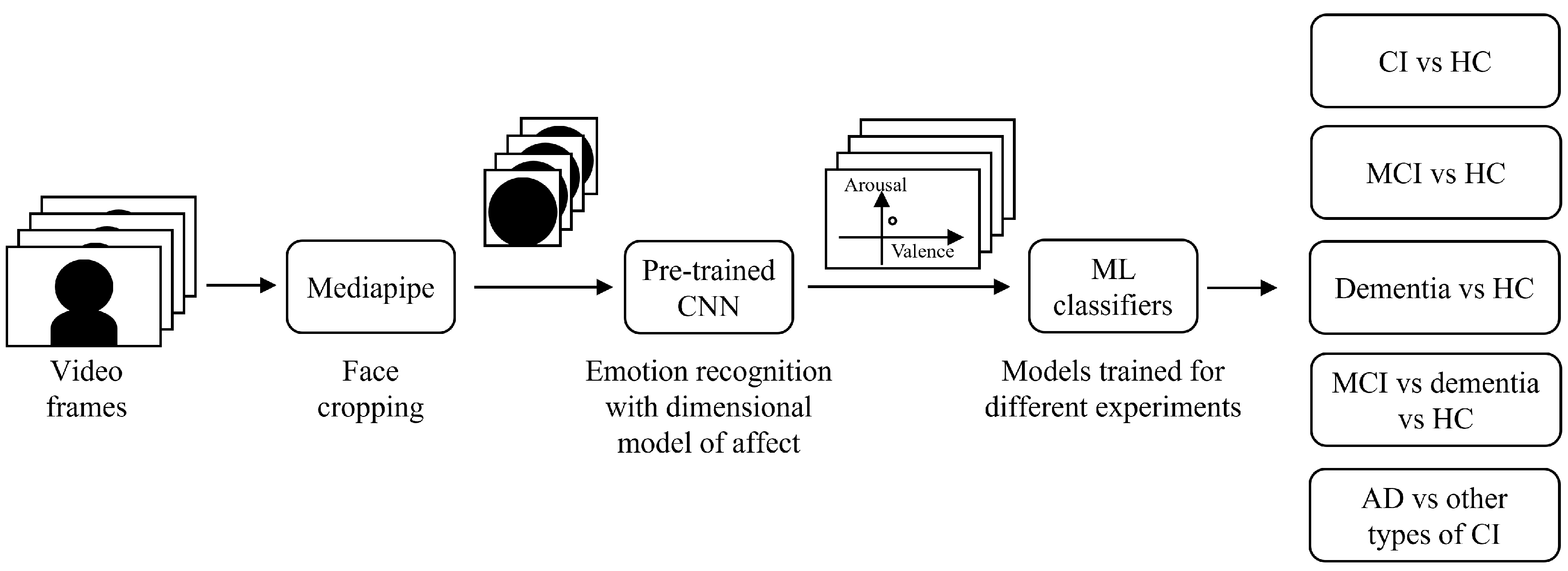

2.2. System Architecture and Data Processing

2.3. Experiments

- CI vs. HCs: In this experiment, all CI subjects were grouped together and compared to the HC group through a binary classification task. This allowed the generalization capability of the proposed algorithm to be validated on a dataset expanded with respect to the one used in [18]. The dataset included 64 subjects in total: 36 CI (26 MCI + 10 overt dementia) and 28 HCs.

- MCI vs. HCs: For this experiment, only subjects with a clinical diagnosis of MCI were selected from the CI group. The objective was to investigate whether differences from the HC group are already detectable in the earlier stages of the disease. The dataset included 54 subjects: 26 MCI and 28 HCs. A binary classification task was applied to distinguish between these two classes.

- Dementia vs. HCs: In contrast to the previous experiment, this analysis included only patients with overt dementia, with the aim of identifying differences from the HC group that emerge in the later stages of the disease. The dataset included 38 subjects: 10 with overt dementia and 28 HCs. A binary classification task was carried out to distinguish between these two classes.

- MCI vs. dementia vs. HCs: In this experiment, the three classes of subjects were compared according to disease severity. The dataset included 64 subjects in total: 26 MCI, 10 with overt dementia, and 28 HCs. The analysis thus moved from a binary to a multiclass classification task among the three classes. It should be noted that the dataset was imbalanced across classes, with the overt dementia group (10 subjects) considerably smaller than the other two.

- AD vs. other types of CI: The aim of this last experiment was to investigate differences in facial emotion responses among individuals with different types of CI. Specifically, all patients diagnosed with AD were grouped together and compared to the group of individuals with other forms of CI. This approach was motivated by the fact that AD is the most common cause of dementia, so a differential diagnosis distinguishing AD from other etiologies is of critical clinical importance. The dataset included 36 subjects: 26 MCI (13 due to AD, 13 other types) and 10 with overt dementia (4 AD, 6 other types). Two classes were therefore considered, AD (17 subjects) and other types of CI (19 subjects), and a binary classification task was performed to distinguish between them.
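The five subsets follow directly from the cohort composition reported above (26 MCI, 10 overt dementia, 28 HCs, with AD etiology in 13 MCI and 4 dementia cases). As a minimal sketch, the Python snippet below rebuilds hypothetical per-subject labels from these counts alone (the `subjects` list and both helper functions are illustrative and are not part of the authors' pipeline), derives the class labels for each experiment, and computes the accuracy of a trivial majority-class predictor as a reference point for the class imbalance.

```python
from collections import Counter

# Hypothetical per-subject records reconstructed from the reported counts only:
# (stage, etiology), where etiology is None for healthy controls.
subjects = (
    [("MCI", "AD")] * 13 + [("MCI", "other")] * 13
    + [("dementia", "AD")] * 4 + [("dementia", "other")] * 6
    + [("HC", None)] * 28
)


def build_experiments(records):
    """Return {experiment name: list of class labels} for the five experiments."""
    ci = [r for r in records if r[0] != "HC"]
    return {
        "CI vs. HCs": ["CI" if s != "HC" else "HC" for s, _ in records],
        "MCI vs. HCs": [s for s, _ in records if s in ("MCI", "HC")],
        "Dementia vs. HCs": [s for s, _ in records if s in ("dementia", "HC")],
        "MCI vs. dementia vs. HCs": [s for s, _ in records],
        # Only CI subjects enter the etiology comparison.
        "AD vs. other types of CI": [e for _, e in ci],
    }


def majority_baseline(labels):
    """Accuracy obtained by always predicting the most frequent class."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)


experiments = build_experiments(subjects)
for name, labels in experiments.items():
    print(f"{name}: n={len(labels)}, counts={dict(Counter(labels))}, "
          f"majority baseline={majority_baseline(labels):.3f}")
```

In Dementia vs. HCs, for instance, always predicting HC already yields 28/38 ≈ 0.737 accuracy, so accuracy alone is weakly informative in that experiment.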

2.4. Model Selection and Evaluation

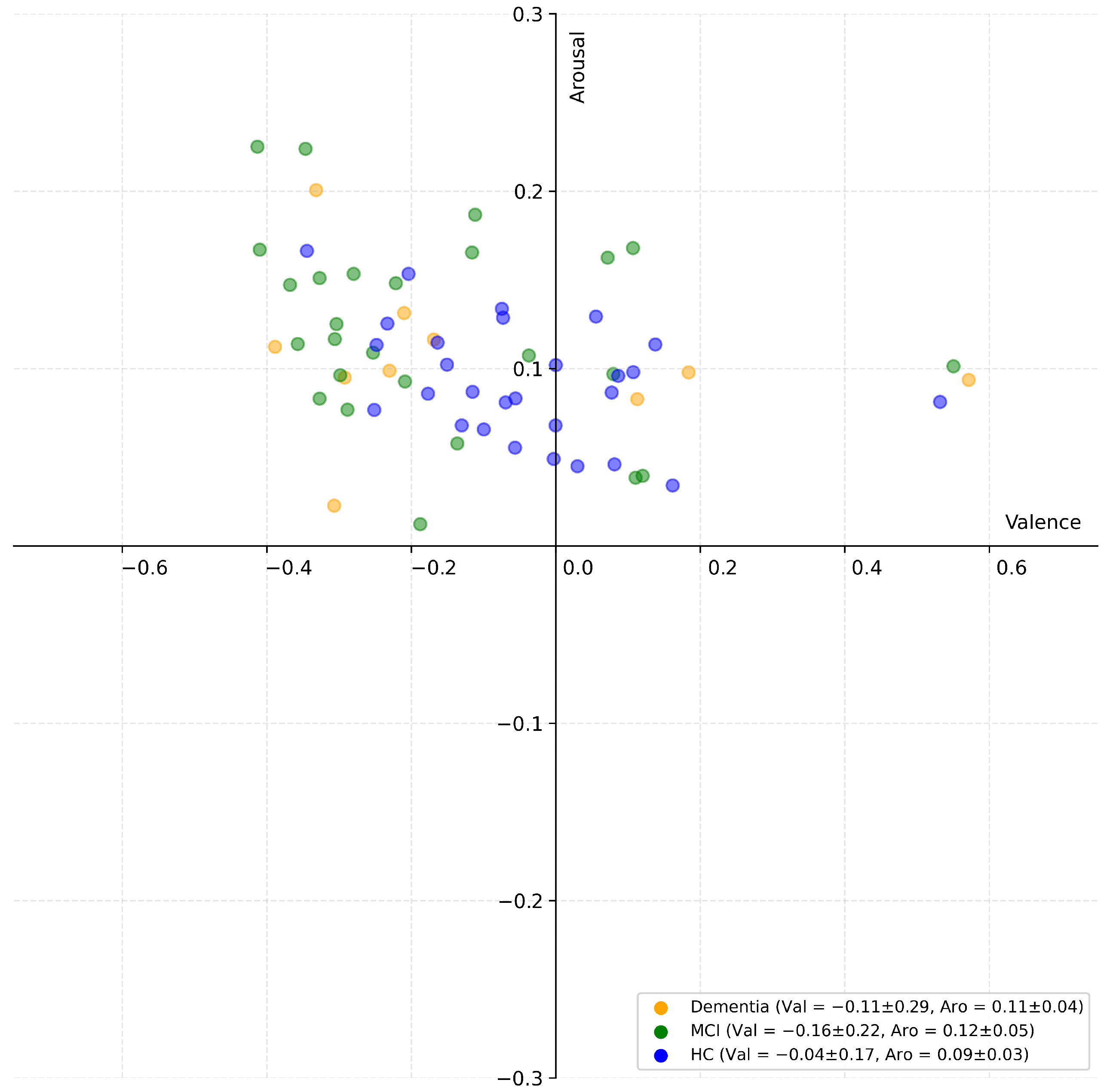

3. Results

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. World Health Organization. Global Status Report on the Public Health Response to Dementia; World Health Organization: Geneva, Switzerland, 2021.
2. Scheltens, P.; Blennow, K.; Breteler, M.M.; De Strooper, B.; Frisoni, G.B.; Salloway, S.; Van der Flier, W.M. Alzheimer’s disease. Lancet 2016, 388, 505–517.
3. O’Brien, J.T.; Thomas, A. Vascular dementia. Lancet 2015, 386, 1698–1706.
4. Bang, J.; Spina, S.; Miller, B.L. Frontotemporal dementia. Lancet 2015, 386, 1672–1682.
5. Walker, Z.; Possin, K.L.; Boeve, B.F.; Aarsland, D. Lewy body dementias. Lancet 2015, 386, 1683–1697.
6. Koyama, A.; Okereke, O.I.; Yang, T.; Blacker, D.; Selkoe, D.J.; Grodstein, F. Plasma amyloid-β as a predictor of dementia and cognitive decline: A systematic review and meta-analysis. Arch. Neurol. 2012, 69, 824–831.
7. Alexander, G.C.; Emerson, S.; Kesselheim, A.S. Evaluation of aducanumab for Alzheimer disease: Scientific evidence and regulatory review involving efficacy, safety, and futility. JAMA 2021, 325, 1717–1718.
8. van Dyck, C.H.; Swanson, C.J.; Aisen, P.; Bateman, R.J.; Chen, C.; Gee, M.; Kanekiyo, M.; Li, D.; Reyderman, L.; Cohen, S.; et al. Lecanemab in Early Alzheimer’s Disease. N. Engl. J. Med. 2023, 388, 9–21.
9. Albert, M.S.; DeKosky, S.T.; Dickson, D.; Dubois, B.; Feldman, H.H.; Fox, N.C.; Gamst, A.; Holtzman, D.M.; Jagust, W.J.; Petersen, R.C.; et al. The diagnosis of mild cognitive impairment due to Alzheimer’s disease: Recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s Dement. 2011, 7, 270–279.
10. Chen, K.H.; Lwi, S.J.; Hua, A.Y.; Haase, C.M.; Miller, B.L.; Levenson, R.W. Increased subjective experience of non-target emotions in patients with frontotemporal dementia and Alzheimer’s disease. Curr. Opin. Behav. Sci. 2017, 15, 77–84.
11. Pressman, P.S.; Chen, K.H.; Casey, J.; Sillau, S.; Chial, H.J.; Filley, C.M.; Miller, B.L.; Levenson, R.W. Incongruences between facial expression and self-reported emotional reactivity in frontotemporal dementia and related disorders. J. Neuropsychiatry Clin. Neurosci. 2023, 35, 192–201.
12. Sun, J.; Dodge, H.H.; Mahoor, M.H. MC-ViViT: Multi-branch Classifier-ViViT to detect Mild Cognitive Impairment in older adults using facial videos. Expert Syst. Appl. 2024, 238, 121929.
13. Dodge, H.H.; Yu, K.; Wu, C.Y.; Pruitt, P.J.; Asgari, M.; Kaye, J.A.; Hampstead, B.M.; Struble, L.; Potempa, K.; Lichtenberg, P.; et al. Internet-Based Conversational Engagement Randomized Controlled Clinical Trial (I-CONECT) Among Socially Isolated Adults 75+ Years Old with Normal Cognition or Mild Cognitive Impairment: Topline Results. Gerontologist 2023, 64, gnad147.
14. Umeda-Kameyama, Y.; Kameyama, M.; Tanaka, T.; Son, B.K.; Kojima, T.; Fukasawa, M.; Iizuka, T.; Ogawa, S.; Iijima, K.; Akishita, M. Screening of Alzheimer’s disease by facial complexion using artificial intelligence. Aging 2021, 13, 1765–1772.
15. Zheng, C.; Bouazizi, M.; Ohtsuki, T.; Kitazawa, M.; Horigome, T.; Kishimoto, T. Detecting Dementia from Face-Related Features with Automated Computational Methods. Bioengineering 2023, 10, 862.
16. Kishimoto, T.; Takamiya, A.; Liang, K.; Funaki, K.; Fujita, T.; Kitazawa, M.; Yoshimura, M.; Tazawa, Y.; Horigome, T.; Eguchi, Y.; et al. The project for objective measures using computational psychiatry technology (PROMPT): Rationale, design, and methodology. Contemp. Clin. Trials Commun. 2020, 19, 100649.
17. Fei, Z.; Yang, E.; Yu, L.; Li, X.; Zhou, H.; Zhou, W. A Novel deep neural network-based emotion analysis system for automatic detection of mild cognitive impairment in the elderly. Neurocomputing 2022, 468, 306–316.
18. Bergamasco, L.; Lorenzo, F.; Coletta, A.; Olmo, G.; Cermelli, A.; Rubino, E.; Rainero, I. Automatic Detection of Cognitive Impairment Through Facial Emotion Analysis. Appl. Sci. 2025, 15, 9103.
19. Okunishi, T.; Zheng, C.; Bouazizi, M.; Ohtsuki, T.; Kitazawa, M.; Horigome, T.; Kishimoto, T. Dementia and MCI Detection Based on Comprehensive Facial Expression Analysis From Videos During Conversation. IEEE J. Biomed. Health Inform. 2025, 29, 3537–3548.
20. Chu, C.S.; Wang, D.Y.; Liang, C.K.; Chou, M.Y.; Hsu, Y.H.; Wang, Y.C.; Liao, M.C.; Chu, W.T.; Lin, Y.T. Automated Video Analysis of Audio-Visual Approaches to Predict and Detect Mild Cognitive Impairment and Dementia in Older Adults. J. Alzheimer’s Dis. 2023, 92, 875–886.
21. Jiang, Z.; Seyedi, S.; Haque, R.U.; Pongos, A.L.; Vickers, K.L.; Manzanares, C.M.; Lah, J.J.; Levey, A.I.; Clifford, G.D. Automated analysis of facial emotions in subjects with cognitive impairment. PLoS ONE 2022, 17, e0262527.
22. Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual; Technical Report A-8; University of Florida, NIMH Center for the Study of Emotion and Attention: Gainesville, FL, USA, 2008.
23. Bradley, M.M.; Lang, P.J. The International Affective Digitized Sounds (IADS-2): Affective Ratings of Sounds and Instruction Manual; Technical Report B-3; University of Florida, NIMH Center for the Study of Emotion and Attention: Gainesville, FL, USA, 2007.
24. Merghani, W.; Davison, A.K.; Yap, M.H. A Review on Facial Micro-Expressions Analysis: Datasets, Features and Metrics. arXiv 2018, arXiv:1805.02397.
25. Peirce, J.; Gray, J.R.; Simpson, S.; MacAskill, M.; Höchenberger, R.; Sogo, H.; Kastman, E.; Lindeløv, J.K. PsychoPy2: Experiments in behavior made easy. Behav. Res. Methods 2019, 51, 195–203.
26. Jack, C.R., Jr.; Bennett, D.A.; Blennow, K.; Carrillo, M.C.; Dunn, B.; Haeberlein, S.B.; Holtzman, D.M.; Jagust, W.; Jessen, F.; Karlawish, J.; et al. NIA-AA research framework: Toward a biological definition of Alzheimer’s disease. Alzheimer’s Dement. 2018, 14, 535–562.
27. Jack, C.R., Jr.; Andrews, J.S.; Beach, T.G.; Buracchio, T.; Dunn, B.; Graf, A.; Hansson, O.; Ho, C.; Jagust, W.; McDade, E.; et al. Revised criteria for diagnosis and staging of Alzheimer’s disease: Alzheimer’s Association Workgroup. Alzheimer’s Dement. 2024, 20, 5143–5169.
28. Frisoni, G.B.; Festari, C.; Massa, F.; Ramusino, M.C.; Orini, S.; Aarsland, D.; Agosta, F.; Babiloni, C.; Borroni, B.; Cappa, S.F.; et al. European intersocietal recommendations for the biomarker-based diagnosis of neurocognitive disorders. Lancet Neurol. 2024, 23, 302–312.
29. Rascovsky, K.; Hodges, J.R.; Knopman, D.; Mendez, M.F.; Kramer, J.H.; Neuhaus, J.; Van Swieten, J.C.; Seelaar, H.; Dopper, E.G.; Onyike, C.U.; et al. Sensitivity of revised diagnostic criteria for the behavioural variant of frontotemporal dementia. Brain 2011, 134, 2456–2477.
30. McKeith, I.G.; Boeve, B.F.; Dickson, D.W.; Halliday, G.; Taylor, J.P.; Weintraub, D.; Aarsland, D.; Galvin, J.; Attems, J.; Ballard, C.G.; et al. Diagnosis and management of dementia with Lewy bodies: Fourth consensus report of the DLB Consortium. Neurology 2017, 89, 88–100.
31. Sachdev, P.; Kalaria, R.; O’Brien, J.; Skoog, I.; Alladi, S.; Black, S.E.; Blacker, D.; Blazer, D.G.; Chen, C.; Chui, H.; et al. Diagnostic criteria for vascular cognitive disorders: A VASCOG statement. Alzheimer Dis. Assoc. Disord. 2014, 28, 206–218.
32. Wilson, S.M.; Galantucci, S.; Tartaglia, M.C.; Gorno-Tempini, M.L. The neural basis of syntactic deficits in primary progressive aphasia. Brain Lang. 2012, 122, 190–198.
33. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A Framework for Building Perception Pipelines. arXiv 2019, arXiv:1906.08172.
34. Russell, J.A. A circumplex model of affect. J. Personal. Soc. Psychol. 1980, 39, 1161–1178.
35. Ekman, P.; Friesen, W.V. Constants across cultures in the face and emotion. J. Personal. Soc. Psychol. 1971, 17, 124–129.
36. Mollahosseini, A.; Hasani, B.; Mahoor, M.H. AffectNet: A database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 2017, 10, 18–31.
37. Cao, Q.; Shen, L.; Xie, W.; Parkhi, O.M.; Zisserman, A. VGGFace2: A Dataset for Recognising Faces across Pose and Age. In Proceedings of the 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), Xi’an, China, 15–19 May 2018; pp. 67–74.
38. Ngo, Q.; Yoon, S. Facial Expression Recognition Based on Weighted-Cluster Loss and Deep Transfer Learning Using a Highly Imbalanced Dataset. Sensors 2020, 20, 2639.
39. keras vggface. VGGFace Implementation with Keras Framework. Available online: https://github.com/rcmalli/keras-vggface (accessed on 29 January 2025).
40. Parthasarathy, S.; Busso, C. Jointly Predicting Arousal, Valence and Dominance with Multi-Task Learning. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017; pp. 1103–1107.
41. Chollet, F. Keras. 2015. Available online: https://keras.io (accessed on 29 January 2025).
42. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.
43. Alsuhaibani, M.; Pourramezan Fard, A.; Sun, J.; Far Poor, F.; Pressman, P.S.; Mahoor, M.H. A Review of Machine Learning Approaches for Non-Invasive Cognitive Impairment Detection. IEEE Access 2025, 13, 56355–56384.
44. Pellegrini, E.; Ballerini, L.; del C. Valdes Hernandez, M.; Chappell, F.M.; González-Castro, V.; Anblagan, D.; Danso, S.; Muñoz-Maniega, S.; Job, D.; Pernet, C.; et al. Machine learning of neuroimaging for assisted diagnosis of cognitive impairment and dementia: A systematic review. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2018, 10, 519–535.
45. Li, J.; Jin, K.; Zhou, D.; Kubota, N.; Ju, Z. Attention mechanism-based CNN for facial expression recognition. Neurocomputing 2020, 411, 340–350.
46. Wen, Z.; Lin, W.; Wang, T.; Xu, G. Distract Your Attention: Multi-Head Cross Attention Network for Facial Expression Recognition. Biomimetics 2023, 8, 199.
47. Ma, F.; Sun, B.; Li, S. Facial Expression Recognition with Visual Transformers and Attentional Selective Fusion. IEEE Trans. Affect. Comput. 2023, 14, 1236–1248.
48. Huang, Q.; Huang, C.; Wang, X.; Jiang, F. Facial expression recognition with grid-wise attention and visual transformer. Inf. Sci. 2021, 580, 35–54.
49. Karthikeyan, P.; Kirutheesvar, S.; Sivakumar, S. Facial Emotion Recognition for Enhanced Human-Computer Interaction using Deep Learning and Temporal Modeling with BiLSTM. In Proceedings of the 2024 5th International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 18–20 September 2024; pp. 1791–1797.
50. Chechkin, A.; Pleshakova, E.; Gataullin, S. A Hybrid KAN-BiLSTM Transformer with Multi-Domain Dynamic Attention Model for Cybersecurity. Technologies 2025, 13, 223.

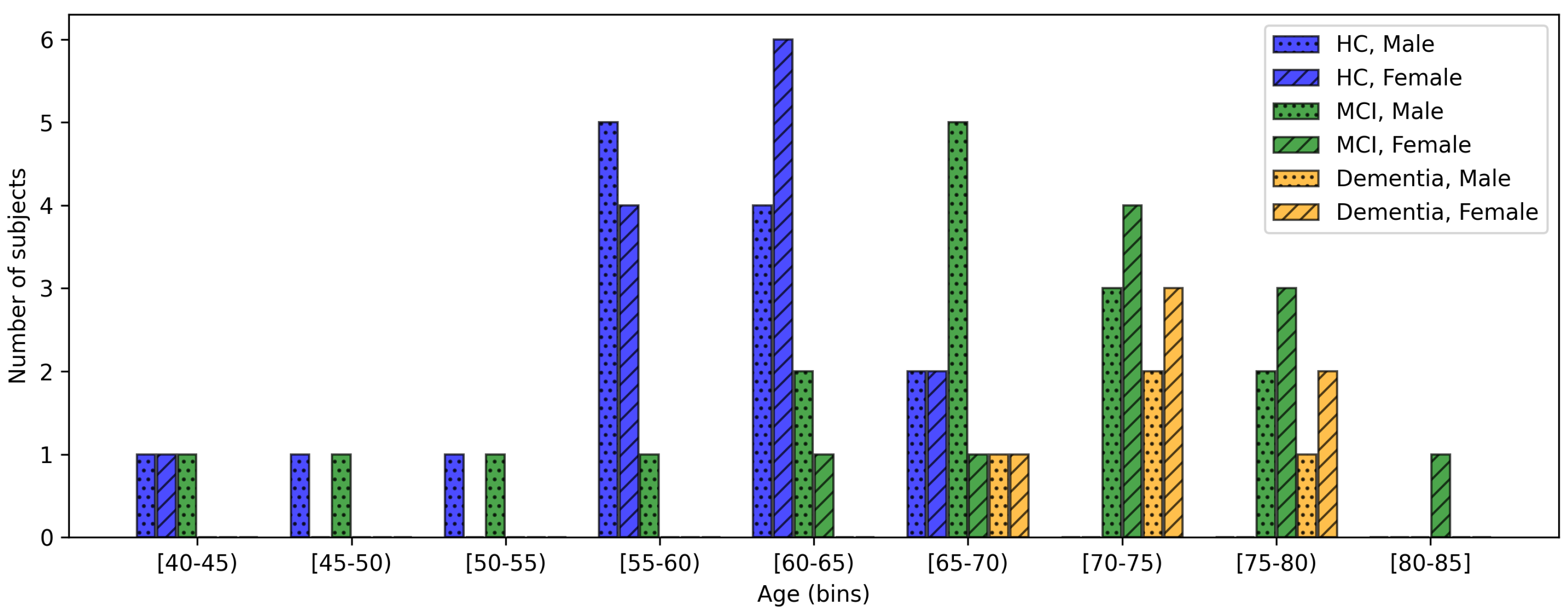

| | MCI | Overt Dementia | Healthy Controls |
|---|---|---|---|
| Number of subjects | 26 | 10 | 28 |
| Age, years (mean ± standard deviation) | 68.2 ± 9.3 | 72.9 ± 3.8 | 58.8 ± 6.9 |
| Sex (number of females, %) | 10 (38.5%) | 6 (60.0%) | 14 (50.0%) |
| Ethnicity | Caucasian | Caucasian | Caucasian |
| Years of education (mean ± standard deviation) | 13.7 ± 4.6 | 10.4 ± 5.4 | 15.6 ± 4.8 |
| MMSE score (mean ± standard deviation) | 25.8 ± 3.6 | 18.8 ± 5.5 | 29.2 ± 1.2 |
| MoCA score (mean ± standard deviation) | 20.0 ± 4.4 | 14.0 ± 3.6 | 25.4 ± 2.2 |
| Differential CI diagnosis | 13 due to AD; 13 other types | 4 AD; 6 other types | No cognitive impairment |

| Experiment | Model | Parameters | Accuracy | F1 Score |
|---|---|---|---|---|
| CI vs. HCs | KNN | 3 neighbors, Manhattan distance | 0.736 ± 0.102 | 0.722 ± 0.111 |
| | LR | L2 penalty, tolerance = 0.0001, C = 0.001 | 0.623 ± 0.139 | 0.620 ± 0.141 |
| | SVM | linear kernel, tolerance = 0.001, C = 0.01 | 0.624 ± 0.092 | 0.612 ± 0.092 |
| MCI vs. HCs | KNN | 3 neighbors, Manhattan distance | 0.760 ± 0.041 | 0.745 ± 0.048 |
| | LR | L2 penalty, tolerance = 0.0001, C = 0.001 | 0.684 ± 0.114 | 0.674 ± 0.110 |
| | SVM | linear kernel, tolerance = 0.001, C = 0.001 | 0.667 ± 0.069 | 0.664 ± 0.068 |
| Dementia vs. HCs | KNN | 3 neighbors, Euclidean distance | 0.732 ± 0.097 | 0.487 ± 0.156 |
| | LR | L2 penalty, tolerance = 0.0001, C = 0.1 | 0.654 ± 0.145 | 0.492 ± 0.174 |
| | SVM | linear kernel, tolerance = 0.001, C = 0.0001 | 0.736 ± 0.018 | 0.424 ± 0.006 |
| MCI vs. dementia vs. HCs | KNN | 5 neighbors, Manhattan distance | 0.641 ± 0.103 | 0.463 ± 0.076 |
| | LR | L2 penalty, tolerance = 0.0001, C = 0.01 | 0.591 ± 0.104 | 0.427 ± 0.109 |
| | SVM | linear kernel, tolerance = 0.001, C = 0.1 | 0.578 ± 0.077 | 0.413 ± 0.051 |
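The gap between accuracy and F1 score in the Dementia vs. HCs rows reflects class imbalance, since HCs make up 28 of the 38 subjects. Assuming the reported F1 score is the macro average over the two classes (the table does not state the averaging), a trivial classifier that always predicts HC would reach an accuracy of 28/38 ≈ 0.737 and a macro F1 of 28/66 ≈ 0.424, values very close to the SVM row for that experiment. The sketch below checks this arithmetic with scikit-learn's `f1_score`; the helper `majority_class_scores` is hypothetical.

```python
from sklearn.metrics import f1_score


def majority_class_scores(n_majority, n_minority):
    """Accuracy and macro F1 of a classifier that always predicts the majority class."""
    y_true = [1] * n_majority + [0] * n_minority
    y_pred = [1] * (n_majority + n_minority)
    accuracy = n_majority / (n_majority + n_minority)
    # The minority class is never predicted; zero_division=0.0 scores its F1 as 0.
    macro_f1 = f1_score(y_true, y_pred, average="macro", zero_division=0.0)
    return accuracy, macro_f1


# Dementia vs. HCs: 28 HCs (majority class) and 10 patients with overt dementia.
acc, f1 = majority_class_scores(28, 10)
print(f"accuracy = {acc:.3f}, macro F1 = {f1:.3f}")
```

The majority class contributes an F1 of 2·28/(2·28 + 10) = 56/66, and the never-predicted minority class contributes 0, giving a macro average of 28/66.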

| Experiment | Model | Parameters | Accuracy | F1 Score |
|---|---|---|---|---|
| AD vs. other types of CI | KNN | 5 neighbors, Chebyshev distance | 0.754 ± 0.128 | 0.749 ± 0.130 |
| | LR | L2 penalty, tolerance = 0.0001, C = 0.0001 | 0.586 ± 0.171 | 0.571 ± 0.174 |
| | SVM | linear kernel, tolerance = 0.001, C = 0.01 | 0.643 ± 0.090 | 0.602 ± 0.088 |
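The model families in the two results tables correspond to standard scikit-learn estimators (scikit-learn is among the cited references), and the listed tolerances (0.0001 for LR, 0.001 for SVM) coincide with that library's defaults. The sketch below transcribes the per-experiment hyperparameters into `KNeighborsClassifier`, `LogisticRegression`, and `SVC` and scores them with cross-validation. Several pieces are assumptions rather than the authors' protocol: feature standardization, stratified 5-fold cross-validation, macro-averaged F1, and the synthetic features from `make_classification` that stand in for the facial emotion features. The names `TABLE_PARAMS`, `make_models`, and `evaluate` are hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Hyperparameters transcribed from the results tables: KNN neighbors and
# distance metric, and the regularization parameter C for LR and SVM.
TABLE_PARAMS = {
    "CI vs. HCs": {"k": 3, "metric": "manhattan", "C_lr": 1e-3, "C_svm": 1e-2},
    "MCI vs. HCs": {"k": 3, "metric": "manhattan", "C_lr": 1e-3, "C_svm": 1e-3},
    "Dementia vs. HCs": {"k": 3, "metric": "euclidean", "C_lr": 1e-1, "C_svm": 1e-4},
    "MCI vs. dementia vs. HCs": {"k": 5, "metric": "manhattan", "C_lr": 1e-2, "C_svm": 1e-1},
    "AD vs. other types of CI": {"k": 5, "metric": "chebyshev", "C_lr": 1e-4, "C_svm": 1e-2},
}


def make_models(experiment):
    """Build the three classifiers configured as in the table rows for `experiment`."""
    p = TABLE_PARAMS[experiment]
    return {
        "KNN": KNeighborsClassifier(n_neighbors=p["k"], metric=p["metric"]),
        # L2 is LogisticRegression's default penalty, so it is not passed explicitly.
        "LR": LogisticRegression(tol=1e-4, C=p["C_lr"]),
        "SVM": SVC(kernel="linear", tol=1e-3, C=p["C_svm"]),
    }


def evaluate(X, y, experiment, n_splits=5, seed=0):
    """Mean and SD of accuracy and macro F1 under stratified k-fold CV (assumed protocol)."""
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    results = {}
    for name, model in make_models(experiment).items():
        pipe = make_pipeline(StandardScaler(), model)  # standardization is an assumption
        scores = cross_validate(pipe, X, y, cv=cv, scoring=("accuracy", "f1_macro"))
        results[name] = {
            metric: (scores[f"test_{metric}"].mean(), scores[f"test_{metric}"].std())
            for metric in ("accuracy", "f1_macro")
        }
    return results


# Synthetic stand-in for CI vs. HCs: 64 subjects (28 HCs, 36 CI), 20 made-up features.
X, y = make_classification(n_samples=64, n_features=20, weights=[28 / 64],
                           flip_y=0.0, random_state=0)
for name, res in evaluate(X, y, "CI vs. HCs").items():
    acc, f1 = res["accuracy"], res["f1_macro"]
    print(f"{name}: accuracy {acc[0]:.3f} +/- {acc[1]:.3f}, F1 {f1[0]:.3f} +/- {f1[1]:.3f}")
```

On synthetic data the printed scores carry no clinical meaning; the sketch only illustrates how each table row maps onto estimator arguments.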

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bergamasco, L.; Coletta, A.; Olmo, G.; Cermelli, A.; Rubino, E.; Rainero, I. AI-Based Facial Emotion Analysis for Early and Differential Diagnosis of Dementia. Bioengineering 2025, 12, 1082. https://doi.org/10.3390/bioengineering12101082
