1. Introduction

Advancements in healthcare have increased average global life expectancy. It is projected that by 2100 about 90% of countries will be aged societies and more than 50% will be ultra-aged [1]. This demographic change has a profound impact on the care of older people, particularly in relation to dementia, a neurodegenerative syndrome that commonly develops in aging populations. Alzheimer’s disease (AD) is the most common type of dementia, accounting for up to 80% of all dementia cases [2]. Characterized by the presence of amyloid-β (Aβ) plaques and tau-containing neurofibrillary tangles (NFTs), AD significantly impairs quality of life through a decline in cognitive ability that interferes with daily activities [3]. AD progresses through several stages, from the preclinical stage and mild cognitive impairment (MCI) to mild and severe stages [4].

AD diagnosis involves clinical examinations and interviews with patients and their family members [5], and clinicians often request additional pathological tests to identify patients more accurately [6]. Imaging techniques such as Positron Emission Tomography (PET) and Magnetic Resonance Imaging (MRI), which are widely accessible and noninvasive imaging modalities, are commonly used for the diagnosis and treatment of AD [7,8,9]. Commonly used screening tools for AD include the Clinical Dementia Rating (CDR) and the Mini-Mental State Examination (MMSE) [10,11]. However, early diagnosis of AD remains challenging: studies report misdiagnosis rates above 16% for probable AD [12]. These limitations underscore the need for advanced computational methods such as machine learning (ML) and deep learning (DL) to improve diagnosis and management.

Numerous researchers have applied ML algorithms, including support vector machines (SVMs), Random Forests (RFs), logistic regression (LR), naive Bayes (NB), and multilayer perceptrons (MLPs), to AD diagnosis [4,13,14,15,16,17,18]. The performance of these algorithms depends on manual feature extraction, which requires domain expertise and is labor-intensive [19,20]. Deep learning, particularly convolutional neural networks (CNNs), has addressed these limitations by automating feature extraction from MRI and PET scans, improving diagnostic accuracy [21,22,23,24]. CNNs, however, struggle to capture the temporal changes associated with AD progression [25]. Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, are designed to capture long-term dependencies in time-series data such as electroencephalogram (EEG) signals and clinical assessments [26,27,28,29,30,31]. Recent advances have made natural language processing (NLP) an essential tool for analyzing language and speech patterns that indicate cognitive decline [32,33]. Models such as Bidirectional Encoder Representations from Transformers (BERT) and the Generative Pre-trained Transformer (GPT) analyze changes in fluency and complexity to detect neurological impairments [32,33,34,35,36]. Vision transformers (ViTs), meanwhile, have advanced the use of medical imaging for AD diagnosis by capturing local and global dependencies through self-attention mechanisms [25,37,38,39,40,41], although they require significantly more computation than CNN models.

Along with imaging data, healthcare providers use patient records, neurological tests, and genetic history to diagnose AD [42]. This practice yields diverse patient data, including imaging, biological markers, and clinical evaluations [43]. Although Computed Tomography (CT), MRI, and PET images provide crucial insights into the disease, patient records, neurological tests, and genetic history are equally important for diagnosing AD. Given the difficulty of acquiring more images from a larger patient population, it is vital to make full use of the available data to enhance diagnostic precision. While CNN models excel with imaging data, current CNN-based frameworks fall short in effectively utilizing multimodal information [42]. Moreover, many existing multimodal models compute attention separately within each modality, which limits their ability to capture cross-modal interactions. These challenges limit the clinical applicability and generalizability of such models in AD diagnosis. In addition, most studies focus on distinguishing AD from normal controls (NCs) [44]. However, MCI is a preliminary stage that is considered a transition state from NC to AD dementia [45]. Since there is currently no cure for AD, accurate diagnosis at an early stage is important for patient care and for the development of future treatments. We propose a novel multimodal deep learning framework that integrates imaging data (MRI), textual clinical data, demographic information, genetic biomarkers, and cognitive test scores to diagnose AD, MCI, and NC. This approach aims to improve diagnostic accuracy and interpretability through an improved feature aggregation strategy that captures cross-modal interactions.

The main contributions of our work are as follows:

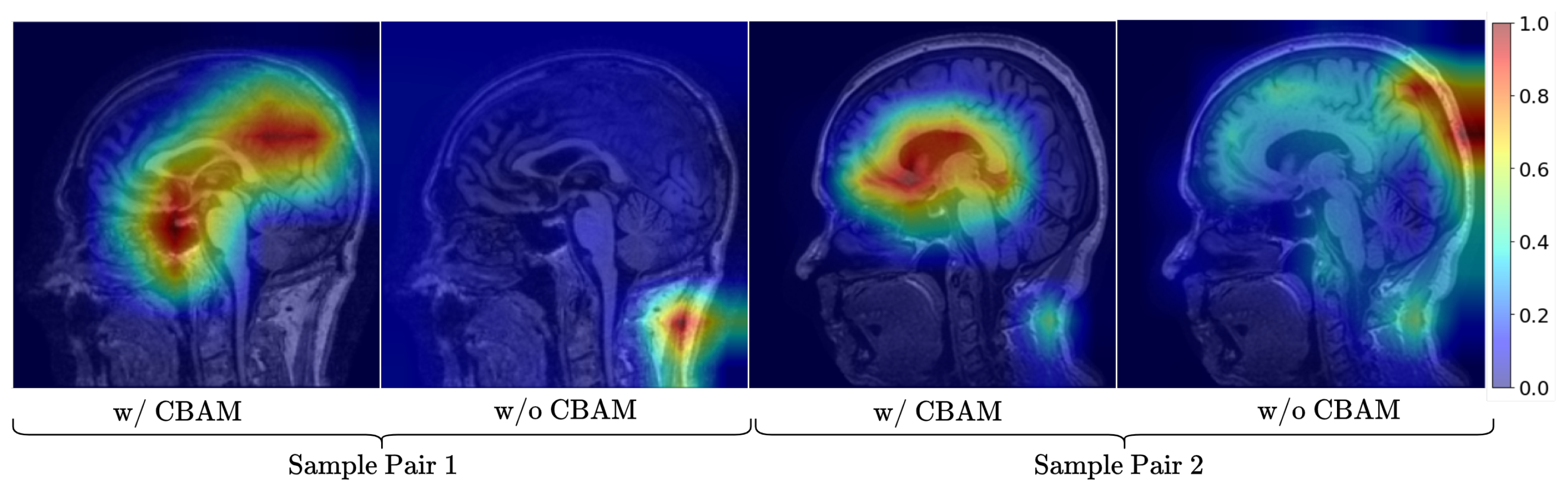

We propose NeuroNet-AD, a novel multimodal deep learning framework that integrates MRI images with clinical text-based metadata for improved multiclass AD classification using the Convolutional Block Attention Module (CBAM) and the Meta-Guided Cross-Attention (MGCA) mechanism. These attention mechanisms allow the model to focus on important features and enable better cross-modal data fusion.

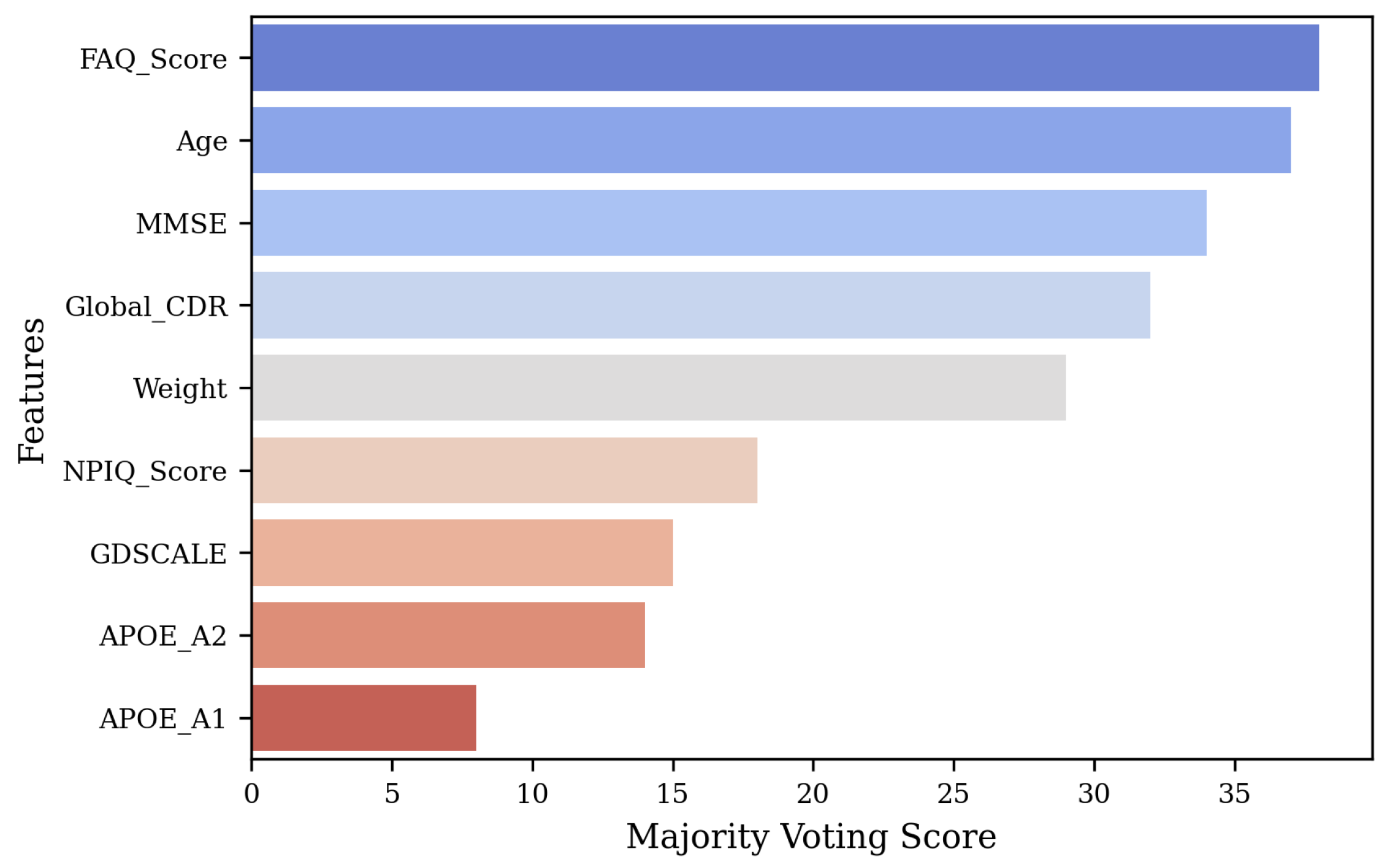

We employ an ensemble-based feature selection strategy that combines Random Forest, eXtreme Gradient Boosting (XGBoost), Light Gradient Boosting Machine (LightGBM), ExtraTrees, and AdaBoost with majority voting to identify the most discriminative features in the clinical text data.

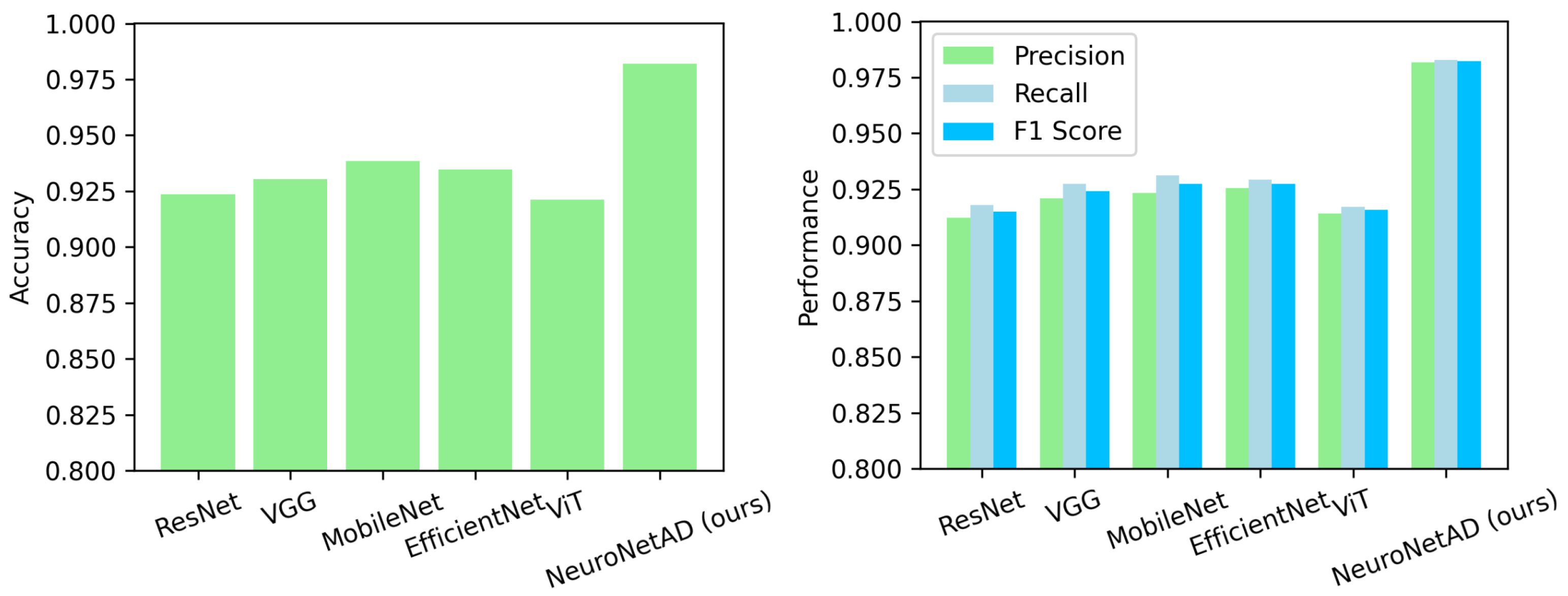

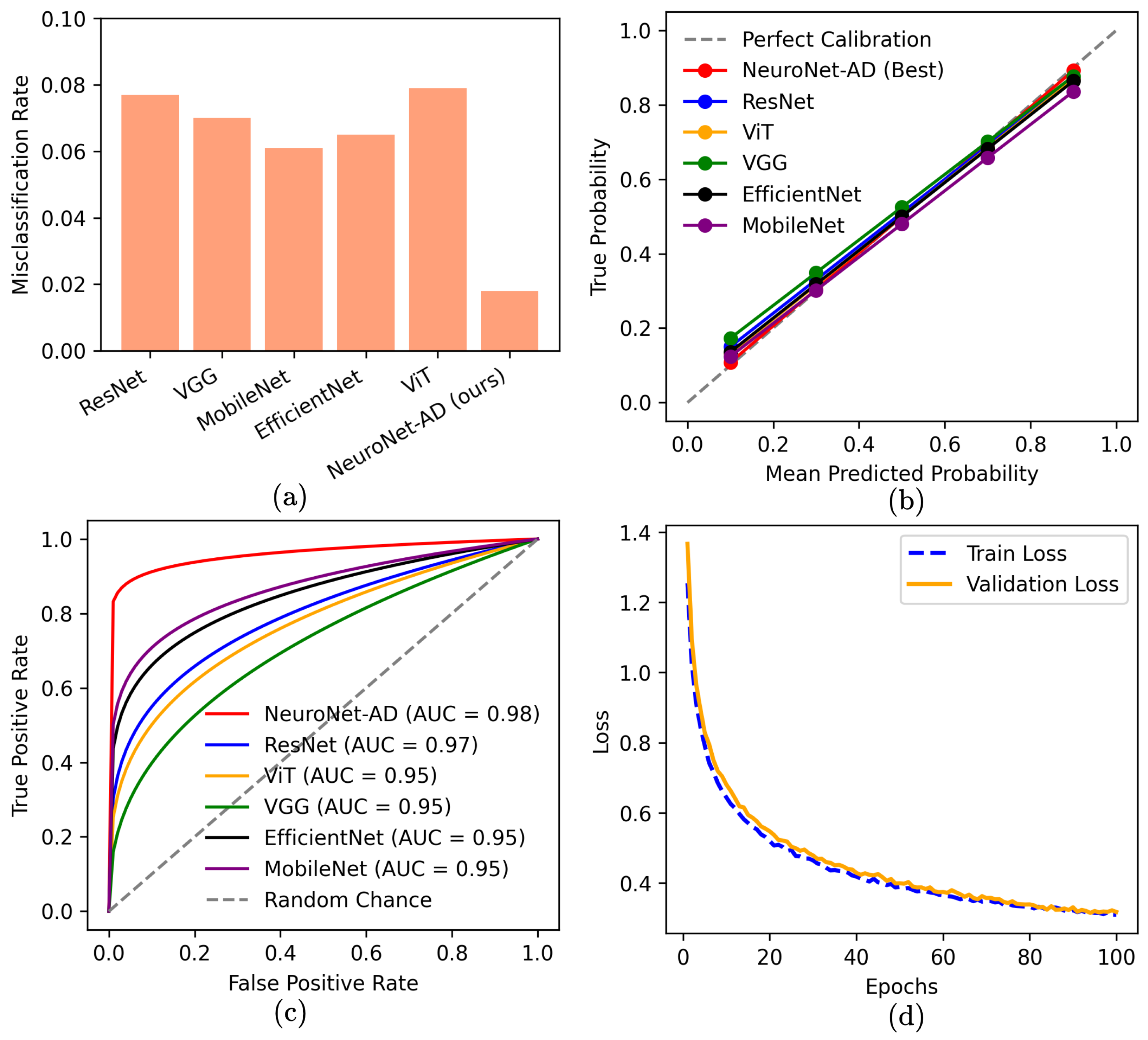

We conduct comprehensive experiments on the Alzheimer’s Disease Neuroimaging Initiative (ADNI) dataset to validate the performance of NeuroNet-AD against other state-of-the-art (SOTA) models. We also quantify the contribution of each component of NeuroNet-AD to justify its configuration.
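The cross-modal fusion idea behind these contributions can be illustrated with a generic scaled dot-product cross-attention in which an embedding of the clinical metadata supplies the query and the spatial positions of a CNN feature map supply the keys and values. This is a minimal NumPy sketch under stated assumptions, not the authors’ MGCA module: the projection matrices are random stand-ins for learned weights, and the function name `metadata_cross_attention`, the feature-map size, and the dimension `d_k` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def metadata_cross_attention(meta, feat_map, d_k=32, seed=0):
    """Hypothetical metadata-guided cross-attention (illustrative only).

    meta:     (n_meta,) vector of selected, standardized clinical features
    feat_map: (C, H, W) image feature map, e.g. from a CNN backbone
    Returns the attended image context (d_k,) and the (H*W,) attention weights.
    """
    rng = np.random.default_rng(seed)
    C, H, W = feat_map.shape
    tokens = feat_map.reshape(C, H * W).T            # (H*W, C): one token per spatial position
    # Random projections stand in for learned linear layers (assumption).
    W_q = rng.standard_normal((meta.shape[0], d_k)) / np.sqrt(meta.shape[0])
    W_k = rng.standard_normal((C, d_k)) / np.sqrt(C)
    W_v = rng.standard_normal((C, d_k)) / np.sqrt(C)
    q = meta @ W_q                                   # (d_k,) query from the metadata
    k = tokens @ W_k                                 # (H*W, d_k) keys from the image
    v = tokens @ W_v                                 # (H*W, d_k) values from the image
    weights = softmax(k @ q / np.sqrt(d_k))          # (H*W,) attention over spatial positions
    context = weights @ v                            # (d_k,) metadata-weighted image summary
    return context, weights

meta = np.array([0.5, -1.2, 0.3, 1.0, -0.4])         # e.g. five standardized clinical features
feat_map = np.random.default_rng(1).standard_normal((64, 7, 7))
context, weights = metadata_cross_attention(meta, feat_map)
print(context.shape, weights.shape)
```

Because the query comes from the metadata, the attention weights indicate which spatial regions a given clinical profile emphasizes; a trained module would learn the projections and would typically use multiple heads and a residual connection.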

The remainder of this paper is organized as follows: Section 2 discusses related works, Section 3 illustrates the methods proposed and employed in this work, Section 4 presents the implementation of the model and the description of the dataset, Section 5 reports the results obtained, and Section 6 concludes the paper.

2. Related Works

The multimodal approach of integrating neuroimaging with clinical textual data offers a more comprehensive understanding of the complex and diverse nature of AD [46,47]. This approach allows for early detection and accurate monitoring of disease progression [48,49,50].

Golovanevsky et al. (2022) [42] presented an attention-based multimodal Alzheimer’s disease diagnosis (MADDi) model that detects AD and MCI by integrating imaging, genetic, and clinical data, achieving an accuracy of 96.88% on the ADNI dataset. In another study, Wisely et al. (2022) [51] developed a CNN to distinguish AD from NC using multimodal retinal images and patient data. The retinal image dataset consisted of 284 eye images from 159 subjects. Using only the image data, the model achieved an Area Under the Curve (AUC) of 0.809, while the full multimodal model incorporating all imaging data, quantitative metrics, and patient data improved the AUC to 0.836. Altaf et al. (2018) [52] presented a model that integrates feature descriptors such as the Gray Level Co-occurrence Matrix (GLCM), Scale-Invariant Feature Transform (SIFT), Local Binary Pattern (LBP), and Histogram of Oriented Gradients (HOG) to extract information from MRI images. The study also combined clinical data with these image-based features to form a comprehensive hybrid feature vector. The model was validated on the ADNI dataset, achieving an accuracy of 98.4% for binary classification (AD vs. NC) and 79.8% for multiclass classification (AD, NC, and MCI).

Recent advances in vision–language pre-training (VLP) have shown promising applications in medical diagnosis, particularly by integrating images (e.g., X-rays and MRIs) with text such as doctors’ notes, electronic health records (EHRs), or patient histories [53]. Some recent works in AD diagnosis have used VLP models trained on large-scale medical image and text data to improve interpretability and classification accuracy. Chen and Hong (2024) [54] developed Medical Bootstrapping Language Image Pre-training (MedBLIP), a lightweight computer-aided diagnosis (CAD) system that combines 3D medical images and text data through a query-based mechanism. Using frozen pre-trained encoders and parameter-efficient fine-tuning, the model classified NC, MCI, and AD with an accuracy of 78.7% on the ADNI dataset, 83.3% on the National Alzheimer’s Coordinating Center (NACC) dataset, and 85.3% on the Open Access Series of Imaging Studies (OASIS) dataset. In zero-shot evaluation, MedBLIP achieved 80.8% accuracy on the Australian Imaging, Biomarkers & Lifestyle (AIBL) dataset and 71.0% on the Minimal Interval Resonance Imaging in Alzheimer’s Disease (MIRIAD) dataset.

Lee et al. (2025) proposed a graph neural network approach that uses a vision–language model (VLM) to map image–text relationships for dementia detection [55]. The method, which employs Bootstrapping Language Image Pre-training (BLIP) and graph convolutional networks (GCNs), achieved an accuracy of 88.73% in distinguishing NC from AD on the Alzheimer’s Dementia Recognition through Spontaneous Speech (ADReSSo Challenge) dataset. In another study, Feng et al. (2023) [56] introduced a framework that combines large language models (LLMs) with CNNs and transformers to fuse image and non-image data. This approach used cross-attention mechanisms and prompt tuning to align the modalities. Experiments on the ADNI dataset achieved an accuracy of 96.36% for AD vs. NC classification and 94.71% for early MCI (EMCI) vs. late MCI (LMCI) classification.

Finally, Chiumento et al. (2024) [57] introduced a framework that uses synthetic diagnostic reports, generated from structured clinical and MRI data, to train the Biomedical Contrastive Language–Image Pre-training (BiomedCLIP) and T5 (Text-to-Text Transfer Transformer) models. Their model’s performance was evaluated on the OASIS-4 dataset (NC vs. MCI vs. AD) using the Bilingual Evaluation Understudy (BLEU-4, 0.1827), Recall-Oriented Understudy for Gisting Evaluation with Longest Common Subsequence (ROUGE-L, 0.3719), and Metric for Evaluation of Translation with Explicit Ordering (METEOR, 0.4163) scores.

While existing multimodal approaches enhance AD diagnosis, many models lack an effective feature aggregation strategy that fully captures cross-modal interactions, limiting their interpretability and robustness. To address this, we propose NeuroNet-AD, a novel multimodal framework that integrates MRI images with clinical text metadata. NeuroNet-AD employs the Convolutional Block Attention Module (CBAM) and Meta-Guided Cross-Attention (MGCA) to enhance feature fusion, alongside an ensemble-based feature selection strategy for improved discriminative power. Our comprehensive experiments on the ADNI dataset validate its effectiveness against SOTA models.

4. Experiments

4.1. Dataset

The dataset used in this research was obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI), a longitudinal, multicenter, observational study [58]. We used the ADNI1 version, which includes 3D MRI images and associated metadata from 200 subjects comprising normal controls (NCs), individuals with mild cognitive impairment (MCI), and patients diagnosed with Alzheimer’s disease (AD). Along with imaging data, the dataset offers several clinically relevant metadata fields: Weight, Age, APOE-A1 and APOE-A2 (Apolipoprotein E alleles associated with AD risk), MMSE (Mini-Mental State Examination), GDSCALE (Geriatric Depression Scale), Global CDR (Clinical Dementia Rating), FAQ-Score (Functional Activities Questionnaire), and NPIQ-Score (Neuropsychiatric Inventory Questionnaire). For each subject, 10 slices were extracted from the 3D MRI scan, yielding 2000 images in total. A summary of the dataset distribution used in the experiments is provided in Table 1.

To ensure a robust evaluation and prevent data leakage, data splitting was conducted at the patient level: all slices from a single subject were assigned exclusively to one set (training, validation, or testing), so no subject contributed slices to more than one set. Specifically, 20% of the subjects were set aside as a held-out test set that was not used during training or model selection. The remaining 80% of subjects were used for subject-level 5-fold cross-validation, with strict separation between training and validation subjects in each fold. Cross-validation performance is reported as the mean ± standard deviation across folds. The configuration with the best average performance was then retrained on the entire training–validation set and finally evaluated on the held-out test set to provide an unbiased estimate of the model’s performance.
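The patient-level held-out split described above can be sketched in pure Python. This is a minimal illustration, not the authors’ released code: it assumes each slice record is a `(subject_id, slice_index)` tuple, draws test subjects at random without class stratification, and uses a hypothetical function name `subject_level_holdout` and a fixed seed.

```python
import random
from collections import defaultdict

def subject_level_holdout(slices, test_frac=0.2, seed=42):
    """Split slice records into train/val and held-out test sets by subject.

    slices: list of (subject_id, slice_index) tuples (hypothetical layout).
    Every slice of a subject lands in exactly one partition.
    """
    by_subject = defaultdict(list)
    for subject_id, slice_idx in slices:
        by_subject[subject_id].append((subject_id, slice_idx))

    subjects = sorted(by_subject)                    # deterministic order before shuffling
    random.Random(seed).shuffle(subjects)
    n_test = round(test_frac * len(subjects))
    test_subjects = set(subjects[:n_test])

    # Expand subjects back into slices; a subject is never split across sets.
    trainval = [s for sid in subjects if sid not in test_subjects for s in by_subject[sid]]
    test = [s for sid in subjects if sid in test_subjects for s in by_subject[sid]]
    return trainval, test

# 200 subjects x 10 slices = 2000 slices, matching the ADNI1 subset size above.
slices = [(f"S{i:03d}", k) for i in range(200) for k in range(10)]
trainval, test = subject_level_holdout(slices)
train_ids = {sid for sid, _ in trainval}
test_ids = {sid for sid, _ in test}
print(len(train_ids), len(test_ids), len(train_ids & test_ids))
```

Splitting on subject IDs first and only then expanding to slices is what rules out leakage: a slice-level random split would almost certainly place neighbouring slices of the same brain in both training and test data.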

Additionally, external validation was conducted on the OASIS-3 dataset, a large-scale, publicly accessible neuroimaging resource featuring longitudinal MRI scans, cognitive assessments, and clinical data across the cognitive spectrum. The same preprocessing steps were applied to maintain consistency, allowing a fair evaluation of the model’s generalizability beyond the ADNI1 cohort. The OASIS-3 subset comprised 704 NC, 19 MCI, and 198 AD images (921 in total for external validation). In addition to imaging, OASIS-3 offers rich metadata, including demographics, diagnoses, and longitudinal clinical measures, supporting comprehensive subject characterization.

4.2. Feature Selection

Selecting the most important features is crucial for enhancing model performance, reducing dimensionality, and improving interpretability. The original feature set included Weight, Age, APOE-A1, APOE-A2, MMSE, GDSCALE, Global-CDR, FAQ-Score, and NPIQ-Score, but not all of these features necessarily contribute significantly to performance. To identify the most relevant features, we first extracted the metadata features from the dataset and standardized them using StandardScaler so that features measured on different scales were comparable. We then trained five ensemble models (Random Forest, XGBoost, LightGBM, Extra Trees, and AdaBoost), chosen for their complementary strengths in handling structured data and capturing feature importance, and extracted the feature importance scores from each model. To ensure robustness, we applied a majority voting mechanism in which features received weighted points based on their rankings across all models, with higher-ranked features accumulating more points.

Let $r_m(f)$ denote the rank of feature $f$ assigned by ensemble model $m$. We compute a weighted vote $V(f) = \sum_{m} w_m \, p\big(r_m(f)\big)$, where $w_m$ is the weight of model $m$ (equal weights in our case) and $p(\cdot)$ assigns more points to higher-ranked features. The votes are normalized as $\hat{V}(f) = V(f) / \sum_{f'} V(f')$, so that $\hat{V}(f)$ is a confidence score representing the proportion of the total votes received by feature $f$, and the features with the top-$k$ normalized scores are selected. Ranking the features by these scores, we retained the top five ($k = 5$): FAQ-Score, Age, MMSE, Global-CDR, and Weight, which demonstrated the highest importance across all models.

Figure 2 shows the feature selection results.
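The rank-based voting can be sketched in NumPy. The sketch assumes Borda-style points, in which a feature ranked $r$ among $F$ features earns $F - r + 1$ points; this is one way to make higher-ranked features accumulate more points, but the exact point assignment is an assumption. The function name `rank_vote` is hypothetical, and the importance scores below are invented toy values, not results from this study.

```python
import numpy as np

def rank_vote(importances, feature_names, weights=None, top_k=5):
    """Weighted rank voting over per-model feature-importance scores.

    importances: (M, F) array, one row of importance scores per model.
    weights:     (M,) model weights; equal weights if None.
    Returns the top_k feature names and the normalized vote share of every feature.
    """
    importances = np.asarray(importances, dtype=float)
    n_models, n_features = importances.shape
    if weights is None:
        weights = np.full(n_models, 1.0 / n_models)  # equal model weights
    # Convert scores to ranks within each model (rank 1 = most important).
    order = np.argsort(-importances, axis=1)
    ranks = np.empty_like(order)
    rows = np.arange(n_models)[:, None]
    ranks[rows, order] = np.arange(1, n_features + 1)
    points = n_features - ranks + 1                  # Borda-style points (assumption)
    votes = weights @ points                         # (F,) weighted vote per feature
    share = votes / votes.sum()                      # normalized confidence score
    top = np.argsort(-share)[:top_k]
    return [feature_names[i] for i in top], dict(zip(feature_names, share))

names = ["f1", "f2", "f3", "f4"]
toy = [[0.40, 0.30, 0.20, 0.10],                     # model 1 (toy scores)
       [0.30, 0.40, 0.20, 0.10],                     # model 2
       [0.35, 0.25, 0.30, 0.10]]                     # model 3
top, share = rank_vote(toy, names, top_k=2)
print(top)  # ['f1', 'f2']
```

In the setting described above, `importances` would have shape (5, 9), one row per ensemble model and one column per metadata field, with `top_k=5`. Voting on ranks rather than raw scores avoids having to reconcile importance measures that are on different scales across model families.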

4.3. Implementation

We implemented NeuroNet-AD to evaluate its performance across different experimental settings. The model was trained using PyTorch with the cross-entropy loss function, which is suitable for multiclass classification because it compares the predicted class probabilities with the actual class labels. The model parameters were optimized using the Adam optimizer with a learning rate of 0.001, which provided a balance between convergence speed and stability. To enhance generalization and reduce overfitting, we employed several regularization techniques. Specifically, dropout layers with a rate of 0.5 were added to the fully connected layers, and weight decay regularization (set to ) was included in the Adam optimizer to penalize large weights and discourage overly complex models. Additionally, data augmentation was applied to the MRI slices, including random horizontal flipping, small-angle rotations (±10°), and slight intensity scaling, thereby increasing the effective diversity of the training data.
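The augmentation step can be sketched in NumPy for a single 2D slice. The text specifies only horizontal flips, rotations within ±10°, and slight intensity scaling; the flip probability of 0.5, the scaling range of 0.9 to 1.1, and nearest-neighbour rotation with zero fill are assumptions chosen for illustration, and the function names `rotate_nearest` and `augment_slice` are hypothetical. Which augmentation library the authors used with PyTorch is not stated here.

```python
import numpy as np

def rotate_nearest(img, angle_deg):
    """Rotate a 2D slice about its centre using nearest-neighbour sampling.

    Output pixels whose source location falls outside the slice are set to 0.
    """
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    dx, dy = xx - cx, yy - cy
    # Inverse mapping: locate the source pixel for every output pixel.
    src_x = np.rint(np.cos(theta) * dx + np.sin(theta) * dy + cx).astype(int)
    src_y = np.rint(-np.sin(theta) * dx + np.cos(theta) * dy + cy).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out

def augment_slice(img, rng, max_angle=10.0, scale_range=(0.9, 1.1), p_flip=0.5):
    """Hypothetical augmentation: random flip, small rotation, intensity scaling."""
    out = np.asarray(img, dtype=np.float32)
    if rng.random() < p_flip:
        out = out[:, ::-1]                                # horizontal (left-right) flip
    out = rotate_nearest(out, rng.uniform(-max_angle, max_angle))  # within ±max_angle degrees
    out = out * np.float32(rng.uniform(*scale_range))     # slight global intensity scaling
    return out

rng = np.random.default_rng(0)
slice_2d = rng.random((128, 128), dtype=np.float32)     # stand-in for a normalized MRI slice
aug = augment_slice(slice_2d, rng)
print(aug.shape)
```

Augmentations are applied only to training slices; validation and test slices would be passed through unchanged so that evaluation reflects the real data distribution.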

To further mitigate overfitting, we incorporated early stopping with a patience of 20 epochs: training was terminated if the validation accuracy did not improve for 20 consecutive epochs. The model achieving the highest validation accuracy was saved and used as the best-performing model for each experimental setup. Given the relatively small dataset, all splits were performed at the patient level to avoid data leakage, and model evaluation followed subject-level 5-fold cross-validation with an independent held-out test set.
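The early-stopping rule can be expressed as a small, framework-agnostic helper. This sketch tracks validation accuracy, records the epoch of the best model (where a real training loop would save a checkpoint), and signals a stop after 20 epochs without improvement; the class name `EarlyStopping` and the strict-improvement criterion (`min_delta=0`) are assumptions, and the accuracy curve is a toy example.

```python
class EarlyStopping:
    """Stop training when validation accuracy stops improving.

    patience:  epochs to wait after the last improvement (20 in the setup above).
    min_delta: minimum increase that counts as an improvement (assumed 0).
    """

    def __init__(self, patience=20, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_acc = float("-inf")
        self.best_epoch = None
        self.epochs_without_improvement = 0

    def step(self, epoch, val_acc):
        """Record one epoch; return True if training should stop."""
        if val_acc > self.best_acc + self.min_delta:
            self.best_acc = val_acc
            self.best_epoch = epoch          # a real loop would save the model weights here
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


# Toy validation-accuracy curve: improves for 5 epochs, then plateaus.
curve = [0.60, 0.70, 0.78, 0.82, 0.85] + [0.84] * 30
stopper = EarlyStopping(patience=20)
for epoch, acc in enumerate(curve):
    if stopper.step(epoch, acc):
        break
print(epoch, stopper.best_epoch, stopper.best_acc)  # 24 4 0.85
```

Training stops at epoch 24 (20 epochs after the last improvement at epoch 4), and the checkpoint from epoch 4 is the one kept for evaluation.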

To strictly enforce subject-level independence and prevent data leakage between slices, we grouped all MRI slices by unique subject identifiers before partitioning. This ensured that slices from the same subject were never split across training, validation, or test sets. During 5-fold cross-validation, we performed stratified sampling at the subject level to preserve approximately the same proportions of NC, MCI, and AD subjects in every fold. In each fold, one set of subjects was reserved exclusively for validation, while the remaining subjects were used for training, ensuring no overlap between partitions. After cross-validation, we retrained the best-performing configuration on the full training–validation set and then tested it on the held-out test set, which contained the 20% of subjects unseen during both training and model selection. The complete implementation of the splitting pipeline, including the subject-ID–based partitioning code, is publicly available in our GitHub repository (https://github.com/Rahman-Motiur/NeuroNet-AD) to ensure full reproducibility.
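Stratified 5-fold assignment at the subject level can be sketched in pure Python: subjects (not slices) are grouped by diagnosis, shuffled, and dealt round-robin into folds, so each fold receives a near-equal share of NC, MCI, and AD subjects. This is an illustrative sketch, not the code in the linked repository; the function name `stratified_subject_folds`, the seed, and the toy class counts are hypothetical. When splitting slice-level rows directly, scikit-learn’s `StratifiedGroupKFold` with subject IDs as groups is a library alternative.

```python
import random
from collections import defaultdict

def stratified_subject_folds(subject_labels, n_folds=5, seed=0):
    """Assign each subject to one of n_folds, stratified by diagnosis.

    subject_labels: dict mapping subject_id -> label (e.g. "NC", "MCI", "AD").
    Returns a dict mapping subject_id -> fold index.
    """
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for sid, label in sorted(subject_labels.items()):
        by_label[label].append(sid)

    fold_of = {}
    offset = 0
    for label in sorted(by_label):
        sids = by_label[label]
        rng.shuffle(sids)
        # Deal subjects round-robin; carrying the offset keeps total fold sizes balanced.
        for i, sid in enumerate(sids):
            fold_of[sid] = (offset + i) % n_folds
        offset += len(sids)
    return fold_of

# Toy cohort of 160 training/validation subjects (class counts are invented).
labels = {f"S{i:03d}": ("NC" if i < 60 else "MCI" if i < 120 else "AD") for i in range(160)}
folds = stratified_subject_folds(labels)
for k in range(5):
    val = [s for s, f in folds.items() if f == k]
    counts = {c: sum(labels[s] == c for s in val) for c in ("NC", "MCI", "AD")}
    print(k, len(val), counts)
```

In fold `k`, the subjects with `folds[s] == k` form the validation set and all other subjects form the training set; their slices are then gathered by subject ID, so no subject appears on both sides.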

6. Conclusions

In this study, we proposed NeuroNet-AD, a novel multimodal deep learning framework designed to improve the diagnosis of AD, including its early stage, known as MCI. NeuroNet-AD integrates structural MRI images with clinical text-based metadata, using complementary information from both modalities to enhance diagnostic accuracy. Incorporating the Convolutional Block Attention Module (CBAM) within the ResNet-18 backbone significantly improved image feature extraction by emphasizing critical spatial and channel-wise information. The Meta-Guided Cross-Attention (MGCA) module facilitated robust cross-modal feature alignment, enabling effective fusion of neuroimaging and textual data. Our ensemble-based feature selection strategy enhanced model performance by identifying the most discriminative features in the clinical metadata, reducing overfitting, and improving generalization. We evaluated NeuroNet-AD on the ADNI1 dataset using subject-level 5-fold cross-validation and a held-out test set to ensure robustness. NeuroNet-AD achieved 98.68% accuracy in multiclass classification and 99.13% accuracy in binary classification on the ADNI dataset, outperforming state-of-the-art models. External validation on the OASIS-3 dataset further confirmed the model’s generalization ability, with 94.10% accuracy in the multiclass setting and 98.67% accuracy in the binary setting, despite demographic and acquisition variability. Comprehensive ablation studies further validated the contributions of CBAM, MGCA, and advanced feature engineering to diagnostic performance. One limitation of this work is that differences in test-set definitions across studies may hinder direct performance comparisons, even though we employed a strict subject-level split with an independent held-out test set.
Another limitation is that the model is currently restricted to MRI and limited clinical metadata, which may reduce generalizability and interpretability across diverse patient populations. In future studies, we aim to explore the integration of additional modalities such as genetic data, longitudinal patient records, and other neuroimaging techniques to further enhance the model’s diagnostic capabilities and interpretability in real-world clinical settings.