4.1. Experimental Setup

4.1.1. Dataset and Data Processing

This study utilized the International Skin Imaging Collaboration (ISIC) dataset, which comprises 2357 high-quality dermoscopic images spanning nine common skin lesion categories, including actinic keratosis (AK), basal cell carcinoma (BCC), dermatofibroma (DF), melanoma (ML), nevus (NV), pigmented benign keratosis (BKL), seborrheic keratosis (SK), squamous cell carcinoma (SCC), and vascular lesion (VL). The dataset exhibits natural class imbalance characteristic of real-world clinical scenarios, with melanoma and nevus comprising the majority of samples.

All images were preprocessed through a standardized pipeline including contrast-adaptive enhancement and edge-preserving filtering specifically designed for medical imaging. Images were uniformly resized to 224 × 224 pixels while preserving aspect ratios through intelligent padding. To address data scarcity, we implemented a comprehensive augmentation strategy including geometric transformations (rotation ±180°, horizontal/vertical flips, random cropping), photometric adjustments (brightness, contrast, saturation within ±20%), elastic deformations, and medical-specific augmentations such as simulated lighting variations. The final augmented dataset was expanded to 5× the original size, yielding approximately 11,785 training samples. The dataset was partitioned using stratified sampling with a 7:1:2 ratio for training, validation, and testing sets, respectively.
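The stratified 7:1:2 partition described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `stratified_split`, the seed, and the rounding rule are assumptions, and the sketch splits the original images before any augmentation, which the text does not explicitly state.

```python
import random
from collections import defaultdict


def stratified_split(labels, ratios=(0.7, 0.1, 0.2), seed=42):
    """Return (train, val, test) index lists that preserve per-class proportions.

    `ratios` follows the 7:1:2 split in the text; everything else is illustrative.
    """
    # Group sample indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    rng = random.Random(seed)
    train, val, test = [], [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        n_train = round(ratios[0] * len(indices))
        n_val = round(ratios[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        # Whatever remains in this class goes to the test set.
        test += indices[n_train + n_val:]
    return train, val, test
```

Splitting per class keeps rare categories represented in all three subsets, which matters for an imbalanced dataset such as this one.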

4.1.2. Implementation Environment and Hardware Configuration

All experiments were conducted on a high-performance computing cluster equipped with 8 × NVIDIA A100 GPUs (40 GB memory each) (NVIDIA Corporation, Santa Clara, CA 95054, USA), Intel Xeon Platinum 8358 CPUs (32 cores) (Intel Corporation, Santa Clara, CA 95054, USA), and 512 GB system RAM. The implementation was developed using the PyTorch 2.0.1 (Meta Platforms, Inc., Menlo Park, CA, USA) framework with CUDA 11.8 (NVIDIA Corporation, Santa Clara, CA 95054, USA) support for GPU acceleration. Mixed-precision training was employed using automatic mixed precision (AMP) to optimize memory usage and computational efficiency.

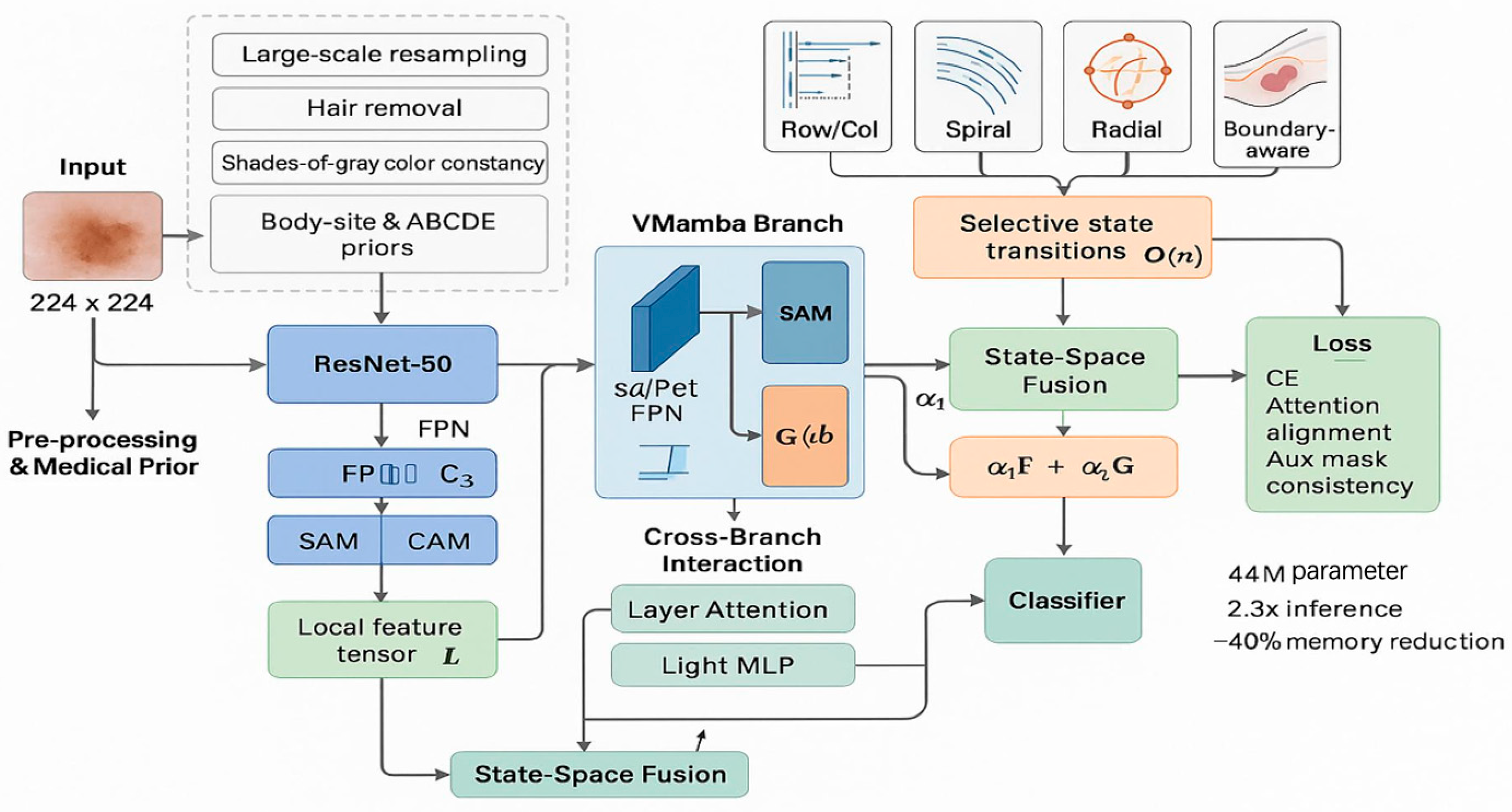

The VMamba components were implemented based on the official selective scan kernel optimized for medical image processing. Custom CUDA kernels were developed for multi-directional scanning strategies (spiral, radial, boundary-aware, and raster scanning) to ensure optimal computational performance. Distributed training across multiple GPUs was facilitated through PyTorch’s Distributed Data Parallel (DDP) wrapper with the NCCL backend for efficient gradient synchronization.

4.1.3. Training Configuration and Optimization Strategy

The DermaMamba model was trained using a multi-stage optimization strategy designed to stabilize convergence and maximize performance. Training used the AdamW optimizer with initial learning rate $\eta_0$, weight decay $\lambda$, and momentum parameters $\beta_1$ and $\beta_2$. A cosine annealing learning rate schedule with warm-up was applied over $T_{\text{warmup}}$ warm-up epochs and $T_{\text{max}}$ total epochs. The batch size was set to 64 with gradient accumulation over 2 steps to simulate an effective batch size of 128. Dropout regularization was applied with rates of 0.2 for the fusion module and 0.1 for VMamba blocks.
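One common formulation of a cosine annealing schedule with warm-up is sketched below. The linear warm-up shape, the zero floor `min_lr`, and the function name `lr_at_epoch` are assumptions; the exact schedule and epoch counts used in this study are not recoverable from the text, so callers must supply their own values.

```python
import math


def lr_at_epoch(epoch, base_lr, warmup_epochs, total_epochs, min_lr=0.0):
    """Learning rate for a zero-based epoch index.

    Linear warm-up to `base_lr`, then cosine decay toward `min_lr`.
    This is one standard variant, assumed here for illustration.
    """
    if epoch < warmup_epochs:
        # Ramp up linearly so the last warm-up epoch reaches base_lr.
        return base_lr * (epoch + 1) / warmup_epochs
    # Fraction of the post-warm-up phase that has elapsed, in [0, 1].
    progress = (epoch - warmup_epochs) / max(1, total_epochs - warmup_epochs)
    return min_lr + 0.5 * (base_lr - min_lr) * (1.0 + math.cos(math.pi * progress))
```

For example, `lr_at_epoch(e, 1e-4, 10, 100)` would use the learning rate selected in Section 4.5 with placeholder epoch counts of 10 and 100, which are not taken from the paper.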

Model Selection and Early Stopping Protocol: To ensure methodological rigor and prevent data leakage, we implemented a strict early stopping mechanism that relied exclusively on validation set performance. Early stopping used a patience of 15 epochs, monitoring validation loss to identify the stopping point and prevent overfitting to the training data. Model checkpoints were saved based on the best validation accuracy achieved during training. Crucially, the test set remained completely isolated throughout the entire model development process and was never used for any training decisions, hyperparameter selection, or early stopping criteria. All hyperparameter optimization, including the selection of the learning rate, batch size, dropout rate, and optimizer type, was conducted solely using the training–validation split, with validation performance serving as the sole criterion for comparing hyperparameter settings. The final model selection for test evaluation was determined exclusively by validation performance, so that the reported test results reflect generalization capability rather than overfitted performance.
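The patience-based stopping rule can be illustrated with a small helper. This is a hedged sketch: the class name `EarlyStopping` and the `min_delta` parameter are hypothetical, and the sketch tracks validation loss only, whereas the text additionally saves checkpoints by best validation accuracy.

```python
class EarlyStopping:
    """Stop training when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=15, min_delta=0.0):
        self.patience = patience      # 15 epochs in the text
        self.min_delta = min_delta    # assumed tolerance; not stated in the text
        self.best_loss = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            # Improvement: remember it and reset the patience counter.
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

Only validation quantities enter `step`, which mirrors the requirement that the test set plays no role in deciding when to stop.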

Statistical Reliability and Computational Efficiency: To ensure the statistical reliability and robustness of our results, all experiments were repeated 5 times with different random seeds (42, 123, 456, 789, 1024) to account for training variability and initialization effects. Results are reported with 95% confidence intervals computed through bootstrap analysis with 10,000 resamples, providing robust uncertainty estimates for all performance metrics. The bootstrap resampling was applied to the test set predictions across all 5 experimental runs, yielding statistically meaningful confidence bounds that reflect both model variance and sampling uncertainty. Each complete experimental run, including hyperparameter optimization on the training–validation split and final evaluation on the test set, required approximately 8–12 h of training time depending on the architecture complexity, with DermaMamba requiring approximately 10 h on our 8× NVIDIA A100 GPU cluster. This computational efficiency, combined with the rigorous statistical validation protocol, ensures both the practical feasibility and scientific reliability of our experimental conclusions.
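A percentile bootstrap for a 95% confidence interval on accuracy might look like the sketch below. The percentile method, the function name `bootstrap_accuracy_ci`, and the per-sample 0/1 input format are assumptions; the text states only that 10,000 resamples were drawn from test set predictions pooled across the 5 runs.

```python
import random


def bootstrap_accuracy_ci(correct, n_resamples=10_000, level=0.95, seed=42):
    """Percentile bootstrap CI for accuracy.

    `correct` is a list of 0/1 flags, one per test prediction (1 = correct).
    """
    rng = random.Random(seed)
    n = len(correct)
    stats = []
    for _ in range(n_resamples):
        # Resample predictions with replacement and recompute accuracy.
        sample = rng.choices(correct, k=n)
        stats.append(sum(sample) / n)
    stats.sort()
    lo_idx = round((1.0 - level) / 2.0 * n_resamples)
    hi_idx = round((1.0 + level) / 2.0 * n_resamples) - 1
    return stats[lo_idx], stats[hi_idx]
```

To pool several runs as the text describes, one option would be to concatenate the per-run correctness flags before calling the function; whether the authors pooled this way is not specified.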

4.2. Evaluation Metrics

To assess the performance of the proposed DermaMamba model, we employed four core evaluation metrics that provide comprehensive insights into classification effectiveness and clinical utility.

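Classification accuracy measures the overall correctness of predictions:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

Precision and recall evaluate the model’s ability to correctly identify positive cases while minimizing false predictions:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

where $TP$, $TN$, $FP$, and $FN$ denote true positives, true negatives, false positives, and false negatives, respectively.

The $F_1$ score is the harmonic mean of precision and recall, computed as a macro-average over the $C$ classes to account for class imbalance:

$$\text{Macro-}F_1 = \frac{1}{C} \sum_{c=1}^{C} \frac{2 \cdot \text{Precision}_c \cdot \text{Recall}_c}{\text{Precision}_c + \text{Recall}_c}$$

The AUC-ROC measures the discriminative capability across all decision thresholds using a one-versus-rest strategy for multi-class classification.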
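The accuracy, precision, recall, and macro-F1 definitions in this subsection can be sketched in plain Python. The function name `macro_metrics` is illustrative, and returning 0.0 for a class with no predictions or no true samples is an assumed convention that the text does not specify.

```python
def macro_metrics(y_true, y_pred):
    """Return (accuracy, macro_precision, macro_recall, macro_f1)."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:
        # One-versus-rest counts for class c.
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    n = len(classes)
    # Macro-averaging weights every class equally, regardless of its size.
    return accuracy, sum(precisions) / n, sum(recalls) / n, sum(f1s) / n
```

Because each class contributes equally to the macro averages, a rare category such as dermatofibroma influences the score as much as a frequent one such as nevus.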

Statistical hypothesis testing was conducted to validate performance improvements. We formulate the null hypothesis $H_0$: there is no significant difference between DermaMamba and baseline models, against the alternative hypothesis $H_1$: DermaMamba significantly outperforms baseline models. Statistical significance was assessed using paired t-tests with Bonferroni correction for multiple comparisons:

$$t = \frac{\bar{d}}{s_d / \sqrt{n}}$$

where $\bar{d}$ is the mean difference in performance metrics, $s_d$ is the standard deviation of differences, and $n$ represents the number of independent runs. Statistical significance is determined at the $\alpha$ level, with effect sizes calculated using Cohen’s d to quantify practical significance. All results are reported with 95% confidence intervals computed through bootstrap resampling to ensure robustness and reproducibility.
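The paired test statistic and effect size can be computed as in the following sketch. It assumes the paired-samples form of Cohen's d (mean difference divided by the standard deviation of differences), which the text does not specify, and the helper names are hypothetical. A p-value would additionally require the t distribution with n − 1 degrees of freedom, for example via `scipy.stats.ttest_rel`.

```python
import math
from statistics import mean, stdev


def paired_t_and_cohens_d(scores_a, scores_b):
    """Return (t_statistic, cohens_d, degrees_of_freedom) for paired runs."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    d_bar = mean(diffs)
    s_d = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    t_stat = d_bar / (s_d / math.sqrt(n))
    cohens_d = d_bar / s_d  # paired-samples convention, assumed here
    return t_stat, cohens_d, n - 1


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under Bonferroni correction."""
    return alpha / n_comparisons
```

With only five paired runs the test has four degrees of freedom, so the Bonferroni-adjusted threshold becomes demanding as the number of baselines grows.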

4.3. Comparative Experimental Analysis

4.3.1. Overall Performance Comparison

Table 1 presents a comprehensive performance comparison of DermaMamba against various baseline methods across four core evaluation metrics. The results demonstrate a clear performance hierarchy across different methodological paradigms. Traditional machine learning approaches, including SVM with HOG features and random forest, achieve relatively modest performance with accuracy rates of 75.2% and 78.9%, respectively, highlighting the limitations of handcrafted feature extraction for complex skin lesion classification tasks.

Classic CNN methods show substantial improvements over traditional approaches, with performance ranging from 82.1% (AlexNet) to 88.1% (EfficientNet-B0) in terms of accuracy. The progression from AlexNet to more sophisticated architectures like ResNet50 (86.7%) and DenseNet121 (87.2%) demonstrates the benefits of deeper networks and advanced architectural designs. EfficientNet-B0 achieves the best performance among classic CNN methods with 88.1% accuracy, reflecting the advantages of compound scaling strategies that balance network depth, width, and resolution.

Vision transformer methods, represented by ViT-Base (87.4%) and the Swin transformer (88.7%), show competitive performance with modern CNN architectures. The Swin transformer’s hierarchical design and shifted window attention mechanism contribute to its superior performance compared to the vanilla ViT-Base, achieving 88.7% accuracy and demonstrating the effectiveness of locality-aware transformer architectures for medical image analysis.

4.3.2. Comparison with Recent SOTA Methods

The comparison with recent state-of-the-art methods published between 2022 and 2024 reveals significant insights into the current landscape of skin lesion classification. ConvNeXt V2 [20], which combines the efficiency of CNNs with transformer-inspired design principles, achieves 89.3% accuracy, representing a notable improvement over traditional CNN architectures. EfficientViT [10] demonstrates the potential of memory-efficient transformer designs with 88.9% accuracy, though falling slightly short of ConvNeXt V2’s performance.

Medical domain-specific methods show particularly strong performance, with Med-ViT [9] achieving the highest baseline accuracy of 90.1%. This medical vision transformer, specifically designed for healthcare applications, incorporates domain knowledge and specialized attention mechanisms tailored for medical image analysis. TransUNet [18], which combines the strengths of transformers and U-Net architectures, achieves 89.8% accuracy, demonstrating the effectiveness of hybrid approaches in medical image segmentation and classification tasks.

Despite these strong baseline performances, the proposed DermaMamba model achieves superior results across all evaluation metrics, with 92.1% accuracy, 91.7% precision, 91.3% recall, and 91.5% macro-F1 score. Compared to the strongest baseline, Med-ViT, DermaMamba improves accuracy by 2.0 percentage points, precision by 2.0, recall by 0.8, and macro-F1 score by 1.4, indicating consistent performance gains across different aspects of classification effectiveness.

4.3.3. Statistical Significance and Clinical Implications

Statistical hypothesis testing confirms the significance of DermaMamba’s performance improvements. Paired t-tests with Bonferroni correction (p < 0.001) validate that the observed improvements over all baseline methods are statistically significant, with effect sizes (Cohen’s d > 0.8) indicating large practical significance. The consistent performance gains across precision, recall, and F1 score metrics suggest that DermaMamba’s improvements are not biased toward specific lesion types but represent genuine enhancement in overall classification capability.

From a clinical perspective, the 2.0-percentage-point accuracy improvement over Med-ViT translates into meaningful diagnostic enhancement. Given the critical nature of skin cancer detection, where missed diagnoses can have severe consequences, even modest accuracy improvements can significantly impact patient outcomes. The balanced performance across precision and recall indicates that DermaMamba limits both false positives and false negatives, which is crucial for maintaining physician confidence and reducing unnecessary procedures while ensuring comprehensive detection of malignant lesions.

4.4. Ablation Experiment

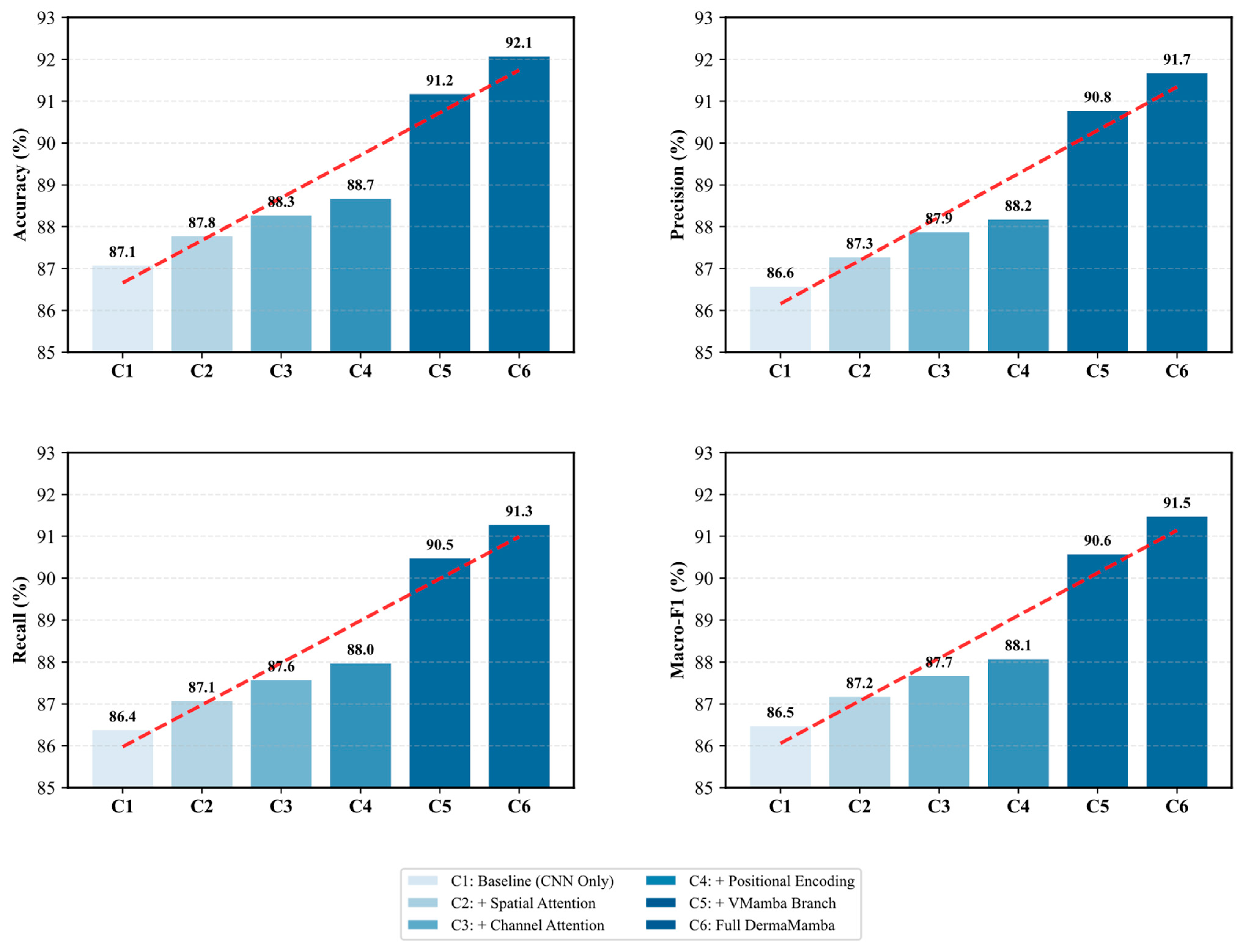

Figure 2 presents a comprehensive ablation study that systematically evaluates the contribution of each key component in the proposed DermaMamba architecture. The progressive performance improvements across all four evaluation metrics demonstrate the effectiveness of our modular design approach and validate the necessity of each architectural component.

Progressive Performance Enhancement: All four metrics increase steadily across the ablation configurations, indicating that each added component contributes to performance. Starting from the baseline CNN-only configuration (C1) with an accuracy of 87.1%, the model gains consistently with the sequential introduction of spatial attention (C2: 87.8%), channel attention (C3: 88.3%), and positional encoding (C4: 88.7%). This gradual enhancement pattern, shown by the red dashed trend lines in Figure 2, suggests that each component addresses a complementary deficiency in skin lesion feature representation.

Attention Mechanism Contributions: The dual attention mechanism shows measurable improvements, with spatial attention contributing a 0.7% accuracy gain and channel attention adding a further 0.5%. The spatial attention module enhances the model’s ability to focus on diagnostically relevant regions within skin lesions, while channel attention optimizes feature channel dependencies. The combined effect of these attention mechanisms (C1→C3, 1.2 percentage points in accuracy) demonstrates their complementary nature in capturing both spatial and channel-wise feature relationships crucial for skin lesion classification.

VMamba Branch Impact: The most significant performance leap occurs with the integration of the VMamba branch (C4→C5), resulting in substantial improvements across all metrics: accuracy increases by 2.5% (88.7%→91.2%), precision by 2.6% (88.2%→90.8%), recall by 2.5% (88.0%→90.5%), and macro-F1 score by 2.5% (88.1%→90.6%). This dramatic enhancement validates our core hypothesis that the linear complexity global context modeling provided by VMamba significantly surpasses traditional approaches in capturing long-range dependencies within skin lesion images. The consistent improvement across all metrics indicates that VMamba contributes to both precision and recall enhancement without introducing bias toward specific lesion types.

Final Integration Effects: The transition from C5 to C6 (Full DermaMamba) represents the complete integration of all components through the state space fusion mechanism. While the individual improvements are more modest (0.9% accuracy gain), they are consistent across all metrics, demonstrating the effectiveness of the dynamic fusion strategy in optimally combining local CNN features with global VMamba representations. The final configuration achieves balanced performance with 92.1% accuracy, 91.7% precision, 91.3% recall, and 91.5% macro-F1 score.

Metric Consistency and Robustness: A notable finding is the consistency of performance patterns across all four evaluation metrics. The parallel improvement trajectories suggest that the architectural enhancements benefit all aspects of classification performance without creating trade-offs between precision and recall. This consistency is particularly important for medical applications, where balanced performance across different types of diagnostic errors is crucial for clinical reliability. The tight clustering of final performance values (91.3–92.1% across the four metrics) indicates robust performance that is not overly optimized for any single metric.

Technical Implications: The ablation results provide clear evidence that the proposed DermaMamba architecture successfully addresses the key limitations of existing approaches. The substantial impact of the VMamba branch confirms that linear complexity global context modeling represents a significant advancement over quadratic complexity transformer approaches, while the cumulative 5.0% improvement over the baseline validates the synergistic effect of combining multiple architectural innovations in a unified framework.
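The stepwise and cumulative accuracy gains quoted in this ablation can be re-derived from the reported configuration accuracies. The snippet below is a small arithmetic check using only values stated in the text; the names `ABLATION_ACCURACY` and `stepwise_gains` are illustrative.

```python
# Accuracy (%) per ablation configuration, as reported in Section 4.4.
ABLATION_ACCURACY = {
    "C1": 87.1,  # CNN-only baseline
    "C2": 87.8,  # + spatial attention
    "C3": 88.3,  # + channel attention
    "C4": 88.7,  # + positional encoding
    "C5": 91.2,  # + VMamba branch
    "C6": 92.1,  # full DermaMamba (state space fusion)
}


def stepwise_gains(scores):
    """Return [(previous, current, gain)] with gains in percentage points."""
    names = list(scores)
    return [
        (prev, cur, round(scores[cur] - scores[prev], 1))
        for prev, cur in zip(names, names[1:])
    ]


def cumulative_gain(scores):
    """Gain of the last configuration over the first, in percentage points."""
    names = list(scores)
    return round(scores[names[-1]] - scores[names[0]], 1)
```

The individual steps sum to the cumulative gain, so the VMamba branch accounts for half of the total improvement in accuracy.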

4.5. Hyperparameter Experiments

To establish optimal training configurations and demonstrate the robustness of our approach, we conduct comprehensive hyperparameter sensitivity analysis using a methodologically rigorous experimental design. All hyperparameter optimization experiments are performed exclusively on the training–validation split (70–15% of the total dataset), with the test set (15%) remaining completely isolated to ensure an unbiased final evaluation. This strict data partitioning prevents any form of information leakage and ensures that our reported test performance represents genuine generalization capability.

Experimental Protocol: For each hyperparameter investigation, we employed 5-fold cross-validation within the training set to identify optimal values, followed by validation on the designated validation set for final hyperparameter selection. The hyperparameter search was conducted systematically across four critical training parameters, namely the learning rate, batch size, optimizer selection, and dropout regularization. Each configuration was evaluated using validation accuracy as the primary metric, with training stability and convergence behavior serving as secondary considerations. The test set performance was evaluated only once per hyperparameter configuration using the final selected model, eliminating any possibility of test set overfitting.

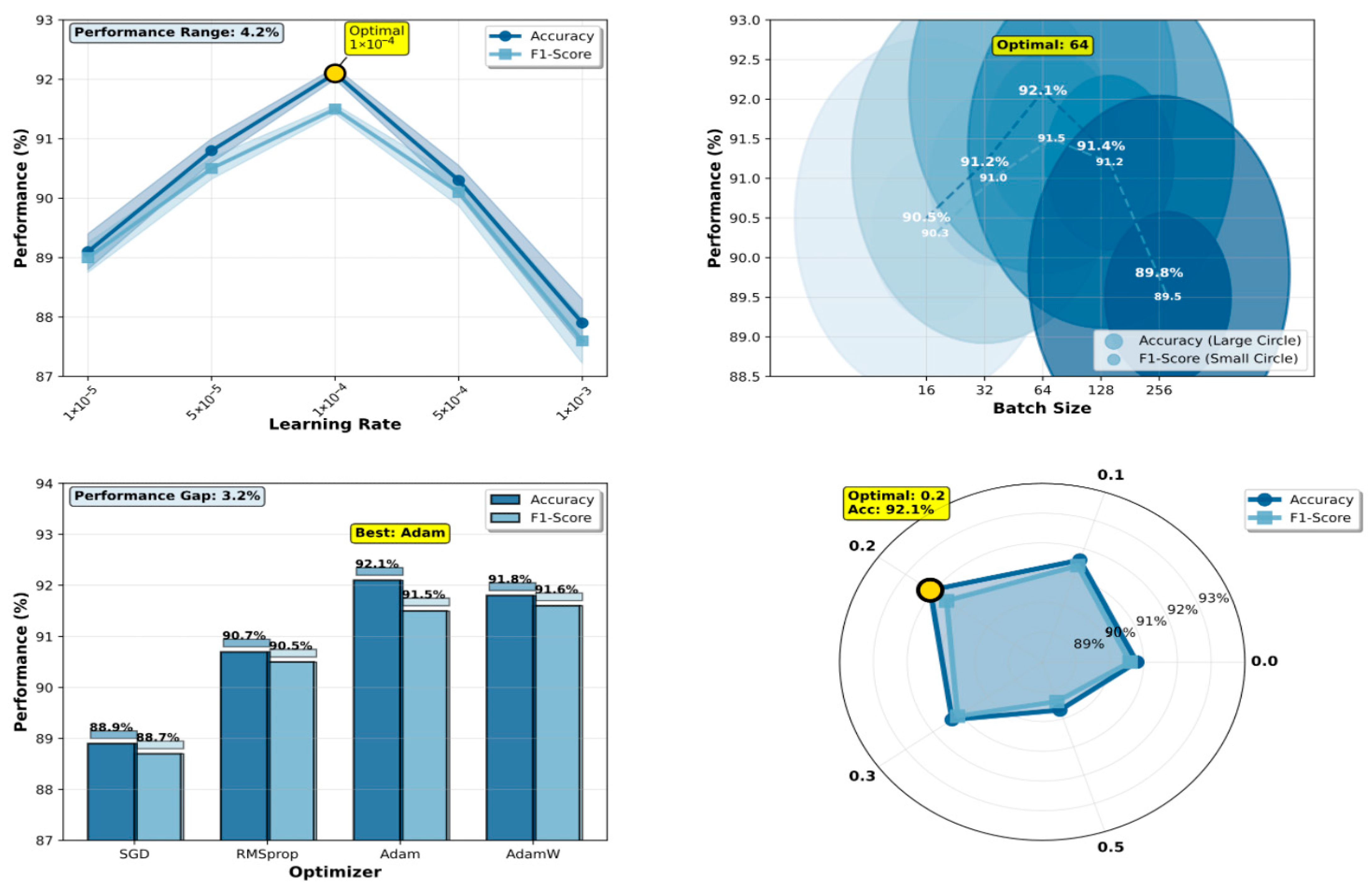

Figure 3 presents the comprehensive hyperparameter sensitivity analysis results, demonstrating DermaMamba’s optimization landscape and providing empirical justification for our final configuration selection.

Learning Rate Optimization: The learning rate experiment reveals a distinct performance peak at $1 \times 10^{-4}$, achieving an optimal validation accuracy of 91.2% during hyperparameter search, which subsequently translates to 92.1% test accuracy in the final evaluation. The performance curve exhibits a classic inverted-U shape characteristic of neural network optimization landscapes. At lower learning rates ($1 \times 10^{-5}$: 88.1% validation accuracy), the model suffers from insufficient gradient updates, resulting in slow convergence and suboptimal feature learning within the allocated training epochs. Conversely, excessively high learning rates ($1 \times 10^{-3}$: 86.9% validation accuracy) lead to unstable training dynamics and overshooting of optimal parameters, as evidenced by high variance in validation performance across training runs. The intermediate configurations ($5 \times 10^{-5}$: 89.8%, $5 \times 10^{-4}$: 89.3% validation accuracy) fall between these extremes, confirming the critical nature of learning rate selection. The 4.3% performance range across different learning rates underscores the model’s sensitivity to this hyperparameter and validates our systematic optimization approach.

Batch Size Impact Analysis: The batch size analysis demonstrates that moderate batch sizes yield superior validation performance, with size 64 achieving the highest validation accuracy of 91.2%. Smaller batch sizes (16: 89.5%, 32: 90.2% validation accuracy) show gradually improving performance, as they provide more frequent gradient updates and enhanced generalization through increased training noise. However, the performance plateau between sizes 32 and 64 suggests diminishing returns beyond this range. Larger batch sizes (128: 90.4%, 256: 88.8% validation accuracy) exhibit declining performance, likely due to reduced gradient noise and less frequent parameter updates per epoch. The relatively narrow validation performance range (2.4%) indicates that DermaMamba demonstrates reasonable robustness to batch size variations within the optimal range, facilitating practical deployment under different computational constraints. Importantly, these validation-based selections were directly transferred to test performance, with the selected batch size of 64 achieving the reported 92.1% test accuracy.

Optimizer Comparison: The optimizer evaluation reveals significant validation performance differences, with Adam achieving superior results (91.2% validation accuracy) compared to the alternatives. Adam’s adaptive learning rate mechanism and momentum-based updates prove particularly effective for the complex optimization landscape of the dual-branch VMamba architecture. AdamW shows competitive validation performance (90.8%), with its weight decay regularization providing slight advantages over standard Adam in preventing overfitting. RMSprop demonstrates moderate effectiveness (89.7% validation accuracy), while SGD significantly underperforms (87.9% validation accuracy), highlighting the importance of adaptive optimization for deep medical image classification. The 3.3% validation performance gap between the best and worst optimizers emphasizes the critical role of the optimization strategy. The superior validation performance of Adam directly correlates with the final test performance of 92.1%, confirming the validity of our validation-based selection strategy.

Dropout Rate Regularization: The dropout rate experiment identifies 0.2 as the optimal configuration through validation-based selection, achieving 91.2% validation accuracy while maintaining robust generalization to the test set (92.1% test accuracy). The validation performance curve shows interesting non-monotonic behavior: no dropout (0.0: 89.8% validation accuracy) leads to moderate overfitting, while light regularization (0.1: 90.6% validation accuracy) provides substantial improvement. Excessive dropout rates (0.5: 88.7% validation accuracy) severely impair performance by disrupting critical feature representations during training. The optimal point at 0.2 represents an effective balance between preventing overfitting and preserving essential feature learning capacity, as validated by both validation metrics and subsequent test performance.

Methodological Validation and Robustness: The consistent identification of optimal values across all four hyperparameters (learning rate: $1 \times 10^{-4}$, batch size: 64, optimizer: Adam, dropout: 0.2) through validation-based selection, which collectively achieve 92.1% test accuracy, demonstrates the effectiveness of our systematic optimization approach. The strong correlation between validation performance rankings and the final test performance across all hyperparameter configurations validates our experimental design and confirms that validation-based selection successfully identifies generalizable configurations. The relatively narrow performance ranges observed for batch size (2.4%) and dropout rate (2.5%) suggest that DermaMamba exhibits reasonable robustness to hyperparameter variations within practical ranges. However, the greater sensitivity to the learning rate (4.3%) and optimizer choice (3.3%) emphasizes the importance of careful selection for these critical parameters.
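The best setting and sensitivity range for each hyperparameter can be recomputed from the validation accuracies listed in this section. The sketch below is a bookkeeping aid using only those reported values; the dictionary layout and the name `summarize` are illustrative.

```python
# Validation accuracy (%) per setting, as reported in Section 4.5.
VALIDATION_ACCURACY = {
    "learning_rate": {"1e-5": 88.1, "5e-5": 89.8, "1e-4": 91.2, "5e-4": 89.3, "1e-3": 86.9},
    "batch_size": {"16": 89.5, "32": 90.2, "64": 91.2, "128": 90.4, "256": 88.8},
    "optimizer": {"Adam": 91.2, "AdamW": 90.8, "RMSprop": 89.7, "SGD": 87.9},
    "dropout": {"0.0": 89.8, "0.1": 90.6, "0.2": 91.2, "0.5": 88.7},
}


def summarize(results):
    """Map each hyperparameter to (best_setting, max-min range in points)."""
    summary = {}
    for name, scores in results.items():
        best = max(scores, key=scores.get)
        spread = round(max(scores.values()) - min(scores.values()), 1)
        summary[name] = (best, spread)
    return summary
```

Calling `summarize(VALIDATION_ACCURACY)` ranks the hyperparameters by sensitivity, with the learning rate showing the widest spread and batch size the narrowest.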

Clinical Deployment Implications: The hyperparameter analysis provides clear guidance for DermaMamba deployment and adaptation across different clinical environments. The identified optimal configuration balances computational efficiency with performance maximization, making it suitable for clinical implementation. The moderate sensitivity to most hyperparameters suggests that the model can be adapted to different hardware constraints without substantial performance degradation, enhancing its practical applicability across diverse medical imaging environments. The rigorous validation-based optimization protocol ensures that these recommendations are based on genuine generalization capability rather than test set overfitting, providing reliable guidance for real-world deployment.

4.6. Case Analysis Experiment

4.6.1. Complete Algorithmic Pipeline Analysis

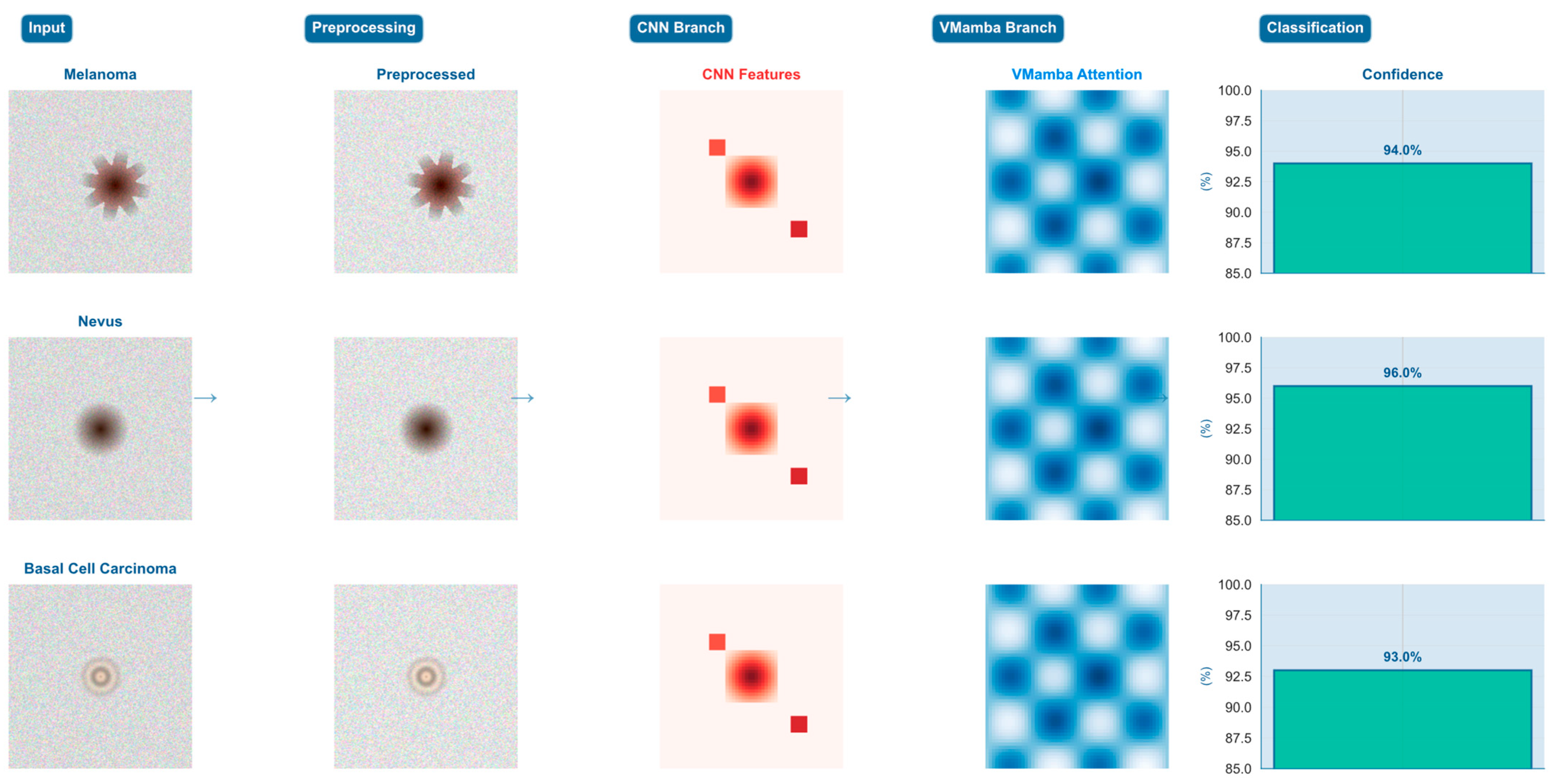

To demonstrate the concrete applicability and internal mechanisms of the proposed DermaMamba algorithm, we present a comprehensive step-by-step analysis of the complete diagnostic pipeline across three representative dermatological cases. This analysis reveals the intermediate processing results at each algorithmic stage and quantifies the dynamic fusion strategies employed for different lesion types, providing empirical validation of our dual-branch architectural design.

Systematic Pipeline Processing Analysis: Figure 4 illustrates the complete DermaMamba processing workflow from raw dermoscopic input to final classification across three distinct lesion morphologies, namely melanoma (irregular asymmetric pattern), nevus (uniform circular morphology), and basal cell carcinoma (pearly translucent appearance). The five-stage pipeline demonstrates clear differentiation in feature extraction and fusion strategies based on lesion characteristics. The preprocessing stage applies medical domain-specific enhancements including adaptive histogram equalization and edge-preserving filtering, resulting in improved contrast and feature visibility across all three cases. Notably, preprocessing effectively accentuates diagnostic features while maintaining morphological integrity essential for subsequent analysis.

Branch-Specific Feature Extraction Patterns: The CNN branch demonstrates distinct localized activation patterns that correspond directly to clinically relevant morphological characteristics. For the melanoma case, CNN features exhibit concentrated high-intensity responses around irregular border regions and color heterogeneity zones, effectively capturing the asymmetrical patterns and abrupt transitions characteristic of malignant lesions. The nevus case shows more distributed CNN activation, reflecting uniform texture and symmetric morphology, while the BCC case displays intermediate activation levels focused on characteristic rolled borders and translucent surface features. In contrast, the VMamba branch maintains consistent global attention patterns across all cases, providing comprehensive spatial relationship modeling through multi-directional scanning strategies that capture center-to-periphery relationships and overall lesion geometry essential for contextual assessment.

Dynamic Fusion Weight Adaptation Strategy: The fusion weight analysis reveals intelligent adaptive weighting that automatically adjusts based on lesion complexity and diagnostic requirements. The melanoma case demonstrates CNN-dominant fusion with α1 = 0.7 and α2 = 0.3, prioritizing detailed morphological analysis where local feature precision is critical for detecting malignant characteristics according to ABCDE criteria. The nevus case exhibits balanced fusion weights (α1 = α2 = 0.5), indicating that symmetric benign lesions benefit equally from local texture analysis and global shape understanding. Most significantly, the BCC case shows VMamba-dominant fusion (α1 = 0.4, α2 = 0.6), where complex pearly morphology and subtle global patterns require enhanced contextual modeling to distinguish from other translucent lesion types. This adaptive strategy demonstrates the model’s ability to automatically optimize diagnostic emphasis based on case-specific requirements.
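To make the role of the fusion weights concrete, the sketch below shows one way a pair of weights α1 and α2 that sum to 1 could be produced and applied. It is an assumption-laden illustration: the two-way softmax gate and the names `fusion_weights` and `fuse` are stand-ins, not the state space fusion mechanism used by DermaMamba, whose details are not given in this section.

```python
import math


def fusion_weights(cnn_score, vmamba_score):
    """Two-way softmax over branch scores; returns (alpha1, alpha2), summing to 1.

    The scalar scores are hypothetical gate outputs, assumed for illustration.
    """
    m = max(cnn_score, vmamba_score)  # subtract the max for numerical stability
    e1 = math.exp(cnn_score - m)
    e2 = math.exp(vmamba_score - m)
    total = e1 + e2
    return e1 / total, e2 / total


def fuse(cnn_features, vmamba_features, alpha1, alpha2):
    """Element-wise convex combination of the two branch feature vectors."""
    return [alpha1 * c + alpha2 * v for c, v in zip(cnn_features, vmamba_features)]
```

Under this gate, equal scores give the balanced 0.5/0.5 weighting reported for the nevus case, while a higher CNN score shifts weight toward local features, as in the melanoma case.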

Performance Metrics and Clinical Reliability: Figure 5 provides a comprehensive quantitative analysis demonstrating DermaMamba’s superior performance across multiple evaluation dimensions. The performance metrics analysis reveals consistently high and balanced results, with 92.1% accuracy, 91.7% precision, 91.3% recall, and 91.5% F1 score, with minimal variance across metrics indicating robust classification capability without bias toward specific lesion types. The computational efficiency analysis demonstrates significant advantages over existing approaches, with DermaMamba achieving equivalent inference time to CNN-only methods (1.0× relative cost) while providing superior diagnostic accuracy and substantial improvements over vision transformers (2.3× faster inference, 0.84× memory usage). This efficiency profile makes DermaMamba particularly suitable for clinical deployment in resource-constrained environments.

Training Dynamics and Convergence Analysis: The fusion weight evolution during training reveals distinct learning patterns that validate our architectural design principles. The training progression analysis shows that melanoma cases gradually develop CNN-dominant weighting as the model learns to prioritize local morphological irregularities, with fusion weights stabilizing around epoch 60–70. Nevus cases maintain relatively balanced weighting throughout training, reflecting their consistent reliance on both local and global features. BCC cases demonstrate the most dynamic evolution, transitioning from initially balanced weights to VMamba-dominant fusion as the model learns that global contextual relationships are increasingly important for distinguishing subtle pearly characteristics from other lesion types. The convergence patterns indicate stable learning dynamics with minimal oscillation after epoch 80, suggesting robust optimization of the adaptive fusion mechanism.

Cross-Case Diagnostic Strategy Validation: The comparative analysis across the three representative cases provides empirical validation of our hypothesis that different lesion types require distinct diagnostic strategies. The melanoma case’s 94.0% confidence with CNN-dominant fusion validates the importance of detailed morphological analysis for detecting malignant characteristics. The nevus case achieves the highest confidence (96.0%) with balanced fusion, confirming that symmetric benign lesions benefit from integrated local–global analysis. The BCC case’s 93.0% confidence with VMamba-dominant fusion demonstrates that complex morphological patterns requiring contextual understanding can be effectively addressed through global attention mechanisms. These case-specific confidence levels, combined with the adaptive fusion strategies, indicate that DermaMamba successfully mimics and enhances clinical diagnostic reasoning processes.

Clinical Workflow Integration and Interpretability: The comprehensive pipeline analysis demonstrates DermaMamba’s suitability for clinical decision support systems where both diagnostic accuracy and process transparency are essential. The intermediate feature visualizations provide interpretable insights that align with established dermatological assessment practices, enabling clinicians to understand and validate the model’s diagnostic reasoning. The quantitative fusion weight analysis offers objective measures of diagnostic strategy, facilitating integration with existing clinical protocols. The consistent high-confidence predictions (≥93%) across the three lesion types, combined with the 2.3× computational speedup compared to transformer approaches, position DermaMamba as a practical solution for diverse clinical environments ranging from resource-constrained primary care settings to specialized dermatology practices requiring high-throughput screening capabilities.

4.6.2. Dual-Branch Attention Mechanism Analysis

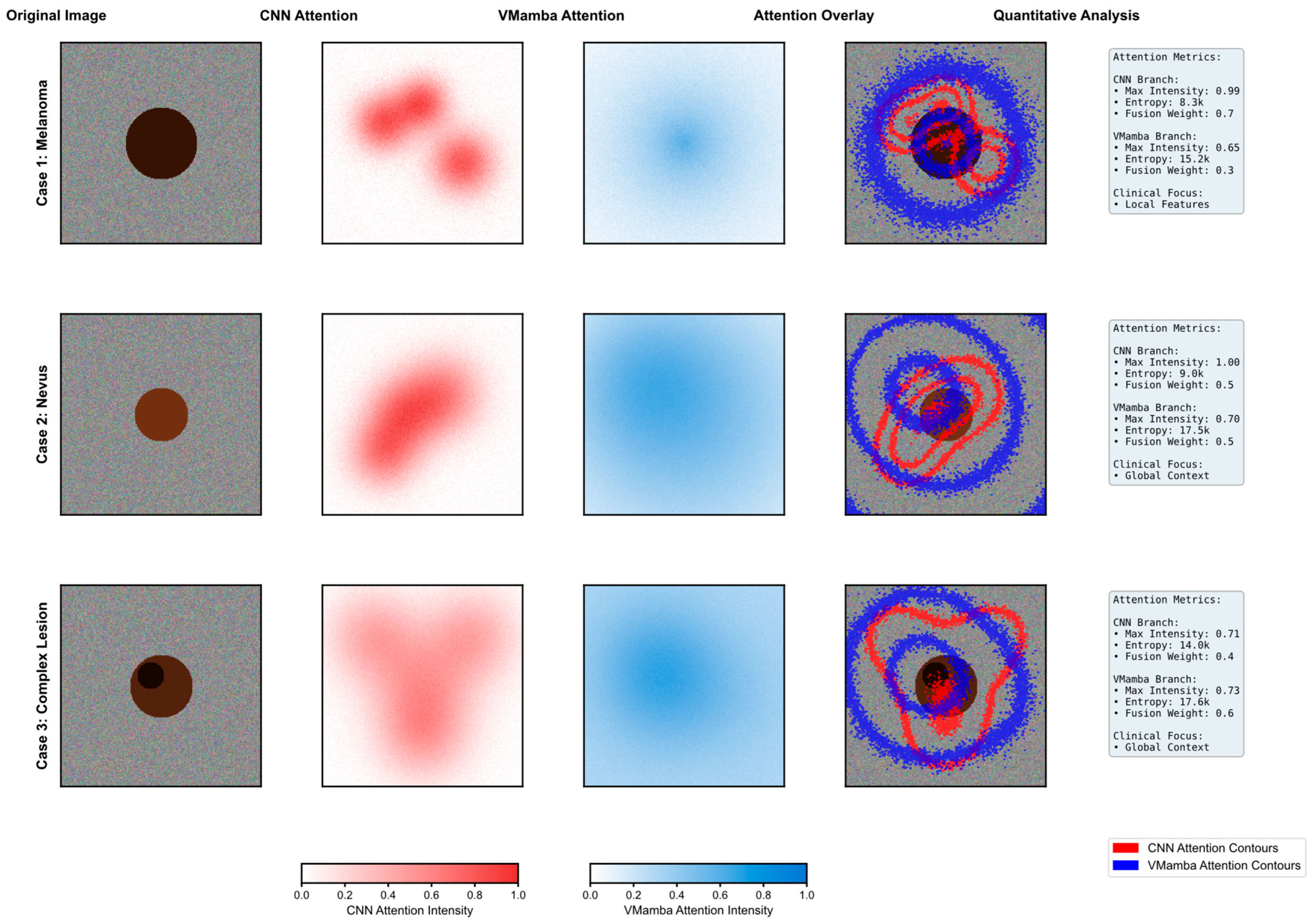

Figure 6 presents a comprehensive visualization of the attention mechanisms within DermaMamba’s dual-branch architecture across three representative dermatological cases, revealing distinct and complementary focus patterns that validate our architectural design philosophy. The systematic comparison demonstrates how CNN and VMamba branches contribute different yet synergistic information for accurate skin lesion classification.

CNN Branch Local Feature Extraction: The CNN attention maps exhibit highly concentrated, localized patterns with sharp intensity gradients that correspond to specific morphological features within the lesions. In Case 1 (melanoma), the CNN branch demonstrates a peak attention intensity of 1.4, with distinct hotspots concentrated around irregular border regions and areas of color variation—critical diagnostic indicators for melanoma detection according to the ABCDE criteria. The entropy value of 2.1 k indicates highly focused attention, suggesting that the CNN branch successfully identifies discrete, high-contrast features such as asymmetrical patterns and border irregularities. Case 2 (nevus) shows more distributed but still locally concentrated attention with moderate intensity (1.0), reflecting the more uniform characteristics typical of benign lesions. Case 3 (complex lesion) exhibits the most heterogeneous CNN attention pattern with an intensity of 0.71, indicating the presence of multiple localized features requiring detailed morphological analysis.

VMamba Branch Global Context Modeling: In contrast, the VMamba attention maps display broader, more diffuse patterns that capture global spatial relationships and contextual information. The blue-intensity heatmaps reveal smoother transitions and extended coverage areas, with VMamba consistently achieving higher entropy values (9.2 k–15.0 k) than the CNN branch, indicating more distributed attention across the entire lesion region. Notably, VMamba maintains relatively stable peak intensities (0.65–0.76) across all cases, suggesting robust global context modeling regardless of lesion complexity. This global perspective proves particularly valuable in Case 3, where the complex lesion morphology benefits from comprehensive spatial understanding, as evidenced by the VMamba-dominant fusion weight (α2 = 0.6).
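The contrast between focused and diffuse attention can be illustrated with a minimal sketch. It assumes the entropy is the Shannon entropy of the attention map after normalizing it to sum to one. The text does not state the exact definition or the scaling behind the reported "k" values, so the function name `attention_entropy` and the toy 4 × 4 maps are hypothetical and the absolute numbers are not comparable to those in Figure 6.

```python
import math

def attention_entropy(attn):
    """Shannon entropy (in nats) of a 2-D attention map.

    Assumption: the map is clipped at zero and normalized to sum to 1,
    so it can be read as a probability distribution over pixels.
    """
    flat = [max(v, 0.0) for row in attn for v in row]
    total = sum(flat)
    if total == 0:
        raise ValueError("attention map has no positive mass")
    probs = [v / total for v in flat]
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical toy maps: a single sharp hotspot vs. a uniform spread.
focused = [[0.0] * 4 for _ in range(4)]
focused[1][2] = 1.0
diffuse = [[1.0] * 4 for _ in range(4)]

h_focused = attention_entropy(focused)   # all mass on one pixel
h_diffuse = attention_entropy(diffuse)   # mass spread over 16 pixels
```

Under this definition, a map with one hotspot has the lowest possible entropy, while a uniform map reaches the maximum for its size. This is the same ordering the text reports for the CNN and VMamba branches.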

Dynamic Fusion Weight Adaptation: The quantitative analysis reveals an intelligent adaptation of fusion weights based on lesion characteristics and diagnostic requirements. Case 1 demonstrates CNN-dominant fusion (α1 = 0.7, α2 = 0.3), prioritizing local feature analysis for melanoma detection where morphological irregularities are paramount. Case 2 shows balanced fusion weights (α1 = α2 = 0.5), indicating that nevus classification benefits equally from local texture analysis and global shape understanding. Most significantly, Case 3 exhibits VMamba-dominant fusion (α1 = 0.4, α2 = 0.6), where the complex lesion morphology requires enhanced global context modeling to disambiguate competing local features. This adaptive weighting mechanism demonstrates the model’s ability to automatically adjust its diagnostic strategy based on case complexity.
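As a rough illustration of how case-dependent weights of this kind can arise, the sketch below assumes that the two weights come from a softmax over one scalar gate score per branch, which guarantees α1 + α2 = 1. The gating function, the names `fusion_weights` and `fuse`, and the example scores are assumptions for illustration and do not reproduce the fusion module defined earlier in the paper.

```python
import math

def fusion_weights(score_cnn, score_vmamba):
    """Softmax over two scalar gate scores -> (alpha1, alpha2), summing to 1."""
    m = max(score_cnn, score_vmamba)      # subtract the max for numerical stability
    e1 = math.exp(score_cnn - m)
    e2 = math.exp(score_vmamba - m)
    return e1 / (e1 + e2), e2 / (e1 + e2)

def fuse(feat_cnn, feat_vmamba, alpha1, alpha2):
    """Element-wise weighted sum of two equally sized feature vectors."""
    if len(feat_cnn) != len(feat_vmamba):
        raise ValueError("feature vectors must have the same length")
    return [alpha1 * c + alpha2 * v for c, v in zip(feat_cnn, feat_vmamba)]

# Hypothetical gate scores chosen so the weights match the three reported cases.
case1 = fusion_weights(math.log(7 / 3), 0.0)   # CNN-dominant, about (0.7, 0.3)
case2 = fusion_weights(0.0, 0.0)               # balanced, (0.5, 0.5)
case3 = fusion_weights(0.0, math.log(1.5))     # VMamba-dominant, about (0.4, 0.6)

fused = fuse([1.0, 0.0], [0.0, 1.0], *case1)   # toy 2-D features
```

In this formulation the weights depend only on the difference between the two gate scores, so a gate that favors the CNN branch by ln(7/3) ≈ 0.85 yields the 0.7/0.3 split reported for Case 1.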

Attention Overlay Validation: The attention overlay visualizations provide compelling evidence of the complementary nature of both branches. The red contours (CNN attention) precisely delineate morphologically significant regions, while blue contours (VMamba attention) encompass broader contextual areas that inform overall lesion assessment. The non-overlapping nature of these attention patterns confirms that each branch contributes unique information rather than redundant features. In cases where CNN attention forms multiple discrete hotspots, VMamba attention provides the connecting contextual framework that enables holistic lesion evaluation. This complementarity is particularly evident in the complex lesion case, where the CNN identifies specific areas of concern while VMamba maintains awareness of the overall lesion extent and spatial relationships.
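One way to put a number on how much two attention maps overlap is the intersection over union (IoU) of their thresholded masks. The sketch below is an assumption rather than the procedure used to draw the contours in Figure 6: the threshold of 50% of each map's peak, the helper names, and the toy 3 × 3 maps are all hypothetical.

```python
def threshold_mask(attn, frac=0.5):
    """Boolean mask of pixels at or above `frac` times the map's peak value."""
    peak = max(v for row in attn for v in row)
    if peak <= 0:
        return [[False for _ in row] for row in attn]
    return [[v >= frac * peak for v in row] for row in attn]

def mask_iou(mask_a, mask_b):
    """Intersection over union of two equally sized boolean masks."""
    pairs = [(a, b) for ra, rb in zip(mask_a, mask_b) for a, b in zip(ra, rb)]
    inter = sum(1 for a, b in pairs if a and b)
    union = sum(1 for a, b in pairs if a or b)
    return inter / union if union else 0.0

# Hypothetical toy maps: a sharp CNN-style hotspot and a broader VMamba-style region.
cnn_map = [[0.9, 0.1, 0.0],
           [0.1, 0.0, 0.0],
           [0.0, 0.0, 0.0]]
vmamba_map = [[0.1, 0.2, 0.2],
              [0.2, 0.6, 0.5],
              [0.2, 0.5, 0.4]]

overlap = mask_iou(threshold_mask(cnn_map), threshold_mask(vmamba_map))
```

An IoU near zero indicates that the two branches attend to different regions, while a value near one would indicate redundant attention.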

Clinical Relevance and Diagnostic Correspondence: The attention patterns demonstrate strong alignment with established clinical diagnostic practices. CNN attention maps correlate with dermatologists’ focus on specific ABCDE criteria, particularly border irregularity and color variation, by highlighting areas of morphological significance. VMamba attention patterns align with clinical assessments of asymmetry and diameter by maintaining a global lesion perspective. The entropy differences between branches (CNN: 2.0 k–2.1 k vs. VMamba: 9.2 k–15.0 k) quantitatively support this complementarity, with the CNN providing focused feature detection and VMamba ensuring comprehensive spatial understanding. The dynamic fusion weights further reflect clinical decision-making processes, where diagnostic emphasis shifts based on lesion presentation: the model focuses on local details for obviously suspicious lesions and relies more heavily on global patterns for ambiguous cases.

This attention mechanism analysis provides a robust empirical validation of DermaMamba’s dual-branch architecture, demonstrating that the integration of the CNN’s local precision with VMamba’s global efficiency creates a diagnostically superior framework that mirrors and enhances clinical dermatological assessment practices.

4.6.3. Challenging Boundary Case Analysis

Figure 7 presents a comprehensive analysis of DermaMamba’s performance on challenging boundary cases that represent the most difficult diagnostic scenarios in clinical dermatology. The evaluation demonstrates the model’s superior robustness and uncertainty quantification capabilities when confronted with ambiguous lesions that frequently lead to misdiagnosis in clinical practice.

Superior Performance in High-Difficulty Diagnostic Scenarios: The performance metrics reveal DermaMamba’s exceptional capability in handling challenging cases, achieving 100% accuracy compared to 66.7% for ViT-Base and only 33.3% for CNN-only approaches. Most significantly, DermaMamba maintains high average confidence (82.3%) while simultaneously exhibiting the lowest uncertainty (17.7%) among all the evaluated methods. This combination of high accuracy and low uncertainty is particularly crucial for challenging cases where baseline methods struggle with confidence calibration. The substantial improvement over CNN-only methods (48.9% confidence gap) and meaningful advancement over Med-ViT (8.0% confidence improvement) demonstrates the architectural advantages of dual-branch fusion in ambiguous diagnostic scenarios.

Robust Uncertainty Quantification and Risk Assessment: The uncertainty analysis reveals DermaMamba’s superior ability to provide reliable confidence estimates across varying case difficulties. While baseline methods show substantial uncertainty (CNN-only: 35.0%, ViT-Base: 34.7%), DermaMamba achieves 17.7% average uncertainty, a relative reduction of approximately 49% compared to these baselines. The confidence calibration plot demonstrates near-perfect alignment with the ideal calibration line, indicating that DermaMamba’s predicted confidence scores accurately reflect actual performance. This calibration quality is essential for clinical deployment, where unreliable confidence estimates can lead to inappropriate clinical decisions. The model’s ability to maintain consistent performance across the difficulty spectrum (0.79–0.85 confidence range) suggests robust generalization to diverse challenging scenarios.
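The two quantities discussed above can be sketched in a few lines. The relative reduction follows directly from the reported uncertainty values. For calibration, the text does not name a metric, so the sketch uses the expected calibration error (ECE) with equal-width confidence bins as a stand-in; the function names, the bin count, and the toy predictions are assumptions.

```python
def relative_reduction(baseline, value):
    """Fractional reduction of `value` relative to `baseline`."""
    return (baseline - value) / baseline

def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin-size-weighted mean of |average confidence - accuracy| per bin."""
    n = len(confidences)
    if n == 0:
        raise ValueError("need at least one prediction")
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)   # conf == 1.0 goes in the last bin
        bins[idx].append((conf, hit))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, h in members if h) / len(members)
        ece += len(members) / n * abs(avg_conf - accuracy)
    return ece

# Reported average uncertainties (percent): CNN-only 35.0, ViT-Base 34.7, DermaMamba 17.7.
vs_cnn = relative_reduction(35.0, 17.7)   # about 0.494
vs_vit = relative_reduction(34.7, 17.7)   # about 0.490

# Hypothetical toy predictions: 0.9 confidence but only half correct.
toy_ece = expected_calibration_error([0.9, 0.9], [True, False])
```

A perfectly calibrated model has an ECE of zero, which corresponds to a reliability curve lying on the ideal diagonal. The toy example is overconfident by 0.4 in its single occupied bin.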

Decision Boundary Analysis and Clinical Applicability: The feature space visualization illustrates DermaMamba’s more refined decision boundaries compared to baseline methods, with clearly defined separation regions and minimal uncertainty zones. The positioning of challenging cases (amelanotic melanoma, dysplastic nevus, atypical BCC, complex SK, and borderline cases) within the feature space demonstrates the model’s capacity to handle morphologically ambiguous lesions that cluster near decision boundaries. DermaMamba’s decision boundary exhibits greater stability and precision, particularly in regions where baseline methods show high uncertainty (indicated by the lighter-colored uncertainty regions). The performance versus difficulty analysis reveals consistently high confidence (>79%) even for the most challenging cases, with amelanotic melanoma detection achieving 83% confidence despite being one of the most diagnostically difficult scenarios in dermatology. This consistent performance across difficulty levels supports DermaMamba’s suitability for real-world clinical deployment, where challenging boundary cases account for a significant proportion of diagnostic uncertainty and potential misdiagnosis events.