Performance of ChatGPT-4 as an Auxiliary Tool: Evaluation of Accuracy and Repeatability on Orthodontic Radiology Questions

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design

2.2. Evaluation Criteria

2.3. Statistical Analysis

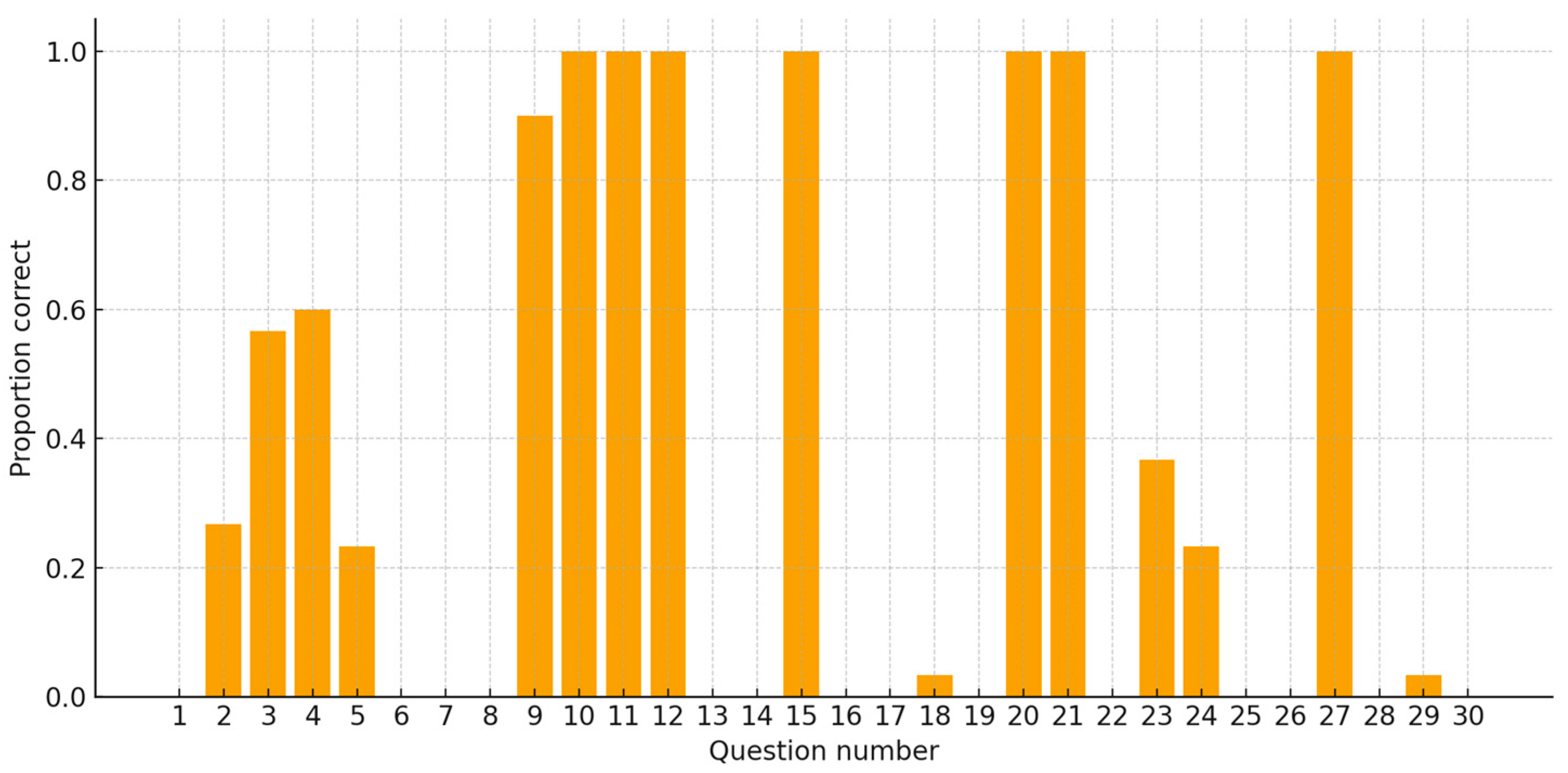

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| LLMs | Large Language Models |
| ML | Machine Learning |
| DL | Deep Learning |
| AutoML | Automated Machine Learning |
| TADs | Temporary Anchorage Devices |


| Question Number | Question Description |
|---|---|
| 1 | What is the function of panoramic radiography in orthodontics? |
| 2 | Do we need to take an orthopantomography before the clinical examination for a correct diagnosis in orthodontics? |
| 3 | Do we need for a correct diagnosis in orthodontics to take a CBCT before the clinical examination? |
| 4 | In what type of radiographs can we be able to assess the state of skeletal maturation of the patient? |
| 5 | What radiological tests would help in the diagnosis and treatment of impacted teeth? |
| 6 | In patients with mixed dentition, is it indicated to routinely take a panoramic radiograph to assess tooth replacement? |
| 7 | What radiographs should be taken at the end of an orthodontic treatment? |
| 8 | Is it indicated to take post-treatment x-rays at the end of orthodontic cases? |
| 9 | If third molars need to be extracted for orthodontic treatment, which radiodiagnostic test is the most indicated prior to extraction? |
| 10 | Is pre-treatment lateral skull teleradiography indicated in patients under 10 years of age with class III? |
| 11 | Is pre-treatment lateral skull teleradiography indicated in patients under 10 years of age with Class II? |
| 12 | Is it appropriate to perform lateral skull radiography in patients between 10 and 18 years of age who are about to start treatment with functional appliances? |
| 13 | Is it indicated to take radiographic records in a patient older than 10 years if the canines have not erupted, but by palpation we can intuit that they are in a favourable position? |
| 14 | Is it considered necessary to take an X-ray in a patient over 14 years of age who has not erupted the second permanent molars? |
| 15 | Is a lateral skull X-ray indicated before starting treatment in patients over 18 years of age with biprotrusion? |
| 16 | How should we position the patient to take a correct lateral skull teleradiography? |
| 17 | How should we prepare the patient to take a correct panoramic radiograph? |
| 18 | How should we instruct the patient to bite in order to take a panoramic X-ray correctly? |
| 19 | In a patient with symptoms associated with temporomandibular dysfunction, is it necessary to take an orthopantomography for a correct diagnosis? |
| 20 | Is it correct to perform a CBCT in patients with temporomandibular dysfunction if we want to know the position of the articular disc? |
| 21 | What test should we perform to assess the condition of the articular disc of the temporomandibular joint? |
| 22 | What should the decision to perform a CBCT on an orthodontic patient be based on? |
| 23 | What are the indications for CBCT in orthodontics? |
| 24 | In which cases is it justified to perform a CBCT on an orthodontic patient? |
| 25 | Can a cephalometric study be performed using the image obtained from a CBCT? |
| 26 | What is the recommended field of view (FOV) if it is necessary to perform a CBCT on an orthodontic patient? |
| 27 | What radiological test could you perform to measure bone volume when placing a mini-screw (TAD)? |
| 28 | Is the use of CBCT routinely indicated in case planning where TADS are necessary? |
| 29 | When would a CBCT be justified when extracting a supernumerary tooth? |
| 30 | In which situation would a CBCT be indicated and justified for the evaluation of an impacted tooth? |

| Grading | Grading Description |
|---|---|
| Incorrect (0) | The answer provided is completely incorrect or unrelated to the question. It does not demonstrate an adequate understanding or knowledge of the topic. |
| Partially correct or incomplete (1) | The answer shows some understanding or knowledge of the topic, but there are significant errors or missing elements. Although not completely incorrect, the answer is not sufficiently correct or complete to be considered certain or adequate. |
| Correct (2) | The answer is completely accurate and shows a solid and precise understanding of the subject. All major components are addressed in a thorough and accurate manner. |
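Because each question was answered repeatedly and every answer was graded on this three-level scale, the per-question results reduce to a tally of grades. The sketch below assumes the grades for one question are already available as a list of integers (0, 1 or 2); the helper `tally_grades` and the `GRADE_LABELS` mapping are hypothetical names introduced for illustration. Percentages are computed as the count divided by the number of graded answers, rounded to two decimals, which appears to match how the results table reports them.

```python
from collections import Counter

# Rubric codes as defined in the grading table (hypothetical mapping name).
GRADE_LABELS = {0: "Incorrect", 1: "Partially correct or incomplete", 2: "Correct"}


def tally_grades(grades: list[int]) -> dict[str, tuple[int, float]]:
    """Return {label: (count, percentage)} for one question's repeated answers.

    Hypothetical helper: `grades` holds one rubric score (0, 1 or 2) per run.
    """
    if not grades:
        raise ValueError("at least one graded answer is required")
    unknown = set(grades) - set(GRADE_LABELS)
    if unknown:
        raise ValueError(f"grades outside the 0-2 rubric: {sorted(unknown)}")
    counts = Counter(grades)  # missing categories count as 0
    total = len(grades)
    return {
        label: (counts[code], round(100 * counts[code] / total, 2))
        for code, label in GRADE_LABELS.items()
    }


if __name__ == "__main__":
    # Question 1 in the results table: 17 incorrect and 13 partially correct runs.
    q1 = [0] * 17 + [1] * 13
    for label, (n, pct) in tally_grades(q1).items():
        print(f"{label}: n = {n} ({pct:.2f}%)")
```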

| Question | Incorrect, n | Incorrect, % | Partially correct or incomplete, n | Partially correct or incomplete, % | Correct, n | Correct, % |
|---|---|---|---|---|---|---|
| 1 | 17 | 56.67 | 13 | 43.33 | 0 | 0.00 |
| 2 | 0 | 0.00 | 22 | 73.33 | 8 | 26.67 |
| 3 | 2 | 6.67 | 11 | 36.67 | 17 | 56.67 |
| 4 | 0 | 0.00 | 12 | 40.00 | 18 | 60.00 |
| 5 | 0 | 0.00 | 23 | 76.67 | 7 | 23.33 |
| 6 | 20 | 66.67 | 10 | 33.33 | 0 | 0.00 |
| 7 | 30 | 100.00 | 0 | 0.00 | 0 | 0.00 |
| 8 | 30 | 100.00 | 0 | 0.00 | 0 | 0.00 |
| 9 | 0 | 0.00 | 3 | 10.00 | 27 | 90.00 |
| 10 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |
| 11 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |
| 12 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |
| 13 | 30 | 100.00 | 0 | 0.00 | 0 | 0.00 |
| 14 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |
| 15 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |
| 16 | 5 | 16.67 | 25 | 83.33 | 0 | 0.00 |
| 17 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |
| 18 | 29 | 96.67 | 0 | 0.00 | 1 | 3.33 |
| 19 | 4 | 13.33 | 26 | 86.67 | 0 | 0.00 |
| 20 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |
| 21 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |
| 22 | 6 | 20.00 | 24 | 80.00 | 0 | 0.00 |
| 23 | 0 | 0.00 | 19 | 63.33 | 11 | 36.67 |
| 24 | 0 | 0.00 | 23 | 76.67 | 7 | 23.33 |
| 25 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |
| 26 | 20 | 66.67 | 10 | 33.33 | 0 | 0.00 |
| 27 | 0 | 0.00 | 0 | 0.00 | 30 | 100.00 |
| 28 | 0 | 0.00 | 30 | 100.00 | 0 | 0.00 |
| 29 | 0 | 0.00 | 29 | 96.67 | 1 | 3.33 |
| 30 | 30 | 100.00 | 0 | 0.00 | 0 | 0.00 |
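Since every row of the results table covers the same 30 graded answers, the table can also be summarized across questions. The sketch below transcribes the counts from the results table; the helper names (`pooled_distribution`, `fully_consistent`) are hypothetical, and pooling all 900 answers, so that each question carries equal weight, is a choice made for this example rather than an analysis reported in the source.

```python
# Counts transcribed from the results table: question -> (incorrect, partial, correct).
RESULTS = {
    1: (17, 13, 0), 2: (0, 22, 8), 3: (2, 11, 17), 4: (0, 12, 18), 5: (0, 23, 7),
    6: (20, 10, 0), 7: (30, 0, 0), 8: (30, 0, 0), 9: (0, 3, 27), 10: (0, 0, 30),
    11: (0, 0, 30), 12: (0, 0, 30), 13: (30, 0, 0), 14: (0, 30, 0), 15: (0, 0, 30),
    16: (5, 25, 0), 17: (0, 30, 0), 18: (29, 0, 1), 19: (4, 26, 0), 20: (0, 0, 30),
    21: (0, 0, 30), 22: (6, 24, 0), 23: (0, 19, 11), 24: (0, 23, 7), 25: (0, 30, 0),
    26: (20, 10, 0), 27: (0, 0, 30), 28: (0, 30, 0), 29: (0, 29, 1), 30: (30, 0, 0),
}
CATEGORIES = ("Incorrect", "Partially correct or incomplete", "Correct")
REPETITIONS = 30  # graded answers per question, as implied by each row's total


def pooled_distribution(results: dict[int, tuple[int, int, int]]) -> dict[str, tuple[int, float]]:
    """Pool all graded answers and return {category: (count, percentage)}."""
    for question, row in results.items():
        if sum(row) != REPETITIONS:
            raise ValueError(f"question {question} does not sum to {REPETITIONS}")
    totals = [sum(row[i] for row in results.values()) for i in range(len(CATEGORIES))]
    grand_total = sum(totals)
    return {
        name: (count, round(100 * count / grand_total, 2))
        for name, count in zip(CATEGORIES, totals)
    }


def fully_consistent(results: dict[int, tuple[int, int, int]]) -> list[int]:
    """Questions whose repeated answers all received the same grade."""
    return sorted(q for q, row in results.items() if max(row) == sum(row))


if __name__ == "__main__":
    for name, (count, pct) in pooled_distribution(RESULTS).items():
        print(f"{name}: {count} ({pct:.2f}%)")
    print("Same grade on every repetition:", fully_consistent(RESULTS))
```

Running the script prints the pooled counts and percentages, followed by the questions whose 30 answers all received an identical grade, a rough per-question view of repeatability that complements the agreement coefficients.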

| Method | Coefficient | 95% Confidence Interval | Benchmark Scale |
|---|---|---|---|
| Percent Agreement | 0.938 | 0.911–0.965 | Almost perfect |
| Brennan and Prediger | 0.833 | 0.759–0.907 | Substantial |
| Cohen/Conger’s Kappa | 0.813 | 0.713–0.913 | Substantial |
| Scott/Fleiss’ Kappa | 0.813 | 0.768–0.909 | Substantial |
| Gwet’s AC | 0.838 | 0.713–0.913 | Substantial |
| Krippendorff’s Alpha | 0.813 | 0.911–0.965 | Substantial |
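The coefficients in this table are chance-corrected agreement statistics for categorical grades. The sketch below shows the textbook unweighted formulas for a subjects-by-categories count matrix (percent agreement, Fleiss' kappa, Brennan-Prediger and Gwet's AC1), plus a probabilistic benchmarking step in the style of Gwet's procedure that maps a coefficient onto the Landis-Koch scale. It is not a reconstruction of the authors' analysis: which ratings were compared, whether weights were applied, and the exact benchmarking rules are assumptions here, so it should not be expected to reproduce the table's values. The standard error is back-calculated from the reported 95% confidence interval on the assumption of a symmetric normal interval, and all function names are hypothetical.

```python
import math


def agreement_coefficients(counts: list[list[int]]) -> dict[str, float]:
    """Unweighted multi-rater agreement for a subjects x categories count matrix.

    counts[i][k] = number of ratings of subject i that fell into category k;
    every subject must have the same number of ratings (raters).
    """
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("every subject needs the same number (>= 2) of ratings")
    n_subjects, n_cats = len(counts), len(counts[0])

    # Observed agreement: share of agreeing rater pairs, averaged over subjects.
    p_a = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_subjects

    # Overall proportion of all ratings that fall into each category.
    p_k = [sum(row[k] for row in counts) / (n_subjects * n_raters) for k in range(n_cats)]

    def chance_corrected(p_e: float) -> float:
        # Undefined when chance agreement is 1 (all ratings in one category).
        return (p_a - p_e) / (1.0 - p_e) if p_e < 1.0 else float("nan")

    return {
        "percent_agreement": p_a,
        "fleiss_kappa": chance_corrected(sum(p * p for p in p_k)),
        "brennan_prediger": chance_corrected(1.0 / n_cats),
        "gwet_ac1": chance_corrected(sum(p * (1 - p) for p in p_k) / (n_cats - 1)),
    }


# Landis-Koch levels, highest first: (label, lower bound, upper bound).
# Boundaries are taken as 0, 0.2, 0.4, 0.6 and 0.8 for this sketch.
LANDIS_KOCH = [
    ("Almost perfect", 0.8, 1.0),
    ("Substantial", 0.6, 0.8),
    ("Moderate", 0.4, 0.6),
    ("Fair", 0.2, 0.4),
    ("Slight", 0.0, 0.2),
    ("Poor", -1.0, 0.0),
]


def normal_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def benchmark(coef: float, ci_low: float, ci_high: float, threshold: float = 0.95) -> str:
    """Walk down the scale, accumulating the probability that the true
    coefficient lies in each interval; return the first level whose
    cumulative probability reaches `threshold`."""
    se = (ci_high - ci_low) / (2 * 1.959964)  # assumes a symmetric 95% normal CI
    cumulative = 0.0
    for label, low, high in LANDIS_KOCH:
        cumulative += normal_cdf((high - coef) / se) - normal_cdf((low - coef) / se)
        if cumulative >= threshold:
            return label
    return LANDIS_KOCH[-1][0]


if __name__ == "__main__":
    # Toy data: two subjects, three raters, three grade categories.
    print(agreement_coefficients([[2, 1, 0], [0, 3, 0]]))
    print(benchmark(0.833, 0.759, 0.907))
```

Under these assumptions, a point-estimate lookup would place coefficients of 0.813 to 0.838 in the "almost perfect" band (above 0.80), whereas the probabilistic step labels them "Substantial" because their intervals leave too much probability below 0.80; this appears consistent with the Benchmark Scale column.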

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Morales Morillo, M.; Iturralde Fernández, N.; Pellicer Castillo, L.D.; Suarez, A.; Freire, Y.; Diaz-Flores García, V. Performance of ChatGPT-4 as an Auxiliary Tool: Evaluation of Accuracy and Repeatability on Orthodontic Radiology Questions. Bioengineering 2025, 12, 1031. https://doi.org/10.3390/bioengineering12101031
