Deep Learning-Based Recognition of Periodontitis and Dental Caries in Dental X-ray Images

Abstract

1. Introduction

2. Materials and Methods

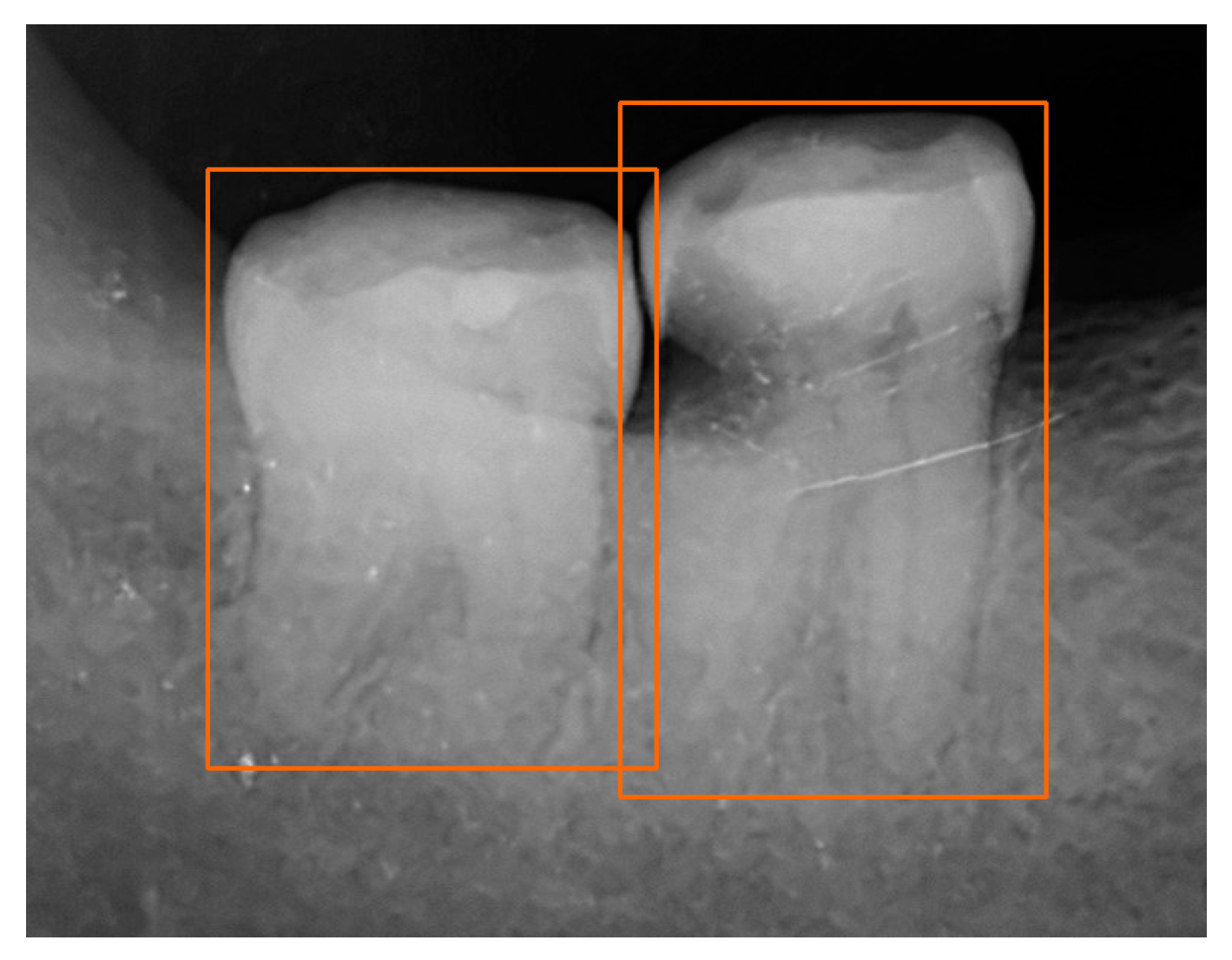

2.1. Dataset

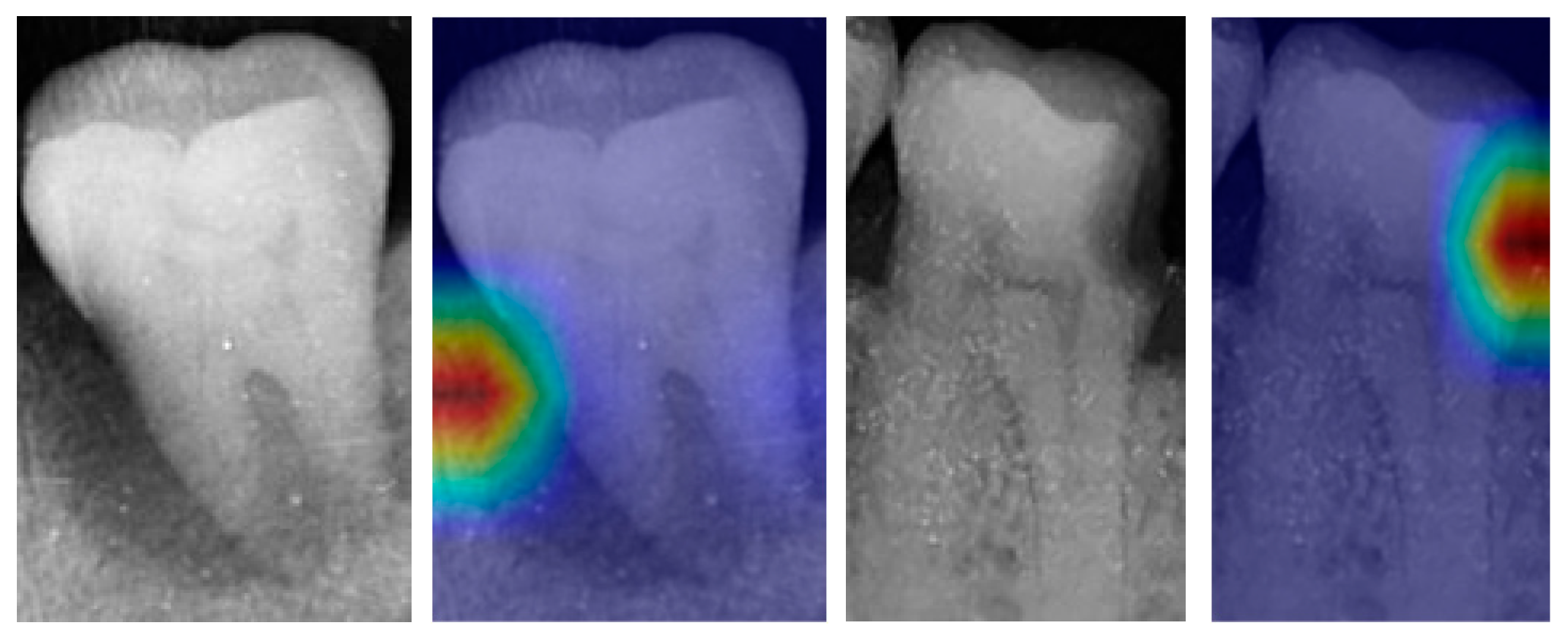

2.2. Proposed Method

2.3. Performance Metrics

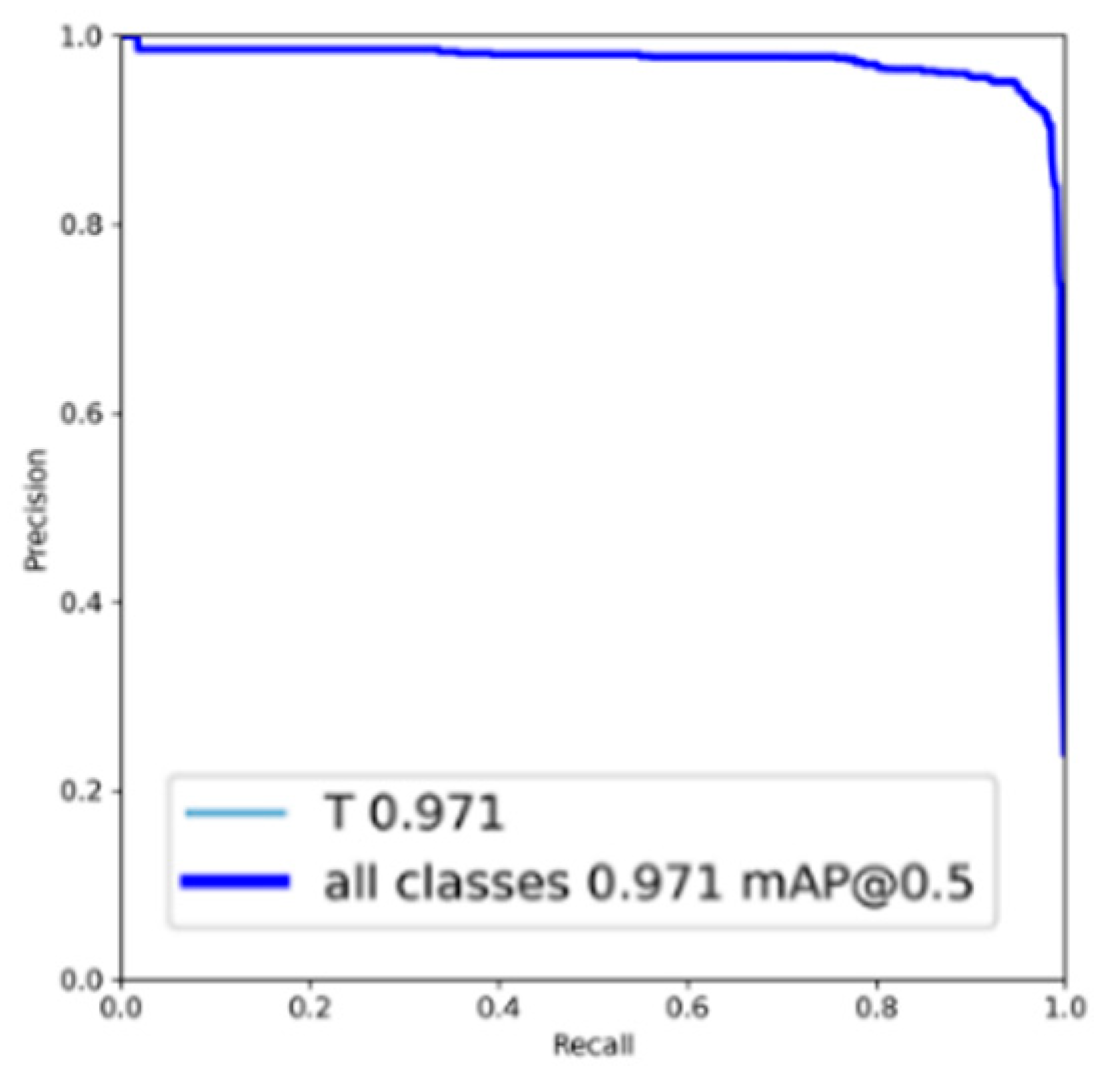

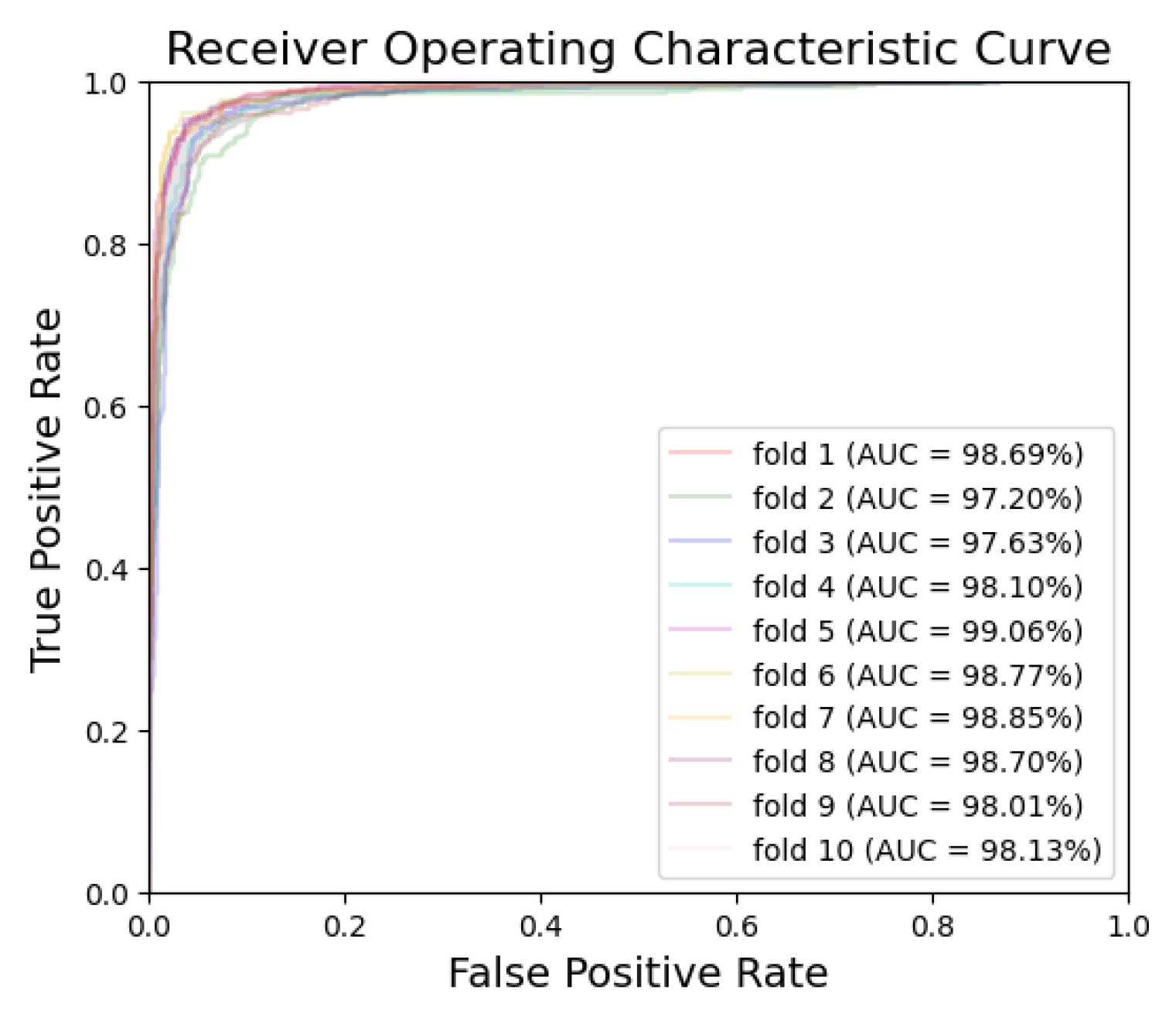

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Panayides, A.S.; Amini, A.; Filipovic, N.D.; Sharma, A.; Tsaftaris, S.A.; Young, A.; Foran, D.; Do, N.; Golemati, S.; Kurc, T.; et al. AI in medical imaging informatics: Current challenges and future directions. IEEE J. Biomed. Health Inform. 2020, 24, 1837–1857.

2. Kishimoto, T.; Goto, T.; Matsuda, T.; Iwawaki, Y.; Ichikawa, T. Application of artificial intelligence in the dental field: A literature review. J. Prosthodont. Res. 2022, 66, 19–28.

3. Suhail, Y.; Upadhyay, M.; Chhibber, A.; Kshitiz. Machine learning for the diagnosis of orthodontic extractions: A computational analysis using ensemble learning. Bioengineering 2020, 7, 55.

4. Schwendicke, F.; Golla, T.; Dreher, M.; Krois, J. Convolutional neural networks for dental image diagnostics: A scoping review. J. Dent. 2019, 91, 103226.

5. Rao, R.S.; Shivanna, D.B.; Lakshminarayana, S.; Mahadevpur, K.S.; Alhazmi, Y.A.; Bakri, M.M.H.; Alharbi, H.S.; Alzahrani, K.J.; Alsharif, K.F.; Banjer, H.J.; et al. Ensemble deep-learning-based prognostic and prediction for recurrence of sporadic odontogenic keratocysts on hematoxylin and eosin stained pathological images of incisional biopsies. J. Pers. Med. 2022, 12, 1220.

6. Murata, M.; Ariji, Y.; Ohashi, Y.; Kawai, T.; Fukuda, M.; Funakoshi, T.; Kise, Y.; Nozawa, M.; Katsumata, A.; Fujita, H.; et al. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019, 35, 301–307.

7. Celik, M.E. Deep learning based detection tool for impacted mandibular third molar teeth. Diagnostics 2022, 12, 942.

8. Chuo, Y.; Lin, W.M.; Chen, T.Y.; Chan, M.L.; Chang, Y.S.; Lin, Y.R.; Lin, Y.J.; Shao, Y.H.; Chen, C.A.; Chen, S.L.; et al. A high-accuracy detection system: Based on transfer learning for apical lesions on periapical radiograph. Bioengineering 2022, 9, 777.

9. Chen, Y.C.; Chen, M.Y.; Chen, T.Y.; Chan, M.L.; Huang, Y.Y.; Liu, Y.L.; Lee, P.T.; Lin, G.J.; Li, T.F.; Chen, C.A.; et al. Improving dental implant outcomes: CNN-based system accurately measures degree of peri-implantitis damage on periapical film. Bioengineering 2023, 10, 640.

10. Falcao, A.; Bullón, P. A review of the influence of periodontal treatment in systemic diseases. Periodontol. 2000 2019, 79, 117–128.

11. Watt, R.G.; Heilmann, A.; Listl, S.; Peres, M.A. London charter on oral health inequalities. J. Dent. Res. 2016, 95, 245–247.

12. Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J. Periodontal Implant Sci. 2018, 48, 114–123.

13. Li, H.; Zhou, J.; Zhou, Y.; Chen, Q.; She, Y.; Gao, F.; Xu, Y.; Chen, J.; Gao, X. An interpretable computer-aided diagnosis method for periodontitis from panoramic radiographs. Front. Physiol. 2021, 12, 655556.

14. Chang, J.; Chang, M.F.; Angelov, N.; Hsu, C.Y.; Meng, H.W.; Sheng, S.; Glick, A.; Chang, K.; He, Y.R.; Lin, Y.B.; et al. Application of deep machine learning for the radiographic diagnosis of periodontitis. Clin. Oral Investig. 2022, 26, 6629–6637.

15. Sornam, M.; Prabhakaran, M. A new linear adaptive swarm intelligence approach using back propagation neural network for dental caries classification. In Proceedings of the 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering, Chennai, India, 21–22 September 2017.

16. Lee, J.H.; Kim, D.H.; Jeong, S.N.; Choi, S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018, 77, 106–111.

17. Geetha, V.; Aprameya, K.S.; Hinduja, D.M. Dental caries diagnosis in digital radiographs using back-propagation neural network. Health Inf. Sci. Syst. 2020, 8, 8.

18. Jusman, Y.; Anam, M.K.; Puspita, S.; Saleh, E. Machine learnings of dental caries images based on Hu moment invariants features. In Proceedings of the 2021 International Seminar on Application for Technology of Information and Communication, Semarang, Indonesia, 18–19 September 2021.

19. Imak, A.; Celebi, A.; Siddique, K.; Turkoglu, M.; Sengur, A.; Salam, I. Dental caries detection using score-based multi-input deep convolutional neural network. IEEE Access 2022, 10, 18320–18329.

20. Bui, T.H.; Hamamoto, K.; Paing, M.P. Automated caries screening using ensemble deep learning on panoramic radiographs. Entropy 2022, 24, 1358.

21. Chen, H.; Li, H.; Zhao, Y.; Zhao, J.; Wang, J. Dental disease detection on periapical radiographs based on deep convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 649–661.

22. Li, S.; Liu, J.; Zhou, Z.; Zhou, Z.; Wu, X.; Li, Y.; Wang, S.; Liao, W.; Ying, S.; Zhao, Z. Artificial intelligence for caries and periapical periodontitis detection. J. Dent. 2022, 122, 104107.

23. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023.

24. Srivastava, S.; Divekar, A.V.; Anilkumar, C.; Naik, I.; Kulkarni, V.; Pattabiraman, V. Comparative analysis of deep learning image detection algorithms. J. Big Data 2021, 8, 66.

25. Pizer, S.M.; Amburn, E.P.; Austin, J.D.; Cromartie, R.; Geselowitz, A.; Greer, T.; Romeny, B.H.; Zimmerman, J.B.; Zuiderveld, K. Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 1987, 39, 355–368.

26. Pizer, S.M.; Johnston, R.E.; Ericksen, J.P.; Yankaskas, B.C.; Muller, K.E. Contrast-limited adaptive histogram equalization: Speed and effectiveness. In Proceedings of the First Conference on Visualization in Biomedical Computing, Atlanta, GA, USA, 22–25 May 1990.

27. Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the Sixth International Conference on Computer Vision, Bombay, India, 4–7 January 1998.

28. Pandey, P.; Bhan, A.; Dutta, M.K.; Travieso, C.M. Automatic image processing based dental image analysis using automatic gaussian fitting energy and level sets. In Proceedings of the 2017 International Conference and Workshop on Bioinspired Intelligence, Funchal, Portugal, 10–12 July 2017.

29. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

30. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. Mobilenetv2: Inverted residuals and linear bottlenecks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

31. Tan, M.; Le, Q.V. Efficientnet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, Long Beach, CA, USA, 10–15 June 2019.

32. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

| Dataset | Subset | Normal | Periodontitis | Dental Caries | Both Diseases | Total |
|---|---|---|---|---|---|---|
| Original | Training and Validation | 700 | 1000 | 400 | 500 | 2600 |
| Original | Testing | 100 | 50 | 50 | 50 | 250 |
| Augmentation | Training and Validation | 3300 | 1000 | 1600 | 1500 | 7400 |
| Augmentation | Testing | 300 | 150 | 150 | 150 | 750 |
| Total | Training | 3200 | 1600 | 1600 | 1600 | 8000 |
| Total | Validation | 800 | 400 | 400 | 400 | 2000 |
| Total | Testing | 400 | 200 | 200 | 200 | 1000 |

| Hardware Platform | Version |
|---|---|
| CPU | 12th Gen Intel Core i5-12400 |
| GPU | NVIDIA GeForce RTX 3070 |
| DRAM | 32 GB DDR4 3200 MHz |

| Software Platform | Version |
|---|---|
| Python | 3.7.16 |
| TensorFlow | 2.9.1 |
| PyTorch | 1.7.1 |

| Hyperparameter | Value |
|---|---|
| Initial learning rate | 0.001 |
| Max epoch | 50 |
| Batch size | 50 |
| Learning rate drop period | 4 |
| Learning rate drop factor | 0.316 |
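
The drop period and drop factor listed above suggest a piecewise-constant (step-decay) learning rate schedule. The sketch below is a minimal illustration of that reading, not the authors' training code: the function name `step_decay_lr`, the 0-based epoch indexing, and the assumption that the rate is multiplied by the drop factor once every 4 completed epochs are all hypothetical. Because 0.316² ≈ 0.0999, this reading amounts to roughly a tenfold reduction every 8 epochs.

```python
def step_decay_lr(epoch, initial_lr=0.001, drop_factor=0.316, drop_period=4):
    """Return the learning rate for a 0-based epoch index.

    Assumed behaviour (not confirmed by the source): the rate is
    multiplied by `drop_factor` once per `drop_period` completed epochs.
    """
    num_drops = epoch // drop_period  # how many drops have occurred so far
    return initial_lr * drop_factor ** num_drops


# Schedule over the 50 training epochs listed in the hyperparameter table.
schedule = [step_decay_lr(epoch) for epoch in range(50)]

# Print the rate at the start of each constant segment.
for epoch in range(0, 50, 4):
    print(f"epoch {epoch:2d}: lr = {schedule[epoch]:.3e}")
```

A function of this shape could be passed to a framework's scheduler hook, but which framework hook the authors used is not stated in the source.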

| Model | Disease | Accuracy (%) | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | ROC AUC (%) | PR AUC (%) |
|---|---|---|---|---|---|---|---|---|
| Xception | Periodontitis | 89.76 | 89.26 | 90.59 | 86.40 | 92.54 | 94.58 | 93.34 |
| Xception | Dental caries | 88.13 | 86.98 | 88.41 | 83.50 | 91.21 | 93.49 | 90.44 |
| MobileNetV2 | Periodontitis | 91.42 | 91.21 | 91.60 | 87.73 | 94.01 | 96.86 | 95.89 |
| MobileNetV2 | Dental caries | 89.03 | 88.25 | 89.51 | 85.32 | 91.87 | 96.31 | 94.76 |
| EfficientNet-B0 | Periodontitis | 95.44 | 93.28 | 96.88 | 95.24 | 95.59 | 98.67 | 98.38 |
| EfficientNet-B0 | Dental caries | 94.94 | 94.15 | 95.47 | 93.30 | 96.08 | 98.31 | 97.55 |

| Model | Periodontitis Minimum (%) | Periodontitis Maximum (%) | Periodontitis Mean (%) | Dental Caries Minimum (%) | Dental Caries Maximum (%) | Dental Caries Mean (%) |
|---|---|---|---|---|---|---|
| Xception | 88.98 | 91.66 | 89.76 | 86.89 | 89.11 | 88.13 |
| MobileNetV2 | 89.98 | 92.51 | 91.42 | 87.36 | 90.42 | 89.03 |
| EfficientNet-B0 | 94.60 | 96.30 | 95.44 | 92.80 | 96.40 | 94.94 |

| Fold | Disease | TP | FN | TN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | ROC AUC (%) | PR AUC (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fold-1 | Periodontitis | 369 | 31 | 586 | 14 | 95.50 | 92.25 | 97.67 | 96.34 | 94.98 | 98.80 | 98.38 |
| Fold-1 | Dental caries | 381 | 19 | 574 | 26 | 95.50 | 95.25 | 95.67 | 93.61 | 96.80 | 98.69 | 98.05 |
| Fold-2 | Periodontitis | 361 | 39 | 585 | 15 | 94.60 | 90.25 | 97.50 | 96.01 | 93.75 | 98.07 | 97.78 |
| Fold-2 | Dental caries | 363 | 37 | 565 | 35 | 92.80 | 90.75 | 94.17 | 91.21 | 93.85 | 97.20 | 96.22 |
| Fold-3 | Periodontitis | 369 | 31 | 580 | 20 | 94.90 | 92.25 | 96.67 | 94.86 | 94.93 | 98.37 | 98.10 |
| Fold-3 | Dental caries | 377 | 23 | 568 | 32 | 94.50 | 94.25 | 94.67 | 92.18 | 96.11 | 97.63 | 95.99 |
| Fold-4 | Periodontitis | 371 | 29 | 576 | 24 | 94.70 | 92.75 | 96.00 | 93.92 | 95.21 | 98.68 | 98.36 |
| Fold-4 | Dental caries | 372 | 28 | 572 | 28 | 94.40 | 93.00 | 95.33 | 93.00 | 95.33 | 98.10 | 97.45 |
| Fold-5 | Periodontitis | 380 | 20 | 582 | 18 | 96.20 | 95.00 | 97.00 | 95.48 | 96.68 | 98.88 | 98.73 |
| Fold-5 | Dental caries | 381 | 19 | 579 | 21 | 96.00 | 95.25 | 96.50 | 94.78 | 96.82 | 99.06 | 98.64 |
| Fold-6 | Periodontitis | 376 | 24 | 583 | 17 | 95.90 | 94.00 | 97.17 | 95.67 | 96.05 | 99.05 | 98.87 |
| Fold-6 | Dental caries | 384 | 16 | 580 | 20 | 96.40 | 96.00 | 96.67 | 95.05 | 97.32 | 98.77 | 98.45 |
| Fold-7 | Periodontitis | 377 | 23 | 586 | 14 | 96.30 | 94.25 | 97.67 | 96.42 | 96.22 | 99.14 | 98.89 |
| Fold-7 | Dental caries | 375 | 25 | 588 | 12 | 96.30 | 93.75 | 98.00 | 96.90 | 95.92 | 98.85 | 98.44 |
| Fold-8 | Periodontitis | 382 | 18 | 575 | 25 | 95.70 | 95.50 | 95.83 | 93.86 | 96.96 | 98.70 | 98.51 |
| Fold-8 | Dental caries | 378 | 22 | 578 | 22 | 95.60 | 94.50 | 96.33 | 94.50 | 96.33 | 98.70 | 98.15 |
| Fold-9 | Periodontitis | 371 | 29 | 575 | 25 | 94.60 | 92.75 | 95.83 | 93.69 | 95.20 | 98.33 | 97.80 |
| Fold-9 | Dental caries | 379 | 21 | 557 | 43 | 93.60 | 94.75 | 92.83 | 89.81 | 96.37 | 98.01 | 96.92 |
| Fold-10 | Periodontitis | 375 | 25 | 585 | 15 | 96.00 | 93.75 | 97.50 | 96.15 | 95.90 | 98.63 | 98.36 |
| Fold-10 | Dental caries | 376 | 24 | 567 | 33 | 94.30 | 94.00 | 94.50 | 91.93 | 95.94 | 98.13 | 97.20 |
| Mean | Periodontitis | -- | -- | -- | -- | 95.44 | 93.28 | 96.88 | 95.24 | 95.59 | 98.67 | 98.38 |
| Mean | Dental caries | -- | -- | -- | -- | 94.94 | 94.15 | 95.47 | 93.30 | 96.08 | 98.31 | 97.55 |
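
The threshold-based columns in the per-fold table can be recomputed from the TP, FN, TN, and FP counts. The sketch below uses the standard textbook definitions of accuracy, sensitivity, specificity, PPV, and NPV; it is assumed, not confirmed by the text available here, that the paper's Section 2.3 defines them the same way. The helper name `binary_metrics` is hypothetical. ROC AUC and PR AUC are omitted because they depend on the full score distribution, not on a single confusion matrix.

```python
def binary_metrics(tp, fn, tn, fp):
    """Compute percentage metrics from binary confusion-matrix counts.

    Standard definitions (assumed to match the paper's). No guard against
    zero denominators is included; all counts here are non-zero.
    """
    total = tp + fn + tn + fp
    return {
        "accuracy": 100.0 * (tp + tn) / total,
        "sensitivity": 100.0 * tp / (tp + fn),  # true positive rate
        "specificity": 100.0 * tn / (tn + fp),  # true negative rate
        "ppv": 100.0 * tp / (tp + fp),          # positive predictive value
        "npv": 100.0 * tn / (tn + fn),          # negative predictive value
    }


# Fold-1 periodontitis counts taken from the table above.
fold1_periodontitis = binary_metrics(tp=369, fn=31, tn=586, fp=14)
for name, value in fold1_periodontitis.items():
    print(f"{name}: {value:.2f}")
```

With the Fold-1 periodontitis counts, the rounded results agree with the tabulated row (95.50, 92.25, 97.67, 96.34, 94.98).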

| Method | Disease | Accuracy (%) | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | ROC AUC (%) | PR AUC (%) |
|---|---|---|---|---|---|---|---|---|
| With image processing | Periodontitis | 95.44 | 93.28 | 96.88 | 95.24 | 95.59 | 98.67 | 98.38 |
| With image processing | Dental caries | 94.94 | 94.15 | 95.47 | 93.30 | 96.08 | 98.31 | 97.55 |
| Without image processing | Periodontitis | 93.05 | 92.10 | 93.68 | 90.77 | 94.72 | 96.72 | 96.22 |
| Without image processing | Dental caries | 92.91 | 92.33 | 93.30 | 90.23 | 94.80 | 96.49 | 95.83 |

| Method | CNN Network | Accuracy (%) | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | ROC AUC (%) |
|---|---|---|---|---|---|---|---|
| [16] | GoogLeNet InceptionV3 | 82.0 | 81.0 | 83.0 | 82.7 | 81.4 | 84.5 |
| Proposed method | EfficientNet-B0 | 94.94 | 94.15 | 95.47 | 93.30 | 96.08 | 98.31 |

| Method | CNN Network | Disease | Sensitivity (%) | Specificity (%) | PPV (%) | NPV (%) | ROC AUC (%) |
|---|---|---|---|---|---|---|---|
| [22] | Modified ResNet-18 Backbone | Periapical periodontitis | 82.00 | 84.00 | 83.67 | 82.35 | 87.90 |
| [22] | Modified ResNet-18 Backbone | Dental caries | 83.50 | 82.00 | 82.27 | 83.25 | 87.50 |
| Proposed method | EfficientNet-B0 | Periodontitis | 93.28 | 96.88 | 95.24 | 95.59 | 98.67 |
| Proposed method | EfficientNet-B0 | Dental caries | 94.15 | 95.47 | 93.30 | 96.08 | 98.31 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, I.D.S.; Yang, C.-M.; Chen, M.-J.; Chen, M.-C.; Weng, R.-M.; Yeh, C.-H. Deep Learning-Based Recognition of Periodontitis and Dental Caries in Dental X-ray Images. Bioengineering 2023, 10, 911. https://doi.org/10.3390/bioengineering10080911
