A Comparative Study of Automated Machine Learning Platforms for Exercise Anthropometry-Based Typology Analysis: Performance Evaluation of AWS SageMaker, GCP VertexAI, and MS Azure

Abstract

1. Introduction

2. Methods

2.1. Dataset Preparation and Definition of Each Exercise

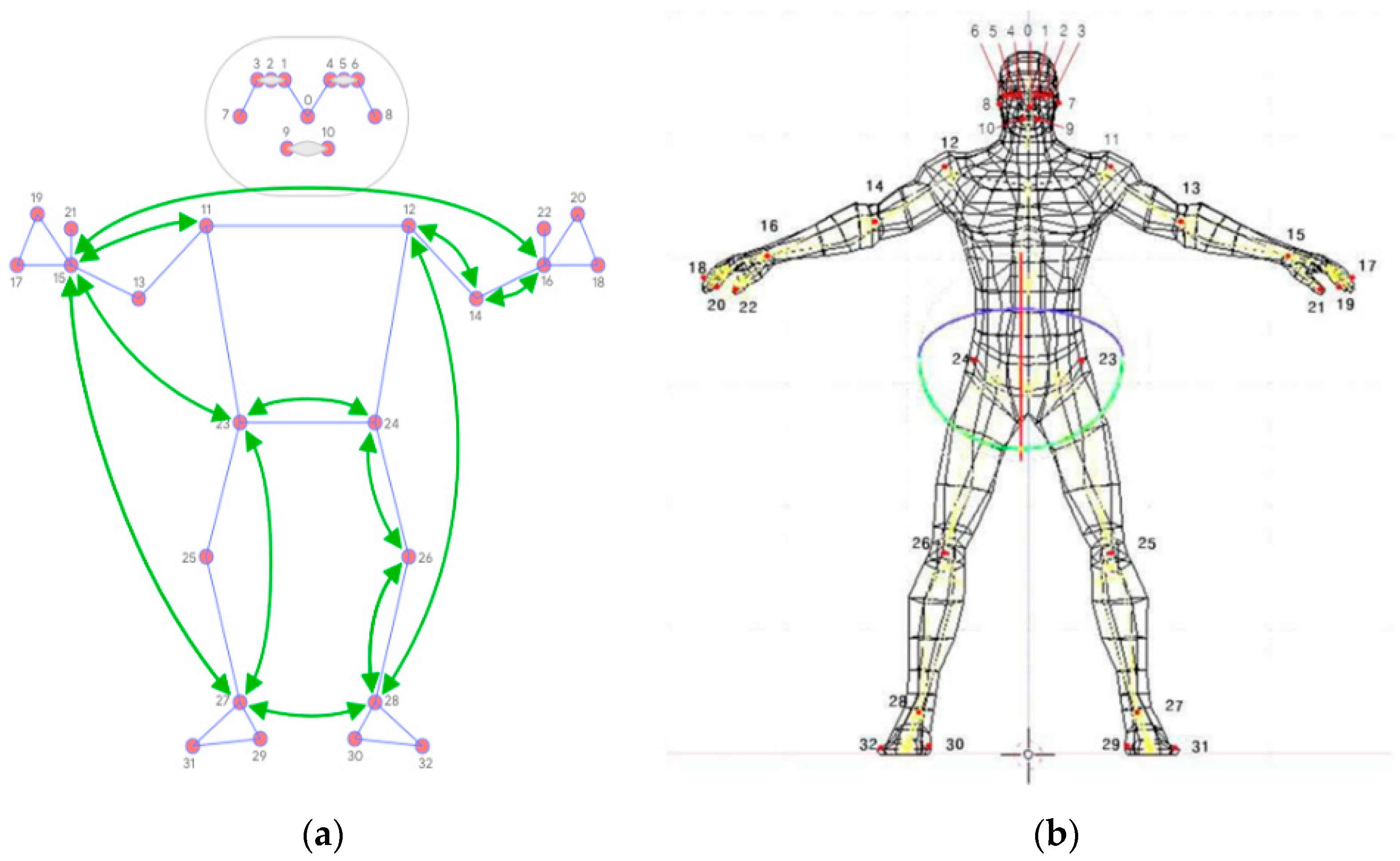

2.2. Skeleton Data Extraction

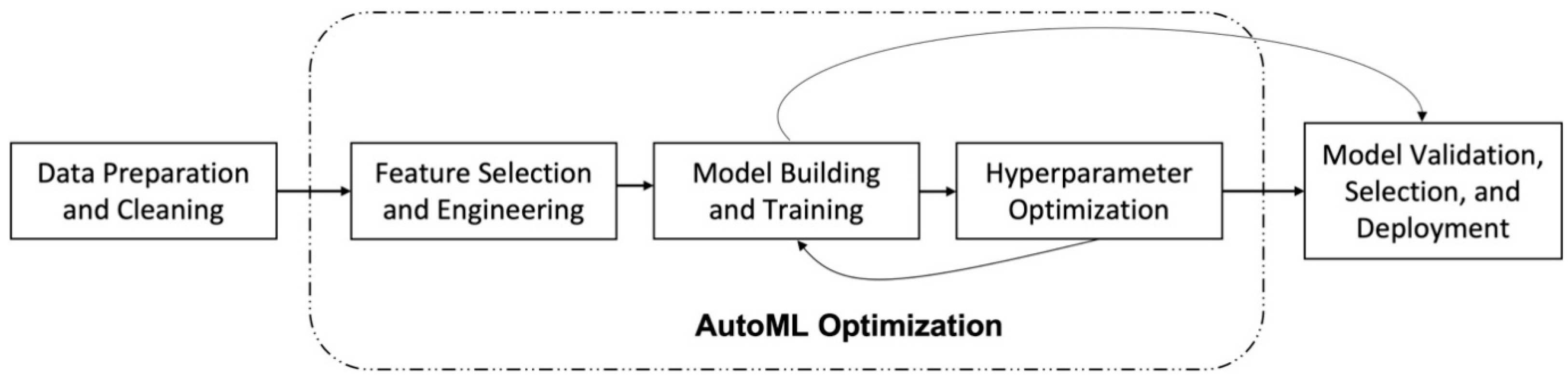

2.3. AutoML Processing

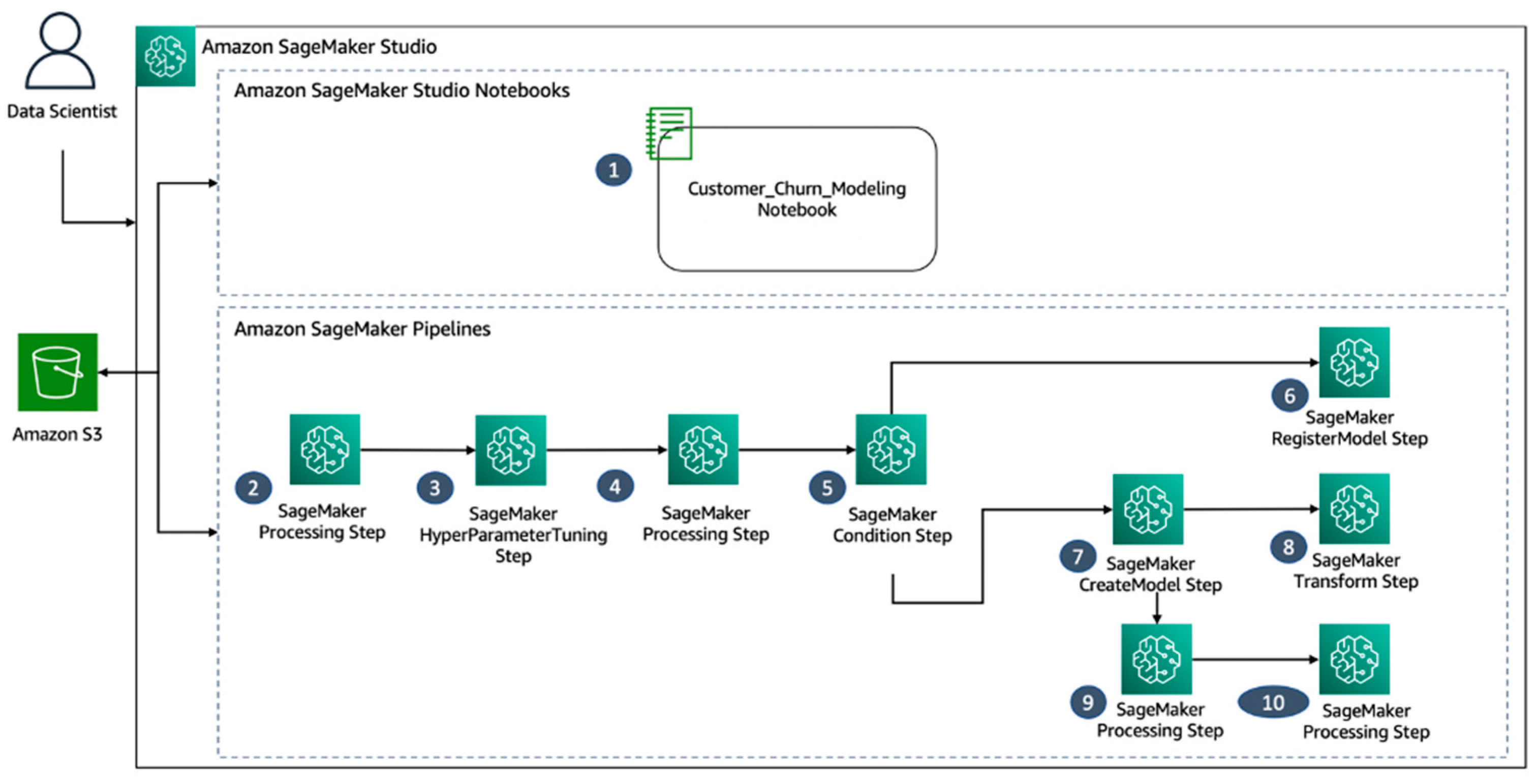

2.4. Amazon Web Service (AWS): SageMaker

- Sign up for Amazon Web Services (AWS) and navigate to Amazon SageMaker.

- Select “Create Notebook Instance” and configure the notebook instance.

- In “Permissions and Encryption”, select “Create new role” and specify the S3 bucket in the “Create IAM role” dialog.

- Once the notebook instance is created and its status changes to “InService”, open Jupyter and create a new notebook with the conda_python3 kernel.

- Access the data storage section by clicking “S3” under “Storage” and create a new bucket.

- Upload the data file to the newly created bucket and folder.

- Register your name in the control panel by clicking “Add User”.

- Start the machine learning process by clicking “Start App” and selecting the desired machine learning tasks and components.

- Import the data file from Amazon S3 and set the experimental name and target.

- Set the objective metric and runtime and review the settings before clicking “Create Experiment”.

- “Development of a Customer Churn Model Utilizing Studio Notebooks within an Integrated Development Environment (IDE)”

- “Data Preprocessing for Feature Construction and Division into Training, Validation, and Test Datasets”

- “Hyperparameter Tuning via the SageMaker XGBoost Framework to Determine the Optimal Model Based on AUC Score”

- “Evaluation of the Optimal Model Utilizing the Test Dataset”

- “Determination of Adherence to a Specified AUC Threshold for Model Selection”

- “Registration of the Trained Churn Model in the SageMaker Model Registry”

- “Creation of a SageMaker Model Using Artifacts from the Optimal Model”

- “Batch Transformation of the Dataset via the Created Model”

- “Creation of a Configuration File for Model Explainability and Bias Reports, Including Columns to Check for Bias and Baseline Values for SHAPley Plot Generation”

- “Use of Clarify with the Configuration File for Generation of Model Explainability and Bias Reports” [17].
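The console settings listed above (S3 input file, target column, objective metric, and runtime) correspond to parameters of SageMaker's `CreateAutoMLJob` API. The following is a minimal sketch that only assembles such a request as a plain dictionary; it is not the authors' code. The job name, bucket paths, role ARN, the `class` target column, the multiclass problem type, and the two-hour limit are all hypothetical placeholders.

```python
def build_automl_job_request(
    job_name: str,
    s3_input_uri: str,
    s3_output_uri: str,
    target_column: str,
    role_arn: str,
    objective_metric: str = "Accuracy",
    max_runtime_seconds: int = 7200,  # assumed limit, not from the study
) -> dict:
    """Assemble keyword arguments for SageMaker's CreateAutoMLJob call."""
    for uri in (s3_input_uri, s3_output_uri):
        if not uri.startswith("s3://"):
            raise ValueError(f"expected an s3:// URI, got {uri!r}")
    return {
        "AutoMLJobName": job_name,
        "InputDataConfig": [
            {
                "DataSource": {
                    "S3DataSource": {
                        "S3DataType": "S3Prefix",
                        "S3Uri": s3_input_uri,
                    }
                },
                "TargetAttributeName": target_column,
            }
        ],
        "OutputDataConfig": {"S3OutputPath": s3_output_uri},
        # Assumption: exercise typology is treated as a multiclass problem.
        "ProblemType": "MulticlassClassification",
        "AutoMLJobObjective": {"MetricName": objective_metric},
        "AutoMLJobConfig": {
            "CompletionCriteria": {
                "MaxAutoMLJobRuntimeInSeconds": max_runtime_seconds
            }
        },
        "RoleArn": role_arn,
    }


# Hypothetical values for illustration only.
request = build_automl_job_request(
    job_name="exercise-typology-automl",
    s3_input_uri="s3://example-bucket/input/skeleton.csv",
    s3_output_uri="s3://example-bucket/output/",
    target_column="class",
    role_arn="arn:aws:iam::123456789012:role/ExampleSageMakerRole",
)

# With AWS credentials configured, the request could be submitted with:
#   import boto3
#   boto3.client("sagemaker").create_auto_ml_job(**request)
```

Keeping the request as data makes the chosen target, metric, and runtime easy to review before anything is launched, which mirrors the "review the settings" step in the console.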

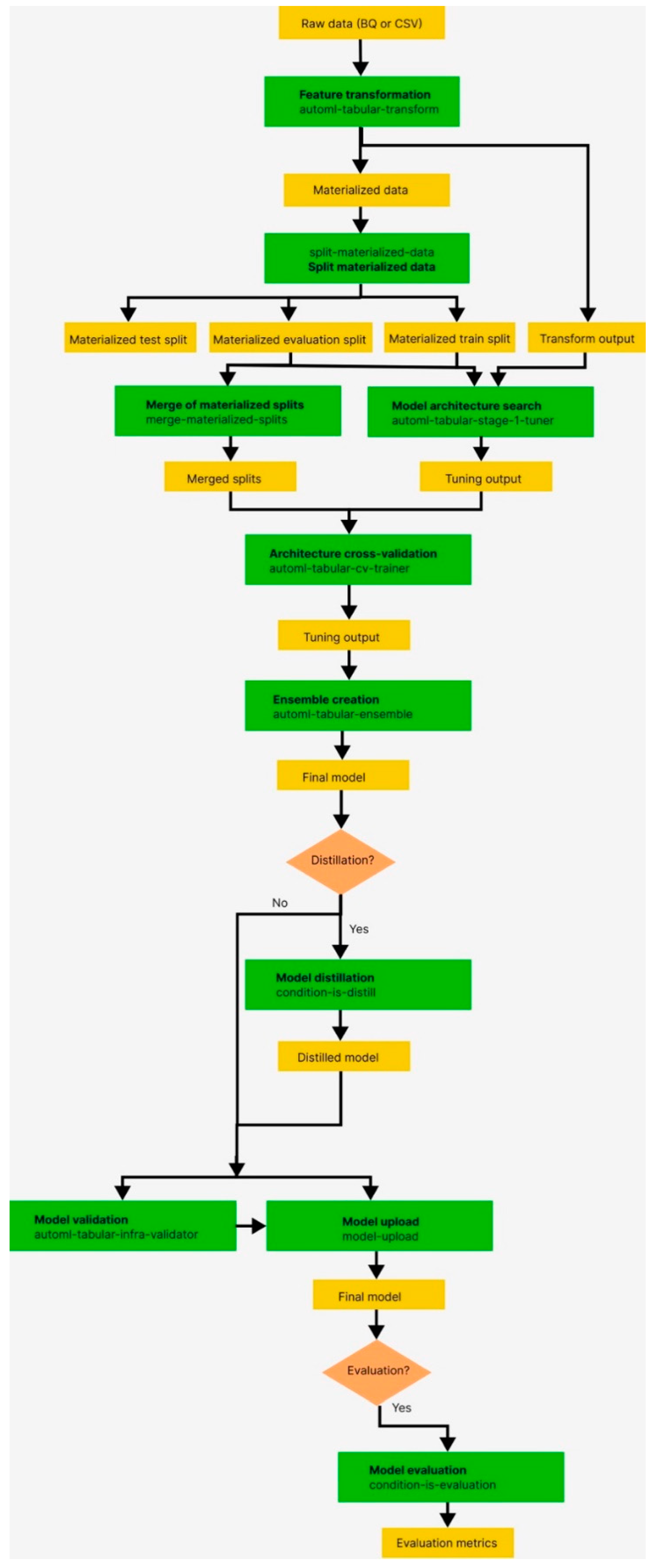

2.5. Google Cloud Platform (GCP): Vertex AI

- After signing up for the Google Cloud Platform and entering payment information, the Console was accessed.

- In Google Cloud Storage, under “Bucket” in the upper-left menu, the project was named and the CSV file for training was uploaded.

- The Google Cloud Artificial Intelligence—Vertex AI—Dataset location was then accessed.

- AutoML Tables can create supervised machine learning models from tabular data with a variety of data types and problem types, such as binary classification, multi-class classification, and regression.

- Vertex AI is the successor to AutoML Tables and offers a unified API and new features. Hence, this study was conducted in the Vertex menu.

- In the Vertex AI—Dataset, the “Dataset Name” was recorded, and the “Table Type—Regression/Classification” was selected from the “Data Type and Target Selection” menu.

- The region was then selected from the “Region” menu and “Create” was clicked.

- In the Dataset section, “Add Data to Dataset—Select Data Source—Select CSV File from Cloud Storage” was clicked.

- The file path was browsed to specify the file that had already been uploaded to the cloud, and “Continue” was clicked.

- The maximum file size allowed is 10 GB, which was sufficient for the 4 GB of data.

- The “Generate Statistics” button was clicked on the right to check the outline of the data to be learned.

- To train a new model, the “Learn new model” button in the upper right corner was clicked, and “Train new model” was chosen.

- In the “Train new model—learning method” submenu, “Classification” was selected under “Objective”, and “AutoML” was chosen under “Model Training Method”.

- “Continue” was clicked, and the “Train new model—model details” screen was reached.

- “New model learning” was selected, and the “Target column” was set to “class”.

- In the data partitioning menu, 80% of the data was allocated for training, 10% for validation, and 10% for testing.

- “Continue” was clicked, and the “Train new model—learning options” menu was reached.

- In “Customize Transformation Options”, “Automatic Option” was selected among the options of auto, categorical, text, timestamp, and number.

- “Continue” was clicked, and the “Train new model—computing and pricing” menu was reached.

- “7” was entered as the maximum number of node hours for model training in the “Budget” menu, and “Enable Early Stopping” was selected.

- The learning process was started by clicking the “Start learning” button at the bottom of the “Train new model” menu.

The pipeline components in the workflow are as follows:

1. Feature-Transform-Engine: This component performs feature extraction. For further information, refer to the Feature Transform Engine documentation.

2. Split-Materialized-Data: This component splits the materialized data into three sets: the training set, the evaluation set, and the test set.

3. Merge-Materialized-Splits: This component merges the materialized evaluation and training splits.

4. AutoML-Tabular-Stage-1-Tuner: This component searches for model architectures and tunes the hyperparameters.

   - The architecture is defined by a set of hyperparameters, including the model type (such as neural networks or boosted trees) and model parameters.

   - A model is trained for each considered architecture.

5. AutoML-Tabular-CV-Trainer: This component cross-validates the architectures by training the models on different parts of the input data.

   - The best-performing architectures from the previous step are considered.

   - Approximately 10 of the best architectures are selected, with the exact number determined by the learning budget.

6. AutoML-Tabular-Ensemble: This component ensembles the best-suited architectures to generate the final model.

   - The following diagram represents K-fold cross-validation using bagging.

7. Condition-Is-Distill (Optional): This component generates smaller ensemble models to optimize prediction latency and cost.

8. AutoML-Tabular-Infra-Validator: This component checks that the trained model is a valid model.

9. Model-Upload: This component uploads the validated model.
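The 80%/10%/10% data partitioning and the K-fold cross-validation mentioned in the steps above can be illustrated in plain Python. This is only a sketch of the general technique, not Vertex AI's internal implementation; the function names, the fixed seed, the 1000-row example, and the choice of five folds are assumptions made for illustration.

```python
import random


def split_indices(n_rows, train=0.8, valid=0.1, seed=0):
    """Shuffle row indices and cut them into train/validation/test parts."""
    idx = list(range(n_rows))
    random.Random(seed).shuffle(idx)
    n_train = int(n_rows * train)
    n_valid = int(n_rows * valid)
    return (
        idx[:n_train],
        idx[n_train:n_train + n_valid],
        idx[n_train + n_valid:],  # remaining rows form the test set
    )


def k_fold(indices, k=5):
    """Yield (fit, held_out) index lists; each row is held out exactly once."""
    folds = [indices[i::k] for i in range(k)]
    for i, held_out in enumerate(folds):
        fit = [row for j, fold in enumerate(folds) if j != i for row in fold]
        yield fit, held_out


# Hypothetical dataset of 1000 rows.
train_idx, valid_idx, test_idx = split_indices(1000)
folds = list(k_fold(train_idx, k=5))
```

In a bagging-style ensemble, one model would be fitted on each `fit` list and the per-fold predictions averaged; the held-out rows give an estimate of how each candidate architecture generalizes.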

2.6. Microsoft (MS): Azure

- Click on “Get Started” and log in.

- Register account information and set relevant details in the “Create Subscription” section, including name, account, billing profile, bill, and plan.

- Select the “Quick Start Center” and choose “Get started with data analytics, machine learning, and intelligence”.

- From the options, select “Quickly Build and Deploy Models with Azure Machine Learning” and initiate the creation process.

- In the “Create Machine Learning Workspace” section, enter the relevant details, including the region and storage account.

- Review the contents and initiate the deployment process. Once the deployment is complete, access the resource by clicking the “Go to resource” button.

- In the “Work with models in Azure Machine Learning Studio” section, click the “Start Studio” button and select “Automated ML Tasks” under the “New” menu.

- Initiate the process to create a new automated ML job by uploading the relevant file.

- In the task configuration, set the experiment name and select the target column. Select the appropriate compute type, either “Compute Cluster” or “Compute Instance”.

- Determine the virtual machine settings, including the compute name, minimum and maximum number of nodes, and idle time before scaling down.

- Start the learning process by specifying the action and settings, such as checking classification and using deep learning.

- Configure additional settings and view the Featurization Settings.

- In “Validation and Testing”, set the validation and testing percentage of the data to 10.

- Upon entering the relevant details and clicking the “Finish” button, the learning process will commence after loading for approximately 20 s. It is expected to take approximately 2 h to complete the supervised learning process for a file size of 4.48 GB.

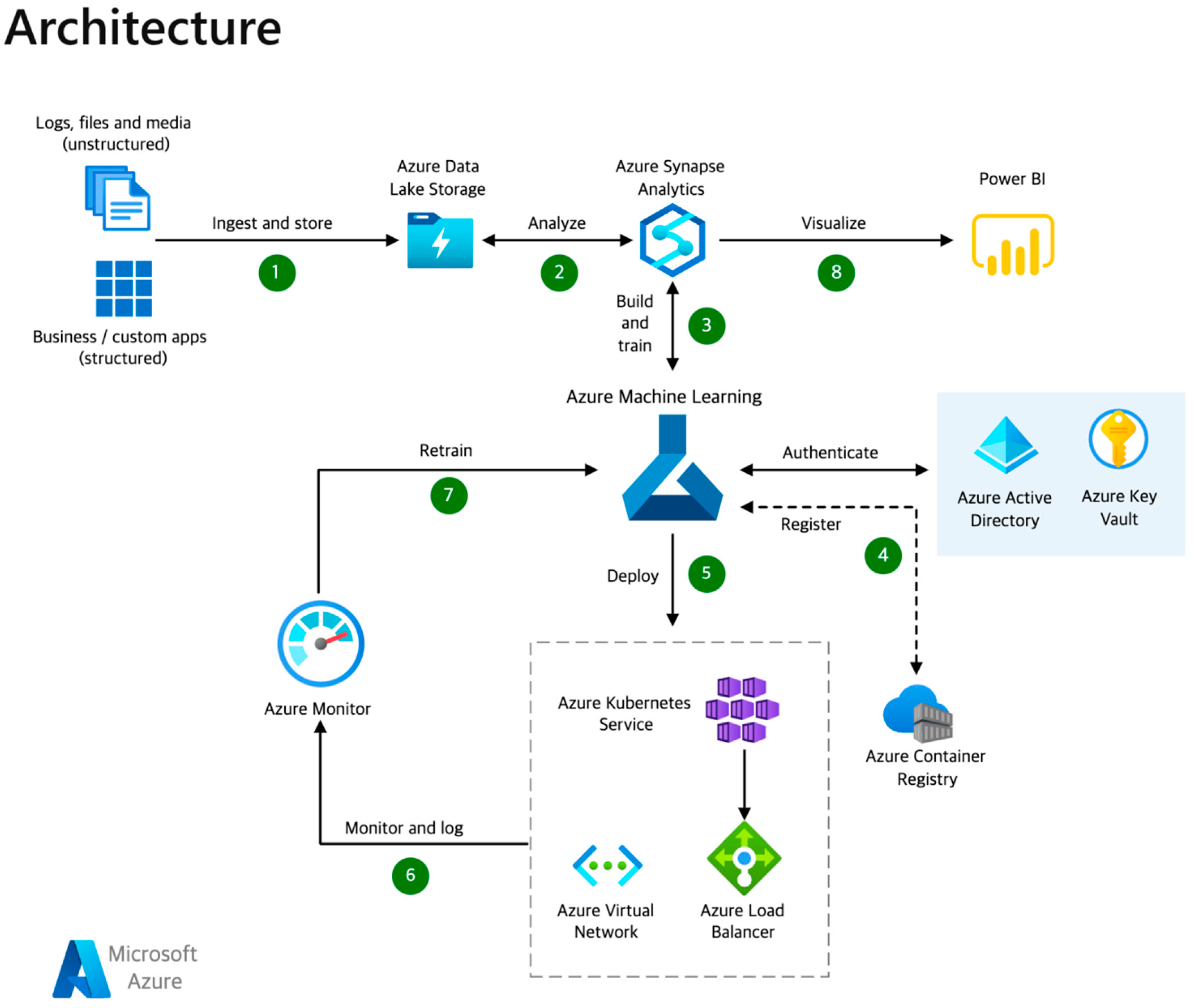

- Dataflow:

- Structured, unstructured, and semi-structured data, such as logs, files, and media, should be gathered into Azure Data Lake Storage Gen2 for efficient dataflow management.

- Datasets should be cleaned, transformed, and analyzed using Apache Spark in Azure Synapse Analytics for optimal data processing.

- Machine learning models should be constructed and trained in Azure Machine Learning for effective model development.

- Access and authentication for data and the ML workspace should be managed with Azure Active Directory and Azure Key Vault, while container management should be overseen with Azure Container Registry.

- Machine learning models should be deployed to a container with Azure Kubernetes Services, while ensuring deployment security and management through Azure VNets and Azure Load Balancer.

- Model performance should be monitored through log metrics and monitoring with Azure Monitor for effective model evaluation.

- Models should be continuously retrained as necessary in Azure Machine Learning for optimal model performance.

3. Classification Evaluation Metrics

- True (T): The prediction is accurate.

- False (F): The prediction is inaccurate.

- Positive (P): The model predicts a positive outcome.

- Negative (N): The model predicts a negative outcome.

- True positive (TP): The model correctly predicted a positive outcome, and the actual answer was indeed positive.

- True negative (TN): The model correctly predicted a negative outcome, and the actual answer was indeed negative.

- False positive (FP): The model incorrectly predicted a positive outcome, but the actual answer was negative.

- False negative (FN): The model incorrectly predicted a negative outcome, but the actual answer was positive.

- False Positive Rate (FPR): The proportion of actual negative cases that the model incorrectly predicts as positive, that is, FPR = FP / (FP + TN).
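The definitions above combine into the usual summary metrics. The sketch below uses the standard textbook formulas for the binary case; the function names and the example counts are hypothetical, and the study's multi-class scores would additionally require per-class averaging, which is not shown here.

```python
import math


def safe_div(numerator, denominator):
    """Return 0.0 instead of raising when a denominator is zero."""
    return numerator / denominator if denominator else 0.0


def binary_metrics(tp, tn, fp, fn):
    """Compute standard metrics from confusion-matrix counts."""
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),
        "fpr": safe_div(fp, fp + tn),
    }


def binary_log_loss(y_true, p_pred, eps=1e-15):
    """Mean negative log-likelihood of the true labels (0 or 1)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # keep log() away from 0
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


# Hypothetical counts, not taken from the study.
scores = binary_metrics(tp=40, tn=45, fp=5, fn=10)
loss = binary_log_loss([1, 0], [0.9, 0.1])
```

Log loss differs from the count-based metrics in that it uses the predicted probabilities, so a model can have high accuracy and still a poor log loss if its confident predictions are wrong.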

4. Experimental Results

4.1. Model Evaluation

4.2. Model Comparison

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Diaz, S.; Stephenson, J.B.; Labrador, M.A. Use of wearable sensor technology in gait, balance, and range of motion analysis. Appl. Sci. 2019, 10, 234.

2. Choi, W.; Heo, S. Deep learning approaches to automated video classification of upper limb tension test. Healthcare 2021, 9, 1579.

3. Gochoo, M.; Kim, T.; Bae, J.; Kim, D.; Kim, Y.; Cho, J. Stochastic Remote Sensing Event Classification over Adaptive Posture Estimation via Multifused Data and Deep Belief Network. Remote Sens. 2021, 13, 912.

4. Liu, W.; Huang, P.-W.; Liao, W.-C.; Chuang, W.-H.; Wang, P.-C.; Wang, C.-C.; Lai, K.-L.; Chen, Y.-S.; Wu, C.-H. Vision-Based Estimation of MDS-UPDRS Scores for Quantifying Parkinson’s Disease Tremor Severity. Med. Image Anal. 2022, 75, 102754.

5. Dubey, S.; Dixit, M. A Comprehensive Survey on Human Pose Estimation Approaches. Multimed. Syst. 2023, 29, 167–195.

6. Chung, J.-L.; Ong, L.-Y.; Leow, M.-C. Comparative Analysis of Skeleton-Based Human Pose Estimation. Future Internet 2022, 14, 380.

7. Garg, S.; Saxena, A.; Gupta, R. Yoga Pose Classification: A CNN and MediaPipe Inspired Deep Learning Approach for Real-World Application. J. Ambient Intell. Humaniz. Comput. 2022, 1–12.

8. Liu, A.-L.; Chu, W.-T. A Posture Evaluation System for Fitness Videos Based on Recurrent Neural Network. In Proceedings of the 2020 International Symposium on Computer, Consumer and Control (IS3C), Taichung, Taiwan, 28–30 May 2020; pp. 185–188.

9. Yang, Y.; Wang, X.; Liu, Y.; Huang, D.; Yang, Q. Estimate of Head Posture Based on Coordinate Transformation with MP-MTM-LSTM Network. Int. J. Pattern Recognit. Artif. Intell. 2020, 34, 2059031.

10. Lin, C.-B.; Lin, Y.-H.; Huang, P.-J.; Wu, Y.-C.; Liu, K.-H. A Framework for Fall Detection Based on OpenPose Skeleton and LSTM/GRU Models. Appl. Sci. 2020, 11, 329.

11. Sorokina, V.; Ablameyko, S. Extraction of Human Body Parts in Image Using Convolutional Neural Network and Attention Model. In Proceedings of the 15th International Conference, Minsk, Belarus, 21–24 September 2021.

12. Lai, D.K.-H.; Yu, H.; Hung, C.-K.; Lo, K.-L.; Tang, F.-H.K.; Ho, K.-C.; Leung, C.K.-Y. Dual Ultra-Wideband (UWB) Radar-Based Sleep Posture Recognition System: Towards Ubiquitous Sleep Monitoring. Eng. Regener. 2023, 4, 36–43.

13. Waring, J.; Lindvall, C.; Umeton, R. Automated machine learning: Review of the state-of-the-art and opportunities for healthcare. Artif. Intell. Med. 2020, 104, 101822.

14. Wan, K.W.; Wong, C.H.; Ip, H.F.; Fan, D.; Yuen, P.L.; Fong, H.Y.; Ying, M. Evaluation of the performance of traditional machine learning algorithms, convolutional neural network and AutoML Vision in ultrasound breast lesions classification: A comparative study. Quant. Imaging Med. Surg. 2021, 11, 1381–1393.

15. Siriborvornratanakul, T. Human behavior in image-based Road Health Inspection Systems despite the emerging AutoML. J. Big Data 2022, 9, 96.

16. AIHub by the Korea Intelligence Society Agency. 2023. Available online: https://aihub.or.kr/aihubdata/data/list.do?pageIndex=1&currMenu=115&topMenu=100&da-ta-SetSn=&srchOrder=&SrchdataClCode=DATACL001&searchKeyword=&srchDataRealmCode=REALM006 (accessed on 3 January 2023).

17. Amazon Web Services. Build, Tune, and Deploy an End-to-End Churn Prediction Model Using Amazon SageMaker Pipelines. 2021. Available online: https://aws.amazon.com/ko/blogs/machine-learning/build-tune-and-deploy-an-end-to-end-churn-prediction-model-using-amazon-sagemaker-pipelines/ (accessed on 15 January 2023).

18. Google Cloud. End-to-End AutoML Workflow. 2021. Available online: https://cloud.google.com/vertex-ai/docs/tabular-data/tabular-workflows/e2e-AutoML?hl=ko#end-to-end_on (accessed on 15 January 2023).

19. Microsoft. Azure Machine Learning Solution Architecture. 2021. Available online: https://learn.microsoft.com/en-us/azure/architecture/solution-ideas/articles/azure-machine-learning-solution-architecture (accessed on 15 January 2023).

20. Zhu, F.; Hua, W.; Zhang, Y. GRU Deep Residual Network for Time Series Classification. In Proceedings of the 2023 IEEE 6th Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), Chongqing, China, 24–26 February 2023; IEEE: Piscataway, NJ, USA, 2023; Volume 6.

21. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling. In Proceedings of the Neural Information Processing Systems, NIPS, Montreal, QC, Canada, 8–13 December 2014; pp. 207–213.

22. Li, S.; Mingyu, S. A comparison between linear regression, lasso regression, decision tree, XGBoost, and RNN for asset price strategies. In Proceedings of the International Conference on Cyber Security, Artificial Intelligence, and Digital Economy (CSAIDE 2022), Huzhou, China, 15–17 April 2022; SPIE: Bellingham, WA, USA, 2022; Volume 12330.

23. Pan, H.; Li, Z.; Tian, C.; Wang, L.; Fu, Y.; Qin, X.; Liu, F. The LightGBM-based classification algorithm for Chinese characters speech imagery BCI system. Cogn. Neurodyn. 2023, 17, 373–384.

24. Garcia, S.; Luengo, J.; Herrera, F. Data Preprocessing in Data Mining; Springer: Berlin/Heidelberg, Germany, 2015; pp. 23–57.

25. Bishop, C.M.; Nasrabadi, N.M. Pattern Recognition and Machine Learning; Springer: New York, NY, USA, 2006; Volume 4.

26. He, X.; Zhao, K.; Chu, X. AutoML: A Survey of the State-of-the-Art. arXiv 2019, arXiv:1908.00709.

27. Zaharia, S.; Rebedea, T.; Trausan-Matu, S. Machine Learning-Based Security Pattern Recognition Techniques for Code Developers. Appl. Sci. 2022, 12, 12463.

28. Dong, X.; Yu, Z.; Cao, W.; Shi, Y.; Ma, Q. A survey on ensemble learning. Front. Comput. Sci. 2020, 14, 241–258.

| Approach | Platform | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) | Log Loss | Algorithm |
|---|---|---|---|---|---|---|---|
| RNNs ML | Colab Pro | 72.95 | 70.46 | 69.28 | 68.57 | 0.85 | RNN |
| RNNs ML | Colab Pro | 73.75 | 74.55 | 73.68 | 73.11 | 0.71 | LSTM |
| RNNs ML | Colab Pro | 73.26 | 74.55 | 73.33 | 73.18 | 0.74 | GRU |
| Auto ML | AWS SageMaker | 99.6 | 99.8 | 99.2 | 99.5 | 0.014 | XGBoost |
| Auto ML | GCP Vertex AI | 89.90 | 94.20 | 88.40 | 91.20 | 0.268 | hidden |
| Auto ML | MS Azure | 84.20 | 82.20 | 81.80 | 81.50 | 1.176 | LightGBM |
| Auto ML | Average Score | 91.23 | 92.07 | 89.80 | 90.73 | 0.49 | |
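The “Average Score” row appears to be the arithmetic mean of the three AutoML rows (AWS SageMaker, GCP Vertex AI, and MS Azure). The short check below recomputes it from the table values; the dictionary layout and the function name are illustrative choices, not part of the study.

```python
# Values copied from the three AutoML rows of the table.
automl_results = {
    "AWS SageMaker": {"accuracy": 99.6, "precision": 99.8, "recall": 99.2,
                      "f1": 99.5, "log_loss": 0.014},
    "GCP Vertex AI": {"accuracy": 89.90, "precision": 94.20, "recall": 88.40,
                      "f1": 91.20, "log_loss": 0.268},
    "MS Azure": {"accuracy": 84.20, "precision": 82.20, "recall": 81.80,
                 "f1": 81.50, "log_loss": 1.176},
}


def column_means(results, digits=2):
    """Average each metric over all platforms, rounded to `digits` places."""
    metrics = next(iter(results.values())).keys()
    return {
        name: round(sum(row[name] for row in results.values()) / len(results),
                    digits)
        for name in metrics
    }


averages = column_means(automl_results)
```

The recomputed means agree with the reported row to two decimal places, which suggests the RNN, LSTM, and GRU baselines were not included in the average.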

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Choi, W.; Choi, T.; Heo, S. A Comparative Study of Automated Machine Learning Platforms for Exercise Anthropometry-Based Typology Analysis: Performance Evaluation of AWS SageMaker, GCP VertexAI, and MS Azure. Bioengineering 2023, 10, 891. https://doi.org/10.3390/bioengineering10080891
