For Heart Rate Assessments from Drone Footage in Disaster Scenarios

Abstract

1. Introduction

- (i) Selection and temporal tracking of relevant facial regions;

- (ii) Modeling of the recorded signals for PPG signal extraction;

- (iii) De-noising of rPPG signals.

2. Materials and Methods

2.1. Imaging Pipeline Overview

- (i) Data acquisition and image pre-processing: Adjustments made to the system’s parameters before and during the measurements;

- (ii) ROI selection and tracking: Selection of beneficial facial regions to track throughout the recording to extract rPPG signals;

- (iii) rPPG signal extraction: Combining the measured raw data from which the rPPG signals would be extracted;

- (iv) De-noising and post-processing: Refinement of the extracted rPPG signals to obtain a predominantly sparse signal for assessing HR;

- (v) Heart-rate estimation: Final step of calculating the HR from the extracted rPPG signals.
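As an illustration of step (v) only, the following minimal sketch estimates HR as the dominant spectral peak of an rPPG signal within a plausible heart-rate band. This is a common approach, not necessarily the estimator used in this work; the function name `estimate_hr_bpm`, the band limits (0.7 to 4.0 Hz), the frequency step, and the synthetic 30 fps test signal are all assumptions made for the example.

```python
import math


def estimate_hr_bpm(signal, fs, lo_hz=0.7, hi_hz=4.0, step_hz=0.01):
    """Return the frequency (in bpm) with the largest spectral power in [lo_hz, hi_hz].

    signal: list of rPPG samples (hypothetical, already de-noised)
    fs:     sampling rate in Hz (camera frame rate)
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]  # remove the DC component

    best_f, best_power = lo_hz, -1.0
    steps = int(round((hi_hz - lo_hz) / step_hz))
    for k in range(steps + 1):
        f = lo_hz + k * step_hz
        # Direct evaluation of the Fourier transform at frequency f
        re = sum(x * math.cos(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * f * i / fs) for i, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_f, best_power = f, power
    return 60.0 * best_f  # Hz -> beats per minute


# Synthetic example: a 1.2 Hz (72 bpm) pulse sampled at 30 fps for 10 s
fs = 30.0
sig = [math.sin(2 * math.pi * 1.2 * i / fs) for i in range(300)]
hr = estimate_hr_bpm(sig, fs)
print(f"Estimated HR: {hr:.1f} bpm")
```

Restricting the search to a physiological band keeps low-frequency drift and high-frequency noise from being mistaken for the pulse; the exact limits would need to be chosen for the target population.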

2.2. Data Acquisition and Image Pre-Processing

2.2.1. Face-Aware Adaptive Exposure Time Adjustment

Algorithm 1. Automatic exposure time adjustment with respect to a person’s face

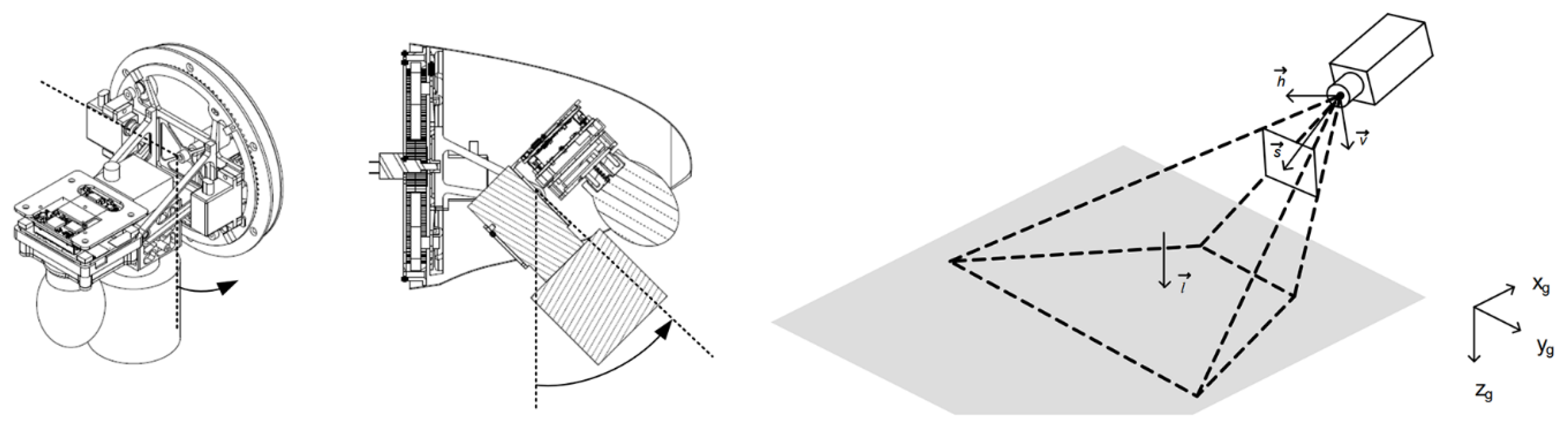

2.2.2. Active Image Stabilization

2.3. ROI Selection and Tracking

2.4. De-Noising and Signal Post-Processing

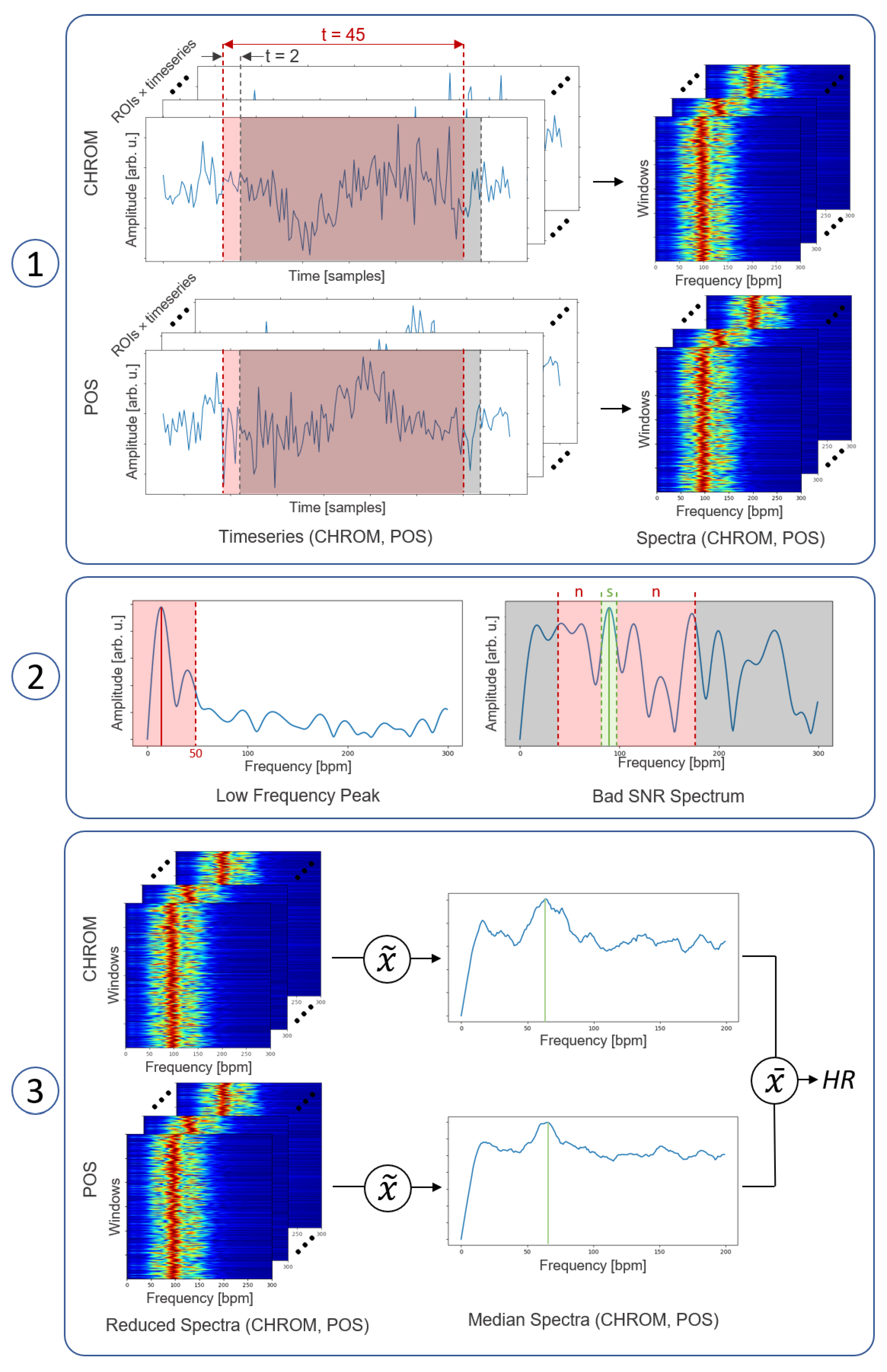

2.5. Heart-Rate Estimation

2.6. Experimental Evaluation

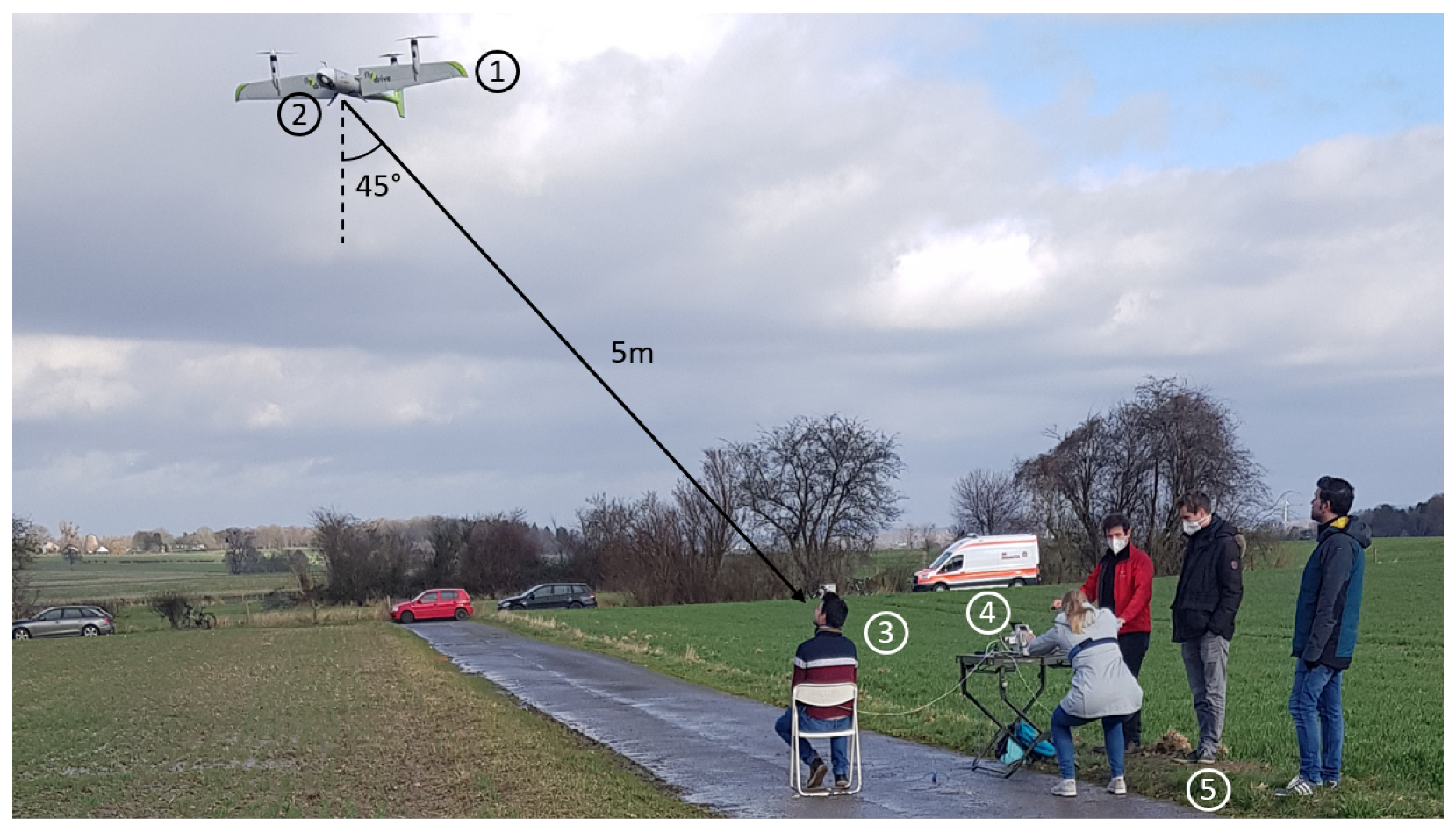

2.6.1. Flight System and Camera Setup

2.6.2. Data Acquisition

2.6.3. Experimental Setup and Study Protocol

3. Results

3.1. Recording Conditions

3.2. Target Acquisition and Adaptive Exposure Time Adjustment

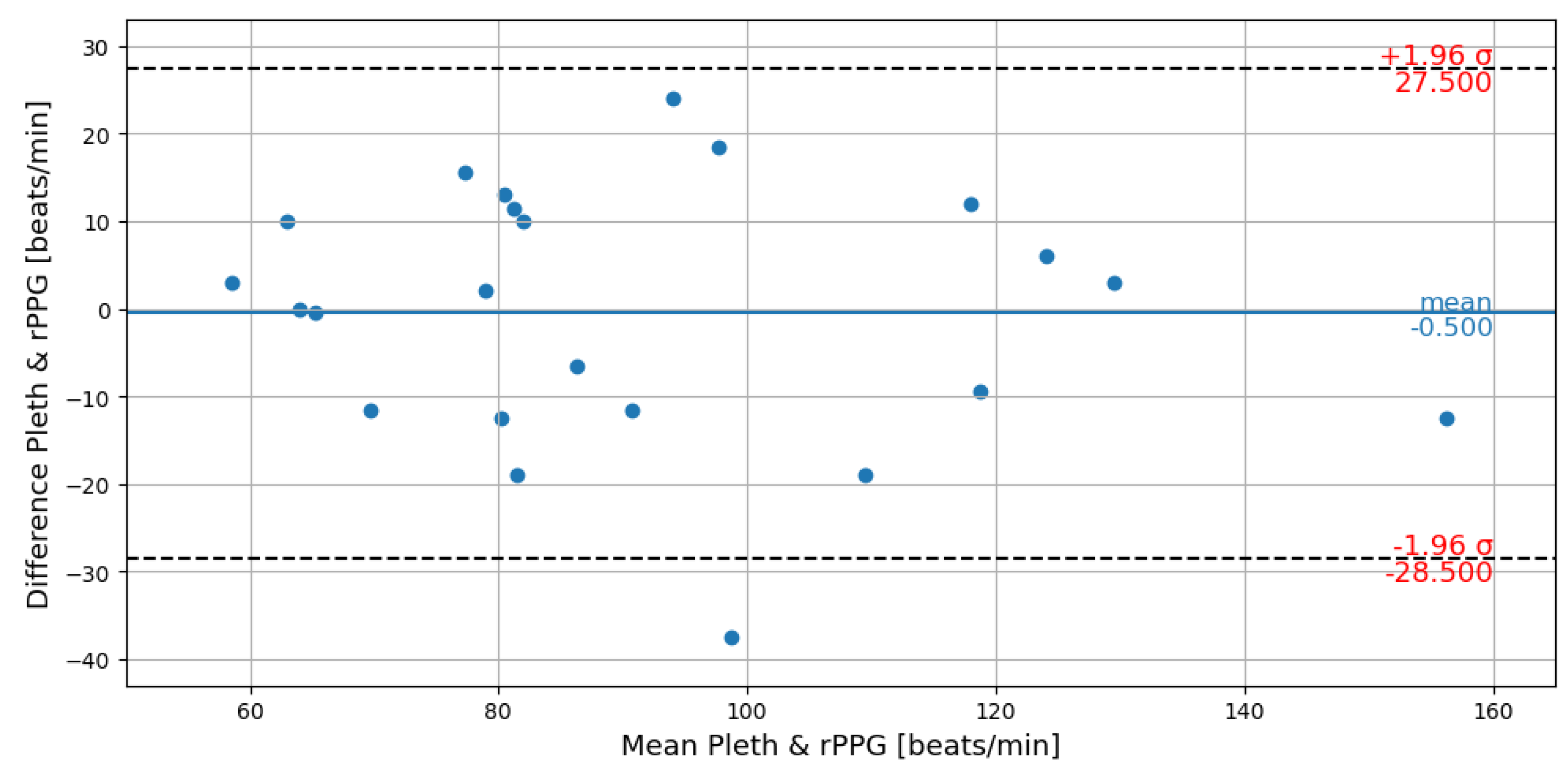

3.3. Heart Rate Assessment

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| UAS | Unmanned Aerial System |

| MCI | Mass-Casualty Incident |

| HR | Heart Rate |

| rPPG | Remote Photoplethysmography |

| ROI | Region-of-Interest |

| GLM | Generalized Linear Modeling |

| SNR | Signal-to-Noise Ratio |

| RMSE | Root-Mean-Squared Error |

| bpm | beats-per-minute |


| landmarks (4–6) (x,y) | center-of-mass (x,y) | number of pixels |

| landmarks (4–6) (x,y) | center-of-mass (x,y) | height above ground |

| longitude | latitude | velocity (u,v,w) |

| nostrils (center, right) | eyes (center, right) | (x,y,z) |

| (x,y,z) | (x,y,z) | (x,y,z) |

| Subject | Phase I: Valid Frames | Phase I: Mean Exposure | Phase I: Observation | Phase II: Valid Frames | Phase II: Mean Exposure | Phase II: Observation |
|---|---|---|---|---|---|---|
| S01 | 423 | 208 | | 61 | 215 | |
| S02 | 306 | 185 | | - | - | face out of area |
| S03 | 212 | 253 | | 350 | 252 | |
| S04 | - | - | face out of area | - | - | face out of area |
| S05 | - | - | over exposure | 163 | 229 | |
| S06 | - | - | over exposure | - | - | over exposure |
| S07 | 219 | 212 | | 260 | 214 | |
| S08 | 327 | 244 | | - | - | face out of area |
| S09 | 49 | 230 | | 390 | 114 | |
| S10 | 390 | 147 | | 390 | 230 | |
| S11 | 213 | 230 | | 394 | 230 | |
| S12 | 218 | 180 | | 280 | 232 | |
| S13 | 288 | 163 | | 208 | 252 | |
| S14 | - | - | over exposure | - | - | face out of area |
| S15 | 279 | 253 | | 220 | 252 | |
| S16 | 170 | 225 | | 68 | 253 | |
| S17 | 365 | 228 | | - | - | face out of area |
| S18 | - | - | over exposure | - | - | over exposure |

| | Outside Exposure Range: Number of Recordings | Outside Exposure Range: RMSE (bpm) | Inside Exposure Range: Number of Recordings | Inside Exposure Range: RMSE (bpm) | All Exposure Ranges: Number of Recordings | All Exposure Ranges: RMSE (bpm) |
|---|---|---|---|---|---|---|
| High Motion Recordings | 5 | 19.3 | 6 | 12.7 | 11 | 16.0 |
| Low Motion Recordings | 5 | 13.9 | 7 | 11.4 | 12 | 12.5 |
| High and Low Motion Recordings | 10 | 16.8 | 13 | 12.0 | 23 | 14.3 |
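The combined RMSE values in the table above can be cross-checked from the subgroup values. The sketch below assumes that every recording contributes with equal weight to its group's mean squared error, so that subgroup RMSEs pool as $\sqrt{\sum_i n_i\,\mathrm{RMSE}_i^2 / \sum_i n_i}$. The helper name `pooled_rmse` is hypothetical; the input numbers are taken from the table.

```python
import math


def pooled_rmse(groups):
    """Pool (n_recordings, rmse) pairs into one RMSE.

    Assumes each recording carries equal weight in the mean squared error.
    """
    total_n = sum(n for n, _ in groups)
    total_sq = sum(n * r * r for n, r in groups)
    return math.sqrt(total_sq / total_n)


# Rows: combine outside-range and inside-range recordings
high_all = pooled_rmse([(5, 19.3), (6, 12.7)])
low_all = pooled_rmse([(5, 13.9), (7, 11.4)])

# Columns: combine high-motion and low-motion recordings
outside = pooled_rmse([(5, 19.3), (5, 13.9)])
inside = pooled_rmse([(6, 12.7), (7, 11.4)])

# Overall value from the two exposure-range column totals
overall = pooled_rmse([(10, 16.8), (13, 12.0)])

for name, value in [("high", high_all), ("low", low_all),
                    ("outside", outside), ("inside", inside),
                    ("overall", overall)]:
    print(f"{name}: {value:.1f} bpm")
```

Under this assumption the pooled values agree with the tabulated 16.0, 12.5, 16.8, 12.0, and 14.3 bpm to within rounding, which suggests the table is internally consistent.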

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mösch, L.; Barz, I.; Müller, A.; Pereira, C.B.; Moormann, D.; Czaplik, M.; Follmann, A. For Heart Rate Assessments from Drone Footage in Disaster Scenarios. Bioengineering 2023, 10, 336. https://doi.org/10.3390/bioengineering10030336
