1. Introduction

The human spine exhibits a characteristic sagittal plane alignment known as lordosis in both the lumbar and cervical regions. This alignment is essential for maintaining an upright posture and facilitating a gait that enables the unrestricted use of both arms [1,2]. It is well established that maintaining proper sagittal alignment of the spine is a critical factor in the management of pain and disability in patients with adult spinal deformities [3,4]. Sagittal angular parameters such as pelvic incidence minus lumbar lordosis and T1 slope minus cervical lordosis have been reported as the primary parameters representing thoracolumbar and cervical deformities, respectively [5,6]. However, most spinal sagittal parameters require manual measurement by spine surgeons, which demands considerable time and effort. Moreover, this practice limits the scale of the collected data, which is then insufficient for comprehensively analyzing the characteristics of spinal alignment.

While originally introduced in the 1980s, artificial intelligence is currently growing rapidly with advances in computational performance [7]. Deep learning, a subfield of machine learning, can process a large amount of information by generating optimized weights while learning in a manner similar to a human, and is well suited to performing repetitive tasks in a short time [8]. Convolutional neural networks (CNNs) are widely used to extract features from image data, and a few studies have recently reported their application in spinal sagittal analysis. For instance, Aubert et al. [9] introduced a technique for the automated reconstruction of three-dimensional spinal structures, in which a CNN is used to fit a statistical model of the spine to medical imaging data. In a similar context, Weng et al. [10] introduced a deep learning method that uses regression to estimate the sagittal vertical axis, a key parameter characterizing sagittal alignment. Extending the scope to spinal measurements that include cervical lordosis, thoracic kyphosis, pelvic incidence minus lumbar lordosis, sagittal vertical axis, and pelvic tilt, Cho et al. [11] used the U-net architecture to segment anatomical landmarks in radiographic images. Additionally, Wu et al. [12] proposed a multiview correlation network architecture for measuring the Cobb angle, relying primarily on the detection of anatomical landmarks. However, their approach did not specifically address parameters related to sagittal alignment. To automate the measurement of spinal alignment parameters through machine learning, Chae et al. [13] and Nguyen et al. [14,15] developed an algorithm based on decentralized convolutional neural networks. Notably, their algorithm demonstrated a significant correlation with manual measurements made by experienced spine surgeons [16].

To facilitate a more intuitive interpretation of spinal alignment, incidence angles of the inflection points (IAIPs) have been introduced as parameters that depict the geometric relationships between the pelvis and the spine [17]. The inflection points are defined as L1, T1, and C2, and give rise to the corresponding incidence angles: L1 incidence (L1I), T1 incidence (T1I), and C2 incidence (C2I). Each angle represents the relationship between the extension of the pelvic tilt vector line and the line perpendicular to the upper endplate of the respective vertebra. The IAIPs represent the cumulative geometric summation extending from the pelvis to each vertebra: each is computed as the sum of the slope angle at that vertebral level and the pelvic tilt, analogous to the calculation of the pelvic incidence [18,19,20,21,22,23,24]. Therefore, in this study, the IAIPs were measured using CNN-based machine learning, and the accuracy of the thoracolumbar angular parameters derived through geometric relationships was evaluated. The hypothesis of this study was that machine learning-based measurement of global sagittal spinal parameters and estimates derived using spinal geometric equations would show high accuracy.
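The summation described above can be written compactly. The following is a sketch that uses pelvic tilt (PT), sacral slope (SS), pelvic incidence (PI), and the slope abbreviations L1S, T1S, and C2S that appear later in the text; it restates the verbal definition and is not a reproduction of the authors' own table of equations.

```latex
\begin{align}
\mathrm{PI}  &= \mathrm{PT} + \mathrm{SS},  \\
\mathrm{L1I} &= \mathrm{PT} + \mathrm{L1S}, \\
\mathrm{T1I} &= \mathrm{PT} + \mathrm{T1S}, \\
\mathrm{C2I} &= \mathrm{PT} + \mathrm{C2S}.
\end{align}
```

Written this way, each incidence angle has the same form as the pelvic incidence, with the slope of the corresponding endplate taking the place of the sacral slope.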

2. Materials and Methods

2.1. Patient Enrollment

We analyzed the whole-spine lateral radiographs of 595 patients who visited a single institution between March 2019 and August 2021. The inclusion criteria were age over 20 years, mild pain with a visual analog scale score of 4 or less, and no restriction on walking for >30 min. The exclusion criteria were previous instrumentation surgery of the spine, hip and knee replacement surgery, a history of vertebral compression fractures, and pregnancy. All patients were imaged using a standardized protocol with 36-inch full-length film. After images with poor visualization of the spinal endplates or both femoral heads were excluded, only high-quality images suitable for careful contrast and brightness examination were retained for analysis. This study received approval from our institutional review board (2020-05-032-008).

2.2. Image Preparation and Measurement

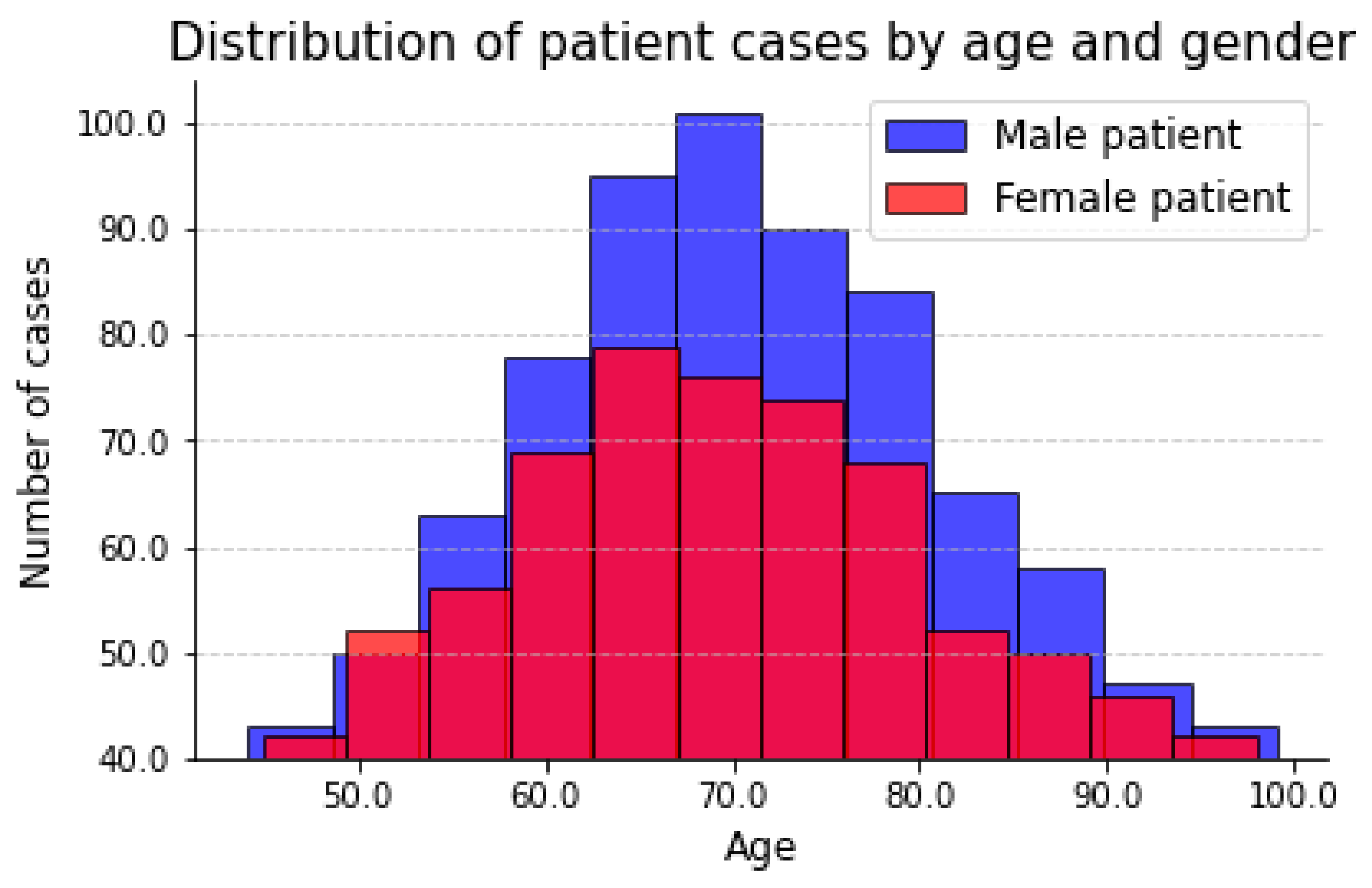

For parameter measurement, 563 of the 595 whole-spine standing lateral radiographs were analyzed. The distribution of patient images by age and gender is shown in Figure 1. The radiographs were obtained with the patient standing and facing forward, the hands placed naturally on both clavicles without holding the supporting bar, and the hip and knee joints extended as much as possible. The radiographs had an average size of 3240 × 1080 pixels. The ratio of the training and validation sets was set at approximately 9:1; training was performed with images from 500 patients, and the measured values were validated with images from 63 patients.

3. Machine Learning-Based Analysis Program and Training Process

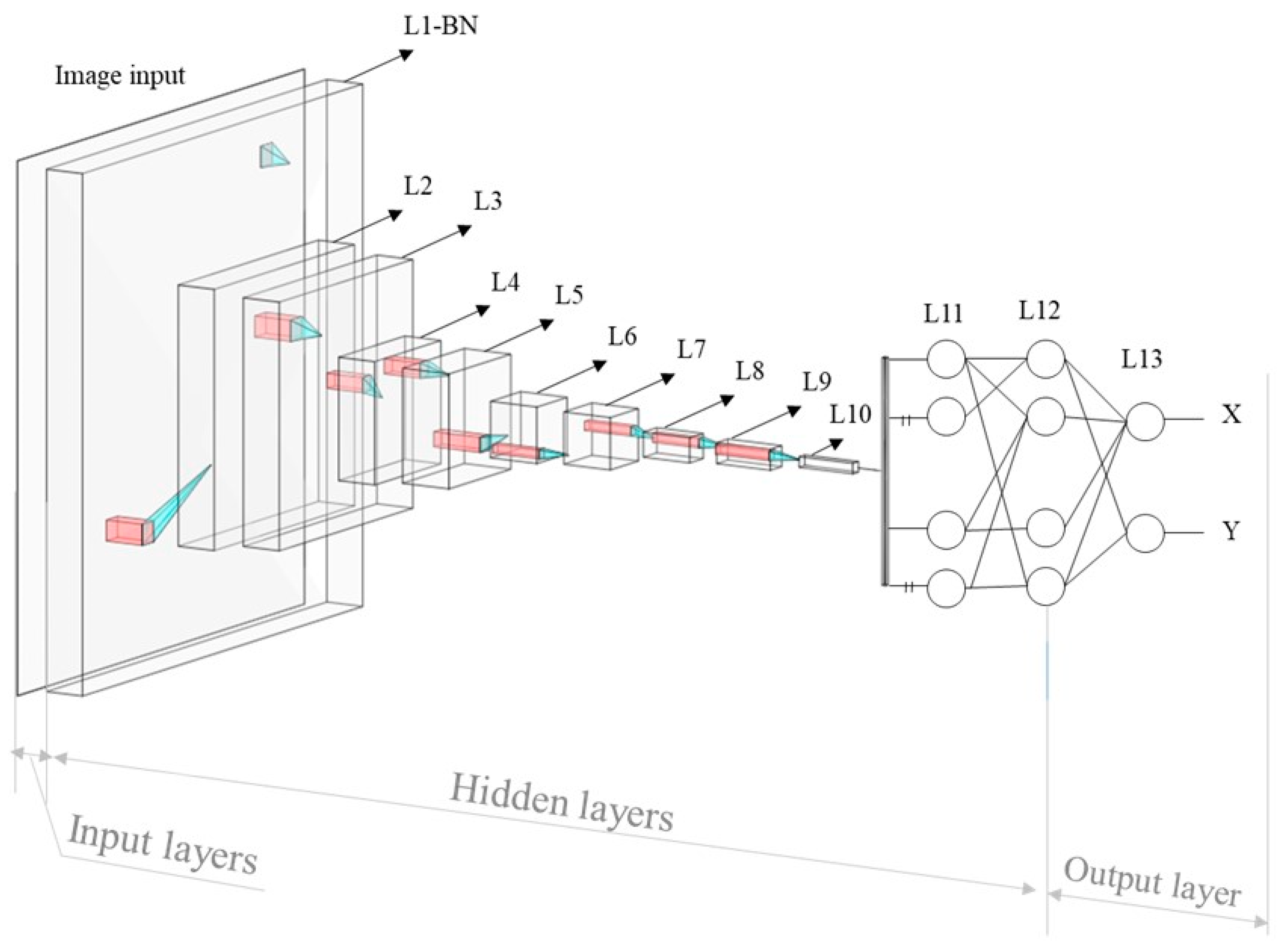

The inception of the CNN traces back to the work of Fukushima [25] in 1980, with the introduction of the ‘neocognitron’. This architecture is a specialized neural network designed to discern intricate features within images and to establish the relationships between these features and the desired outcomes. The network comprises input, output, and multiple intermediate layers, and the X-ray images are first passed to the input layer. The intermediate layers extract features from the input images, which are then used to assess the landmark positions, a key component of the anticipated outcomes. This function is constructed through a training process, during which the weight factors, which represent the relationship between the features and the outcome, are optimized by comparing the predicted and ground truth values. The weight factors are adjusted according to the difference between the output of the deep learning model, which contains the j-th calculated position value for the input image, and the corresponding label, which is created from the actual position of the required points in the input image. This difference is calculated using the mean absolute error as the loss function, as expressed in Equation (1), over the n labels in the dataset. The loss value L increases as the difference between the output and the label increases.
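As a reading aid, the mean absolute error described above can be written in its standard form. The symbols $\hat{y}_j$ (model output) and $y_j$ (label) are assumed notation chosen for this sketch and may differ from the symbols in the authors' Equation (1).

```latex
L = \frac{1}{n} \sum_{j=1}^{n} \left| \hat{y}_j - y_j \right|
```

With this form, $L$ is zero only when every predicted position matches its label, and it grows linearly with the size of each deviation.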

The weight factor between nodes p and q located in neighboring layers is optimized based on the loss function, proceeding in the direction from the output layer to the input layer. After each training step, the weight factor is updated by an increment derived from Equation (2), in which the learning rate, α, determines the learning speed of the model [26,27]. Equation (2) is expressed in terms of the weighted sum of the input data of a node and the output signal of that node, as shown in Figure 2.
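The update step can be illustrated with a minimal sketch. It assumes the standard gradient-descent rule (new weight = old weight minus the learning rate times the gradient of the loss) and a single linear node `y_hat = w * x`; the function names and the toy data are hypothetical and are not taken from the authors' implementation.

```python
def mae(preds, labels):
    """Mean absolute error over paired predictions and labels."""
    return sum(abs(p - t) for p, t in zip(preds, labels)) / len(labels)


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def update_weight(w, xs, labels, alpha):
    """One gradient-descent step for a single linear node y_hat = w * x.

    Assumption: the loss is the mean absolute error, whose derivative with
    respect to w is the mean of sign(y_hat - y) * x.
    """
    grad = sum(sign(w * x - t) * x for x, t in zip(xs, labels)) / len(xs)
    return w - alpha * grad


# Toy data generated by the relation y = 2 * x (hypothetical values).
xs = [1.0, 2.0, 3.0]
labels = [2.0, 4.0, 6.0]

w = 0.0
losses = []
for _ in range(50):
    losses.append(mae([w * x for x in xs], labels))
    w = update_weight(w, xs, labels, alpha=0.05)
```

Because the gradient of the mean absolute error has constant magnitude, a fixed learning rate makes the weight settle into a small band around the optimum rather than converge exactly, which is one reason the learning rate is described as controlling the learning speed.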

The accurate detection of landmarks is of paramount importance, as it directly influences the precision of the angle measurements. We therefore employed a decentralized CNN model [13,14,15,16], previously established as a robust landmark detection technique for medical images. This method narrows the region of interest (ROI) at each order, which reduces the influence of extraneous features on the results and increases the diversity of the training dataset. The proposed decentralized CNN model, depicted in Figure 3, comprises three orders. The 1st order provides a rough detection of the regions encompassing the cervical, lumbar, and hip bones. The 2nd order refines this detection by locating specific vertebrae, and the 3rd order precisely localizes the landmarks on each identified vertebra. This multitiered approach was devised to handle the inherent complexity of landmark detection. The required angular parameters can then be measured from the detected landmarks. Each angle is formed by two vectors, each of which is defined by a pair of landmarks. The angle was therefore calculated from these two vectors using Equation (3).
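A minimal sketch of an angle computed from two landmark pairs is given below. It assumes the usual dot-product formula for the angle between two vectors; the function names are hypothetical, and the result is unsigned, so the sign conventions used for the clinical parameters would need to be applied separately.

```python
import math


def vector(p_start, p_end):
    """Vector from one landmark to another; landmarks are (x, y) tuples."""
    return (p_end[0] - p_start[0], p_end[1] - p_start[1])


def angle_between(v1, v2):
    """Unsigned angle in degrees between two 2D vectors via the dot product."""
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("a landmark pair defines a zero-length vector")
    # Clamp to [-1, 1] to guard against rounding just outside acos's domain.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def angle_from_landmarks(a1, a2, b1, b2):
    """Angle between the vector a1->a2 and the vector b1->b2."""
    return angle_between(vector(a1, a2), vector(b1, b2))


# Hypothetical landmarks: a horizontal line and a line rising at 45 degrees.
example = angle_from_landmarks((0, 0), (1, 0), (0, 0), (1, 1))
```

Clamping the cosine is a small defensive choice: nearly parallel vectors can yield a value marginally above 1 after floating-point division, which would otherwise raise a domain error.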

4. Measurement of Parameters after the Training Process

An algorithm was trained on images from 500 patients to recognize 11 points: the centers of both femoral heads, the endplates of the sacrum, L1, T1, and C2, and the center of the sacrum. Using these points, the pelvic parameters of pelvic incidence (PI), pelvic tilt (PT), and sacral slope (SS), along with the three incidence angles of L1, T1, and C2, were measured using an in-house program. The six test parameters of lumbar lordosis (LL), thoracic kyphosis (TK), C2–C7 lordosis (C2–7 L), L1S, T1S, and C2S were calculated from equations based on the concept of IAIPs. Table 1 and Figure 4 present the definitions and calculation equations for the sagittal parameters.

All parameters were defined as negative for lordosis and positive for kyphosis to ensure the uniformity of measurements. The incidence angle was defined as positive when the perpendicular line of the corresponding endplate was located on the right side compared with the PT line, similar to the pelvic incidence, and negative for the left side. The slope angle was defined as positive for the right upside open angle, such as the sacral slope, and negative for the left upside open angle.
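The derivation of the six test parameters can be sketched as follows. The slope formulas follow from the definition of an incidence angle as slope plus pelvic tilt. The regional-angle formulas (differences between adjacent incidence angles) are an assumption of this sketch based on the cumulative summation described earlier; Table 1 should be consulted for the authors' exact definitions. The function name and the input values are hypothetical.

```python
def derive_test_parameters(pi, pt, l1i, t1i, c2i):
    """Derive six sagittal parameters (degrees) from PI, PT, and the IAIPs.

    Sign convention follows the text: lordosis negative, kyphosis positive.
    """
    return {
        # Slope = incidence - pelvic tilt (from incidence = slope + PT).
        "L1S": l1i - pt,
        "T1S": t1i - pt,
        "C2S": c2i - pt,
        # Assumed: each regional angle is the difference between the
        # incidence angles at its upper and lower limits.
        "LL": l1i - pi,
        "TK": t1i - l1i,
        "C2-7L": c2i - t1i,
    }


# Hypothetical measurements in degrees, for illustration only.
params = derive_test_parameters(pi=50.0, pt=12.0, l1i=-8.0, t1i=37.0, c2i=27.0)
```

With these example inputs the lumbar and cervical angles come out negative and the thoracic angle positive, which matches the stated convention of negative lordosis and positive kyphosis.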

5. Validation Process

In addition to the training set of 500 individuals, whole-spine standing radiographs of 63 individuals who had not been included in the training set were used for the validation process. Each spinal sagittal parameter was manually measured twice, 2 weeks apart, by two spine surgeons, and the mean values were used as the reference standard. To assess the accuracy of the parameters measured by machine learning, the success rate was determined and agreement was examined using Bland–Altman plots. The success rate was defined as the proportion of cases in which the absolute error of the CNN measurement relative to the reference standard fell below a predefined error threshold.
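The success-rate definition can be sketched in a few lines. The function name and the sample values are hypothetical; the strict inequality reflects the phrase "fell below" the threshold.

```python
def success_rate(predicted, reference, threshold_deg):
    """Proportion of cases whose absolute error is below the threshold."""
    if len(predicted) != len(reference) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(
        1 for p, r in zip(predicted, reference) if abs(p - r) < threshold_deg
    )
    return hits / len(predicted)


# Hypothetical CNN and reference values in degrees; errors are 1, 5, 1, 0.5.
rate = success_rate(
    predicted=[50.0, 42.0, 31.0, 60.0],
    reference=[51.0, 47.0, 30.0, 60.5],
    threshold_deg=3.5,
)
```

Evaluating this function over a range of thresholds gives a curve of success rate against error threshold for each parameter.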

To evaluate the accuracy of the proposed equations, the concordance between the directly measured values of the six test parameters and the values calculated from the equations was analyzed. Concordance was assessed using the mean and standard deviation (SD) of the error, the Pearson correlation coefficient, and the coefficient of determination. Interobserver reliability and intraobserver reproducibility were analyzed using intraclass correlation coefficients (ICCs).
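The concordance statistics can be sketched as below, using only the Python standard library. Two choices are assumptions of this sketch rather than details stated in the text: the error is taken as the absolute difference, and the coefficient of determination is taken as the square of the Pearson coefficient, which holds for a simple linear fit. ICCs are not covered here.

```python
import math
import statistics


def concordance(measured, calculated):
    """Mean and SD of the absolute error, Pearson r, and r squared."""
    if len(measured) != len(calculated) or len(measured) < 2:
        raise ValueError("need two equal-length series with at least 2 values")
    abs_errors = [abs(c - m) for m, c in zip(measured, calculated)]
    mx = statistics.mean(measured)
    my = statistics.mean(calculated)
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, calculated))
    sxx = sum((x - mx) ** 2 for x in measured)
    syy = sum((y - my) ** 2 for y in calculated)
    r = sxy / math.sqrt(sxx * syy)
    return {
        "mean_abs_error": statistics.mean(abs_errors),
        "sd_abs_error": statistics.stdev(abs_errors),  # sample SD (n - 1)
        "pearson_r": r,
        "r_squared": r * r,
    }


# Hypothetical values in degrees, for illustration only.
stats = concordance(
    measured=[10.0, 20.0, 30.0, 40.0],
    calculated=[11.0, 19.0, 31.0, 39.0],
)
```

Reporting the error alongside the correlation matters because two series can be almost perfectly correlated while still differing by a constant offset.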

6. Results

The descriptive data of the CNN and standard reference values for the 63 validation sets are listed in Table 2. The success rates of the parameters were high for PI and C2I, and low for SS and L1I (Figure 5).

The proposed method measured PI, PT, T1I, C2I, LL, C2–7 L, L1S, T1S, and C2S efficiently, achieving a detection rate of 80% at an error threshold of 3.5° for these angles. In contrast, SS and L1I reached a detection rate of 80% only at larger error thresholds of 7.5° and 4.5°, respectively.

The test results of our method are shown as Bland–Altman (B-A) plots in Figure 6. These plots include horizontal lines indicating the mean difference (red solid line) and the mean difference ± 1.96 standard deviations (blue dashed lines). The entire measurement process required less than 1 s to complete.
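The quantities drawn as horizontal lines in a Bland–Altman plot can be computed as in the sketch below. The function name and the sample values are hypothetical, and the use of the sample standard deviation is an assumption.

```python
import statistics


def bland_altman(method_a, method_b):
    """Return the points and reference lines of a Bland-Altman plot."""
    if len(method_a) != len(method_b) or len(method_a) < 2:
        raise ValueError("need two equal-length series with at least 2 values")
    diffs = [a - b for a, b in zip(method_a, method_b)]
    means = [(a + b) / 2 for a, b in zip(method_a, method_b)]
    bias = statistics.mean(diffs)   # mean difference (solid line)
    sd = statistics.stdev(diffs)    # assumed: sample SD (n - 1 denominator)
    return {
        "means": means,             # x-axis: mean of the two methods
        "diffs": diffs,             # y-axis: difference between methods
        "bias": bias,
        "lower_limit": bias - 1.96 * sd,
        "upper_limit": bias + 1.96 * sd,
    }


# Hypothetical CNN and manual measurements in degrees.
ba = bland_altman([10.0, 12.0, 14.0], [9.0, 12.0, 15.0])
```

Points are plotted as `means` against `diffs`; a bias near zero with narrow limits indicates that the two methods can be used interchangeably within that margin.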

Table 3 and Table 4 present the mean and standard error of the absolute differences between the values computed using the proposed equations and the corresponding standard reference values obtained via the CNN method for the six test parameters. The mean absolute differences between the calculated values and those directly measured by the CNN were 0.045 in L1S, T1S, and C2S, and 0.006 in LL, TK, and C2–7 L, with a coefficient of determination of 1.0 (p < 0.01). The mean absolute difference between the values calculated by the CNN and the standard reference was 1.8, with a coefficient of determination of 0.96 (p < 0.01). All test parameters showed ICC values above 0.9 with statistical significance (Table 5, p < 0.01).

7. Discussion

This study conducted a machine learning-based geometric analysis of global spinal alignment. The algorithms were able to accurately detect 11 key anatomical landmarks, including the centers of the femoral heads, the center and endpoints of the superior sacral endplate, the superior endplates of L1 and T1, and the inferior endplate of C2. CNNs are well suited to image learning, and are being actively studied in the field of medical imaging. In an early study on the analysis of spinal sagittal parameters using machine learning, Zhang et al. [28] automatically measured the Cobb angle in patients with scoliosis. When 235 of 340 plain radiographs were used for training and 105 were used for testing, the difference between the artificial neural network and manual measurement by the spinal surgeon was more than 5°. Similarly, Galbusera et al. [29] reported the results of machine learning for measuring thoracic kyphosis of T4–T12, lumbar lordosis, Cobb angle, pelvic incidence, sacral slope, and pelvic tilt based on a fully convolutional neural network. After training on data from 443 individuals and evaluation on 50 individuals, all predicted parameters correlated strongly with the values assessed by experienced spine surgeons. The standard error of the estimated parameters varied from 2.7° for the pelvic tilt angle to 11.5° for the lumbar spine.

Recently, Yeh et al. [30] also published a convolutional neural network model that detected 45 anatomical landmarks and measured major sagittal alignment parameters using sagittal radiographs. The accuracy was improved using 2200 evaluation datasets and was related to the number of detection points of the anatomical indicators. That is, the accuracy of the cervical and lumbar parameters, which are composed of two anatomical indicators, was high, whereas the accuracy of the thoracic and pelvic parameters, which are composed of four indicators that are difficult to distinguish from the structures surrounding the thoracic vertebrae, was relatively low. The performance of the convolution model was highest in the cervical vertebrae, with an error ranging from 1.75 to 2.64 mm, followed by the lumbar vertebrae, with an error ranging from 1.76 to 2.63 mm. The thoracic region showed a larger error, ranging from 2.21 to 3.07 mm; the error of the pelvic incidence was the highest, and the error in locating the center of the femoral head was 3.39 mm. Yeh et al. localized 45 anatomical landmarks; however, many of them were considered to have contributed to increasing the error. In addition, the localization error for the center of the femoral head was the greatest among all the anatomical landmarks, and its error distribution was the widest; therefore, the accuracy of the pelvic parameters was low in their study.

The decentralized CNN employed in this study distributes landmark detection across multiple orders, which enables high accuracy even with a limited number of training images, an important consideration in medical metrology. Building on the conventional CNN model structure for locating the horizontal and vertical positions of a landmark, the developed method uses multiple trained CNN models arranged in a deliberate order. With each successive order, the landmark positions are predicted with increasing precision, from rough to fine, which yields sufficiently accurate final positions on the vertebrae of interest and, in turn, accurate angle measurements. This advantage comes from narrowing the ROI at each order, which not only reduces the number of unrelated features that can affect the results, but also increases the diversity of the training dataset.
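The rough-to-precise procedure can be sketched as a cascade in which each order predicts a position inside the current ROI and the next order works on a smaller ROI centered on that prediction. Everything in this sketch is hypothetical: the `Roi` type, the halving of the ROI at each order, and the stand-in predictors, which return fixed positions where trained CNN models would be used.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple


@dataclass(frozen=True)
class Roi:
    """Axis-aligned region of interest in image pixel coordinates."""
    x0: float
    y0: float
    width: float
    height: float


# A predictor maps an ROI to a position inside it, normalized to [0, 1].
Predictor = Callable[[Roi], Tuple[float, float]]


def to_image_coords(roi: Roi, u: float, v: float) -> Tuple[float, float]:
    """Convert a normalized position inside the ROI to image coordinates."""
    return (roi.x0 + u * roi.width, roi.y0 + v * roi.height)


def narrow(roi: Roi, center: Tuple[float, float], scale: float) -> Roi:
    """Return a smaller ROI centered on the predicted position."""
    w, h = roi.width * scale, roi.height * scale
    return Roi(center[0] - w / 2, center[1] - h / 2, w, h)


def cascade(image: Roi, predictors: Sequence[Predictor],
            scale: float = 0.5) -> Tuple[float, float]:
    """Run the orders in sequence and return the final landmark position."""
    if not predictors:
        raise ValueError("at least one order is required")
    roi = image
    point = (0.0, 0.0)
    for predict in predictors:
        u, v = predict(roi)
        point = to_image_coords(roi, u, v)
        roi = narrow(roi, point, scale)  # next order sees a tighter region
    return point


# Stand-in predictors for the three orders: region, vertebra, landmark.
orders = [lambda r: (0.5, 0.5), lambda r: (0.5, 0.25), lambda r: (0.6, 0.5)]
landmark = cascade(Roi(0.0, 0.0, 1080.0, 3240.0), orders)
```

Since each later order only sees a cropped region, a fixed-size network input covers fewer anatomical structures at higher effective resolution, which is the mechanism the text credits for reducing unrelated features.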

In this study, six geometrical equations were proposed to efficiently measure the angular parameters from three pelvic parameters and the IAIPs. The six test parameters calculated in this way showed 99% accuracy relative to the values measured by the spine surgeons. For the manual measurement of a specific angular parameter, the observer must set two vectors and measure the Cobb angle between them. Therefore, 24 vectors must be obtained to measure 12 parameters, and measurement errors can occur during this process. In contrast, 12 angular parameters could be measured with high accuracy using only 11 key anatomical landmarks, machine learning, and the equations proposed in this study. In addition, lordosis of the cervical and lumbar spines should be defined as negative; however, in clinical settings, absolute values are often used, which causes confusion. Such mistakes can occur frequently in parameters whose ranges include zero, such as the L1 incidence, L1 slope, C2–7 lordosis, and C2 slope. If the orientation of the angular parameters is not clearly defined, large-scale data may be too heterogeneous for interpreting sagittal alignment. Disorganized data increase the entropy of the dataset, which can be a confounding factor in revealing the characteristics of spinal sagittal alignment. Using the orientational definition of the slope and incidence angles, we collected homogeneous data with high accuracy. The authors believe that the definitions proposed in this study will be effective for large-scale data collection on spinal sagittal parameters.

The ICC values, as observed in this study, demonstrated a high level of accuracy in the detection of anatomical points by the CNN when compared to direct measurements by human experts. However, it is notable that as the detection rate of anatomical landmarks located in regions overlapping with the rib cage and shoulder girdle diminished, the ICC values for parameters such as T1 slope and T1 incidence exhibited reduced accuracy. Consequently, we acknowledge the need for further algorithmic enhancements to improve generalizability. This could entail adjustments in image contrast or substituting the T1 slope with the measurement of C7 slope.

Additionally, it should be noted that the algorithm for assessing distance parameters could not be fully evaluated in this study, and ongoing efforts are dedicated to its refinement. Lastly, we recognize the potential limitation posed by the use of spine images collected solely from a single institution, which may restrict external validation. Therefore, there is a compelling need for future algorithmic enhancements based on the incorporation of multicenter image data to ensure broader applicability and robustness.

8. Conclusions

The CNN-based deep learning algorithm and the concept of IAIPs were able to accurately measure the spinal sagittal parameters. Based on the three pelvic parameters and three incidence angles used, six additional parameters were accurately estimated. The advantages of machine learning and the concept of IAIPs shown herein suggest their utility for large-scale data accumulation in sagittal spinal alignment.

Author Contributions

Conceptualization, T.P.N.; Methodology, T.P.N.; Software, T.P.N.; Validation, J.-H.K.; Data curation, S.-H.K.; Supervision, J.Y. and S.-H.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the “Regional Innovation Strategy (RIS)” through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (MOE) (2021RIS-001). This work was also supported by the Technology Innovation Program (20020499, development of the integrated manufacturing technology for 1.5 GPa sill side member in multi-purpose electric vehicles with enhancing the side impact crashworthiness of battery case) funded by the Ministry of Trade, Industry & Energy (MOTIE, Korea). Finally, this research was financially supported by the Ministry of Trade, Industry, and Energy (MOTIE), Korea (reference number 20022814) supervised by the Korea Institute for Advancement of Technology (KIAT).

Institutional Review Board Statement

This study received approval from the Institutional Review Board of Hanyang University College of Medicine (2020-05-032-008).

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study are not available for public sharing due to privacy and confidentiality considerations.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Le Huec, J.C.; Saddiki, R.; Franke, J.; Aunoble, S. Equilibrium of the human body and the gravity line: The basics. Eur. Spine J. 2011, 20 (Suppl. 5), 558–563.

2. Lee, J.K.; Hyun, S.J.; Kim, K.J. Reciprocal changes in the entire body following realignment surgery for adult spinal deformities. Asian Spine J. 2022, 16, 958–967.

3. Diebo, B.G.; Ferrero, E.; Lafage, R.; Challier, V.; Liabaud, B.; Liu, S.; Vital, J.-M.; Errico, T.J.; Schwab, F.J.; Lafage, V. Recruitment of compensatory mechanisms in sagittal spinal malalignment is age-and regional deformity-dependent: A full-standing axis analysis of key radiographic parameters. Spine 2015, 40, 642–649.

4. Miura, T.; Tominaga, R.; Sato, K.; Endo, T.; Iwabuchi, M.; Ito, T.; Shirado, O. Relationship between lower-limb pain intensity and dynamic lumbopelvic hip alignment in patients with degenerative lumbar spinal canal stenosis: A cross-sectional study. Asian Spine J. 2022, 16, 918–926.

5. Yilgor, C.; Sogunmez, N.; Yavuz, Y.; Abul, K.; Boissiére, L.; Haddad, S.; Obeid, I.; Kleinstück, F.; Pérez-Grueso, F.J.S.; Acaroğlu, E.; et al. Relative lumbar lordosis and lordosis distribution index: Individualized pelvic incidence-based proportional parameters that quantify lumbar lordosis more precisely than pelvic incidence minus lumbar lordosis. Neurosurg. Focus 2017, 43, E5.

6. Staub, B.N.; Lafage, R.; Kim, H.J.; Shaffrey, C.I.; Mundis, G.M.; Hostin, R.; Burton, D.; Lenke, L.; Gupta, M.C.; Ames, C.; et al. Cervical mismatch: Normative value of T1 slope minus cervical lordosis and its ability to predict ideal cervical lordosis. J. Neurosurg. Spine 2018, 30, 31–37.

7. Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and explainability of artificial intelligence in medicine. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2019, 9, e1312.

8. Wang, F.; Casalino, L.P.; Khullar, D. Deep Learning in Medicine-Promise, Progress, and Challenges. JAMA Intern. Med. 2019, 179, 293–294.

9. Aubert, B.; Vazquez, C.; Cresson, T.; Parent, S.; de Guise, J.A. Toward automated 3D spine reconstruction from biplanar radiographs using a CNN for statistical spine model fitting. IEEE Trans. Med. Imaging 2019, 38, 2796–2806.

10. Weng, C.H.; Wang, C.L.; Huang, Y.J.; Yeh, Y.C.; Fu, C.J.; Yeh, C.Y.; Tsai, T.-T. Artificial intelligence for automatic measurement of sagittal vertical axis using the ResUNet framework. J. Clin. Med. 2019, 8, 1826.

11. Cho, B.H.; Kaji, D.; Cheung, Z.B.; Ye, I.B.; Tang, R.; Ahn, A.; Carrillo, O.; Schwartz, J.T.; Valliani, A.A.; Oermann, E.K.; et al. Automated Measurement of Lumbar Lordosis on Radiographs Using Machine Learning and Computer Vision. Glob. Spine J. 2020, 10, 611–618.

12. Wu, H.; Bailey, C.; Rasoulinejad, P.; Li, S. Automated comprehensive Adolescent Idiopathic Scoliosis assessment using the MVC-net. Med. Image Anal. 2018, 48, 1–11.

13. Chae, D.S.; Nguyen, T.P.; Park, S.J.; Kang, K.Y.; Won, C.; Yoon, J. Decentralized convolutional neural network for evaluating spinal deformity with spinopelvic parameters. Comput. Methods Programs Biomed. 2020, 197, 105699.

14. Nguyen, T.P.; Chae, D.S.; Park, S.J.; Kang, K.; Yoon, J. Deep learning system for modeling classification and segmental motion measurement in diagnosis of lumbar spondylolisthesis. Biomed. Signal Process. Control 2021, 65, 102371.

15. Nguyen, T.P.; Chae, D.S.; Park, S.J.; Kang, K.Y.; Lee, W.S.; Yoon, J. Intelligent analysis of coronal alignment in lower limbs based on radiographic image with convolutional neural network. Comput. Biol. Med. 2020, 120, 103732.

16. Nguyen, T.P.; Jung, J.W.; Yoo, Y.J.; Choi, S.H.; Yoon, J. Intelligent Evaluation of Global Spinal Alignment Using a Decentralized CNN. J. Digit. Imaging 2022, 35, 213–225.

17. Choi, S.H.; Hwang, C.J.; Cho, J.H.; Lee, C.S.; Kang, C.N.; Jung, J.W.; Ahn, H.S.; Lee, D.-H. Influence of spinopelvic morphology on sagittal spinal alignment: Analysis of the incidence angle of inflection points. Eur. Spine J. 2020, 29, 831–839.

18. Choi, S.H.; Lee, D.H.; Hwang, C.J.; Son, S.M.; Woo, Y.; Goh, T.S.; Kang, S.W.; Lee, J.S. Effectiveness of the C2 incidence angle in evaluating global spinopelvic alignment in patients with mild degenerative spondylosis. World Neurosurg. 2019, 127, e826–e834.

19. Schwartz, J.T.B.; Cho, B.H.B.; Tang, P.; Schefflein, J.; Arvind, V.B.; Kim, J.S.; Doshi, A.H.; Cho, S.K. Deep learning automates measurement of spinopelvic parameters on lateral lumbar radiographs. Spine 2021, 46, E671–E678.

20. Cina, A.; Bassani, T.; Panico, M.; Luca, A.; Masharawi, Y.; Brayda-Bruno, M.; Galbusera, F. 2-step deep learning model for landmarks localization in spine radiographs. Sci. Rep. 2021, 11, 9482.

21. Grover, P.; Siebenwirth, J.; Caspari, C.; Drange, S.; Dreischarf, M.; Le Huec, J.C.; Putzier, M.; Franke, J. [Abstracts of the 15th German Spine Congress] Can artificial intelligence support or even replace physicians in measuring the sagittal balance? A validation study on preoperative and postoperative images of 170 patients. Eur. Spine J. 2020, 29, 2865–2866.

22. Tran, V.L.; Lin, H.-Y.; Liu, H.-W. MBNet: A multi-task deep neural network for semantic segmentation and lumbar vertebra inspection on X-ray images. In Proceedings of the 15th Asian Conference on Computer Vision (ACCV 2020), Kyoto, Japan, 30 November–4 December 2021; pp. 635–651.

23. Franke, J.; Grover, P.; Siebenwirth, J.; Caspari, C.; Dreischarf, M.; Putzier, M. [Abstracts of EUROSPINE 2021] Can artificial intelligence support or even replace physicians in measuring the sagittal balance? A validation study on preoperative and postoperative images of 170 patients. Brain Spine 2021, 1, 100062.

24. Zhou, S.; Yao, H.; Ma, C.; Chen, X.; Wang, W.; Ji, H.; He, L.; Luo, M.; Guo, Y. Artificial intelligence X-ray measurement technology of anatomical parameters related to lumbosacral stability. Eur. J. Radiol. 2022, 146, 110071.

25. Fukushima, K. Neocognitron: A self organizing neural network model for a mechanism of pattern recognition unaffected by shift in position. Biol. Cybern. 1980, 36, 193–202.

26. Madhavan, S.; Tripathy, R.K.; Pachori, R.B. Time–frequency domain deep convolutional neural network for the classification of focal and nonfocal EEG signals. IEEE Sens. J. 2020, 20, 3078–3086.

27. Arena, P.; Fortuna, L.; Occhipinti, L.; Xibilia, M.G. Neural networks for quaternion-valued function approximation. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS’94), London, UK, 30 May–2 June 1994; Volume 6, pp. 307–310.

28. Zhang, R.; Zheng, Y.; Mak, T.W.; Yu, R.; Wong, S.H.; Lau, J.Y.W.; Poon, C.C.Y. Automatic detection and classification of colorectal polyps by transferring low-level CNN features from nonmedical domains. IEEE J. Biomed. Health Inform. 2017, 21, 41–47.

29. Galbusera, F.; Niemeyer, F.; Wilke, H.J.; Bassani, T.; Casaroli, G.; Anania, C.; Costa, F.; Brayda-Bruno, M.; Sconfienza, L.M. Fully automated radiological analysis of spinal disorders and deformities: A deep-learning approach. Eur. Spine J. 2019, 28, 951–960.

30. Yeh, Y.C.; Weng, C.H.; Huang, Y.J.; Fu, C.J.; Tsai, T.T.; Yeh, C.Y. Deep-learning approach for automatic landmark detection and alignment analysis of whole-spine lateral radiographs. Sci. Rep. 2021, 11, 7618.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).