Impact of Hydrological Modellers’ Decisions and Attitude on the Performance of a Calibrated Conceptual Catchment Model: Results from a ‘Modelling Contest’

Abstract

1. Introduction

- (1)

- (2)

- (3)

- (1)

- Do different modellers achieve different model results by applying the same model to the same catchment? (Section 3.1)

- (2)

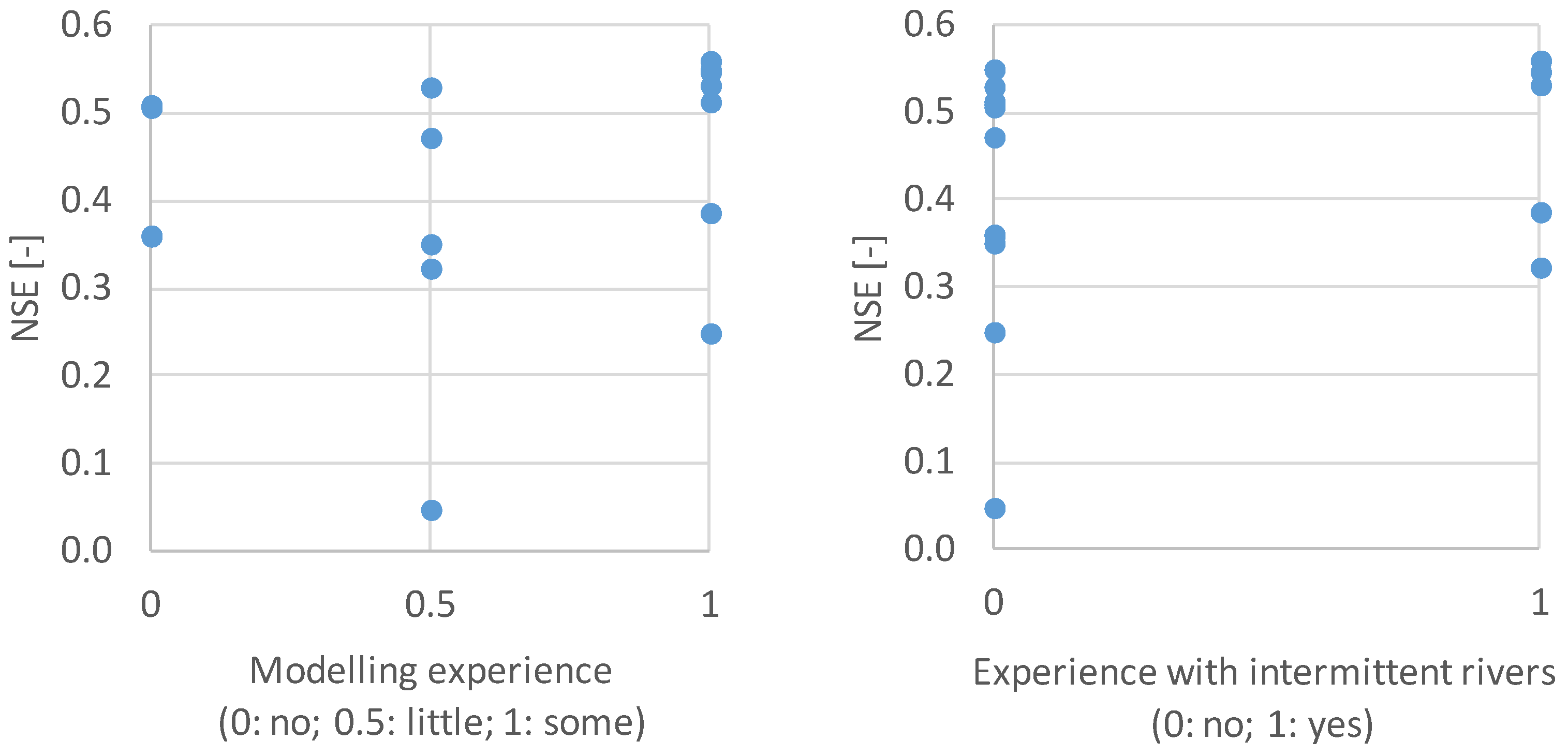

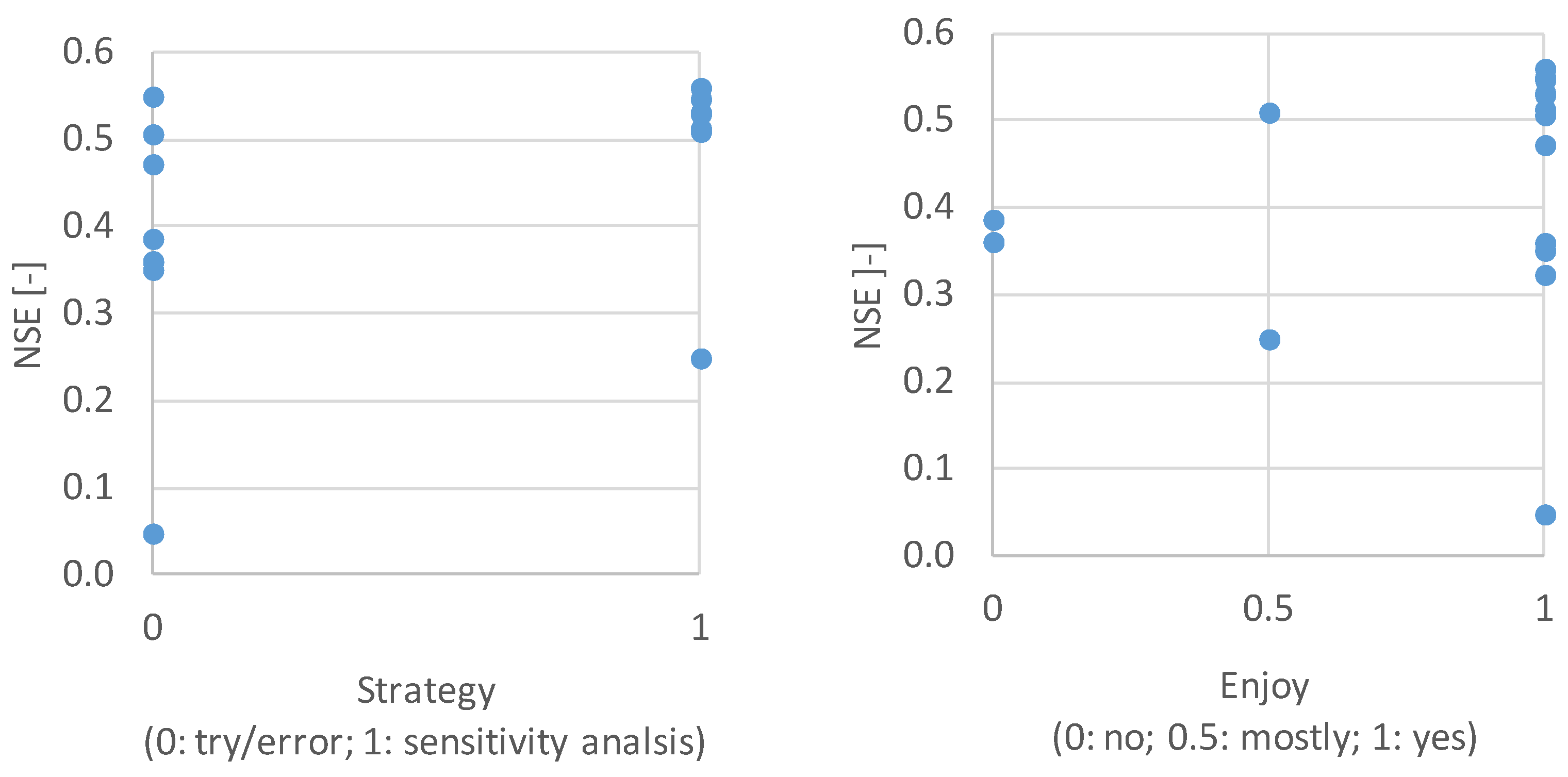

- Can the variability in the model results be explained by the experience, attitudes, and calibration strategy of the different modellers? (Section 3.3)

- (3)

- Do building modellers’ ensembles improve the model performance compared to the results achieved by individual modellers? (Section 3.4)

2. Materials and Methods

2.1. HBV Model

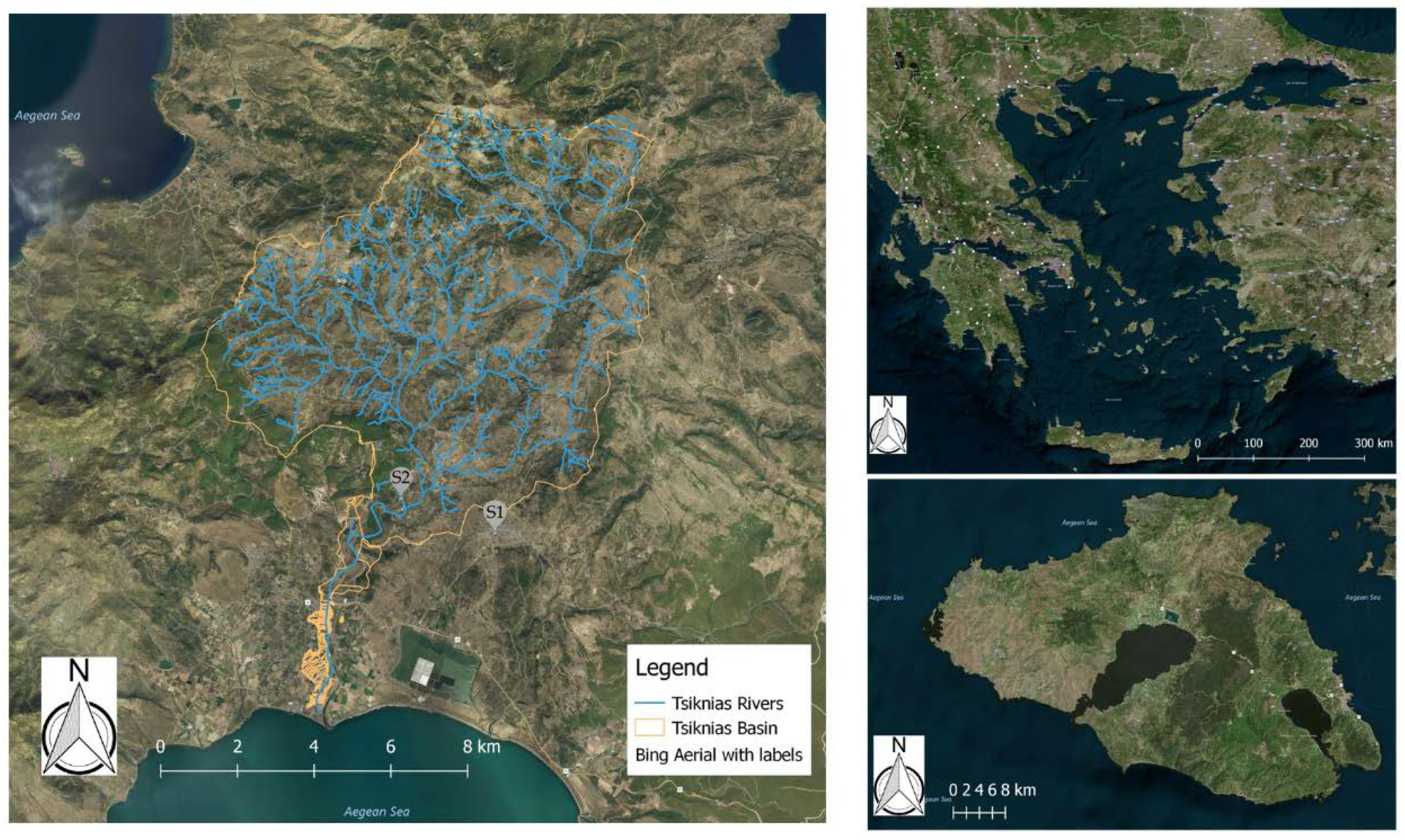

2.2. Tsiknias River Catchment

2.3. Design of the Modelling Contest

2.4. Design of the Questionnaire

- (1) Modelling experience was classified into three classes: no experience, little experience (one model, at most one year of experience), and some experience (more than one model, more than one year of experience, or both); and

- (2) The degree of enjoyment was taken directly from the survey (yes/no). However, some participants replied that they enjoyed the contest in general but added some criticism (“yes, but...”). For the evaluation, these answers were ranked as a third class (“mostly”) between “enjoyed” and “did not enjoy”.
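The two classification rules above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' evaluation code: the function names, the argument names (`n_models`, `years`), and the handling of borderline answers (for example, no model but a few months of experience) are assumptions made only for this example.

```python
def classify_experience(n_models: int, years: float) -> str:
    """Map questionnaire answers to the three experience classes.

    Assumption: a participant with no model and no years counts as
    "no experience"; anything up to one model and one year counts as
    "little experience"; everything else is "some experience".
    """
    if n_models == 0 and years == 0:
        return "no experience"
    if n_models <= 1 and years <= 1:
        return "little experience"
    return "some experience"


def classify_enjoyment(answer: str) -> str:
    """Map a free-text survey answer to enjoyed / mostly / did not enjoy."""
    text = answer.strip().lower()
    if text.startswith("yes, but"):
        # General approval combined with criticism forms the middle class.
        return "mostly"
    if text.startswith("yes"):
        return "enjoyed"
    if text.startswith("no"):
        return "did not enjoy"
    raise ValueError(f"unrecognised answer: {answer!r}")
```

Encoding the classes as ordered labels like this makes it straightforward to group the model results by class afterwards.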

3. Results and Discussion

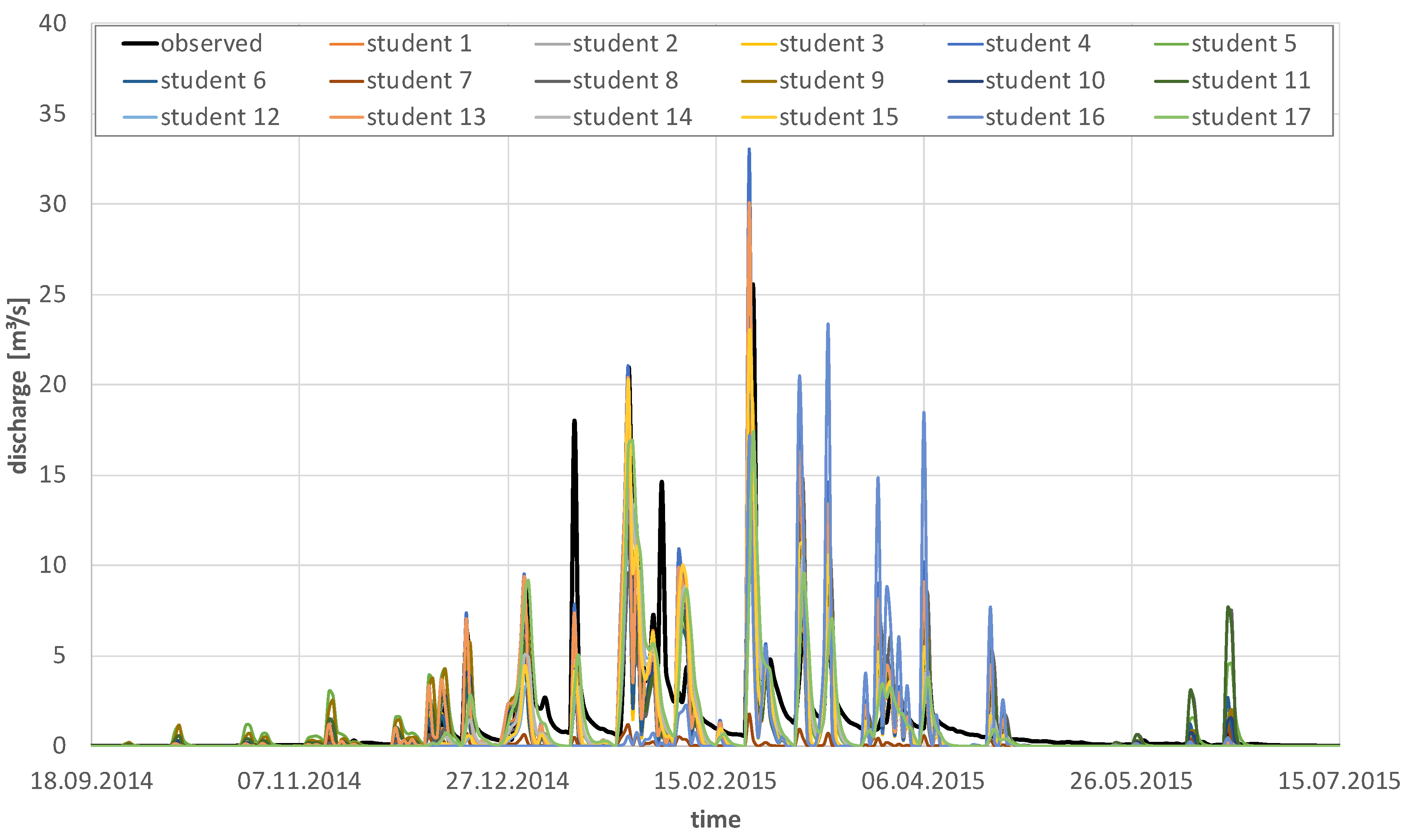

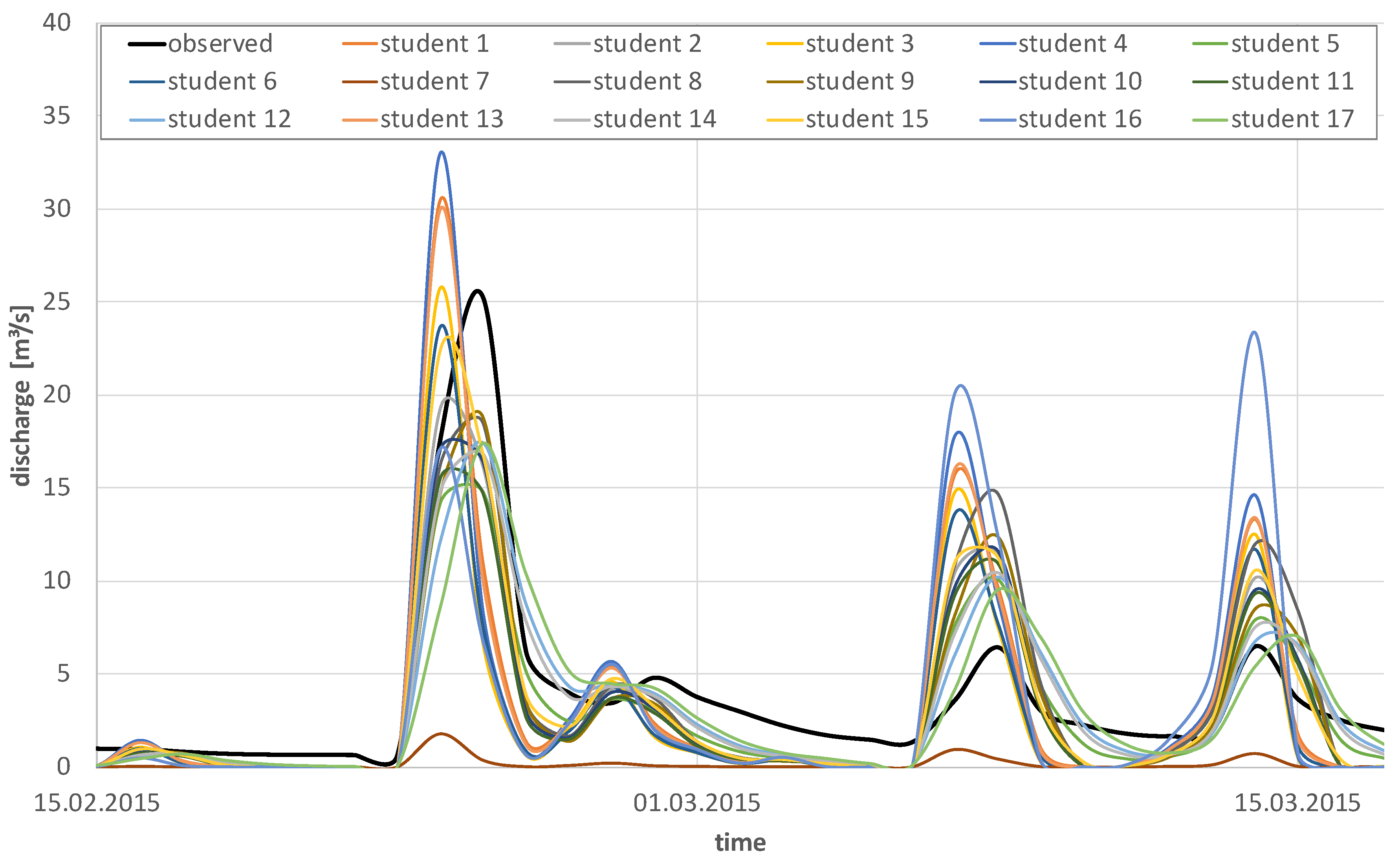

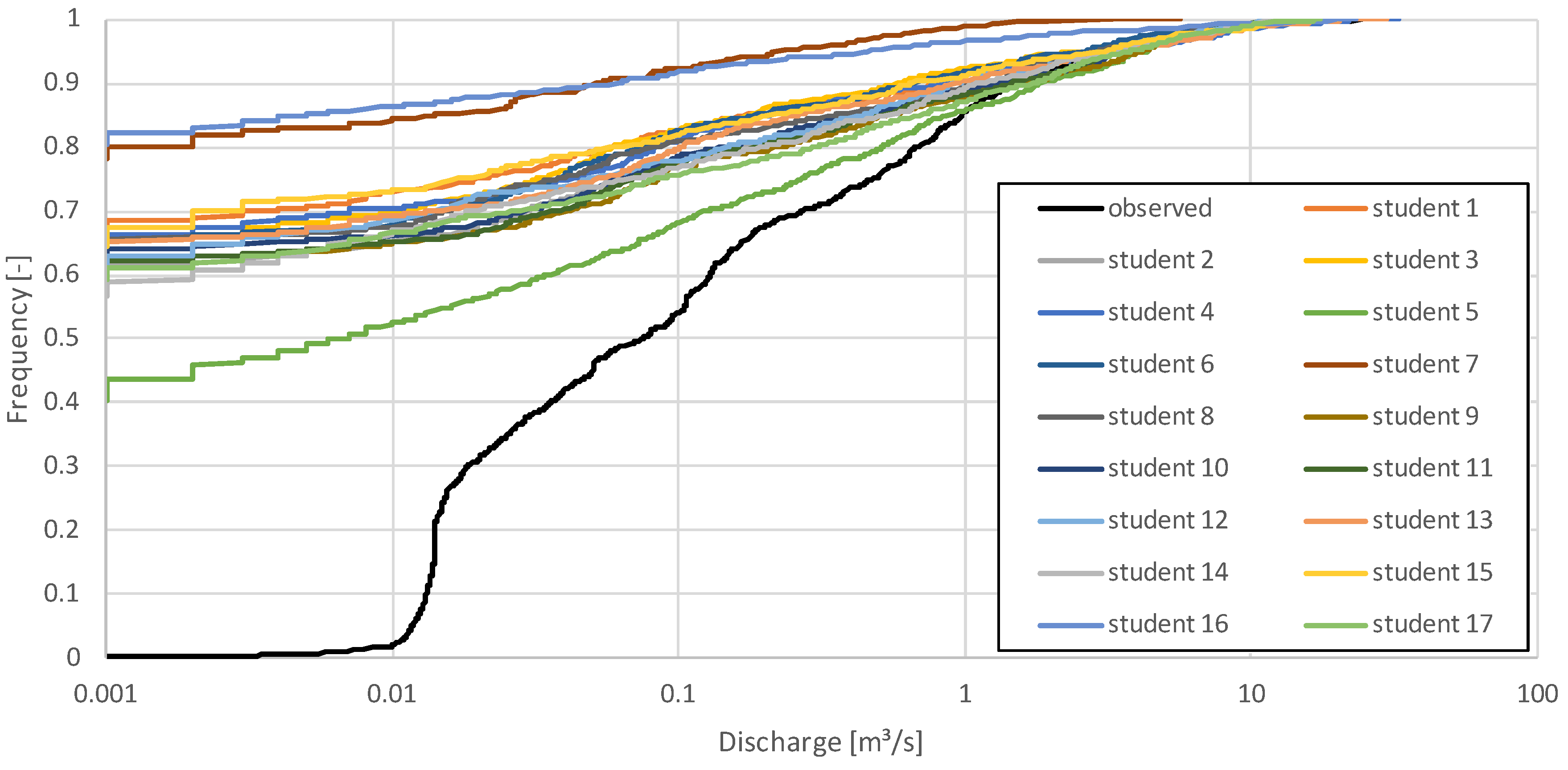

3.1. HBV Model Application to Tsiknias River Catchment

3.2. Experience, Decisions, and Attitudes of the Modellers

3.3. Evaluation of Model Results against Modellers Experience, Decisions, and Attitudes

3.4. Improvement of the Model Results through Calculating Model(ler) Ensembles

- (1)

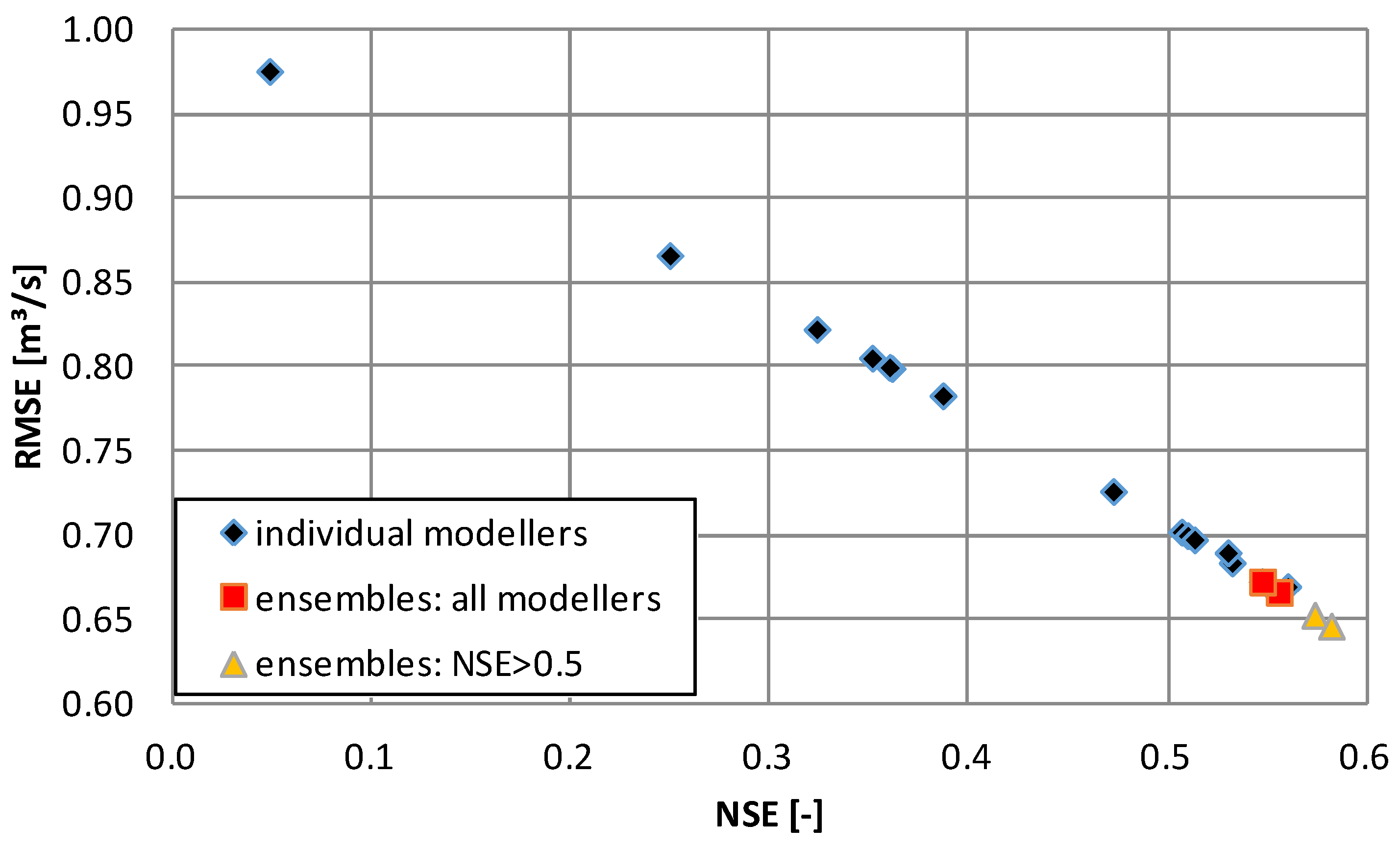

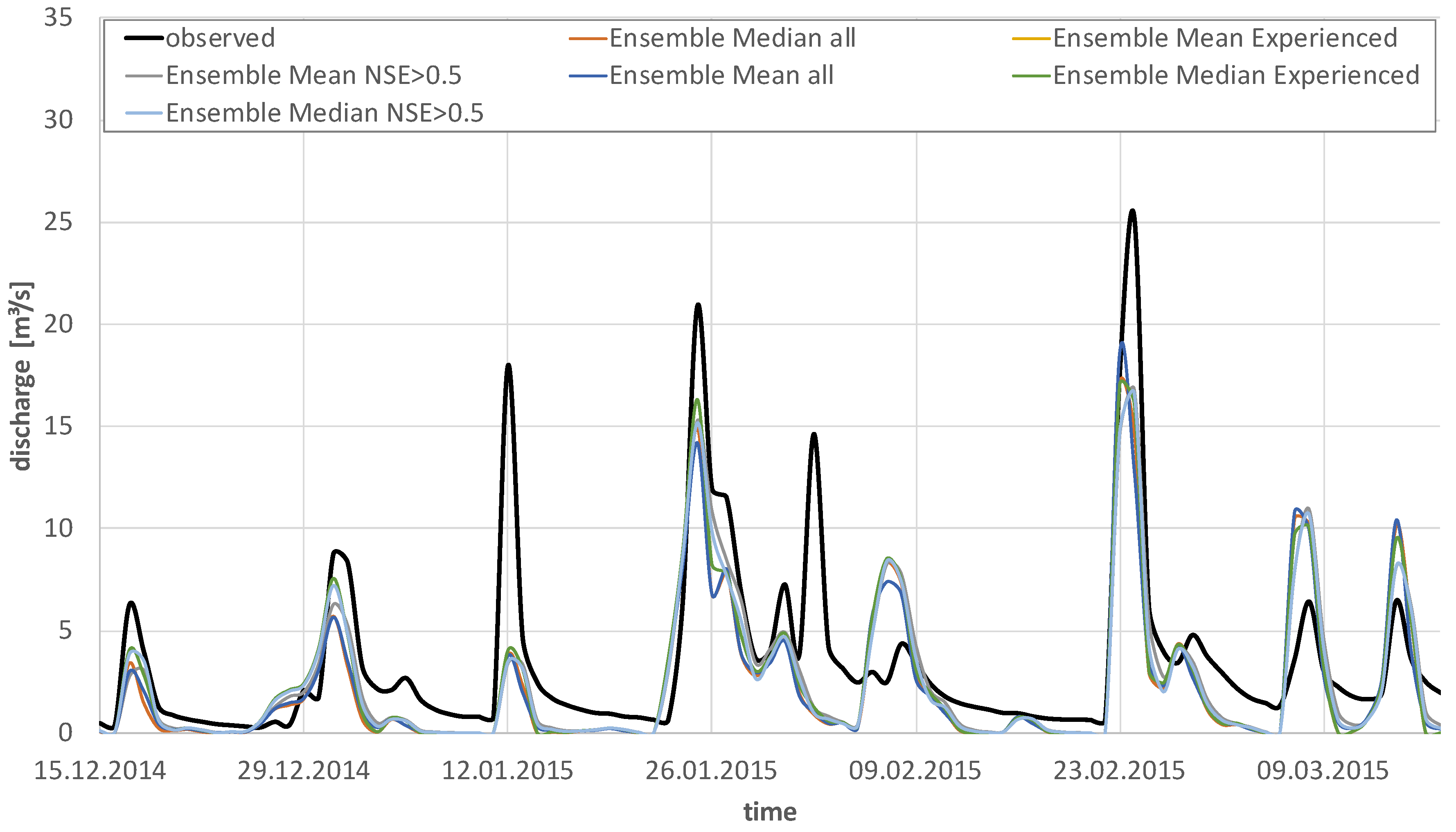

- Considering all modellers: For this first multi-modeller-ensemble, the best simulated hydrograph (that with the highest NSE) simulated by each participating modeller was considered, independent of quality, strategy, or experience.

- (2)

- Considering only those modellers who achieved satisfactory results (a posteriori): For this second multi-modeller-ensemble, only the best simulated hydrograph (that with the highest NSE) simulated by those participating modellers was considered who achieved satisfactory modelling results according to [25] (NSE > 0.5).

- (3)

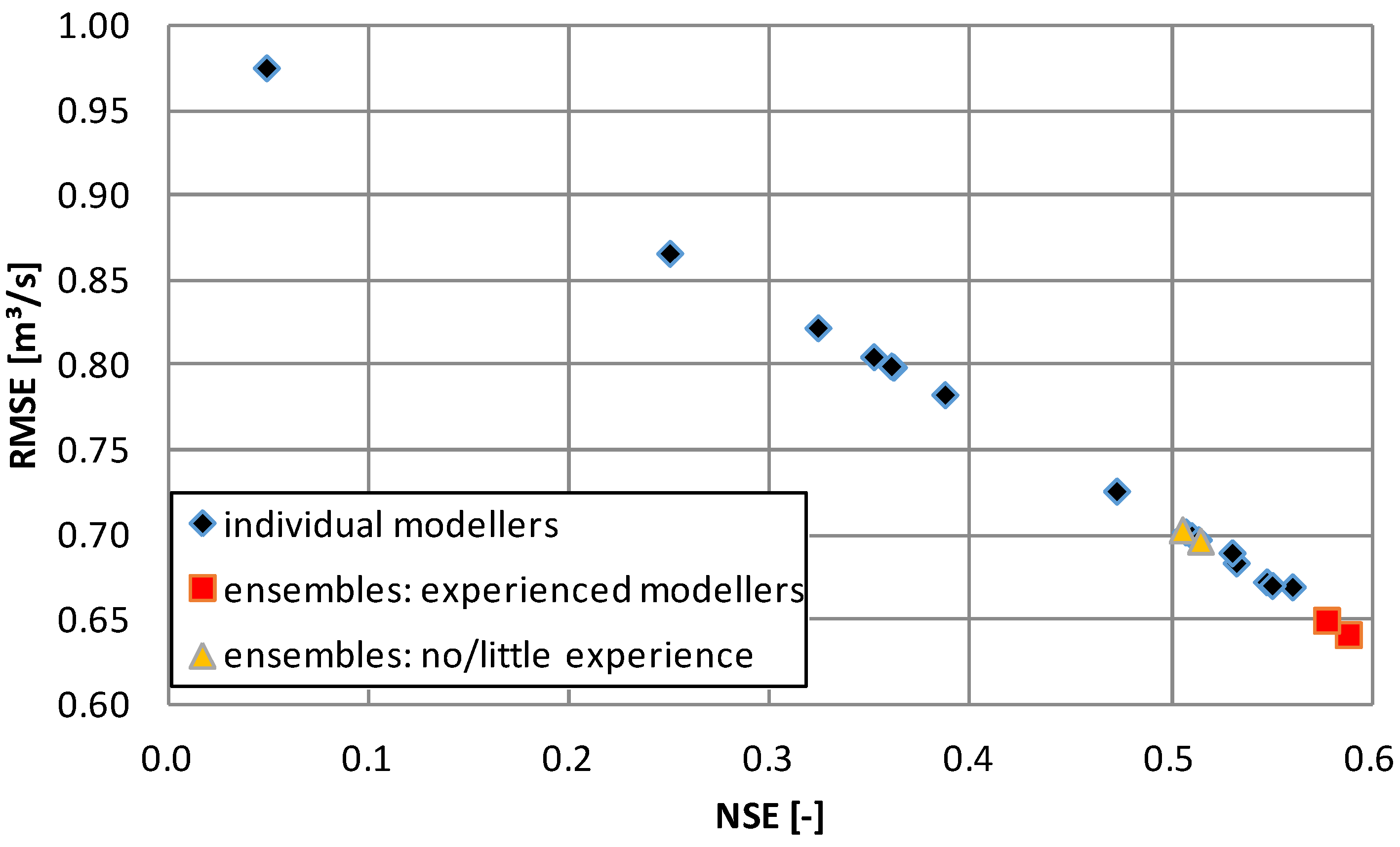

- Considering modellers according to their modelling experience (a priori): For this third strategy, the group of modellers was divided in two sub-groups, “participants with modelling experience” and “participants with little or without modelling experience”. For those two sub-groups, ensembles were calculated based on the best simulated hydrograph (that with the highest NSE) simulated by the members of the sub-groups.

- (4)

- Independently of the quality of the individual model applications, those two ensembles based on all modellers’ results have a simulation quality comparable to the best individual modeller (Figure 7).

- (5)

- The two ensembles based on the simulations of those modellers’ results who achieved “satisfactory” results even outperformed the best individual simulation and revealed the most robust simulation results (Figure 7).

- (6)

- The two ensembles based on the simulated hydrographs of the experienced modellers also outperform all individual simulations (Figure 8) and even all other modellers ensembles investigated in this study (Figure 7). Additionally, both ensembles based on the simulated hydrographs of the less and unexperienced modellers achieved satisfactory modelling results according to [25] (NSE > 0.5).
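The ensemble strategies listed above can be illustrated with a short Python sketch. It assumes that the “two ensembles” per strategy are the arithmetic mean and the median of the members' best hydrographs, which the text here does not state explicitly. The function names, the modeller labels, and the discharge values are hypothetical and serve only as an illustration.

```python
import numpy as np


def nse(obs, sim):
    """Nash-Sutcliffe efficiency of a simulated against an observed series."""
    obs = np.asarray(obs, dtype=float)
    sim = np.asarray(sim, dtype=float)
    return 1.0 - np.sum((obs - sim) ** 2) / np.sum((obs - obs.mean()) ** 2)


def modeller_ensembles(obs, best_runs, min_nse=None):
    """Combine each modeller's best hydrograph into mean and median ensembles.

    best_runs maps a modeller label to that modeller's best simulation.
    If min_nse is given, only modellers with NSE > min_nse are kept
    (a posteriori selection); otherwise all modellers are used.
    """
    members = {
        name: np.asarray(sim, dtype=float)
        for name, sim in best_runs.items()
        if min_nse is None or nse(obs, sim) > min_nse
    }
    if not members:
        raise ValueError("no modeller passed the selection criterion")
    stack = np.vstack(list(members.values()))
    return {
        "members": sorted(members),
        "mean": stack.mean(axis=0),
        "median": np.median(stack, axis=0),
    }


# Hypothetical observed discharge and best runs of three modellers (mm/d).
obs = [1.0, 3.0, 2.0, 5.0, 4.0]
best_runs = {
    "A": [1.5, 2.5, 2.5, 4.5, 4.5],
    "B": [0.5, 3.5, 1.5, 5.5, 3.5],
    "C": [10.0, 10.0, 10.0, 10.0, 10.0],  # a poorly calibrated run
}

all_modellers = modeller_ensembles(obs, best_runs)
satisfactory = modeller_ensembles(obs, best_runs, min_nse=0.5)
```

An a priori ensemble by experience would use the same function on a subset of `best_runs` chosen from the questionnaire classes rather than from the NSE values. In this toy data the errors of A and B cancel, so the mean of the satisfactory members fits better than either member alone, which is the effect the ensembles exploit.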

4. Conclusions

- (1) A key requirement for the successful application of a conceptual catchment model is a good calibration strategy. A systematic sensitivity analysis helps to identify the most sensitive model parameters and to make better decisions in the (manual) calibration process.

- (2) Experience in model application and knowledge of regional processes help to achieve reasonable model results, but they do not guarantee high model performance.

- (3) Enjoying what one is doing is a supporting factor; the analysis of the contest results confirmed this for hydrological model application as well.

- (4) “Modeller ensembles” can be used to show the uncertainty caused by behavioural aspects. Combining the model results of different modellers makes predictions more robust, also with regard to the modellers’ decisions during the model application process. The good performance of a priori ensembles based on modellers’ experience emphasizes the power of collective intelligence in hydrological model applications.
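The systematic sensitivity analysis recommended in conclusion (1) can be illustrated with a one-at-a-time sketch in Python. This is not the HBV model and not the procedure used in the contest: the toy model (a runoff coefficient `c` feeding a linear reservoir with recession constant `k`), the rainfall series, and all function names are hypothetical stand-ins chosen to keep the example self-contained.

```python
import numpy as np


def toy_model(rain, params):
    """Hypothetical stand-in for a conceptual model (not HBV).

    Effective rain c * P fills a storage that releases the fraction k
    of its content in every time step.
    """
    storage = 0.0
    flow = []
    for p in rain:
        storage += params["c"] * p
        out = params["k"] * storage
        storage -= out
        flow.append(out)
    return np.array(flow)


def oat_sensitivity(model, rain, base, rel_step=0.1):
    """One-at-a-time sensitivity: perturb each parameter by +/- rel_step.

    Returns the mean absolute change in simulated flow, relative to the
    mean base flow, sorted from most to least sensitive parameter.
    """
    q0 = model(rain, base)
    scores = {}
    for name in base:
        changes = []
        for factor in (1.0 - rel_step, 1.0 + rel_step):
            perturbed = dict(base)
            perturbed[name] = base[name] * factor
            changes.append(np.mean(np.abs(model(rain, perturbed) - q0)))
        scores[name] = float(np.mean(changes) / np.mean(q0))
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


rain = [0.0, 10.0, 0.0, 0.0, 5.0, 0.0, 0.0, 0.0]  # hypothetical rainfall
base = {"c": 0.5, "k": 0.3}                       # hypothetical base values
sensitivity = oat_sensitivity(toy_model, rain, base)
```

A ranking of this kind tells a modeller which parameters to adjust first during manual calibration. One-at-a-time perturbation ignores parameter interactions, so it is only a first screening step.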

Author Contributions

Funding

Conflicts of Interest

References

- Walker, W.E.; Harremoës, P.; Rotmans, J.; van der Sluijs, J.P.; van Asselt, M.B.A.; Janssen, P.; Krayer von Krauss, M.P. Defining Uncertainty: A Conceptual Basis for Uncertainty Management in Model-based Decision Support. Integr. Assess. 2003, 4, 5–17.

- Breuer, L.; Huisman, J.A.; Willems, P.; Bormann, H.; Bronstert, A.; Croke, B.F.W.; Frede, H.-G.; Gräff, T.; Hubrechts, L.; Jakeman, A.J.; et al. Assessing the impact of land use change on hydrology by ensemble modeling (LUCHEM) I: Model intercomparison of current land use. Adv. Water Resour. 2009, 32, 129–146.

- Beven, K. On the concept of model structural error. Water Sci. Technol. 2005, 52, 167–175.

- Beven, K.; Freer, J. Equifinality, data assimilation, and uncertainty estimation in mechanistic modelling of complex environmental systems using the GLUE methodology. J. Hydrol. 2001, 249, 11–29.

- Kuczera, G.; Parent, E. Monte Carlo assessment of parameter uncertainty in conceptual catchment models: The Metropolis algorithm. J. Hydrol. 1998, 211, 69–85.

- Bormann, H. Sensitivity of a regionally applied soil vegetation atmosphere scheme to input data resolution and data classification. J. Hydrol. 2008, 351, 154–169.

- Kavetski, D.; Kuczera, G.; Franks, S.W. Bayesian analysis of input uncertainty in hydrological modeling: 1. Theory. Water Resour. Res. 2006, 42, W03407.

- Hämäläinen, R.P. Behavioural issues in environmental modelling—The missing perspective. Environ. Model. Softw. 2015, 73, 244–253.

- Linkov, I.; Burmistrov, D. Model Uncertainty and Choices Made by Modelers: Lessons Learned from the International Atomic Energy Agency Model Intercomparisons. Risk Anal. 2003, 23, 1297–1308.

- Holländer, H.M.; Blume, T.; Bormann, H.; Buytaert, W.; Chirico, G.B.; Exbrayat, J.-F.; Gustafsson, D.; Hölzel, H.; Kraft, P.; Stamm, C.; et al. Comparative predictions of discharge from an artificial catchment (Chicken Creek) using sparse data. Hydrol. Earth Syst. Sci. 2009, 13, 2069–2094.

- Holländer, H.M.; Bormann, H.; Blume, T.; Buytaert, W.; Chirico, G.B.; Exbrayat, J.-F.; Gustafsson, D.; Hölzel, H.; Krauße, T.; Kraft, P.; et al. Impact of modellers’ decisions on hydrological a priori predictions. Hydrol. Earth Syst. Sci. 2014, 18, 2065–2085.

- Bormann, H.; Holländer, H.M.; Blume, T.; Buytaert, W.; Chirico, G.B.; Exbrayat, J.-F.; Gustafsson, D.; Hölzel, H.; Kraft, P.; Krauße, T.; et al. Comparative discharge prediction from a small artificial catchment without model calibration: Representation of initial hydrological catchment development. Die Bodenkultur 2011, 62, 23–29.

- Molteni, F.; Buizza, R.; Palmer, T.N.; Petroliagis, T. The ECMWF Ensemble Prediction System. Methodology and validation. Q. J. R. Meteorol. Soc. 1996, 122, 73–119.

- Ziehmann, C. Comparison of a single-model EPS with a multi-model ensemble consisting of a few operational models. Tellus 2000, 52A, 280–299.

- Viney, N.R.; Bormann, H.; Breuer, L.; Bronstert, A.; Croke, B.F.W.; Frede, H.; Gräff, T.; Hubrechts, L.; Huisman, J.A.; Jakeman, A.J.; et al. Assessing the impact of land use change on hydrology by ensemble modelling (LUCHEM) II: Ensemble combinations and predictions. Adv. Water Resour. 2009, 32, 147–158.

- Doblas-Reyes, F.J.; Hagedorn, R.; Palmer, T.N. The rationale behind the success of multi-model ensembles in seasonal forecasting—II Calibration and combination. Tellus 2005, 57A, 234–252.

- Cloke, H.; Pappenberger, F. Ensemble flood forecasting: A review. J. Hydrol. 2009, 375, 613–626.

- Woolley, A.W.; Chabris, C.F.; Pentland, A.; Hashmi, N.; Malone, T.W. Evidence for a collective intelligence factor in the performance of human groups. Science 2010, 330, 686–688.

- Bergström, S. The HBV Model: Its Structure and Applications; Swedish Meteorological and Hydrological Institute (SMHI): Norrköping, Sweden, 1992.

- Bergström, S. The HBV model (Chapter 13). In Computer Models of Watershed Hydrology; Singh, V.P., Ed.; Water Resources Publications: Highlands Ranch, CO, USA, 1995; pp. 443–476.

- Seibert, J.; Vis, M.J.P. Teaching hydrological modeling with a user-friendly catchment-runoff-model software package. Hydrol. Earth Syst. Sci. 2012, 16, 3315–3325.

- Hessling, M. Hydrological modelling and a pair basin study of Mediterranean catchments. Phys. Chem. Earth (B) 1999, 24, 59–63.

- Bormann, H.; Diekkrüger, B. A conceptual hydrological model for Benin (West Africa): Validation, uncertainty assessment and assessment of applicability for environmental change analyses. Phys. Chem. Earth 2004, 29, 759–768.

- Nash, J.E.; Sutcliffe, J.V. River flow forecasting through conceptual models part I—A discussion of principles. J. Hydrol. 1970, 10, 282–290.

- Moriasi, D.N.; Arnold, J.G.; Van Liew, M.W.; Bingner, R.L.; Harmel, R.D.; Veith, T.L. Model evaluation guidelines for systematic quantification of accuracy in watershed simulations. Trans. ASABE 2007, 50, 885–900.

| Model Parameter | Relevance | Indicative Value |
|---|---|---|
| x | Weighting factor related to the stream dimensions | 0.25 |
| k | Storage coefficient related to the stream dimensions | 0.55 |
| HL1/Z | Parameter related to the elevation | 30.00–63.00 |
| K0 | Recession rate of the respective reservoir | 0.45–10.50 |
| K1 | Recession rate of the respective reservoir | 10.70–20.70 |
| K2 | Recession rate of the respective reservoir | 30.5 |
| PERC | Maximum percolation rate from the upper to the lower groundwater box | 1.00 |
| MAXBAS | Routing parameter of the triangular weighting function | 5.00–10.00 |
| FC | Maximum soil moisture storage | 800–1000 |
| BETA | Soil parameter | 2.00–10.00 |

| Goodness of Fit Index | Best Simulation | 75%-Percentile | 50%-Percentile | 25%-Percentile | Worst Simulation |
|---|---|---|---|---|---|
| Nash-Sutcliffe efficiency (NSE) | 0.56 | 0.53 | 0.47 | 0.35 | −45.9 |
| Coefficient of determination | 0.56 | 0.55 | 0.51 | 0.42 | 0.082 |
| Percent bias (PB) | 3.5% | 14.6% | 24.9% | 31.2% | 526% |
| Root mean squared error (RMSE) | 0.67 mm/d | 0.69 mm/d | 0.73 mm/d | 0.81 mm/d | 6.85 mm/d |
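For reference, the goodness-of-fit indices in the table above are commonly defined as follows. These are the standard textbook forms, given here as an aid to reading the table; the exact variants used in the study are not stated in this text. In particular, the sign convention of the percent bias and the reading of the coefficient of determination as the squared Pearson correlation $r$ are assumptions. $Q_{\mathrm{obs},t}$ and $Q_{\mathrm{sim},t}$ denote observed and simulated discharge at time step $t$, $\bar{Q}_{\mathrm{obs}}$ the mean observed discharge, and $n$ the number of time steps.

$$
\mathrm{NSE} = 1 - \frac{\sum_{t=1}^{n} \left(Q_{\mathrm{obs},t} - Q_{\mathrm{sim},t}\right)^2}{\sum_{t=1}^{n} \left(Q_{\mathrm{obs},t} - \bar{Q}_{\mathrm{obs}}\right)^2}
\qquad
\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{t=1}^{n} \left(Q_{\mathrm{sim},t} - Q_{\mathrm{obs},t}\right)^2}
$$

$$
\mathrm{PB} = 100\,\% \cdot \frac{\sum_{t=1}^{n} \left(Q_{\mathrm{sim},t} - Q_{\mathrm{obs},t}\right)}{\sum_{t=1}^{n} Q_{\mathrm{obs},t}}
\qquad
R^2 = r^2\!\left(Q_{\mathrm{obs}}, Q_{\mathrm{sim}}\right)
$$

An NSE of 1 indicates a perfect fit, an NSE of 0 means the simulation is no better than the mean of the observations, and negative values, such as the −45.9 of the worst simulation, mean it is worse than that mean.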

| Model Parameter | Minimum Value | Median Value | Maximum Value |
|---|---|---|---|
| HL1/Z | 0.0004 | 0.037 | 65 |
| K0 | 0.0095 | 0.2 | 18 |
| K1 | 0.002 | 0.2 | 3.6 |
| K2 | 0.001 | 0.1 | 1 |
| PERC | 0.001 | 0.02 | 3 |
| MAXBAS | 4 | 7 | 98 |
| FC | 150 | 250 | 1140 |
| BETA | 1 | 2 | 10 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bormann, H.; De Brito, M.M.; Charchousi, D.; Chatzistratis, D.; David, A.; Grosser, P.F.; Kebschull, J.; Konis, A.; Koutalakis, P.; Korali, A.; et al. Impact of Hydrological Modellers’ Decisions and Attitude on the Performance of a Calibrated Conceptual Catchment Model: Results from a ‘Modelling Contest’. Hydrology 2018, 5, 64. https://doi.org/10.3390/hydrology5040064

Bormann, H., De Brito, M. M., Charchousi, D., Chatzistratis, D., David, A., Grosser, P. F., Kebschull, J., Konis, A., Koutalakis, P., Korali, A., Krauzig, N., Meier, J., Meliadou, V., Meinhardt, M., Munnelly, K., Stephan, C., De Vos, L. F., Dietrich, J., & Tzoraki, O. (2018). Impact of Hydrological Modellers’ Decisions and Attitude on the Performance of a Calibrated Conceptual Catchment Model: Results from a ‘Modelling Contest’. Hydrology, 5(4), 64. https://doi.org/10.3390/hydrology5040064