1. Introduction

The increase in the number of traffic accidents due to driver fatigue has become a serious problem for our society. The alertness of drivers is greatly affected by their level of fatigue. Fatigue can also manifest as drowsiness, an intermediate state between wakefulness and sleep in which the driver’s ability to control the vehicle is diminished, mainly due to increased reaction time [1]. The following three subsections present the motivation for this study and briefly describe the most commonly used methods for determining driver fatigue.

1.1. Some Statistics

According to the National Highway Traffic Safety Administration in the United States, an estimated 100,000 traffic accidents each year are directly caused by driver drowsiness, resulting in an average of 1550 deaths, 71,000 injuries, and USD 12.5 billion in economic losses annually [2].

The American National Sleep Foundation reported in 2009 that 5% of drivers had driven a vehicle while significantly fatigued and that 2% of them had fallen asleep while driving [3].

The German Road Safety Council has reported that one in four victims of motorway accidents is attributable to a driver’s momentary drowsiness (falling asleep at the wheel) [4].

Driver drowsiness was also found to be the cause of 30% of accidents on French motorways between 1979 and 1994 [5].

Statistics for 2017–2021 show that an estimated 17% of fatal car crashes involved a drowsy driver [6].

1.2. Driver Fatigue and Drowsiness

Although the words “drowsiness” and “fatigue” are often treated as near-synonyms, they are different: fatigue can refer to a feeling of tiredness or exhaustion due to physical activity, while drowsiness refers specifically to the state right before sleep. Fatigue can appear after performing a task for many hours without rest, without the person necessarily being drowsy.

Fatigue is a psychophysiological condition caused by prolonged exertion. The degree and dimension of this condition depend on the type, form, dynamics, and context of the exertion. The context is described by the meaning of the performance to the individual, rest and sleep, psychosocial factors, individual traits (diet, health, fitness, and other individual states), and environmental conditions. Fatigue results in reduced levels of mental processing and/or physical activity.

“Drowsiness” refers to the moment right before sleep onset. This state is very dangerous for drivers, as they have no control over it and, consequently, over the car. Thus, from a safe-driving perspective, drowsiness is much more dangerous than fatigue. Drowsiness can strike even when a driver is not expecting it, jeopardizing both the driver’s safety and the safety of others.

Note that inactivity can relieve fatigue but, at the same time, worsens drowsiness.

Drowsy driving, in particular, occurs when a driver operates a vehicle while too tired or sleepy to stay attentive and alert, making the driver less aware of the vehicle and its surroundings.

Signs of drowsy driving include difficulty concentrating, difficulty remembering the last few kilometers driven, frequently adjusting the driving position for no real reason, frequent yawning and blinking, difficulty keeping the eyes open, and frequent, unwarranted lane changes. These can lead to attention difficulties, slower reaction times, slower and muddled thinking, erratic or imprecise speed control, and sloppy or incorrect steering. Many drivers claim to have their own strategies for overcoming drowsiness, such as cold, fresh air or turning up the radio, but many studies have shown that these methods have only a limited effect.

1.3. Methods for Detecting and Measuring Fatigue and Drowsiness

Over time, especially in recent decades, many methods for detecting and measuring fatigue and drowsiness have been proposed.

The detection and measurement of the degree of fatigue and drowsiness is mainly done using physiological measures, vehicle-based measures, and behavioral measures.

In the case of physiological measures, the driver’s condition is assessed using heart rate, pulse rate, brain activity, body temperature, etc. For example, electrocardiography (ECG) [7], electroencephalography (EEG) [8], electrooculography (EOG) [9], electromyography (EMG) [10], or a combination of these can be used.

The driving pattern of a drowsy driver differs from that of an alert driver. As a result, measurements that show how the driver interacts with the vehicle are useful for the assessment; these fall into the category of vehicle-based measures. Frequently used metrics are steering movement [11], lane deviation [12], sudden changes in acceleration [13], and use of the accelerator and brake pedals [13]. It should be noted that vehicle-based measures may lead to more false positives [14].

Behavioral measures are based on eye closure, blinking, frequent yawning, or facial expressions, assessed from captured images or videos.

Given the importance of the “drowsy driver” problem, many technologies now exist for its automatic detection. Sometimes known as “driver alertness monitoring”, these car safety technologies help prevent accidents caused by driver drowsiness.

Such automated systems, known as “driver drowsiness and attention warning” systems, assess the driver’s alertness by analyzing vehicle systems and, in some cases, warn the driver. Many vehicle manufacturers currently equip their products with such systems: Audi (Rest Recommendation system), BMW (Active Driving Assistant), Ford (Driver Alert), Mercedes-Benz (Attention Assist), Renault/Dacia (Tiredness Detection Warning), etc.

Advanced Artificial Intelligence (AI) techniques and Convolutional Neural Networks (CNNs) are used in the method proposed in [15]. In this method, two parameters extracted from the driver’s facial image are used for fatigue detection: the percentage of eyelid closure over the pupil over time and the degree of mouth opening.

Using the same parameters (eyes and mouth), paper [16] proposes another method that uses MCNNs (Multi-Task Convolutional Neural Networks) to detect and monitor driver fatigue. According to the comparative study reported by the authors, their model achieved a fatigue detection accuracy of 98.81% on the public YawDD (Yawning Detection Dataset).

In [17], a method is proposed to detect driver fatigue from abnormal driver behavior by analyzing facial characteristics (yawning) and estimating the 3D head pose. The authors reported an accuracy of about 97% for their method.

In [18], a method for detecting driver fatigue from electroencephalogram (EEG) signals is proposed. In their comparative study, the authors reported an accuracy of approximately 97% for the proposed method.

Heart rate variability (HRV) is used to detect driver fatigue in the method proposed in [19]. The paper clearly highlights the link between HRV and driver fatigue, while also listing some reasons why HRV-based approaches have not always produced the best results in the past.

Paper [20] offers a systematic literature review of research on driver fatigue detection from 2007 to 2021. The study includes analyses of publication trends, the number of publications per country, the year-by-year development of driver fatigue detection approaches, the proposed methods and their classification over the analyzed period, and many other useful data and statistics.

In [21], a comprehensive analysis of fatigue identification and prediction is presented. Based on prior work in the field, the study discusses influential fatigue indicators, fatigue identification methods, metrics for measuring fatigue levels, and fatigue prediction methods. The paper reviews the research conducted over the past 15 years on the evolution of driver fatigue detection technologies and notes that hybrid methods are preferred for identifying driving fatigue or drowsiness.

In [22], two physiological measures for determining driver fatigue levels were investigated, namely HRV and EDA (electrodermal activity). Machine learning was used for classification, achieving an accuracy of 88.7% when both HRV and EDA features were used as inputs.

2. The Proposed Hybrid Driver Assistance System

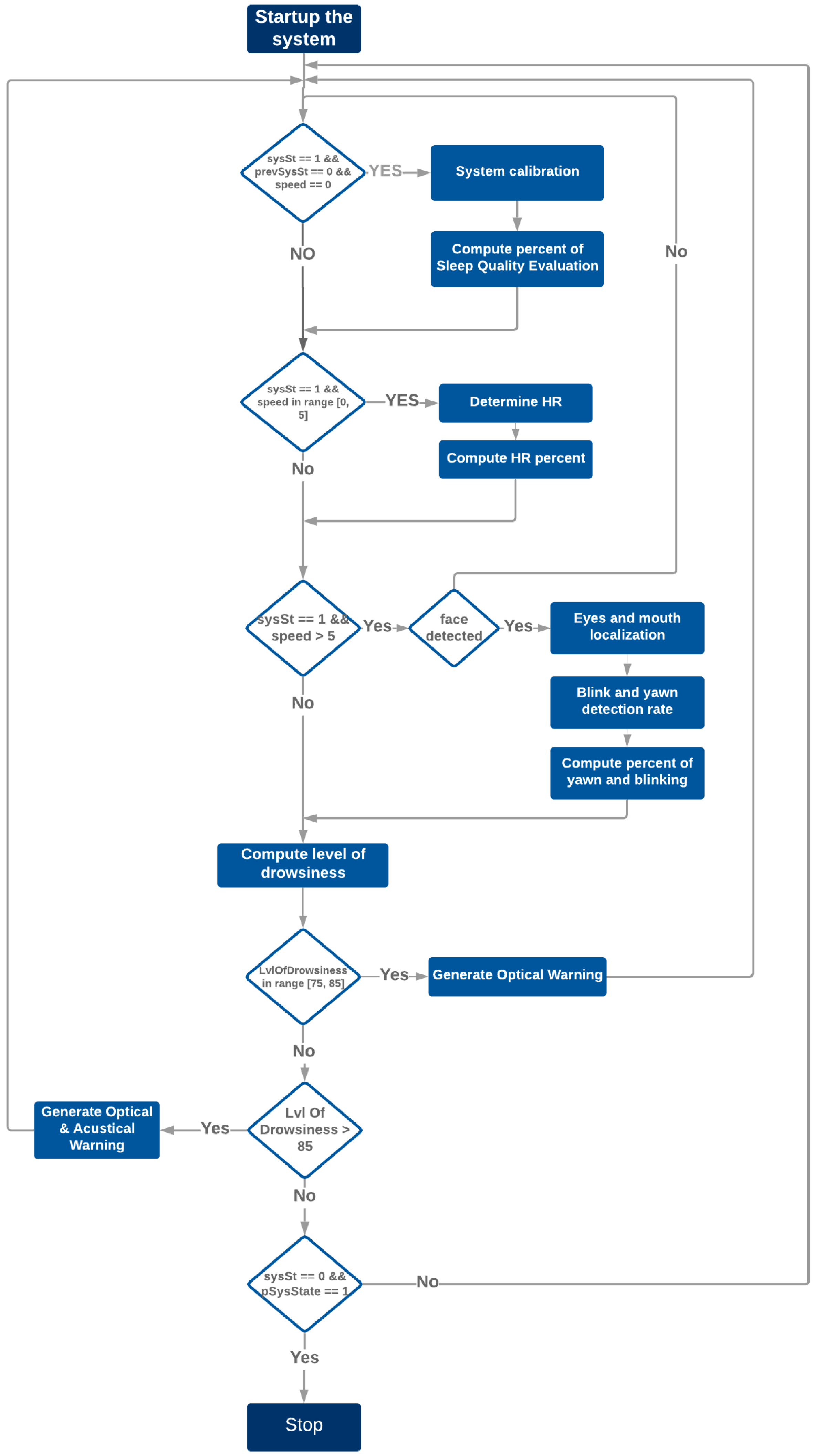

This paper proposes a hybrid system for monitoring and determining the driver’s fatigue level based on the analysis of visual characteristics, heart rate variability measurements, and the evaluation of the previous night’s sleep quality. The proposed system uses four metrics: the first is the driver’s self-assessment of sleep quality during the previous night, the second analyzes heart rate variation, and the last two analyze the driver’s visual (behavioral) characteristics by monitoring the blink rate and detecting yawns. The structure of the proposed system is shown graphically in Figure 1.

As shown in Figure 1, sleep quality assessment is the first metric used by the proposed system; it takes place when the driver starts the car and the speed is 0 km/h. At this point, the system must be calibrated. After calibration, the driver is asked whether this assessment has already been performed earlier on the same day; if so, the system proceeds to the next step. If the answer is NO, the driver is asked to rate sleep quality on a scale from 1 to 5, where 1 means that he did not sleep at all the previous night and 5 means that he had a very restful sleep. Based on this rating, the system sets a percentage score.

The driver’s heart rate can be assessed when the car has been started and the speed does not exceed 5 km/h. This allows the heart rate to be measured when the car has started but has not yet moved off, when waiting at a traffic light, when stuck in a traffic jam, or in any other situation in which the vehicle’s speed does not exceed 5 km/h. The heart rate variation is determined relative to the reference value obtained during system calibration.

The blink rate assessment mainly aims to determine the driver’s number of blinks, since an increased blink rate indicates an increased level of fatigue. The blink rate is assessed only when the vehicle is moving faster than 5 km/h. As with the heart rate, the driver’s current blink rate is compared with the reference value determined during system calibration.

Yawning is one of the main visual signs of fatigue, so it is particularly important to determine the occurrence of yawning. This metric is evaluated when the vehicle is moving at a speed greater than 5 km/h.

The main algorithm in pseudocode is presented below (Algorithm 1):

Algorithm 1 The main procedure

    main procedure HYBRIDSYSTEM
        System initialization()
        while True do
            if System state == OFF and System previous state == ACTIVE then
                Stop processing
            if System state == ACTIVE and System previous state == OFF and speed == 0 then
                System calibration() AND Sleep quality evaluation()
            if System state == ACTIVE and speed in [0–5 km/h] then
                Heart rate determination()
            if System state == ACTIVE and speed > 5 km/h then
                Blink rate determination()
                Yawn detection()
            Fatigue level determination()
            if Fatigue level in the range [75–85%] then
                visual signal generation
            if Fatigue level > 85% then
                acoustic and visual signal generation

Once the scores of the individual metrics are available, the driver’s fatigue level is calculated, with each score carrying a weight. If the fatigue level lies between the visual-warning thresholds (75% to 85%), a visual signal is generated. If the fatigue level exceeds the acoustic-warning threshold of 85%, acoustic and light warning signals are generated. If neither threshold is reached, measurements continue cycle by cycle, with the fatigue level computed in each cycle. If the system state changes to OFF, i.e., the vehicle has been switched off, processing stops.
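To make the cycle structure of Algorithm 1 more concrete, the dispatch step can be sketched in Python as below. This is a minimal illustration, not the authors’ implementation: the function name `select_actions`, the string states `"ACTIVE"`/`"OFF"`, and the returned step names are hypothetical, and the speed limits use the default values of HR_SPEED_THRESHOLD_L and HR_SPEED_THRESHOLD_H. Warning generation is left out of this sketch.

```python
from typing import List

# Speed limits (km/h) for heart rate measurement; default values of
# HR_SPEED_THRESHOLD_L and HR_SPEED_THRESHOLD_H.
HR_SPEED_THRESHOLD_L = 0.0
HR_SPEED_THRESHOLD_H = 5.0


def select_actions(state: str, previous_state: str, speed: float) -> List[str]:
    """Return the (hypothetical) steps that one cycle of Algorithm 1 runs."""
    if state == "OFF":
        # System just switched off: stop processing; otherwise stay idle.
        return ["stop_processing"] if previous_state == "ACTIVE" else []
    actions: List[str] = []
    if previous_state == "OFF" and speed == 0:
        # First cycle after start-up with the vehicle stationary.
        actions += ["calibrate", "evaluate_sleep_quality"]
    if HR_SPEED_THRESHOLD_L <= speed <= HR_SPEED_THRESHOLD_H:
        # Vehicle stationary or crawling: heart rate is measured.
        actions.append("measure_heart_rate")
    elif speed > HR_SPEED_THRESHOLD_H:
        # Vehicle moving: only the camera-based metrics are evaluated.
        actions += ["measure_blink_rate", "detect_yawns"]
    # The fatigue level is recomputed in every active cycle.
    actions.append("determine_fatigue_level")
    return actions
```

For example, `select_actions("ACTIVE", "OFF", 0)` returns the calibration, sleep quality, heart rate, and fatigue level steps, matching the first cycle after start-up.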

3. Implementation

3.1. Hardware and Software Tools Used

The primary programming language used was Python 3.5.0, along with the NumPy and OpenCV libraries.

The main hardware used was an Arduino UNO board, which is based on the Microchip ATmega328P microcontroller and was programmed with the Arduino IDE over a USB cable.

The heart rate sensor used was essentially a photoplethysmograph (PPG), which monitors the peripheral circulation by detecting pulse-related variations with a photoelectric cell. The sensor’s output is an analog voltage that fluctuates with each pulse, and this pulse waveform is itself called a PPG. The sensor is a plug-and-play device designed and built for Arduino boards.

The video camera used was the Camera Auto DVR CE600, a car camera that records audio and video at Full HD resolution. Its 12 infrared sensors allow it to be used at night as well. The camera has a 1.5-inch LCD screen and a 140-degree viewing angle, and offers 12 MP, 8 MP, 5 MP, and 3 MP resolution settings.

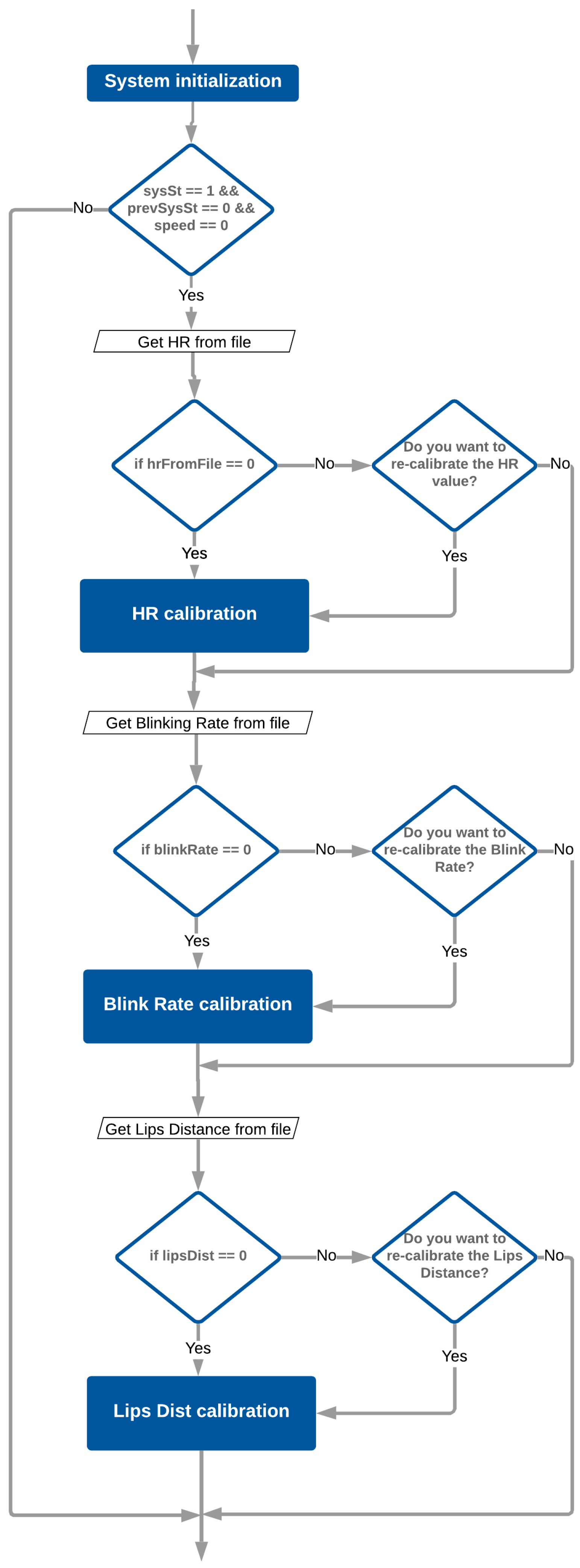

3.2. System Calibration

Prior to initial use, the proposed system requires calibration. All customizable parameters are described below, while Figure 2 illustrates the calibration process.

OPTICAL_WARNING_THRESHOLD_L: This parameter sets the lower limit of the driver’s fatigue level for generating visual signals. The default value is 75, which means that light signals are generated when the fatigue level is greater than or equal to this value.

OPTICAL_WARNING_THRESHOLD_H: This parameter sets the upper limit of the driver’s fatigue level for generating visual signals. The default value is 85, which means that light signals are generated as long as the fatigue level is less than or equal to this value.

ACUSTICAL_WARNING_THRESHOLD: This parameter determines when the generation of acoustic and light signals begins. If the fatigue level is greater than or equal to this threshold (default value 85.001), acoustic and visual warning signals are generated.

HR_SPEED_THRESHOLD_L: This parameter is used to set the lower limit of the vehicle’s speed in order to call the heart rate measurement function. The default value is 0, i.e., 0 km/h.

HR_SPEED_THRESHOLD_H: This parameter is used to set the upper limit of the vehicle’s speed in order to call the heart rate value retrieval function. The default value is 5, i.e., 5 km/h.

TIME_FOR_COUNTING_BLINKING: The time interval in which the number of driver blinks is counted, the default value being 300 s.

TIME_FOR_GETTING_HR: The time interval required to obtain the heart rate, the default value being 30 s.

TIME_FOR_COUNTING_YAWNING: The time interval required to count the yawn rate, the default value being 300 s.

TIME_FOR_OPTICAL_WARNING: The period of time for which visual signals are generated, the default value being 20 s.

TIME_FOR_ACUSTICAL_WARNING: The period of time for which acoustic and visual warnings are generated, the default value being 15 s.

TIME_FOR_COUNTING_BLINKING_CALIB: The time required to calibrate the blinking rate, the default value being 60 s.

TIME_FOR_COUNTING_YAWNING_CALIB: The time required to calibrate the threshold value used in yawn detection, the default value being 30 s.

The driver fatigue monitoring and detection application consists of evaluating four metrics, each of which generates a score, this score contributing to determining the driver’s fatigue percentage. The parameters described above help create a flexible system in terms of establishing the threshold values used.
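One way to hold these parameters in Python is a frozen dataclass with the default values listed above. This is only a sketch: the class name `CalibrationParameters` and the consistency checks in `__post_init__` are assumptions added for illustration, and OPTICAL_WARNING_THRESHOLD_H is set to 85 to match the 75–85% visual-warning band of Algorithm 1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CalibrationParameters:
    """Configurable parameters with their default values.

    Thresholds are fatigue-level percentages, speeds are in km/h,
    and times are in seconds.
    """
    OPTICAL_WARNING_THRESHOLD_L: float = 75.0
    OPTICAL_WARNING_THRESHOLD_H: float = 85.0   # assumed (see lead-in)
    ACUSTICAL_WARNING_THRESHOLD: float = 85.001
    HR_SPEED_THRESHOLD_L: float = 0.0
    HR_SPEED_THRESHOLD_H: float = 5.0
    TIME_FOR_COUNTING_BLINKING: int = 300
    TIME_FOR_GETTING_HR: int = 30
    TIME_FOR_COUNTING_YAWNING: int = 300
    TIME_FOR_OPTICAL_WARNING: int = 20
    TIME_FOR_ACUSTICAL_WARNING: int = 15
    TIME_FOR_COUNTING_BLINKING_CALIB: int = 60
    TIME_FOR_COUNTING_YAWNING_CALIB: int = 30

    def __post_init__(self) -> None:
        # Hypothetical sanity checks: the visual band must lie below
        # the acoustic threshold, and the speed band must be ordered.
        if not (self.OPTICAL_WARNING_THRESHOLD_L
                <= self.OPTICAL_WARNING_THRESHOLD_H
                < self.ACUSTICAL_WARNING_THRESHOLD):
            raise ValueError("warning thresholds are inconsistent")
        if self.HR_SPEED_THRESHOLD_L > self.HR_SPEED_THRESHOLD_H:
            raise ValueError("heart rate speed limits are inconsistent")
```

A variant configuration is created by overriding fields, e.g., `CalibrationParameters(TIME_FOR_GETTING_HR=45)`; inconsistent thresholds raise `ValueError` at construction time.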

3.3. System Initialization

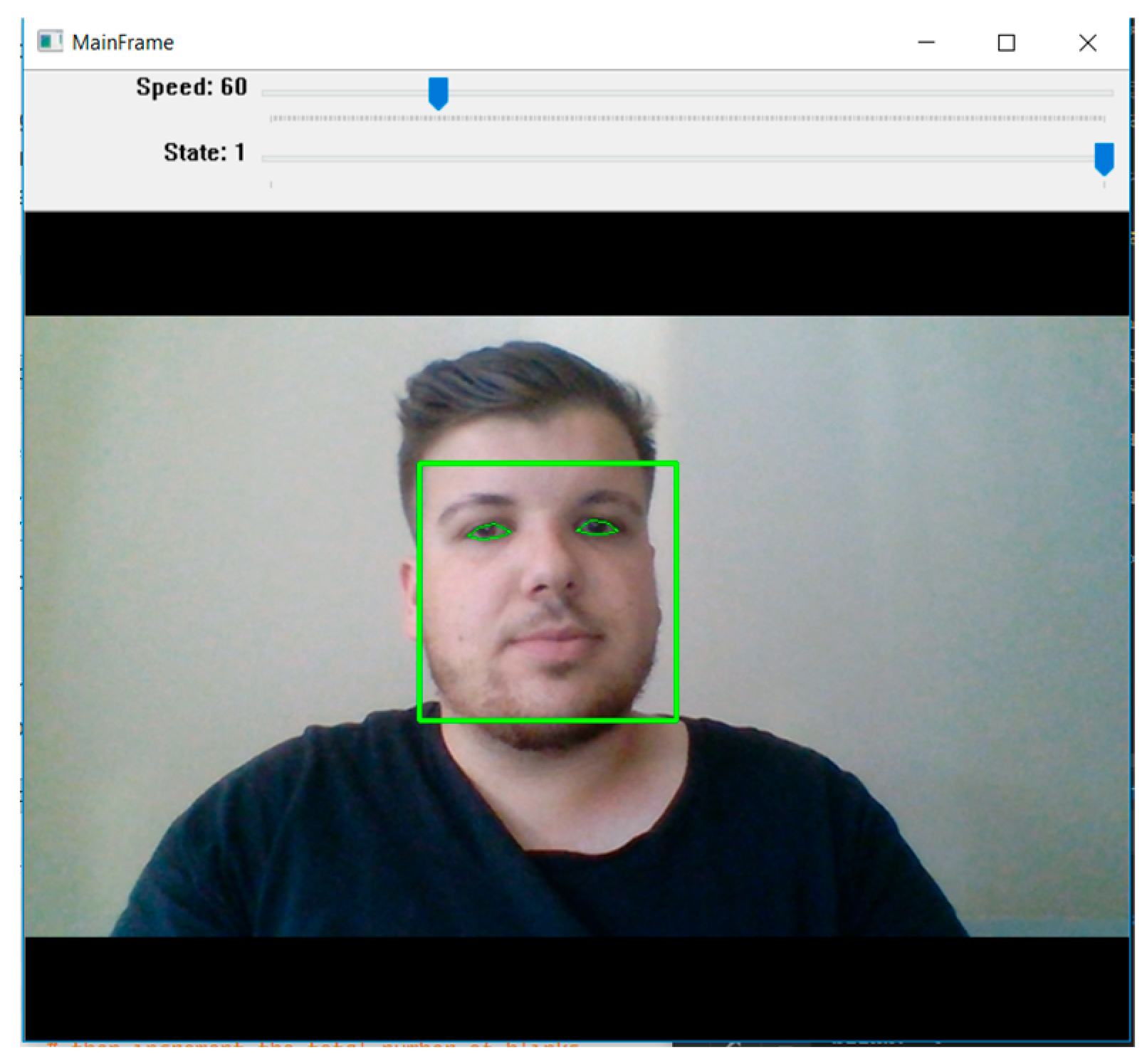

Since the proposed system depends on the system state and the vehicle speed, a simple graphical interface was created that allows the process to be simulated and the video camera images to be viewed in real time (see Figure 3).

To obtain images from the camera, the first step is to create a VideoCapture object. The argument 0 passed to VideoCapture selects the default camera, since only one camera is connected. To simulate the system state and the vehicle speed, the trackbars shown in Figure 3, positioned at the top of the image, are used.

In the initialization part, the time intervals for determining the blink rate, evaluating the heart rate, and counting yawns are defined, together with the durations for which light or acoustic and light signals are generated; all of these are based on the configurable parameters defined previously.
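The initialization step can be illustrated with a small Python sketch that turns interval lengths into absolute deadlines using `time.monotonic()`. The dictionary keys and the helper names `init_deadlines` and `expired` are hypothetical; the interval lengths are the default values of the configurable parameters. Passing `now` explicitly keeps the logic testable without waiting.

```python
import time
from typing import Dict, Optional

# Interval lengths in seconds (default values of the configurable parameters).
INTERVALS: Dict[str, float] = {
    "blinking": 300,          # TIME_FOR_COUNTING_BLINKING
    "heart_rate": 30,         # TIME_FOR_GETTING_HR
    "yawning": 300,           # TIME_FOR_COUNTING_YAWNING
    "optical_warning": 20,    # TIME_FOR_OPTICAL_WARNING
    "acoustic_warning": 15,   # TIME_FOR_ACUSTICAL_WARNING
}


def init_deadlines(now: Optional[float] = None) -> Dict[str, float]:
    """Return the absolute end time of each measurement/warning window."""
    start = time.monotonic() if now is None else now
    return {name: start + length for name, length in INTERVALS.items()}


def expired(deadlines: Dict[str, float], name: str,
            now: Optional[float] = None) -> bool:
    """True once the named window has elapsed."""
    current = time.monotonic() if now is None else now
    return current >= deadlines[name]
```

A monotonic clock is used because, unlike wall-clock time, it cannot jump backwards when the system time is adjusted.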

3.4. Sleep Quality Evaluation

If the current system state is ACTIVE, the previous system state was OFF, and the speed is 0, the first metric needed to determine the fatigue level is evaluated. The variable sleep_quality_evaluation_performed is initialized to False and becomes True only once a valid sleep quality score for the previous night has been obtained. This variable ensures that the sleep quality evaluation is performed only once when the vehicle is started.

The GetSleepQualityEvaluation() function takes no input parameters and returns the sleep quality evaluation score. First, the driver is asked whether it is a new day; if it is not, the sleep quality evaluation for the previous night has already been performed, and the result is read from a file. If the driver enters an invalid option when asked whether it is a new day, the sleep quality score is automatically set to the error value, i.e., −1. If it is a new day, the driver must rate the previous night’s sleep quality on a scale of 1 to 5, with 5 representing very good sleep quality and 1 meaning that the driver did not sleep the previous night. Grade 5 corresponds to a score of 0%, grade 4 to 25%, grade 3 to 75%, and grade 1 to 100%. If the driver enters an invalid option when rating sleep quality, the score is likewise set to the error value, i.e., −1. If the score differs from the error value and it is a new day, the score is written to the file and used for the entire day. After this score has been established, the reference values for blink rate and heart rate are read from the file.
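The decision flow of GetSleepQualityEvaluation() can be sketched as follows. Unlike the described function, which prompts the driver, this hypothetical version receives the two answers as arguments so that it can be tested; the answer strings `"YES"`/`"NO"`, the plain-text score file, and the function name are assumptions. The grade-to-score mapping contains only the grades whose scores are stated in the text; grade 2 has no stated score, so this sketch rejects it with the error value.

```python
from pathlib import Path
from typing import Dict, Optional

ERROR_SCORE = -1
# Grade -> sleep quality score (%) as stated in the text; grade 2 has no
# stated score, so it is deliberately absent from this sketch's mapping.
GRADE_TO_SCORE: Dict[int, int] = {5: 0, 4: 25, 3: 75, 1: 100}


def get_sleep_quality_evaluation(new_day_answer: str,
                                 grade_answer: Optional[str],
                                 score_file: Path) -> int:
    """Return the sleep quality score (%), or ERROR_SCORE on invalid input."""
    answer = new_day_answer.strip().upper()
    if answer == "NO":
        # Not a new day: reuse the score stored earlier today, if any.
        if not score_file.exists():
            return ERROR_SCORE
        return int(score_file.read_text().strip())
    if answer != "YES":
        return ERROR_SCORE  # invalid answer to "Is it a new day?"
    try:
        grade = int((grade_answer or "").strip())
    except ValueError:
        return ERROR_SCORE  # non-numeric grade
    score = GRADE_TO_SCORE.get(grade, ERROR_SCORE)
    if score != ERROR_SCORE:
        # Valid score on a new day: persist it for the rest of the day.
        score_file.write_text(str(score))
    return score
```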

3.5. Determining Heart Rate Values

The next step is determining the heart rate. If the system state is ACTIVE and the vehicle speed is between HR_SPEED_THRESHOLD_L and HR_SPEED_THRESHOLD_H, the GetHeartRate() function is called to obtain the heart rate.

Until the time required to determine the heart rate, stored in the variable end_time_for_getting_hr, has elapsed, heart rate values are read from a pulse sensor connected to an Arduino board; these values are then used to determine the average heart rate. When called, the GetHeartRate() function sends a signal to the Arduino announcing that heart rate values are to be read.

The heart rate sensor used is a photoplethysmograph, a plug-and-play sensor designed for Arduino. Its output signal is an analog voltage, sampled every 2 ms.
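As an illustration of how an average heart rate could be derived from PPG samples read every 2 ms, the sketch below counts rising crossings of a mid-level threshold and converts the mean inter-beat interval into beats per minute. This simple threshold detector, its 250 ms refractory period, and the helper `percent_change` are assumptions; the text does not specify the beat-detection method used by the sensor or the Arduino code.

```python
from typing import List, Sequence

SAMPLE_PERIOD_S = 0.002  # the PPG voltage is read every 2 ms


def detect_beats(samples: Sequence[float], sample_period: float = SAMPLE_PERIOD_S,
                 refractory_s: float = 0.25) -> List[int]:
    """Return sample indices where the signal rises through its mid-level."""
    threshold = (min(samples) + max(samples)) / 2.0
    refractory = round(refractory_s / sample_period)  # min samples between beats
    beats: List[int] = []
    for i in range(1, len(samples)):
        rising = samples[i - 1] < threshold <= samples[i]
        # Ignore crossings too close to the previous beat (noise).
        if rising and (not beats or i - beats[-1] >= refractory):
            beats.append(i)
    return beats


def mean_bpm(samples: Sequence[float], sample_period: float = SAMPLE_PERIOD_S) -> float:
    """Average heart rate (beats/min) from the mean inter-beat interval."""
    beats = detect_beats(samples, sample_period)
    if len(beats) < 2:
        raise ValueError("need at least two beats to estimate the heart rate")
    mean_ibi_s = (beats[-1] - beats[0]) / (len(beats) - 1) * sample_period
    return 60.0 / mean_ibi_s


def percent_change(current: float, reference: float) -> float:
    """Signed change relative to the calibration reference, in percent."""
    return (current - reference) * 100.0 / reference
```

As a check, a synthetic 1.2 Hz sine sampled for 30 s (the default TIME_FOR_GETTING_HR) yields about 72 beats/min.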

3.6. Blink Rate Determination

Facial feature detection is a subset of the shape prediction problem. Given an input image (and typically a region of interest, ROI), a shape predictor attempts to locate key points of interest along the shape.

In the context of facial feature detection, the goal is to detect important facial structures using shape prediction. Facial feature detection is a two-step process: first, the face is localized in the image; second, the key facial structures are detected within the face ROI.

For face detection, an object detector based on HOG (Histogram of Oriented Gradients) features and an SVM (Support Vector Machine) was used. This approach was proposed by Dalal and Triggs [23], who demonstrated that the HOG image descriptor combined with an SVM can be used to train accurate object classifiers, in their case for person detection. The detector’s output is the face bounding box, i.e., the (x, y) coordinates of the face in the image.

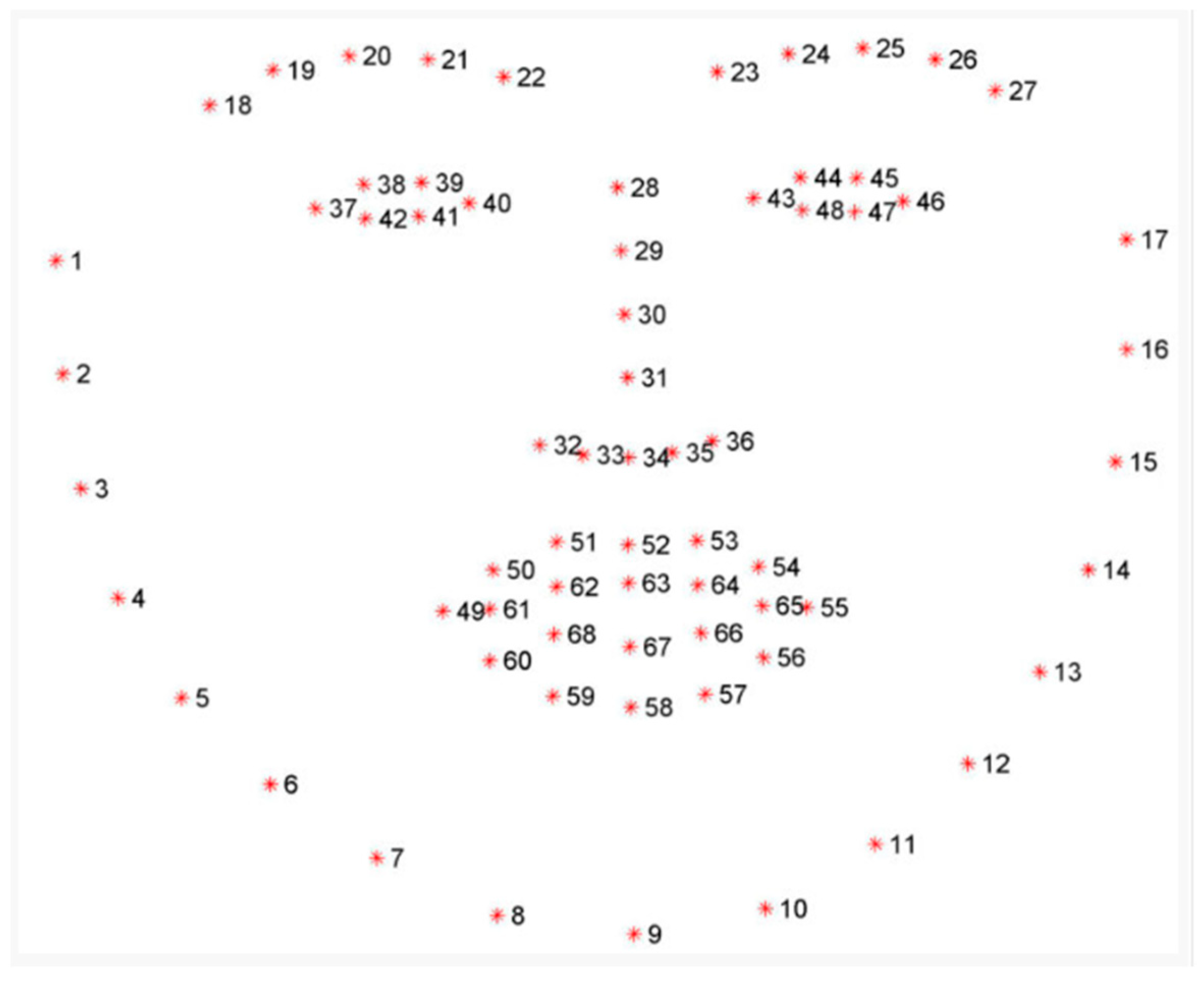

The facial landmark detector implemented in the dlib library is used to estimate the locations of 68 (x, y) coordinates that map to facial structures (see Figure 4). As the figure shows, facial regions can be accessed by simple indexing in Python: the mouth through points (49, 68), the right eyebrow through points (18, 22), the left eyebrow through points (23, 27), the right eye through (37, 42), the left eye through (43, 48), the nose through (28, 36), and the jaw through points (1, 17).
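The 1-based point ranges of the 68-point annotation translate to 0-based Python slices by subtracting one from the first index only, which is why the mouth later appears as (48, 68). The sketch below encodes the standard 68-point regions, in which points 1–17 trace the jawline; the names `REGIONS_1_BASED`, `to_python_slice`, and `REGION_SLICES` are hypothetical.

```python
from typing import Dict, Tuple

# 1-based, inclusive point ranges of the standard 68-point annotation.
REGIONS_1_BASED: Dict[str, Tuple[int, int]] = {
    "jaw": (1, 17),
    "right_eyebrow": (18, 22),
    "left_eyebrow": (23, 27),
    "nose": (28, 36),
    "right_eye": (37, 42),
    "left_eye": (43, 48),
    "mouth": (49, 68),
}


def to_python_slice(first: int, last: int) -> slice:
    """Convert a 1-based inclusive range into a 0-based, end-exclusive slice."""
    return slice(first - 1, last)


REGION_SLICES: Dict[str, slice] = {
    name: to_python_slice(*bounds) for name, bounds in REGIONS_1_BASED.items()
}
```

With a landmark array `shape` of 68 points, `shape[REGION_SLICES["left_eye"]]` then yields the six left-eye points.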

The blink detection process occurs when the system state is ACTIVE and the speed of movement is greater than 5 km/h.

The first step in face and facial feature detection is to initialize the detector and create the predictor. Before facial features can be detected, the face must first be located by the detector, which returns the bounding box (x, y) coordinates of each face detected in the image. After the face has been detected in the video stream, the predictor is applied to the region of interest, providing the 68 (x, y) coordinates that represent the specific facial features.

Once the 68 (x, y) coordinates of the facial landmarks are obtained, the coordinates of the left and right eyes are extracted and the eye aspect ratio is calculated for each eye. To obtain the best possible blink estimate, the two eye aspect ratios are averaged, as suggested by Soukupova and Cech [23], since a person is assumed to blink with both eyes at once.

A function called EAR() was defined to calculate the ratio between the distances separating the vertical eye landmarks and the distance separating the horizontal eye landmarks. The vertical distances are computed as the Euclidean distances between two pairs of vertical eye landmarks (x, y), for both the left and right eyes, and the horizontal distance is likewise a Euclidean distance. These distances are then used to compute the eye aspect ratio. The value returned by EAR() is approximately constant while the eye is open, drops rapidly toward zero during a blink, and remains close to zero while the eye stays closed (see Figure 5).

The Python code for the EAR() function is presented below:

    # eye aspect ratio (EAR)
    from scipy.spatial import distance as dist


    def EAR(e):
        # e holds the six (x, y) landmarks of one eye, in annotation order;
        # Euclidean distance is used because each landmark is an (x, y) pair
        # vertical distances between the upper and lower eyelid landmarks
        VD1 = dist.euclidean(e[1], e[5])
        VD2 = dist.euclidean(e[2], e[4])
        # horizontal distance between the two eye corners
        HD = dist.euclidean(e[0], e[3])
        # compute the eye aspect ratio
        eye_aspect_ratio = (VD1 + VD2) / (2.0 * HD)
        return eye_aspect_ratio

At this point, the (averaged) eye aspect ratio has been calculated, but it has not yet been determined whether a blink occurred. To detect a blink, the eye aspect ratio is compared with the threshold EAR_THRESHOLD; if it is lower, the counter of consecutive closed-eye frames is incremented. Otherwise, the system checks whether the eyes were closed for enough consecutive frames to count as a blink; if so, the TOTAL variable, which holds the number of blinks, is incremented. If the eyes are detected as closed for more than 10 consecutive frames, acoustic and visual warning signals are generated.
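The consecutive-frame rule can be sketched as a small Python class fed with one averaged EAR value per frame. The class name `BlinkCounter`, the EAR_THRESHOLD value of 0.25, and the minimum of 3 closed frames per blink are assumptions, as the text gives none of them; the 10-frame limit for the long-closure alarm comes from the text. In this sketch, a long closure also counts as one blink once the eyes reopen.

```python
class BlinkCounter:
    """Counts blinks from per-frame EAR values using consecutive frames."""

    def __init__(self, ear_threshold: float = 0.25, consec_frames: int = 3,
                 alarm_frames: int = 10) -> None:
        self.ear_threshold = ear_threshold  # EAR_THRESHOLD (assumed value)
        self.consec_frames = consec_frames  # min closed frames per blink (assumed)
        self.alarm_frames = alarm_frames    # closed longer than this -> alarm
        self.counter = 0                    # consecutive closed-eye frames
        self.total = 0                      # TOTAL: blinks counted so far

    def update(self, ear: float) -> bool:
        """Process one frame; return True if a long-closure alarm is due."""
        if ear < self.ear_threshold:
            self.counter += 1
            return self.counter > self.alarm_frames
        # Eyes open again: count a blink if they were closed long enough.
        if self.counter >= self.consec_frames:
            self.total += 1
        self.counter = 0
        return False
```

Requiring several consecutive closed frames filters out single-frame EAR dips caused by landmark jitter, at the cost of missing very fast blinks at low frame rates.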

After each counting interval, the number of blinks is stored in a vector, which is then used to determine the blink-rate fatigue score. To reduce errors, the blink rate is counted over five intervals of 5 min each. After the five intervals have elapsed, their average is calculated and compared with the reference value obtained during calibration, and the percentage difference is computed to determine the blink-rate fatigue score. The percentage difference is meaningful only if the reference value is lower than the current value, since a faster blink rate indicates that the fatigue level has started to increase.

If the reference value is lower than the current value, best_blinking_value is then initialized with the average of the five values. The percentage difference relative to the reference is computed only once; afterwards, the system checks whether the current blink rate has increased further, and if so, the blink-rate score is incremented by 10 percent.
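The blink-rate scoring rule can be sketched as follows, under the assumptions that the later 10-percent increments are triggered when the five-interval average exceeds best_blinking_value and that the reference value is positive. The class name `BlinkRateScorer` and its `update` method are hypothetical.

```python
from statistics import mean
from typing import List, Optional


class BlinkRateScorer:
    """Blink-rate fatigue score from five 5-min counts vs. a calibrated reference."""

    def __init__(self, reference: float, step: float = 10.0) -> None:
        self.reference = reference        # blinks per 5 min at calibration (> 0)
        self.step = step                  # later increments, in percent
        self.best_blinking_value: Optional[float] = None
        self.score = 0.0

    def update(self, counts: List[int]) -> float:
        """Update the score from five consecutive 5-min blink counts."""
        if len(counts) != 5:
            raise ValueError("expected five interval counts")
        current = mean(counts)
        if self.best_blinking_value is None:
            # First check: percentage increase over the reference, if any.
            if current > self.reference:
                self.score = (current - self.reference) * 100.0 / self.reference
                self.best_blinking_value = current
        elif current > self.best_blinking_value:
            # Later checks (assumed rule): each further increase adds a step.
            self.score += self.step
            self.best_blinking_value = current
        return self.score
```

For a reference of 50 blinks per 5 min, five counts of 60 give a score of 20%; a subsequent average of 62 raises it to 30%.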

3.7. Yawning Determination

The yawning determination process follows the same principle as blink rate determination. It relies on the previously extracted facial landmarks, but this time on the mouth landmarks, i.e., indices (48, 68).

To detect yawning, the distance between the upper and lower lips is used. First, the center of the upper lip is computed as the mean of the landmarks with indices (50, 53) and (61, 64); then the center of the lower lip is computed as the mean of the landmarks with indices (65, 68) and (56, 59). Finally, the difference between the upper-lip center and the lower-lip center is calculated; this difference is the value returned by the yawn_detection_parameters() function.

This lip distance is then used to detect yawning. If, in the current cycle, the distance exceeds the previously calibrated threshold LIPS_DISTANCE_THRESHOLD and, in the next cycle, it falls below the threshold, a yawn is detected. The yawn rate is evaluated every 5 min, and if at least one yawn has been detected in the last 5 min, the yawn score is increased by 10 percent.
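The lip-distance computation and the open-then-close rule can be sketched in Python as below, assuming a list of 68 (x, y) points with the upper-lip points at the 0-based slices [50:53] and [61:64] and the lower-lip points at [56:59] and [65:68]. The sketch measures only the vertical distance between the lip centers; the names `lip_distance`, `YawnDetector`, and `update_yawn_score` are hypothetical, and LIPS_DISTANCE_THRESHOLD must come from calibration.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def _mean_y(points: Sequence[Point]) -> float:
    """Mean y coordinate of a group of landmarks."""
    return sum(p[1] for p in points) / len(points)


def lip_distance(landmarks: Sequence[Point]) -> float:
    """Vertical distance between the upper- and lower-lip centres."""
    upper = list(landmarks[50:53]) + list(landmarks[61:64])
    lower = list(landmarks[56:59]) + list(landmarks[65:68])
    return abs(_mean_y(upper) - _mean_y(lower))


class YawnDetector:
    """Counts a yawn when the lip distance falls back below the threshold."""

    def __init__(self, lips_distance_threshold: float) -> None:
        self.threshold = lips_distance_threshold  # LIPS_DISTANCE_THRESHOLD
        self.mouth_open = False
        self.yawns = 0

    def update(self, distance: float) -> bool:
        """Process one cycle; return True when a yawn has just ended."""
        if distance > self.threshold:
            self.mouth_open = True
            return False
        if self.mouth_open:
            self.mouth_open = False
            self.yawns += 1
            return True
        return False


def update_yawn_score(score: float, yawns_in_window: int) -> float:
    """Add 10 percent if at least one yawn occurred in the last 5-min window."""
    return score + 10.0 if yawns_in_window > 0 else score
```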

3.8. Determination of the Level of Fatigue

The level of fatigue is determined by the computeLevelOfDrowsiness() function, which takes as input the score of each metric, together with flags confirming that each score has been determined. The fatigue level, LevelOfDrowsiness, is initialized to 0 and then computed from the scores recorded for each metric.

Four cases were considered: all four metrics evaluated; three metrics evaluated, either without the heart rate or without the sleep quality assessment; and only blink rate and yawning evaluated.

If all four metric scores have been determined, the weights are as follows: the heart rate score has the highest weight, 35%, followed by the blink rate score at 30% and the yawning score at 25%, while the self-assessment of sleep quality has the lowest weight, 10%. Because sleep quality assessment can be subjective, this metric is given a low weight in determining the fatigue level.

If scores were obtained only for blink rate, yawning, and sleep quality, the weights are 40% for the blink rate score, 40% for the yawning score, and 20% for the sleep quality score. If the sleep quality assessment was not performed but the heart rate score was determined, the weights are 40% for the heart rate score and 30% each for the blink rate and yawning scores.

Since the blink rate and yawning are computed automatically, without driver intervention, the fatigue level can also be calculated from these two scores alone, each weighted at 50%. At a minimum, the blink rate and yawning scores must be determined for the fatigue level to be calculated.

Since we found no studies quantifying the individual influence of each of the four metrics, we assumed that they influence fatigue approximately equally, except for the metric evaluated by the driver, namely sleep quality, which was given a lower weight.
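The weighting rules of computeLevelOfDrowsiness() can be sketched as a lookup keyed by which optional scores are available. In this hypothetical version, `None` stands in for the “score determined” flags, the function name is written in snake case, and the result is `None` when the blink rate or yawning score is missing; the weights are those stated above.

```python
from typing import Dict, Optional, Tuple

# Weights keyed by (heart rate available, sleep quality available).
WEIGHTS: Dict[Tuple[bool, bool], Dict[str, float]] = {
    (True, True): {"hr": 0.35, "blink": 0.30, "yawn": 0.25, "sleep": 0.10},
    (False, True): {"blink": 0.40, "yawn": 0.40, "sleep": 0.20},
    (True, False): {"hr": 0.40, "blink": 0.30, "yawn": 0.30},
    (False, False): {"blink": 0.50, "yawn": 0.50},
}


def compute_level_of_drowsiness(blink: Optional[float], yawn: Optional[float],
                                hr: Optional[float] = None,
                                sleep: Optional[float] = None) -> Optional[float]:
    """Weighted fatigue level in %; None if blink or yawn score is missing."""
    if blink is None or yawn is None:
        return None  # blink rate and yawning are the minimum requirement
    scores = {"hr": hr, "blink": blink, "yawn": yawn, "sleep": sleep}
    weights = WEIGHTS[(hr is not None, sleep is not None)]
    # Only available scores appear in the selected weight set.
    return sum(w * scores[name] for name, w in weights.items())
```

For instance, with blink = 40, yawn = 10, heart rate = 20, and sleep = 25, the result is 0.35 × 20 + 0.30 × 40 + 0.25 × 10 + 0.10 × 25 = 24%.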

3.9. Generation of Warning Signals

If the fatigue level reaches or exceeds OPTICAL_WARNING_THRESHOLD_L but is less than or equal to OPTICAL_WARNING_THRESHOLD_H, light signals are generated. If the fatigue level exceeds ACUSTICAL_WARNING_THRESHOLD, the generation of acoustic and light signals begins. If the fatigue level reaches neither threshold, no warning signals are generated.

Visual, or combined visual and acoustic, signals are generated using an Arduino board. Light signals use the yellow LED, while combined light and sound signals use both the yellow LED and the red LEDs, with the acoustic signal produced by a speaker.
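The threshold comparison can be sketched as a pure function that maps a fatigue level to a warning type; driving the LEDs and speaker through the Arduino is omitted. The enum name `WarningLevel` and the function name `classify_warning` are hypothetical, and OPTICAL_WARNING_THRESHOLD_H is assumed to be 85. Following the parameter description of ACUSTICAL_WARNING_THRESHOLD, the acoustic threshold is treated as inclusive (≥ 85.001).

```python
from enum import Enum


class WarningLevel(Enum):
    NONE = "none"
    VISUAL = "visual"                            # yellow LED only
    ACOUSTIC_AND_VISUAL = "acoustic_and_visual"  # yellow and red LEDs plus speaker


def classify_warning(level: float,
                     optical_l: float = 75.0,   # OPTICAL_WARNING_THRESHOLD_L
                     optical_h: float = 85.0,   # OPTICAL_WARNING_THRESHOLD_H (assumed)
                     acoustic: float = 85.001   # ACUSTICAL_WARNING_THRESHOLD
                     ) -> WarningLevel:
    """Map a fatigue level (%) to the warning that should be generated."""
    if level >= acoustic:
        return WarningLevel.ACOUSTIC_AND_VISUAL
    if optical_l <= level <= optical_h:
        return WarningLevel.VISUAL
    return WarningLevel.NONE
```

Note that with these defaults a level strictly between 85 and 85.001 triggers no warning; the tiny gap is a consequence of the default values rather than a deliberate design choice in this sketch.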

4. Experimental Results

To clarify the experimental process and the interpretation of the data, part of one experimental set is shown in Table 1. The meanings of the table columns are described below.

Day 1: Test day 1, weekday

Day 2: Test day 2, non-working day

HRC: Heart rate reference value

HRT1: Heart rate value at time 1

HRT2: Heart rate value at time 2

BRC: Blink rate reference value

BRT1: Blink rate at time 1

BRT2: Blink rate at time 2

CT1: Yawn rate at time 1

CT2: Yawn rate at time 2

ECS: Sleep quality assessment percentage

LvlT1: Fatigue level at time 1

LvlT2: Fatigue level at time 2

The test subject was a male aged 20–25 years. The system calibration took place in the morning (9:00–10:00), when the subject was rested; this was the first stage. The calibration results are presented in the HRC and BRC columns. The threshold used for yawn detection, i.e., the distance between the upper and lower lips, was also calibrated.

The second stage consisted of collecting new data on heart rate, blink rate, the previous night’s sleep assessment, and yawning. This stage took place between 16:00 and 18:30, referred to as time point T1. On the first test day, the subject was tested immediately after returning from work.

The results at time T1 show changes in both the heart rate and the blink rate. In the ECS column, the subject rated his previous night’s sleep quality with a score of 4, meaning that he had slept well the night before the evaluation. The heart rate at time T1 on Day 1, column HRT1, decreased by beats per minute. The blink rate at time T1, column BRT1, also changed, increasing by approximately 40%. These results suggest that the subject’s fatigue level had begun to increase. The fatigue level evaluated by the system at time T1 was 17.75% (column LvlT1).

Time point T2 corresponds to the interval 21:00–24:00. Between T1 and T2, both the blink rate (column BRT2) and the heart rate (column HRT2) changed. The heart rate decreased by approximately 10% relative to the reference value and by approximately 6% relative to its value at T1. The blink rate increased by approximately 3.5% relative to T1 and by approximately 49% relative to the reference value. Yawning was also detected at T2: the subject yawned three times during the fatigue assessment (column CT2).
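Yawns such as those counted in column CT2 can be detected by comparing the measured lip distance with the calibrated threshold. The Python sketch below counts one yawn for each run of consecutive video frames in which the lip distance exceeds the threshold. The function `count_yawns` and the minimum run length `min_frames` (used to ignore brief mouth openings, e.g. while speaking) are assumptions for illustration, not details given in the paper.

```python
def count_yawns(lip_distances, threshold, min_frames=15):
    """Count yawns as runs of consecutive frames above the lip-distance threshold.

    lip_distances -- per-frame distance between the upper and lower lips
    threshold     -- calibrated yawning threshold (same units as the distances)
    min_frames    -- ASSUMPTION: minimum run length (about 0.5 s at 30 fps)
                     required before a mouth opening is counted as a yawn
    """
    yawns = 0
    run = 0  # length of the current run of frames above the threshold
    for d in lip_distances:
        if d > threshold:
            run += 1
            if run == min_frames:  # count each run once, when it becomes long enough
                yawns += 1
        else:
            run = 0
    return yawns
```

A run shorter than `min_frames` is ignored, and a long run is still counted only once, so a single sustained yawn does not inflate the count.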

According to the results obtained at time T2, the subject's fatigue level was much higher than at the time of system calibration and had risen by about 70% compared with time T1, reaching 30.25% at T2.

On the second test day, the same time points as on Day 1 were used, but this time the subject was tested after a day off on which no demanding tasks were performed. Table 1 shows that the subject had a very good, deep sleep the night before testing, rating its quality with a score of 5. At time T1, the heart rate decreased by only two beats per minute compared with the reference value, and the blink rate increased by approximately 24% compared with the reference value. The subject did not yawn at T1, and the fatigue level determined by the system at T1 was 9.25%.

At time point T2 on Day 2, apart from the blink rate, the metric values did not change significantly compared with the reference values. The heart rate was equal to the reference value. The blink rate increased only very slightly compared with time point T1, to approximately 25% above the reference value. The subject yawned only once during testing at T2, and the fatigue level determined by the system from the four metrics was 11.5%.
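The paper does not give the formula that combines the four metrics into a single fatigue percentage. Purely as a hypothetical illustration of such a fusion, the Python sketch below maps each metric to a 0–100 sub-score and averages the four with equal weights. The function `fatigue_level`, every mapping, the 1–5 sleep-score scale, and the `max_yawns` saturation point are assumptions, and the sketch is not expected to reproduce the values in Table 1.

```python
def clamp(x, lo=0.0, hi=100.0):
    """Limit x to the range [lo, hi]."""
    return max(lo, min(hi, x))


def fatigue_level(hr, hr_ref, blink_rate, blink_ref, yawns, sleep_score,
                  max_yawns=5, max_sleep_score=5):
    """HYPOTHETICAL fusion of the four metrics into a 0-100 % fatigue level.

    Every mapping below is an illustrative assumption, not the paper's formula.
    sleep_score is assumed to use a 1 (poor) to max_sleep_score (very good) scale.
    """
    # Percentage drop in heart rate below the reference (increases are ignored).
    hr_drop = clamp((hr_ref - hr) / hr_ref * 100.0)
    # Percentage rise in blink rate above the reference (decreases are ignored).
    blink_rise = clamp((blink_rate - blink_ref) / blink_ref * 100.0)
    # Yawn count scaled so that max_yawns or more yawns gives 100.
    yawn_score = clamp(yawns / max_yawns * 100.0)
    # Poorer sleep gives a higher sub-score: best score -> 0, worst score -> 100.
    sleep_deficit = clamp((max_sleep_score - sleep_score) / (max_sleep_score - 1) * 100.0)
    # Equal weights: the fatigue level is the mean of the four sub-scores.
    return (hr_drop + blink_rise + yawn_score + sleep_deficit) / 4.0
```

Equal weighting is the simplest choice; a real system would more likely tune per-metric weights and mappings against labelled data.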

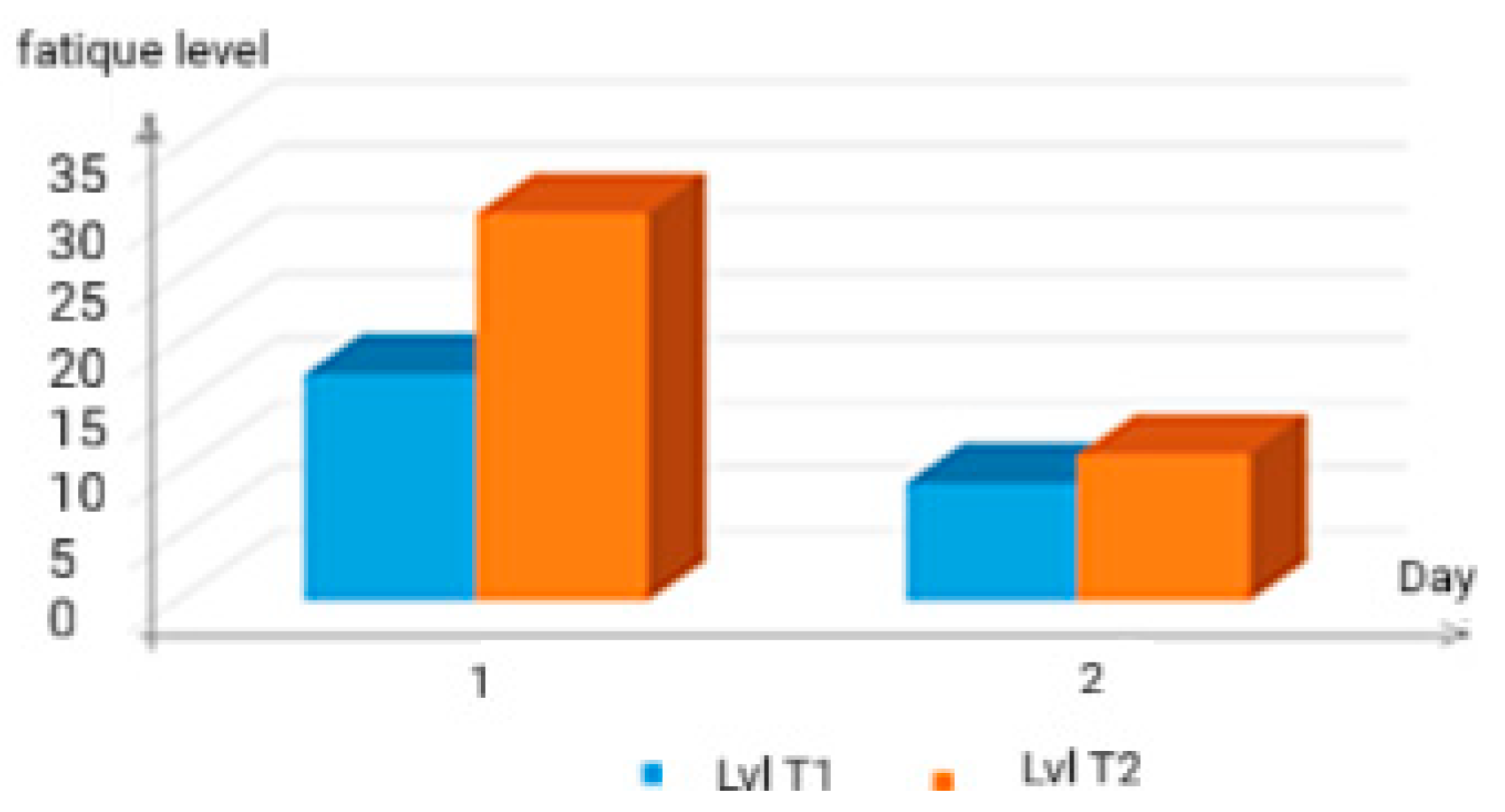

According to Figure 6, there is a very large difference between the fatigue levels on test Day 1 and test Day 2: approximately 50% at time point T1 and over 50% at time point T2.
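Assuming these differences are expressed relative to the Day 1 values reported in the text (17.75% at T1 and 30.25% at T2, against 9.25% and 11.5% on Day 2), they can be checked as follows:

$$
\begin{aligned}
\Delta_{T1} &= \frac{17.75 - 9.25}{17.75} \times 100\% \approx 47.9\%,\\
\Delta_{T2} &= \frac{30.25 - 11.5}{30.25} \times 100\% \approx 62.0\%.
\end{aligned}
$$

These agree with the approximately 50% at T1 and over 50% at T2 stated in the text; expressed instead as absolute differences, they would be 8.5 and 18.75 percentage points.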

Table 1 presents only a subset of the experiments, together with their interpretation, to illustrate how the proposed system works. To draw relevant conclusions, we conducted 10 experiments for each combination of age group and time interval, i.e., 60 experiments in total: two age groups (20–25 and 55–60) across three time intervals (09:00–10:00, 12:00–14:00, and 21:00–24:00).
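The averages reported for each case can be obtained by grouping the runs by age group and time interval and averaging the fatigue level within each of the six groups. A minimal Python sketch follows; the function `average_by_cell` and the example runs are hypothetical and do not reflect the measured data.

```python
from collections import defaultdict
from statistics import mean


def average_by_cell(runs):
    """Average the fatigue level over all runs sharing an (age group, interval) cell.

    runs -- iterable of (age_group, interval, fatigue_level_percent) tuples;
            with 10 runs per cell, 2 age groups x 3 intervals give 60 tuples.
    """
    cells = defaultdict(list)
    for age_group, interval, level in runs:
        cells[(age_group, interval)].append(level)
    return {cell: mean(levels) for cell, levels in cells.items()}


# Hypothetical runs (NOT measured data): two runs in one cell, one in another.
runs = [
    ("20-25", "T1", 10.0),
    ("20-25", "T1", 20.0),
    ("55-60", "T3", 40.0),
]
print(average_by_cell(runs))
# {('20-25', 'T1'): 15.0, ('55-60', 'T3'): 40.0}
```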

The average fatigue levels obtained for each case are shown in Figure 7. For the 55–60 age group, the fatigue levels are higher than for the 20–25 age group, especially in time interval T3. This is expected, since high fatigue in older adults is a common issue with various potential causes, such as medical conditions, medication side effects, poor sleep quality or sleep deprivation, nutritional deficiencies, stress, anxiety or depression, and a lack of physical activity, which can lead to muscle weakness and decreased energy.

The higher fatigue level in the evening, in turn, is a normal part of the body's natural sleep–wake cycle and is also influenced by energy expenditure during the day.

Clearly, the four metrics we use only approximate the driver's actual level of fatigue. As mentioned above, many factors influence fatigue, in young people and especially in older adults. Unfortunately, many of these factors are difficult to measure and require complex, expensive equipment. Moreover, the hardware of our system is designed for these four metrics only.

5. Conclusions and Future Work

In this paper, we proposed a hybrid system for determining the level of driver fatigue. It uses four metrics: two visual characteristics (blink rate and yawning), heart rate variability, and an assessment of the previous night's sleep quality. Combining these four metrics improves the approximation of the driver's actual fatigue level, and adding new metrics is one of our future research directions. For example, the driver's voice, tone, and verbal flow in a conversation with another passenger will be taken into account when assessing the driver's fatigue state.

Fatigue monitoring must be as accurate as possible; therefore, in order to minimize the error in calculating the fatigue level, the system must be calibrated before use. The calibration process involves determining reference values for the following metrics: the heart rate, the driver’s blink rate, and the distance between the upper lip and the lower lip.

A timely visual or acoustic warning issued when the fatigue level is high can keep the driver from falling asleep, helping to prevent the many accidents caused by driver drowsiness. In the first phase, a visual warning is generated; it is followed by a combined visual–audible warning when the system determines a fatigue level greater than 85%.
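The escalation described above can be sketched as a simple threshold rule. Only the 85% threshold for the visual–audible warning comes from the text; the level at which the first, visual-only warning starts is not specified, so `VISUAL_THRESHOLD = 70.0` below is a hypothetical placeholder, as are the names `Alert` and `select_alert`.

```python
from enum import Enum


class Alert(Enum):
    NONE = 0
    VISUAL = 1          # first phase: visual warning only
    VISUAL_AUDIBLE = 2  # second phase: combined visual and audible warning


VISUAL_THRESHOLD = 70.0   # ASSUMPTION: not specified in the text
AUDIBLE_THRESHOLD = 85.0  # from the text: "greater than 85%"


def select_alert(fatigue_level):
    """Map a fatigue level (0-100 %) to an escalating driver warning."""
    if fatigue_level > AUDIBLE_THRESHOLD:
        return Alert.VISUAL_AUDIBLE
    if fatigue_level > VISUAL_THRESHOLD:
        return Alert.VISUAL
    return Alert.NONE
```

Both comparisons are strict, matching the "greater than 85%" wording, so a level of exactly 85% still produces only the visual warning.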

The results obtained indicate a close connection between the tasks or activities the subject carried out during the day and his level of fatigue.

Another direction of future research, already under way with promising preliminary results, is the creation of a programmable automaton that, using a video display, would replace the human subject in front of the camera. In essence, it would play back a video of any desired experimental scenario, together with the heart rate and other parameters that could be taken into account in assessing driver fatigue. Such an auxiliary system would rule out false alarms and, above all, would allow complex experimental scenarios to be created.