1. Introduction

Pesticide ingestion remains a significant public health issue, particularly in many Asian countries, where it is a common method of suicide and a leading cause of toxic exposure requiring emergency intervention. Despite national-level regulations, including the ban on paraquat in Korea, pesticide ingestion continues to cause a substantial clinical burden [1]. In recent years, although deaths from highly toxic pesticides such as paraquat and methomyl have declined due to regulatory bans, there has been a relative increase in suicide attempts involving less irritating, water-soluble, and lower-toxicity herbicides. Notably, fatalities related to glyphosate- and glufosinate-containing herbicides have been steadily rising in Korea [2]. As a result, pesticide poisoning persists as a major clinical concern, often requiring intensive care and posing significant challenges in acute management [3].

Compared to other chemical exposures, pesticide poisoning is associated with considerably higher fatality rates, owing to its diverse toxicological mechanisms and frequent complications including acid–base imbalance, shock, arrhythmias, hypotension, and respiratory failure [3,4,5]. In addition, it is often difficult to accurately determine the type and amount of pesticide ingested, and the heterogeneity of clinical presentations makes early severity assessment and treatment decisions particularly challenging. Although few studies have provided detailed time-to-death distributions across pesticide types, existing evidence indicates that most acute fatalities occur within the first few days after ingestion, with many deaths occurring within 1–2 days depending on the compound [6]. Given the rapid progression and high lethality of many pesticide toxicities, early resuscitation and timely initiation of treatment are critical to improving clinical outcomes [7].

According to a nationwide study on poisoning trends based on Korea’s national emergency medical data, pesticides were identified as the leading cause of both emergency department and in-hospital mortality among poisoning cases. Given the continued presence of a sizable agricultural population, a significant reduction in pesticide-related deaths remains unlikely. In particular, pesticides are a major cause of death among elderly patients with poisoning, who often have poorer prognoses due to age-related physiological vulnerability. As such, timely evaluation and early therapeutic intervention are especially critical when pesticide poisoning is suspected in this population [8].

To support clinical decision making, several prognostic tools, such as the APACHE II (Acute Physiology and Chronic Health Evaluation II) score, PSS (Poisoning Severity Score), SAPS (Simplified Acute Physiology Score), and SOFA (Sequential Organ Failure Assessment), have been used, along with key clinical indicators like mechanical ventilation and vasopressor use [5,9,10,11,12,13]. Although these tools are useful in general critical care populations, their predictive performance in pesticide-poisoned patients has been limited, with reported areas under the receiver operating characteristic curve (AUCs) ranging widely from 0.72 to 0.90. The Paraquat Poisoning Prognostic Score (PQPS) has been studied only in paraquat intoxication, while the PSS was designed for general toxic exposures and is not pesticide-specific. Likewise, APACHE II, SOFA, and SAPS II were originally developed for general intensive care unit (ICU) populations, and, although they have been applied to pesticide poisoning, they may not adequately reflect its distinct pathophysiology. Furthermore, as these tools were primarily developed for ICU patients based on data collected within the first 24 h of ICU admission, they are not specifically tailored for early risk stratification in the emergency department setting, where rapid decision making is crucial [13,14,15]. Recent methodological studies in machine learning and time-series forecasting have also noted that increasing model complexity does not necessarily guarantee superior predictive performance, emphasizing the importance of balancing accuracy with interpretability in applied settings [16].

Given the continued clinical burden of pesticide poisoning and the challenge of early severity assessment, there is a need for improved prognostic tools that help clinicians promptly identify high-risk patients. In this study, we aimed to develop and validate machine learning-based models to predict 14-day in-hospital mortality in patients with acute pesticide poisoning. By using only clinical and laboratory data available within the first 2 h of presentation, we sought to enable prompt risk stratification, support early prognostication, and ultimately improve the care of pesticide-intoxicated patients.

2. Materials and Methods

2.1. Study Population and Study Design

This observational retrospective cohort study was conducted at Soonchunhyang University Cheonan Hospital from January 2015 to December 2020. Over the study period, a total of 1081 patients diagnosed with acute pesticide poisoning were admitted to the Institute of Pesticide Poisoning.

Patients were excluded if laboratory test results were unavailable within the first two hours of hospital arrival or if key data were missing due to repeated hospital visits. Additionally, patients who died more than 14 days after admission were excluded, as pesticide-related mortality typically occurs within this time frame, and later deaths were more likely attributable to non-toxicological causes. After applying these criteria, 1056 patients were included in the final analysis. Of these, 885 survived, and 171 died within 14 days of admission. A detailed flow diagram outlining the inclusion and exclusion criteria is presented in Figure 1.

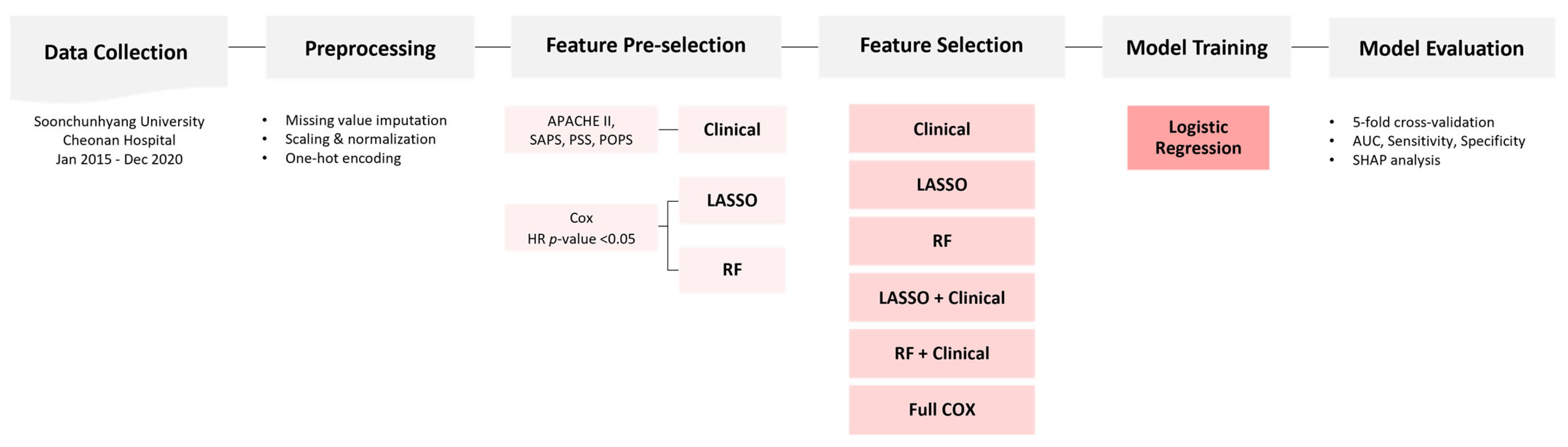

The study cohort was randomly divided into training and test sets in a 7:3 ratio. Stratified sampling was applied to maintain the distribution of pesticide types and mortality outcomes across both subsets. An overview of the feature selection, model development, and evaluation pipeline is illustrated in Figure 2.
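As an illustration of the stratified 7:3 split described above, the following minimal sketch builds a joint stratum label from pesticide type and outcome and passes it to scikit-learn's `train_test_split`. The data are synthetic, and the variable names (`pesticide`, `died`, `strata`) and the random seeds are assumptions made for this example; the exact procedure used in the study is not specified beyond the description in the text.

```python
import numpy as np
from sklearn.model_selection import train_test_split

rng = np.random.default_rng(0)
n = 1000

# Synthetic stand-ins for pesticide class and the 14-day death indicator.
pesticide = rng.choice(["glufosinate", "glyphosate", "paraquat"], size=n, p=[0.4, 0.4, 0.2])
died = rng.binomial(1, 0.16, size=n)

# Joint stratum label so that both pesticide type and outcome keep
# approximately the same proportions in the two subsets.
strata = np.array([f"{p}_{d}" for p, d in zip(pesticide, died)])

idx = np.arange(n)
train_idx, test_idx = train_test_split(
    idx, test_size=0.3, stratify=strata, random_state=42
)
```

Stratifying on the joint label requires every pesticide-by-outcome combination to contain at least two patients; rare combinations may need to be pooled before splitting.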

This study was conducted in accordance with the ethical principles of the Declaration of Helsinki and was approved by the Institutional Review Board of Soonchunhyang University Cheonan Hospital (IRB number: 2020-02-016). The requirement for written informed consent was waived by the Institutional Review Board due to the retrospective nature of the study and the use of anonymized patient data.

2.2. Data Collection

Demographic and clinical data were extracted from electronic medical records (EMRs) and documented by attending physicians using standardized data collection forms. The timing of pesticide exposure and hospital arrival was determined through a review of emergency department charts. The ingested volume of pesticide was estimated based on the number of reported swallows, with each swallow approximated to be 20 mL, as previously described in toxicology studies. Information was primarily obtained from patient or caregiver recall, occasionally corroborated by emergency records or remaining containers. To minimize recall bias, ingestion volume was categorized into broad groups (<100 mL, 100–300 mL, >300 mL, unknown). Only laboratory test results obtained within the first two hours of admission were included in the analysis to ensure consistency in early clinical assessment.
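The swallow-based volume estimate can be written out as a short sketch. The 20 mL per swallow figure and the four groups come from the text; the function name and the handling of the boundary values (exactly 100 mL and exactly 300 mL are placed in the middle group) are assumptions made for this example.

```python
ML_PER_SWALLOW = 20  # approximation stated in the text


def ingestion_category(n_swallows):
    """Map a reported number of swallows to a broad ingestion-volume group."""
    if n_swallows is None:
        return "unknown"
    volume_ml = n_swallows * ML_PER_SWALLOW
    if volume_ml < 100:
        return "<100 mL"
    if volume_ml <= 300:  # assumption: boundary values fall in the middle group
        return "100-300 mL"
    return ">300 mL"
```

For instance, three reported swallows correspond to an estimated 60 mL and are assigned to the lowest group.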

2.3. Data Processing

All preprocessing procedures were conducted using Python (version 3.11) and relevant libraries, including pandas, scikit-learn, and NumPy. Among the collected variables, only those with a missing rate of less than 10% were included for model development. One exception was the variable “seizure,” which was retained despite exceeding this threshold, based on its known clinical relevance to acute outcomes.

Variables were classified as numerical or categorical based on their data type and the number of unique values. To accurately reflect each patient’s initial clinical condition, only the first available test values were used. Numerical variables underwent outlier removal using the 1st and 99th percentiles and were subsequently rescaled to maintain unit consistency. Variables with skewed distributions—including procalcitonin (PCT), ethanol, creatine kinase (CK), CK-MB, and C-reactive protein (CRP)—were log-transformed.
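The outlier handling and log transformation can be sketched as follows. The text does not state whether values outside the 1st and 99th percentiles were dropped or capped, nor which logarithm was applied, so the sketch assumes capping (winsorizing) and a log(1 + x) transform; the biomarker values are synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
crp = rng.lognormal(mean=1.0, sigma=1.2, size=500)  # synthetic right-skewed biomarker

# Percentile bounds; in a real pipeline these would come from the training set only.
p1, p99 = np.percentile(crp, [1, 99])
crp_clipped = np.clip(crp, p1, p99)   # assumption: cap extreme values rather than drop rows
crp_log = np.log1p(crp_clipped)       # assumption: log(1 + x), which tolerates zeros


def skewness(x):
    """Sample skewness (third standardized moment)."""
    x = np.asarray(x, dtype=float)
    return float(np.mean((x - x.mean()) ** 3) / x.std() ** 3)
```

Comparing `skewness(crp)` with `skewness(crp_log)` shows how the transform reduces the right skew.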

Additional variables were rescaled based on clinical interpretability: specific gravity (SG) was multiplied by 1000; pH and PT-INR by 10; and triglycerides (TG), glucose, partial pressure of oxygen (pO2), partial pressure of carbon dioxide (pCO2), and platelet count were divided by 10. These steps were applied only to adjust the numeric range for easier interpretation of regression coefficients, without affecting model performance. All continuous variables were subsequently standardized using the StandardScaler, so prior rescaling only changed the units of coefficients without altering data distribution or model discrimination.
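The statement that a unit change followed by standardization leaves the data distribution unchanged can be checked numerically. The sketch below uses a manual z-score with the population standard deviation (the convention used by scikit-learn's `StandardScaler`); the pH values are illustrative and not taken from the cohort.

```python
import numpy as np


def standardize(x):
    """Z-score a 1-D array: subtract the mean, divide by the population SD."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


ph = np.array([7.10, 7.25, 7.32, 7.40, 7.45])  # illustrative values only
ph_x10 = ph * 10                                # unit change described in the text

z_raw = standardize(ph)
z_scaled = standardize(ph_x10)
max_diff = float(np.max(np.abs(z_raw - z_scaled)))  # zero up to floating-point error
```

Because multiplying by a positive constant scales the mean and the standard deviation by the same factor, the standardized values coincide, and any model fitted to them is unaffected by the earlier unit change.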

Categorical variables—including comorbidities, neurological symptoms, and urinalysis results—were binarized based on clinical relevance. Urine dipstick findings were semi-quantitatively encoded, while urinary red and white blood cell counts were categorized into ordinal tiers. Sex and smoking status were standardized and subsequently binarized or one-hot encoded. Pesticide type and estimated dose were also converted to dummy variables via one-hot encoding, with glufosinate and the lowest dose category designated as reference groups to prevent multicollinearity. Glufosinate was chosen due to its sufficient sample size and a median-level mortality rate among pesticides.
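A minimal sketch of one-hot encoding with explicit reference groups is given below, using `pandas.get_dummies`. The column names and category labels are hypothetical; only the choice of glufosinate and the lowest dose category as references follows the text.

```python
import pandas as pd

df = pd.DataFrame({
    "pesticide": ["glufosinate", "paraquat", "glyphosate", "glufosinate"],
    "dose": ["<100 mL", "100-300 mL", ">300 mL", "unknown"],
})

# One indicator column per category level.
dummies = pd.get_dummies(df, columns=["pesticide", "dose"], dtype=int)

# Dropping the reference columns leaves k-1 indicators per variable,
# which avoids perfect collinearity with the intercept.
X = dummies.drop(columns=["pesticide_glufosinate", "dose_<100 mL"])
```

A patient in both reference groups is then represented by a row of zeros, and each remaining coefficient is interpreted relative to glufosinate or to the lowest dose group.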

Troponin-T values were dichotomized using the clinical cut-off of 0.014 ng/mL, which corresponds to the standardized 99th percentile threshold for myocardial injury. Once this threshold is exceeded, the absolute magnitude of elevation reflects diverse conditions and is less interpretable as a continuous measure. Therefore, the variable was modeled as binary to reflect its established clinical significance [17].

The final feature set included scaled continuous variables, log-transformed biomarkers, binary indicators, categorized urine test values, and one-hot encoded categorical features. Redundant or original unprocessed variables were excluded from model input.

For machine learning implementation, Boolean variables were converted to integers (0 or 1). Missing values in categorical variables were imputed using the most frequent category. For numerical variables, the Multivariate Imputation by Chained Equations (MICE) method was applied. Finally, all continuous variables were standardized using the StandardScaler function from scikit-learn.
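The two imputation strategies can be sketched with scikit-learn. `IterativeImputer` is scikit-learn's chained-equations imputer modeled on MICE; whether this specific class was used in the study is an assumption, as are the synthetic data, the missingness rate, and the 200/50 row split. Both imputers are fitted on the training rows only and then applied to the held-out rows.

```python
import numpy as np
from sklearn.experimental import enable_iterative_imputer  # noqa: F401 (enables the class below)
from sklearn.impute import IterativeImputer, SimpleImputer

rng = np.random.default_rng(0)

# Synthetic numerical block with one correlated column and about 5% missing values.
X_num = rng.normal(size=(250, 3))
X_num[:, 2] = 0.8 * X_num[:, 0] + 0.2 * rng.normal(size=250)
X_num[rng.random(X_num.shape) < 0.05] = np.nan

# Synthetic binary-coded categorical block with about 5% missing values.
X_cat = rng.integers(0, 2, size=(250, 2)).astype(float)
X_cat[rng.random(X_cat.shape) < 0.05] = np.nan

train, test = slice(0, 200), slice(200, 250)

num_imputer = IterativeImputer(max_iter=10, random_state=0).fit(X_num[train])
cat_imputer = SimpleImputer(strategy="most_frequent").fit(X_cat[train])

X_num_test_imp = num_imputer.transform(X_num[test])
X_cat_test_imp = cat_imputer.transform(X_cat[test])
```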

2.4. Variable Selection

Candidate variables were initially screened using univariable Cox proportional hazards regression (p < 0.05), which evaluates the association between each variable and mortality based on time-to-event analysis. Following this initial filtering step, three distinct feature selection strategies were applied to construct the final feature sets: (1) clinical relevance, (2) Least Absolute Shrinkage and Selection Operator (LASSO) regularization, and (3) Random Forest (RF) importance ranking.

The clinically curated list was developed based on variables incorporated in established prognostic scoring systems commonly used in critically ill or poisoned patients, including APACHE II, SAPS, PSS, and POPS. Frequently used components of these scores—such as vital signs, neurological status, and laboratory values—were selected to ensure a meaningful and interpretable comparator [9,11,18]. Certain variables, including serum sodium and hematocrit, were retained in this list despite not reaching statistical significance in the univariable Cox analysis, due to their recognized roles in clinical assessment tools. In addition, chronic comorbidities such as diabetes mellitus (DM), chronic kidney disease (CKD), and hypertension (HTN) were also included to reflect baseline patient risk.

The LASSO-based feature set was constructed by applying L1-regularized logistic regression (LR) using the scikit-learn implementation of the LASSO algorithm. This method imposes a penalty on the absolute magnitude of regression coefficients, which effectively reduces less informative variables to zero, thereby yielding a sparse and interpretable model.
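The sparsity-inducing effect of the L1 penalty can be illustrated with a small synthetic example. The solver, the penalty strength `C`, and the data-generating model below are assumptions chosen for the sketch and are not the settings used in the study.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n, p = 600, 10
X = rng.normal(size=(n, p))

# Only the first two synthetic features carry signal.
logit = 1.5 * X[:, 0] - 1.5 * X[:, 1] - 1.0
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-logit)))

X_std = StandardScaler().fit_transform(X)

# Smaller C means a stronger L1 penalty and therefore fewer nonzero coefficients.
lasso_lr = LogisticRegression(penalty="l1", solver="liblinear", C=0.02)
lasso_lr.fit(X_std, y)

selected = np.flatnonzero(lasso_lr.coef_.ravel() != 0.0)  # indices of retained features
```

In practice the penalty strength would be tuned by cross-validation on the training set rather than fixed in advance.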

The RF-based feature set was derived by training a Random Forest classifier on the training data and selecting features with a mean decrease in impurity (MDI) of ≥0.01. This threshold identified variables with consistent and meaningful importance across decision trees in the ensemble. Features that demonstrated robust importance across bootstrapped samples were retained in the final list.
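Selection by mean decrease in impurity can be sketched as follows. In scikit-learn, `feature_importances_` of a fitted `RandomForestClassifier` holds the normalized MDI, which sums to 1 across features. The 0.01 threshold is taken from the text; the synthetic data and the forest settings are assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(1)
n, p = 600, 8
X = rng.normal(size=(n, p))

# Synthetic outcome driven mainly by the first feature.
y = (X[:, 0] + 0.5 * X[:, 1] + 0.3 * rng.normal(size=n) > 0.8).astype(int)

rf = RandomForestClassifier(n_estimators=200, random_state=0)
rf.fit(X, y)

mdi = rf.feature_importances_        # normalized mean decrease in impurity
keep = np.flatnonzero(mdi >= 0.01)   # threshold quoted in the text
```

With only a few continuous candidate features, uninformative columns can also exceed a 0.01 threshold, so the cutoff is most discriminating when the candidate set is large.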

All preprocessing procedures and feature selection strategies were applied exclusively to the training dataset to prevent data leakage and to ensure the generalizability of model evaluation on the test set.

2.5. Prediction Model Construction and Selection

We developed prediction models using LR. The moderate sample size and lack of external validation required a model with low overfitting risk and high generalizability. Compared to more complex machine learning algorithms, LR offers greater transparency and robustness, making it particularly suitable for small, heterogeneous clinical datasets, such as those involving diverse pesticide types and variable exposure profiles. The mechanistic heterogeneity across different pesticide classes poses challenges in modeling due to increased data sparsity and dimensionality when incorporating pesticide-specific variables. In such scenarios, linear models like LR are generally more resilient to overfitting and better equipped to handle sparse, high-dimensional data. Moreover, LR provides interpretable model coefficients and odds ratios (ORs), which facilitate clinical applicability and support decision making at the bedside. Based on these advantages, LR was selected as the primary modeling approach [19,20,21].

Six distinct feature sets were constructed based on Cox-filtered variables and three selection strategies: (1) full Cox-filtered feature set, (2) clinically curated features based on established prognostic scoring systems, (3) features selected via LASSO regularization, (4) a combination of LASSO-selected and clinical features, (5) features ranked by RF importance, and (6) a combination of RF-ranked and clinical features.

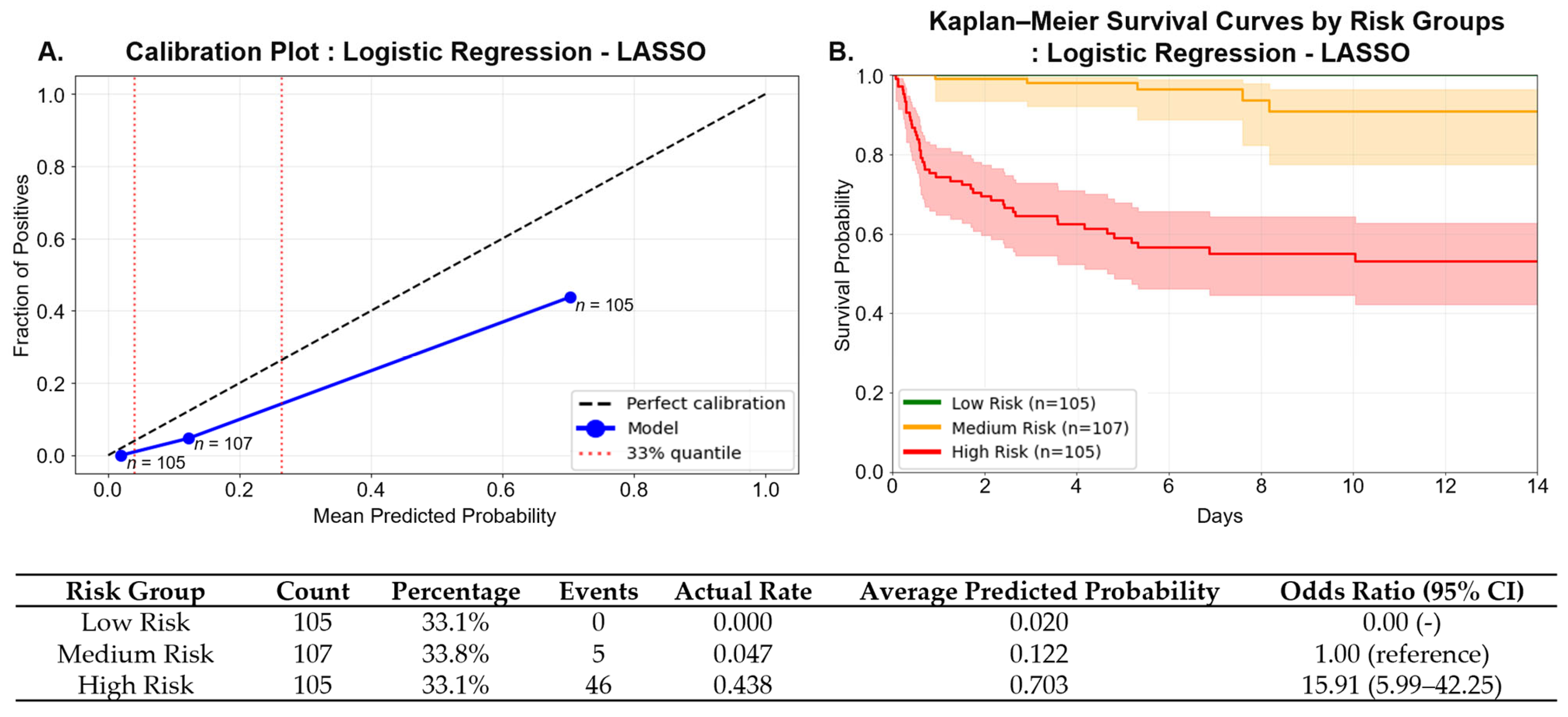

For each feature set, stratified 5-fold cross-validation was employed to preserve the distribution of the outcome variable and pesticide types across folds. Hyperparameter tuning was integrated within the cross-validation loop to determine the optimal model configuration. The best-performing model for each feature set was retrained using the entire training dataset and finalized with its corresponding optimal probability threshold.
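The cross-validated tuning and threshold selection can be sketched with scikit-learn. The hyperparameter grid, the use of AUC as the tuning criterion, and Youden's J as the threshold rule are assumptions, since the text does not specify them. The sketch also stratifies on the outcome only, whereas the study additionally preserved pesticide-type proportions across folds.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import roc_curve
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)
n = 700
X_train = rng.normal(size=(n, 5))
logit = 1.2 * X_train[:, 0] - 0.8 * X_train[:, 1] - 1.7
y_train = rng.binomial(1, 1.0 / (1.0 + np.exp(-logit)))

# Scaling sits inside the pipeline so it is re-fitted within each training fold.
pipe = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
grid = {"logisticregression__C": [0.01, 0.1, 1.0, 10.0]}  # hypothetical grid

search = GridSearchCV(pipe, grid, scoring="roc_auc", cv=cv)
search.fit(X_train, y_train)  # refit=True retrains the best setting on all training rows

# Assumed threshold rule: maximize Youden's J (sensitivity + specificity - 1).
probs = search.predict_proba(X_train)[:, 1]
fpr, tpr, thresholds = roc_curve(y_train, probs)
best_threshold = float(thresholds[np.argmax(tpr - fpr)])
```

Choosing the threshold on out-of-fold predictions instead of refitted training predictions would give a less optimistic operating point.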

Model performance was assessed on an independent test dataset. Data imputation and scaling procedures were applied using transformation parameters derived solely from the training set to prevent data leakage. To evaluate model robustness and generalizability, 1000 bootstrap resamples of the test set were performed, and 95% confidence intervals (CIs) for the area under the receiver operating characteristic curve (AUC) were computed. Final model evaluation considered test set AUC, number of features used, predictive accuracy, model simplicity, and performance stability, providing a comprehensive basis for model selection.
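A percentile bootstrap for the test-set AUC can be sketched as follows. The 1000 resamples and the 95% level follow the text; the percentile method, the synthetic scores, and the function name `bootstrap_auc_ci` are assumptions made for this example.

```python
import numpy as np
from sklearn.metrics import roc_auc_score

rng = np.random.default_rng(0)
n_test = 300
y_test = rng.binomial(1, 0.16, size=n_test)
scores = rng.normal(loc=1.5 * y_test, scale=1.0)  # synthetic risk scores


def bootstrap_auc_ci(y, s, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the AUC."""
    boot_rng = np.random.default_rng(seed)
    aucs = []
    while len(aucs) < n_boot:
        idx = boot_rng.integers(0, len(y), size=len(y))  # resample with replacement
        if y[idx].min() == y[idx].max():
            continue  # AUC is undefined when a resample contains a single class
        aucs.append(roc_auc_score(y[idx], s[idx]))
    lo, hi = np.percentile(aucs, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lo), float(hi)


point_auc = float(roc_auc_score(y_test, scores))
ci_low, ci_high = bootstrap_auc_ci(y_test, scores)
```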

2.6. Study Outcome

The primary outcome variable for model prediction was in-hospital mortality within 14 days of admission. This 14-day window was selected based on the clinical characteristics of pesticide poisoning and the temporal distribution of deaths observed in this cohort. Mortality events occurring beyond 14 days were rare and were considered more likely to result from non-toxicological complications. Therefore, to minimize confounding from unrelated causes, patients who died after the 14-day period were excluded from the analysis. Patients who were discharged or lost to follow-up within 14 days and had no documented evidence of death were assumed to have survived and were included in the survival group. In total, 1056 patients were analyzed, including 171 in-hospital deaths (16.2%) within 14 days and 885 survivors.

2.7. Statistical Analysis

All statistical analyses were conducted using Python (version 3.11.13; Python Software Foundation, https://www.python.org/) with the following libraries: scikit-learn for machine learning, statsmodels for statistical inference, lifelines for survival analysis, and shap for model interpretability. Model performance was evaluated using standard classification metrics, including area under the receiver operating characteristic curve (AUC), sensitivity, specificity, precision, and F1 score. Pairwise comparisons of AUC values between models were conducted using DeLong’s test to assess statistical differences in predictive performance. To interpret the contribution of individual features to model predictions, SHapley Additive exPlanations (SHAP) values were computed. A two-sided p-value of <0.05 was considered statistically significant for all hypothesis testing.
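For a linear model such as LR, SHAP values have a closed form when features are treated as independent: on the log-odds scale, the contribution of feature j is its coefficient multiplied by the deviation of the feature value from the background mean. The sketch below verifies the additivity property with hypothetical coefficients and data; it does not call the shap library and is not the study's fitted model.

```python
import numpy as np

coef = np.array([0.9, -0.6, 0.4])  # hypothetical LR coefficients
intercept = -1.8
X_background = np.array([
    [0.0, 1.0, 2.0],
    [1.0, 0.0, 1.0],
    [2.0, 2.0, 0.0],
])
x = np.array([2.0, 0.5, 1.0])      # one patient to explain

mean_bg = X_background.mean(axis=0)
shap_values = coef * (x - mean_bg)        # per-feature contribution, log-odds scale
base_value = intercept + coef @ mean_bg   # log-odds at the background mean
log_odds = intercept + coef @ x           # model output for this patient
```

The base value plus the sum of the SHAP values reproduces the patient's predicted log-odds, which is why the contributions can be read as an additive decomposition of the prediction.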

4. Discussion

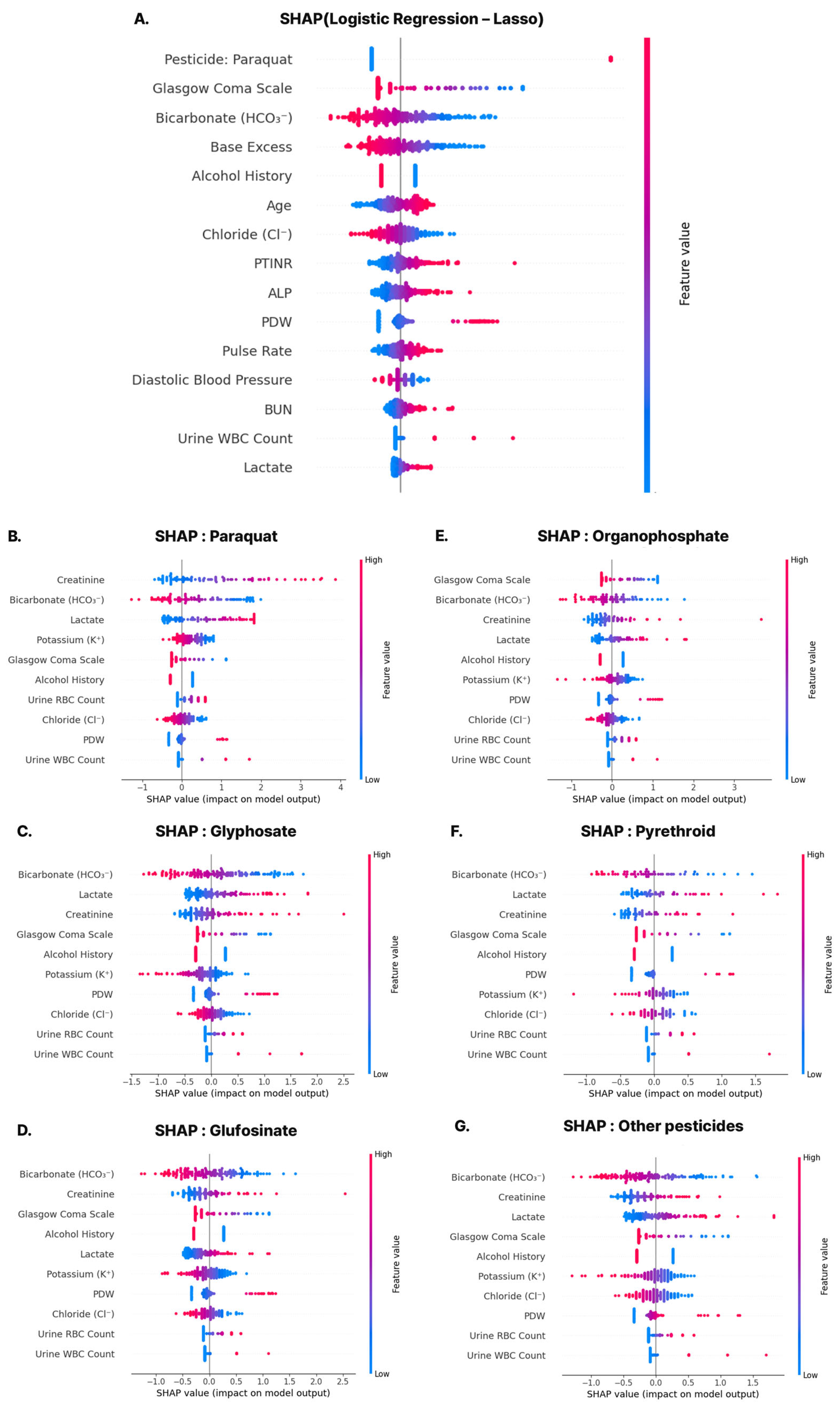

Most variables included in the predictive model developed in this study align with established clinical prognostic indicators. Notably, variables such as Glasgow Coma Scale (GCS), bicarbonate (HCO3−), base excess (BE), pulse rate (PR), diastolic blood pressure (DBP), oxygen saturation (O2sat), blood urea nitrogen (BUN), serum creatinine (Cr), lactate, red cell distribution width (RDW), potassium (Kal), and chloride (Cl) are commonly incorporated into critical care scoring systems such as APACHE II and SAPS. These features also overlapped with the predefined clinically curated list, suggesting that both clinical interpretability and statistical significance were considered during model development.

A particularly interesting finding was the inclusion of the variable ‘alcohol_bin’, which reflects the patient’s history of alcohol use. In this model, a negative regression coefficient indicated a protective association with 14-day mortality. This finding contrasts with conventional clinical expectations, which associate chronic alcohol use with worse outcomes due to immune suppression, hepatic dysfunction, and nutritional deficits. However, previous studies have primarily focused on acute co-ingestion of alcohol with pesticides, rather than evaluating the effects of chronic alcohol use as an independent risk factor [22]. In this study, chronic alcohol use was defined only as a binary alcohol history, without accounting for amount, duration, or drinking pattern, which may have introduced measurement errors. The counterintuitive protective association may also reflect unmeasured confounding (e.g., age, socioeconomic status, medical access, or poisoning intent) or biases such as survivorship or self-reporting, rather than a true biological effect. Future research should refine the characterization of alcohol use and reassess its predictive role through external validation.

The positive regression coefficients for urinary leukocytes (‘Uleuko_num’) and erythrocytes (‘Uery_num’) suggest that elevations in these parameters on initial urinalysis may serve as early markers of acute kidney injury (AKI) or systemic inflammatory response in patients with pesticide poisoning. Perazella (2015) emphasized the diagnostic and prognostic value of urinary findings such as tubular cells, RBCs, and WBCs in AKI [23], and studies such as the Yale PhD thesis have demonstrated that sediment-based scoring predicts mortality in AKI populations [24]. Although pesticide-specific evidence remains limited, these findings support the use of early urinary abnormalities as valuable predictors of renal stress and inflammation in poisoning contexts, potentially enhancing the predictive accuracy of our model. However, these associations may also reflect confounding factors such as asymptomatic pyuria, pre-existing urinary tract infection, or chronic kidney disease, and thus require cautious interpretation.

Several variables that showed significant differences across predicted risk groups—such as age, paraquat exposure, GCS, HCO3−, creatinine, BUN, PT-INR, chloride, potassium, and lactate—were also retained in the final model. This concordance reinforces the clinical validity of the selected features and suggests that the model effectively captures key prognostic signals. Among these, paraquat, a highly lethal herbicide banned in many countries, exhibited one of the highest regression coefficients and SHAP values. While this raises concerns regarding paraquat-driven bias, subgroup analyses showed robust model performance across other pesticide types, mitigating concerns about over-reliance on a single agent. Additionally, some statistically significant variables—such as body temperature, AST, and anion gap—were excluded, likely due to multicollinearity or lower independent predictive value, highlighting the importance of data-driven feature selection.

Compared to our model, the APACHE II–based model achieved a test AUC of 0.835 with moderate overall balanced accuracy (0.746) and sensitivity (0.706). However, its precision was limited (0.387), resulting in a relatively low F1 score (0.500). These findings suggest that, while APACHE II can detect a substantial proportion of mortality events, its lower precision limits its utility in triaging pesticide-poisoned patients. This limitation likely stems from the non-specific nature of APACHE II, which was developed for general ICU populations and may not account for the distinct pathophysiology of pesticide poisoning. In contrast, our model, based on LASSO- and clinically selected features, demonstrated superior discriminatory power and interpretability, making it more suitable for mortality risk stratification in this specialized population. Our study compared the model mainly with APACHE II, a widely used general prognostic tool, but a broader comparison with recently developed machine learning-based models in toxicology would provide additional context. Direct comparisons were not feasible in this study because most existing models focus on different toxic agents or rely on variables and outcomes that are not directly comparable to acute pesticide poisoning. Future work should therefore include head-to-head evaluations with these emerging models to more clearly delineate the strengths and limitations of our approach.
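The reported APACHE II metrics can be cross-checked against one another, since F1 is the harmonic mean of precision and sensitivity and balanced accuracy is the mean of sensitivity and specificity. The three input values below are those quoted above; the specificity is not quoted in this passage and is derived here only as an implied value.

```python
sensitivity = 0.706        # reported for the APACHE II-based model
precision = 0.387          # reported
balanced_accuracy = 0.746  # reported

# F1 is the harmonic mean of precision and sensitivity (recall).
f1 = 2 * precision * sensitivity / (precision + sensitivity)

# Balanced accuracy = (sensitivity + specificity) / 2, solved for specificity.
implied_specificity = 2 * balanced_accuracy - sensitivity
```

The computed F1 rounds to 0.500, matching the reported value, and the implied specificity is approximately 0.786.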

From a clinical perspective, our model could be integrated into emergency department workflows as a decision-support tool. Using only clinical and laboratory data from the first 2 h, the model could be embedded within electronic medical records to generate risk scores. In our cohort, tertile-based stratification identified a high-risk group (≥0.265) characterized by substantially increased short-term mortality, who might warrant early ICU consultation, closer monitoring, or timely interventions such as hemoperfusion, while low-risk patients could receive standard care.
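Tertile-based stratification of predicted probabilities can be sketched as follows. The predicted risks are synthetic, so the resulting cutoffs differ from the 0.265 high-risk cutoff observed in the study cohort; the function name `risk_group` and the group labels are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
pred = rng.beta(1.0, 4.0, size=900)  # synthetic predicted mortality probabilities

# Tertile boundaries estimated from the predicted risks.
low_cut, high_cut = np.quantile(pred, [1 / 3, 2 / 3])


def risk_group(p, low=low_cut, high=high_cut):
    """Assign a predicted probability to a tertile-based risk group."""
    if p >= high:
        return "high"
    if p >= low:
        return "intermediate"
    return "low"


groups = [risk_group(p) for p in pred]
```

In deployment the cutoffs would be fixed from the development cohort and applied unchanged to new patients, rather than re-estimated for each batch.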

Recent time-series methods, including the TCN-Linear model, have reported good performance in chaotic or financial forecasting [16]. These findings suggest that increasing model complexity does not always lead to better predictive accuracy. However, such approaches have not been tested in clinical risk prediction and do not address essential requirements such as interpretability, calibration, or external validation. For this reason, we chose logistic regression as a transparent first step. Temporal models may be explored in future studies, but only if they can remain interpretable and clinically reliable.

This study has several limitations. First, it was a retrospective single-center study, which may limit the generalizability of the findings and does not exclude potential biases inherent to retrospective designs. Although national reimbursement policies and clinical guidelines in Korea may reduce inter-institutional variability, external validation with independent datasets—ideally through multi-center prospective cohorts with real-time data collection—is needed to confirm the robustness and reproducibility of our model in clinical practice. Second, although we selected 14-day in-hospital mortality as the primary outcome based on our cohort’s death distribution, standardized outcome windows for pesticide poisoning have not been established. Therefore, caution is needed when generalizing our 14-day endpoint. Third, while LR was chosen for its interpretability and low risk of overfitting, alternative machine learning models—such as random forest, gradient boosting, or deep learning—may offer improved predictive performance. Future studies should explore these approaches, but, given the heterogeneous mechanisms of different pesticides and the small sample size for some agents, simpler models such as logistic regression may be preferable to avoid overfitting. Ideally, these methods should be combined with explainability techniques such as SHAP or LIME to balance accuracy with interpretability. Lastly, the relatively low precision reflects dataset imbalance and the trade-off between sensitivity and specificity. In clinical settings, we prioritized sensitivity to minimize the risk of missing high-risk patients, even at the expense of precision. This limitation should be acknowledged, and future studies should consider advanced resampling or class-weighting strategies.