High-Quality, High-Impact Augmented Virtuality System for the Evaluation of the Influence of Context on Consumer Perception and Hedonics: A Case Study in a Sports Bar Environment

Abstract

1. Introduction

2. Materials and Methods

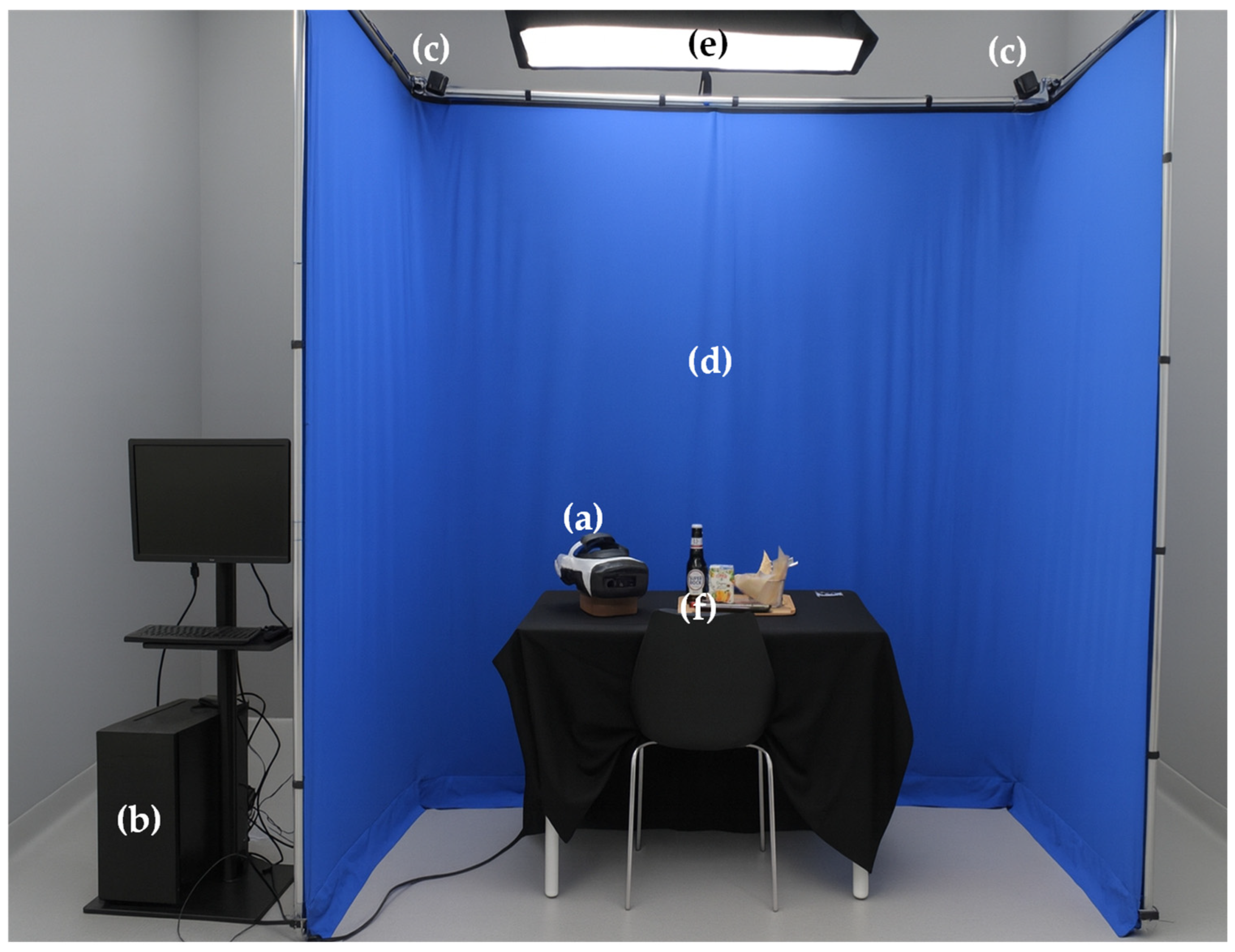

2.1. System Setup and Architecture

2.2. System Validation

2.2.1. Participants

2.2.2. Products

2.2.3. Study Environments

Laboratory Setting

Sense-AV Setting

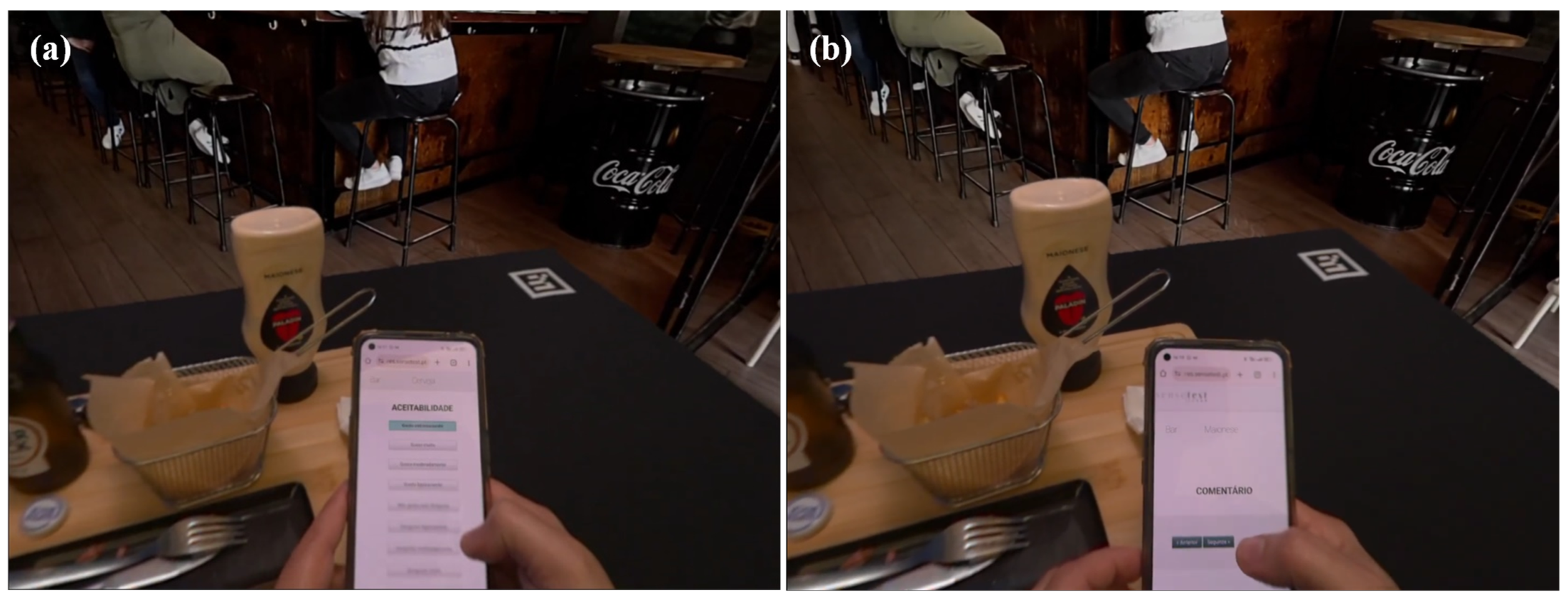

2.2.4. Evaluation Methodologies

Sensory Evaluation

Food Intake

Post-Session Questionnaires

Semi-Structured Individual Interviews

2.2.5. Statistical Analysis

3. Results

3.1. Sensory Evaluation

3.1.1. Overall Liking

3.1.2. Open Comments

3.2. Food Intake

3.3. Post-Session Questionnaires

3.3.1. Manipulation, Comprehension, Reading and Response

3.3.2. Engagement

3.3.3. System Usability Scale (SUS) and Virtual Reality System Usability Questionnaire (VRSUQ)

3.3.4. Presence and Sensory Awareness

3.3.5. Effects of Age, Sex, and Experience on Questionnaire Responses

3.4. Semi-Structured Individual Interviews

3.4.1. Initial Impressions

3.4.2. Immersion and Presence

3.4.3. User Experience

3.4.4. Recommendations and Suggestions for Improvement

4. Discussion

4.1. Sensory Evaluation

4.1.1. Overall Liking

4.1.2. Open Comments

4.2. Food Intake

4.3. Questionnaires

4.3.1. Manipulation, Comprehension, Reading and Response

4.3.2. Engagement

4.3.3. System Usability Scale (SUS) and Virtual Reality System Usability Questionnaire (VRSUQ)

4.3.4. Presence and Sensory Awareness

4.3.5. Effects of Age, Sex, and Experience on Questionnaire Responses

4.4. Semi-Structured Individual Interviews

5. Limitations

6. Conclusions

7. Patents

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Hardware Configuration

Appendix A.2. Software Development, Data Capture and Synchronisation

Appendix A.3. Environmental Setup

| Products | Overall Liking, Laboratory (Booth) | Overall Liking, Sense-AV System | p Value * |
|---|---|---|---|
| Sausage | 8.22 (±0.74) | 8.20 (±0.70) | 0.869 |
| Mayonnaise | 7.78 (±1.19) | 8.01 (±0.88) | 0.019 |
| Nectar | 8.11 (±1.10) | 8.10 (±0.96) | 0.794 |
| Beer | 7.94 (±1.29) | 8.00 (±0.89) | 0.850 |

| Products | Intake, Laboratory (Booth) | Intake, Sense-AV System | p Value * |
|---|---|---|---|
| Sausage (initial serving: 41.22 ± 1.84 g) | 31.50 (±9.94) g | 24.69 (±11.85) g | <0.001 |
| Mayonnaise (nominal capacity: 450 mL) | 7.61 (±5.73) g | 6.54 (±4.79) g | 0.091 |
| Chips (initial serving: 46.63 ± 14.37 g) | 23.24 (±10.95) g | 19.86 (±15.16) g | 0.023 |
| Nectar (initial serving: 200 mL) | 151.02 (±49.97) mL | 102.52 (±53.65) mL | <0.001 |
| Beer (initial serving: 200 mL) | 144.12 (±44.90) mL | 109.92 (±47.04) mL | <0.001 |

| MCRRQ Factor | Session, Laboratory (Booth) | Session, Sense-AV System | p Value * |
|---|---|---|---|
| Manipulation | 6.74 (±0.42)/98.88% | 5.97 (±0.96)/90.20% | <0.001 |
| Reading through the phone | 6.62 (±0.63)/99.02% | 4.70 (±1.87)/57.84% | <0.001 |
| Response through the phone | 6.68 (±0.61)/99.02% | 5.38 (±1.71)/75.49% | <0.001 |
| Understanding the info (Booth: provided by the assistant; Sense-AV: provided by voice prompts) | 6.86 (±0.34)/100% | 6.67 (±0.63)/98.04% | 0.002 |
| Providing the open comment (Booth: written; Sense-AV: verbal) | 6.78 (±0.50)/100% | 6.26 (±1.04)/91.18% | <0.001 |

| EQ Factor | Session, Laboratory (Booth) | Session, Sense-AV System | p Value * |
|---|---|---|---|
| Active Involvement | 19.57 (±3.11) | 19.08 (±2.77) | 0.024 |
| Purposeful Intent | 26.54 (±2.03) | 26.22 (±2.35) | 0.114 |
| Affective Value | 18.70 (±2.45) | 18.84 (±2.41) | 0.616 |

| Questionnaires | Younger Consumers (18–49) (n = 52) | Older Consumers (50–65) (n = 50) | p Value * |
|---|---|---|---|
| MCRRQ | | | |
| Manipulation (Booth) | 6.70 (±0.43) | 6.78 (±0.40) | 0.042 |
| Manipulation (Sense-AV) | 5.67 (±1.09) | 6.28 (±0.69) | 0.005 |
| Reading through the phone (Booth) | 6.50 (±0.72) | 6.73 (±0.49) | 0.113 |
| Reading through the phone (Sense-AV) | 4.26 (±1.86) | 5.16 (±1.80) | 0.011 |
| Response through the phone (Booth) | 6.56 (±0.72) | 6.79 (±0.45) | 0.090 |
| Response through the phone (Sense-AV) | 4.98 (±1.77) | 5.81 (±1.56) | 0.003 |
| Understanding the info provided by the assistant (Booth) | 6.92 (±0.26) | 6.79 (±0.40) | 0.061 |
| Understanding the info provided by voice prompts (Sense-AV) | 6.60 (±0.68) | 6.73 (±0.56) | 0.325 |
| Providing the written comment (Booth) | 6.81 (±0.52) | 6.75 (±0.48) | 0.279 |
| Providing the verbal comment (Sense-AV) | 6.13 (±1.20) | 6.40 (±0.81) | 0.467 |
| EQ | | | |
| EQ: Active Involvement (Booth) | 19.41 (±3.03) | 19.73 (±3.24) | 0.079 |
| EQ: Active Involvement (Sense-AV) | 18.62 (±3.25) | 19.57 (±2.08) | 0.253 |
| EQ: Purposeful Intent (Booth) | 26.15 (±2.31) | 26.95 (±1.6) | 0.030 |
| EQ: Purposeful Intent (Sense-AV) | 25.86 (±2.76) | 26.59 (±1.79) | 0.253 |
| EQ: Affective Value (Booth) | 18.62 (±2.29) | 18.77 (±2.65) | 0.482 |
| EQ: Affective Value (Sense-AV) | 18.84 (±2.56) | 18.83 (±2.28) | 0.751 |
| SUS score | 80.75 (±15.65) | 82.65 (±13.18) | 0.796 |
| VRSUQ | | | |
| VRSUQ Efficiency score | 83.54 (±14.61) | 85.26 (±14.54) | 0.538 |
| VRSUQ Satisfaction score | 85.42 (±16.99) | 89.22 (±14.62) | 0.264 |
| PSAQ | | | |
| PSAQ: Physical Presence | 5.51 (±1.12) | 5.61 (±1.27) | 0.489 |
| PSAQ: Social Presence | 5.08 (±1.22) | 5.02 (±1.29) | 0.891 |
| PSAQ: Self-Presence | 5.12 (±1.21) | 5.42 (±1.36) | 0.136 |
| PSAQ: Sensory Awareness | 5.34 (±1.05) | 5.70 (±0.95) | 0.067 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Marques, J.P.; Ribeiro, J.C.; Lima, R.C.; Baião, L.; Barbosa, B.; Rocha, C.; Cunha, L.M. High-Quality, High-Impact Augmented Virtuality System for the Evaluation of the Influence of Context on Consumer Perception and Hedonics: A Case Study in a Sports Bar Environment. Foods 2025, 14, 3950. https://doi.org/10.3390/foods14223950