Nondestructive Detection of Soluble Solids Content in Apples Based on Multi-Attention Convolutional Neural Network and Hyperspectral Imaging Technology

Abstract

1. Introduction

2. Materials and Method

2.1. Apple Samples

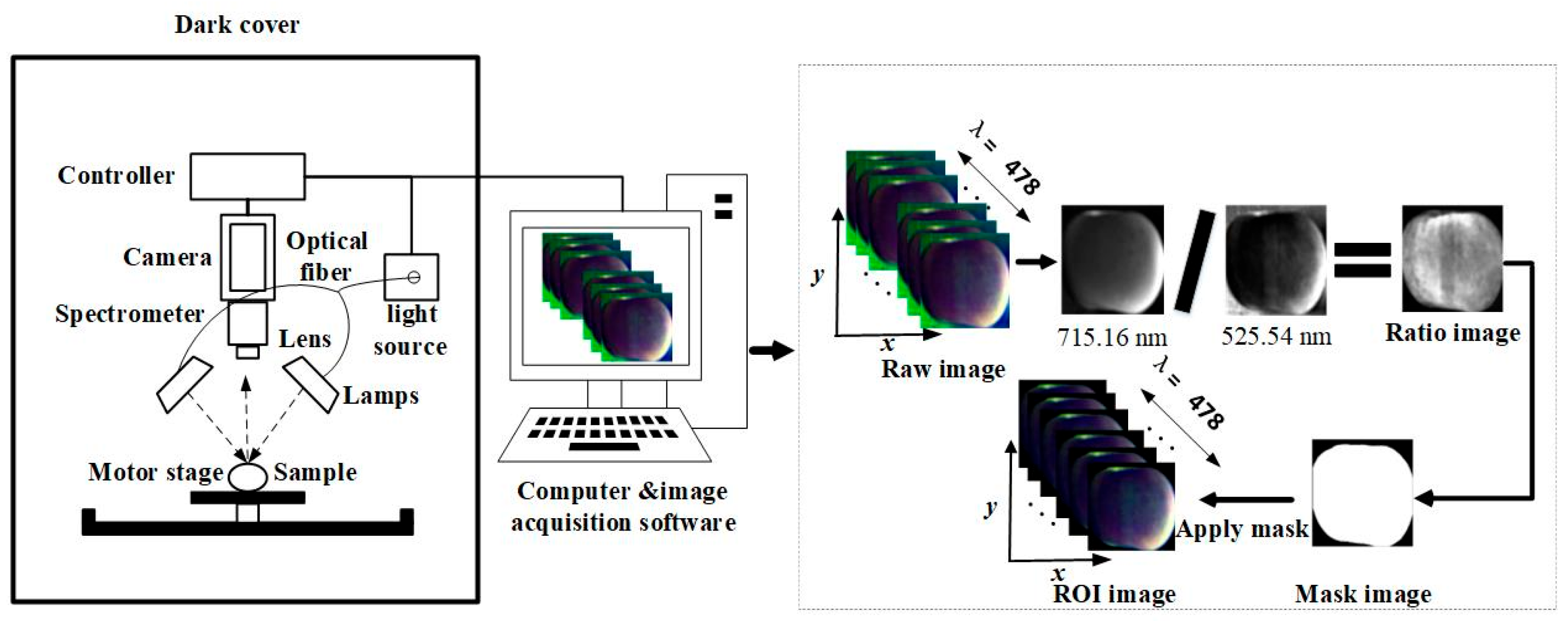

2.2. Region of Interest Extraction and Hyperspectral Data Processing

2.3. Determination of SSC in Apple Samples

2.4. CNN

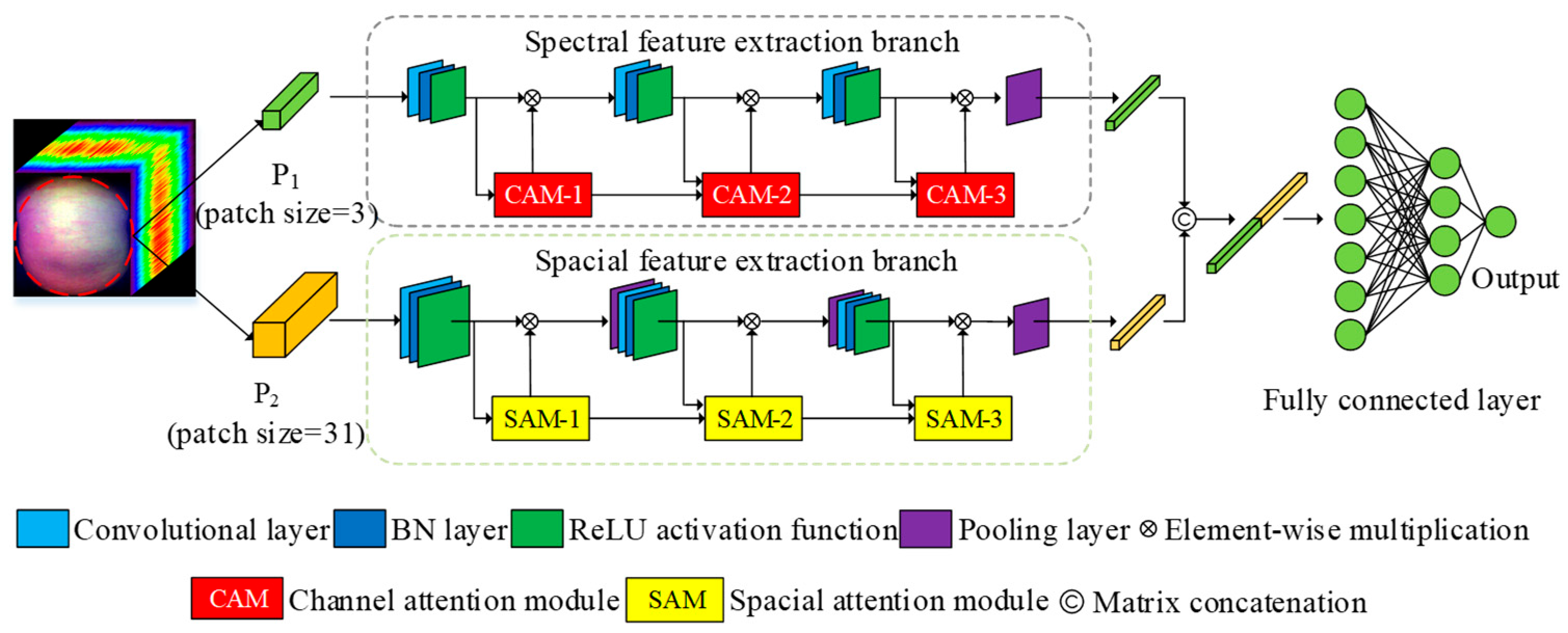

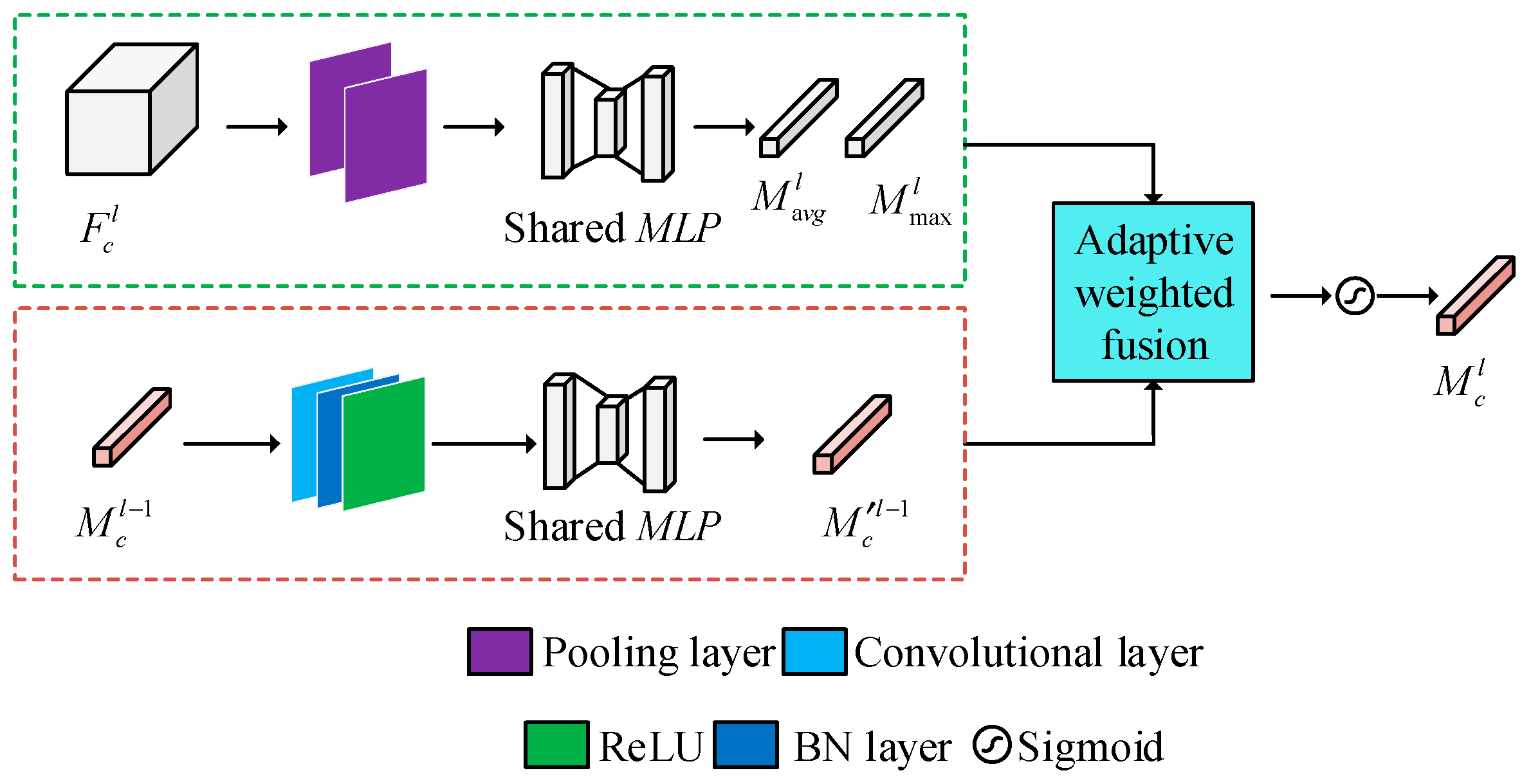

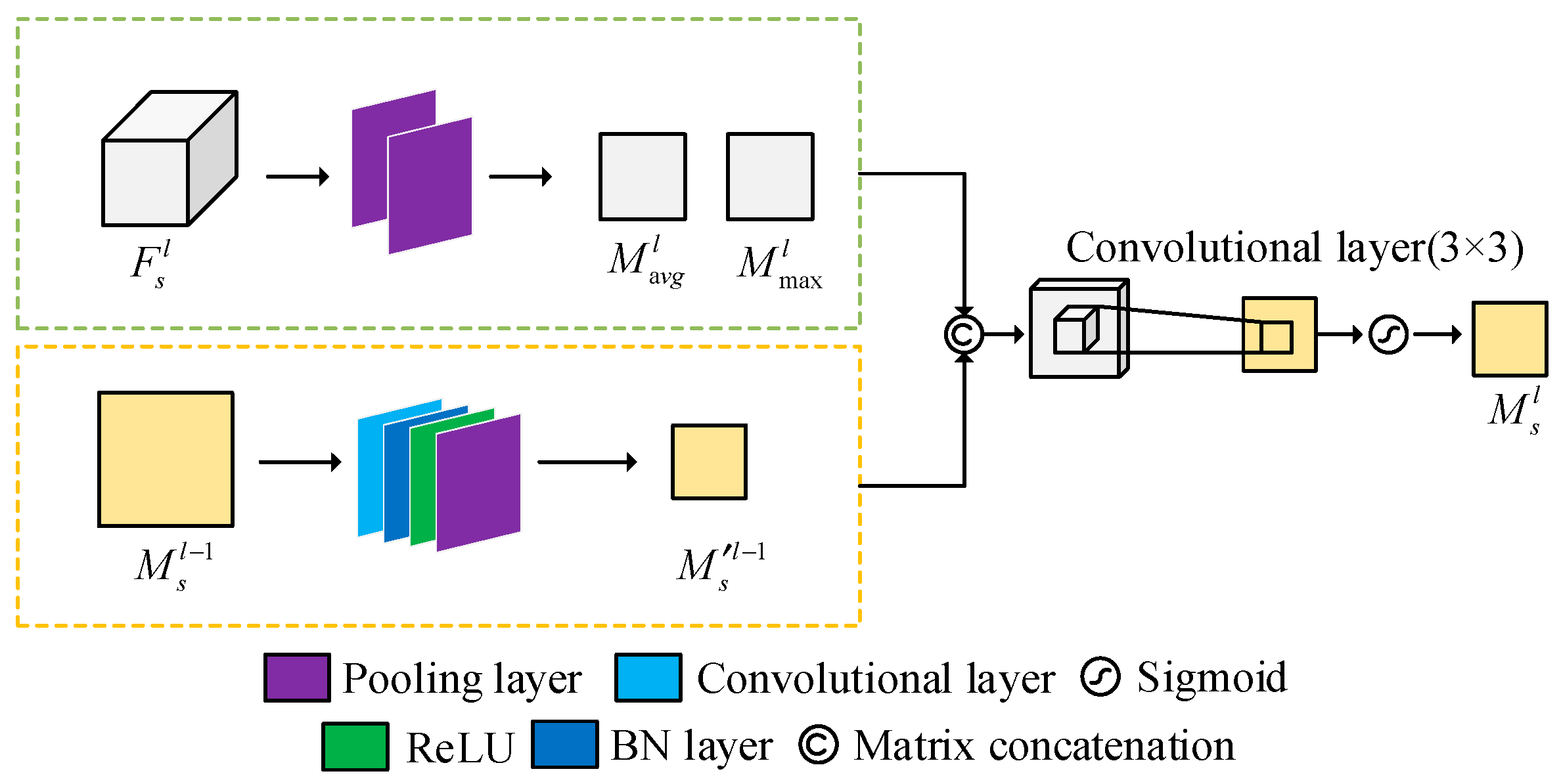

2.5. MA-CNN

2.6. BOA

2.7. Model Evaluation

3. Results and Discussion

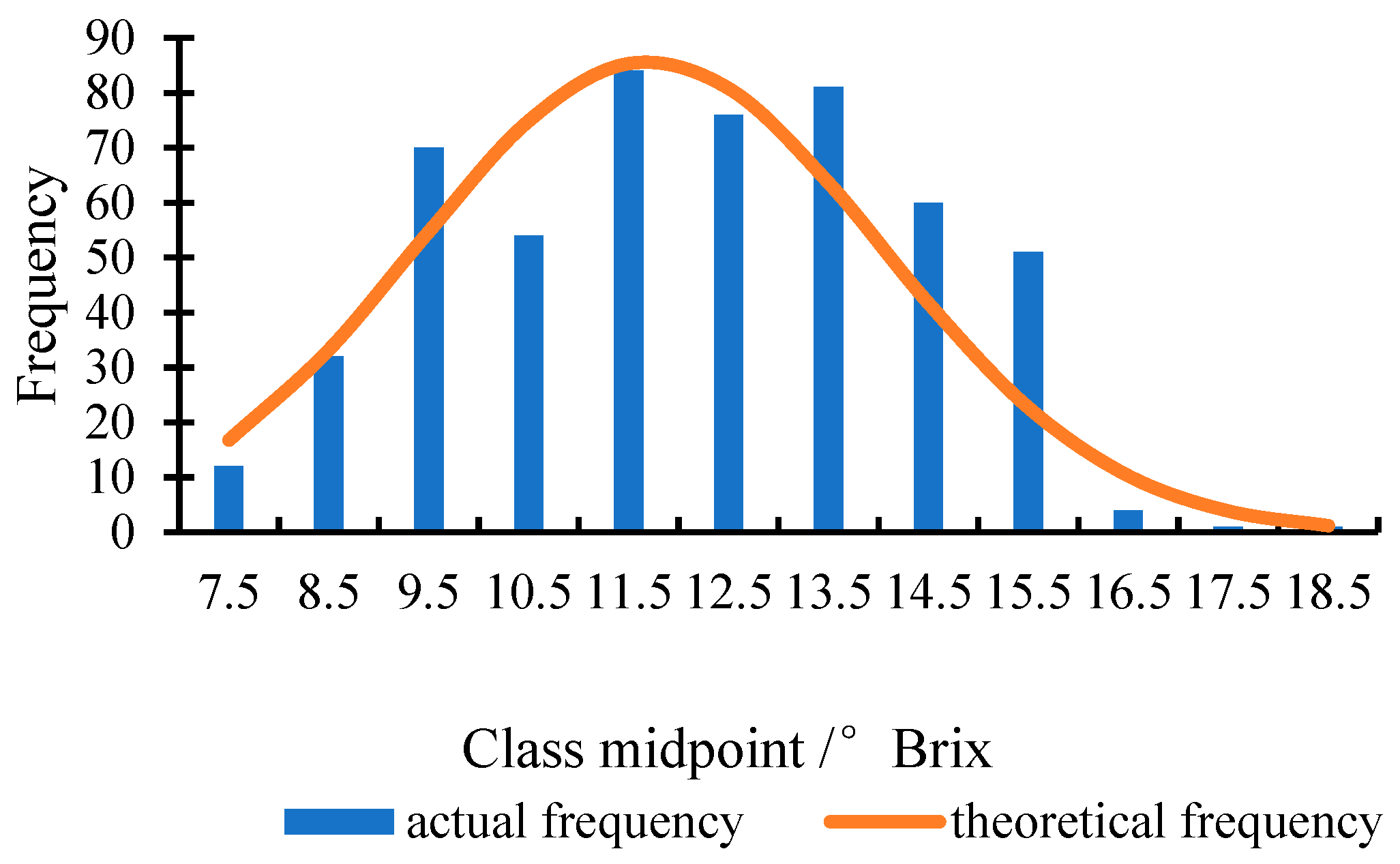

3.1. Statistics of Reference Values

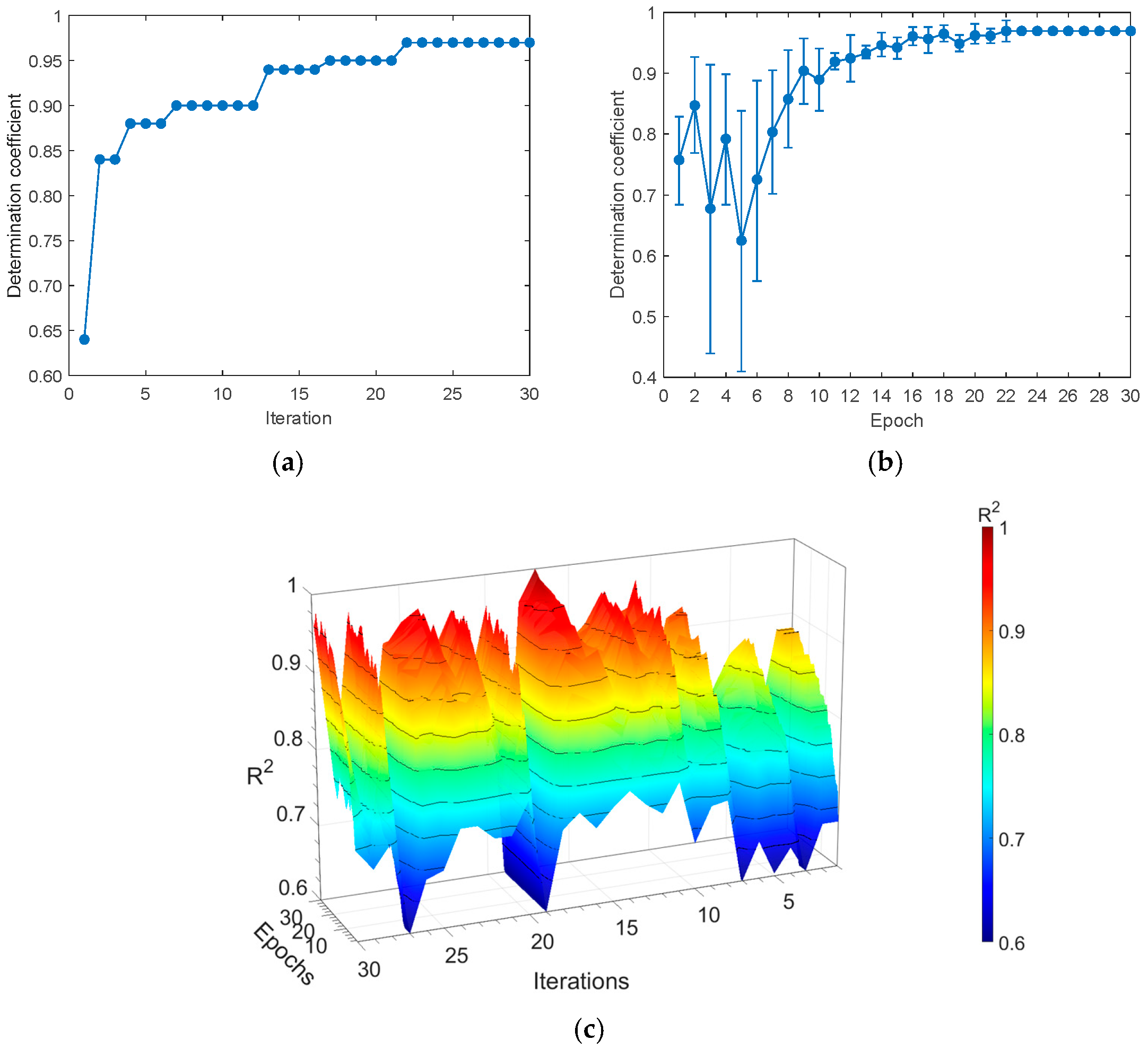

3.2. CA-CNN Model

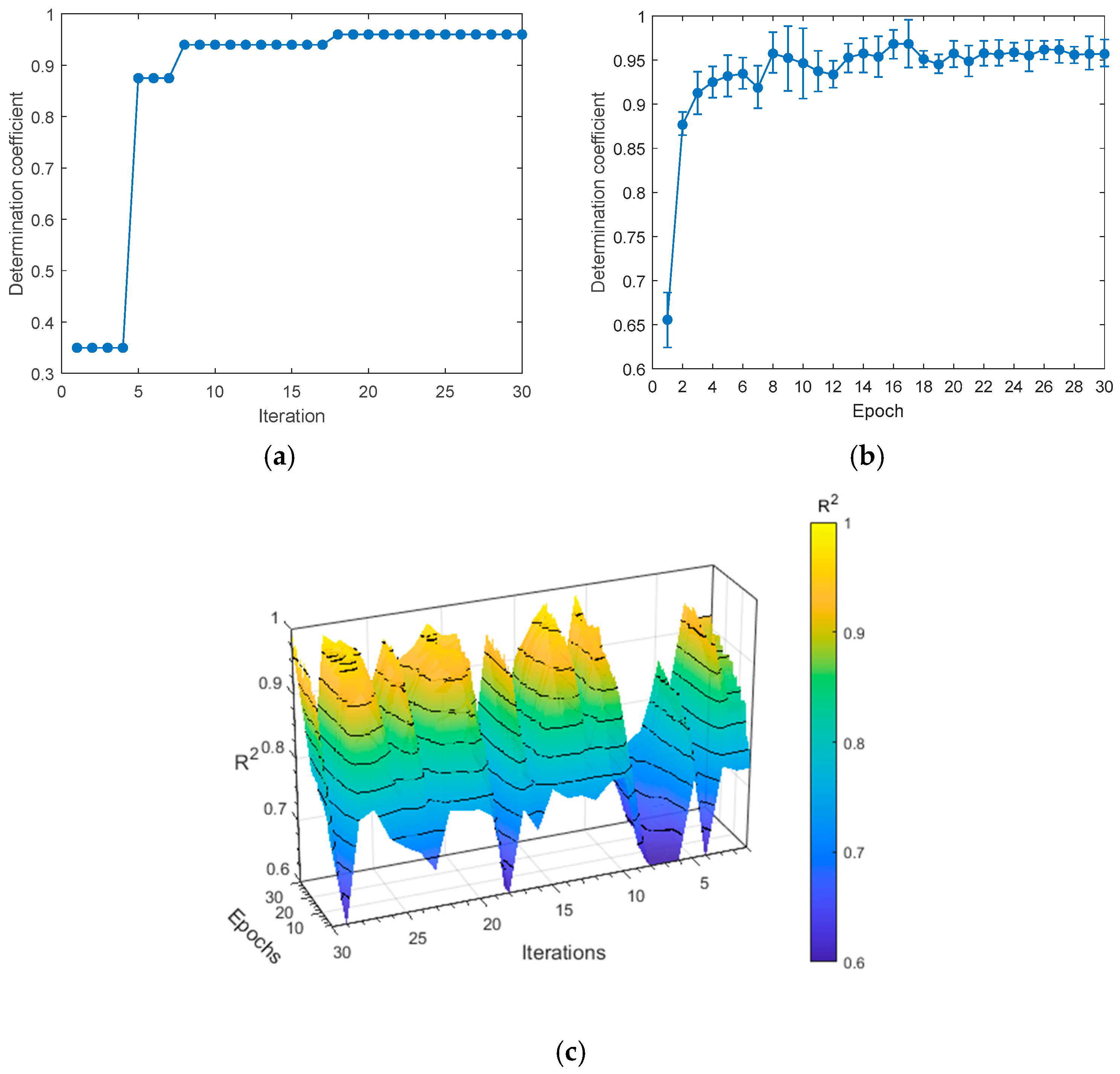

3.3. SA-CNN Model

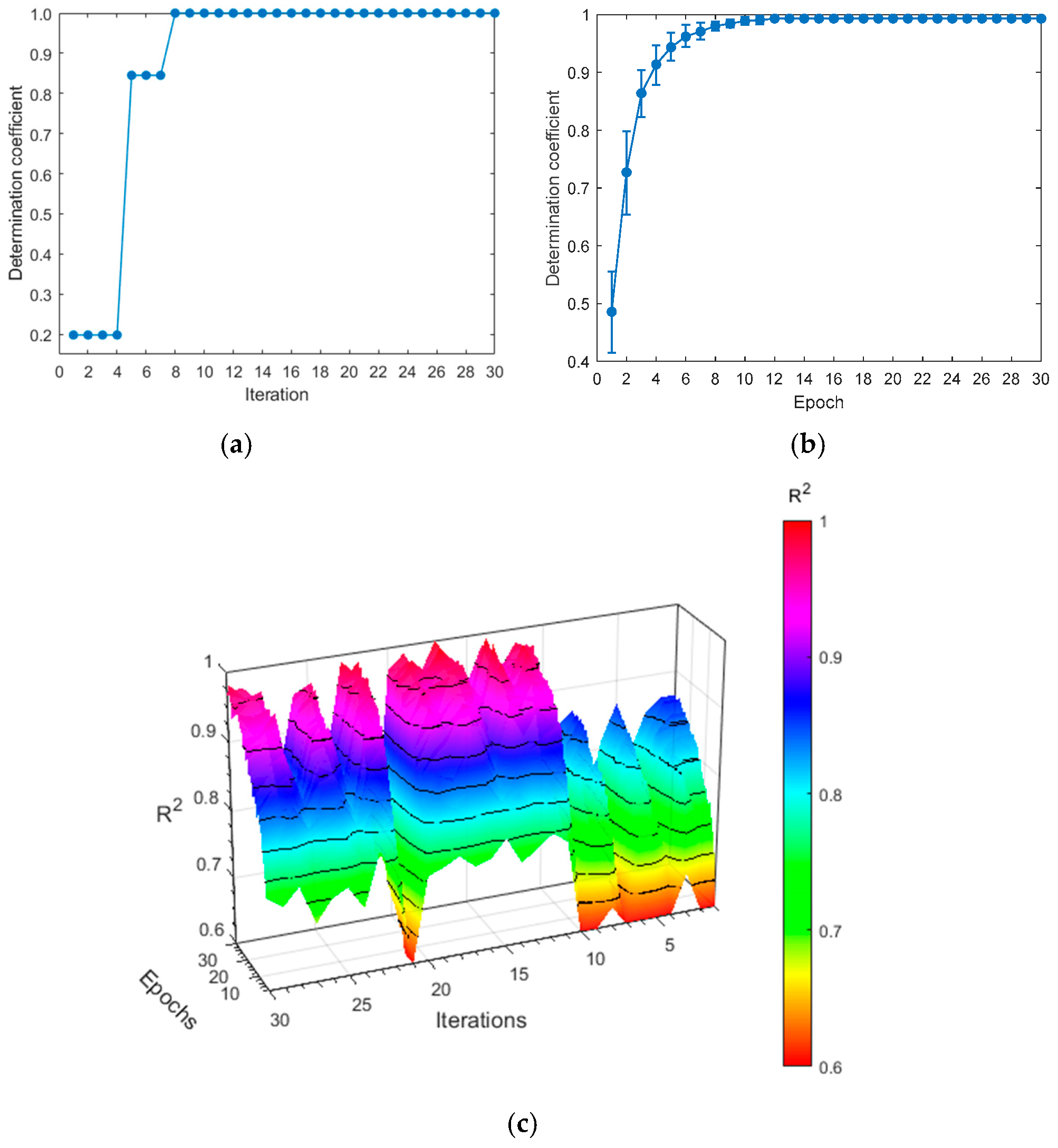

3.4. MA-CNN Model

3.5. Comparison of SSC Detection Model

3.6. Comparative Evaluation and Computational Complexity Analysis of Different Models

3.7. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Samples | Max (°Brix) | Min (°Brix) | Mean (°Brix) | SD (°Brix) |
|---|---|---|---|---|---|
| Calibration set | 320 | 18.10 | 7.20 | 12.23 | 2.21 |
| Prediction set | 95 | 15.41 | 8.05 | 11.34 | 1.89 |
| Total samples | 570 | 18.10 | 7.20 | 11.76 | 1.92 |

| Parameters | Search Space | Search Results |
|---|---|---|
| Number of filters of Conv1 to Conv3 | (4, 64), (8, 128), (16, 256) | (32, 64, 128) |
| Neurons in FC1 to FC2 | (32, 128), (32, 128) | (83, 51) |
| Learning rate | (1 × 10⁻⁴, 1 × 10⁻¹) | 0.0009 |
| Batch size | (2, 128) | 32 |
| Activation function | [ReLU, SoftMax, Sigmoid, ELU] | ReLU |
| Optimization method | [SGD, Adam, AdaBound, RMSProp] | AdaBound |
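The search space in the table above can be written down as a small data structure, which makes it easy to check that a reported configuration actually falls inside the declared bounds. The following is a minimal sketch, not the authors' implementation: the dictionary keys and the helper names `sample_config` and `in_search_space` are hypothetical, and the sampler draws candidates at random (log-uniformly for the learning rate) as a simple stand-in. It does not implement the Bayesian optimization algorithm (BOA) named in Section 2.6.

```python
import math
import random

# Bounds copied from the table above; key names are illustrative assumptions.
SEARCH_SPACE = {
    "conv_filters": [(4, 64), (8, 128), (16, 256)],  # Conv1..Conv3, integer ranges
    "fc_neurons": [(32, 128), (32, 128)],            # FC1..FC2, integer ranges
    "learning_rate": (1e-4, 1e-1),                   # continuous range
    "batch_size": (2, 128),                          # integer range
    "activation": ["ReLU", "SoftMax", "Sigmoid", "ELU"],
    "optimizer": ["SGD", "Adam", "AdaBound", "RMSProp"],
}

# Search results reported in the same table.
REPORTED = {
    "conv_filters": (32, 64, 128),
    "fc_neurons": (83, 51),
    "learning_rate": 0.0009,
    "batch_size": 32,
    "activation": "ReLU",
    "optimizer": "AdaBound",
}


def sample_config(rng):
    """Draw one random candidate (a stand-in for a BOA proposal step)."""
    lo, hi = SEARCH_SPACE["learning_rate"]
    lr = 10 ** rng.uniform(math.log10(lo), math.log10(hi))  # log-uniform draw
    return {
        "conv_filters": tuple(rng.randint(a, b) for a, b in SEARCH_SPACE["conv_filters"]),
        "fc_neurons": tuple(rng.randint(a, b) for a, b in SEARCH_SPACE["fc_neurons"]),
        "learning_rate": min(max(lr, lo), hi),  # clamp against rounding at the edges
        "batch_size": rng.randint(*SEARCH_SPACE["batch_size"]),
        "activation": rng.choice(SEARCH_SPACE["activation"]),
        "optimizer": rng.choice(SEARCH_SPACE["optimizer"]),
    }


def in_search_space(cfg):
    """Return True if every field of cfg lies inside the declared bounds."""
    def within(values, bounds):
        return all(lo <= v <= hi for v, (lo, hi) in zip(values, bounds))

    lr_lo, lr_hi = SEARCH_SPACE["learning_rate"]
    bs_lo, bs_hi = SEARCH_SPACE["batch_size"]
    return (
        within(cfg["conv_filters"], SEARCH_SPACE["conv_filters"])
        and within(cfg["fc_neurons"], SEARCH_SPACE["fc_neurons"])
        and lr_lo <= cfg["learning_rate"] <= lr_hi
        and bs_lo <= cfg["batch_size"] <= bs_hi
        and cfg["activation"] in SEARCH_SPACE["activation"]
        and cfg["optimizer"] in SEARCH_SPACE["optimizer"]
    )


if __name__ == "__main__":
    print(in_search_space(REPORTED))
    print(sample_config(random.Random(0)))
```

A real optimizer would replace `sample_config` with a surrogate-model proposal step; the bounds check stays the same either way.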

| Parameters | Search Space | Search Results |
|---|---|---|
| Number of filters of Conv1 to Conv3 | (4, 64), (16, 256), (8, 128) | (64, 128, 56) |
| Neurons in FC1 to FC2 | (64, 256), (64, 256) | (125, 93) |
| Learning rate | (1 × 10⁻⁴, 1 × 10⁻²) | 0.0007 |
| Batch size | (2, 128) | 53 |
| Activation function | [ReLU, SoftMax, Sigmoid, ELU] | ELU |
| Optimization method | [SGD, Adam, AdaBound, RMSProp] | RMSProp |

| Parameters | Search Space | Search Results |
|---|---|---|
| Neurons in FC1 to FC2 | (64, 256), (64, 256) | (86, 86) |
| Learning rate | (1 × 10⁻⁵, 1 × 10⁻¹) | 0.0001 |
| Batch size | (4, 128) | 69 |
| Activation function | [ReLU, SoftMax, Sigmoid, ELU] | ReLU |
| Optimization method | [SGD, Adam, AdaBound, RMSProp] | AdaBound |

| Input Data | Model | Calibration R² | RMSEC | Prediction R² | RMSEP | RPD |
|---|---|---|---|---|---|---|
| P1 | CA-CNN | 0.9754 | 0.0698 | 0.9571 | 0.0738 | 2.9876 |
| P1 | CNN | 0.9607 | 0.0771 | 0.9409 | 0.0842 | 2.6384 |
| P2 | SA-CNN | 0.9732 | 0.0583 | 0.9516 | 0.0795 | 2.8593 |
| P2 | CNN | 0.9588 | 0.0897 | 0.9389 | 0.0906 | 2.3529 |
| P1 and P2 | MA-CNN | 0.9796 | 0.0513 | 0.9602 | 0.0612 | 3.3417 |
| P1 and P2 | CNN | 0.9691 | 0.0659 | 0.9578 | 0.0743 | 3.0635 |
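As a reading aid for the metrics in the table above, the sketch below computes a coefficient of determination, a root mean square error, and a residual predictive deviation (RPD) from paired reference and predicted values. It assumes the commonly used definitions (R² as one minus the ratio of residual to total sum of squares, RPD as the sample standard deviation of the reference values divided by the RMSE); the paper's own formulas and any data scaling are not shown here and may differ. The function name `regression_metrics` and the sample values are hypothetical.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return R^2, RMSE, and RPD under the standard definitions (an assumption)."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))  # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)          # total sum of squares
    rmse = math.sqrt(ss_res / n)
    r2 = 1.0 - ss_res / ss_tot
    sd = math.sqrt(ss_tot / (n - 1))  # sample SD of the reference values
    return {"r2": r2, "rmse": rmse, "rpd": sd / rmse}


if __name__ == "__main__":
    # Made-up SSC values in °Brix, for illustration only.
    reference = [10.0, 12.0, 14.0, 16.0]
    predicted = [10.5, 11.5, 14.5, 15.5]
    print(regression_metrics(reference, predicted))
```

Applied to a calibration set the RMSE corresponds to RMSEC, and applied to a prediction set it corresponds to RMSEP.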

| Model | Training R² | RMSEC | Test R² | RMSEP | RPD |
|---|---|---|---|---|---|
| ViT | 0.9514 | 0.0541 | 0.9151 | 0.1496 | 2.7837 |
| HybridSN | 0.9633 | 0.0438 | 0.9210 | 0.0938 | 3.3121 |
| SSAN | 0.9678 | 0.0437 | 0.9230 | 0.0875 | 3.3252 |
| HybridViT | 0.9758 | 0.0406 | 0.9357 | 0.0806 | 3.2863 |

| Methods | Trainable Params (M) | Training Time (s) | Testing Time (s) |
|---|---|---|---|
| ViT | 0.25 | 1570 | 5.2 |
| HybridSN | 0.29 | 1457 | 4.8 |
| SSAN | 0.14 | 630 | 2.3 |
| HybridViT | 0.22 | 1289 | 4.1 |
| MA-CNN | 0.23 | 1103 | 4.6 |
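The "Trainable Params (M)" column above counts weights and biases across all layers. The sketch below shows how such a count is typically assembled for a stack of 1-D convolutional and fully connected layers. It is illustrative only: the filter counts (32, 64, 128) and FC sizes (83, 51) are borrowed from one of the search-result rows, while the 1-D convolution, kernel size of 3, single input channel, flattened feature length of 1024, and single regression output are all assumptions, so the total is not expected to reproduce any figure in the table.

```python
def conv1d_params(in_channels, out_channels, kernel_size, bias=True):
    """Weights (in_channels * kernel_size per filter) plus one optional bias per filter."""
    return (in_channels * kernel_size + (1 if bias else 0)) * out_channels


def dense_params(in_features, out_features, bias=True):
    """Weights (in_features per neuron) plus one optional bias per neuron."""
    return (in_features + (1 if bias else 0)) * out_features


# Hypothetical layer stack; see the assumptions listed in the paragraph above.
layer_counts = [
    conv1d_params(1, 32, 3),     # Conv1
    conv1d_params(32, 64, 3),    # Conv2
    conv1d_params(64, 128, 3),   # Conv3
    dense_params(1024, 83),      # FC1 (flattened length 1024 is assumed)
    dense_params(83, 51),        # FC2
    dense_params(51, 1),         # regression output for SSC
]
total = sum(layer_counts)

if __name__ == "__main__":
    print(f"{total} parameters = {total / 1e6:.2f} M")
```

In this toy stack the first fully connected layer dominates the count, which is why the flattened feature length matters more than the number of convolutional filters.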

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tian, Y.; Sun, J.; Zhou, X.; Cong, S.; Dai, C.; Shi, L. Nondestructive Detection of Soluble Solids Content in Apples Based on Multi-Attention Convolutional Neural Network and Hyperspectral Imaging Technology. Foods 2025, 14, 3832. https://doi.org/10.3390/foods14223832
