Food Image Recognition Based on Anti-Noise Learning and Covariance Feature Enhancement

Abstract

1. Introduction

2. Materials and Methods

2.1. Datasets

2.2. Modification of the Original Dataset

2.3. Neural Network Architecture

2.3.1. Noise Adaptive Recognition Module (NARM)

2.3.2. Weighted Multi-Granularity Fusion (WMF)

| Algorithm 1 Weighted Multi-Granularity Fusion | |
|---|---|
| Require: Given a dataset (where represents the total number of batches in ) | |
| 1: | for epoch = 1 to num_of_epochs do |
| 2: | for (input, target) in do |
| 3: | for n = 1 to S do |
| 4: | |
| 5: | # NARM |
| 6: | |
| 7: | BACKWARD() |
| 8: | end for |
| 9: | # WMF |
| 10: | |
| 11: | |
| 12: | BACKWARD() |
| 13: | end for |
| 14: | end for |
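
The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch of the loop structure only: the expressions for steps 4, 6, 10, and 11 are not available in the listing, so `stage_step` and `fusion_step` are hypothetical callables standing in for them. Each is assumed to compute its own loss, run its own backward pass, and return the loss value. The names `train_wmf` and `num_stages` (for S) are also illustrative.

```python
from typing import Callable, List, Sequence, Tuple

Batch = Tuple[object, object]  # (input, target); concrete types are left open


def train_wmf(
    dataset: Sequence[Batch],
    num_epochs: int,
    num_stages: int,
    stage_step: Callable[[int, object, object], float],
    fusion_step: Callable[[object, object], float],
) -> List[float]:
    """Run the nested loops of Algorithm 1 and return every loss in call order.

    stage_step(n, x, y) and fusion_step(x, y) are hypothetical placeholders:
    each is assumed to compute a loss, call BACKWARD(), and return the loss.
    """
    losses: List[float] = []
    for _epoch in range(1, num_epochs + 1):
        for inputs, targets in dataset:
            # Steps 3-8: one forward/backward pass per stage n = 1..S (NARM).
            for n in range(1, num_stages + 1):
                losses.append(stage_step(n, inputs, targets))
            # Steps 9-12: one additional pass for the fused output (WMF).
            losses.append(fusion_step(inputs, targets))
    return losses


# Toy usage: record the order in which the placeholder steps are invoked.
calls: List[str] = []


def demo_stage(n: int, x: object, y: object) -> float:
    calls.append(f"stage{n}")
    return 1.0 / n


def demo_fusion(x: object, y: object) -> float:
    calls.append("fusion")
    return 0.1


demo_losses = train_wmf([("img_a", 0), ("img_b", 1)], 1, 3, demo_stage, demo_fusion)
print(calls)
```

Under this reading, every batch triggers S + 1 backward passes (S stage passes plus one fusion pass), which is one plausible source of the extra training time reported for the WMF variant.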

2.3.3. Progressive Temperature-Aware Feature Distillation (PTAFD)

2.4. Experimental Design

2.4.1. Comparison with State-of-the-Art Methods

2.4.2. Ablation Study Design

2.4.3. Effect of Noise Intensity

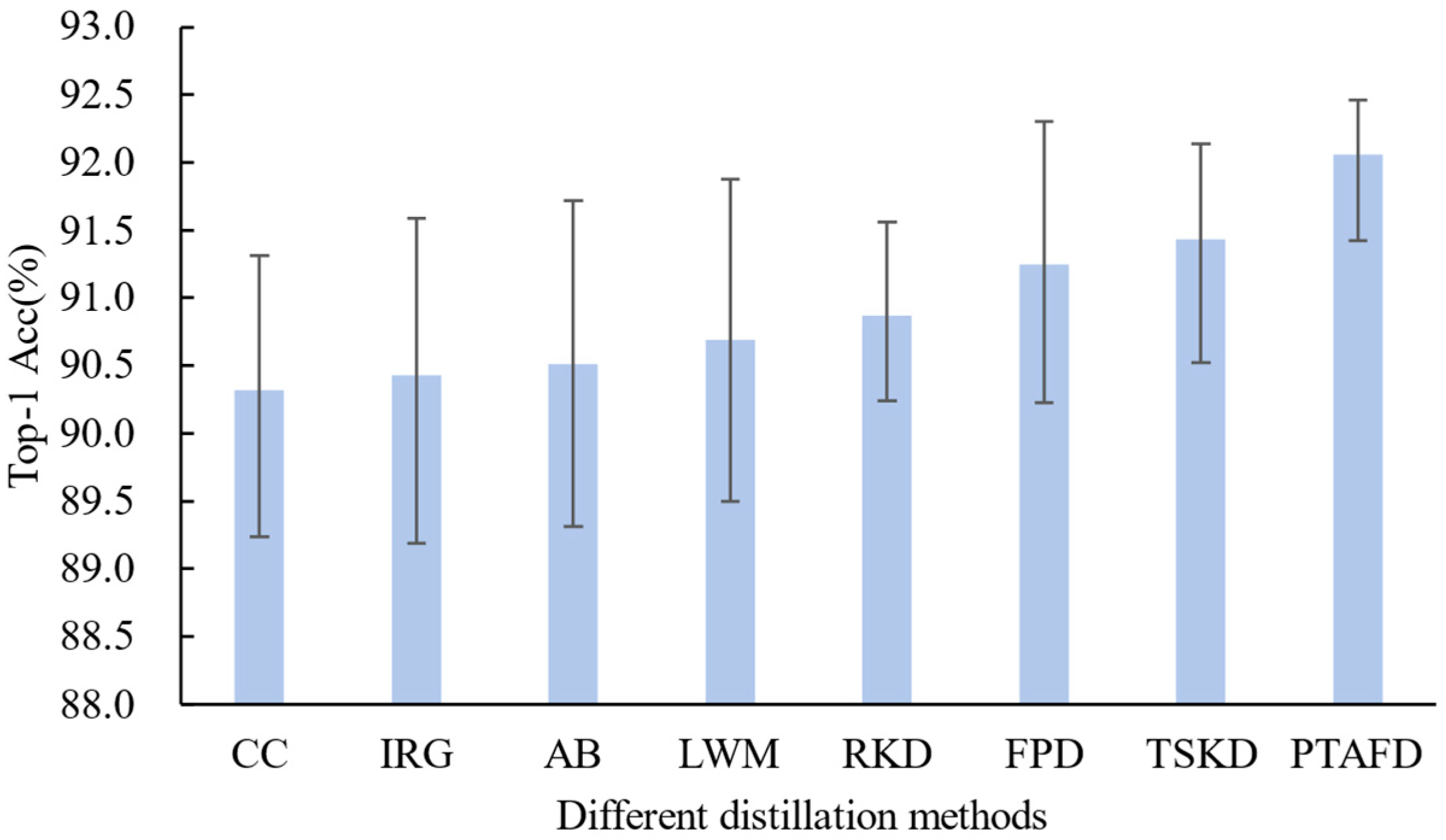

2.4.4. Evaluation of Distillation Strategies

2.5. Performance Metrics

3. Results

3.1. Comparison with Baselines

3.2. Ablation Study Results

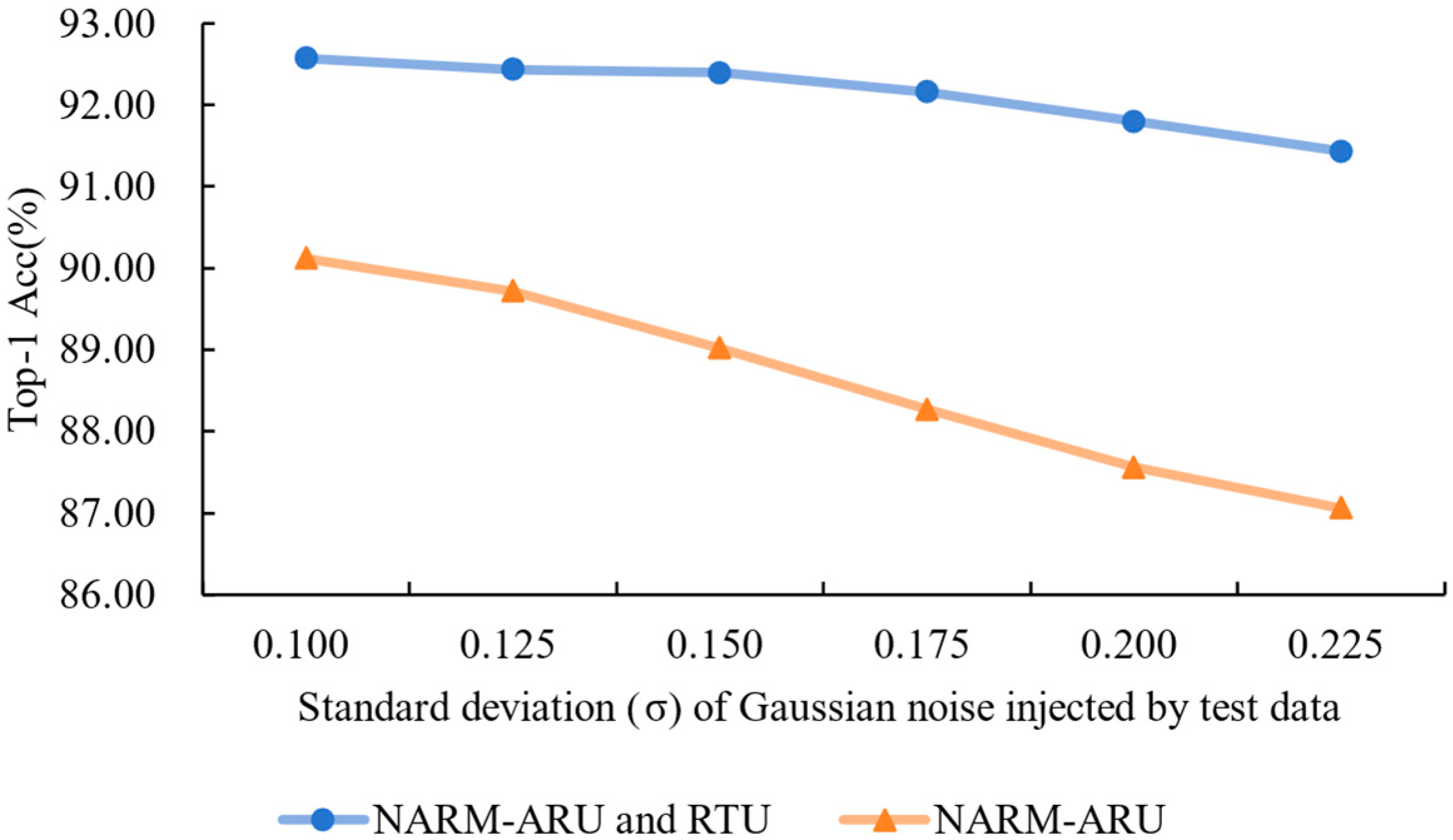

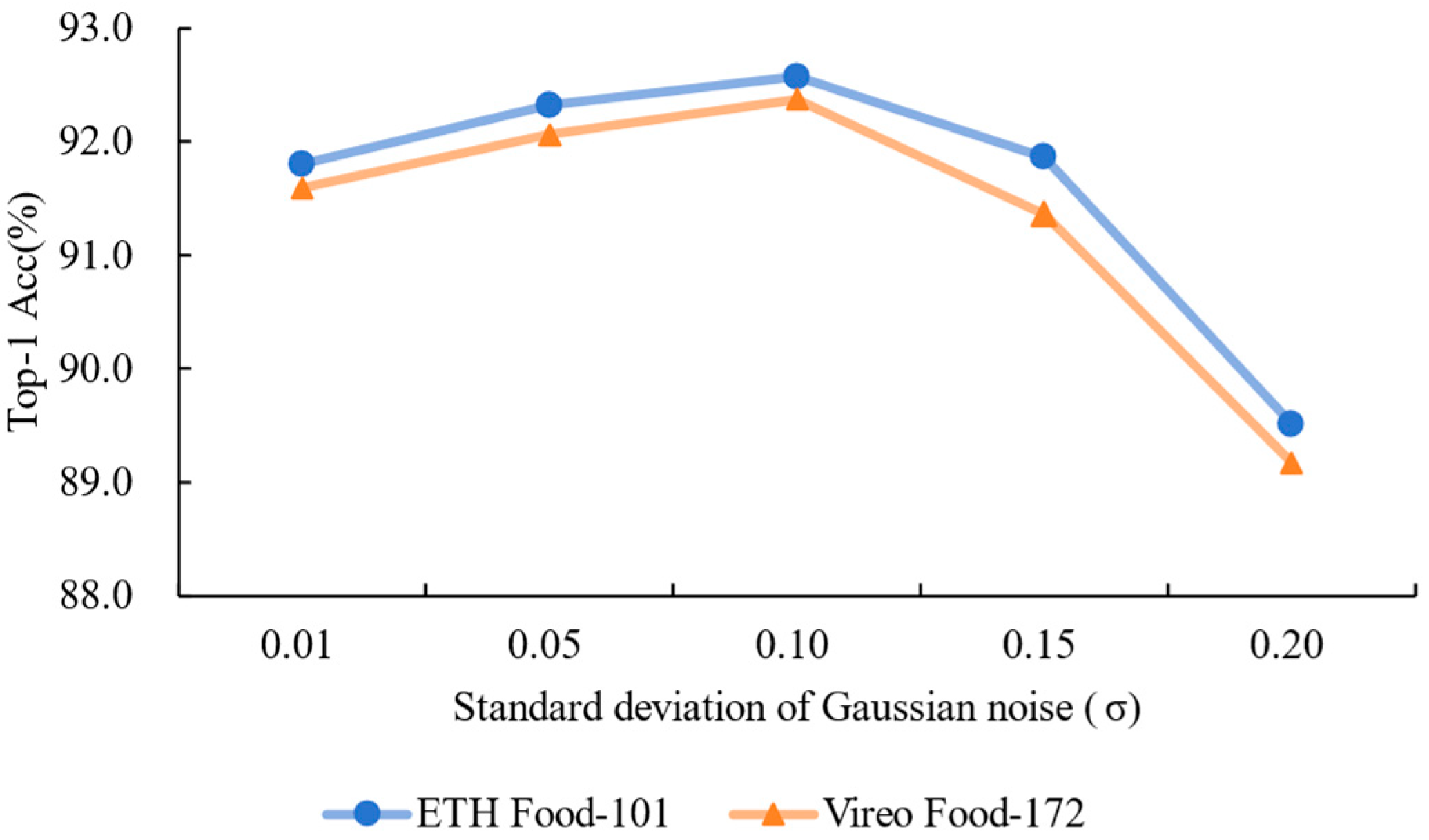

3.3. Effect of Noise Intensity

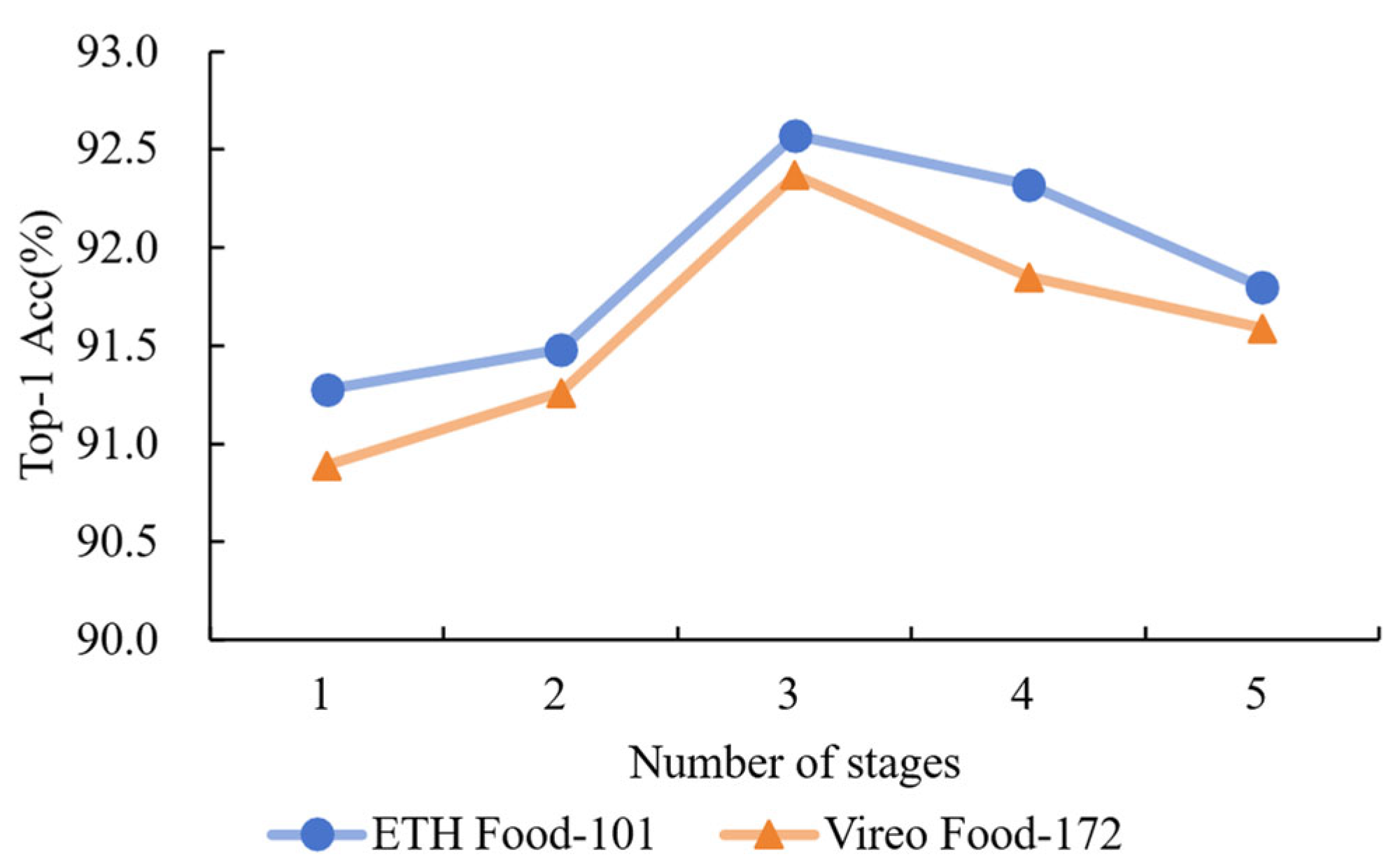

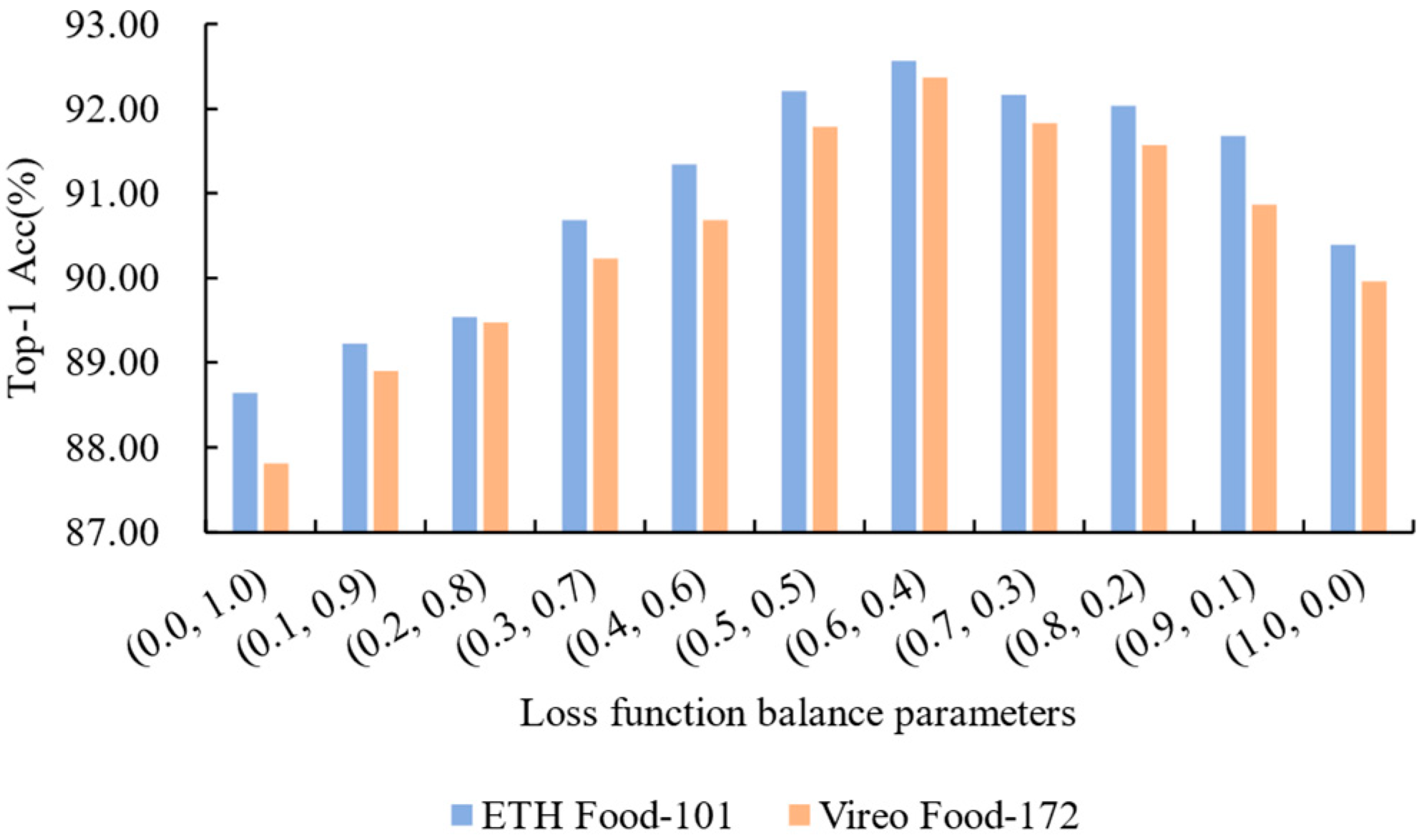

3.4. Evaluation of Distillation Strategies

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhao, Z.; Wang, R.; Liu, M.; Bai, L.; Sun, Y. Application of machine vision in food computing: A review. Food Chem. 2025, 463, 141238.

- Zhang, Y.; Deng, L.; Zhu, H.; Wang, W.; Ren, Z.; Zhou, Q.; Lu, S.; Sun, S.; Zhu, Z.; Gorriz, J.M.; et al. Deep learning in food category recognition. Inf. Fusion 2023, 98, 101859.

- Min, W.; Jiang, S.; Liu, L.; Rui, Y.; Jain, R. A Survey on Food Computing. ACM Comput. Surv. 2019, 52, 92.

- Allegra, D.; Battiato, S.; Ortis, A.; Urso, S.; Polosa, R. A review on food recognition technology for health applications. Health Psychol. Res. 2020, 8, 9297.

- Rostami, A.; Nagesh, N.; Rahmani, A.; Jain, R. In Proceedings of the 7th International Workshop on Multimedia Assisted Dietary Management (MADiMa), Lisboa, Portugal, 24 October 2022; Association for Computing Machinery: New York, NY, USA, 2022.

- Rostami, A.; Pandey, V.; Nag, N.; Wang, V.; Jain, R. In Proceedings of the 28th ACM International Conference on Multimedia (ACM MM), Seattle, WA, USA, 12–16 October 2020; Association for Computing Machinery: New York, NY, USA, 2020.

- Ishino, A.; Yamakata, Y.; Karasawa, H.; Aizawa, K. RecipeLog: Recipe Authoring App for Accurate Food Recording. In Proceedings of the 29th ACM International Conference on Multimedia (ACM MM), Virtual, 20–24 October 2021; Association for Computing Machinery: New York, NY, USA, 2021.

- Wang, W.; Min, W.; Li, T.; Dong, X.; Li, H.; Jiang, S. A review on vision-based analysis for automatic dietary assessment. Trends Food Sci. Technol. 2022, 122, 223–237.

- Lee, D.-s.; Kwon, S.-k. Amount Estimation Method for Food Intake Based on Color and Depth Images through Deep Learning. Sensors 2024, 24, 2044.

- Vasiloglou, M.F.; Marcano, I.; Lizama, S.; Papathanail, I.; Spanakis, E.K.; Mougiakakou, S. Multimedia Data-Based Mobile Applications for Dietary Assessment. J. Diabetes Sci. Technol. 2023, 17, 1056–1065.

- Yamakata, Y.; Ishino, A.; Sunto, A.; Amano, S.; Aizawa, K. In Proceedings of the 30th ACM International Conference on Multimedia (ACM MM), Lisboa, Portugal, 10–14 October 2022; Association for Computing Machinery: New York, NY, USA, 2022.

- Nakamoto, K.; Amano, S.; Karasawa, H.; Yamakata, Y.; Aizawa, K. In Proceedings of the 1st International Workshop on Multimedia for Cooking, Eating, and Related APPlications (MECAPP), Lisboa, Portugal, 10 October 2022; Association for Computing Machinery: New York, NY, USA, 2022.

- Zhu, Y.; Zhao, X.; Zhao, C.; Wang, J.; Lu, H. Food det: Detecting foods in refrigerator with supervised transformer network. Neurocomputing 2020, 379, 162–171.

- Mohammad, I.; Mazumder, M.S.I.; Saha, E.K.; Razzaque, S.T.; Chowdhury, S. In Proceedings of the International Conference on Computing Advancements (ICCA), Dhaka, Bangladesh, 10–12 January 2020; Association for Computing Machinery: New York, NY, USA, 2020.

- Aguilar, E.; Remeseiro, B.; Bolanos, M.; Radeva, P. Grab, Pay, and Eat: Semantic Food Detection for Smart Restaurants. IEEE Trans. Multimed. 2018, 20, 3266–3275.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Bossard, L.; Guillaumin, M.; Van Gool, L. Computer Vision–ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 446–461.

- Chen, J.; Ngo, C.-W. In Proceedings of the 24th ACM international conference on Multimedia (ACM MM), Amsterdam, The Netherlands, 15–19 October 2016; Association for Computing Machinery: New York, NY, USA, 2016.

- Peng, D.; Jin, C.; Wang, J.; Zhai, Y.; Qi, H.; Zhou, L.; Peng, J.; Zhang, C. Defects recognition of pine nuts using hyperspectral imaging and deep learning approaches. Microchem. J. 2024, 201, 110521.

- Sheng, G.; Min, W.; Zhu, X.; Xu, L.; Sun, Q.; Yang, Y.; Wang, L.; Jiang, S. A Lightweight Hybrid Model with Location-Preserving ViT for Efficient Food Recognition. Nutrients 2024, 16, 200.

- Wang, H.; Tian, H.; Ju, R.; Ma, L.; Yang, L.; Chen, J.; Liu, F. Nutritional composition analysis in food images: An innovative Swin Transformer approach. Front. Nutr. 2024, 11, 1454466.

- Alahmari, S.S.; Gardner, M.R.; Salem, T. Attention guided approach for food type and state recognition. Food Bioprod. Process. 2024, 145, 1–10.

- Sreedharan, S.E.; Sundar, G.N.; Narmadha, D. NutriFoodNet: A High-Accuracy Convolutional Neural Network for Automated Food Image Recognition and Nutrient Estimation. Trait. Signal 2024, 41, 1953–1965.

- Chen, Z.; Wang, J.; Wang, Y. Enhancing Food Image Recognition by Multi-Level Fusion and the Attention Mechanism. Foods 2025, 14, 461.

- Shah, B.; Bhavsar, H. Depth-restricted convolutional neural network—A model for Gujarati food image classification. Vis. Comput. 2024, 40, 1931–1946.

- Liu, Y.-C.; Onthoni, D.D.; Mohapatra, S.; Irianti, D.; Sahoo, P.K. Deep-Learning-Assisted Multi-Dish Food Recognition Application for Dietary Intake Reporting. Electronics 2022, 11, 1626.

- Wang, C.; Liu, S.; Wang, Y.; Xiong, J.; Zhang, Z.; Zhao, B.; Luo, L.; Lin, G.; He, P. Application of Convolutional Neural Network-Based Detection Methods in Fresh Fruit Production: A Comprehensive Review. Front. Plant Sci. 2022, 13, 868745.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Liu, Y.; Min, W.; Jiang, S.; Rui, Y. Convolution-Enhanced Bi-Branch Adaptive Transformer with Cross-Task Interaction for Food Category and Ingredient Recognition. IEEE Trans. Image Process. 2024, 33, 2572–2586.

- Xu, G.; Han, Z.; Gong, L.; Jiao, L.; Bai, H.; Liu, S.; Zheng, X. ASQ-FastBM3D: An Adaptive Denoising Framework for Defending Adversarial Attacks in Machine Learning Enabled Systems. IEEE Trans. Reliab. 2023, 72, 317–328.

- Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Gool, L.V.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

- Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Conference on Computer Vision and Pattern Recognition (CVPR). In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Min, W.; Wang, Z.; Liu, Y.; Luo, M.; Kang, L.; Wei, X.; Wei, X.; Jiang, S. Large Scale Visual Food Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 9932–9949.

- Wang, Z.; Min, W.; Li, Z.; Kang, L.; Wei, X.; Wei, X.; Jiang, S. Ingredient-Guided Region Discovery and Relationship Modeling for Food Category-Ingredient Prediction. IEEE Trans. Image Process. 2022, 31, 5214–5226.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

- McAllister, P.; Zheng, H.; Bond, R.; Moorhead, A. Combining deep residual neural network features with supervised machine learning algorithms to classify diverse food image datasets. Comput. Biol. Med. 2018, 95, 217–233.

- Zhang, W.; Wu, J.; Yang, Y. Wi-HSNN: A subnetwork-based encoding structure for dimension reduction and food classification via harnessing multi-CNN model high-level features. Neurocomputing 2020, 414, 57–66.

- Hu, J.; Shen, L.; Sun, G. Conference on Computer Vision and Pattern Recognition (CVPR). In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Qiu, J.; Lo, F.P.-W.; Sun, Y.; Wang, S.; Lo, B.P.L. Mining Discriminative Food Regions for Accurate Food Recognition. In Proceedings of the British Machine Vision Conference, Cardiff, UK, 9–12 September 2019.

- Chen, Y.; Bai, Y.; Zhang, W.; Mei, T. Conference on Computer Vision and Pattern Recognition (CVPR). In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5152–5161.

- Du, R.; Chang, D.; Bhunia, A.K.; Xie, J.; Ma, Z.; Song, Y.-Z.; Guo, J. Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.-M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 153–168.

- Hu, T.; Qi, H.; Huang, Q.; Lu, Y. See better before looking closer: Weakly supervised data augmentation network for fine-grained visual classification. arXiv 2019, arXiv:1901.09891.

- Yang, Z.; Luo, T.; Wang, D.; Hu, Z.; Gao, J.; Wang, L. Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 438–454.

- Min, W.; Liu, L.; Wang, Z.; Luo, Z.; Wei, X.; Wei, X.; Jiang, S. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; Association for Computing Machinery: New York, NY, USA, 2020.

- Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Conference on Computer Vision and Pattern Recognition (CVPR). In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4794–4803.

- Park, W.; Kim, D.; Lu, Y.; Cho, M. Conference on Computer Vision and Pattern Recognition (CVPR). In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3962–3971.

- Peng, B.; Jin, X.; Liu, J.; Li, D.; Wu, Y.; Liu, Y.; Zhou, S.; Zhang, Z. Correlation Congruence for Knowledge Distillation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5006–5015.

- Liu, Y.; Cao, J.; Li, B.; Yuan, C.; Hu, W.; Li, Y.; Duan, Y. Knowledge Distillation via Instance Relationship Graph. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7089–7097.

- Heo, B.; Lee, M.; Yun, S.; Choi, J.Y. Knowledge Transfer via Distillation of Activation Boundaries Formed by Hidden Neurons. In Proceedings of the AAAI conference on artificial intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 3779–3787.

- Dhar, P.; Singh, R.V.; Peng, K.C.; Wu, Z.; Chellappa, R. Conference on Computer Vision and Pattern Recognition (CVPR). In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 5133–5141.

- Wang, Q.; Liu, L.; Yu, W.; Zhang, Z.; Liu, Y.; Cheng, S.; Zhang, X.; Gong, J. paper presented at the Neural Information Processing. In Proceedings of the 29th International Conference, ICONIP 2022, Virtual Event, 22–26 November 2022. Proceedings, Part I, New Delhi, India, 2023.

- Xu, C.; Gao, W.; Li, T.; Bai, N.; Li, G.; Zhang, Y. Teacher-student collaborative knowledge distillation for image classification. Appl. Intell. 2023, 53, 1997–2009.

- Liu, D.; Zuo, E.; Wang, D.; He, L.; Dong, L.; Lu, X. Deep Learning in Food Image Recognition: A Comprehensive Review. Appl. Sci. 2025, 15, 7626.

| Method | Backbone | ETH Food-101 Top-1 Acc (%) | ETH Food-101 Top-5 Acc (%) | Vireo Food-172 Top-1 Acc (%) | Vireo Food-172 Top-5 Acc (%) |
|---|---|---|---|---|---|
| ResNet152 + SVM-RBF [38] | ResNet152 | 64.98 | - | - | - |
| FS_UAMS [39] | Inceptionv3 | - | - | 89.26 | - |
| ResNet50 [16] | ResNet50 | 87.42 | 97.40 | - | - |
| DenseNet161 [33] | DenseNet161 | - | - | 86.98 | 97.31 |
| SENet-154 [40] | ResNeXt-50 | 88.68 | 97.62 | 88.78 | 97.76 |
| PAR-Net [41] | ResNet101 | 89.30 | - | 89.60 | - |
| DCL [42] | ResNet50 | 88.90 | 97.82 | - | - |
| PMG [43] | ResNet50 | 86.93 | 97.21 | - | - |
| WS-DAN [44] | Inceptionv3 | 88.90 | 98.11 | - | - |
| NTS-NET [45] | ResNet50 | 89.40 | 97.80 | - | - |
| PRENet [34] | ResNet50 | 89.91 | 98.04 | - | - |
| PRENet [34] | SENet154 | 90.74 | 98.48 | - | - |
| SGLANet [46] | SENet154 | 89.69 | 98.01 | 90.30 | 98.03 |
| Swin-B [28] | Transformer | 89.78 | 97.98 | 89.15 | 98.02 |
| DAT [47] | Transformer | 90.04 | 98.12 | 89.25 | 98.12 |
| EHFR-Net [20] | Transformer | 90.70 | - | 90.30 | - |
| IVRDRM [35] | ResNet-101 | 92.36 | 98.68 | 93.33 | 99.15 |
| SICL(CBiAFormer-T) [29] | Swin-T | 91.11 | 98.63 | 90.70 | 98.05 |
| SICL(CBiAFormer-B) [29] | Swin-B | 92.40 | 98.87 | 91.58 | 98.75 |
| Our method * | ResNet50 | 92.57 | 98.70 | 92.37 | 98.55 |
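
The Top-1 and Top-5 columns in the comparison table follow the standard top-k accuracy definition: a prediction counts as correct when the true class is among the k highest-scoring classes. The following is a minimal sketch of that definition in plain Python; the function name `top_k_accuracy` and the toy scores are illustrative and are not taken from the paper's code.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]], labels: Sequence[int], k: int
) -> float:
    """Percentage of samples whose true label is among the k highest scores."""
    if len(scores) != len(labels) or not labels:
        raise ValueError("scores and labels must be non-empty and equally long")
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ordered by descending score; ties keep the lower index.
        ranked = sorted(range(len(row)), key=lambda c: -row[c])
        hits += label in ranked[:k]
    return 100.0 * hits / len(labels)


# Toy example with three samples and three classes (made-up scores).
toy_scores = [
    [0.1, 0.7, 0.2],  # true class 1, ranked first
    [0.5, 0.3, 0.2],  # true class 1, ranked second
    [0.2, 0.3, 0.5],  # true class 0, ranked third
]
toy_labels = [1, 1, 0]

print(round(top_k_accuracy(toy_scores, toy_labels, k=1), 2))
print(round(top_k_accuracy(toy_scores, toy_labels, k=2), 2))
```

In the toy example, one of three samples is correct at k = 1 and two of three at k = 2, so Top-5 accuracy can never fall below Top-1 accuracy on the same predictions.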

| Model | ETH Food-101 p1 | ETH Food-101 p2 | ETH Food-101 p3 | ETH Food-101 Top-1 | Vireo Food-172 p1 | Vireo Food-172 p2 | Vireo Food-172 p3 | Vireo Food-172 Top-1 |
|---|---|---|---|---|---|---|---|---|
| SFF | 86.43 | 87.23 | 86.79 | 87.86 | 82.87 | 86.12 | 85.72 | 86.63 |
| SFF + NARM (no EGCP) | 87.73 | 88.36 | 88.97 | 90.31 | 86.58 | 87.65 | 88.67 | 89.72 |
| SFF + NARM | 89.25 | 90.80 | 91.23 | 92.19 | 88.23 | 89.82 | 91.10 | 91.88 |
| SFF + NARM + WMF | 89.77 | 91.27 | 92.03 | 92.57 | 88.69 | 90.03 | 91.59 | 92.37 |
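
The contribution of each component can be read off the ablation table as the difference in Top-1 accuracy between consecutive rows. The short sketch below does that arithmetic. The Top-1 values are copied from the table; the helper name `stepwise_gains` is illustrative, and attributing each difference to a single component assumes the rows differ only in the component their names indicate.

```python
from typing import Dict, List, Tuple

# Top-1 accuracy (%) per configuration, copied from the ablation table.
TOP1: Dict[str, List[Tuple[str, float]]] = {
    "ETH Food-101": [
        ("SFF", 87.86),
        ("SFF + NARM (no EGCP)", 90.31),
        ("SFF + NARM", 92.19),
        ("SFF + NARM + WMF", 92.57),
    ],
    "Vireo Food-172": [
        ("SFF", 86.63),
        ("SFF + NARM (no EGCP)", 89.72),
        ("SFF + NARM", 91.88),
        ("SFF + NARM + WMF", 92.37),
    ],
}


def stepwise_gains(rows: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Gain in percentage points of each configuration over the previous row."""
    return [
        (name, round(acc - prev_acc, 2))
        for (_, prev_acc), (name, acc) in zip(rows, rows[1:])
    ]


gains = {dataset: stepwise_gains(rows) for dataset, rows in TOP1.items()}
for dataset, steps in gains.items():
    print(dataset, steps)
```

On both datasets the differences shrink from row to row, with the final WMF row adding less than half a percentage point of Top-1 accuracy.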

| Model | Param (M) | Epoch Time (min) | Total Time (h) | Memory (GB/GPU) | Top-1 (%) |
|---|---|---|---|---|---|
| SFF | 25.50 | 8.10 | 27.00 | 14.50 | 87.42 |
| SFF + NARM | 33.20 | 10.60 | 35.30 | 17.30 | 92.19 |
| SFF + NARM + WMF | 41.50 | 14.00 | 46.70 | 20.80 | 92.57 |
| SFF (PTAFD Student Model) | 25.70 | 8.40 | 28.00 | 15.00 | 92.01 |
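
The cost table does not state the training length, but the epoch time and total time columns can be cross-checked against each other. The sketch below divides total time by epoch time for each row. The values are copied from the table; the resulting epoch count is an inference from those two columns and is not a figure stated in the text, and the helper name `implied_epochs` is illustrative.

```python
from typing import Dict, Tuple

# (epoch time in minutes, total time in hours), copied from the cost table.
COST: Dict[str, Tuple[float, float]] = {
    "SFF": (8.10, 27.00),
    "SFF + NARM": (10.60, 35.30),
    "SFF + NARM + WMF": (14.00, 46.70),
    "SFF (PTAFD Student Model)": (8.40, 28.00),
}


def implied_epochs(epoch_min: float, total_h: float) -> float:
    """Number of epochs implied by total training time and per-epoch time."""
    return total_h * 60.0 / epoch_min


implied = {name: implied_epochs(*times) for name, times in COST.items()}
for name, epochs in implied.items():
    print(f"{name}: {epochs:.1f} epochs")
```

All four rows come out at roughly 200 epochs, so the two time columns are mutually consistent. The same table shows the distilled student keeping a parameter count close to plain SFF (25.70 M versus 25.50 M) while reaching 92.01% Top-1.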

| Class | Our Method Recall (%) | Our Method F1-Score (%) | Our Method w/o EGCP Recall (%) | Our Method w/o EGCP F1-Score (%) | Our Method w/o WMF Recall (%) | Our Method w/o WMF F1-Score (%) | Our Method w/o EGCP and WMF Recall (%) | Our Method w/o EGCP and WMF F1-Score (%) |
|---|---|---|---|---|---|---|---|---|
| Apple pie | 92.37 | 91.84 | 88.72 | 88.25 | 89.56 | 89.03 | 86.19 | 85.62 |
| Baby back ribs | 93.12 | 92.76 | 89.84 | 89.21 | 90.23 | 89.77 | 87.05 | 86.58 |
| Baklava | 91.08 | 91.53 | 87.31 | 87.06 | 88.52 | 88.39 | 83.87 | 84.29 |
| Beef carpaccio | 89.53 | 89.07 | 84.15 | 83.92 | 86.38 | 86.09 | 81.24 | 81.06 |
| Beef tartare | 88.91 | 88.65 | 83.97 | 83.84 | 85.72 | 85.43 | 80.59 | 80.32 |
| Beet salad | 90.76 | 90.28 | 87.36 | 86.89 | 88.15 | 87.64 | 84.63 | 84.17 |
| Beignets | 92.58 | 92.14 | 88.92 | 88.57 | 89.86 | 89.45 | 85.92 | 85.23 |
| Bibimbap | 90.25 | 89.83 | 86.04 | 85.58 | 85.79 | 85.62 | 82.37 | 81.95 |
| Bread pudding | 91.84 | 91.36 | 88.17 | 87.75 | 88.93 | 88.56 | 85.08 | 84.62 |
| Breakfast burrito | 91.27 | 90.85 | 87.06 | 86.53 | 86.45 | 86.07 | 83.15 | 82.74 |
| Average | 90.82 | 91.21 | 87.33 | 88.01 | 87.91 | 88.51 | 84.01 | 84.63 |
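
The per-class recall and F1-score columns follow the usual one-vs-rest definitions: recall = TP / (TP + FN), precision = TP / (TP + FP), and F1 is the harmonic mean of precision and recall. The following is a minimal sketch of those definitions in plain Python. The function name `recall_and_f1` and the toy labels are illustrative and do not come from the paper's evaluation code.

```python
from typing import Hashable, Sequence, Tuple


def recall_and_f1(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable], cls: Hashable
) -> Tuple[float, float]:
    """One-vs-rest recall and F1-score for class `cls`, both in percent."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == cls and p == cls for t, p in pairs)  # true positives
    fn = sum(t == cls and p != cls for t, p in pairs)  # missed instances
    fp = sum(t != cls and p == cls for t, p in pairs)  # false alarms
    recall = tp / (tp + fn) if tp + fn else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    denom = precision + recall
    f1 = 2.0 * precision * recall / denom if denom else 0.0
    return 100.0 * recall, 100.0 * f1


# Toy example with two classes (made-up predictions).
toy_true = ["apple_pie", "apple_pie", "apple_pie", "baklava", "baklava"]
toy_pred = ["apple_pie", "apple_pie", "baklava", "baklava", "apple_pie"]

pie_recall, pie_f1 = recall_and_f1(toy_true, toy_pred, "apple_pie")
bak_recall, bak_f1 = recall_and_f1(toy_true, toy_pred, "baklava")
print(round(pie_recall, 2), round(pie_f1, 2))
print(round(bak_recall, 2), round(bak_f1, 2))
```

Because F1 also depends on precision, a class can have an F1-score above or below its recall, which is why the two columns in the table do not move in lockstep.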


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Z.; Chen, H.; Wang, J.; Wang, Y. Food Image Recognition Based on Anti-Noise Learning and Covariance Feature Enhancement. Foods 2025, 14, 2776. https://doi.org/10.3390/foods14162776
