1. Introduction

Globalization has diversified food demand worldwide, and the chains of production, sale, and consumption have become increasingly fragmented and intricate [1]. In the fruit and vegetable supply industry, rigorous sorting and grading of produce, including the removal of damaged, diseased, or substandard fruit, helps ensure the quality of market-bound products [2]. Preservation techniques also differ by fruit: chitosan is widely applied to control weight loss in fresh strawberries (*Fragaria* × *ananassa*), raspberries (*Rubus idaeus*), mangoes (*Mangifera indica*), lychee, and blueberries [3], whereas papaya is preserved by reducing its respiration rate to delay ripening [4]. Maintaining optimal freshness throughout storage, often within limited time, is a critical market requirement. Because fruit freshness during distribution directly affects consumer health and purchasing behavior, freshness detection has become a vital task in modern food quality control [5]. Temperature, humidity, and illumination act on fruit throughout storage, transportation, and sale, so its freshness changes continuously. Elevated temperatures accelerate the consumption of sugars, acids, and other nutrients in the fruit, leading to over-ripening, softening, and rotting. They also speed up enzymatic reactions, such as the browning reaction induced by polyphenol oxidase, which change color and degrade texture [6]. Storage temperature therefore strongly affects shelf life: fresh peaches, apricots, and cherries can be preserved for approximately 4 months at −12 °C, about 18 months at −18 °C, and more than two years when frozen with sugar treatment at −24 °C [7]. Ultraviolet light can degrade light-sensitive compounds (such as chlorophyll and certain vitamins), causing fruit color to fade and nutrients (e.g., vitamins C and A) to be lost [8]. Research on the microbial safety and quality control of fruit has also grown in recent years. The freshness of fruits and vegetables is closely tied to their microbial safety, since fruit is prone to microbial contamination during harvesting, transportation, and storage, and such contamination accelerates spoilage [9]. Traditional manual inspection relies primarily on visual assessment and simple instruments (e.g., colorimeters and hardness testers), which capture only a subset of the parameters affected by microbial invasion or intrinsic metabolic changes [10]. Laboratory microbial culturing and chemical analysis yield accurate results, but their long detection cycles and high cost make them unsuitable for real-time monitoring [11]. An automated, accurate, real-time technique for assessing fruit freshness therefore holds significant theoretical and practical value.

Deep learning, and convolutional neural networks (CNNs) in particular, has achieved remarkable success in image classification and object detection and shows broad promise for fruit detection. For instance, Valentino et al. designed a CNN-based system, trained on a Kaggle dataset, that classifies fruit as fresh or rotten and performs real-time detection through a web application, offering an efficient, automated solution for the fruit supply chain [12]. Similarly, Pathak and Makwana proposed a CNN-based model that automatically classifies fruit as “fresh” or “rotten”, achieving a classification accuracy of 98.23% by optimizing hyperparameters and stacking multiple convolutional and pooling layers [13]. Gao et al. used data augmentation to expand 800 images to 12,800 and detected fruit in dense canopies with a mean average precision (mAP) of 87.9%, supporting path planning and collision avoidance for robotic harvesting [14]. Apolo-Apolo et al. combined deep learning with an unmanned aerial vehicle (UAV)-based citrus monitoring system, which significantly improved the accuracy of fruit size estimation and yield prediction [15]. Amin et al. proposed a transfer-learning CNN method based on AlexNet that classifies fruit as fresh or rotten, achieving nearly 100% accuracy on multiple public datasets and offering an automated solution for the fruit processing industry [16].

Machine learning algorithms based on color, shape, and texture features can perform well in controlled scenarios, but their representational capacity is limited in complex environments, where lighting variation, occlusion (e.g., by leaves or branches), and fruit density undermine detection accuracy. Deep learning models perform well in fruit detection but depend heavily on large-scale, high-quality annotated datasets, whose acquisition and annotation are time-consuming and labor-intensive, particularly in complex environments that demand precise annotation [17]. Models that cope better with complex scenarios are therefore needed.

The self-attention mechanism captures dependencies among different positions of the input data and has been widely applied in natural language processing (NLP) and computer vision [18]. For instance, Lin et al. proposed CBAM-SEResNet-50, a model for sweetener identification based on transfer learning and attention, which combines a Squeeze-and-Excitation (SE) module with a Convolutional Block Attention Module (CBAM) and achieved a feature extraction accuracy of 95.6% [19]. In maize leaf disease detection, Qian et al. introduced a self-attention mechanism that significantly improved the model’s ability to focus on subtle lesions against complex backgrounds, suppressing background noise and further improving classification accuracy [20]. The Non-local Attention mechanism models the relationship between any two pixels of an image (or any two positions of a feature map) globally, overcoming the reliance of conventional CNNs on local receptive fields and increasing sensitivity to subtle changes. Chen et al. proposed an image compression method that integrates Non-local Attention with context optimization, achieving compression efficiency that surpasses both conventional and mainstream deep learning methods [21]. Huang et al. introduced TransFuser, a model based on Non-local Attention that fuses key features with retinal images and significantly outperforms existing models on multiple evaluation metrics [22]. In fruit freshness detection, incorporating Non-local Attention helps a model recognize subtle damage, such as small rotten areas or color discrepancies, improving both classification accuracy and robustness [23].
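To make the global modeling concrete, the following is a minimal, framework-free sketch of an embedded-Gaussian non-local block, in which every output position is a softmax-weighted sum over all positions, $\mathbf{y}_i = \sum_j \operatorname{softmax}_j\big(\theta(\mathbf{x}_i)^\top \phi(\mathbf{x}_j)\big)\, g(\mathbf{x}_j)$, followed by a residual output $\mathbf{z}_i = W_z \mathbf{y}_i + \mathbf{x}_i$. It assumes a feature map already flattened to an N × C matrix (N = H·W positions); plain lists stand in for tensors, matrix products stand in for 1×1 convolutions, and the names `non_local_block`, `w_theta`, `w_phi`, `w_g`, and `w_z` are hypothetical. It illustrates the general technique only and is not the implementation used in this study or in the cited works.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax; subtracting the row max keeps exp() numerically stable."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(v - m) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def non_local_block(x: Matrix, w_theta: Matrix, w_phi: Matrix,
                    w_g: Matrix, w_z: Matrix) -> Matrix:
    """Embedded-Gaussian non-local block (hypothetical sketch).

    x:                    N x C features, one row per spatial position.
    w_theta, w_phi, w_g:  C x C' projections (stand-ins for 1x1 convolutions).
    w_z:                  C' x C output projection; x is added back as a residual.
    """
    theta = matmul(x, w_theta)                            # N x C'
    phi = matmul(x, w_phi)                                # N x C'
    g = matmul(x, w_g)                                    # N x C'
    attn = softmax_rows(matmul(theta, transpose(phi)))    # N x N, each row sums to 1
    y = matmul(attn, g)                                   # each position aggregates all positions
    z = matmul(y, w_z)                                    # N x C
    return [[zi + xi for zi, xi in zip(z_row, x_row)] for z_row, x_row in zip(z, x)]


# Usage: three positions with two channels each.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
eye = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
unchanged = non_local_block(features, eye, eye, eye, zeros)  # zero w_z: returns features unchanged
mixed = non_local_block(features, eye, eye, eye, eye)        # each row now includes global context
```

Initializing `w_z` to zero makes the block an identity mapping at the start, which is one common way to insert non-local blocks into a pretrained backbone without disturbing its initial predictions. Because the attention matrix is N × N, cost grows quadratically with the number of positions, which is one reason such blocks tend to be placed in deeper, lower-resolution stages.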

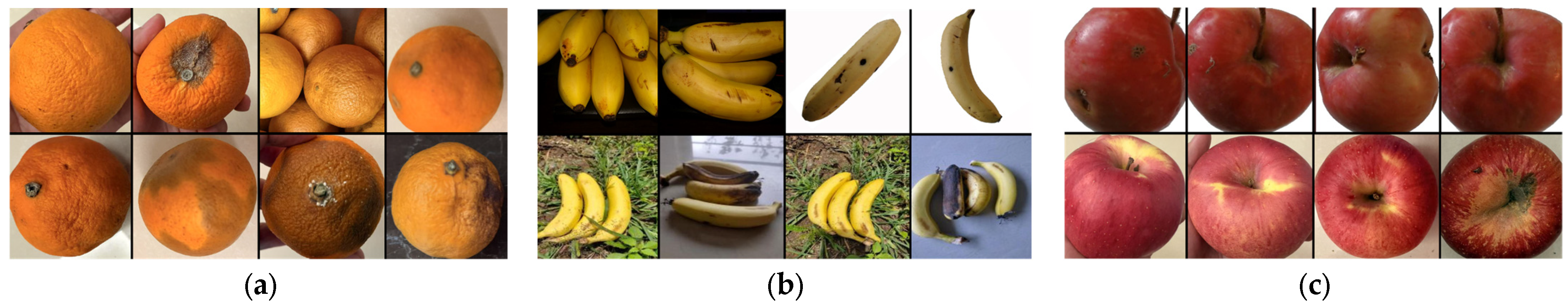

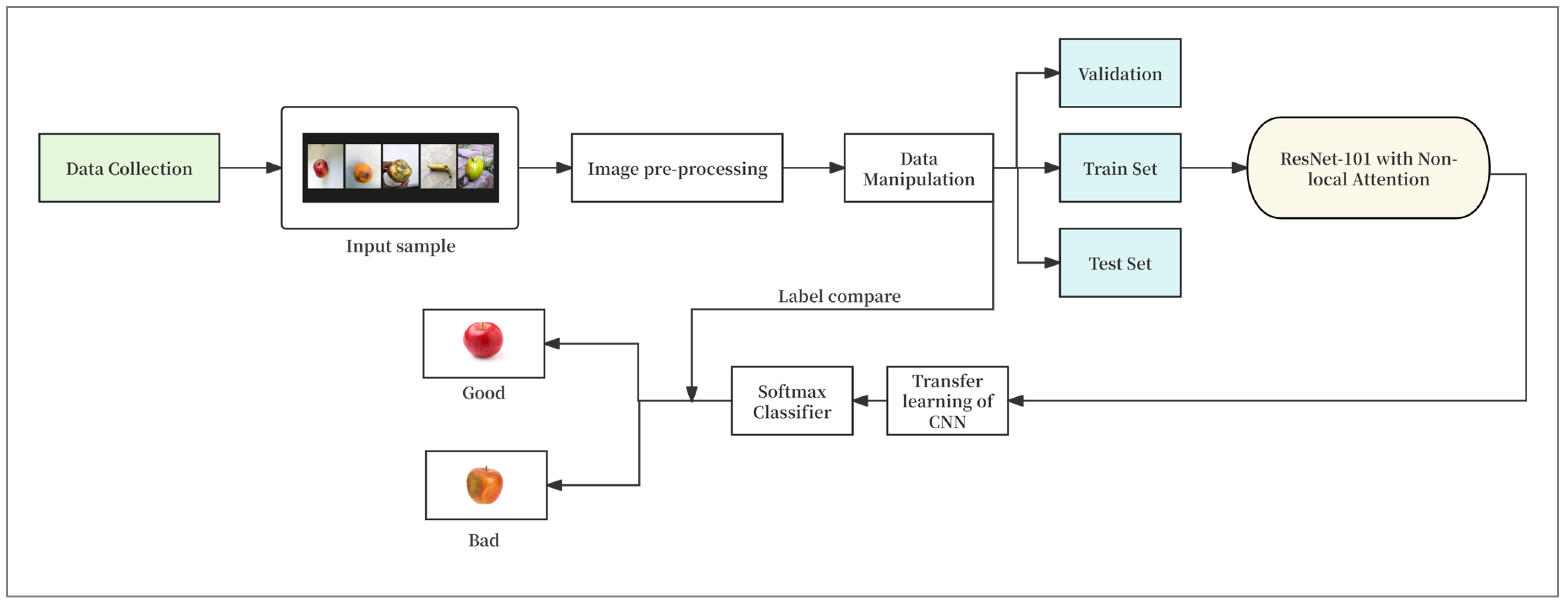

This study proposes a novel fruit freshness classification model that fuses Residual Network-101 (ResNet-101) with a Non-local Attention mechanism. By embedding non-local modules into the deep convolutional stages 4 (Conv4) and 5 (Conv5) of ResNet-101, the model captures subtle features, such as localized decay and color fading, more effectively, markedly improving robustness and classification accuracy under complex environmental conditions. To improve generalization and practical applicability, we construct a comprehensive dataset that combines publicly available images with photographs acquired in realistic settings with diverse illumination, occlusion, and backgrounds, covering multiple fruit types at varying freshness levels. The experimental protocol includes a systematic preprocessing pipeline and class-balancing strategies, evaluates performance using precision, recall, and F1-score, and compares the model against baseline networks. Using Gradient-weighted Class Activation Mapping (Grad-CAM) for visualization, we further analyze interpretability at the level of attention regions, confirming that the Non-local Neural Network Attention (Non-local Attention) mechanism strengthens the model’s ability to identify critical decay regions. A preliminary assessment of deployment on edge devices indicates that, despite a modest increase in parameter count and inference latency, the overall computational overhead remains acceptable. The approach thus supports real-time use in smart agriculture and intelligent logistics, enabling timely decision-making and efficient resource management.
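For reference, Grad-CAM as it is usually defined works as follows. Take a target class $c$ with pre-softmax score $y^{c}$ and the $K$ feature maps $A^{k}$ of a chosen convolutional layer. For this architecture that layer is plausibly the output of the final Conv5 stage, though which layer the study visualizes is an assumption here. Grad-CAM global-average-pools the gradients to obtain one weight per channel and then takes a rectified weighted sum of the feature maps, where $Z$ is the number of spatial positions $(i, j)$ in $A^{k}$:

$$
\alpha_k^{c} = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^{c}}{\partial A_{ij}^{k}},
\qquad
L_{\text{Grad-CAM}}^{c} = \operatorname{ReLU}\!\left( \sum_{k} \alpha_k^{c} A^{k} \right)
$$

The map $L_{\text{Grad-CAM}}^{c}$ is upsampled to the input resolution and overlaid on the image. High values mark regions whose activations most increase the score for class $c$. This is how decay regions can be highlighted when $c$ is a "rotten" class.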

5. Conclusions

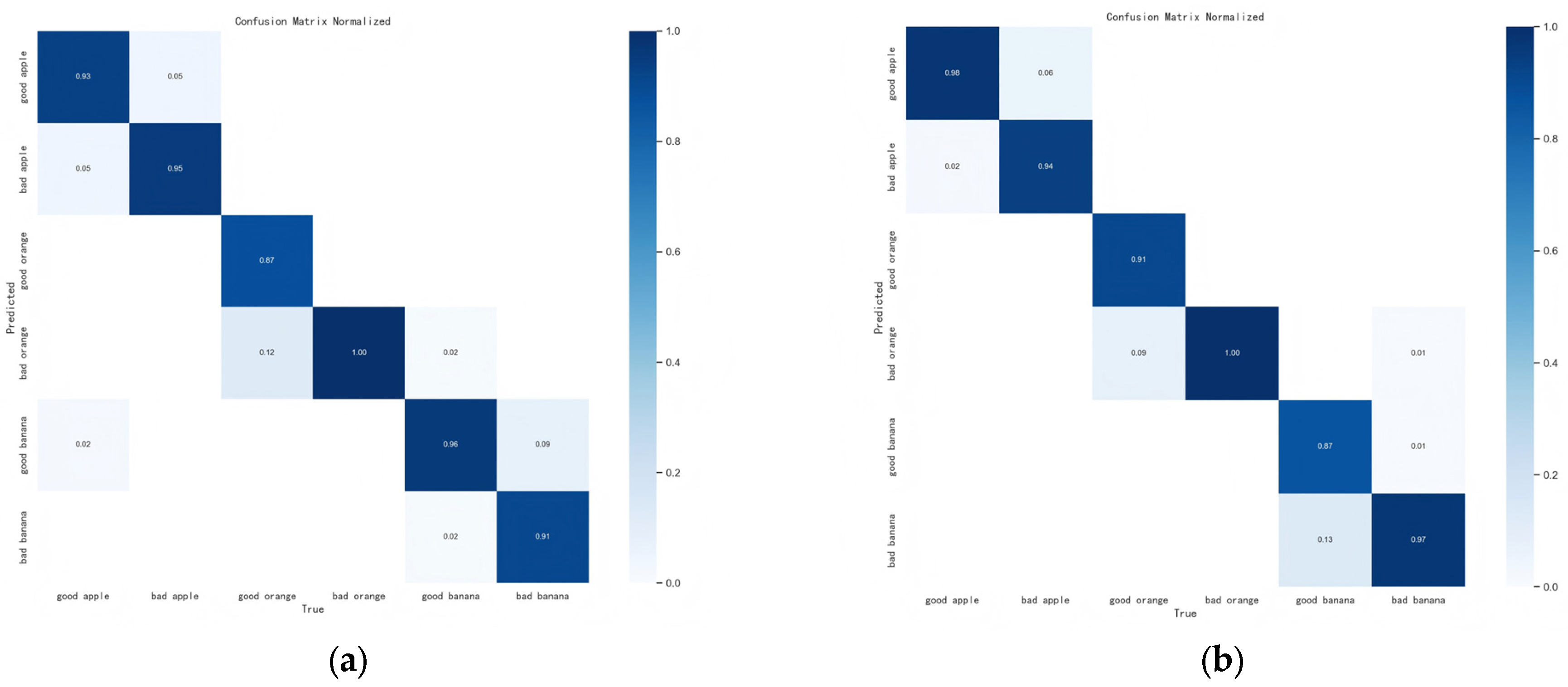

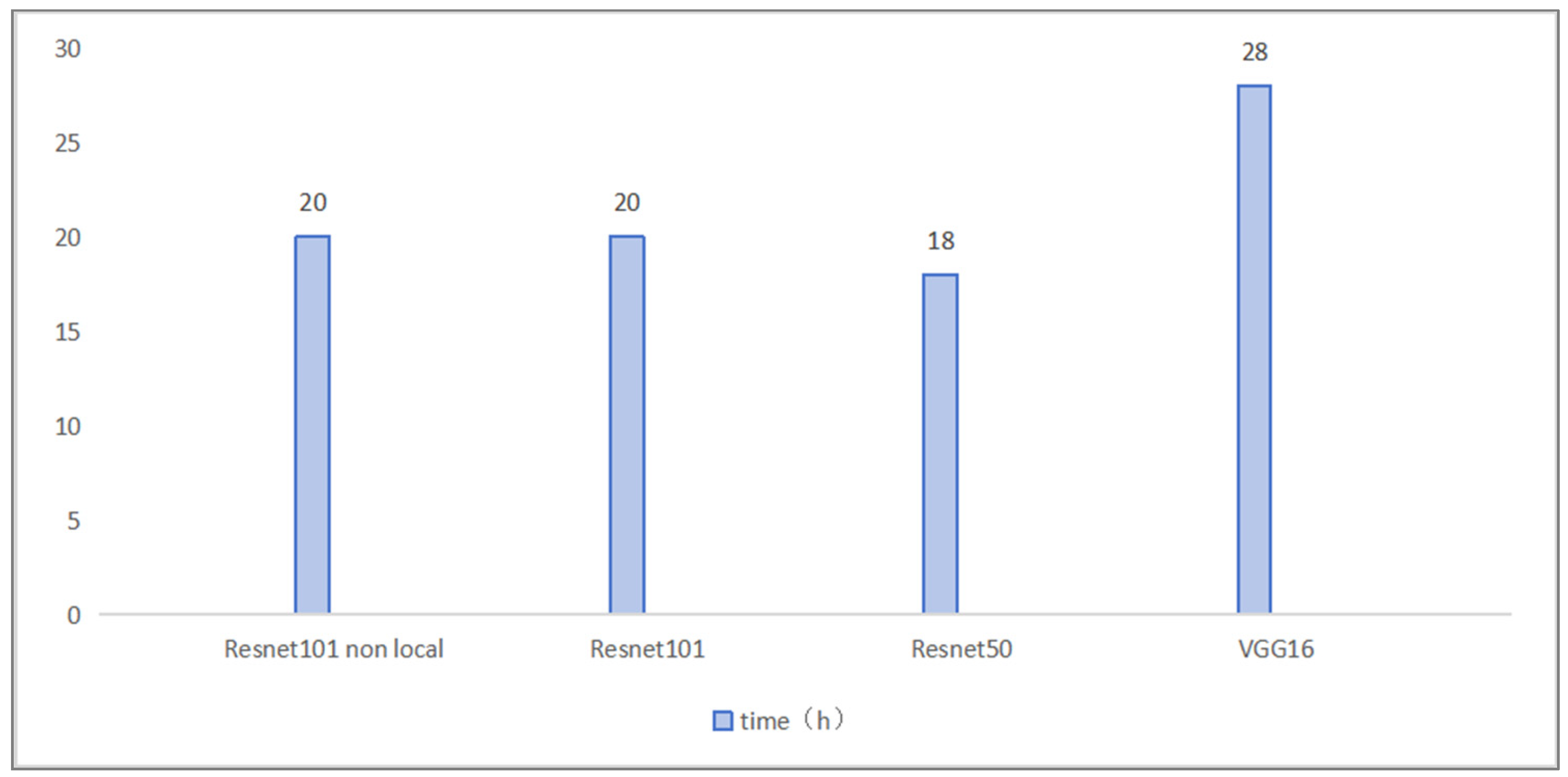

This study addresses fruit freshness classification and detection using image-based deep learning, aiming to improve the accuracy and robustness of freshness assessment. Integrating a Non-local Attention mechanism into ResNet-101 yielded an improved image processing approach for fruit freshness detection. Experimental results indicate that the improved model outperforms the baseline ResNet-101 as well as other common deep learning networks, such as VGG-16 and ResNet-50, on multiple evaluation metrics. Under complex conditions (such as varied lighting, complex backgrounds, and occlusion), the model with Non-local Attention is notably more robust. Although inference time increases slightly, it remains within an acceptable range for real-time applications on edge devices and for large-scale deployment. Adding Non-local Attention to the network architecture significantly improves the model’s capacity to capture subtle rotten areas and color discrepancies on fruit surfaces. Comprehensive data collection, augmentation strategies, and visualization techniques provide a broader foundation for error analysis and further model refinement. With these improvements, the model promises more precise and reliable fruit freshness monitoring in intelligent agriculture and smart logistics.
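For readers reproducing such comparisons, the following is a minimal sketch of how per-class precision, recall, and F1-score, and their macro average, can be computed from paired true and predicted labels. The function names and the labels "fresh" and "rotten" are illustrative assumptions. This section does not state whether the reported scores are macro- or weighted-averaged.

```python
from typing import Dict, Sequence, Tuple

Scores = Dict[str, Tuple[float, float, float]]


def per_class_prf(y_true: Sequence[str], y_pred: Sequence[str]) -> Scores:
    """Return {class: (precision, recall, f1)} from paired label sequences."""
    labels = sorted(set(y_true) | set(y_pred))
    scores: Scores = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        # Guard against empty denominators (class never predicted / never present).
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores[c] = (precision, recall, f1)
    return scores


def macro_f1(scores: Scores) -> float:
    """Unweighted mean of per-class F1, so every class counts equally."""
    if not scores:
        return 0.0
    return sum(f1 for _, _, f1 in scores.values()) / len(scores)


# Usage with hypothetical labels.
truth = ["fresh", "fresh", "rotten", "rotten"]
preds = ["fresh", "rotten", "rotten", "rotten"]
report = per_class_prf(truth, preds)
overall = macro_f1(report)
```

Macro averaging weights every freshness class equally, so a minority class that is frequently misclassified lowers the score even when overall accuracy stays high. This property is relevant when classes are imbalanced.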

Future research will focus on making the model more lightweight to improve real-time performance and computational efficiency. Given the current limitations in data scale and environmental complexity, subsequent work will expand the dataset to cover a wider range of fruits and vegetables and will further study how environmental factors (such as temperature, humidity, and microbial contamination) affect freshness detection. We will pursue collaborations with experts in agriculture, horticulture, and quality monitoring to refine data collection protocols, sample annotation, and evaluation criteria, improving both data quality and model interpretability. Another critical direction is an online learning mechanism for real-time model updates and adaptive adjustment. Such a mechanism would help the model cope with changing environments and data distributions, improving the long-term stability and practicality of fruit and vegetable freshness monitoring and contributing to a sustainable food industry.