Deep Learning Network of Amomum villosum Quality Classification and Origin Identification Based on X-ray Technology

Abstract

1. Introduction

2. Materials and Methods

2.1. Samples

2.2. X-ray Detection System

2.3. X-ray Image Acquisition and Pre-Processing

2.4. The Analysis Process and Network Structure

2.4.1. The Analysis Process

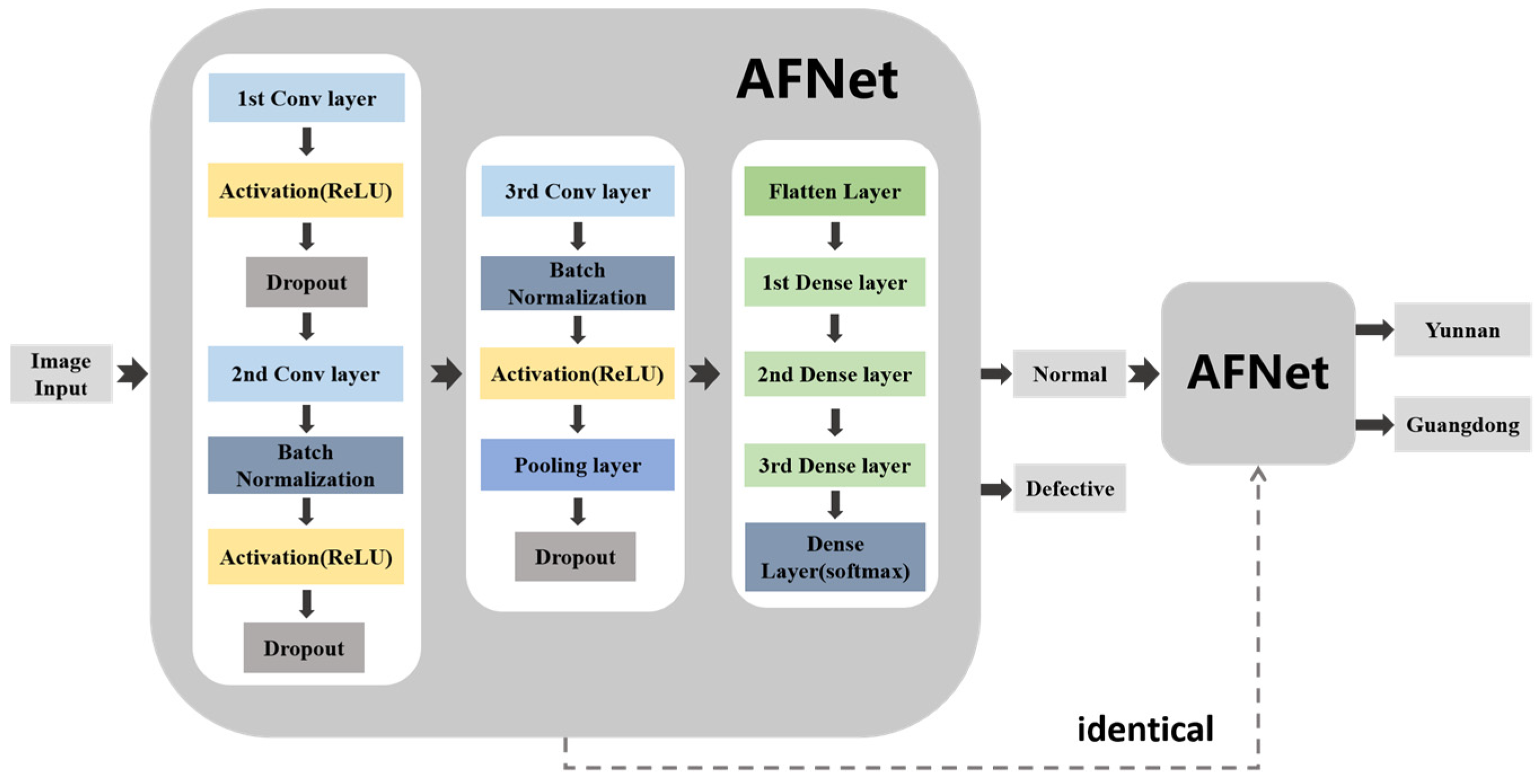

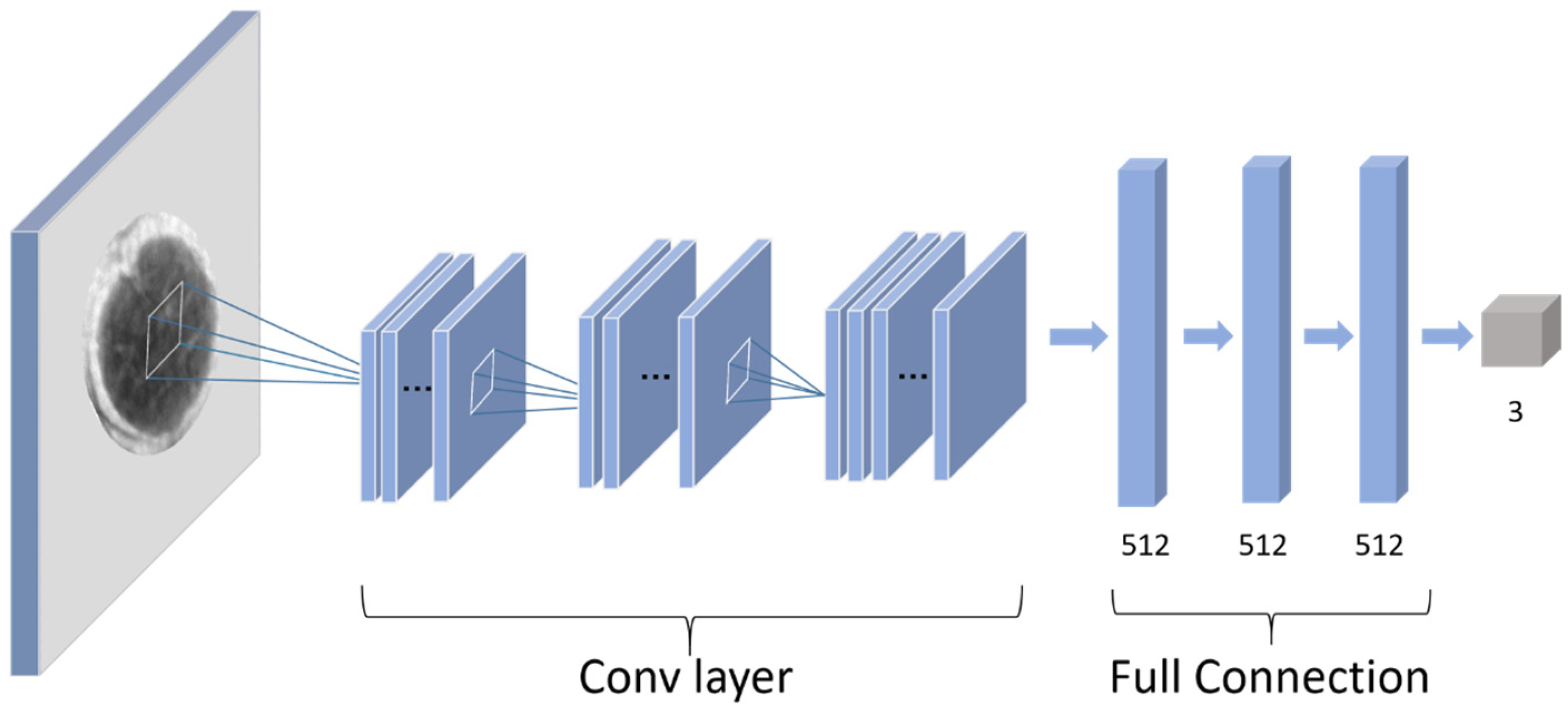

2.4.2. Structure of the Proposed Amomum villosum Fruit Network

2.5. Quality Classification of Amomum villosum Fruit

Training Process of Quality Classification

2.6. Origin Identification

Training Process of Origin Identification

2.7. The Multi-Category Classification of Amomum villosum Fruits

2.7.1. Training Process of Multi-Category Classification

2.7.2. Parameters Optimization

2.8. Model Comparison

2.9. Evaluation Standards

3. Results

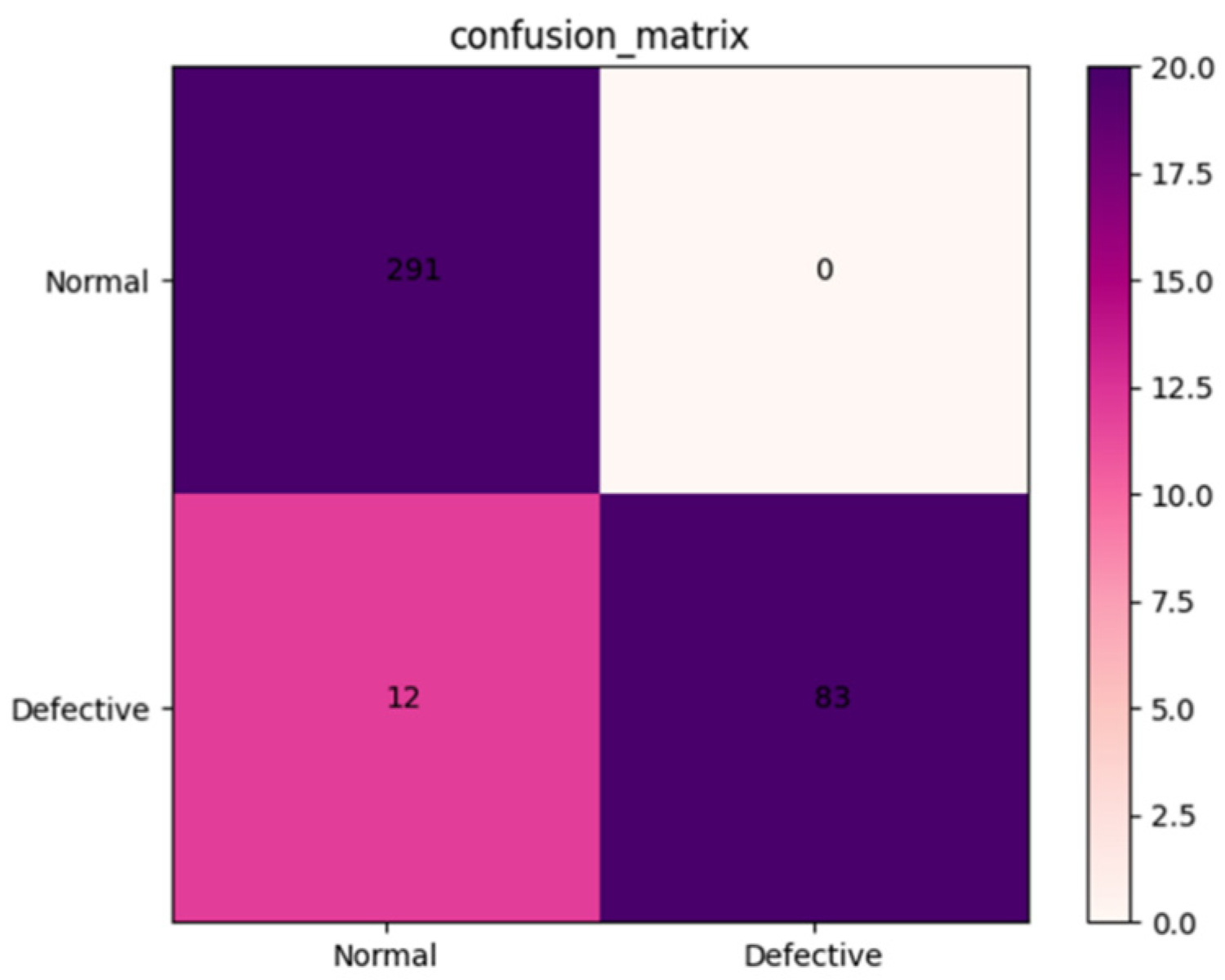

3.1. Performance of AFNet in Detecting Defective Fruits

Confusion Matrix of the Validation Dataset

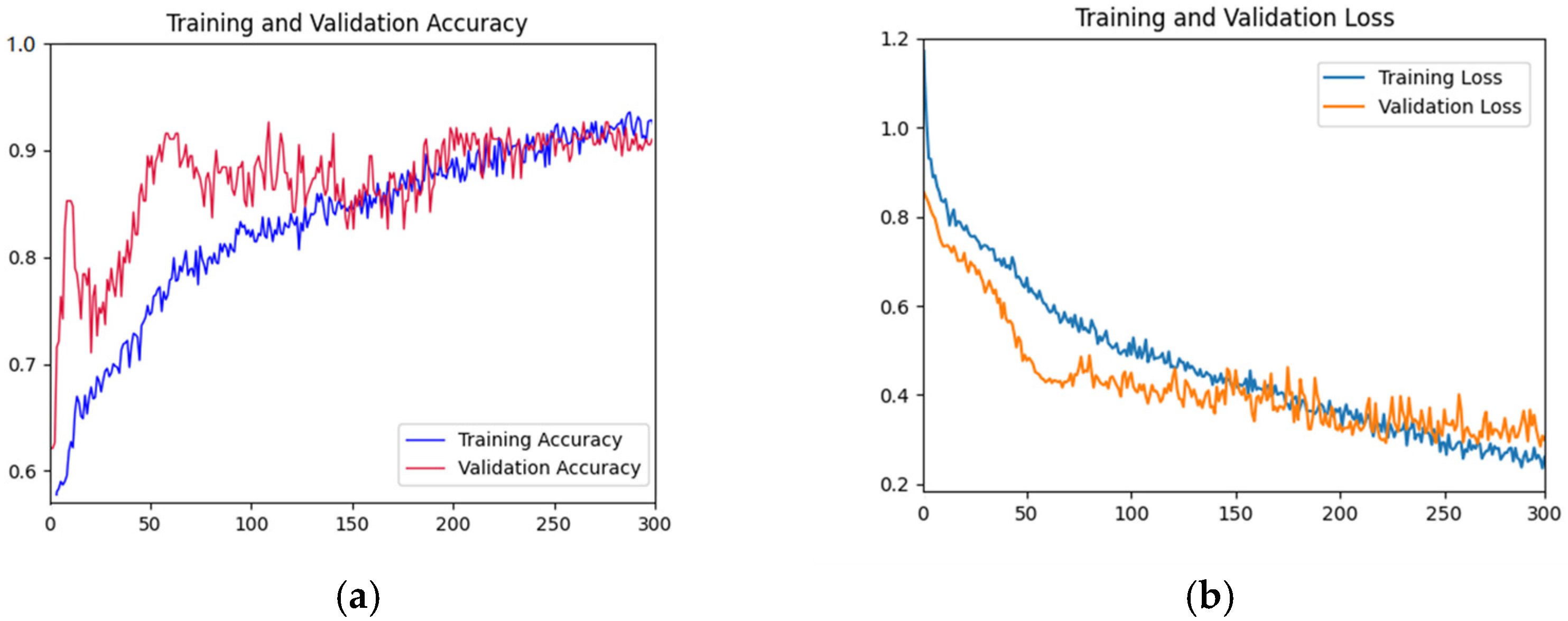

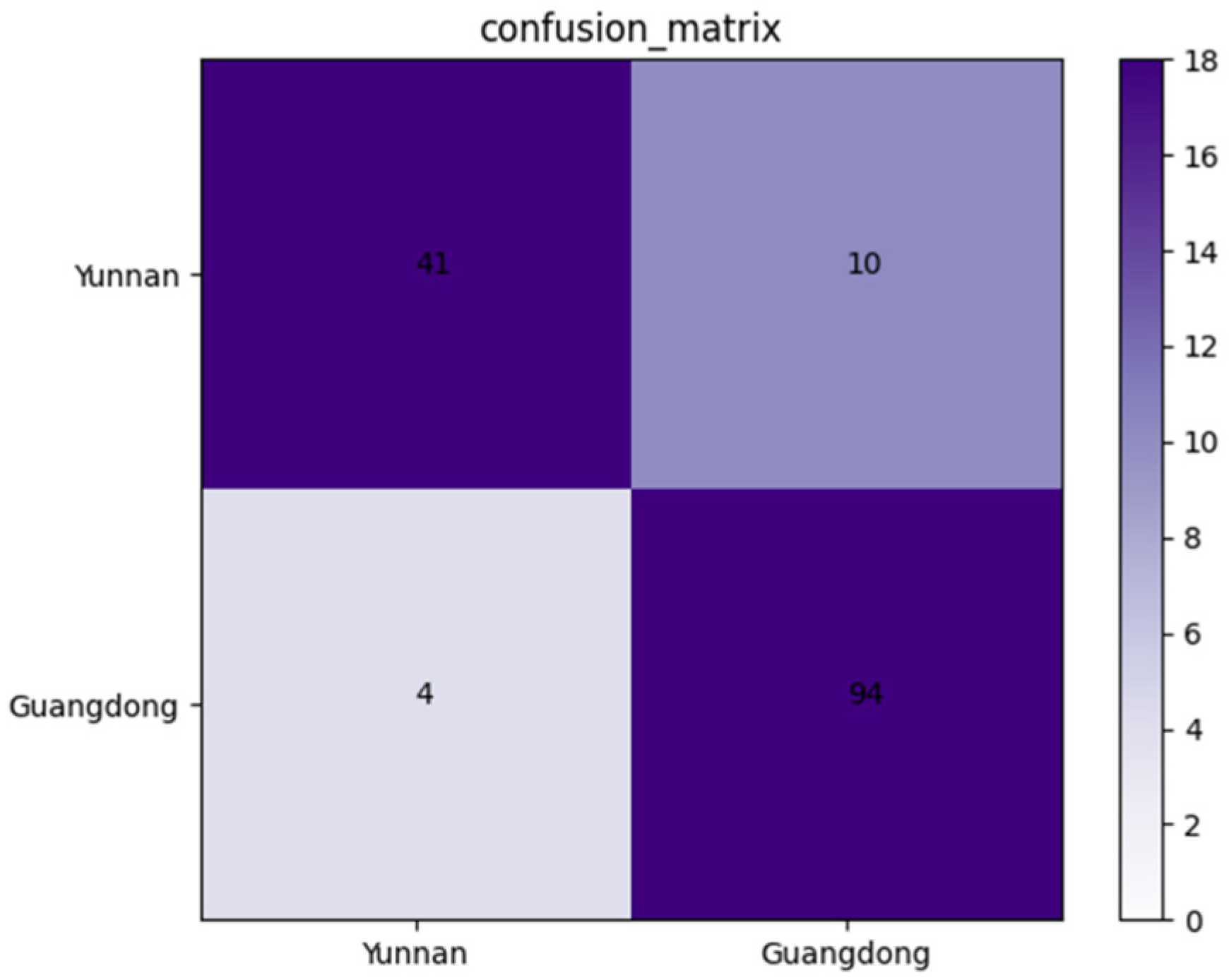

3.2. The Performance of AFNet in Distinguishing Places of Origin

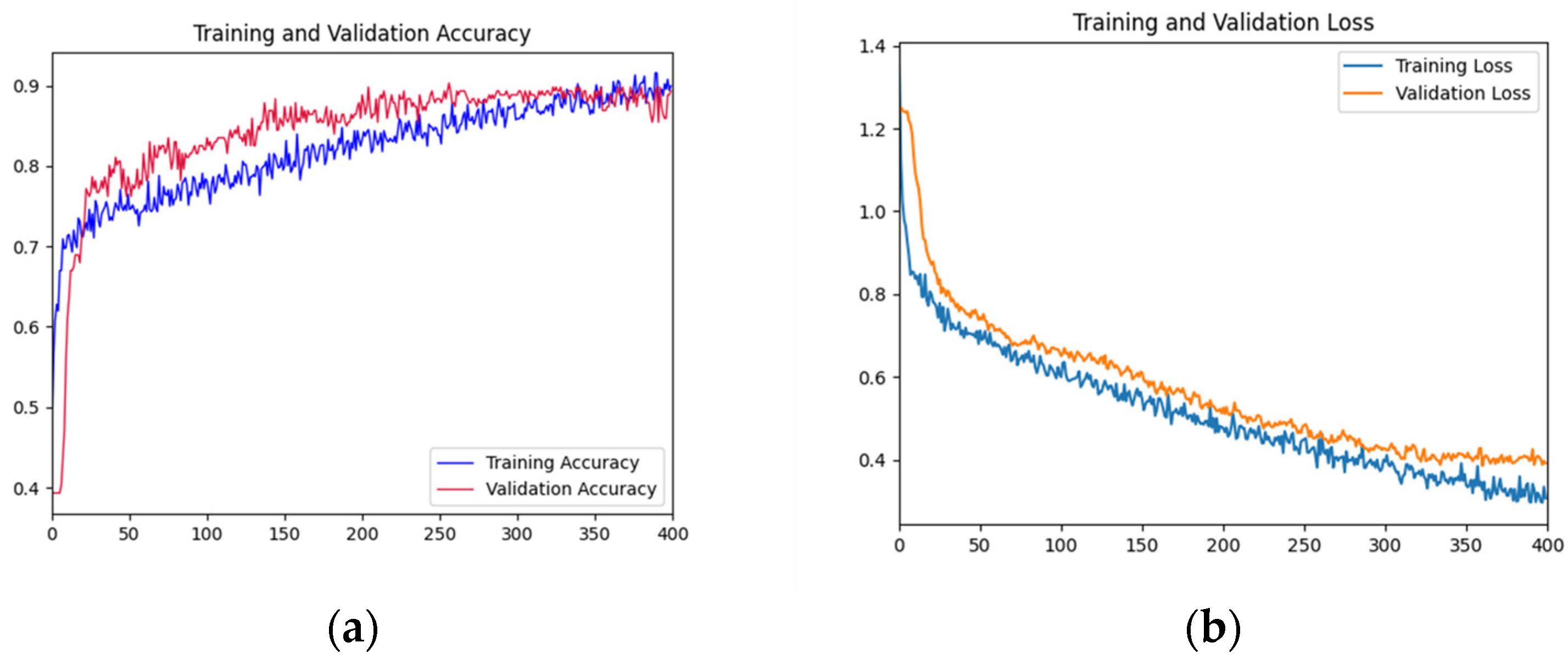

3.2.1. Accuracy and Loss Curve

3.2.2. Confusion Matrix of the Validation Dataset

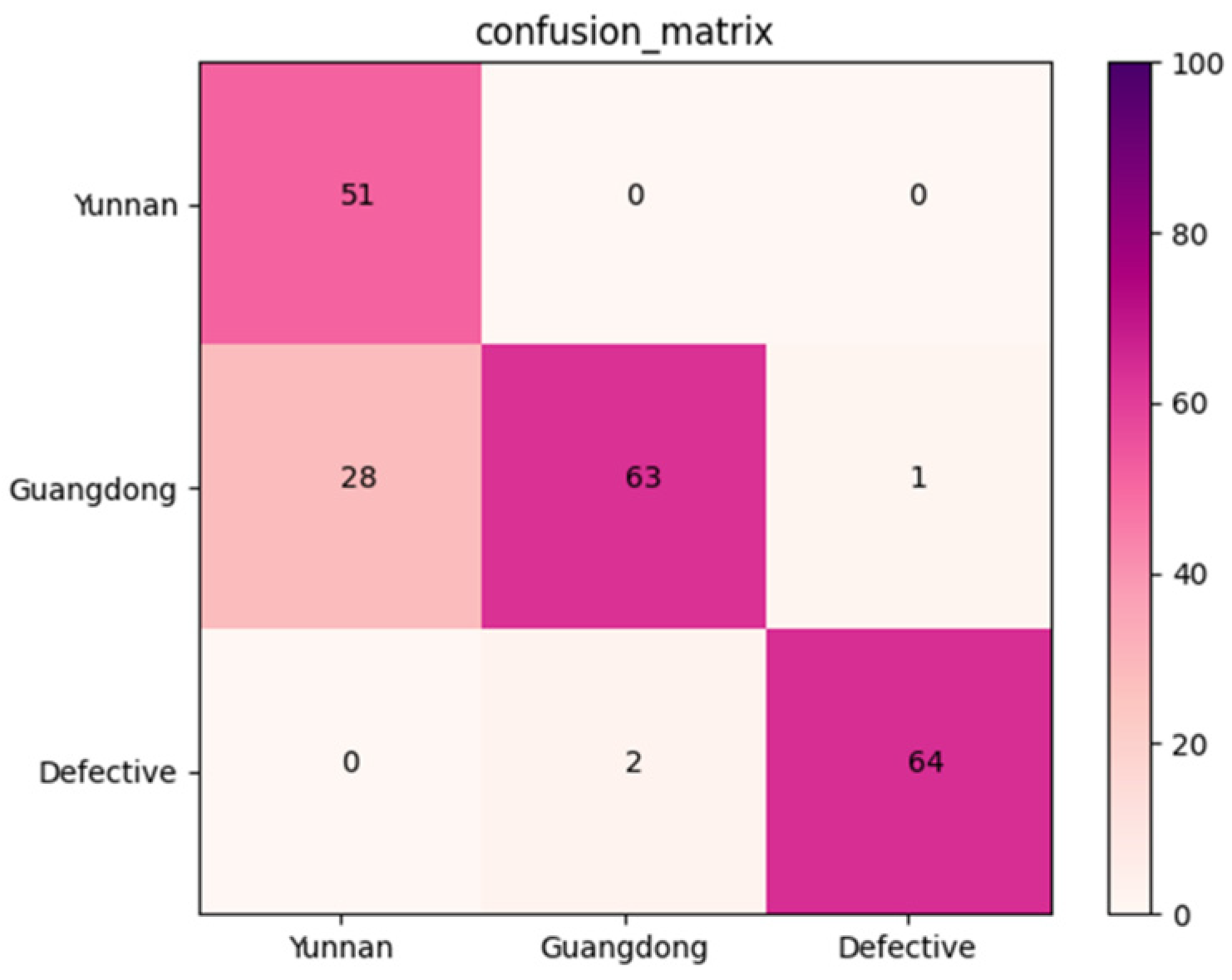

3.3. Performance of AFNet in Multi-Category Classification

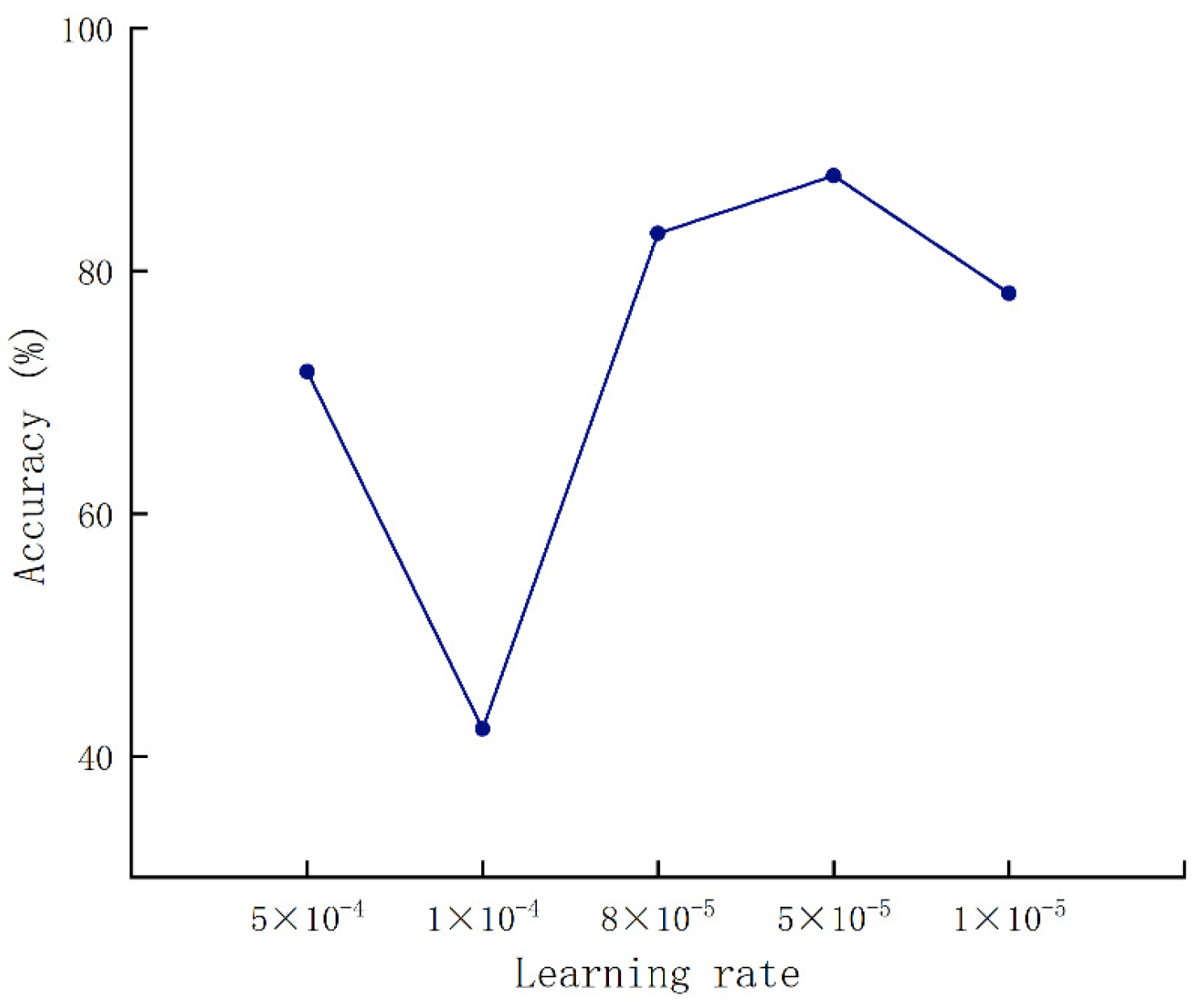

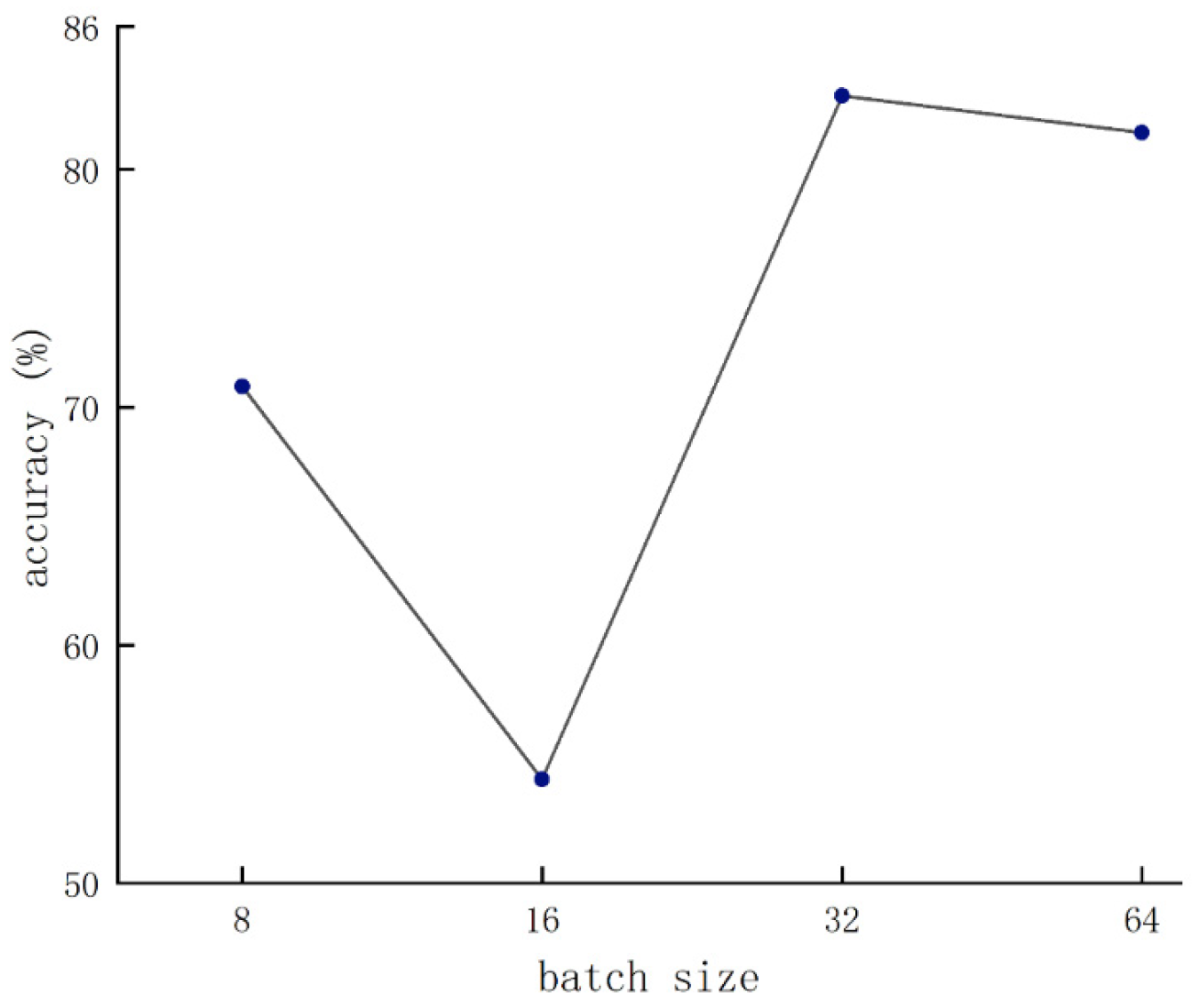

3.3.1. Parameter Optimization

3.3.2. Accuracy and Loss Curve

3.3.3. Confusion Matrix of the Validation Dataset

3.4. Comparison with Traditional CNN Model

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset Name | Categories | Total Size | Proportion |
|---|---|---|---|
| quality classification | Normal | 1430 | 3:1:1 |
| | Defective | 170 | |
| origin identification | Yunnan | 455 | 7:2:2 |
| | Guangdong | 594 | |
| three-category classification | Yunnan | 452 | 4:1:1 |
| | Guangdong | 587 | |
| | Defective | 101 | |
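The proportions in the dataset table can be turned into concrete subset sizes. The sketch below is illustrative only: it assumes each proportion describes a three-way partition of the whole dataset (the table does not name the subsets), and the helper `split_sizes` is hypothetical, not taken from the paper. Items left over after integer division go to the parts with the largest remainders.

```python
def split_sizes(total, ratio):
    """Split `total` items by an integer `ratio`; leftovers go to the largest remainders."""
    parts = sum(ratio)
    sizes = [total * r // parts for r in ratio]
    remainders = [total * r % parts for r in ratio]
    leftover = total - sum(sizes)
    # Hand out leftover items one at a time, largest remainder first.
    order = sorted(range(len(ratio)), key=lambda i: remainders[i], reverse=True)
    for i in order[:leftover]:
        sizes[i] += 1
    return sizes


# Totals are the per-category sizes from the table, summed per dataset.
datasets = {
    "quality classification": (1430 + 170, (3, 1, 1)),
    "origin identification": (455 + 594, (7, 2, 2)),
    "three-category classification": (452 + 587 + 101, (4, 1, 1)),
}

for name, (total, ratio) in datasets.items():
    print(name, total, split_sizes(total, ratio))
```

Because the defective class is much smaller than the normal class (170 against 1430), a per-class (stratified) split would probably be preferable in practice; that is a design suggestion, not something the table states.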

| Layers | Number of Filters / Dropout Rate | Size of Filters | Stride |
|---|---|---|---|
| Input | - | - | - |
| 1st Conv + ReLU | 4 | 3 × 3 | 1 |
| Dropout | 30% | - | - |
| 2nd Conv + ReLU | 8 | 3 × 3 | 1 |
| Dropout | 40% | - | - |
| 3rd Conv + ReLU | 16 | 3 × 3 | 1 |
| MaxPooling | - | 2 × 2 | 2 |
| Dropout | 50% | - | - |
| Flatten Layer | - | - | - |
| 1st Dense Layer | - | - | - |
| Dropout | 20% | - | - |
| 2nd Dense Layer | - | - | - |
| Dropout | 30% | - | - |
| 3rd Dense Layer | - | - | - |
| Dropout | 30% | - | - |
| Output Layer + Softmax | - | - | - |
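To give a sense of how small the convolutional stack in the layer table is, the following sketch counts its trainable parameters and tracks the feature-map size. Several values are assumptions that the table does not provide: a single input channel (grayscale X-ray image), no padding, and a hypothetical 64 × 64 input. The dense layers are left out because their widths are not listed.

```python
def conv2d_params(in_channels, out_channels, kernel=3):
    """Weights plus one bias per filter for a square-kernel 2D convolution."""
    return (kernel * kernel * in_channels + 1) * out_channels


def output_size(size, kernel, stride):
    """Spatial size after a convolution or pooling step with no padding (assumed)."""
    return (size - kernel) // stride + 1


FILTERS = [4, 8, 16]   # from the layer table
IN_CHANNELS = 1        # assumption: grayscale X-ray input
INPUT_SIZE = 64        # hypothetical input width and height

channels, size = IN_CHANNELS, INPUT_SIZE
conv_params = []
for n_filters in FILTERS:
    conv_params.append(conv2d_params(channels, n_filters))
    size = output_size(size, kernel=3, stride=1)   # 3 x 3 filters, stride 1
    channels = n_filters

size = output_size(size, kernel=2, stride=2)       # 2 x 2 max pooling, stride 2
flattened = size * size * channels                 # length of the flattened vector

print(conv_params, sum(conv_params), flattened)
```

Under these assumptions the three convolutional layers hold 40, 296, and 1168 parameters (1504 in total), so most of the model's capacity would sit in the dense layers that follow the flatten step.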

| No. | Model | Accuracy | Precision | Specificity |
|---|---|---|---|---|
| 1 | AFNet model | 96.33% | 96.27% | 100.0% |
| 2 | BSSNet model | 94.05% | 95.56% | 96.16% |
| 3 | VGG16 model | 96.13% | 96.86% | 97.89% |
| 4 | ResNet18 model | 94.33% | 93.38% | 99.29% |
| 5 | Inception model | 95.87% | 95.27% | 99.29% |
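The three measures in the comparison table can all be derived from a confusion matrix. The sketch below shows one common way to do so for a multi-class problem, treating each class one-vs-rest. The matrix values are invented for illustration and are not results from the paper, and the source does not state whether the reported figures are per-class or averaged.

```python
def accuracy(cm):
    """Fraction of samples on the diagonal of confusion matrix cm[true][predicted]."""
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(len(cm)))
    return correct / total


def one_vs_rest(cm, k):
    """Precision and specificity for class k against all other classes."""
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fp = sum(row[k] for row in cm) - tp   # other classes predicted as k
    fn = sum(cm[k]) - tp                  # class k predicted as something else
    tn = total - tp - fp - fn
    return {"precision": tp / (tp + fp), "specificity": tn / (tn + fp)}


# Hypothetical counts; rows are true classes, columns are predicted classes.
labels = ["Yunnan", "Guangdong", "Defective"]
cm = [
    [70, 5, 0],
    [4, 90, 1],
    [0, 1, 19],
]

print("accuracy:", round(accuracy(cm), 4))
for k, label in enumerate(labels):
    print(label, one_vs_rest(cm, k))
```

Specificity depends only on true negatives and false positives, so it can sit near 100% for a rare class even when precision for that class is lower.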


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Z.; Xue, Q.; Miao, P.; Li, C.; Liu, X.; Cheng, Y.; Miao, K.; Yu, Y.; Li, Z. Deep Learning Network of Amomum villosum Quality Classification and Origin Identification Based on X-ray Technology. Foods 2023, 12, 1775. https://doi.org/10.3390/foods12091775
