Abstract

The rapid detection of chestnut quality is a critical aspect of chestnut processing. However, traditional imaging methods struggle to detect chestnut quality because defects produce no visible symptoms on the epidermis. This study aims to develop a quick and efficient detection method using hyperspectral imaging (HSI, 935–1720 nm) and deep learning modeling for the qualitative and quantitative identification of chestnut quality. First, we used principal component analysis (PCA) to visualize chestnut quality qualitatively, and then applied three pre-processing methods to the spectra. To compare the accuracy of different models for chestnut-quality detection, both traditional machine learning models and deep learning models were constructed. The results showed that the deep learning models were more accurate, with FD-LSTM achieving the highest accuracy of 99.72%. Moreover, to improve model efficiency, the study identified important wavelengths for chestnut-quality detection at around 1000, 1400, and 1600 nm. After incorporating the important-wavelength identification process, the FD-UVE-CNN model achieved the highest accuracy of 97.33%. Using the important wavelengths as input to the deep learning network reduced recognition time by 39 s on average. After a comprehensive analysis, FD-UVE-CNN was determined to be the most effective model for chestnut-quality detection. This study suggests that deep learning combined with HSI has potential for chestnut-quality detection, and the results are encouraging.

1. Introduction

Chestnuts have a long history as an agricultural product and are widely distributed worldwide, although cultivation is concentrated mainly in Asia and Europe. China is a major producer and consumer of chestnuts, with a harvested area of 295,661 ha in 2021, according to FAO. Chestnuts are highly nutritious, containing 42.2–66.5% starch, 40.3–60.1% moisture, 9.5–23.4% total sugar, 4.8–9.6% crude protein, 2.2–3.7% crude fiber, 1.8–3.4% ash, and 2.8–3.2% fat, as well as essential amino acids and minerals [1]. In recent years, demand for chestnuts has increased significantly owing to the growing popularity of cooked chestnuts and chestnut-based foods. However, the market contains many low-quality chestnuts, affected by factors such as planting terrain, ripening time, and storage conditions. Once processed and sold, these low-quality chestnuts can pose a serious health risk to consumers.

In southern China, most chestnuts are grown in hills and mountains by individual farmers, from whom processing companies purchase them; no large-scale plantation parks have formed, and ripening times vary. Because of this lack of large-scale planting and the inconsistent ripening times, the quality of chestnuts procured by processing enterprises varies, and these enterprises urgently need technology that can identify chestnut quality.

The quality of chestnuts is affected by factors such as mold, stiffness, sweetness, and insects, among many others. Of these, mold has the greatest impact. The moisture content of chestnuts changes after harvest with storage time and environment, and because chestnuts are rich in nutrients and moisture, they are susceptible to mold and mildew during storage and transportation [2]. Unlike many other agricultural products, chestnuts have a hard shell, and mold often develops inside the nut without any obvious change in appearance, making internal mold difficult to distinguish with the naked eye [3]. This poses great challenges for chestnut processing and eating quality.

Several techniques are currently available for determining chestnut quality, including machine vision [4], near-infrared spectroscopy (NIR) [1], and computed tomography (CT) [3]. Machine vision is widely used to detect surface damage on agricultural products, but chestnut damage often occurs internally, where machine vision cannot detect it. X-ray–CT has produced good results in chestnut defect experiments [3,5]; for example, the accuracies for identifying healthy, severely defective, and slightly defective chestnuts were 0.929, 0.937, and 0.836, respectively [5]. However, CT cannot yet be applied to large-scale chestnut identification and grading in production, because its results require interpretation by trained professionals, which is costly and inefficient. In addition, HSI has a unique advantage over X-ray–CT in that it represents changes in near-infrared reflectance as image data [6]. The optical properties of chestnuts vary with quality, and even small changes in the diffuse reflectance coefficient caused by these optical properties can be detected directly, making HSI more sensitive than X-ray–CT to slight changes in chestnut quality. NIR has been combined with chemometric approaches for chestnut-quality classification [1], with the highest accuracy of 0.96 achieved using a linear discriminant analysis (LDA) model, but this approach was less efficient for detection than HSI.

Unlike the methods above, HSI obtains both spectral and spatial information about a sample over a wide spectral range, providing imaging data as well [7]. It is a non-contact, nondestructive, and rapid detection technique that exploits the fact that different constituents of a sample absorb light differently, so that defects appear with marked contrast in the images at specific wavelengths [8]. In addition, HSI captures many narrow, contiguous spectral bands across a continuous range [9]. With the rapid development of HSI in recent years, many machine learning and deep learning algorithms have been proposed for the nondestructive classification of agricultural products. For skin egg quality detection [10], PLSR combined with variable selection achieved a prediction coefficient of determination (R2) of 0.84. For lychee-browning detection [11], spectral data were collected separately on six days to build six lychee-identification models, and the highest coefficient of determination was 0.946. In a wheat mildew classification study [12], hyperspectral data were collected from wheat on the second and fifth days, and an optimal classification accuracy of 0.91 was achieved. Many studies have also combined spectroscopy with deep learning, for example for rice variety identification [13], salmon adulteration identification [7], and heavy metal detection in oilseed rape [14]. These HSI-based deep learning models all achieved accuracies of 0.9 or higher, which makes deep learning a very promising approach for identifying chestnut quality.

The overall goal of this study is to explore the feasibility of combining hyperspectral methods with deep learning networks for chestnut-quality classification and to find an optimal recognition model that can readily be applied in practical processing and production. The main contributions of this paper are as follows:

- The feasibility of the hyperspectral method combined with the deep learning model for chestnut quality classification was verified.

- To determine the optimal identification bands for chestnuts of different quality, the important bands were extracted and compared with the full-band spectra, verifying the effect of important-band classification on chestnut-quality detection.

- The visualization of chestnut-quality detection by hyperspectral data combined with principal component analysis is presented.

- The results suggest that hyperspectral detection could also be used to assess the quality of other agricultural products, such as grapefruit, lychee, peanut, and mangosteen, whose quality changes may not be easily observable by the naked eye.

2. Materials and Methods

2.1. Material Acquisition and Processing

To ensure that the chestnut samples had a uniform level of freshness, a total of 170 freshly picked chestnuts were provided for this study by chestnut-processing companies. The chestnuts were delivered by courier; the first day of storage (28 December 2022) was taken as the start of storage and recorded as “1st d”. Hyperspectral data were acquired on the first day of sample acquisition, after which the chestnuts were stored normally. The chestnuts were stored in semi-enclosed boxes at 13 °C and 67% humidity for the first thirty days and at 20 °C and 80% humidity for the second thirty days. Because the average temperature and humidity were significantly higher during the second thirty days, changes in chestnut quality were expected to accelerate during this stage [15]. Spectral data were acquired on the 1st, 30th, and 60th days of storage and labeled fresh, sub-fresh (slightly moldy), and rotten (severely moldy), respectively. These data were used for the subsequent experimental analysis.

2.2. Spectral Image Acquisition and Correction

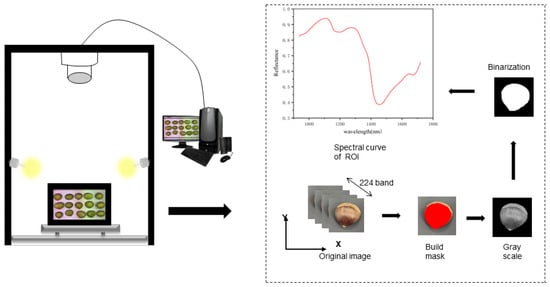

The HSI system mainly consists of a hyperspectral imaging lens (Specim FX17, Spectral Imaging Ltd., Oulu, Finland), a light source, a mobile platform, and a computer; the hyperspectral imaging lens operates in the near-infrared band (935–1720 nm). The lens has an image resolution of 640 × 640 px and a spectral resolution of 8 nm, and the light source is a 280 W halogen lamp. To obtain clear images, the distance between the lens and the sample was set to 32 cm; the workflow is shown in Figure 1. Data acquired by the spectral instrument are affected by dark-current noise and uneven illumination [6]. To improve data accuracy, the original image (Iraw) is corrected into a reflectance image (Ic) using a standard white reference image (Iwhite) and a dark reference image (Idark):

$$I_c = \frac{I_{raw} - I_{dark}}{I_{white} - I_{dark}} \qquad (1)$$

where Iraw is the original hyperspectral image, Ic is the corrected image, Iwhite is obtained using a white PTFE rod with almost 100% reflectivity, and Idark is obtained by completely covering the lens with an opaque cap.
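The white/dark reference correction can be sketched per pixel as below. This is a minimal illustration, not the authors' processing code: the function name `reflectance_correct`, the list-based layout (one value per band for a single pixel), and the `eps` guard against a zero denominator are assumptions, and the intensity values are hypothetical.

```python
def reflectance_correct(raw, white, dark, eps=1e-12):
    """Relative reflectance for one pixel: (raw - dark) / (white - dark), band by band.

    raw, white, dark: equal-length lists of intensities, one value per band.
    eps (an assumption) avoids dividing by zero when white and dark coincide.
    """
    if not (len(raw) == len(white) == len(dark)):
        raise ValueError("raw, white and dark must have the same number of bands")
    corrected = []
    for r, w, d in zip(raw, white, dark):
        denom = w - d
        # Bands where the two references coincide carry no usable signal; set them to 0
        corrected.append((r - d) / denom if abs(denom) > eps else 0.0)
    return corrected


# Hypothetical 3-band intensities for a single pixel
raw = [1200.0, 2500.0, 1800.0]
white = [4000.0, 4200.0, 3900.0]
dark = [200.0, 200.0, 200.0]
print(reflectance_correct(raw, white, dark))
```

A pixel equal to the white reference maps to 1 and one equal to the dark reference maps to 0, which is what removes the dark-current offset and the illumination profile.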

Figure 1.

Schematic diagram of hyperspectral acquisition system.

2.3. Moisture Loss Measurement

Immediately after each phase of spectral data collection, the samples were weighed using an electronic scale (longbei, Home Electronic Scale, Guangzhou, China). To ensure consistency, each chestnut was labeled with a serial number so that the same sample was measured each time. After each measurement, the samples were stored at room temperature. The weight of each sample on the 1st d was recorded as W0 and its weight on the 30th and 60th d as Wd, and the moisture loss was calculated as in Equation (2) [16]:

$$\text{Moisture loss}\,(\%) = \frac{W_0 - W_d}{W_0} \times 100 \qquad (2)$$
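As a worked example, the moisture-loss calculation can be sketched as follows, assuming Equation (2) takes the common relative-weight-loss form (W0 - Wd)/W0 × 100%. The function name and the sample weights are hypothetical and only illustrate the arithmetic.

```python
def moisture_loss_percent(w0, wd):
    """Moisture (weight) loss in % relative to the 1st-day weight w0 (assumed form)."""
    if w0 <= 0:
        raise ValueError("initial weight must be positive")
    return (w0 - wd) / w0 * 100.0


# Hypothetical weights (g) of one labeled chestnut on the 1st, 30th and 60th days
w_day1, w_day30, w_day60 = 10.0, 8.0, 7.5
for day, wd in ((30, w_day30), (60, w_day60)):
    print(f"day {day}: {moisture_loss_percent(w_day1, wd):.1f}% moisture loss")
```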

The mean, maximum, and variance data of the experimental sample weights are shown in Table 1.

Table 1.

Results of sample weight measurements.

2.4. Principal Component Analysis Method

Principal component analysis (PCA) is an unsupervised dimensionality-reduction method and an effective statistical tool for exploring and interpreting the sample space. It transforms a large number of variables into a smaller number of new latent variables, called principal components (PCs) [17]. Each sample has a score on each PC, and score scatter plots illustrate the relationships between samples, making the spectral data easier to interpret. In this study, PCA was used for cluster analysis of the spectral data of good-quality and poor-quality chestnuts, providing a qualitative analysis for chestnut-quality detection.
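The PCA step can be sketched with the standard library alone: mean-centre the spectra, form the sample covariance matrix, and extract the leading eigenvectors by power iteration with deflation. This is a minimal illustration, not the software used in the study; the function name, iteration count and spectra are hypothetical, and component signs are arbitrary.

```python
import math
import random


def pca(spectra, n_components=2, n_iter=500, seed=0):
    """PCA by power iteration with deflation on the sample covariance matrix.

    spectra: list of samples, each a list of values (one per band).
    Returns (scores, explained_variance_ratio); component signs are arbitrary.
    """
    n, p = len(spectra), len(spectra[0])
    means = [sum(row[j] for row in spectra) / n for j in range(p)]
    X = [[row[j] - means[j] for j in range(p)] for row in spectra]  # mean-centred data
    cov = [[sum(X[i][a] * X[i][b] for i in range(n)) / (n - 1) for b in range(p)]
           for a in range(p)]
    total_var = sum(cov[j][j] for j in range(p))
    rng = random.Random(seed)
    components, eigvals = [], []
    for _ in range(n_components):
        v = [rng.random() + 0.1 for _ in range(p)]
        norm = math.sqrt(sum(x * x for x in v))
        v = [x / norm for x in v]
        for _ in range(n_iter):
            w = [sum(cov[a][b] * v[b] for b in range(p)) for a in range(p)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-15:
                break  # remaining variance is (numerically) zero
            v = [x / norm for x in w]
        lam = sum(v[a] * sum(cov[a][b] * v[b] for b in range(p)) for a in range(p))
        components.append(v)
        eigvals.append(lam)
        # Deflation: remove the variance explained by this component
        cov = [[cov[a][b] - lam * v[a] * v[b] for b in range(p)] for a in range(p)]
    scores = [[sum(row[j] * c[j] for j in range(p)) for c in components] for row in X]
    ratios = [lam / total_var for lam in eigvals]
    return scores, ratios


# Hypothetical 4-band spectra for six chestnuts (illustrative values only)
spectra = [
    [0.30, 0.42, 0.35, 0.28],
    [0.32, 0.45, 0.37, 0.29],
    [0.35, 0.50, 0.40, 0.31],
    [0.41, 0.55, 0.47, 0.36],
    [0.44, 0.60, 0.49, 0.38],
    [0.47, 0.62, 0.53, 0.41],
]
scores, ratios = pca(spectra)
print("explained variance ratio:", [round(r, 3) for r in ratios])
```

Each row of `scores` is one point in a PC1/PC2 score plot, and `ratios` gives the share of total variance per component.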

2.5. Spectral Image Pre-Processing

Proper pre-processing of the spectral data can enhance chemically relevant peaks in the spectra and reduce the effects of baseline shift and overall curvature [18]. In this paper, three pre-processing methods are used: standard normal variate transformation (SNV), multiplicative scatter correction (MSC), and first-order derivative (FD). SNV can effectively eliminate scattering effects and provide high-quality data for building the identification model [19]. MSC can effectively eliminate spectral differences caused by different scattering levels and thus enhance the correlation between the spectra and the data [20]. FD amplifies the trends in the spectra by differentiating each spectrum with respect to wavelength [21]. These pre-processing methods are compared in this study to find the optimal one.
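A minimal standard-library sketch of the three pre-processing steps follows, assuming each spectrum is a list of reflectance values. The derivative uses simple forward differences, whereas derivative pre-processing is often implemented with a Savitzky–Golay filter; the paper does not state which variant was used. MSC uses the mean spectrum as reference unless another is supplied. Function names and data are hypothetical.

```python
import statistics


def snv(spectrum):
    """Standard normal variate: centre a spectrum on its own mean, scale by its own SD."""
    mu = statistics.mean(spectrum)
    sd = statistics.stdev(spectrum)
    return [(x - mu) / sd for x in spectrum]


def msc(spectra, reference=None):
    """Multiplicative scatter correction.

    Each spectrum x is fitted to the reference r by least squares, x ≈ a + b * r,
    and corrected as (x - a) / b. The mean spectrum is the default reference.
    """
    n_bands = len(spectra[0])
    if reference is None:
        reference = [sum(s[j] for s in spectra) / len(spectra) for j in range(n_bands)]
    r_mean = statistics.mean(reference)
    r_ss = sum((r - r_mean) ** 2 for r in reference)
    corrected = []
    for x in spectra:
        x_mean = statistics.mean(x)
        b = sum((r - r_mean) * (xi - x_mean) for r, xi in zip(reference, x)) / r_ss
        a = x_mean - b * r_mean
        corrected.append([(xi - a) / b for xi in x])
    return corrected


def first_derivative(spectrum, step=1.0):
    """First derivative by forward differences; the result has one fewer band."""
    return [(spectrum[i + 1] - spectrum[i]) / step for i in range(len(spectrum) - 1)]


# Hypothetical spectra: the second is a scaled and offset copy of the first
base = [0.30, 0.42, 0.35, 0.28, 0.33]
scattered = [0.05 + 1.2 * v for v in base]
print(snv(base))
print(msc([base, scattered], reference=base))
print(first_derivative(base))
```

The example shows the intended effect of each step: MSC maps the scaled and offset copy back onto the reference, and the derivative is unchanged by a constant baseline shift.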

2.6. Feature Selection Algorithm

Hyperspectral data are characterized by strong correlation between adjacent bands and high redundancy [22]. Appropriate feature-selection methods can effectively reduce the dimensionality of the spectral data and simplify the model. In this study, three feature-selection algorithms were used: competitive adaptive reweighted sampling (CARS), the successive projections algorithm (SPA), and uninformative variable elimination (UVE). SPA is a forward-selection algorithm designed for selecting spectral features; it selects the least redundant bands from the original spectrum to reduce the effect of collinearity between bands [23]. CARS combines Monte Carlo (MC) sampling with partial least-squares (PLS) regression and uses cross-validation (CV) to determine the variable subset with the lowest root-mean-square error [24]. The root-mean-square error of cross-validation (RMSECV) is calculated as follows:

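$$\mathrm{RMSECV} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - y_{cv,i}\right)^2} \qquad (3)$$

where y denotes the true value, ycv denotes the value predicted in CV, and n is the number of samples. UVE is a variable-selection method based on the stability analysis of PLS regression coefficients; it eliminates redundant or uninformative spectral variables [25].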
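The core projection step of SPA and the RMSECV criterion can be sketched as follows. This covers only the projection chain: a complete SPA also chooses the starting band and the number of selected bands, typically by comparing validation errors, and CARS and UVE are not shown. Function names and data are hypothetical, and the standard-library implementation is for illustration only.

```python
import math


def spa_chain(columns, start, n_select):
    """Projection chain of the successive projections algorithm (SPA).

    columns: band vectors, each a list of values across samples.
    Starting from band `start`, every unselected band is projected onto the
    subspace orthogonal to the most recently selected (projected) band, and the
    band with the largest remaining norm is selected next.
    """
    cols = [list(c) for c in columns]
    selected = [start]
    for _ in range(n_select - 1):
        xk = cols[selected[-1]]
        xk_sq = sum(v * v for v in xk)
        best, best_norm = None, -1.0
        for j in range(len(cols)):
            if j in selected:
                continue
            if xk_sq > 0:
                coef = sum(a * b for a, b in zip(cols[j], xk)) / xk_sq
                cols[j] = [a - coef * b for a, b in zip(cols[j], xk)]
            norm = math.sqrt(sum(v * v for v in cols[j]))
            if norm > best_norm:
                best, best_norm = j, norm
        selected.append(best)
    return selected


def rmsecv(y_true, y_cv):
    """Root-mean-square error of cross-validation over n samples."""
    n = len(y_true)
    return math.sqrt(sum((y - yc) ** 2 for y, yc in zip(y_true, y_cv)) / n)


# Hypothetical data: 3 samples x 4 bands; band 1 is almost collinear with band 0
bands = [[1.0, 0.0, 0.0], [1.0, 0.01, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(spa_chain(bands, start=0, n_select=3))
print(rmsecv([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```

Starting from band 0, the nearly collinear band 1 is left with almost no norm after projection, so the chain picks bands 2 and 3 instead, which is the redundancy-avoiding behavior described above.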

2.7. Traditional Machine Learning Methods

The partial least-squares discriminant analysis (PLS-DA) model solves classification problems in two stages. The first stage applies PLS components for dimensionality reduction, and the second stage builds the predictive model, i.e., the discriminant analysis. For classification, PLS-DA converts the categorical class variable into continuous variables and then calculates latent variable scores (LVS) that maximize covariance to fit the model [26].
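The conversion between class labels and continuous PLS responses can be illustrated with the sketch below. It assumes one-hot (dummy) coding and an argmax decision rule, which is one common PLS-DA convention (thresholding the predicted values is another); the PLS regression itself is not shown, and the predicted values are hypothetical.

```python
def encode_dummy(labels, classes):
    """One-hot (dummy) response matrix: one 0/1 column per class."""
    index = {c: i for i, c in enumerate(classes)}
    rows = []
    for label in labels:
        row = [0.0] * len(classes)
        row[index[label]] = 1.0
        rows.append(row)
    return rows


def decode_predictions(y_pred, classes):
    """Assign each sample to the class with the largest continuous PLS prediction."""
    return [classes[max(range(len(classes)), key=lambda j: row[j])] for row in y_pred]


classes = ["fresh", "sub-fresh", "rotten"]
Y = encode_dummy(["fresh", "rotten", "sub-fresh"], classes)
# Hypothetical continuous outputs of a fitted PLS model for three samples
y_pred = [[0.82, 0.25, -0.07], [0.10, 0.38, 0.61], [0.21, 0.66, 0.13]]
print(Y)
print(decode_predictions(y_pred, classes))
```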

Support vector machine (SVM) is a well-established method that mainly solves nonlinear classification, function-estimation, and pattern-recognition problems. Its basic idea is to map variables that cannot be separated in the original low-dimensional space into a high-dimensional feature space, where a hyperplane separates the training data with the maximum margin. In this study, the radial basis function (RBF), which handles nonlinear data well, is chosen as the kernel function [27]. Random forest (RF) is an effective method for analyzing high-dimensional data; it builds multiple decision trees in parallel and combines their results to produce an output [28]. In this paper, the number of decision trees and the minimum number of leaves are both set to 2.
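The RBF kernel mentioned above can be written out as a short sketch. The `gamma` parameter (kernel width) and the spectra are assumptions for illustration; the paper does not report the kernel parameters, and in practice the kernel is supplied to an SVM solver rather than used on its own.

```python
import math


def rbf_kernel(x, z, gamma):
    """Gaussian RBF kernel: k(x, z) = exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def gram_matrix(samples, gamma):
    """Kernel matrix K[i][j] = k(x_i, x_j) used by a kernel SVM."""
    return [[rbf_kernel(x, z, gamma) for z in samples] for x in samples]


# Hypothetical 3-band spectra of three chestnuts
samples = [[0.30, 0.42, 0.35], [0.31, 0.44, 0.36], [0.45, 0.60, 0.50]]
K = gram_matrix(samples, gamma=10.0)
for row in K:
    print([round(v, 4) for v in row])
```

Similar spectra get kernel values close to 1 and dissimilar spectra values closer to 0, which is what lets the SVM draw a nonlinear boundary in the original band space.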

2.8. Deep Learning Models

2.8.1. Convolutional Neural Networks

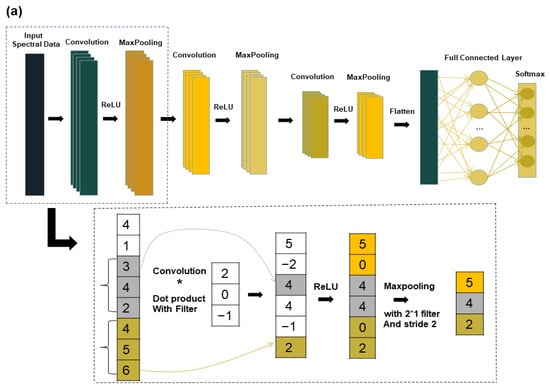

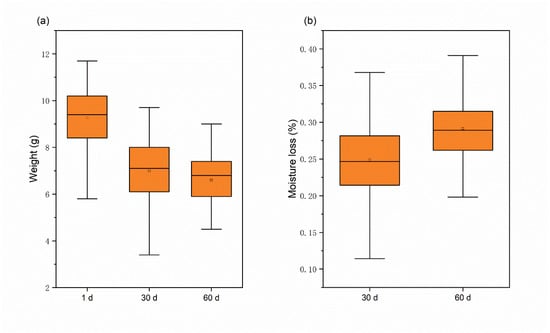

CNN is a feed-forward deep network, trained here in a supervised manner, that is commonly used in visual image and speech processing. With continued research, many applications of CNN to hyperspectral image classification have emerged in the field of spectroscopy [29]. A CNN consists of an input layer, convolutional layers, max-pooling layers, a fully connected layer, and an output layer. In this study, a chestnut-quality classification model called “1DCNN” is proposed and found to be effective; its structure is shown in Figure 2. The 1DCNN model comprises three convolutional layers with a kernel size of 2 × 1 and 16, 32, and 32 kernels, respectively. The stride and padding were set to 2 and 0, respectively. The max-pooling layer has a size of 2 × 1 and a stride of 1. The fully connected layer reduces the output to three neurons, one per quality class, and the output is finally passed through a softmax function. To speed up training and avoid the vanishing gradient problem, the rectified linear unit (ReLU) is used as the activation function [30] (Equation (4)). For classification problems, the cross-entropy function (Equation (5)) is commonly used as the loss function; it measures the distance between the actual output and the desired output [31].

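$$\mathrm{ReLU}(x) = \max(0, x) \qquad (4)$$

$$L_C = -\frac{1}{s}\sum_{i=1}^{s}\sum_{j=1}^{q} y_{ij}\log \tilde{y}_{ij} \qquad (5)$$

where LC represents the classification loss function; y is the label vector; ỹ is the predicted vector; s is the number of samples; and q is the number of classes. In a classification task, the softmax operator is applied to the network output to obtain the predicted probabilities, which are then compared with the true labels through the cross-entropy loss. The softmax operator is given in Equation (6):

$$\tilde{y}_{ij} = \frac{e^{z_{ij}}}{\sum_{k=1}^{q} e^{z_{ik}}} \qquad (6)$$

where z_ij is the output of the fully connected layer for sample i and class j.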
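The ReLU activation, softmax operator, and cross-entropy loss described above can be sketched in a few lines. This is a generic illustration, assuming a mean over samples in the loss and a small `eps` clip before the logarithm; it is not the MATLAB implementation used in the study, and the network outputs are hypothetical.

```python
import math


def relu(x):
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def softmax(z):
    """Convert class scores into probabilities (shifted by max(z) for numerical stability)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(labels, probs, eps=1e-12):
    """Mean cross-entropy over s samples and q classes.

    labels: one-hot rows y_i; probs: predicted probability rows.
    eps clips probabilities away from zero before taking the log.
    """
    s = len(labels)
    total = 0.0
    for y_row, p_row in zip(labels, probs):
        total -= sum(y * math.log(max(p, eps)) for y, p in zip(y_row, p_row))
    return total / s


# Hypothetical fully connected outputs for two chestnuts, three classes
logits = [[2.0, 0.5, -1.0], [0.2, 0.1, 1.5]]
probs = [softmax(row) for row in logits]
labels = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
print(probs)
print(cross_entropy(labels, probs))
print([relu(v) for v in (-1.5, 0.0, 2.0)])
```

ReLU is applied inside the hidden layers, while softmax is applied only to the final class scores before the loss is computed.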

Figure 2.

Deep learning model structure diagram: (a) CNN structure diagram; (b) LSTM structure diagram.
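To make the 1DCNN configuration concrete, the sketch below traces the feature length through the layers for a 224-band input (the number of wavelengths used in Section 3.4). It assumes that each convolutional layer is followed by one 2 × 1 max-pooling layer with stride 1, which the description does not state explicitly; the function names are hypothetical. The formula is the standard output-length rule for convolution and pooling windows, floor((L + 2P - K)/S) + 1.

```python
def out_len(length, kernel, stride, padding=0):
    """Output length of a 1-D convolution or pooling window: floor((L + 2P - K) / S) + 1."""
    return (length + 2 * padding - kernel) // stride + 1


def trace_1dcnn(n_bands=224, filters=(16, 32, 32)):
    """Return per-layer (name, length, channels) and the flattened size fed to the FC layer."""
    length = n_bands
    layers = []
    for i, n_filters in enumerate(filters, start=1):
        length = out_len(length, kernel=2, stride=2, padding=0)  # conv: 2 x 1 kernel, stride 2
        layers.append((f"conv{i}", length, n_filters))
        length = out_len(length, kernel=2, stride=1)  # max-pool: 2 x 1, stride 1 (assumed after each conv)
        layers.append((f"pool{i}", length, n_filters))
    return layers, length * filters[-1]


layers, flat = trace_1dcnn()
for name, length, channels in layers:
    print(f"{name}: {length} x {channels}")
print("flattened features ->", flat, "-> fully connected -> 3 classes")
```

Under these assumptions the sequence shrinks 224 → 112 → 111 → 55 → 54 → 27 → 26, giving 26 × 32 = 832 features for the fully connected layer; the stride-2 convolutions do most of the downsampling.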

2.8.2. Long Short-Term Memory Network

The long short-term memory network (LSTM) is a special kind of recurrent neural network (RNN) that solves the long-term dependency problem of general RNNs [32]. LSTM applies the same operations across all steps of a sequence and mitigates the vanishing gradient problem through its gating mechanism [33]. Owing to these advantages, the LSTM model has been increasingly used in the field of spectroscopy in recent years [9,34]. The LSTM model used in this study (Figure 2) consists of an input layer, two LSTM layers, and one fully connected layer. ReLU is chosen as the activation function to speed up learning and avoid vanishing gradients. The deep learning models in this study were implemented in MATLAB R2022a (MathWorks, Natick, MA, USA).
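The gating that lets an LSTM carry information along a sequence can be sketched as one standard LSTM cell step (forget, input, and output gates with sigmoid activations and a tanh candidate state). This is a generic textbook cell, not a reproduction of the MATLAB layers used in the paper; feeding each band of a spectrum as one time step, the hidden size, and the random weights are assumptions for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def lstm_step(x, h_prev, c_prev, params):
    """One step of a standard LSTM cell.

    params maps gate name ('f', 'i', 'g', 'o') to (W, U, b): W is hidden x input,
    U is hidden x hidden, b has one bias per hidden unit.
    """
    def pre(gate):
        W, U, b = params[gate]
        return [a + r + bias for a, r, bias in zip(matvec(W, x), matvec(U, h_prev), b)]

    f = [sigmoid(v) for v in pre("f")]    # forget gate: how much old cell state to keep
    i = [sigmoid(v) for v in pre("i")]    # input gate: how much new content to write
    g = [math.tanh(v) for v in pre("g")]  # candidate cell content
    o = [sigmoid(v) for v in pre("o")]    # output gate
    c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
    return h, c


def run_lstm(sequence, params, hidden_size):
    """Run the cell over a sequence and return the final hidden state."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h


rng = random.Random(42)
input_size, hidden_size = 1, 4


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


params = {gate: (rand_matrix(hidden_size, input_size),
                 rand_matrix(hidden_size, hidden_size),
                 [0.0] * hidden_size) for gate in "figo"}
spectrum = [0.31, 0.35, 0.42, 0.40, 0.33]  # hypothetical reflectance values
h_last = run_lstm([[v] for v in spectrum], params, hidden_size)
print([round(v, 4) for v in h_last])
```

The additive cell-state update (forget-gated old state plus input-gated new content) is what lets gradients flow across many steps without vanishing as quickly as in a plain RNN.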

3. Results and Discussion

3.1. Change in Water Content

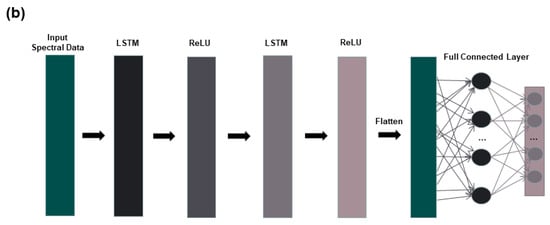

Weight measurements of fresh, sub-fresh, and rotten chestnuts showed that the moisture content of chestnuts decreased as storage time increased, and that moisture was lost significantly faster during the first 30 days than during the last 30 days. As shown in Figure 3, weight loss and water loss increased with storage time, but water loss slowed between days 30 and 60, probably because chestnut mold develops and multiplies rapidly during this period [35]. After 60 days of storage at room temperature, the average weight of the chestnuts had decreased by 2.68 g and the average moisture loss was 29.1%, indicating a large difference in moisture between rotten chestnuts stored for a long time and fresh chestnuts.

Figure 3.

Weight and moisture loss of chestnuts: (a) weight variation; (b) moisture loss.

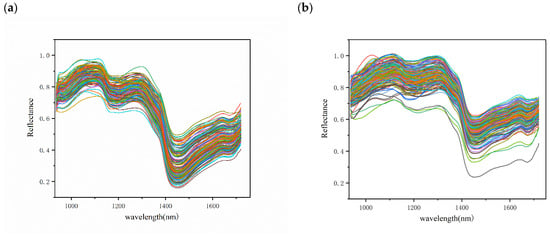

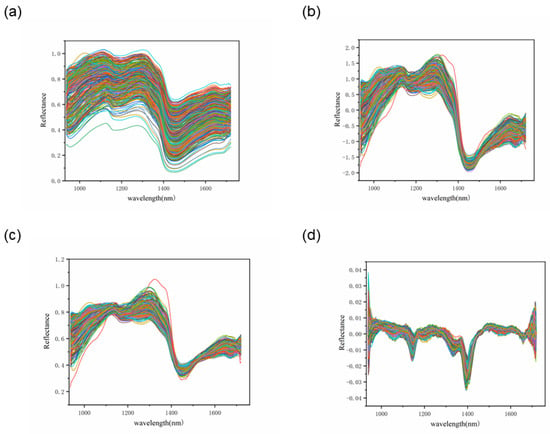

3.2. Spectral Overview

The average spectra of chestnuts of different quality are presented in Figure 4, covering the NIR band (935–1720 nm). In general, the spectra of the three chestnut qualities share the same waveform, with similar peaks and troughs, because the internal composition of the chestnuts is consistent [22], but their reflectance values differ. The spectra of sub-fresh and rotten chestnuts were more scattered, which may reflect different degrees of quality change among individual chestnuts.

Figure 4.

Reflectance spectra of different quality chestnuts: (a) fresh chestnuts; (b) sub-fresh chestnuts; (c) rotten chestnuts; (d) average spectrum.

Compared with fresh chestnuts, sub-fresh chestnuts showed higher reflectance at the water-absorption peaks associated with the O–H bond at 970 and 1450 nm (Figure 4) [36]. This agrees with the moisture loss measurements, which showed rapid moisture loss during the first thirty days of storage and hence a large difference in the water absorption peaks. During the second thirty days, moisture was likely produced by the rapid multiplication of chestnut mold, as observed in other studies of chestnut mold [35]. The absorption peak at 1200 nm may be related to the C–H bonds of sugars and starch [37]. Although the average spectra of chestnuts of different quality differ, the full set of spectra shows that these differences are driven by large variations among individual chestnuts that are averaged into the mean spectrum. Therefore, a more reliable way to identify chestnuts with different degrees of quality change needs to be established.

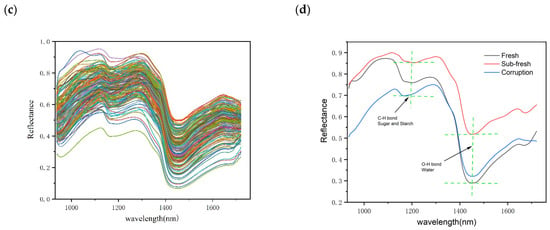

3.3. PCA Qualitative Analysis

Before building the models, the raw spectral data were visualized and qualitatively clustered using PCA. The first two principal components explained 79.8% (PC1) and 17.6% (PC2) of the variance, for a cumulative contribution of 97.4%, so PC1 and PC2 were used for the cluster analysis of the chestnut spectral data. As shown in the PCA score plot in Figure 5, the three chestnut quality classes are largely separated, as indicated by their confidence ellipses. There is some overlap between fresh and sub-fresh chestnuts and a small overlap between sub-fresh and rotten chestnuts, which may be caused by the different rates of quality change among individual chestnuts.
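The ellipses in a PCA score plot are commonly derived from the covariance of each class's (PC1, PC2) scores. A minimal sketch of such a covariance ellipse follows, assuming a 95% level (chi-square quantile 5.991 for 2 degrees of freedom) and normally distributed scores; the confidence level used for Figure 5 is not stated, and the score values are hypothetical.

```python
import math

CHI2_95_2DOF = 5.991  # 95% quantile of the chi-square distribution with 2 degrees of freedom


def confidence_ellipse(points, chi2=CHI2_95_2DOF):
    """Centre, semi-axis lengths and major-axis angle (radians) of a covariance ellipse.

    points: list of (PC1, PC2) scores for one class.
    """
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    # Closed-form eigenvalues of the 2 x 2 covariance matrix
    half_trace = (sxx + syy) / 2
    root = math.sqrt(((sxx - syy) / 2) ** 2 + sxy ** 2)
    lam_major, lam_minor = half_trace + root, max(half_trace - root, 0.0)
    angle = 0.5 * math.atan2(2 * sxy, sxx - syy)
    axes = (math.sqrt(chi2 * lam_major), math.sqrt(chi2 * lam_minor))
    return (mx, my), axes, angle


# Hypothetical PC1/PC2 scores for one quality class
fresh_scores = [(-2.1, 0.4), (-1.8, 0.1), (-2.5, 0.7), (-1.6, -0.2), (-2.0, 0.3)]
centre, axes, angle = confidence_ellipse(fresh_scores)
print(centre, axes, math.degrees(angle))
```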

Figure 5.

PCA score graph of chestnut samples.

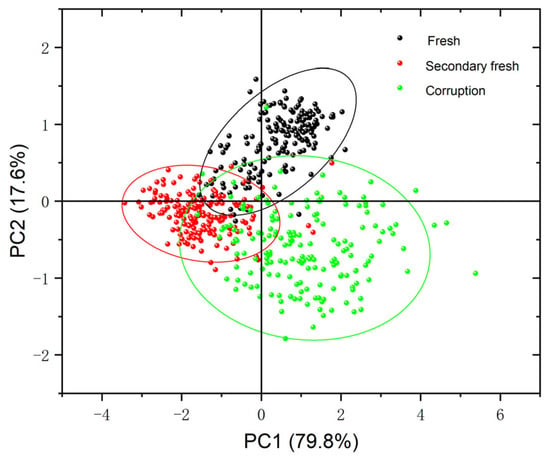

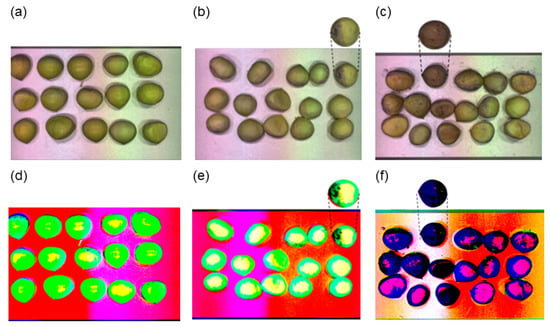

For the visualization of the hyperspectral data, the first three principal component images were obtained by PCA dimensionality reduction (Figure 6). There was no obvious difference between the original images of fresh and sub-fresh chestnuts, whereas their principal component images differed slightly, and moldy areas on sub-fresh chestnuts could be distinguished more intuitively in the principal component images. The principal component images of sub-fresh and rotten (moldy) chestnuts differ significantly, which further illustrates the feasibility of using spectra to differentiate chestnut quality.

Figure 6.

Sample raw images and principal component images: (a) fresh chestnuts; (b) sub-fresh chestnuts; (c) rotten chestnuts; (d–f) images of the first 3 principal components of the corresponding quality chestnut.

3.4. Chestnut Quality Identification Results Using Machine Learning Models

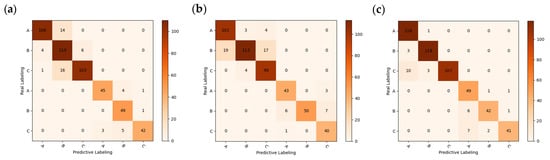

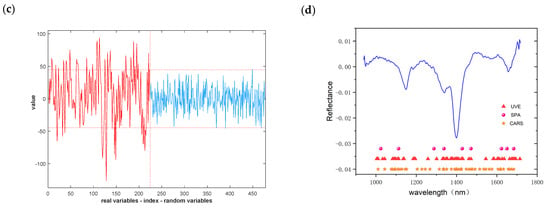

Chestnut-quality classification models were developed using all 224 wavelengths of the raw (RAW) spectra and of the spectra processed by the three pre-processing methods, SNV, MSC, and FD (Figure 7). PLS-DA, SVM, and RF models were used to identify fresh (1st d), sub-fresh (30th d), and rotten (60th d) chestnuts. The data were divided into training and test sets in a 3:1 ratio, and the samples for RF were shuffled to improve generalization. The recognition results of the PLS-DA, SVM, and RF models for the different pre-processing methods are shown in Table 2, and Figure 8 shows the test-set confusion matrices of the better-performing models.
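A 3:1 split with shuffling can be sketched as below. Stratifying by class (so that each quality grade keeps the same 3:1 proportion) and the fixed random seed are assumptions; the paper states only the ratio and that the data were shuffled. Names and labels are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.75, seed=0):
    """Shuffle sample indices within each class, then split them train:test = 3:1."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    train, test = [], []
    for label in sorted(by_class):
        idxs = by_class[label][:]
        rng.shuffle(idxs)
        cut = round(len(idxs) * train_fraction)
        train.extend(idxs[:cut])
        test.extend(idxs[cut:])
    rng.shuffle(train)  # mix classes so training batches are not ordered by grade
    rng.shuffle(test)
    return train, test


# Hypothetical labels: 40 spectra per quality grade
labels = ["fresh"] * 40 + ["sub-fresh"] * 40 + ["rotten"] * 40
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```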

Figure 7.

Pre-processing curves: (a) RAW; (b) SNV; (c) MSC; (d) FD.

Table 2.

Recognition results of machine learning models based on different pre-processing methods.


| Model | RAW Train (%) | RAW Test (%) | SNV Train (%) | SNV Test (%) | MSC Train (%) | MSC Test (%) | FD Train (%) | FD Test (%) |
|---|---|---|---|---|---|---|---|---|
| PLS-DA | 88.61 | 90.67 | 88.89 | 90 | 88.89 | 90 | 93.33 | 93.33 |
| RF | 95.27 | 88 | 95.28 | 89.33 | 95.56 | 91.33 | 96.39 | 94 |
| SVM | 87.45 | 87.06 | 93.73 | 93.33 | 77.84 | 76.27 | 88.43 | 88.43 |

Figure 8.

Confusion matrix for different recognition models: (a) PLS-DA + RAW; (b) SVM + RAW; (c) RF + RAW; (d) RF + MSC; (e) RF + FD; (f) SVM + SNV.

In most cases, pre-processing the spectra improved model accuracy. The RF + SNV and RF + MSC approaches achieved 95.28% and 95.56% on the training set, respectively, while RF + FD and SVM + SNV achieved the highest test-set accuracies of 94% and 93.33%, respectively. Overall, both the PLS-DA and RF models gave good accuracy.

Confusion matrices were used to further analyze misclassification within each model, with labels A, B, and C representing fresh, sub-fresh, and rotten chestnuts, respectively. Overall, A and B are easily confused with each other, and C is also sometimes misclassified as A or B. This mirrors the behavior of the spectral curves: fresh and sub-fresh chestnuts have similar curves, whereas the curves of rotten chestnuts are considerably more dispersed, making rotten chestnuts more likely to be misclassified as fresh or sub-fresh. Because the spectral curves are so similar, traditional classification models struggle to reach satisfactory accuracy directly [9], so deep learning approaches are needed to achieve satisfactory accuracy.

3.5. Chestnut Quality Recognition Results Using Deep Learning Models

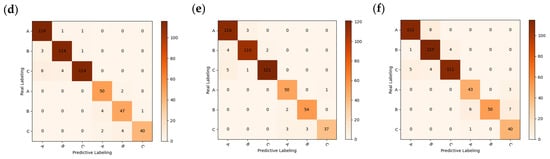

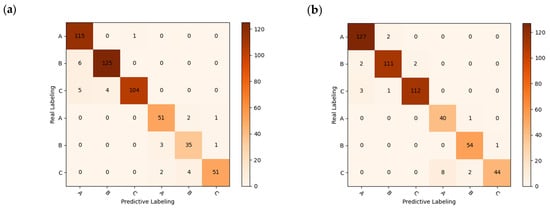

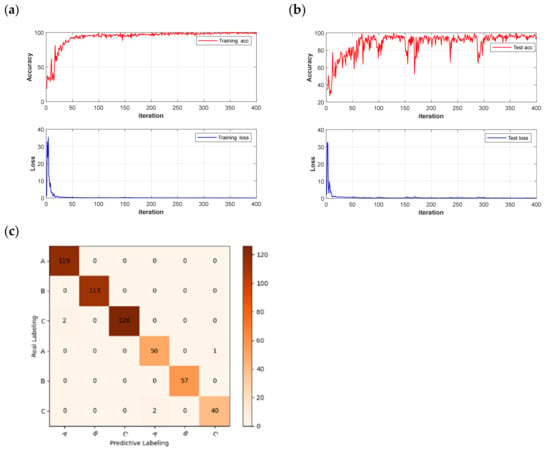

The dataset was partitioned as before, and the data were shuffled to enhance the models' generalization ability. CNN and LSTM deep learning models were built to rapidly detect chestnuts of different quality. The models were trained for 500 rounds with a piecewise learning-rate schedule: a learning rate of 0.01 for the first 80% of iterations, reduced by a factor of 0.1 for the last 20%. The recognition performance of the models is shown in Table 3. The deep learning models performed significantly better than the traditional machine learning models, consistent with potato-disease detection, where CNN models outperformed traditional models [38]. In general, the CNN model also outperformed the LSTM model in this experiment. After pre-processing, the CNN models achieved 100% accuracy on the training set, and MSC-CNN achieved the highest CNN test accuracy of 98.67%. However, FD-LSTM achieved the highest test accuracy overall, 99.72%; its accuracy and loss curves are shown in Figure 9.
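The learning-rate schedule can be sketched as a small function. It assumes that "0.1" is a multiplicative drop factor (so the rate falls from 0.01 to 0.001) and that the switch happens after 400 of the 500 rounds. In MATLAB this kind of schedule is typically configured through the piecewise options of `trainingOptions` (`LearnRateSchedule`, `LearnRateDropFactor`, `LearnRateDropPeriod`), but the exact settings used in the study are not reported.

```python
def piecewise_lr(round_idx, total_rounds=500, base_lr=0.01, drop_factor=0.1, drop_fraction=0.8):
    """Learning rate at a 0-based training round: base_lr for the first 80% of rounds,
    then base_lr * drop_factor for the remaining 20%."""
    switch = int(total_rounds * drop_fraction)
    return base_lr if round_idx < switch else base_lr * drop_factor


for r in (0, 399, 400, 499):
    print(r, piecewise_lr(r))
```

A larger rate early on moves the weights quickly, and the smaller rate in the final stage lets training settle near a minimum instead of oscillating around it.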

Table 3.

The results of chestnut-quality recognition based on deep learning models.


| Model | Average Running Time | RAW Train (%) | RAW Test (%) | SNV Train (%) | SNV Test (%) | MSC Train (%) | MSC Test (%) | FD Train (%) | FD Test (%) |
|---|---|---|---|---|---|---|---|---|---|
| CNN | 50 s | 99.17 | 96 | 100 | 96.67 | 100 | 98.67 | 100 | 98.33 |
| LSTM | 56 s | 98.33 | 95.33 | 99.44 | 97.33 | 98.33 | 94 | 99.33 | 99.72 |

Figure 9.

Loss function and accuracy curve of LSTM models: (a) training set; (b) test set.

Although deep learning networks improve recognition accuracy, they require many training iterations and have low recognition efficiency, with an average running time of 53 s. For practical use, it is therefore important to reduce the amount of input information and improve recognition efficiency by screening characteristic spectral wavelengths.

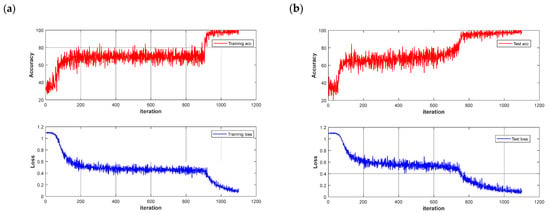

3.6. Identification of Important Wavelengths

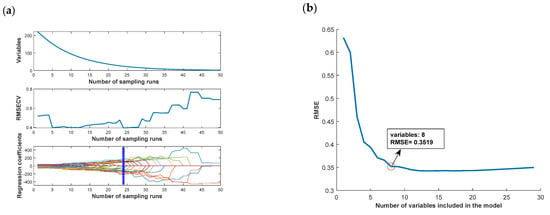

The traditional classification models did not achieve satisfactory accuracy, and although the deep learning models achieved good recognition accuracy, their recognition efficiency was low. In this paper, we identify important wavelengths through spectral feature selection to improve the recognition efficiency of the deep learning models and, at the same time, the accuracy of the traditional models. Feature selection was performed with the SPA, CARS, and UVE algorithms on FD-pre-processed spectra, since FD performed well in both the traditional classification models and the deep learning models, with RAW spectra used as a control.

The wavelengths extracted by the different algorithms are shown in Figure 10. Although different wavelength-identification algorithms were used, the selected wavelengths are mostly concentrated around 1000, 1400, and 1600 nm, which can therefore be used as important wavelengths for chestnut-quality identification.
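To relate band indices to the wavelength regions above, the sketch below assigns a nominal wavelength to each of the 224 bands and picks the bands lying within a window around 1000, 1400, and 1600 nm. Uniform band spacing across 935–1720 nm and the ±30 nm window are assumptions for illustration; actual band centres come from the camera's wavelength calibration, and this is not the selection procedure used by SPA, CARS, or UVE.

```python
def band_wavelengths(n_bands=224, start_nm=935.0, end_nm=1720.0):
    """Nominal centre wavelength of each band, assuming uniform spacing."""
    step = (end_nm - start_nm) / (n_bands - 1)
    return [start_nm + i * step for i in range(n_bands)]


def bands_near(centres_nm, wavelengths, half_width_nm=30.0):
    """Indices of bands within ±half_width_nm of any of the given centre wavelengths."""
    return [i for i, wl in enumerate(wavelengths)
            if any(abs(wl - c) <= half_width_nm for c in centres_nm)]


wl = band_wavelengths()
important = bands_near([1000.0, 1400.0, 1600.0], wl)
print(f"band spacing ≈ {wl[1] - wl[0]:.2f} nm; {len(important)} bands in the three regions")
```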

Figure 10.

Important wavelength extraction results: (a) CARS algorithm; (b) SPA algorithm; (c) UVE algorithm; (d) important wavelength distribution.

3.7. Important Wavelength-Identification Results

RF and CNN, the more accurate of the machine learning and deep learning models, respectively, were used in further studies; the results are shown in Tables 4 and 5, and the RF confusion matrices in Figure 11. Compared with the full-spectrum models, the test accuracy of the RF model on RAW spectra improved slightly after important-wavelength selection. The RAW-UVE-CNN model showed a slight increase in test accuracy, while the FD-UVE-CNN model showed a slight decrease, which is likely caused by the reduced number of network input parameters.

Table 4.

Results of different important wavelength recognition algorithms based on RF.


| Method | Input Variables (RAW, FD) | RAW Train (%) | RAW Test (%) | FD Train (%) | FD Test (%) |
|---|---|---|---|---|---|
| SPA | 9, 9 | 96.67 | 91.33 | 94.44 | 87.33 |
| CARS | 19, 44 | 93.89 | 90 | 96.94 | 92 |
| UVE | 121, 54 | 97.5 | 89.66 | 96.94 | 90 |

Input variables: number of input variables to the RF model for the RAW spectra and the FD-pre-processed spectra, respectively.

Table 5.

Results of different important wavelength recognition algorithms based on CNN.


| Method | Input Variables (RAW, FD) | Time | RAW Train (%) | RAW Test (%) | FD Train (%) | FD Test (%) |
|---|---|---|---|---|---|---|
| SPA | 9, 9 | 8 s | 98.89 | 95.33 | 100 | 97.33 |
| CARS | 19, 44 | 10 s | 98.33 | 94 | 99.17 | 94 |
| UVE | 121, 54 | 11 s | 98.61 | 97.33 | 99.44 | 98 |

Figure 11.

Confusion matrix for RF models: (a) RAW-SPA; (b) FD-CARS.

Although identifying important wavelengths may slightly decrease the accuracy of the deep learning network, it significantly reduces the network's recognition time and improves its recognition efficiency. The model results and confusion matrix are shown in Figure 12. The average running time of the deep learning network was reduced from 50 s to 11 s. This improvement in recognition efficiency supports the practical application of chestnut-quality detection.

Figure 12.

Model results and confusion matrix: (a) CNN training set accuracy and loss function; (b) CNN test set accuracy and loss function; (c) FD-UVE-CNN model confusion matrix.

4. Discussion

In food processing, it is crucial to identify chestnut quality quickly and non-destructively. Previous studies have addressed the identification of chestnut quality, mold, and origin, and this paper builds on that work with a more in-depth study. Among similar studies, a backpropagation neural network (BPNN) classified healthy and moldy chestnuts with an accuracy of 0.99 [35], and chestnut origin identification using the 1D-CNN and PLS-DA algorithms achieved an accuracy of 0.971 in both cases [39]. In addition, HSI combined with deep learning achieved an overall classification accuracy of 0.958 in nut-quality assessment [40]. This paper improves on prior chestnut-quality detection research in the following ways. First, a more detailed quality classification divides chestnuts into three groups, and recent deep learning methods are applied to further improve accuracy. Second, the moisture loss of chestnuts is shown to be strongly correlated with the water absorption peak at 1450 nm, which increases as chestnuts are stored over time. Third, the wavelength identification algorithms SPA, CARS, and UVE show that the key wavelengths for chestnuts are concentrated mainly around 1000, 1400, and 1600 nm. Based on this wavelength distribution, multispectral equipment could be developed to make chestnut-quality inspection more accessible and efficient.
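The reported link between moisture loss and the 1450 nm water band can be quantified as a Pearson correlation between each sample's moisture loss and its spectral value at the band nearest 1450 nm. The sketch below is a minimal illustration on synthetic data only; the helper names `nearest_band` and `band_correlation`, the band count (256 bands over the 935 to 1720 nm range), and all data values are hypothetical, and the paper's own correlation analysis may differ.

```python
import numpy as np


def nearest_band(wavelengths, target_nm):
    """Index of the spectral band whose centre is closest to target_nm."""
    return int(np.argmin(np.abs(np.asarray(wavelengths) - target_nm)))


def band_correlation(spectra, wavelengths, response, target_nm=1450.0):
    """Pearson r between one band (samples x bands array) and a per-sample response."""
    idx = nearest_band(wavelengths, target_nm)
    r = np.corrcoef(spectra[:, idx], response)[0, 1]
    return float(wavelengths[idx]), float(r)


# Hypothetical synthetic data: 40 chestnuts, 256 bands spanning 935-1720 nm.
rng = np.random.default_rng(0)
wavelengths = np.linspace(935.0, 1720.0, 256)
moisture_loss = rng.uniform(0.0, 10.0, size=40)       # synthetic moisture loss values
spectra = rng.normal(0.5, 0.01, size=(40, 256))
idx = nearest_band(wavelengths, 1450.0)
spectra[:, idx] += 0.02 * moisture_loss               # inject a synthetic 1450 nm effect
band_nm, r = band_correlation(spectra, wavelengths, moisture_loss)
print(f"band {band_nm:.1f} nm, r = {r:.3f}")
```

Only the sign and strength of `r` on real measurements would support the paper's claim; the synthetic effect injected here merely shows the mechanics.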

5. Conclusions

This paper combined HSI with traditional classification models and deep learning models to detect chestnut quality. By comparing the recognition performance of different models combined with different pre-processing methods, the best model and the important wavelengths for chestnut-quality detection were identified. Across the pre-processing methods and classification models tested, FD-RF achieved the highest accuracy among the traditional classification models (94%), and FD-LSTM achieved the highest accuracy among the deep learning models (99.72%).

Furthermore, wavelength selection was used to identify the important wavelengths for chestnut-quality identification, which were mainly distributed around 1000, 1400, and 1600 nm. In the RF model, using these wavelengths slightly increased the accuracy obtained with the raw spectra. When they were used as input to the deep learning network, accuracy decreased slightly, but the recognition time was significantly reduced (by 39 s on average).

Overall, this paper investigated the feasibility of using hyperspectral imaging combined with deep learning models for chestnut-quality detection. Identifying the important wavelengths improved the efficiency of the hyperspectral detection method, and the resulting FD-UVE-CNN model achieved both high accuracy (97.33%) and high recognition efficiency (11 s). These findings suggest that deep learning models have potential for practical chestnut-quality detection.

Author Contributions

Data collection and analysis, Q.Z. and H.Z.; writing—original draft, software, algorithm analysis, Q.Z.; software, formal algorithm analysis, P.L. and S.T.; formal algorithm analysis, C.L.; experimental design, data analysis, L.Z.; writing—review and editing, methodology, funding acquisition, model analysis, N.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Heyuan Branch, Guangdong Laboratory for Lingnan Modern Agriculture Project (DT20220030), the Maoming Science and Technology Plan (2022S034), the Guangdong Science and Technology Plan (2021XNYNYKJHZGJ001, 2021B1212040009), and the Qingyuan Science and Technology Plan (2022KJJH063).

Data Availability Statement

The datasets generated for this study are available from the corresponding author on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hu, J.; Ma, X.; Liu, L.; Wu, Y.; Ouyang, J. Rapid Evaluation of the Quality of Chestnuts Using Near-Infrared Reflectance Spectroscopy. Food Chem. 2017, 231, 141–147.

2. Liu, J.; Li, X.; Li, P.; Wang, W.; Zhang, J.; Zhou, W.; Zhou, Z. Non-Destructive Measurement of Sugar Content in Chestnuts Using Near-Infrared Spectroscopy. In Computer and Computing Technologies in Agriculture IV; Li, D., Liu, Y., Chen, Y., Eds.; IFIP Advances in Information and Communication Technology; Springer: Berlin/Heidelberg, Germany, 2011; Volume 347, pp. 246–254. ISBN 978-3-642-18368-3.

3. Donis-González, I.R.; Guyer, D.E.; Pease, A.; Fulbright, D.W. Relation of Computerized Tomography Hounsfield Unit Measurements and Internal Components of Fresh Chestnuts (Castanea spp.). Postharvest Biol. Technol. 2012, 64, 74–82.

4. Xiao, Z.; Wang, J.; Han, L.; Guo, S.; Cui, Q. Application of Machine Vision System in Food Detection. Front. Nutr. 2022, 9, 888245.

5. Wang, J.; Lu, Z.; Xiao, X.; Xu, M.; Lin, Y.; Dai, H.; Liu, X.; Pi, F.; Han, D. Non-Destructive Determination of Internal Defects in Chestnut (Castanea mollissima) during Postharvest Storage Using X-Ray Computed Tomography. Postharvest Biol. Technol. 2023, 196, 112185.

6. Zhang, P.; Wang, H.; Ji, H.; Li, Y.; Zhang, X.; Wang, Y. Hyperspectral Imaging-Based Early Damage Degree Representation of Apple: A Method of Correlation Coefficient. Postharvest Biol. Technol. 2023, 199, 112309.

7. Li, P.; Tang, S.; Chen, S.; Tian, X.; Zhong, N. Hyperspectral Imaging Combined with Convolutional Neural Network for Accurately Detecting Adulteration in Atlantic Salmon. Food Control 2023, 147, 109573.

8. Xu, P.; Sun, W.; Xu, K.; Zhang, Y.; Tan, Q.; Qing, Y.; Yang, R. Identification of Defective Maize Seeds Using Hyperspectral Imaging Combined with Deep Learning. Foods 2022, 12, 144.

9. Pang, L.; Wang, L.; Yuan, P.; Yan, L.; Yang, Q.; Xiao, J. Feasibility Study on Identifying Seed Viability of Sophora Japonica with Optimized Deep Neural Network and Hyperspectral Imaging. Comput. Electron. Agric. 2021, 190, 106426.

10. Chen, Y.; Wang, Q.; Fan, W.; Xu, B. Non-Destructive Determination and Visualization of Gel Springiness of Preserved Eggs during Pickling through Hyperspectral Imaging. Food Biosci. 2023, 53, 102605.

11. Yang, Y.-C.; Sun, D.-W.; Wang, N.-N. Rapid Detection of Browning Levels of Lychee Pericarp as Affected by Moisture Contents Using Hyperspectral Imaging. Comput. Electron. Agric. 2015, 113, 203–212.

12. Xuan, G.; Li, Q.; Shao, Y.; Shi, Y. Early Diagnosis and Pathogenesis Monitoring of Wheat Powdery Mildew Caused by Blumeria Graminis Using Hyperspectral Imaging. Comput. Electron. Agric. 2022, 197, 106921.

13. Meng, Y.; Yuan, W.; Aktilek, E.U.; Zhong, Z.; Wang, Y.; Gao, R.; Su, Z. Fine Hyperspectral Classification of Rice Varieties Based on Self-Attention Mechanism. Ecol. Inform. 2023, 75, 102035.

14. Zhou, X.; Zhao, C.; Sun, J.; Cao, Y.; Yao, K.; Xu, M. A Deep Learning Method for Predicting Lead Content in Oilseed Rape Leaves Using Fluorescence Hyperspectral Imaging. Food Chem. 2023, 409, 135251.

15. Tan, Z.L.; Wu, M.C.; Li, J.; Wang, Q.Z. Decay Mechanism of the Chestnut Stored in Low Temperature. Adv. Mater. Res. 2012, 554–556, 1337–1345.

16. Zhang, Y.; Wu, J.; Wang, A. Comparison of Various Approaches for Estimating Leaf Water Content and Stomatal Conductance in Different Plant Species Using Hyperspectral Data. Ecol. Indic. 2022, 142, 109278.

17. Gewers, F.L.; Ferreira, G.R.; Arruda, H.F.D.; Silva, F.N.; Comin, C.H.; Amancio, D.R.; Costa, L.D.F. Principal Component Analysis: A Natural Approach to Data Exploration. ACM Comput. Surv. 2022, 54, 1–34.

18. Zhao, Y.; Zhang, C.; Zhu, S.; Gao, P.; Feng, L.; He, Y. Non-Destructive and Rapid Variety Discrimination and Visualization of Single Grape Seed Using Near-Infrared Hyperspectral Imaging Technique and Multivariate Analysis. Molecules 2018, 23, 1352.

19. Guan, H.; Yu, M.; Ma, X.; Li, L.; Yang, C.; Yang, J. A Recognition Method of Mushroom Mycelium Varieties Based on Near-Infrared Spectroscopy and Deep Learning Model. Infrared Phys. Technol. 2022, 127, 104428.

20. Fearn, T.; Riccioli, C.; Garrido-Varo, A.; Guerrero-Ginel, J.E. On the Geometry of SNV and MSC. Chemom. Intell. Lab. Syst. 2009, 96, 22–26.

21. Yang, Y.; Zhao, Y.; Zuo, Z.; Wang, Y. Determination of Total Flavonoids for Paris polyphylla var. yunnanensis in Different Geographical Origins Using UV and FT-IR Spectroscopy. J. AOAC Int. 2019, 102, 457–464.

22. Wieme, J.; Mollazade, K.; Malounas, I.; Zude-Sasse, M.; Zhao, M.; Gowen, A.; Argyropoulos, D.; Fountas, S.; Van Beek, J. Application of Hyperspectral Imaging Systems and Artificial Intelligence for Quality Assessment of Fruit, Vegetables and Mushrooms: A Review. Biosyst. Eng. 2022, 222, 156–176.

23. Araújo, M.C.U.; Saldanha, T.C.B.; Galvão, R.K.H.; Yoneyama, T.; Chame, H.C.; Visani, V. The Successive Projections Algorithm for Variable Selection in Spectroscopic Multicomponent Analysis. Chemom. Intell. Lab. Syst. 2001, 57, 65–73.

24. Sánchez-Esteva, S.; Knadel, M.; Kucheryavskiy, S.; de Jonge, L.W.; Rubæk, G.H.; Hermansen, C.; Heckrath, G. Combining Laser-Induced Breakdown Spectroscopy (LIBS) and Visible Near-Infrared Spectroscopy (Vis-NIRS) for Soil Phosphorus Determination. Sensors 2020, 20, 5419.

25. Wang, Z.; Chen, J.; Zhang, J.; Tan, X.; Ali Raza, M.; Ma, J.; Zhu, Y.; Yang, F.; Yang, W. Assessing Canopy Nitrogen and Carbon Content in Maize by Canopy Spectral Reflectance and Uninformative Variable Elimination. Crop J. 2022, 10, 1224–1238.

26. Mansuri, S.M.; Chakraborty, S.K.; Mahanti, N.K.; Pandiselvam, R. Effect of Germ Orientation during Vis-NIR Hyperspectral Imaging for the Detection of Fungal Contamination in Maize Kernel Using PLS-DA, ANN and 1D-CNN Modelling. Food Control 2022, 139, 109077.

27. Qiu, Z.; Chen, J.; Zhao, Y.; Zhu, S.; He, Y.; Zhang, C. Variety Identification of Single Rice Seed Using Hyperspectral Imaging Combined with Convolutional Neural Network. Appl. Sci. 2018, 8, 212.

28. Hu, Y.; Sun, J.; Zhan, C.; Huang, P.; Kang, Z. Identification and Quantification of Adulterated Tieguanyin Based on the Fluorescence Hyperspectral Image Technique. J. Food Compos. Anal. 2023, 120, 105343.

29. Zhang, M.; Li, W.; Du, Q. Diverse Region-Based CNN for Hyperspectral Image Classification. IEEE Trans. Image Process. 2018, 27, 2623–2634.

30. Lee, W.; Lenferink, A.T.M.; Otto, C.; Offerhaus, H.L. Classifying Raman Spectra of Extracellular Vesicles Based on Convolutional Neural Networks for Prostate Cancer Detection. J. Raman Spectrosc. 2020, 51, 293–300.

31. Kong, D.; Shi, Y.; Sun, D.; Zhou, L.; Zhang, W.; Qiu, R.; He, Y. Hyperspectral Imaging Coupled with CNN: A Powerful Approach for Quantitative Identification of Feather Meal and Fish by-Product Meal Adulterated in Marine Fishmeal. Microchem. J. 2022, 180, 107517.

32. Zhou, F.; Hang, R.; Liu, Q.; Yuan, X. Hyperspectral Image Classification Using Spectral-Spatial LSTMs. Neurocomputing 2019, 328, 39–47.

33. Gers, F.A.; Schraudolph, N.N.; Schmidhuber, J. Learning Precise Timing with LSTM Recurrent Networks. J. Mach. Learn. Res. 2002, 3, 115–143.

34. Kang, R.; Park, B.; Ouyang, Q.; Ren, N. Rapid Identification of Foodborne Bacteria with Hyperspectral Microscopic Imaging and Artificial Intelligence Classification Algorithms. Food Control 2021, 130, 108379.

35. Feng, L.; Zhu, S.; Lin, F.; Su, Z.; Yuan, K.; Zhao, Y.; He, Y.; Zhang, C. Detection of Oil Chestnuts Infected by Blue Mold Using Near-Infrared Hyperspectral Imaging Combined with Artificial Neural Networks. Sensors 2018, 18, 1944.

36. Moscetti, R.; Haff, R.P.; Saranwong, S.; Monarca, D.; Cecchini, M.; Massantini, R. Nondestructive Detection of Insect Infested Chestnuts Based on NIR Spectroscopy. Postharvest Biol. Technol. 2014, 87, 88–94.

37. Nicolaï, B.M.; Defraeye, T.; De Ketelaere, B.; Herremans, E.; Hertog, M.L.A.T.M.; Saeys, W.; Torricelli, A.; Vandendriessche, T.; Verboven, P. Nondestructive Measurement of Fruit and Vegetable Quality. Annu. Rev. Food Sci. Technol. 2014, 5, 285–312.

38. Qi, C.; Sandroni, M.; Cairo Westergaard, J.; Høegh Riis Sundmark, E.; Bagge, M.; Alexandersson, E.; Gao, J. In-Field Classification of the Asymptomatic Biotrophic Phase of Potato Late Blight Based on Deep Learning and Proximal Hyperspectral Imaging. Comput. Electron. Agric. 2023, 205, 107585.

39. Li, X.; Jiang, H.; Jiang, X.; Shi, M. Identification of Geographical Origin of Chinese Chestnuts Using Hyperspectral Imaging with 1D-CNN Algorithm. Agriculture 2021, 11, 1274.

40. Han, Y.; Liu, Z.; Khoshelham, K.; Bai, S.H. Quality Estimation of Nuts Using Deep Learning Classification of Hyperspectral Imagery. Comput. Electron. Agric. 2021, 180, 105868.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).