We define the task of personalized healthy food recommendation as follows: given the knowledge graph constructed in Section 3, the goal is to learn a prediction function with trainable parameters that captures both the user's preferences and health requirements and outputs the matching degree between a user and a recipe.

5.1. Preliminary

Before detailing the proposed model, this section introduces the data preprocessing steps and gives an overview of how the model works.

To construct the positive and negative sample sets for user preference, we take the recipes each user has interacted with as that user's positive sample set and the recipes the user has not interacted with as the negative sample set.
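A minimal Python sketch of this split, assuming interactions arrive as (user, recipe) ID pairs; the function name and data layout are illustrative, not taken from the paper:

```python
from typing import Dict, Iterable, Set, Tuple


def preference_sample_sets(
    interactions: Iterable[Tuple[str, str]], all_recipes: Set[str]
) -> Dict[str, Tuple[Set[str], Set[str]]]:
    """Return {user: (positive_set, negative_set)} from (user, recipe) pairs (hypothetical helper)."""
    interacted: Dict[str, Set[str]] = {}
    for user, recipe in interactions:
        interacted.setdefault(user, set()).add(recipe)
    # Positives: recipes the user interacted with; negatives: every other recipe.
    return {user: (pos, all_recipes - pos) for user, pos in interacted.items()}


sets = preference_sample_sets(
    [("u1", "r1"), ("u1", "r3"), ("u2", "r2")], {"r1", "r2", "r3"}
)
```

In practice the negative set is usually sampled from rather than materialized, since it contains almost every recipe for each user.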

To construct the positive and negative sample sets for user health, the Nutrient Discrepancy Score calculation method proposed in Section 4 is first applied to compute the Nutrient Discrepancy Score between every user and every recipe in the recommendation dataset. Based on these scores, a healthy positive sample set and a healthy negative sample set are defined for each user: if the Nutrient Discrepancy Score between a user and a recipe is greater than or equal to 36, the recipe is added to that user's healthy negative sample set. The healthy sample sets defined here are used in the health learning task of the model.
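The sketch below applies the threshold of 36 stated above to precomputed Nutrient Discrepancy Scores. The text only gives the rule for the negative set; treating every recipe below the threshold as a healthy positive is an ASSUMPTION made here for illustration, and the helper name is hypothetical.

```python
from typing import Dict, Set, Tuple

NDS_NEGATIVE_THRESHOLD = 36.0  # from the text: score >= 36 -> healthy negative sample


def health_sample_sets(
    nds: Dict[Tuple[str, str], float]
) -> Dict[str, Tuple[Set[str], Set[str]]]:
    """Build {user: (healthy_positive, healthy_negative)} from {(user, recipe): score}.

    ASSUMPTION: recipes scoring below the threshold are treated as healthy positives.
    """
    result: Dict[str, Tuple[Set[str], Set[str]]] = {}
    for (user, recipe), score in nds.items():
        pos, neg = result.setdefault(user, (set(), set()))
        (neg if score >= NDS_NEGATIVE_THRESHOLD else pos).add(recipe)
    return result


nds = {("u1", "r1"): 12.5, ("u1", "r2"): 36.0, ("u1", "r3"): 51.2, ("u2", "r1"): 40.0}
health_sets = health_sample_sets(nds)
```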

The multi-task learning stage of the model learns users' preferences and health needs through the preference learning task and the health learning task. Both tasks use the Bayesian Personalized Ranking (BPR) loss [43] as the loss function [44] to optimize the model parameters. The optimization goal is to rank the recipes in the positive sample set above those in the negative sample set. In deep learning, a loss function measures the gap between the model's predictions and the true results; during training, the optimization algorithm repeatedly adjusts the model's parameters to decrease the loss, bringing the predictions closer to the targets.
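For reference, the BPR loss on one (positive, negative) pair is $-\ln \sigma(\hat{y}_{\text{pos}} - \hat{y}_{\text{neg}})$. A minimal Python sketch, using a numerically stable form of $-\ln \sigma(x) = \ln(1 + e^{-x})$; the function names are illustrative:

```python
import math
from typing import Sequence


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -ln(sigmoid(x)) = ln(1 + exp(-x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def bpr_loss(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Mean BPR loss over paired (positive, negative) predicted scores."""
    if len(pos_scores) != len(neg_scores) or not pos_scores:
        raise ValueError("need equally many positive and negative scores")
    total = sum(neg_log_sigmoid(p - n) for p, n in zip(pos_scores, neg_scores))
    return total / len(pos_scores)
```

The loss equals $\ln 2$ when the two scores tie, shrinks toward 0 as the positive outscores the negative, and grows when the ranking is inverted, which is what drives positives above negatives during training.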

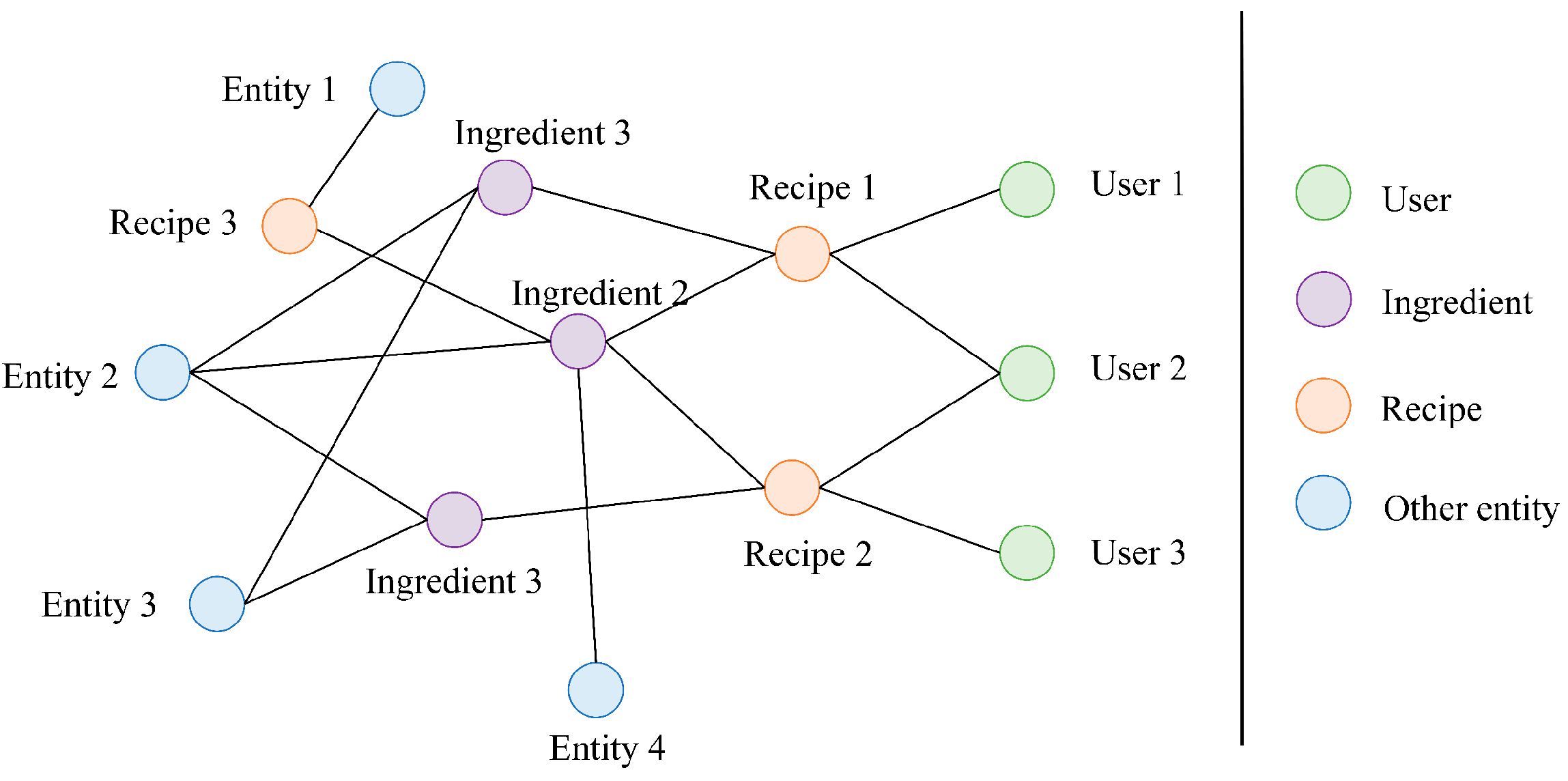

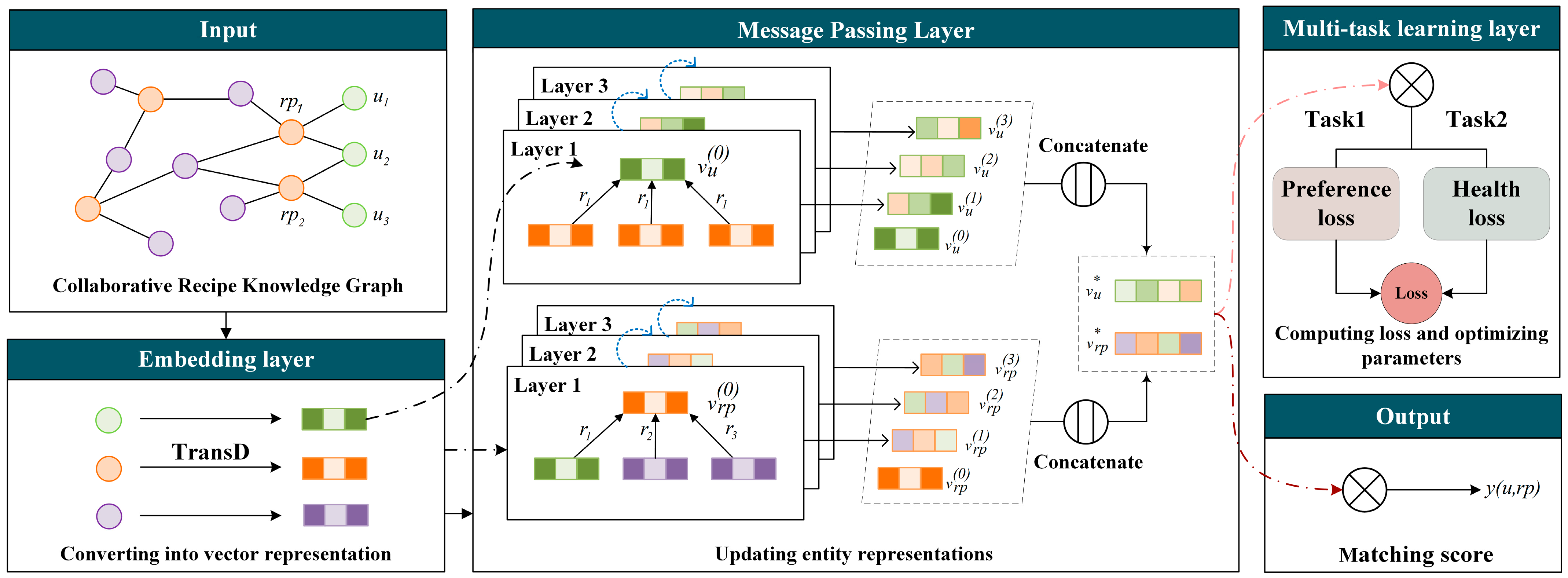

The overall framework and workflow of the model are introduced below. As illustrated in Figure 2, the healthy food recommendation model consists of three components: (1) the embedding layer, which maps each entity and relation in the knowledge graph to a vector through knowledge graph embedding; (2) the message passing layer, which uses a knowledge-aware attention graph convolutional network to iteratively update each entity's vector representation with messages received from its neighborhood; and (3) the multi-task learning layer, which performs the preference learning task and the health learning task.

The input of the model is the knowledge graph constructed in Section 3. First, in the embedding layer, all entities and relations in the knowledge graph are embedded as vectors [45] so that they can be processed numerically. The vector corresponding to an entity or relation is also called its representation. Then, in the message passing layer, each entity in the knowledge graph iteratively receives information from the neighboring entities connected to it and weights the information from different neighbors according to relation attention coefficients. This process is known as message passing. Through multiple layers of message passing, each entity gathers information from a wide neighborhood, thereby better capturing the complex relations in the graph structure.

Figure 2 shows an example of three-layer message passing, where an entity is updated to a new representation after each layer of message passing. The final representation of an entity is obtained by concatenating its vector representation before message passing and its vector representations after each layer of updating.

The model then predicts the matching score between a user and a recipe as the inner product of their vectors [46], a method commonly used in recommendation and classification tasks to measure the similarity between two vectors. During optimization, in the multi-task learning layer, the preference learning task computes the model's loss for preference prediction and the health learning task computes its loss for predicting health requirements. The two losses are then weighted and summed to obtain the final loss used to optimize the model parameters.

5.2. Embedding Layer

Embedding is the process of transforming entities and edges into vectors, usually called embedding vectors. Embedding vectors map entities and edges from their original symbolic form (such as strings or IDs) into a continuous vector space, which facilitates computation. We first initialized the entity and relation vectors with Xavier uniform initialization (xavier_uniform) and then used knowledge graph embedding to update these representations. Knowledge graph embedding aims to learn latent representations of the entities and relations in a knowledge graph while preserving the graph's structural information.
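Xavier uniform initialization draws each weight from $U(-a, a)$ with $a = \text{gain} \cdot \sqrt{6 / (\text{fan\_in} + \text{fan\_out})}$, the formula behind PyTorch's `torch.nn.init.xavier_uniform_`. A dependency-free sketch for a 2-D embedding table; the table sizes below are hypothetical:

```python
import math
import random
from typing import List


def xavier_uniform(rows: int, cols: int, gain: float = 1.0, seed: int = 0) -> List[List[float]]:
    """Sample a rows x cols matrix from U(-a, a), a = gain * sqrt(6 / (fan_in + fan_out)).

    For a 2-D weight, fan_in + fan_out is simply rows + cols.
    """
    bound = gain * math.sqrt(6.0 / (rows + cols))
    rng = random.Random(seed)
    return [[rng.uniform(-bound, bound) for _ in range(cols)] for _ in range(rows)]


# Hypothetical sizes: 1,000 entities with 64-dimensional embeddings.
entity_emb = xavier_uniform(rows=1000, cols=64)
```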

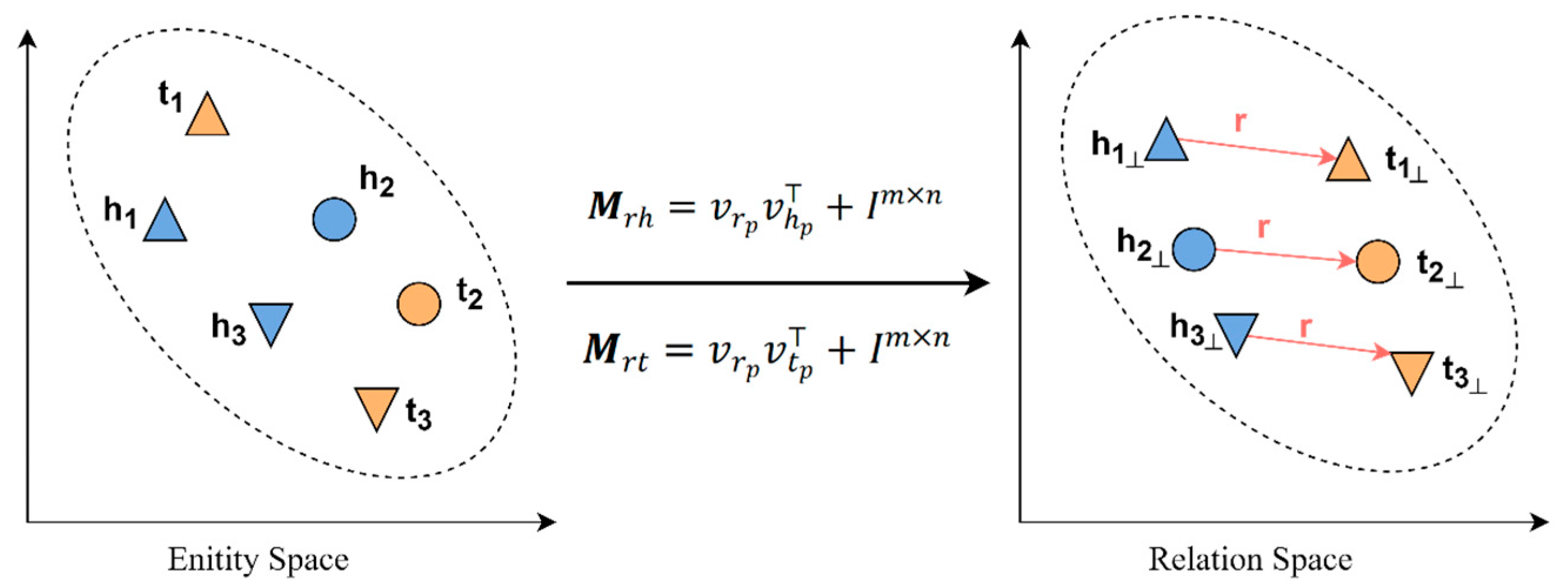

The knowledge graph is a heterogeneous graph with rich semantic information. We adopted the knowledge graph embedding method of [15], which embeds the entities and edges of the knowledge graph into a continuous vector space while retaining its structural information. This method constructs its mapping matrices dynamically. Given a triplet $(h, r, t)$, its vectors are $\mathbf{h}$, $\mathbf{h}_p$, $\mathbf{r}$, $\mathbf{r}_p$, $\mathbf{t}$, and $\mathbf{t}_p$, where the subscript $p$ indicates a projection vector, $\mathbf{h}, \mathbf{h}_p, \mathbf{t}, \mathbf{t}_p \in \mathbb{R}^n$, and $\mathbf{r}, \mathbf{r}_p \in \mathbb{R}^m$. As shown in Figure 3, the method maps the head entity $h$ and tail entity $t$ into a common space constructed from the entity and relation in the triplet, using two mapping matrices, $\mathbf{M}_{rh}$ and $\mathbf{M}_{rt}$.

The head entity $h$ and tail entity $t$ are mapped into the relation space by these projection matrices, as shown in Equation (5).
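The description of dynamically constructed mapping matrices matches the TransD formulation, in which $\mathbf{M}_{rh} = \mathbf{r}_p \mathbf{h}_p^\top + \mathbf{I}^{m \times n}$ (and likewise for the tail). Assuming Equation (5) follows that formulation, the sketch computes the projection without building the matrix, using $\mathbf{M}_{rh}\mathbf{h} = \mathbf{r}_p(\mathbf{h}_p^\top \mathbf{h}) + \mathbf{I}^{m \times n}\mathbf{h}$. Function names are illustrative.

```python
from typing import List

Vector = List[float]


def identity_mn_times(v: Vector, m: int) -> Vector:
    """I^{m x n} v: keep the first m entries of v, zero-padding when m > n."""
    return [v[k] if k < len(v) else 0.0 for k in range(m)]


def transd_project(e: Vector, e_p: Vector, r_p: Vector) -> Vector:
    """Project an entity vector e (dim n) into the relation space (dim m = len(r_p)).

    ASSUMPTION (TransD-style): M = r_p e_p^T + I^{m x n}, so M e = r_p * (e_p . e) + I^{m x n} e.
    """
    dot = sum(a * b for a, b in zip(e_p, e))
    base = identity_mn_times(e, len(r_p))
    return [rp * dot + b for rp, b in zip(r_p, base)]


h_perp = transd_project([1.0, 2.0, 3.0], [0.1, 0.0, 0.0], [1.0, 0.0])  # n = 3, m = 2
```

Because each matrix is the identity plus a rank-one term built from the entity's and relation's projection vectors, the projection costs $O(m + n)$ per entity rather than $O(mn)$.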

The symbols $\mathbf{h}_\perp$ and $\mathbf{t}_\perp$ in Equation (5) denote the representations of the head entity $h$ and the tail entity $t$ in the relation space, respectively. The method measures how plausible a triplet is under the learned embedding with a plausibility score, defined by Equation (6): a higher score indicates a more plausible triplet and a lower score a less plausible one. The scoring function first projects the head and tail entities into the vector space of the relation and then calculates the distance between the projected head entity plus the relation vector and the projected tail entity. The smaller this distance, the higher the score and the more plausible the triplet. In other words, if the projected head entity plus the relation vector lies close to the projected tail entity, the triplet $(h, r, t)$ is more likely to be valid in the knowledge graph.
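A minimal sketch of such a score, ASSUMING it is the negative squared L2 distance $-\lVert \mathbf{h}_\perp + \mathbf{r} - \mathbf{t}_\perp \rVert_2^2$ so that higher means more plausible, as the text describes. (The original TransD paper writes the score as the distance itself, where lower is better; only the sign convention differs.)

```python
from typing import List

Vector = List[float]


def plausibility_score(h_perp: Vector, r: Vector, t_perp: Vector) -> float:
    """Negative squared L2 distance between (h_perp + r) and t_perp.

    ASSUMPTION: sign chosen so that a higher (closer to 0) score means a more plausible triplet.
    """
    return -sum((h + rr - t) ** 2 for h, rr, t in zip(h_perp, r, t_perp))


perfect = plausibility_score([1.0, 0.0], [0.0, 1.0], [1.0, 1.0])  # h_perp + r == t_perp
worse = plausibility_score([1.0, 0.0], [0.0, 1.0], [0.0, 0.0])
```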

The knowledge graph embedding is trained with the Bayesian Personalized Ranking loss, which aims to maximize the score margin between positive and negative samples, as shown in Equation (7).

In Equation (7), the positive samples are the true triplets in the knowledge graph, while the negative samples are corrupted triplets constructed by replacing the true tail entity of a triplet $(h, r, t)$ with a randomly selected entity. $\sigma$ denotes the sigmoid function.
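The sketch below pairs each true triplet with a tail-corrupted one and averages $-\ln \sigma(\text{score}_{\text{true}} - \text{score}_{\text{corrupted}})$. The score function is passed in (for example, a projection-based distance score); re-drawing corrupted triplets that happen to be true is a common practice added here, not something the text states. All names and the toy triplet are hypothetical.

```python
import math
import random
from typing import Callable, List, Set, Tuple

Triplet = Tuple[str, str, str]
ScoreFn = Callable[[str, str, str], float]


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -ln(sigmoid(x))."""
    return math.log1p(math.exp(-x)) if x >= 0 else -x + math.log1p(math.exp(x))


def corrupt_tail(triplet: Triplet, entities: List[str], known: Set[Triplet],
                 rng: random.Random, max_tries: int = 100) -> Triplet:
    """Replace the tail with a random entity; re-draw if the result is a known true
    triplet (this filtering is an addition, the text only says the tail is replaced)."""
    h, r, _ = triplet
    for _ in range(max_tries):
        candidate = (h, r, rng.choice(entities))
        if candidate not in known:
            return candidate
    raise RuntimeError("could not sample a corrupted triplet")


def kg_bpr_loss(batch: List[Triplet], entities: List[str], known: Set[Triplet],
                score: ScoreFn, rng: random.Random) -> float:
    """Mean of -ln sigmoid(score(true) - score(corrupted)) over the batch."""
    total = 0.0
    for h, r, t in batch:
        nh, nr, nt = corrupt_tail((h, r, t), entities, known, rng)
        total += neg_log_sigmoid(score(h, r, t) - score(nh, nr, nt))
    return total / len(batch)


known = {("u1", "likes", "r1")}  # toy knowledge graph
toy_score: ScoreFn = lambda h, r, t: 2.0 if (h, r, t) in known else 0.0
loss = kg_bpr_loss(list(known), ["r1", "r2"], known, toy_score, random.Random(0))
```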

5.3. Message Passing Layer

In this layer, the model constructs a knowledge-aware attention graph convolutional network, which updates entity representations through message passing [47]. Each entity in the knowledge graph broadcasts its representation to its first-order neighbors and receives information from them. The first-order neighborhood of an entity consists of the entities directly connected to it, while its high-order neighborhood consists of the entities connected to it indirectly. In one layer of message passing, every entity in the knowledge graph integrates the received neighborhood information to produce a new representation. By stacking multiple such layers, each entity receives information from its high-order neighborhood, which captures high-order similarity between entities. Inspired by prior work [23], we introduced a knowledge-aware attention mechanism into the message passing process to better distinguish how different relations influence the entities in the knowledge graph.

The first stage of message passing is to receive information from neighboring entities. The neighborhood information propagated to an entity in the knowledge graph is computed as in Equation (8).

Here, the neighborhood information of an entity is computed over the set of all its neighboring entities: the representation of each neighbor is multiplied by the normalized relation attention coefficient between the entity and that neighbor under the relation connecting them, and the weighted representations are summed. When the entity and its neighbor are mapped into the space of that relation and the distance between them is closer to the relation vector, the neighbor is considered more important to the entity under that relation. The relation attention coefficient is defined in Equation (9).
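Given already-normalized attention coefficients, the weighted sum described for Equation (8) reduces to a few lines. A minimal sketch with hypothetical names:

```python
from typing import List, Sequence

Vector = List[float]


def neighborhood_information(neighbor_reps: Sequence[Vector], attn: Sequence[float]) -> Vector:
    """Sum of neighbor representations, each scaled by its normalized attention coefficient."""
    if len(neighbor_reps) != len(attn) or not neighbor_reps:
        raise ValueError("need one coefficient per neighbor")
    dim = len(neighbor_reps[0])
    out = [0.0] * dim
    for rep, a in zip(neighbor_reps, attn):
        for k in range(dim):
            out[k] += a * rep[k]
    return out


msg = neighborhood_information([[1.0, 0.0], [0.0, 1.0]], [0.75, 0.25])
```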

The relation attention coefficients of all neighboring entities connected to an entity are then normalized, as shown in Equation (10). The normalized coefficients distinguish the importance of each neighboring entity when the entity receives their information.
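Because the exact forms of Equations (9) and (10) are not reproduced here, the sketch ASSUMES a KGAT-style coefficient $(\mathbf{W}_r \mathbf{e}_t)^\top \tanh(\mathbf{W}_r \mathbf{e}_h + \mathbf{e}_r)$ normalized with a softmax over the entity's neighbors. The relation matrix $\mathbf{W}_r$ and all toy values are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, v: Sequence[float]) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def raw_attention(W_r: Matrix, e_h: Vector, e_r: Vector, e_t: Vector) -> float:
    """ASSUMED KGAT-style coefficient: (W_r e_t)^T tanh(W_r e_h + e_r)."""
    proj_t = matvec(W_r, e_t)
    gate = [math.tanh(a + b) for a, b in zip(matvec(W_r, e_h), e_r)]
    return sum(a * b for a, b in zip(proj_t, gate))


def softmax(scores: Sequence[float]) -> Vector:
    """Normalize raw coefficients over one entity's neighbors (max-shift avoids overflow)."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


W_r = [[1.0, 0.0], [0.0, 1.0]]
e_h, e_r = [0.5, 0.0], [0.0, 0.0]
neighbors = [[1.0, 0.0], [0.0, 1.0]]
weights = softmax([raw_attention(W_r, e_h, e_r, t) for t in neighbors])
```

The neighbor aligned with the entity in the relation space receives the larger weight; the resulting `weights` are what the weighted sum for Equation (8) consumes.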

After gathering all the information from an entity's neighborhood, the next step is aggregation: the entity's neighborhood information and its own representation are combined to update its representation. We adopted the bi-interaction aggregator, which captures the interaction information between an entity and its neighborhood. The aggregation formula is given in Equation (11).

The weight matrices in Equation (11) are trainable parameters, the $\odot$ operator denotes element-wise multiplication between vectors, and a nonlinear activation function is applied. After one layer of message passing, the entity representation is updated as abstracted in Equation (12).
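A sketch of a bi-interaction aggregator in the KGAT form $\text{LeakyReLU}(\mathbf{W}_1(\mathbf{e} + \mathbf{e}_{\mathcal{N}})) + \text{LeakyReLU}(\mathbf{W}_2(\mathbf{e} \odot \mathbf{e}_{\mathcal{N}}))$. Both the exact form of Equation (11) and the choice of LeakyReLU (slope 0.01) are ASSUMPTIONS for illustration; the matrices are toy values.

```python
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, v: Sequence[float]) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def leaky_relu(x: float, slope: float = 0.01) -> float:
    return x if x >= 0 else slope * x


def bi_interaction(e: Vector, e_n: Vector, W1: Matrix, W2: Matrix) -> Vector:
    """ASSUMED form: LeakyReLU(W1 (e + e_n)) + LeakyReLU(W2 (e * e_n)), * = element-wise."""
    summed = [a + b for a, b in zip(e, e_n)]   # additive interaction
    product = [a * b for a, b in zip(e, e_n)]  # element-wise interaction
    return [leaky_relu(x) + leaky_relu(y)
            for x, y in zip(matvec(W1, summed), matvec(W2, product))]


I2 = [[1.0, 0.0], [0.0, 1.0]]
updated = bi_interaction([1.0, 2.0], [3.0, -1.0], I2, I2)
```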

Here, an entity's representation at the $l$-th layer of message passing is computed by the aggregation function from its representation and neighborhood information at layer $l-1$. After one layer, each entity has updated its representation with information from its first-order neighborhood; by stacking more layers, entities on the graph capture information from higher-order neighborhoods and thus reveal users' latent preferences.

After $L$ layers of message passing, each entity in the knowledge graph has a new representation at every layer. The final representation of an entity is obtained by concatenating its representations across all layers.
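Following the description of Figure 2, the final representation concatenates the pre-message-passing embedding with the output of every layer. A minimal sketch with toy values:

```python
from typing import List, Sequence


def final_representation(layer_reps: Sequence[Sequence[float]]) -> List[float]:
    """Concatenate [e^(0), e^(1), ..., e^(L)], where e^(0) is the pre-message-passing embedding."""
    out: List[float] = []
    for rep in layer_reps:
        out.extend(rep)
    return out


layers = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]  # layer 0 plus 3 layers, d = 2
e_final = final_representation(layers)
```

If every layer has the same dimension $d$, the final vector has dimension $(L + 1) \cdot d$, so user and recipe vectors stay directly comparable by inner product.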

The model outputs the predicted matching score as the inner product of the user entity's final representation and the recipe entity's final representation: the larger the inner product, the higher the predicted degree of match between the user and the recipe.
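The scoring and a simple top-k ranking over candidate recipes can be sketched as follows; the helper names and vectors are illustrative:

```python
from typing import Dict, List, Sequence


def match_score(user_rep: Sequence[float], recipe_rep: Sequence[float]) -> float:
    """Inner product of the final user and recipe representations."""
    if len(user_rep) != len(recipe_rep):
        raise ValueError("representations must have the same length")
    return sum(u * r for u, r in zip(user_rep, recipe_rep))


def top_k(user_rep: Sequence[float], recipe_reps: Dict[str, Sequence[float]], k: int) -> List[str]:
    """Recipe IDs sorted by descending predicted match, truncated to k."""
    return sorted(recipe_reps, key=lambda rid: match_score(user_rep, recipe_reps[rid]),
                  reverse=True)[:k]


recommended = top_k([1.0, 0.0], {"a": [0.2, 5.0], "b": [0.9, 0.0], "c": [0.5, 1.0]}, k=2)
```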

5.4. Multi-Task Learning Layer

The model must learn both users' preferences and their health requirements, so the multi-task learning layer defines two learning tasks: the preference learning task and the health learning task.

5.4.1. Health Learning Task

In Section 4.3, we defined the Nutrient Discrepancy Score of a recipe for a user: the higher the score of recipe $i$ for user $u$, the less suitable recipe $i$ is for user $u$ in terms of health. Based on this score, we defined a healthy positive sample set and a healthy negative sample set for each user. In the health learning task, the predicted matching score between user $u$ and recipe $i$ should be higher when recipe $i$ better matches the health requirements of user $u$, and lower when the recipe is less suitable for the user's health needs. Following the idea of the Bayesian Personalized Ranking loss, we define the loss function of the health learning task in Equation (15).

In Equation (15), the two Nutrient Discrepancy Score (NDS) terms are the NDS between user $u$ and recipe $i$ and between user $u$ and recipe $j$, respectively, and $\sigma$ is the sigmoid function. Recipe $i$ is randomly selected from user $u$'s healthy positive sample set, and recipe $j$ from user $u$'s healthy negative sample set; for user $u$, recipes in the healthy positive set have a lower NDS than recipes in the healthy negative set. The NDS difference between the healthy positive and negative samples serves as the weight on the difference between their predicted matching scores. The larger this NDS difference, the further apart the two recipes are in how well they match the user's health requirements, and the greater the loss in the health learning task.
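Equation (15) itself is not reproduced here, so the sketch below encodes one reading of "the NDS difference is used as the weight for the score difference": an ASSUMED loss $-\ln \sigma\big((\text{NDS}_{uj} - \text{NDS}_{ui})(\hat{y}_{ui} - \hat{y}_{uj})\big)$. Another plausible reading places the weight outside the logarithm; the function name and toy numbers are hypothetical.

```python
import math
from typing import Sequence, Tuple


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -ln(sigmoid(x))."""
    return math.log1p(math.exp(-x)) if x >= 0 else -x + math.log1p(math.exp(x))


def health_loss(samples: Sequence[Tuple[float, float, float, float]]) -> float:
    """samples: (y_ui, y_uj, nds_ui, nds_uj); i from the healthy positive set, j from the negative set.

    ASSUMPTION: the NDS gap multiplies the predicted-score gap inside the sigmoid.
    """
    total = 0.0
    for y_ui, y_uj, nds_ui, nds_uj in samples:
        weight = nds_uj - nds_ui  # positive, since healthy positives have lower NDS
        total += neg_log_sigmoid(weight * (y_ui - y_uj))
    return total / len(samples)


tie = health_loss([(1.0, 1.0, 10.0, 50.0)])
wide_gap_wrong = health_loss([(1.0, 2.0, 10.0, 50.0)])
narrow_gap_wrong = health_loss([(1.0, 2.0, 30.0, 40.0)])
```

Under this reading, a larger NDS gap makes a mis-ranked pair cost more, while a correctly ranked pair with a large gap is penalized less.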

5.4.2. Preference Learning Task

For the preference learning task, the predicted matching score between user $u$ and recipe $i$ should exceed that between user $u$ and recipe $j$ when user $u$ has interacted with recipe $i$ but not with recipe $j$. We used the Bayesian Personalized Ranking loss as the loss function of the preference learning task to optimize the model parameters, as formulated in Equation (16).

In Equation (16), the positive sample set contains the observed user interactions, i.e., the pairs of user $u$ and recipe $i$ that appear in the interaction history, and the negative sample set contains the unobserved pairs, i.e., user $u$ and recipes $j$ with which the user has no recorded interaction.
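Training batches for Equation (16) are usually formed as $(u, i, j)$ triples. The sketch assumes uniform sampling of the user, the interacted recipe, and a rejection-sampled non-interacted recipe; this sampling scheme and the helper name are illustrative, not taken from the paper.

```python
import random
from typing import Dict, List, Set, Tuple


def sample_bpr_triples(interacted: Dict[str, Set[str]], all_recipes: List[str],
                       n: int, rng: random.Random) -> List[Tuple[str, str, str]]:
    """Draw n (user, positive recipe, negative recipe) triples."""
    recipe_set = set(all_recipes)
    # Only users with at least one positive and one negative recipe can form a triple.
    users = sorted(u for u, pos in interacted.items() if pos and recipe_set - pos)
    triples = []
    for _ in range(n):
        u = rng.choice(users)
        i = rng.choice(sorted(interacted[u]))
        j = rng.choice(all_recipes)
        while j in interacted[u]:  # rejection-sample a recipe with no observed interaction
            j = rng.choice(all_recipes)
        triples.append((u, i, j))
    return triples


history = {"u1": {"r1"}, "u2": {"r2", "r3"}}
triples = sample_bpr_triples(history, ["r1", "r2", "r3"], n=50, rng=random.Random(0))
```

Each triple's predicted scores $(\hat{y}_{ui}, \hat{y}_{uj})$ then enter the BPR loss.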

5.4.3. Multi-Task Loss Combination

The final objective function is a weighted combination of the health learning loss and the preference learning loss, as defined in Equation (17), where a health loss weight controls the contribution of the health learning task and a regularization term [48] is added to prevent overfitting.
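A sketch of the combination, ASSUMING Equation (17) takes the form $\mathcal{L} = \mathcal{L}_{\text{pref}} + \alpha\,\mathcal{L}_{\text{health}} + \lambda \lVert \Theta \rVert_2^2$, i.e., the health weight scales only the health loss and the regularizer is an L2 penalty. The symbols $\alpha$, $\lambda$ and the toy numbers are hypothetical.

```python
from typing import Sequence


def total_loss(pref_loss: float, health_loss: float, params: Sequence[Sequence[float]],
               health_weight: float, reg_weight: float) -> float:
    """ASSUMED form: L_pref + health_weight * L_health + reg_weight * ||params||_2^2."""
    l2 = sum(p * p for vec in params for p in vec)
    return pref_loss + health_weight * health_loss + reg_weight * l2


objective = total_loss(pref_loss=1.0, health_loss=2.0, params=[[1.0, 2.0]],
                       health_weight=0.5, reg_weight=0.1)
```

Raising the health weight shifts the trade-off toward recipes that fit users' nutritional needs at the expense of pure preference fit.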

We used mini-batch Adam [49] to optimize the prediction loss and update the model's parameters. Adam is a gradient-based optimization algorithm widely used to train deep learning models; it adapts the learning rate of each parameter using running estimates of the first and second moments of the gradients, and it performs well on large-scale datasets and high-dimensional parameter spaces.
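To make the adaptive learning rate concrete, the sketch below implements the standard Adam update (bias-corrected first and second moment estimates) over a flat list of parameters. It is a teaching sketch, not the training code; in practice a library optimizer such as PyTorch's `torch.optim.Adam` would be used. The toy objective $(x - 3)^2$ is illustrative.

```python
import math
from typing import List


class Adam:
    """Minimal Adam optimizer over a flat list of float parameters."""

    def __init__(self, num_params: int, lr: float = 1e-3, beta1: float = 0.9,
                 beta2: float = 0.999, eps: float = 1e-8) -> None:
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * num_params  # first-moment (mean) estimate of gradients
        self.v = [0.0] * num_params  # second-moment (uncentered variance) estimate
        self.t = 0

    def step(self, params: List[float], grads: List[float]) -> None:
        self.t += 1
        for k, g in enumerate(grads):
            self.m[k] = self.beta1 * self.m[k] + (1 - self.beta1) * g
            self.v[k] = self.beta2 * self.v[k] + (1 - self.beta2) * g * g
            m_hat = self.m[k] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[k] / (1 - self.beta2 ** self.t)
            params[k] -= self.lr * m_hat / (math.sqrt(v_hat) + self.eps)


x = [0.0]
opt = Adam(num_params=1, lr=0.1)
for _ in range(500):
    opt.step(x, [2.0 * (x[0] - 3.0)])  # gradient of (x - 3)^2
```

Because the step is scaled by $\hat{m}/\sqrt{\hat{v}}$, the first update moves each parameter by roughly the learning rate regardless of the raw gradient magnitude.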