1. Introduction

I had always envisaged a time when scholars would become citation conscious, and to a large extent they have, for information retrieval, evaluation, and measuring impact…I did not imagine that the worldwide scholarly enterprise would grow to its present size, or that bibliometrics would become so widespread.

Eugene Garfield

Eugene Garfield advanced the theory and practice of information science, raised awareness about citations and academic impact, actively promoted English as the lingua franca of science, and became a powerful force in the globalization of research. He envisioned innovative information systems that made the retrieval of scientific information much more efficient.

The creation of the Science Citation Index (SCI) is one of the most important events in modern science [1,2,3]. Garfield’s idea of using citations in articles to index scientific literature offered a new way of collecting, analyzing, disseminating, and discovering scientific information. The SCI laid the foundation for building new information products such as Web of Science (one of the most widely used databases for finding scientific literature today), Essential Science Indicators, and Journal Citation Reports. It triggered the development of new disciplines such as scientometrics, informetrics, and webometrics [4] and preceded the search engines (some have called Garfield “The Grandfather of Google”), which use “citation linking”, a core concept of the SCI, to connect and rank documents. Was “citation linking” on Sergey Brin’s and Larry Page’s minds when they were writing the article in which Google was mentioned for the first time [5]?

Some important developments prepared the ground for the emergence of a tool such as the SCI. In the years after World War II, there was a significant increase in funding for research, which led to a rapid expansion of science and literature growth. There was widespread dissatisfaction with the traditional indexing and abstracting services, because they were discipline-focused and took a long time to reach users. Researchers wanted recognition, and there was a need for quantifiable tools to evaluate journals and individuals’ work [6].

The journal impact factor (IF) has become the most accepted tool for measuring the quality of journals. Originally designed to select journals for the SCI, it has been used [largely inappropriately] for measuring the quality of individual researchers’ work, as well as in science policy and research funding [3,7,8,9,10]. As researchers are evaluated, hired, promoted, and funded based on the impact of their work, the importance of publishing in high-impact journals has created an extremely competitive environment, where the “publish or perish” philosophy dominates academic life.

Many articles, books, and conference presentations discuss and analyze Garfield’s ideas and legacy [3]. I have conducted two interviews with him [1,11], which provided information about how he came to the idea of creating the SCI and his views about the future of scientific publishing, discovery of scientific information, and measuring academic impact. The American Chemical Society (ACS) celebrated Garfield’s legacy in a special presidential symposium, “Information Legacy of Eugene Garfield: From the Chicken Coop to the World Wide Web”, held at the ACS Spring National Meeting in New Orleans in 2018. Some of the information included in this article was presented at that event [12]. This article looks at how Garfield’s ideas and legacy have influenced the culture of research.

2. Revolutionizing Scientific Information

The citation becomes the subject … It was a radical approach to retrieving information.

Eugene Garfield (Interviewed by Eric Ramsay) [13]

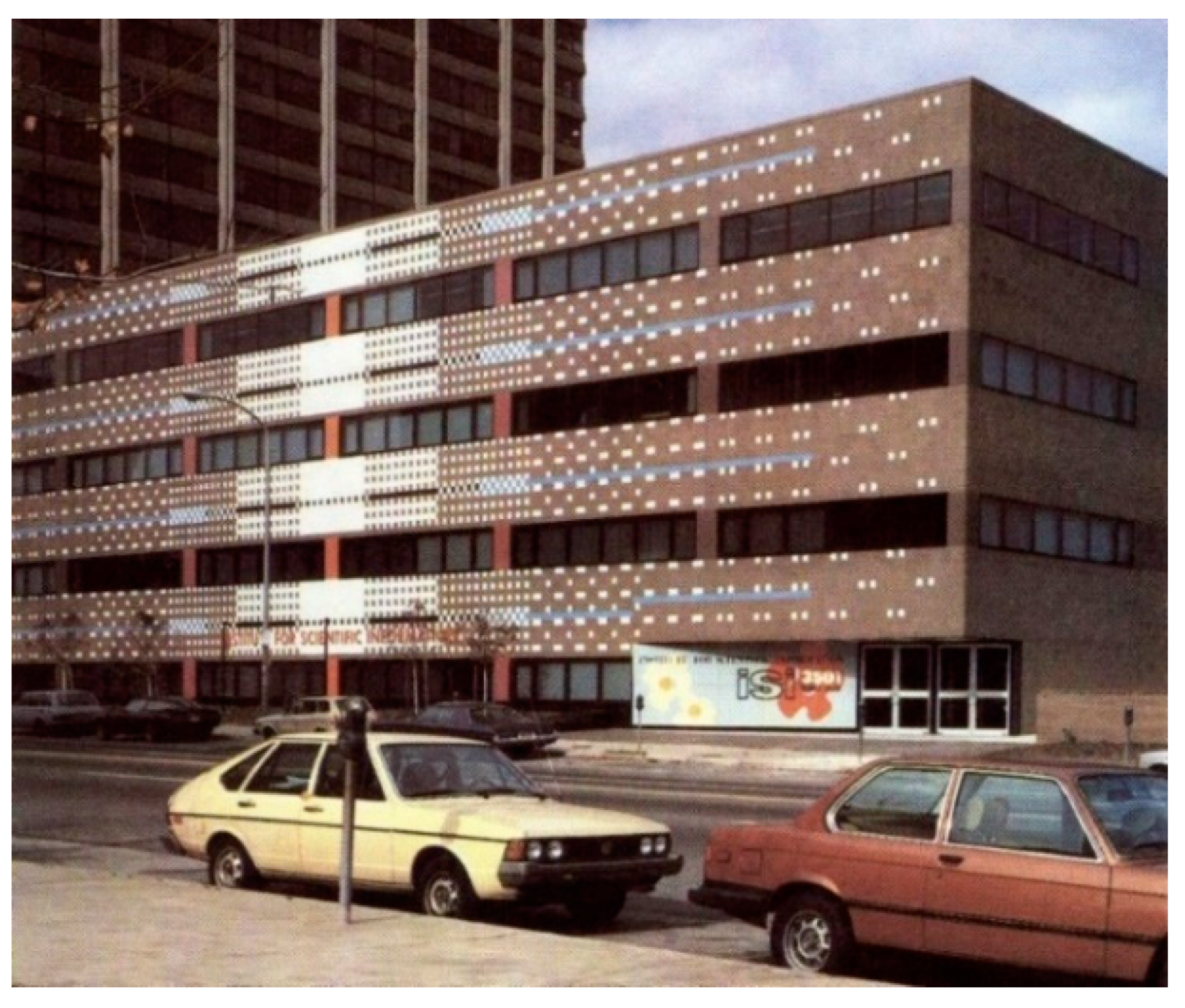

In 1960, Garfield founded the Institute for Scientific Information (ISI) in Philadelphia (Figure 1), which became a powerful engine for developing innovative information products [14,15].

Garfield will be remembered mostly for Current Contents and the Science Citation Index (SCI), but other important information products are also part of his legacy (Table 1). Garfield was very interested in chemistry and often said that chemical information was his first love. He earned a BS in chemistry from Columbia University and devoted his dissertation to the translation of chemical names to molecular formulas. ISI launched Index Chemicus, Current Chemical Reactions, and Reaction Citation Index at a time when no such resources were available to chemists. These databases preceded the Chemical Abstracts Service’s database that researchers now use through SciFinder and STN.

While working at the Welch Medical Library at Johns Hopkins University in the early 1950s, Garfield studied the role and importance of review articles and their linguistic structure. He concluded that each sentence in a review article could be an indexing statement and that there should be a way to structure it. About that time, he also became interested in the idea of creating an equivalent to Shepard’s Citations (a legal index for case law) for science. In an interview that this author conducted with him in 2015, Garfield described how these events led him to conceive the SCI [1]. It is strange to imagine today how one of the most important resources for science could have been created in a converted chicken coop in rural New Jersey (Figure 2).

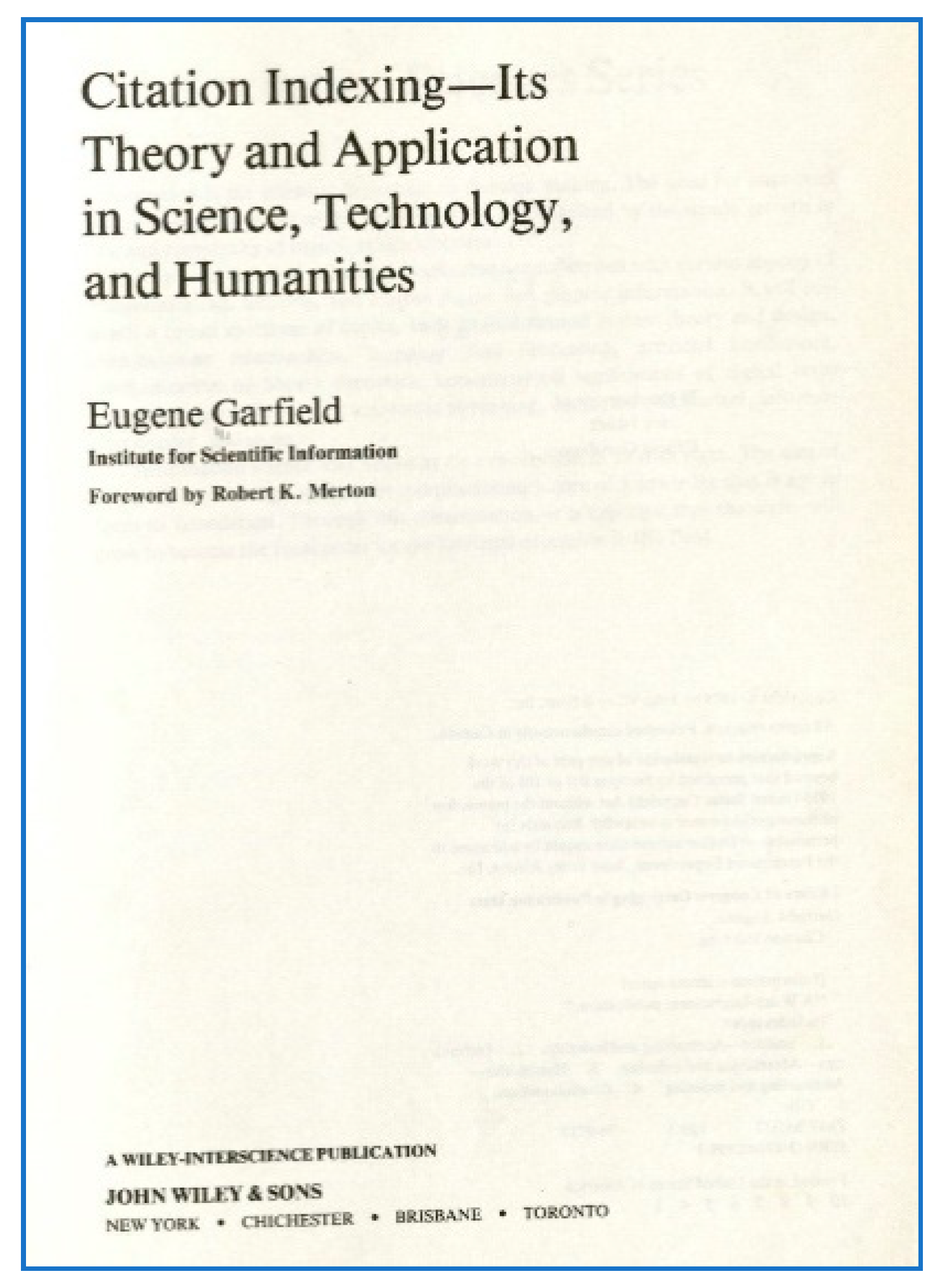

In 1964, Garfield created the SCI and introduced it as a “new dimension in indexing” [2]. Citation indexing rests on two assumptions: that the literature cited in an article represents the most significant earlier research, and that, when an article cites another article, the two have something in common. Each bibliographic citation thus acts as a descriptor or symbol for a question that the cited work treats from a certain aspect. In his famous book (Citation Indexing—Its Theory and Application in Science, Technology, and Humanities), Garfield presented the core concepts on which the SCI was built [16] (Figure 3).
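The idea that the cited work becomes the entry under which citing articles are listed can be sketched as an inverted index. This is a minimal illustration only, not a description of how ISI compiled the SCI; the function name `build_citation_index` and the paper identifiers are hypothetical.

```python
from collections import defaultdict


def build_citation_index(references):
    """Invert {citing paper: [cited papers]} into {cited paper: [citing papers]}."""
    index = defaultdict(set)
    for citing, cited_list in references.items():
        for cited in cited_list:
            # A set ignores a reference that appears twice in one paper.
            index[cited].add(citing)
    # Return plain sorted lists so the result is stable and easy to read.
    return {cited: sorted(citing) for cited, citing in index.items()}


# Hypothetical identifiers, for illustration only.
references = {
    "paper_C": ["paper_A", "paper_B"],
    "paper_D": ["paper_A"],
}
citation_index = build_citation_index(references)
print(citation_index["paper_A"])
```

Looking up `paper_A` in the resulting index lists every later paper that cites it, which is the forward-in-time search that a conventional subject index does not offer.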

The SCI is a multidisciplinary directory to scientific literature. It provided a new way of organizing, disseminating, and retrieving scientific information. The SCI facilitated interdisciplinary research and stimulated international collaborations. The citation indexes produced by ISI were the most important sources of bibliometric information before Reed Elsevier launched Scopus in 2004. Most of the products associated with Garfield’s legacy are now part of the Clarivate Analytics’ platform Web of Science (WoS). In addition to the SCI and the Social Sciences Citation Index (SSCI), this platform includes other databases such as the Conference Proceedings Citation Index, Arts and Humanities Citation Index, Book Citation Index, Index Chemicus, and Current Chemical Reactions. It also provides access to the Derwent Patent Database. WoS is now one of the most widely used resources for scientific information.

3. A Little Weekly Journal with a Global Reach

At a time when there were no online databases or internet, Garfield came up with the simple idea of making current scientific information available to researchers all over the world by compiling the tables of contents of key scientific journals and publishing them in a weekly journal called Current Contents (CC). Pocket-sized and printed on thin paper (to reduce mailing costs), this portable journal had series for agricultural, biological, and environmental sciences; arts and humanities; clinical practice; engineering, technology, and applied sciences; life science; physical, chemical, and earth sciences; and social and behavioral sciences. Instead of browsing the actual print issues, researchers could look quickly at the journals’ tables of contents. For many years, CC was the only source for current scientific information for researchers all over the world. An author index allowed them to send requests for article reprints, an invaluable resource for researchers in countries that could not afford subscriptions to these journals.

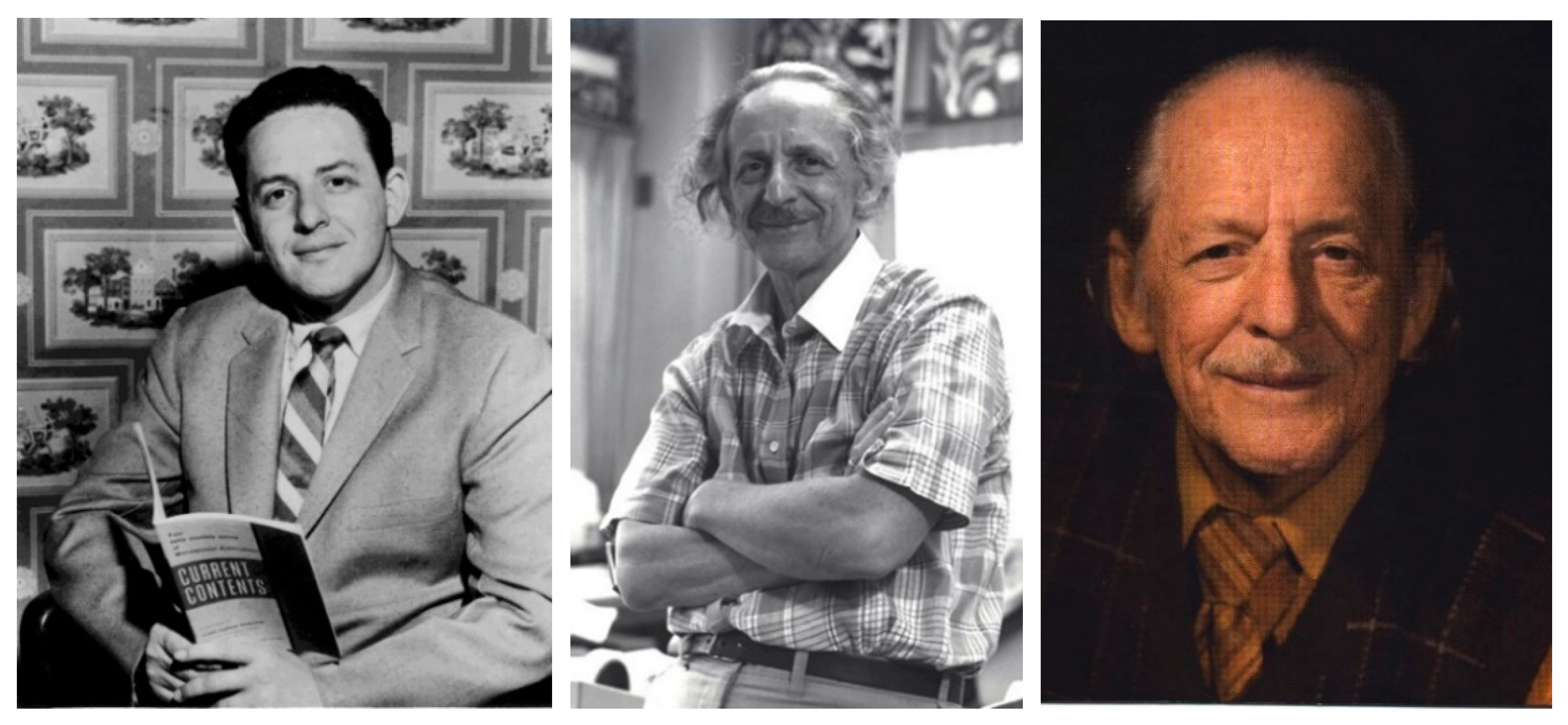

Almost every issue of Current Contents included an essay by Garfield, accompanied by his picture (Figure 4). Garfield was a prolific writer; he wrote more than 1,500 essays for CC, which were later collected in a 15-volume series under the title Essays of an Information Scientist (Figure 5). The essays reflected his own interests, but they also touched on topics of interest to a larger audience. He wrote about medical problems, jazz, psychedelic art, ice cream, and windsurfing, but he also discussed citation indexing, new information technologies, Nobel Prizes, scientific publishing, and cultural institutions. In his essays, Garfield was able to convey his ideas in such a way that even people who were not familiar with the topic could understand what he was saying. Some have criticized him for being too personal and the essays for being autobiographical, “a journey to himself”. He defended his style and the topics he wrote about by saying that it was these essays for which he received the most requests for reprints. In one of his interviews, he talked about the essays and their impact: “… there never was a shortage of topics to cover in CC. We had meetings every week and put out ideas on topics to cover and then we would assign them to one of my many assistants. As the years went by the scope became larger and larger. One series of essays we did took over two years to complete, since we had to generate a database for each part. Some of the more personal topics just came up in the course of everyday events. Eventually I realized that our readers were happy to have a distraction from the scientific topics and found personal subjects more interesting. Topics like jazz and family appealed to a lot of people. The CC essays were very popular in East Europe because CC was not censored. I wrote in such a way that it gave those scientists a window on the west, but did not offend their bosses” [11].

Reading and discussing Garfield’s essays became a favorite pastime for many scientists who impatiently waited to see the next issue of CC—not only to find out what was published in the newest issues of the scientific journals, but also to read his essay. Garfield’s writings had a huge educational impact on readers, as they allowed them to see beyond their own discipline and narrow area of research.

4. The Making of a Citation-Conscious [or Citation-Obsessed] Society

There are many reasons why the IF has attracted so much attention. The impact of researchers’ work is measured by the quality of the journals in which they have published. Having articles in high-impact journals is of critical importance for researchers’ promotions and research funding. The term “impact factor” has gradually changed to include both journal and author impact. The IF of the journals in which articles are published and the number of times they have been cited are taken into account when evaluating researchers’ work. The IF [7], a byproduct from the SCI, was originally designed as a tool to select journals for coverage in the SCI. It measures the journal’s performance by the frequency with which it was cited. IF is used to compare and rank journals—those with higher IF are often considered to be of higher quality than journals with lower IF within their subject areas. Journal Citation Reports (JCR) publishes the IFs of journals and their relative ranking in different disciplines.
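The text above does not spell out the calculation. As a rough sketch, assuming the conventional two-year definition (citations received in a given year by items the journal published in the two preceding years, divided by the number of citable items it published in those two years), the IF can be computed as follows. The function name and the numbers are hypothetical and serve only as an illustration.

```python
def impact_factor(citations_to_prior_two_years, citable_items_prior_two_years):
    """Two-year impact factor under the conventional definition (an assumption here).

    citations_to_prior_two_years: citations received this year by items
        the journal published in the two preceding years.
    citable_items_prior_two_years: number of citable items the journal
        published in those same two years.
    """
    if citable_items_prior_two_years <= 0:
        raise ValueError("the journal must have at least one citable item")
    return citations_to_prior_two_years / citable_items_prior_two_years


# Hypothetical journal: 200 citable items over two years, cited 600 times this year.
example_if = impact_factor(600, 200)
print(example_if)  # 3.0
```

Because the result is an average over all items in the journal, it says nothing about how often any single article was cited, which is one reason applying it to individual papers or researchers is problematic.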

Many articles have discussed the use [and abuse] of citations and the IF [3,7,8,9,10,17,18,19,20]. Satyanarayana [6] pointed out the limitations and deficiencies of using citation-based evaluation systems. Some of the criticisms are about the non-transparent policy of journal selection for inclusion in the citation databases and the awkward calculation of the IF. There are many possible scenarios for manipulating citations and IFs. Publishing longer papers, controversial articles, and more reviews (which receive more citations than original research papers) will boost a journal’s IF. Changing journal content in favor of fields with higher citation rates (such as molecular biology), dropping sections that bring few citations, and avoiding articles that are less likely to be cited or that do not fit the editors’ view of “novelty” will also increase citations and the IF [17,18,19,20].

Citations and IFs depend heavily on the citation behavior of individual scientists and vary from discipline to discipline [1,11]. Forms of intentional misuse that can skew IFs include avoiding citing competitors, extensive self-citing, “citation padding” (scholars citing each other to boost their citation numbers), citation bias (for example, not citing articles by authors from some countries), and copying (plagiarizing) citations from other articles. As people tend to read papers from higher-ranking journals, such papers are more likely to be cited, which will also increase the IFs of these journals.

The SCI made it easy for researchers to find literature on past research by using the citations included in articles. It also made it possible to find literature with little effort. Mike Kessler, at ISI, looked at the similarities between individual papers in terms of what these papers were citing [21]. He used the term “bibliographic coupling” to measure similarities between individual papers. For example, if Paper A cites a group of papers in common with the papers cited in Paper B, Papers A and B have something in common. If the two papers have 100% bibliographic coupling, this could suggest a case of plagiarism. Bibliographic coupling could lead to the marginalization of some papers in the field in favor of others. Biased citations, if copied by others, could perpetuate a distorted view of a field.
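Bibliographic coupling as described above can be illustrated with a short sketch that counts the references two papers share. The text does not say how a percentage such as “100%” is normalized; the fraction below assumes shared references divided by all distinct references of both papers (so 100% means identical reference lists), which is one possible choice among several. All names and identifiers are hypothetical.

```python
def coupling_strength(refs_a, refs_b):
    """Number of references that two papers have in common."""
    return len(set(refs_a) & set(refs_b))


def coupling_fraction(refs_a, refs_b):
    """Shared references divided by all distinct references of both papers.

    This normalization is an assumption made for illustration; a value of 1.0
    means the two reference lists are identical.
    """
    a, b = set(refs_a), set(refs_b)
    if not a and not b:
        return 0.0  # no references at all, so nothing to compare
    return len(a & b) / len(a | b)


# Hypothetical reference lists, for illustration only.
paper_a_refs = ["ref_1", "ref_2", "ref_3", "ref_4"]
paper_b_refs = ["ref_3", "ref_4", "ref_5"]

shared = coupling_strength(paper_a_refs, paper_b_refs)
fraction = coupling_fraction(paper_a_refs, paper_b_refs)
print(shared, fraction)
```

In this example the two papers share two of five distinct references, so they are coupled but far from identical in what they cite.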

There are also other factors that could influence citation counts, such as the online availability of articles or continued citing of articles retracted from journals. Some journals may limit the number of references included in a paper. When there are many papers that are important, authors may decide to (1) cite a review paper rather than original primary research articles, (2) mention the original paper only in the text but omit it from the list of references, which will not count the original paper as a citation, or (3) cite papers published in highly ranked journals rather than ones published in lower-tier journals. Some authors may intentionally cite review papers, to avoid citing original research papers of competitors. As more and more of the primary research data and methods are buried in the supplementary sections of papers and are not counted as citations, reviews get an even bigger share of the citations.

SCI and IFs have caught the attention of those who direct the course of scientific research. Academic administrators closely monitor bibliometric and citation patterns to make strategic and funding decisions. Garfield has often argued against using SCI in science policy, because SCI was designed only for information retrieval [1].

Some governments have implemented policies that provide incentives for researchers to publish in highly ranked international journals, particularly in Science and Nature [9]. It is inappropriate to apply the IF of a journal to an individual article published in it (even though the article may have never been cited) or to use it as a proxy to evaluate researchers for hiring, promotion, and monetary rewards. What matters is the citation count for an individual article and not the IF of the journal.

The existing criteria for getting positions and funding are unfavorable to researchers at an early stage of their careers, as it takes time to accumulate citations and have papers published in high-impact journals. Kun shows how the advantages of heritage, social status, and good education are likely to secure successful careers. He is concerned that the “publish or perish” principle could lead to “… an excessive focus on the publication of groundbreaking results in prestigious journals. But science cannot only be groundbreaking, as there is a lot of important digging to do after new discoveries—but there is not enough credit in the system for this work, and it may remain unpublished because researchers prioritize their time on the eye-catching papers, hurriedly put together” [9].

The pressure to publish quickly in high-impact journals could tempt researchers to engage in unethical practices, the most common of which are plagiarism and the fabrication of results. Graduate students and postdocs depend on their supervisors for current financial support and recommendations for future jobs, and obtaining results that differ from expected or previously published ones could cost them their positions and jeopardize their careers. Many young researchers do not realize how damaging unethical behavior could be to their reputations and careers.

As pointed out by many authors, the number of incidents of scientific fraud is increasing [9,22,23,24]. Retraction Watch, a web site monitoring the retraction of articles from scientific journals, shows that even reputable journals have to retract articles because of authors’ misconduct [25]. Publishing fraudulent results has tremendous implications for researchers who try to reproduce them. They could spend years trying and failing to confirm fraudulent results before finding out that the failure was not their fault.

Researchers are sharing their frustration with the existing environment on social media. A young scientist recently shared her indignation on Twitter about how a journal editor had asked her to provide her h-Index as part of the submission process of a paper. The needed change in the existing academic environment is also a hot topic on social media. A recent newspaper article (“A PhD should be about improving society, not chasing academic kudos”) [26] was shared with more than 48,000 people in just the four days after it was published and was widely commented on in social media.

5. Facilitating the Globalization of Science

Through his writings and the selection of journals for the SCI, Garfield actively promoted English as the lingua franca of science and became a powerful force in the globalization of research. Many of his essays, as well as his articles in The Scientist, were devoted to convincing the world that science needs a common language and that this language should be English (Figure 6). The selection of journals for the SCI and SSCI favors English-language journals, which has worked against content published in other languages.

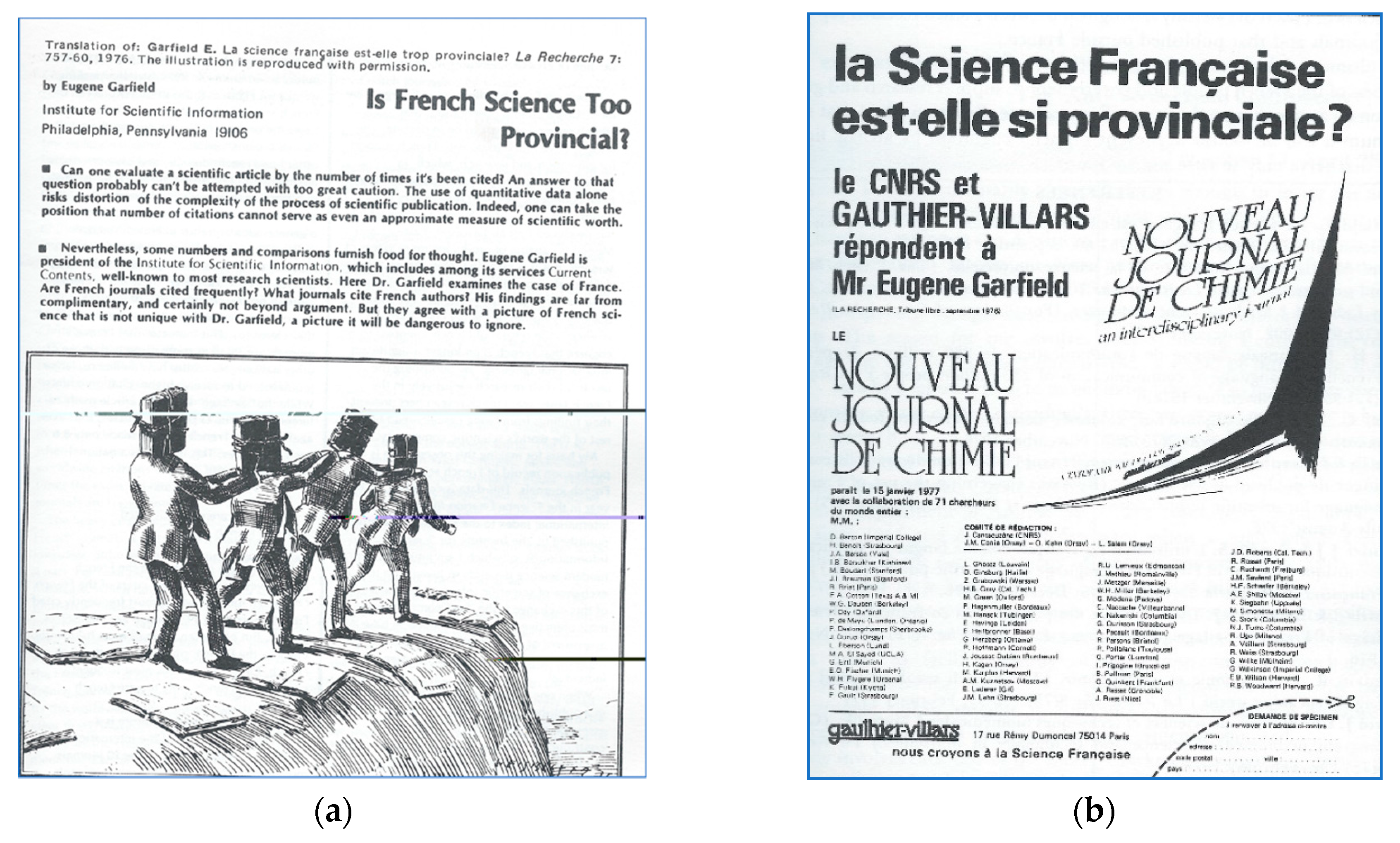

Garfield’s efforts to promote English have not always been welcomed by other countries. In an article that he published in the French journal La Recherche, he attributed the low citation rate of French articles to the fact that French researchers publish their work in French (Figure 7a). This conclusion and the provocative title of the article (“Is French Science Too Provincial?”) caused a storm in the academic and political circles in France in the 1970s. A former prime minister of France, Michel Debré, angrily responded to Garfield’s challenge and called it “linguistic imperialism”. The French reacted by launching a new multi-language chemistry journal.

Having English as the dominant language of science has its pros and cons. We have one language in which we can easily communicate with peers from other countries. It gives native speakers of English, however, the advantage of writing and speaking in the language they learned as children, while all others have to make an enormous effort to learn it. In an environment where there is fierce competition for publishing in top-tier journals, non-native speakers have to spend time learning how to write in English, while native speakers could use that time to do research. Many articles are rejected from scholarly journals just because of their poor English.

Gingras and Khelfaoui [27] assessed “the significance of a parameter that is seldom taken into account in evaluation studies: the existence of a USA comparative citation (visibility) advantage built in the database [SCI] and thus affecting countries that collaborate more with the USA than with other countries”. They analyzed “… how this USA citation advantage affects the measure of the scientific impact (usually measured through citations received) of major countries …”. They concluded that, “… given the strong presence of the USA in the WoS database, the comparative rankings tend, by construction, to give a citation advantage to countries having the closest relation to that country.”

In his book, Scientific Babel [28], Michael Gordin discussed the evolution of scientific languages and how English became the dominant language of science. Until the early 20th century, scientific writing was evenly split between English, French, and German. When America emerged as a global scientific power after World War II, English gradually became the dominant language of scientific communication [28,29].

English has become the international language through which researchers communicate and collaborate. The top scientific journals are now published in English, and the official language of most conferences is English. Citation networks based on SCI connect researchers from all over the world and allow them to find new partnerships. Tools such as ResearcherID, a unique author-identifying site, connect authors with their works across the Web of Science system (Web of Science, Publons, and InCites) and visualize authors’ publications and citing networks.

6. Conclusions

Nobody reads journals … People read papers.

The scientific publishing landscape is changing quickly, with more countries becoming involved. As researchers are evaluated, hired, promoted, and funded based on the impact of their work, the importance of publishing in high-impact journals has created an extremely competitive environment. Many national governments have increased their budgets for research, which has caused tremendous growth in the number of papers submitted for publication and significantly increased the competition for publishing in high-impact journals. According to Franzoni et al. [31], “Although still the top publishing nation, the United States has seen its share of publications decline from 34.2% in 1995 to 27.6% in 2007 as the number of articles published by U.S. scientists and engineers has plateaued and that of other countries has grown.” This trend is attributed to the policies adopted by other countries to reward their scientists. This is how Maureen Rouhi, former editor of the ACS journal Chemical & Engineering News, describes the global research and publishing enterprise today [32]:

R&D in Asia is growing at a rapid rate. At the American Chemical Society, evidence of that comes from the publishing services: submissions to ACS journals from India, South Korea, and China grew at annual compounded rates of 17.3%, 16.6%, and 14.7%, respectively, compared with 5.4% from the U.S., during 2008–12. Researchers in Asia are significant users of ACS information services, including SciFinder. The databases that underpin SciFinder increasingly are based on molecules discovered in Asia. China now leads the world in patent filings.

For decades, the IF has been the most widely used and accepted tool for measuring the quality of journals and the impact of research. It may not be perfect, but it is still considered an important metric [7], and no other tool has replaced it for evaluation of impact in academic institutions. Other indicators that have emerged more recently for measuring academic impact and the quality of journals include the h-Index, the Eigenfactor, Scopus Journal Analyzer, SCImago Journal Rank (SJR), Source Normalized Impact per Paper (SNIP), and Impact per Publication (IPP) [33]. It should be acknowledged that they were developed in reaction to the IF and the citation data developed by Garfield.

Academic social sites such as Academia.edu, ResearchGate, ResearcherID, and Mendeley connect researchers and serve as a medium for sharing and finding scientific information. They provide analytical tools to see connections between posted documents. Altmetrics is a new area of assessment of research, which monitors views/downloads, likes, and other statistics to measure interest in research.

The interest in obtaining information about published research—an area dominated by the IF for decades—has triggered the development of new tools and services that perform analysis of research patterns in a more sophisticated way to identify trends and make comparisons. New research analytics suites, such as Elsevier’s SciVal (included in Scopus), Clarivate Analytics’ “InCites”, and “Essential Science Indicators” (included in Web of Science) allow for the analysis of research performance by going further than just measuring the quality of journals—evaluating the impact of individual articles, authors, and institutions.

With the advent of open access, the impact “thinking” has been moving away from journals, focusing on article- and researcher-level impact. This is how Garfield discussed the future of scientific information in an interview conducted in 2015 [1]:

If everything becomes open access, so to speak, then we will have access to, more or less, all the literature. The literature then becomes a single database. Then, you will manipulate the data the way you do in Web of Science, for example. All published articles will be available in full text. When you do a search, you will see not only the references for articles, which have cited a particular article, but you will also be able to access the paragraph of the article to see the context for the citation. That makes it possible to differentiate articles much more easily and the full text will be available to anybody.

Garfield’s vision for the future of science, scientific communication, and information technology has become a reality, but he could not have foreseen some of the unexpected ramifications of his ideas and their impact on the global scholarly ecosystem, where fierce competition for publishing in high-impact journals and obsession with citations and IFs would permeate so deeply the culture of research.