A Novel Rubric for Rating the Quality of Retraction Notices

Abstract

1. Introduction

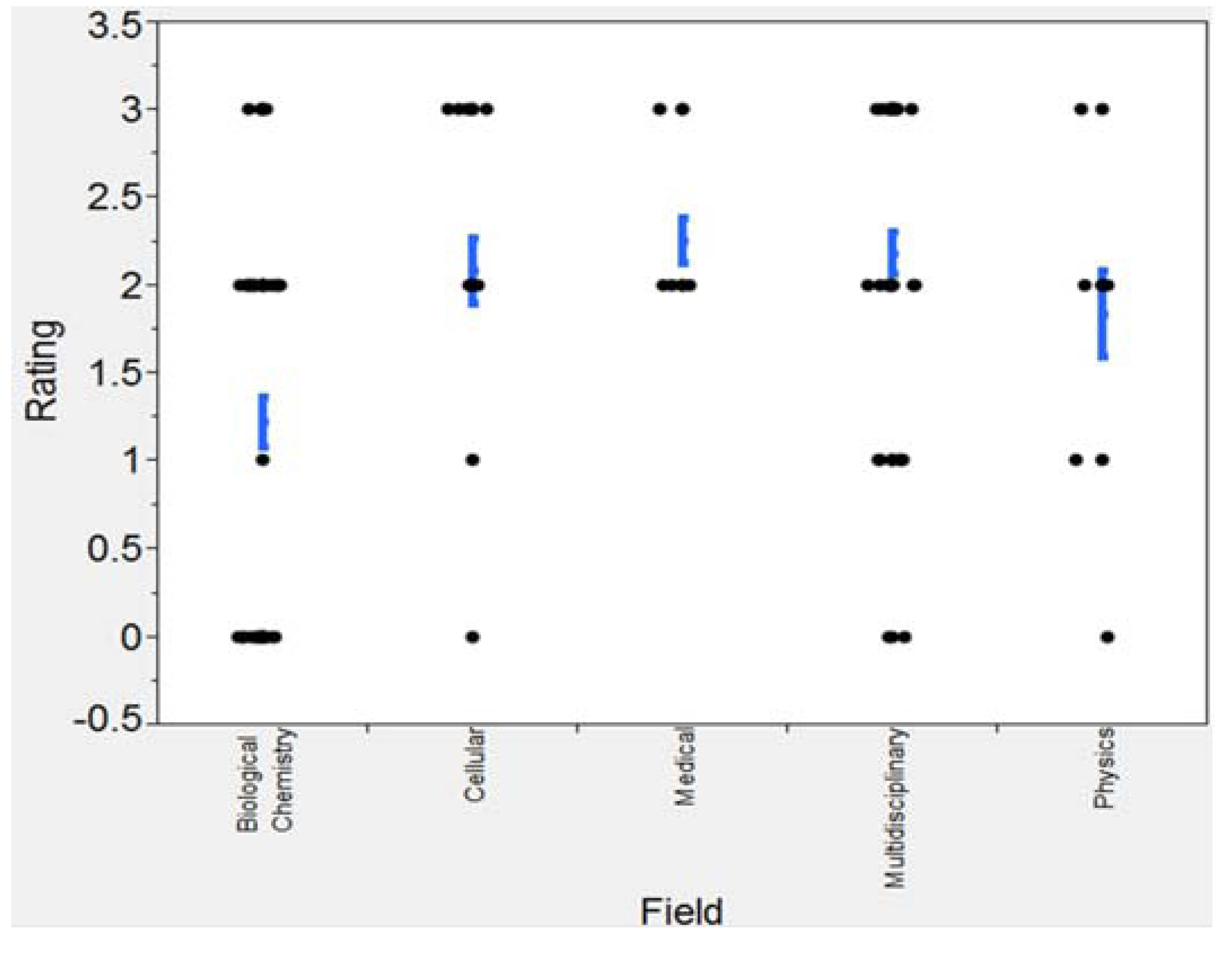

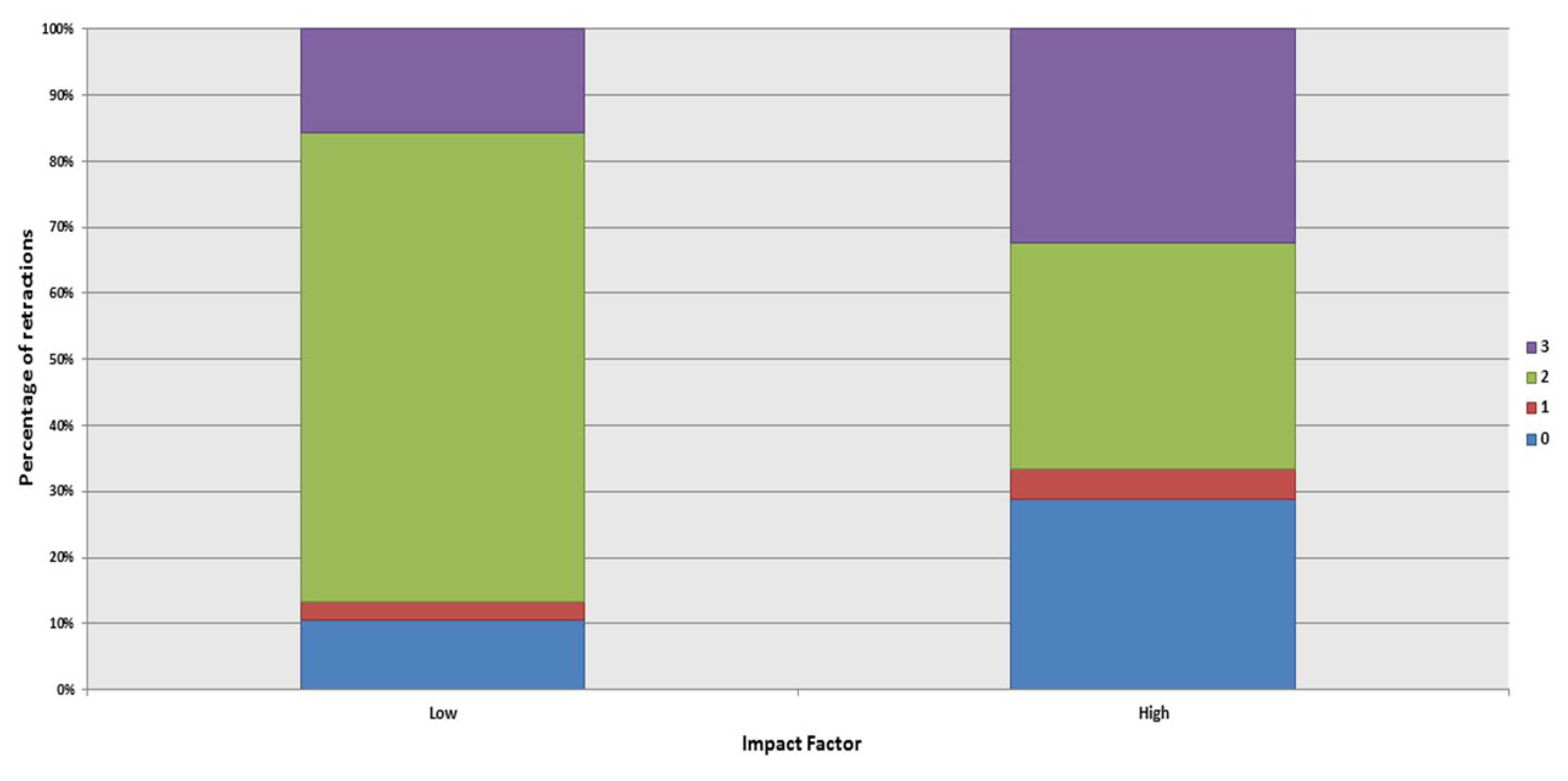

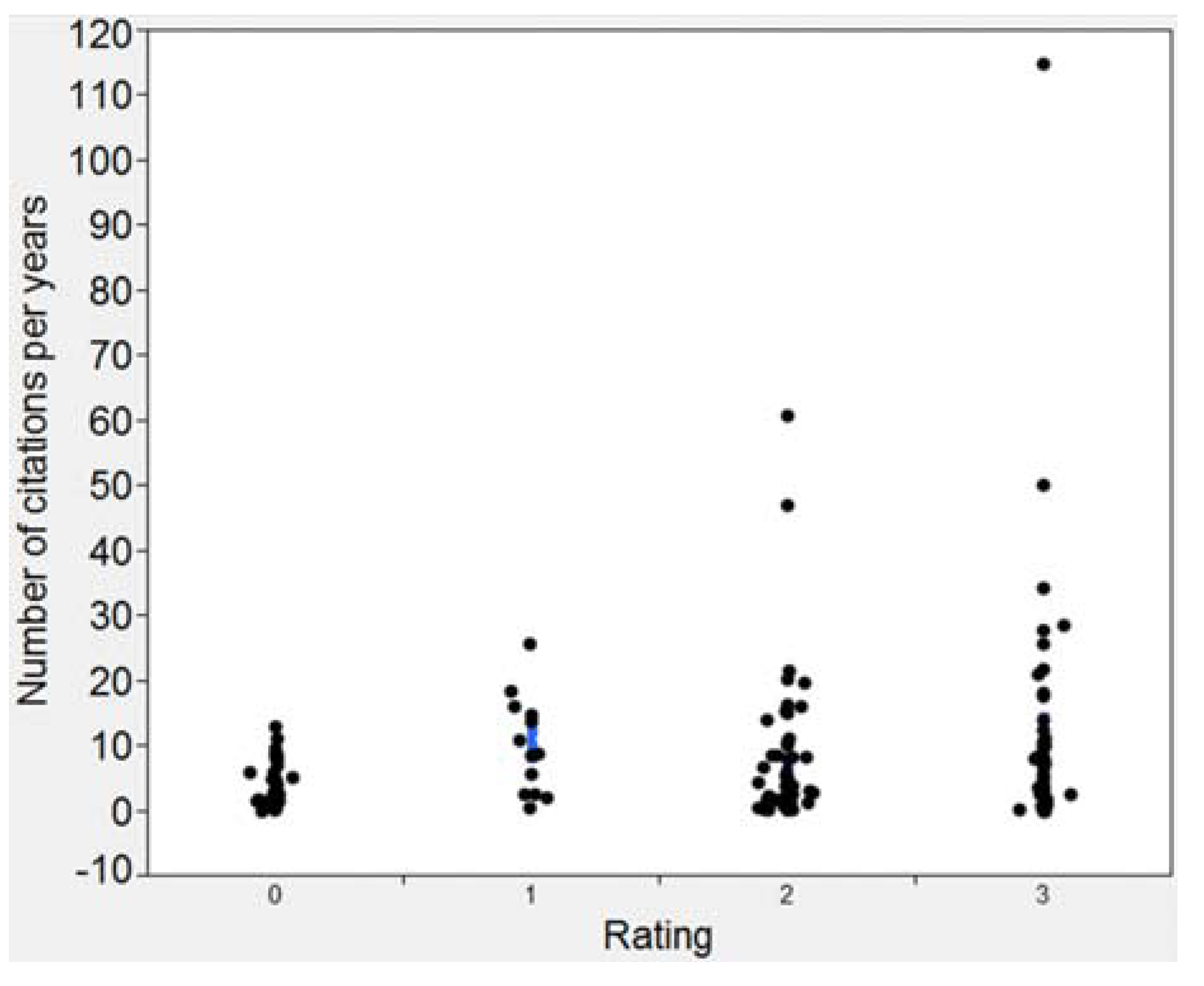

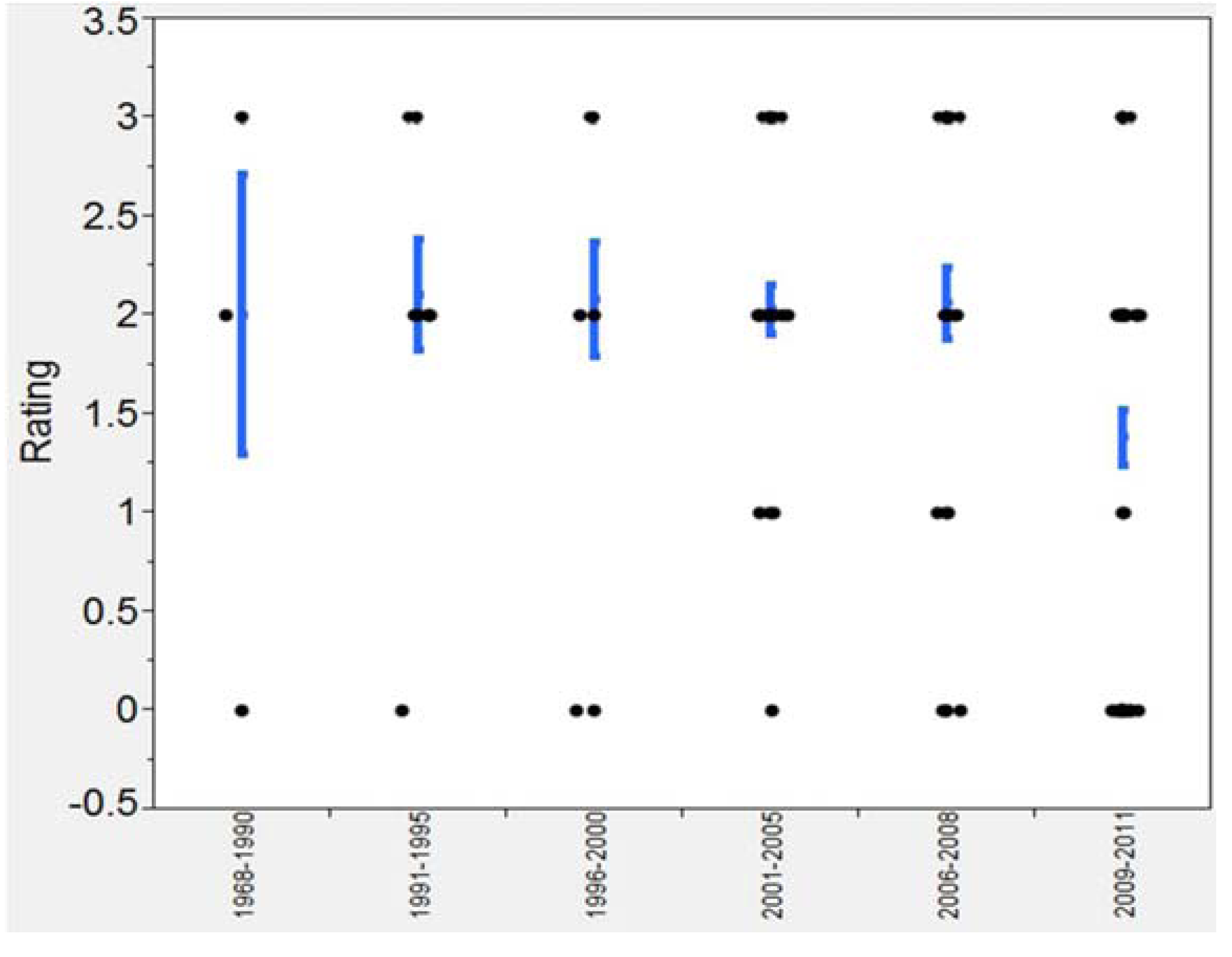

2. Experimental Section

2.1. Development of a Rubric to Assess Retraction Notices

- 0: No reason for retraction can be discerned from the notice.

- 1: The reason for retraction can be inferred but is not clearly stated by naming or defining a category.

- 2: The reason for retraction is clearly stated, but no explanation is given of how the rest of the article is affected by the retraction.

- 3: The reason for retraction is clearly stated, and an explanation is given of whether and how the article as a whole is affected by the fault.
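The four levels are cumulative: each higher score requires everything the lower ones do plus one more property of the notice. A minimal sketch of that decision order follows. The enum, the function `score_notice`, and its three yes/no inputs are hypothetical names chosen for illustration; the rubric itself defines only the four levels, and the judgement behind each input is still made by a human reader.

```python
from enum import IntEnum


class NoticeScore(IntEnum):
    """Rubric levels for a retraction notice (0 = least informative, 3 = most)."""
    NO_REASON = 0           # no reason for retraction can be discerned
    REASON_INFERRED = 1     # reason can be inferred but is not clearly stated
    REASON_STATED = 2       # reason clearly stated; effect on rest of article not explained
    REASON_AND_IMPACT = 3   # reason clearly stated and effect on whole article explained


def score_notice(reason_discernible: bool,
                 reason_clearly_stated: bool,
                 impact_explained: bool) -> NoticeScore:
    """Map a reader's yes/no judgements about one notice onto the 0-3 rubric.

    The three flags are hypothetical inputs for this sketch. They are checked
    in order, so a notice only reaches a level if it passed every lower level.
    """
    if not reason_discernible:
        return NoticeScore.NO_REASON
    if not reason_clearly_stated:
        return NoticeScore.REASON_INFERRED
    if not impact_explained:
        return NoticeScore.REASON_STATED
    return NoticeScore.REASON_AND_IMPACT
```

For example, `score_notice(True, True, False)` returns `NoticeScore.REASON_STATED`, which compares equal to `2` because `IntEnum` members behave as integers. That also lets scores be averaged per journal directly.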

2.2. Selection of Journals for Assessment

2.3. General Analysis of the Retractions

| Journal Title | Field | Retracted Articles | 5-Year Impact Factor | Eigenfactor™ Score | Article Influence™ Score | Retractions as Share of Total Publications (%) |
|---|---|---|---|---|---|---|
| Cell (CELL) | Cellular | 17 | 34.931 | 0.70027 | 20.591 | 0.0651 |
| Journal of Biological Chemistry (J BIOL CHEM) | Biological Chemistry | 48 | 5.498 | 0.88116 | 2.188 | 0.0173 |
| Science (SCIENCE) | Multidisciplinary | 51 | 31.777 | 1.45546 | 16.818 | 0.11167 |
| New England Journal of Medicine (NEW ENGL J MED) | Medical | 18 | 52.363 | 0.68835 | 21.349 | 0.0655 |
| Physical Review Letters (PHYS REV LETT) | Physics | 3 | 7.155 | 1.23313 | 3.470 | 0.0034 |
| Annals of the New York Academy of Sciences (ANN NY ACAD SCI) | Multidisciplinary | 5 | 2.649 | 0.10051 | 0.892 | 0.0106 |
| Biochemical and Biophysical Research Communications (BIOCHEM BIOPH RES CO) | Biological Chemistry | 19 | 2.720 | 0.18990 | 0.887 | 0.0149 |
| Molecular and Cellular Biology (MOL CELL BIOL) | Cellular | 8 | 6.381 | 0.22917 | 3.250 | 0.0198 |
| European Journal of Physics (EUR J PHYS) | Physics | 2 | 0.675 | 0.00287 | 0.220 | 0.1531 |
| Nuovo Cimento della Società Italiana di Fisica B (NUOVO CIM B/EPJ PLUS) | Physics | 2 | 0.293 | 0.00171 | 0.106 | 0.0336 |
| Journal of the Chinese Medical Association (J CHIN MED ASSOC) | Medical | 2 | n/a | 0.00191 | n/a | 0.4348 |
| Molecular Biology of the Cell (MOL BIOL CELL) | Cellular | 1 | 5.949 | 0.14329 | 3.140 | 0.0077 |
| Journal of the Korean Physical Society (J KOREAN PHYS SOC) | Physics | 1 | 0.447 | 0.01082 | 0.124 | 0.0084 |
| Physica Scripta (PHYS SCRIPTA) | Physics | 3 | 0.876 | 0.01628 | 0.335 | 0.0203 |
| Journal of the Physical Society of Japan (J PHYS SOC JPN) | Physics | 1 | 2.128 | 0.05644 | 1.066 | 0.0038 |
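The last column of the table expresses retractions relative to a journal's total output. Assuming it is computed as 100 × (retracted articles) / (total articles published over the same period), which the table does not state explicitly, the calculation and its inverse can be sketched as below. Both function names are hypothetical. The total publication counts are not listed in the table, so the inverse only gives an approximate implied total, and because the percentages are rounded it is useful as a rough sanity check rather than a recovery of the original counts.

```python
def retraction_share_percent(retracted: int, total_publications: int) -> float:
    """Retracted articles as a percentage of all articles a journal published.

    Assumes both counts cover the same journal and the same time window.
    """
    if total_publications <= 0:
        raise ValueError("total_publications must be positive")
    if not 0 <= retracted <= total_publications:
        raise ValueError("retracted must be between 0 and total_publications")
    return 100.0 * retracted / total_publications


def implied_total(retracted: int, share_percent: float) -> float:
    """Approximate total publications implied by a retraction count and share.

    Only approximate: the share is usually reported rounded.
    """
    if share_percent <= 0:
        raise ValueError("share_percent must be positive")
    return retracted / (share_percent / 100.0)
```

With hypothetical figures, a journal with 5 retractions out of 10,000 articles gives `retraction_share_percent(5, 10000)` of about `0.05`, and `implied_total(5, 0.05)` returns roughly `10000`.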

2.4. Statistical Analyses

3. Results and Discussion

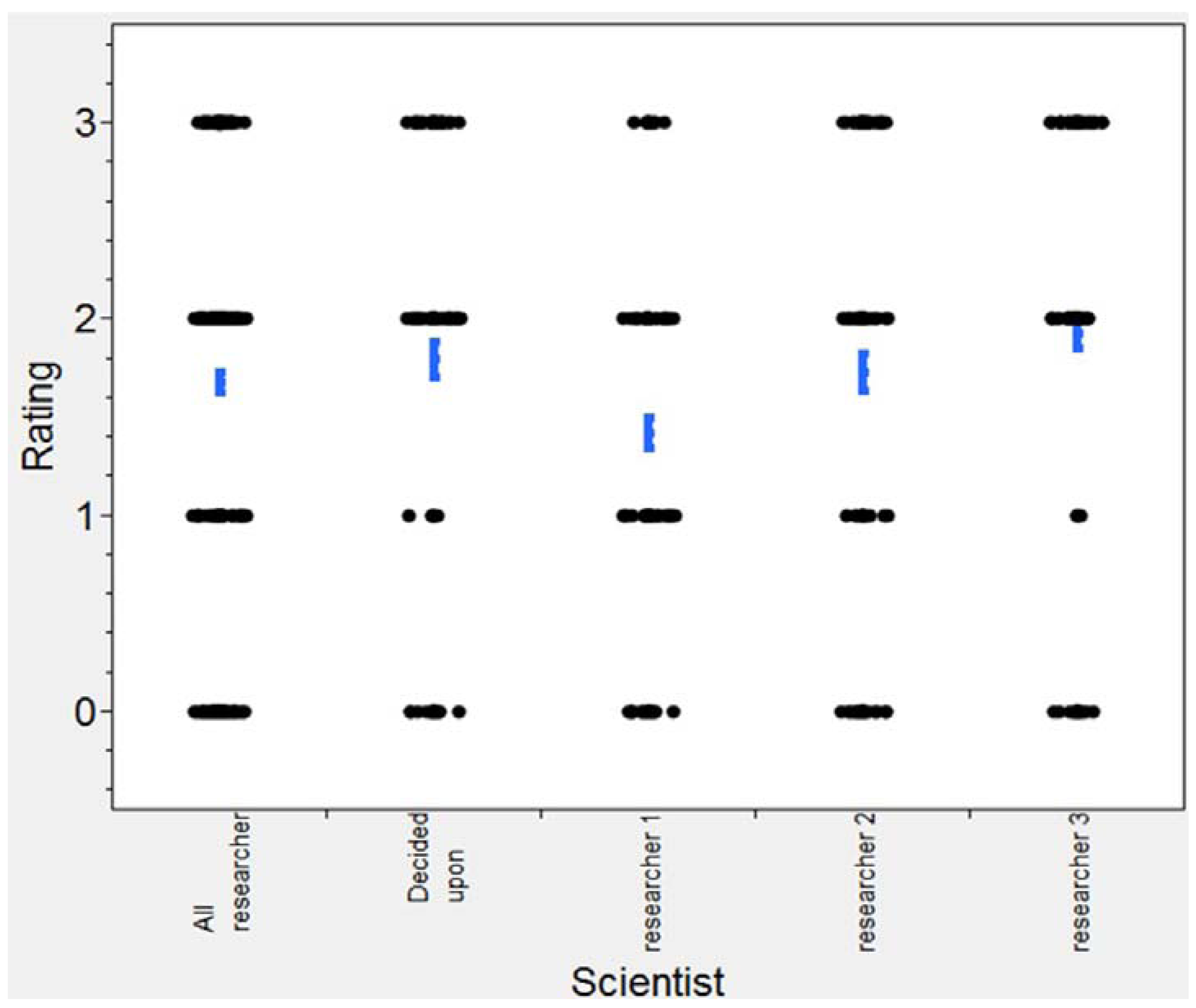

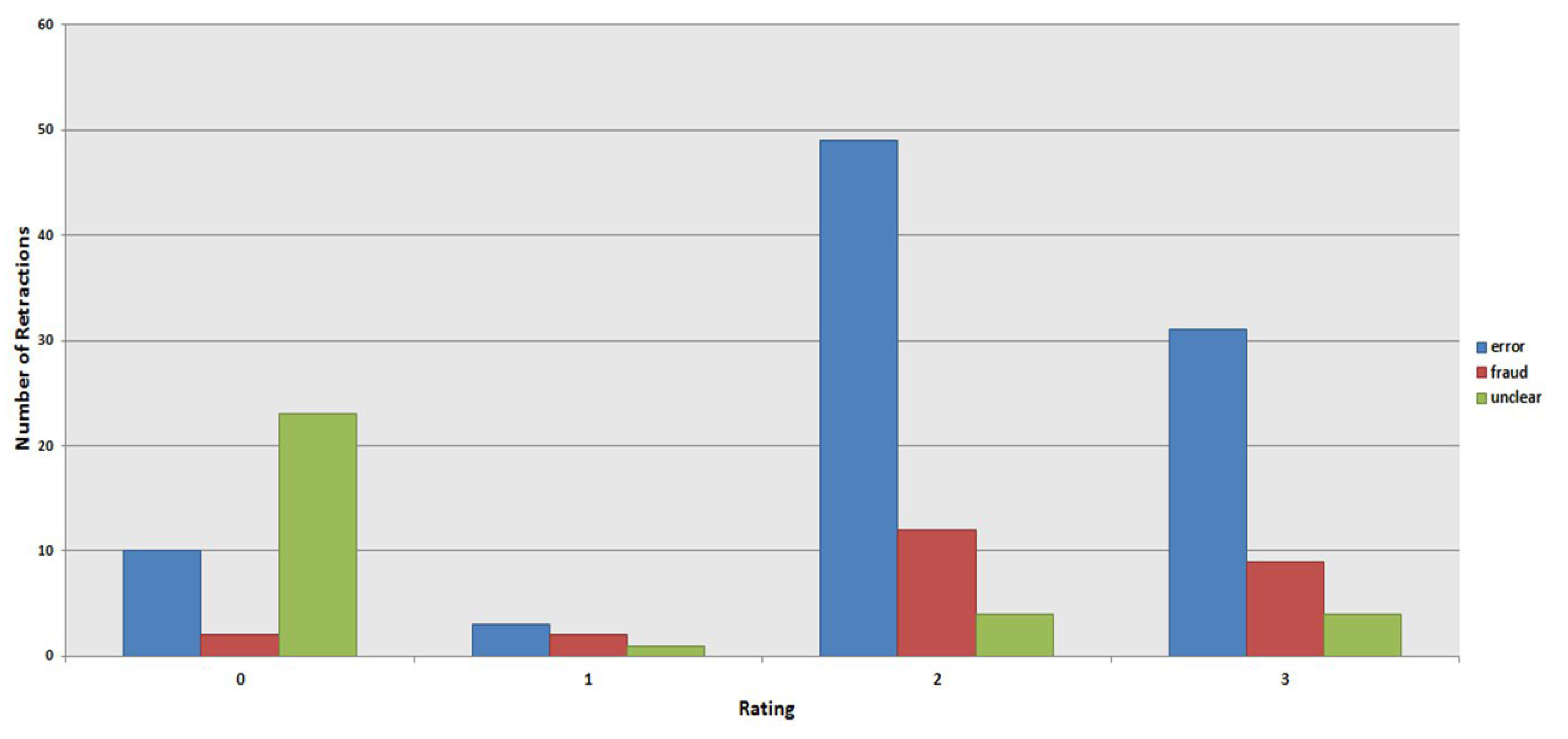

3.1. Analysis of the Rubric

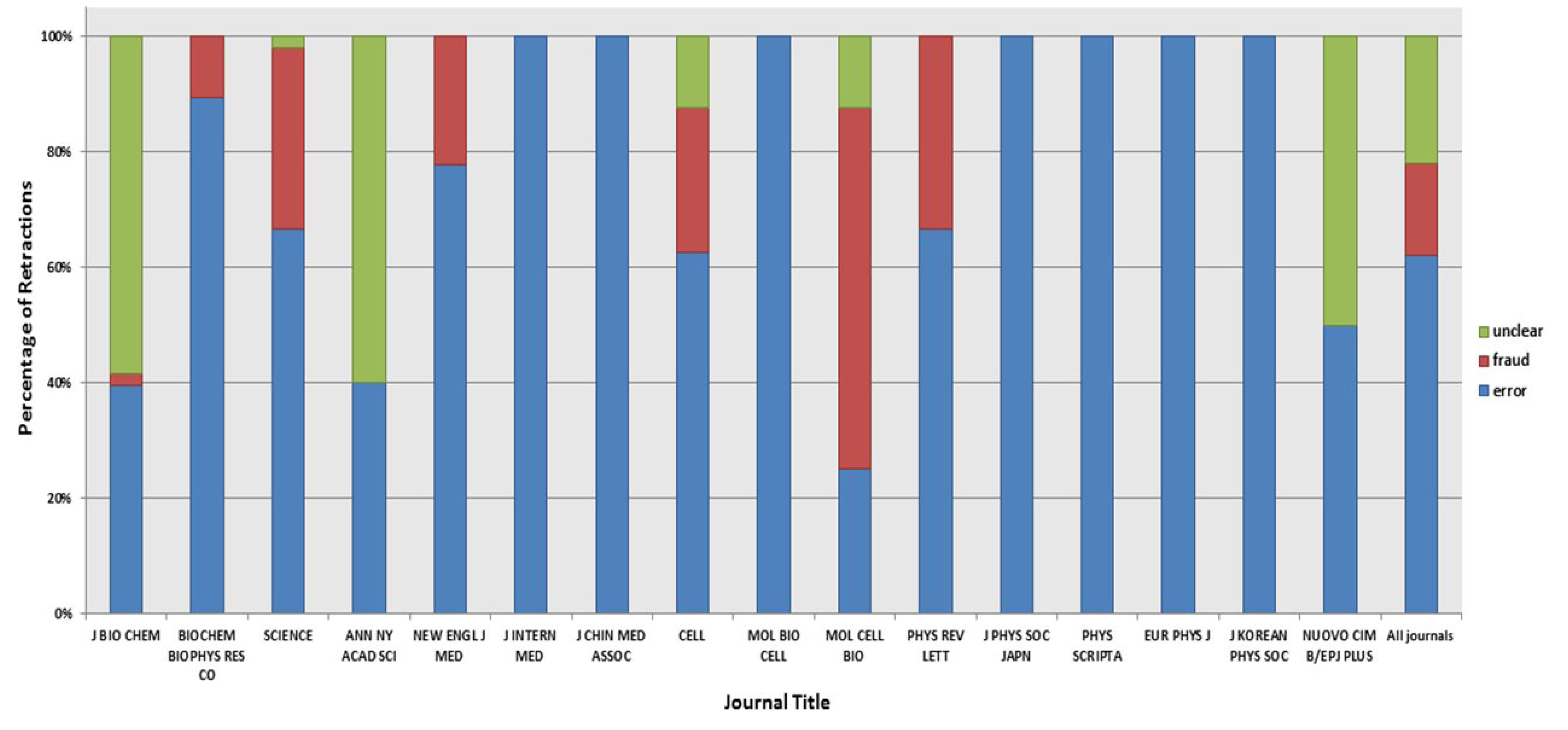

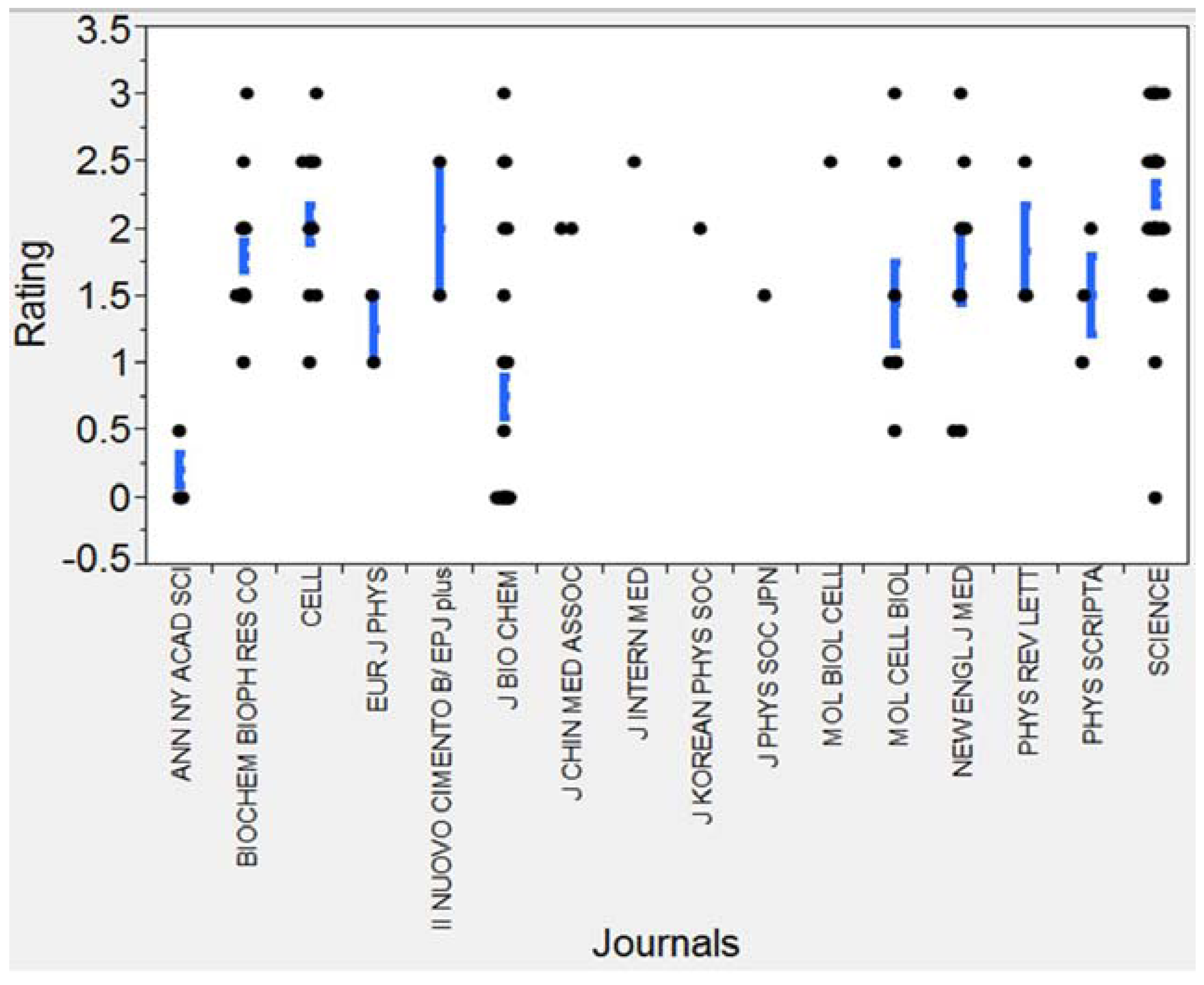

3.2. Analysis of the Retraction Notices

4. Conclusions

Acknowledgments

Conflicts of Interest

© 2014 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).


Bilbrey, E.; O'Dell, N.; Creamer, J. A Novel Rubric for Rating the Quality of Retraction Notices. Publications 2014, 2, 14-26. https://doi.org/10.3390/publications2010014
