Abstract

Aim: To evaluate the reliability of linear and angular measurements taken with different software programs in orthodontics. Materials and Methods: Four software programs from different manufacturers, namely MicroDicom viewer, Photoshop® CS3, AutoCAD®, and Image-Pro®, were used to measure the geometric features of four types of miniscrews from different manufacturers. Each miniscrew type represented a group: Group I, Tomas® (Dentaurum, Ispringen, Germany); Group II, HUBIT® (HUBIT, Gyeonggi-do, Korea); Group III, AbsoAnchor® (Dentos, Daegu, Korea); and Group IV, Creative (Creative, Zhejiang, China). Apical face angle, thread angle, lead angle, flank, pitch depth, and pitch width were measured on 45× scanning electron microscope images of the miniscrew shafts. One assessor measured the seven geometric features of the four types of miniscrews with each of the four software programs in two sessions separated by a three-week interval. Results: Pairwise comparisons within each of the four miniscrew groups showed that the only common result was a significant difference (p < 0.001) between the flank measurements taken with the four software programs. When the measurements of the four types of miniscrews were pooled into one group, a high degree of intra-rater reliability (ICC ranging from 0.9 to 1.0) was found for all seven geometric features with all four software programs. The paired t-test showed no significant differences at the 0.05 level between the first and second measurements, except for a few measurements, including pitch width measured with Image-Pro® (p = 0.012), MicroDicom (p = 0.023), and Photoshop® (p = 0.001). Conclusions: The results did not show absolute superiority of any one software program over the others and suggested an assessor effect. Assessor estimates could have been affected, among other factors, by the design of the miniscrews and the technical features of the software programs.

1. Introduction

Different instruments [1,2] and software programs [3] are available for mathematical measurements. Medical and dental software programs with a wide range of clinical and research applications are now affordable and widely accessible [4,5,6]. Software programs for anatomical identification and measurement have largely replaced manual or anthropometric methods, either to save time and effort or because of allegedly greater precision, accuracy, and/or reliability, and ideally for both reasons [7,8]. Software is sometimes indispensable when mini- and micro-structures must be measured [9]. Previous studies [10,11,12] compared manual versus digital measurements and evaluated the accuracy and reliability of software programs on both soft and hard structures. In a study that compared soft tissue measurements obtained with software programs for surgical purposes against direct clinical measurements, the software programs showed varying accuracy and reproducibility [10]. In a study that assessed the accuracy and reliability of imaging software for upper airway measurements on cone beam computed tomography (CBCT) images, Chen et al. [11] compared three software programs and found that they differed in the accuracy of volume, length, and cross-sectional measurements. Another study of soft tissue measurement accuracy, which compared Dolphin Imaging software® with nasopharyngoscopy, found only weak support for using the software for volume and minimal cross-sectional airway measurements; the software measurements from CBCT scans did not correlate well with those from nasopharyngoscopy [12]. A research group [13] that designed a software program for automated identification of craniofacial landmarks on CBCT images measured the distances between coordinates for each landmark to evaluate the software's accuracy.
Another group [14], which opted to design its own software program, evaluated it for semi-automated measurement of subcutaneous adipose tissue and found high accuracy and reliability of the measurements obtained by well-trained assessors.

Previous studies measured the dimensions of different components of orthodontic appliances, including brackets [15], archwires [16], and miniscrews [17,18,19]. These studies aimed to evaluate the accuracy of the measured dimensions against those given by the manufacturers or to detect correlations between the dimensions and the mechanical performance of the studied components. Linear and angular measurements of small objects present additional difficulty because measurements on a microscopic scale are needed. For these purposes, the scanning electron microscope (SEM) is widely used because it produces high-resolution magnified images that make micro-measurements possible. Because the SEM is recognized as a valuable scientific instrument, multiple factors that could affect measurements taken with it have been studied, including image acquisition, SEM calibration, and the effects of specimen contamination [20,21,22]. Owing to these accuracy and reliability concerns, researchers have studied such factors and tried to develop methods that improve the technique and overcome its shortcomings [9,23,24].

It is evident from the above studies that software programs are increasingly used for mathematical measurements in the medical field. In orthodontics, digital measurements of soft and hard tissues and of devices and appliance components are needed for diagnosis, treatment planning and evaluation, and biomechanical choices, as well as in related research. The impetus for the current study was the observed lack of evaluation of software packages as a factor influencing the accuracy and/or reliability of mathematical measurements; very few studies have evaluated the accuracy and reliability of different software packages for such measurements [11]. Therefore, this study was conducted to evaluate and compare the reliability of linear and angular measurements taken with different software programs used in orthodontics.

2. Materials and Methods

Miniscrews: Four types of miniscrews from different manufacturers were used in this study. Each type represented a group: Group I, Tomas® (Dentaurum, Ispringen, Germany); Group II, HUBIT® miniscrew (HUBIT, Gyeonggi-do, Korea); Group III, AbsoAnchor® (Dentos, Daegu, Korea); and Group IV, Creative (Creative, Zhejiang, China). Each group included 10 miniscrews. Although the miniscrews from the different manufacturers differed in their linear and angular geometric measurements, all had conical shafts 1.6 mm in diameter and 6.0 mm in length.

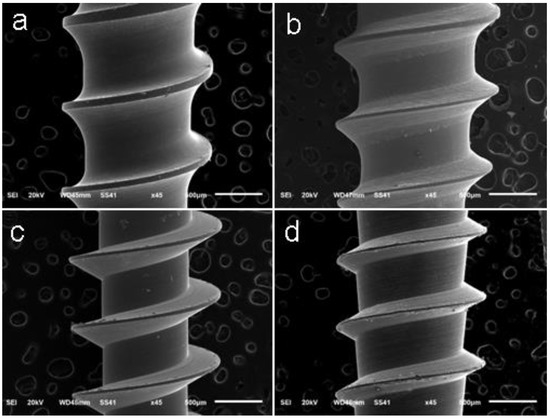

SEM images: The shafts of the miniscrews were imaged on a micrometric scale with an SEM (model JSM-6510LV; JEOL, Tokyo, Japan) at 45× magnification (Figure 1).

Figure 1.

Scanning electron microscope (SEM) images showing the thread details of the miniscrews: (a) Tomas®, (b) HUBIT®, (c) AbsoAnchor®, and (d) Creative at 45× magnification.

Software programs: Four software programs from different manufacturers were used in this study: MicroDicom viewer (MicroDicom, Sofia, Bulgaria), Photoshop® CS3 (Adobe Inc., San Jose, CA, USA), AutoCAD® 2010 (Autodesk, San Rafael, CA, USA), and Image-Pro® (Media Cybernetics, Rockville, MD, USA).

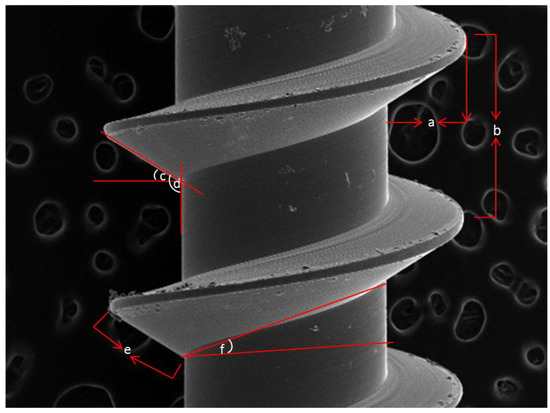

Measurements: The same linear and angular measurements (Figure 2) were taken for each miniscrew in each of the four groups. The angular measurements were apical face angle, thread angle, and lead angle; the linear measurements were flank, pitch depth, and pitch width. Definitions and details of the measurements have been given in previous publications [19,25].

Figure 2.

Linear and angular measurements of the geometric features of the miniscrews: (a) thread depth, the distance from the tip to the root of the thread, measured perpendicular to the longitudinal axis; (b) thread pitch, the distance from the center of one thread crest to the center of the next; (c) thread angle, the angle between the flank of a thread and the surface adjacent to it; (d) apical face angle, the angle between the flank of a thread and a line perpendicular to the axis of the thread; (e) flank of the thread, the surface of the thread that connects its tip with its root; (f) lead angle, the angle made by the helix of the thread with a plane perpendicular to the axis, measured in an axial plane. Thread shape factor (TSF) was calculated as the ratio of the mean thread depth to the pitch, expressed as a percentage.
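The TSF definition in the caption is short enough to state as a function. The sketch below assumes that "percentage between the mean thread depth and pitch" means the mean depth divided by the mean pitch, multiplied by 100; the function name and the example readings are hypothetical and are not data from this study.

```python
from statistics import mean
from typing import Sequence


def thread_shape_factor(depths: Sequence[float], pitches: Sequence[float]) -> float:
    """Thread shape factor (%), assumed here to be mean depth / mean pitch * 100.

    Depths and pitches must share one unit (e.g. µm); the unit cancels out.
    """
    mean_pitch = mean(pitches)
    if mean_pitch <= 0:
        raise ValueError("mean pitch must be positive")
    return 100.0 * mean(depths) / mean_pitch


# Hypothetical readings in µm, not data from this study.
print(thread_shape_factor([200.0, 300.0], [800.0, 800.0]))  # prints 31.25
```

Because TSF is a ratio, it is independent of the image calibration, provided depth and pitch are measured on the same calibrated image.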

Each image was calibrated in each program before measurement, because the image scale had to be set. To calibrate an image, calibration marks were placed on two points a known distance apart, and the actual distance between them was entered into the program. The assessor measured the seven geometric features of the four types of miniscrews with each of the four software programs, in two sessions separated by a three-week interval.
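The two-point calibration described above amounts to a single scale factor that converts pixel distances into micrometres. The following sketch shows one way to apply it, assuming square pixels and point coordinates read off the image in pixels (Python 3.8+ for `math.dist`); the function names and coordinates are hypothetical and do not describe how any of the four programs works internally.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) image coordinates in pixels


def scale_um_per_px(mark_a: Point, mark_b: Point, known_um: float) -> float:
    """Micrometres per pixel from two calibration marks a known distance apart."""
    px = math.dist(mark_a, mark_b)
    if px == 0:
        raise ValueError("calibration marks must not coincide")
    return known_um / px


def length_um(a: Point, b: Point, scale: float) -> float:
    """Calibrated length of segment a-b (e.g. a flank or a pitch) in micrometres."""
    return math.dist(a, b) * scale


def angle_deg(vertex: Point, a: Point, b: Point) -> float:
    """Unsigned angle at `vertex` between rays vertex->a and vertex->b, in degrees.

    With square pixels, angles are unchanged by the calibration scale.
    """
    v1 = (a[0] - vertex[0], a[1] - vertex[1])
    v2 = (b[0] - vertex[0], b[1] - vertex[1])
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return abs(math.degrees(math.atan2(cross, dot)))


# Hypothetical calibration: two marks 500 px apart, known to be 1000 µm apart.
scale = scale_um_per_px((100.0, 50.0), (600.0, 50.0), 1000.0)  # 2.0 µm per pixel
print(length_um((0.0, 0.0), (30.0, 40.0), scale))               # prints 100.0
print(round(angle_deg((0.0, 0.0), (1.0, 0.0), (1.0, 1.0)), 6))  # prints 45.0
```

Using `atan2` of the cross and dot products, rather than `acos` of a normalized dot product, keeps the angle numerically stable when the two rays are nearly parallel, which matters for shallow thread features.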

Statistical analyses: Descriptive statistics included minimum, maximum, mean, and standard deviation. The Kolmogorov-Smirnov test verified the normality of the data distribution. For normally distributed quantitative variables, the F-test (ANOVA) was used to compare the groups, and Tukey's post hoc test was used for pairwise comparisons. The intraclass correlation coefficient (ICC) and the paired t-test were used for reliability assessment. The ICC form selected for the current study was based on a two-way random effects model, single-assessor type, and consistency definition. Mean estimates and 95% confidence intervals (CI) are reported for each ICC. The ICCs were interpreted using the system suggested by McGraw and Wong [26]: less than 0.75 Z, poor agreement; 0.75 to less than 0.90 Z, moderate agreement; 0.90 or greater Z, high agreement. Fisher's Z transformation [27] is one of the best known and most commonly used normalization techniques. A p value less than 0.05 was considered statistically significant. Data were analyzed with SPSS software, version 20.0 (IBM Corp., Armonk, NY, USA).
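The reliability statistics named above can be reproduced from their textbook definitions, which may help readers check values outside SPSS. The sketch below computes the single-measures consistency ICC from two-way ANOVA mean squares (ICC(C,1) in McGraw and Wong's notation; its point estimate is the same whether the session effect is treated as random or fixed), Fisher's Z transformation of a coefficient, and the paired t statistic. It assumes complete data, with every miniscrew measured in both sessions; confidence intervals and p-values are omitted, and the function names and readings are illustrative only.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def icc_c1(sessions: Sequence[Sequence[float]]) -> float:
    """Single-measures consistency ICC, ICC(C,1), from a two-way ANOVA.

    `sessions` holds k sequences (one per session), each listing the same
    n miniscrews in the same order.
    """
    k, n = len(sessions), len(sessions[0])
    if k < 2 or n < 2 or any(len(s) != n for s in sessions):
        raise ValueError("need at least 2 sessions of equal length, n >= 2")
    values = [x for s in sessions for x in s]
    grand = mean(values)
    row_means = [mean(s[i] for s in sessions) for i in range(n)]  # per miniscrew
    col_means = [mean(s) for s in sessions]  # per session
    ss_total = sum((x - grand) ** 2 for x in values)
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_error = ss_total - ss_rows - ss_cols  # residual after both main effects
    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    denom = ms_rows + (k - 1) * ms_error
    if denom == 0:
        raise ValueError("ICC undefined: no variation in the data")
    return (ms_rows - ms_error) / denom


def fisher_z(r: float) -> float:
    """Fisher's Z transformation, z = atanh(r); defined only for -1 < r < 1."""
    return math.atanh(r)


def paired_t(first: Sequence[float], second: Sequence[float]) -> float:
    """Paired t statistic for session 2 minus session 1 (df = n - 1)."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [b - a for a, b in zip(first, second)]
    sd = stdev(diffs)
    if sd == 0:
        raise ValueError("t undefined: all differences are identical")
    return mean(diffs) / (sd / math.sqrt(len(diffs)))


# Hypothetical two-session readings for four miniscrews (not study data).
s1 = [10.0, 12.0, 14.0, 16.0]
s2 = [11.0, 12.0, 15.0, 16.0]
print(round(icc_c1([s1, s2]), 4))  # prints 0.973 (exactly 36/37)
print(round(paired_t(s1, s2), 4))  # prints 1.7321 (sqrt(3))
```

Because the consistency form removes the session (column) effect before estimating the residual, a constant shift between sessions leaves ICC(C,1) unchanged while it can still make the paired t-test significant; this is one reason the two tests complement each other. In practice, `scipy.stats.ttest_rel` returns both the paired t statistic and its p-value.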

3. Results

Table 1, Table 2, Table 3 and Table 4 show the minimum, maximum, mean, standard deviation, and CI of the geometric feature measurements of the selected miniscrews. Each table presents the descriptive statistics of the measurements of one miniscrew group taken with the four software programs, together with the results of one-way ANOVA and Tukey's post hoc test. The only common finding was a significant difference (p < 0.001) in flank measurements between the software programs for each of the four miniscrew types.
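For readers who want to see what the F-test in these tables compares, the following is a minimal sketch of the one-way ANOVA F statistic for several groups of readings (here, one group per software program). It treats the groups as independent samples, as the one-way ANOVA named in the Methods does; the function name and the toy numbers are hypothetical. A library routine such as `scipy.stats.f_oneway` returns the same F together with its p-value, and recent SciPy versions provide `scipy.stats.tukey_hsd` for Tukey's pairwise comparisons.

```python
from statistics import mean
from typing import Sequence


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> float:
    """One-way ANOVA F = MS_between / MS_within for independent groups."""
    g = len(groups)
    n_total = sum(len(grp) for grp in groups)
    if g < 2 or n_total - g < 1:
        raise ValueError("need at least 2 groups and more observations than groups")
    grand = mean(x for grp in groups for x in grp)
    group_means = [mean(grp) for grp in groups]
    # Spread of the group means around the grand mean, weighted by group size.
    ss_between = sum(len(grp) * (m - grand) ** 2 for grp, m in zip(groups, group_means))
    # Spread of each reading around its own group mean.
    ss_within = sum((x - m) ** 2 for grp, m in zip(groups, group_means) for x in grp)
    if ss_within == 0:
        raise ValueError("F undefined: no within-group variation")
    return (ss_between / (g - 1)) / (ss_within / (n_total - g))


# Toy readings of one feature from three programs (not study data).
print(one_way_anova_f([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]))  # prints 27.0
```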

Table 1.

Comparison between the measurements of Group I miniscrews taken with the four software programs.

Table 2.

Comparison between the measurements of Group II miniscrews taken with the four software programs.

Table 3.

Comparison between the measurements of Group III miniscrews taken with the four software programs.

Table 4.

Comparison between the measurements of Group IV miniscrews taken with the four software programs.

The ICC for intra-rater reliability ranged from poor to high, while the paired t-test showed no significant difference between the two rounds of measurements at the 0.05 level, except for a few readings (Table 5, Table 6, Table 7 and Table 8). When the measurements of all four types of miniscrews were combined (Table 9), a high degree of intra-rater reliability was found for all seven geometric features with all four software programs, with ICC values ranging from 0.9 to 1.0. However, the paired t-test showed significant differences between the first and second measurements of pitch width taken with Image-Pro® (p = 0.012), MicroDicom (p = 0.023), and Photoshop® (p = 0.001).

Table 5.

Intra-assessor reliability of the geometric feature measurements taken with the AutoCAD® software program for each miniscrew type.

Table 6.

Intra-assessor reliability of the geometric feature measurements taken with the Image-Pro® software program for each miniscrew type.

Table 7.

Intra-assessor reliability of the geometric feature measurements taken with the MicroDicom software program for each miniscrew type.

Table 8.

Intra-assessor reliability of the geometric feature measurements taken with the Photoshop® software program for each miniscrew type.

Table 9.

Intra-assessor reliability of the geometric feature measurements combined for the four types of miniscrews, by software program.

4. Discussion

Mathematical measurements in this study could have been affected by the scanned images, the software program, and/or the assessor. Frederick et al. [2] tested the accuracy of SEM linear measurements on dental implants and found values within a margin of those given by the manufacturer. They suggested that the SEM, optical microscopy, and micro-computed tomography performed similarly. The accuracy of measurements taken with different software programs has been investigated before, and many studies in the medical field have addressed soft tissue measurements. Quieregatto et al. [10] compared the precision and accuracy of measurements obtained with AutoCAD®, ImageTool®, and Photoshop® against clinical soft tissue measurements. Precision, which reflects the reproducibility of measurements, was best with AutoCAD® and lowest with ImageTool®, while Photoshop® showed intermediate precision. Another study [11] of upper airway measurements with three software programs found high reliability for all three programs. However, the authors reported inaccurate measurements: the three programs underestimated all tested parameters.

In the current study, no specific pattern was noticed when the mean values of each geometric feature in each miniscrew group were compared between the four software programs (Table 1, Table 2, Table 3 and Table 4). The group that included Tomas® miniscrews showed significant differences between the four programs for all measured features except pitch width. The Tomas® miniscrews were characterized by curved, indefinite points and line angles; therefore, variations could have arisen when locating the points used to draw the lines and planes required for linear and angular measurements. Miniscrews in the other groups showed more definite points and line angles, to varying degrees, and the statistical results accordingly differed among the groups. In this context, flank was the only feature that differed significantly in all four miniscrew types. The scans showed characteristically indefinite points at the tip of the thread in groups I and II, or at both the tip and root of the thread in groups III and IV, which made it difficult to locate the points required for flank measurement precisely and repeatably. The small size of the flank, which ranged from 228.000 to 464.832 µm, should also be considered in this regard. Manufacturing specifications seemed to be a strong factor influencing the measurements obtained with each software program. Based on these results, we could not establish the superiority of any one software program over the others.

Reliability tests are important for building confidence in measurements and, consequently, in any further steps based on them. The ICC can test inter-assessor, intra-assessor, or measurement-remeasurement reliability [28]. The current study relied on one assessor, who measured the seven geometric features of the four types of miniscrews with the four software programs twice, in two sessions separated by three weeks. Therefore, reliability reflected variation between measurements made by the same assessor in different sessions.

In the current study, both the ICC and the paired t-test were used: the ICC showed the strength of association between the two rounds of measurements, while the paired t-test revealed differences between the first and second measurements. Previously, the intra-assessor reliability of mathematical measurements calculated with different software packages had varied [5]. Interpreting ICC results is critical because there are no agreed standard values indicating the different degrees of reliability [25]. Lower ICC values in the current study did not necessarily indicate greater variability [25,29]. The negative ICC values observed (Table 5, Table 6, Table 7 and Table 8) could be attributed to small differences between the miniscrews rather than to differences between the assessor's estimates in the different sessions. These results emphasize the effect of the design of the measured object on the assessor's estimates of the location of points and planes for measurement. Assessor estimates are important because these software programs are not self-reporting. Lower ICC values could also be due to the small sample size. Taking advantage of the small differences in the measurements between the four groups of miniscrews, the measurements of each variable for the four miniscrew types were combined (Table 9), and the statistical analysis then showed high intra-assessor reliability for all seven geometric features. The paired t-test showed no significant differences at the 0.05 level between the first and second measurements, except for very few measurements. Reliability was the key focus of this research; because measurements can be reliable but not accurate, studies investigating the accuracy of the measurements are needed. Such studies would require a gold standard for comparison, namely the dimensions given by the manufacturers.
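The link between negative ICC values and small differences between miniscrews can be made explicit with the textbook form of the single-measures consistency ICC, written in terms of two-way ANOVA mean squares. Here $MS_R$ is the between-miniscrew (row) mean square, $MS_E$ the residual mean square, and $k$ the number of sessions; this is offered as a worked illustration, not as the exact SPSS output.

$$
\mathrm{ICC}(C,1) = \frac{MS_R - MS_E}{MS_R + (k-1)\,MS_E},
\qquad
k = 2:\quad \mathrm{ICC}(C,1) = \frac{MS_R - MS_E}{MS_R + MS_E}
$$

With two sessions the denominator is positive, so the coefficient is negative exactly when $MS_R < MS_E$, that is, when the spread between the miniscrews within a group is smaller than the session-to-session residual. For a fixed $MS_E$, a larger $MS_R$ pushes the coefficient toward 1, which may help explain why the ICC values rose once the measurements of the four groups were pooled.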

The technical features of the software could affect assessor estimates and, consequently, measurement reliability; they could either enhance or reduce the effectiveness and efficiency of the software. Technical features differed between the four software programs. For example, Image-Pro® had a local zoom feature that was used, when needed, to magnify a specific area to facilitate accurate positioning of points and drawing of lines. Photoshop® and AutoCAD® could draw perpendicular lines automatically, an advantage over MicroDicom, in which lines had to be drawn manually. Image-Pro® displayed X and Y axes, which helped in drawing perpendicular lines. The assessor estimates could therefore be affected by the design of the object, the technical features of the software program, and other factors, including, but not limited to, computer familiarity and level of training on the software program. In the current study, the individual MicroDicom measurements were the least precise, because the software reported values rounded to a maximum of two digits, whereas the other software programs reported values with more than five digits. However, this feature did not seem to influence the statistical comparisons. Nevertheless, it might need to be considered when the precision of measurements is important. Extreme precision is not always a plus; it can complicate reading or give a misleading impression of the exactness of the measurements [30]. Scientific papers should above all be written in a comprehensible, logical way. Presenting data can be tricky because accuracy and precision must be balanced against simplicity, which includes rounding to a reasonable number of digits [31].
Precision and accuracy of measurements are two different parameters: precision indicates the amount of information conveyed by a number, while accuracy indicates its correctness. Put another way, precision reflects reliability, as seen in the repeatability of the measurements, while accuracy reflects how close a measurement is to the true value. Therefore, greater precision does not imply greater accuracy, nor vice versa; an instrument or device could produce the same inaccurate measurement over and over again. This concern is particularly important when planning miniscrew-assisted palatal expanders or other miniscrew-supported orthodontic devices with a completely digital workflow [32]. In these cases, measurement accuracy is especially important because it allows the clinician to choose the diameter and type of miniscrew, characteristics that strongly influence the mechanical behavior of the screws [33,34].
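The distinction between precision and accuracy can be illustrated numerically: for repeated readings of one feature, the standard deviation summarizes precision (repeatability), while the bias of the mean relative to a reference value summarizes accuracy. The sketch below assumes that such a reference is available (for example, a manufacturer's specified dimension, as suggested above for future accuracy studies); the function name and all readings are invented for illustration.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def bias_and_sd(readings: Sequence[float], reference: float) -> Tuple[float, float]:
    """Return (bias, SD): bias = mean - reference (accuracy), SD of repeats (precision)."""
    return mean(readings) - reference, stdev(readings)


reference = 10.0  # hypothetical true dimension
precise_inaccurate = [10.5, 10.5, 10.6, 10.4]  # tight cluster, offset from the truth
accurate_imprecise = [9.0, 11.0, 10.5, 9.5]    # centred on the truth, widely spread

for label, data in [("precise, inaccurate", precise_inaccurate),
                    ("accurate, imprecise", accurate_imprecise)]:
    bias, sd = bias_and_sd(data, reference)
    print(f"{label}: bias = {bias:.2f}, SD = {sd:.2f}")
```

A reliability analysis alone (ICC, paired t-test) would rate the first instrument highly, because its repeated readings agree closely, even though every reading is off by about the same amount.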

One of the biggest considerations when choosing a software package is its user friendliness. In our subjective experience, the four software packages differed greatly in user friendliness. Some technical characteristics could influence the efficiency and effectiveness of a software package and hence its user friendliness. For example, measurements taken with Image-Pro® could be exported to Excel or text files, and Photoshop® exported its output to a text file, whereas MicroDicom handled only image files. Other general characteristics that make a software package attractive to users include ease of installation and updating, intuitive and simple navigation, and technical support. However, comparing the user friendliness of the software packages was not an aim of the current study.

Because mathematical measurements could be affected by the software program used, clinicians need evidence of the reliability of software programs for such applications to help them understand and compare software packages. Therefore, the present study focused on the software programs. Miniscrews, one component of orthodontic appliances, were used in the current study, but software applications for mathematical measurements in orthodontics also extend to hard and soft tissue measurements.

5. Conclusions

- The results did not show absolute superiority of any one software program over the others, and the reliability tests suggested an assessor effect.

- Assessor estimates could have been affected by the design of the miniscrews, the technical features of the software programs, and other general factors.

- Even when a software program did not give highly precise individual measurements, the reliability of the measurements was not compromised.

- Studies focusing on the accuracy of the measurements are recommended.

- Studies on soft tissue measurements are also needed.

Author Contributions

Investigation, M.A.M. and E.S.R.; methodology, M.A.M. and E.S.R.; text revision, M.F.S. and A.S.; software, E.S.R.; data curation, E.S.R.; supervision, M.A.M.; validation, M.A.M.; visualization, M.A.M. and E.S.R.; writing, M.A.M.; language review, E.S.R.; formal analysis, M.A.M.; conceptualization, M.A.M.; resources, M.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

Data Availability Statement

All data are available from the authors upon request.

References

- Kamburoglu, K.; Barenboim, S.F.; Aritürk, T.; Kaffe, I. Quantitative measurements obtained by micro-computed tomography and confocal laser scanning microscopy. Dentomaxillofac. Radiol. 2008, 37, 385–391.

- Frederick, K.K.; Karla, Z.; Thiago, d.A.P.N.C.; Murilo, N.d.O.; Caio, C.D.R.; Flávio, D.d.N. Comparative analysis of Optical Microscopy, Scanning Electron Microscopy, and Micro-Computed Tomography on measurements. BJOS 2017, 16, e17058.

- Silva, A.A.L.S.; Franco, A.; Fernandes, Â.; Costa, C.; Barbosa, J.S.; Westphalen, F.H. Accuracy of linear measurements performed with two imaging software in cone-beam computed tomography scans of dry human mandibles. An. Acad. Bras. Cienc. 2017, 89, 2865–2873.

- El, H.; Palomo, J.M. Measuring the airway in 3 dimensions: A reliability and accuracy study. Am. J. Orthod. Dentofac. Orthop. 2010, 137, e1–e9.

- Weissheimer, A.; Menezes, L.M.; Sameshima, G.T.; Enciso, R.; Pham, J.; Grauer, D. Imaging software accuracy for 3-dimensional analysis of the upper airway. Am. J. Orthod. Dentofac. Orthop. 2012, 142, 801–813.

- Mantovani, E.; Castroflorio, E.; Rossini, G.; Garino, F.; Cugliari, G.; Deregibus, A.; Castroflorio, T. Scanning electron microscopy evaluation of aligner fit on teeth. Angle Orthod. 2018, 88, 596–601.

- Döler, W.; Steinhöfel, N.; Jäger, A. Digital image processing techniques for cephalometric analysis. Comput. Biol. Med. 1991, 21, 23–33.

- Farooq, M.U.; Khan, M.A.; Imran, S.; Sameera, A.; Qureshi, A.; Ahmed, S.A.; Kumar, S.; Rahman, M.A. Assessing the reliability of digitalized cephalometric analysis in comparison with manual cephalometric analysis. J. Clin. Diagn. Res. 2016, 10, ZC20–ZC23.

- Jin, P.; Li, X. Correction of image drift and distortion in a scanning electron microscopy. J. Microsc. 2015, 260, 268–280.

- Quieregatto, P.R.; Hochman, B.; Furtado, F.; Machado, A.F.; Sabino Neto, M.; Ferreira, L.M. Image analysis software versus direct anthropometry for breast measurements. Acta Cir. Bras. 2014, 29, 688–695.

- Chen, H.; van Eijnatten, M.; Wolff, J.; de Lange, J.; van der Stelt, P.F.; Lobbezoo, F.; Aarab, G. Reliability and accuracy of three imaging software packages used for 3D analysis of the upper airway on cone beam computed tomography images. Dentomaxillofac. Radiol. 2017, 46, 20170043.

- Pachêco-Pereira, C.; Alsufyani, N.; Major, M.; Palomino-Gómez, S.; Pereira, J.R.; Flores-Mir, C. Correlation and reliability of cone-beam computed tomography nasopharyngeal volumetric and area measurements as determined by commercial software against nasopharyngoscopy-supported diagnosis of adenoid hypertrophy. Am. J. Orthod. Dentofac. Orthop. 2017, 152, 92–103.

- Shahidi, S.; Bahrampour, E.; Soltanimehr, E.; Zamani, A.; Oshagh, M.; Moattari, M.; Mehdizadeh, A. The accuracy of a designed software for automated localization of craniofacial landmarks on CBCT images. BMC Med. Imaging 2014, 14, 32.

- Störchle, P.; Müller, W.; Sengeis, M.; Ahammer, H.; Fürhapter-Rieger, A.; Bachl, N.; Lackner, S.; Mörkl, S.; Holasek, S. Standardized ultrasound measurement of subcutaneous fat patterning: High reliability and accuracy in groups ranging from lean to obese. Ultrasound Med. Biol. 2017, 43, 427–438.

- Cotrim-Ferreira, A.; Cotrim-Ferreira, F.; Vellini-Ferreira, F.; Peron, D.F.; Carvalho, C.; Torres, F.C. Evaluation of cervico-occlusal dimensions of maxillary and mandibular incisor brackets for lingual orthodontics. J. Contemp. Dent. Pract. 2014, 15, 306–311.

- Dolci, G.S.; Spohr, A.M.; Zimmer, E.R.; Marchioro, E.M. Assessment of the dimensions and surface characteristics of orthodontic wires and bracket slots. Dent. Press J. Orthod. 2013, 18, 69–75.

- da Cunha, A.C.; Marquezan, M.; Lima, I.; Lopes, R.T.; Nojima, L.I.; Sant’Anna, E.F. Influence of bone architecture on the primary stability of different mini-implant designs. Am. J. Orthod. Dentofac. Orthop. 2015, 147, 45–51.

- da Cunha, A.C.; Marquezan, M.; Nojima, L.I.; Sant’Anna, E.F. Evaluation of mechanical performance of orthodontic mini-implants with distinct designs. Iran. J. Orthop. Surg. 2017, 12, e6527.

- Radwan, E.S.; Montasser, M.A.; Maher, A. Influence of geometric design characteristics on primary stability of orthodontic miniscrews. J. Orofac. Orthop. 2018, 79, 191–203.

- Postek, M.T. Critical Issues in Scanning Electron Microscope Metrology. J. Res. Natl. Inst. Stand. Technol. 1994, 99, 641–671.

- Postek, M.T.; Vladár, A.E. Does your SEM really tell the truth?—How would you know? Part 1. Scanning 2013, 35, 355–361.

- Postek, M.T.; Vladár, A.E.; Purushotham, K.P. Does your SEM really tell the truth? How would you know? Part 2. Scanning 2014, 36, 347–355.

- Thong, J.T.; Lee, K.W.; Wong, W.K. Reduction of charging effects using vector scanning in the scanning electron microscope. Scanning 2001, 23, 395–402.

- de Faria, M.G.; Haddab, Y.; Le Gorrec, Y.; Lutz, P. Influence of mechanical noise inside a scanning electron microscope. Rev. Sci. Instrum. 2015, 86, 045105.

- Lee, K.M.; Lee, J.; Chung, C.Y.; Ahn, S.; Sung, K.H.; Kim, T.W.; Lee, H.J.; Park, M.S. Pitfalls and important issues in testing reliability using intraclass correlation coefficients in orthopaedic research. Clin. Orthop. Surg. 2012, 4, 149–155.

- McGraw, K.O.; Wong, S.P. Forming inferences about some intraclass correlation coefficients. Psychol. Methods 1996, 1, 30–46.

- Fisher, R.A. On the probable error of a coefficient of correlation deduced from a small sample. Metron 1921, 1, 3–32.

- Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163. Erratum in: J. Chiropr. Med. 2017, 16, 346.

- Portney, L.G.; Watkins, M.P. Foundations of Clinical Research: Applications to Practice, 2nd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2000.

- Cole, T.J. Too many digits: The presentation of numerical data. Arch. Dis. Child. 2015, 100, 608–609.

- Altman, D.G.; Bland, J.M. Statistics notes 15. Presentation of numerical data. BMJ 1996, 312, 572.

- Lo Giudice, A.; Quinzi, V.; Ronsivalle, V.; Martina, S.; Bennici, O.; Isola, G. Description of a Digital Work-Flow for CBCT-Guided Construction of Micro-Implant Supported Maxillary Skeletal Expander. Materials (Basel) 2020, 13, 1815.

- Scribante, A.; Montasser, M.A.; Radwan, E.S.; Bernardinelli, L.; Alcozer, R.; Gandini, P.; Sfondrini, M.F. Reliability of Orthodontic Miniscrews: Bending and Maximum Load of Different Ti-6Al-4V Titanium and Stainless Steel Temporary Anchorage Devices (TADs). Materials (Basel) 2018, 11, 1138.

- Sfondrini, M.F.; Gandini, P.; Alcozer, R.; Vallittu, P.K.; Scribante, A. Failure load and stress analysis of orthodontic miniscrews with different transmucosal collar diameter. J. Mech. Behav. Biomed. Mater. 2018, 87, 132–137.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).