Accurate Passive 3D Polarization Face Reconstruction under Complex Conditions Assisted with Deep Learning

Abstract

1. Introduction

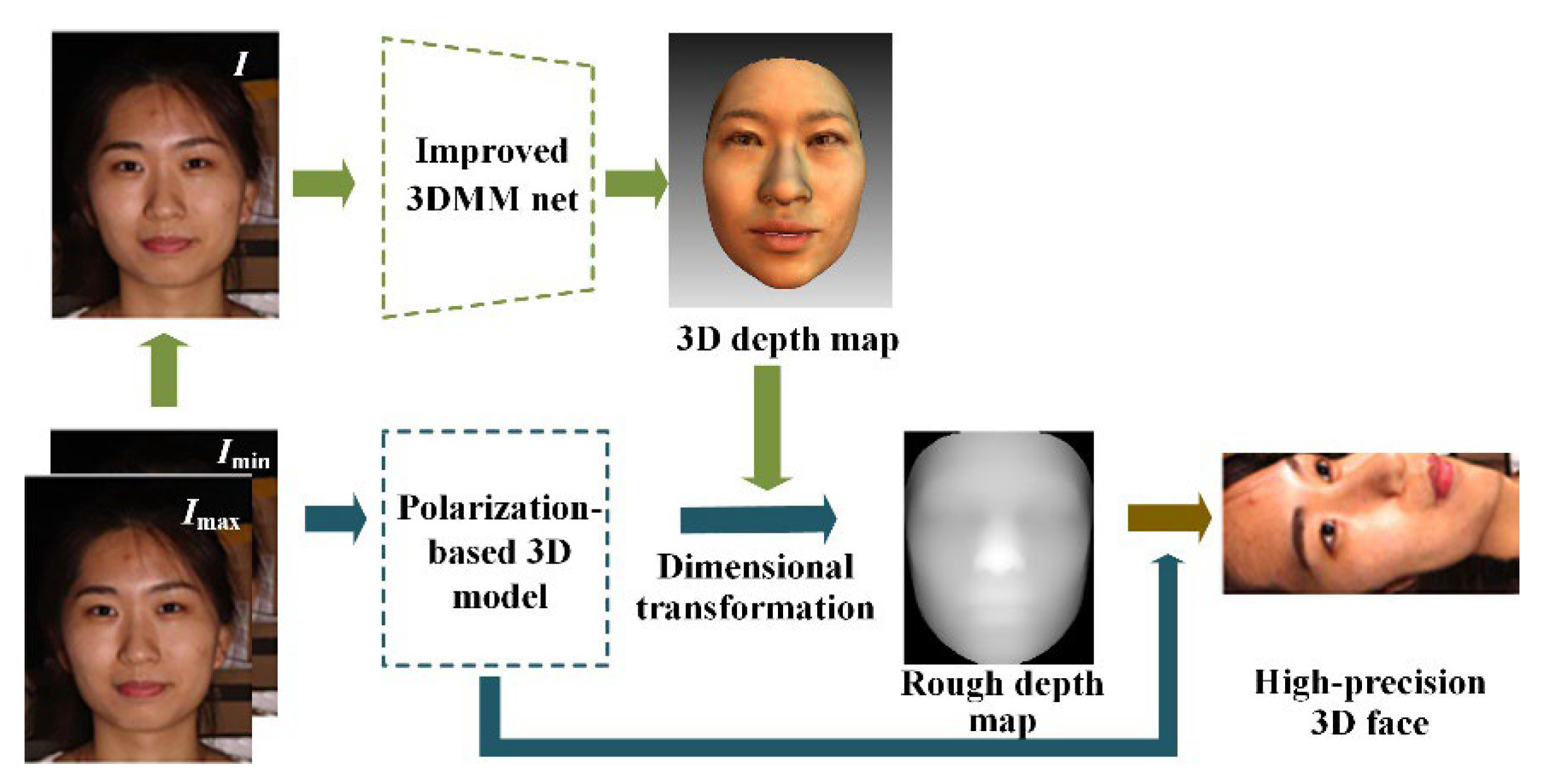

2. Models

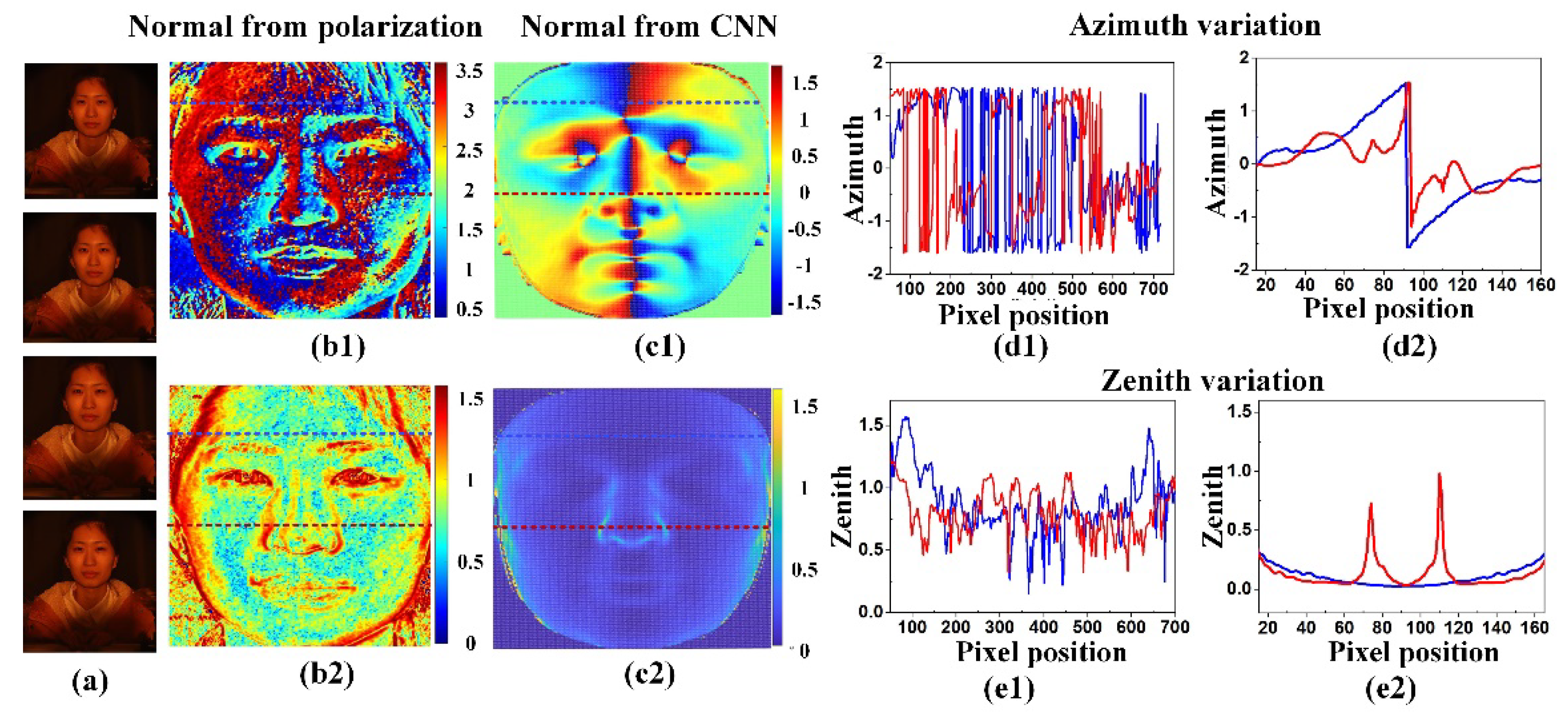

2.1. Normal Estimation from Polarization

2.2. Normal Constraint from CNN-Based 3DMM

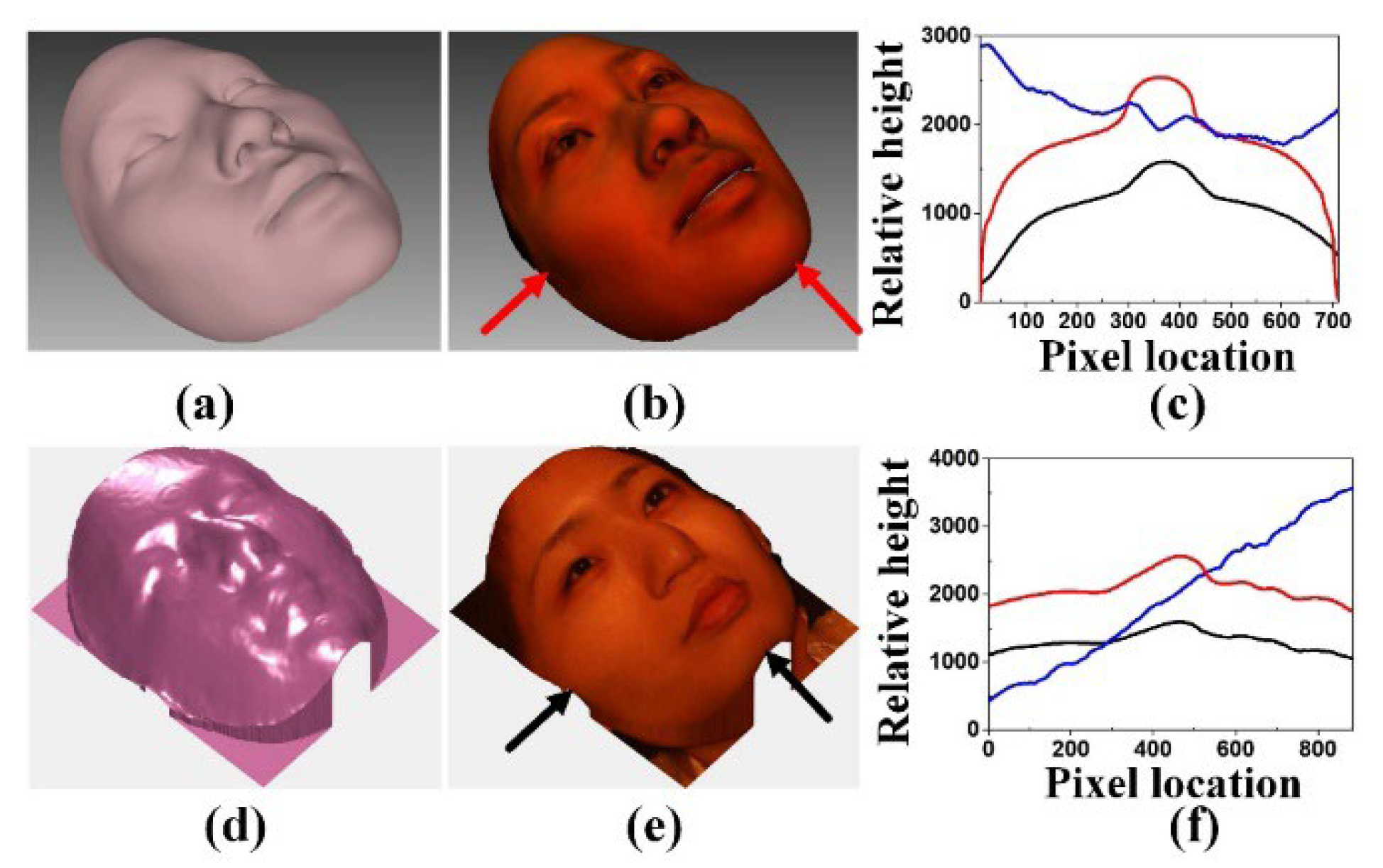

2.3. Reconstructing 3D Profile

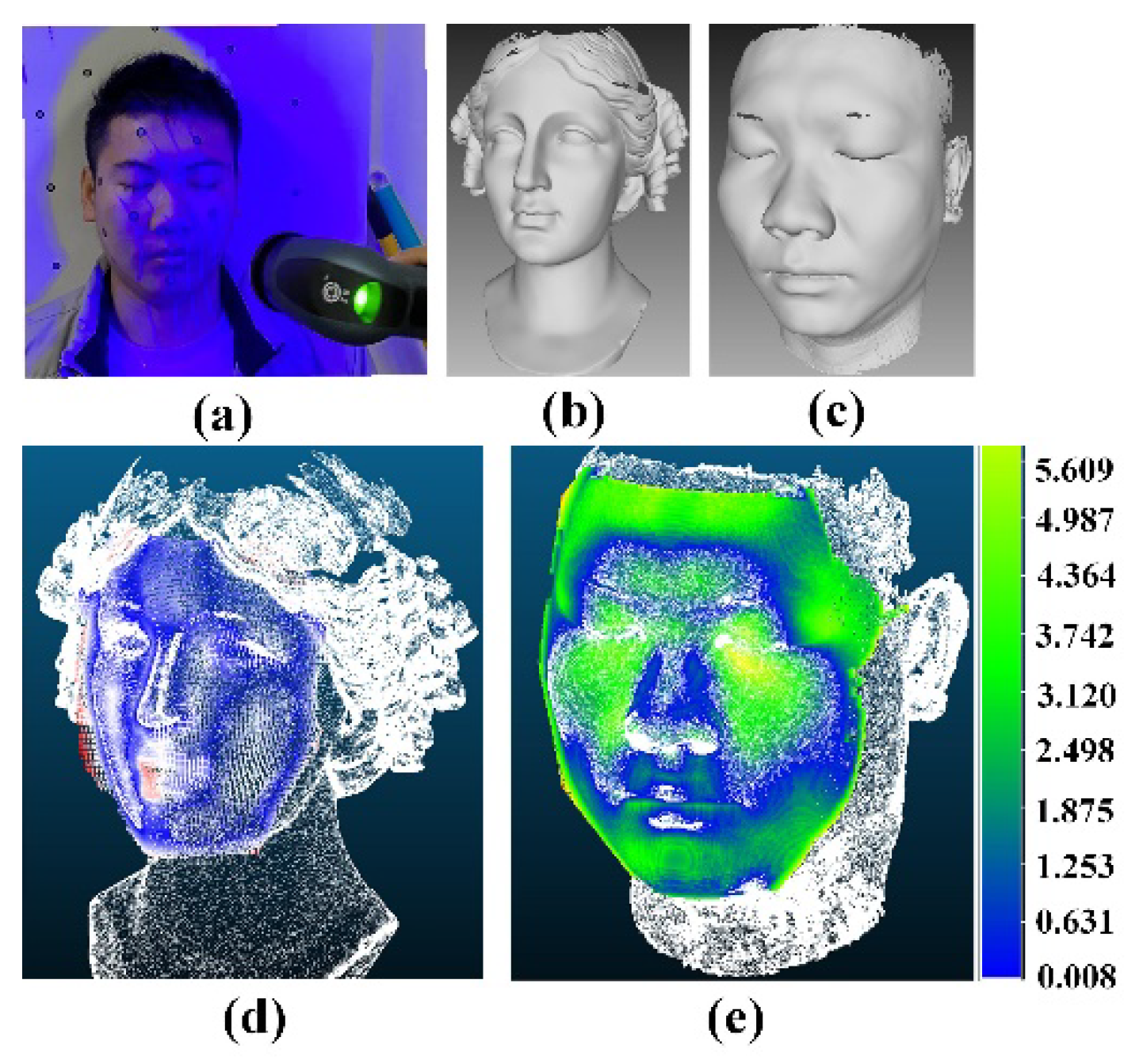

3. Experiments and Results Analysis

4. Conclusions

Author Contributions

Funding

Informed Consent Statement

Conflicts of Interest

References

- Uzair, M.; Mahmood, A.; Shafait, F.; Nansen, C.; Mian, A. Is spectral reflectance of the face a reliable biometric? Opt. Express 2015, 23, 15160–15173.

- Blanz, V.; Vetter, T. A morphable model for the synthesis of 3D faces. In Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 8–13 August 1999; pp. 187–194.

- Thies, J.; Zollhöfer, M.; Stamminger, M.; Theobalt, C.; Nießner, M. Face2face: Real-time face capture and reenactment of rgb videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2387–2395.

- Cao, C.; Weng, Y.; Lin, S.; Zhou, K. 3D shape regression for real-time facial animation. ACM Trans. Graph. (TOG) 2013, 32, 1–10.

- Hu, L.; Saito, S.; Wei, L.; Nagano, K.; Seo, J.; Fursund, J.; Sadeghi, I.; Sun, C.; Chen, Y.-C.; Li, H. Avatar digitization from a single image for real-time rendering. ACM Trans. Graph. (TOG) 2017, 36, 1–14.

- Xie, W.; Kuang, Z.; Wang, M. SCIFI: 3D face reconstruction via smartphone screen lighting. Opt. Express 2021, 29, 43938–43952.

- Ma, L.; Lyu, Y.; Pei, X.; Hu, Y.M.; Sun, F.M. Scaled SFS method for Lambertian surface 3D measurement under point source lighting. Opt. Express 2018, 26, 14251–14258.

- Koshikawa, K. A polarimetric approach to shape understanding of glossy objects. Adv. Robot. 1979, 2, 190.

- Wolff, L.B.; Boult, T.E. Constraining object features using a polarization reflectance model. Phys. Based Vis. Princ. Pract. Radiom. 1993, 1, 167.

- Mahmoud, A.H.; El-Melegy, M.T.; Farag, A.A. Direct method for shape recovery from polarization and shading. In Proceedings of the 2012 19th IEEE International Conference on Image Processing, Orlando, FL, USA, 30 September–3 October 2012; pp. 1769–1772.

- Atkinson, G.A.; Hancock, E.R. Recovery of surface orientation from diffuse polarization. IEEE Trans. Image Process. 2006, 15, 1653–1664.

- Gurton, K.P.; Yuffa, A.J.; Videen, G.W. Enhanced facial recognition for thermal imagery using polarimetric imaging. Opt. Lett. 2014, 39, 3857–3859.

- Kadambi, A.; Taamazyan, V.; Shi, B.; Raskar, R. Polarized 3D: Synthesis of polarization and depth cues for enhanced 3D sensing. In SIGGRAPH 2015: Studio; Association for Computing Machinery: New York, NY, USA, 2015.

- Ba, Y.; Gilbert, A.; Wang, F.; Yang, J.; Chen, R.; Wang, Y.; Yan, L.; Shi, B.; Kadambi, A. Deep shape from polarization. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 554–571.

- Riviere, J.; Gotardo, P.; Bradley, D.; Ghosh, A.; Beeler, T. Single-shot high-quality facial geometry and skin appearance capture. ACM Trans. Graph. 2020, 39, 81:1–81:12.

- Gotardo, P.; Riviere, J.; Bradley, D.; Ghosh, A.; Beeler, T. Practical dynamic facial appearance modeling and acquisition. ACM Trans. Graph. 2018, 37, 232.

- Miyazaki, D.; Shigetomi, T.; Baba, M.; Furukawa, R.; Hiura, S.; Asada, N. Surface normal estimation of black specular objects from multiview polarization images. Opt. Eng. 2016, 56, 041303.

- Kadambi, A.; Taamazyan, V.; Shi, B.; Raskar, R. Depth sensing using geometrically constrained polarization normals. Int. J. Comput. Vis. 2017, 125, 34–51.

- Smith, W.A.; Ramamoorthi, R.; Tozza, S. Linear depth estimation from an uncalibrated, monocular polarisation image. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 109–125.

- Schechner, Y.Y.; Narasimhan, S.G.; Nayar, S.K. Polarization-based vision through haze. Appl. Opt. 2003, 42, 511–525.

- Zhu, H.; Li, D.; Song, L.; Wang, X.; Zhan, K.; Wang, N.; Yun, M. Precise analysis of formation and suppression of intensity transmittance fluctuations of Glan-Taylor prisms. Laser Optoelectron. Prog. 2013, 50, 052302.

- Deng, Y.; Yang, J.; Xu, S.; Chen, D.; Jia, Y.; Tong, X. Accurate 3d face reconstruction with weakly-supervised learning: From single image to image set. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019.

- Guo, Y.; Cai, J.; Jiang, B.; Zheng, J. CNN-based real-time dense face reconstruction with inverse-rendered photo-realistic face images. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1294–1307.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Tewari, A.; Zollhöfer, M.; Garrido, P.; Bernard, F.; Kim, H.; Pérez, P.; Theobalt, C. Self-supervised multi-level face model learning for monocular reconstruction at over 250 hz. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2549–2559.

- Tewari, A.; Zollhöfer, M.; Kim, H.; Garrido, P.; Bernard, F.; Pérez, P.; Theobalt, C. Mofa: Model-based deep convolutional face autoencoder for unsupervised monocular reconstruction. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 1274–1283.

- Jones, M.J.; Rehg, J.M. Statistical color models with application to skin detection. Int. J. Comput. Vis. 2002, 46, 81–96.

- Jackson, A.S.; Bulat, A.; Argyriou, V.; Tzimiropoulos, G. Large pose 3D face reconstruction from a single image via direct volumetric CNN regression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1031–1039.

- Bulat, A.; Tzimiropoulos, G. How far are we from solving the 2D & 3D face alignment problem? (And a dataset of 230,000 3D facial landmarks). In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1021–1030.

- Genova, K.; Cole, F.; Maschinot, A.; Sarna, A.; Vlasic, D.; Freeman, W.T. Unsupervised training for 3d morphable model regression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8377–8386.

- Frankot, R.T.; Chellappa, R. A method for enforcing integrability in shape from shading algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 439–451.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Han, P.; Li, X.; Liu, F.; Cai, Y.; Yang, K.; Yan, M.; Sun, S.; Liu, Y.; Shao, X. Accurate Passive 3D Polarization Face Reconstruction under Complex Conditions Assisted with Deep Learning. Photonics 2022, 9, 924. https://doi.org/10.3390/photonics9120924
