Monitoring of OSNR Using an Improved Binary Particle Swarm Optimization and Deep Neural Network in Coherent Optical Systems

Abstract

1. Introduction

2. Principles of the Proposed Method

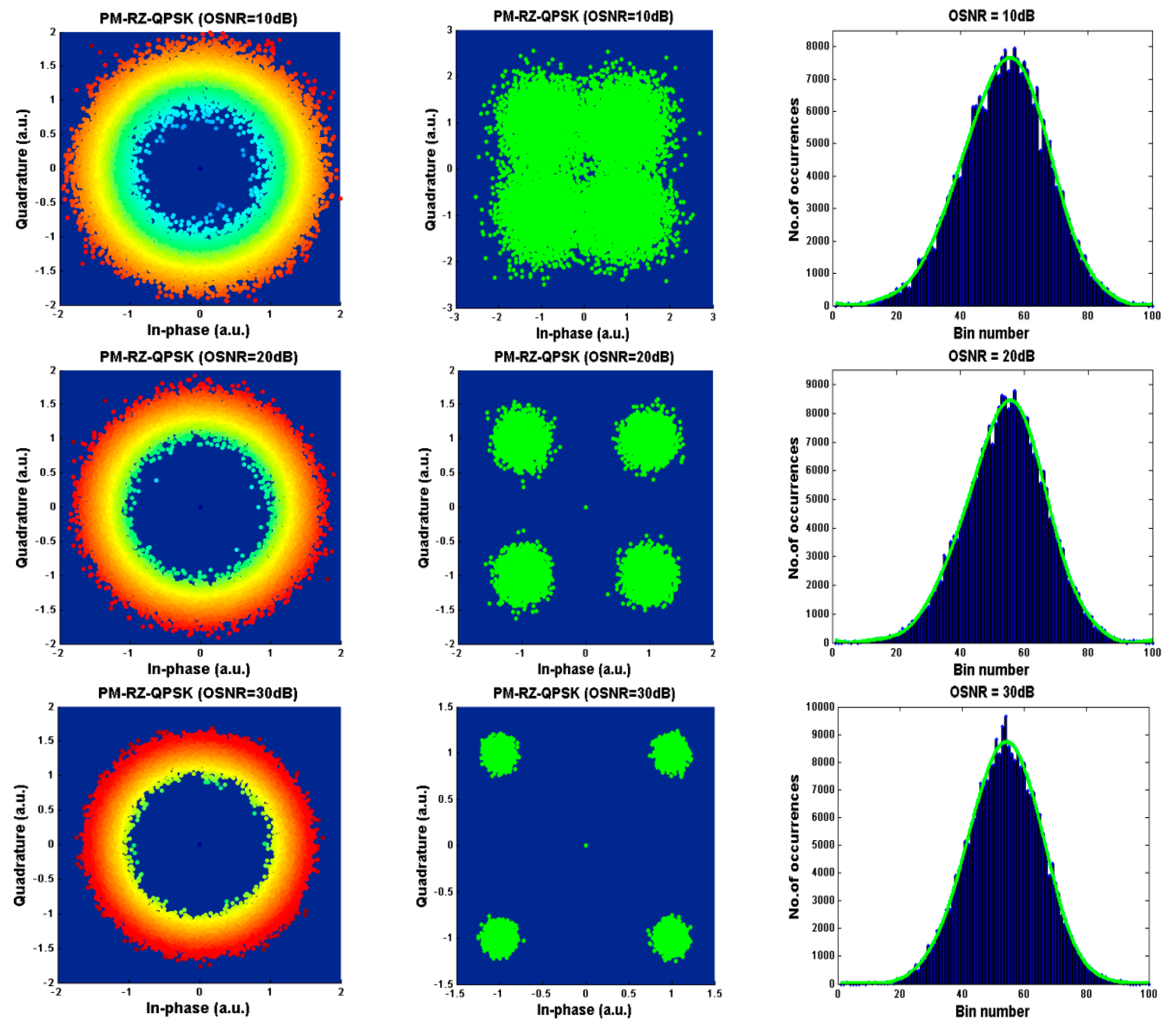

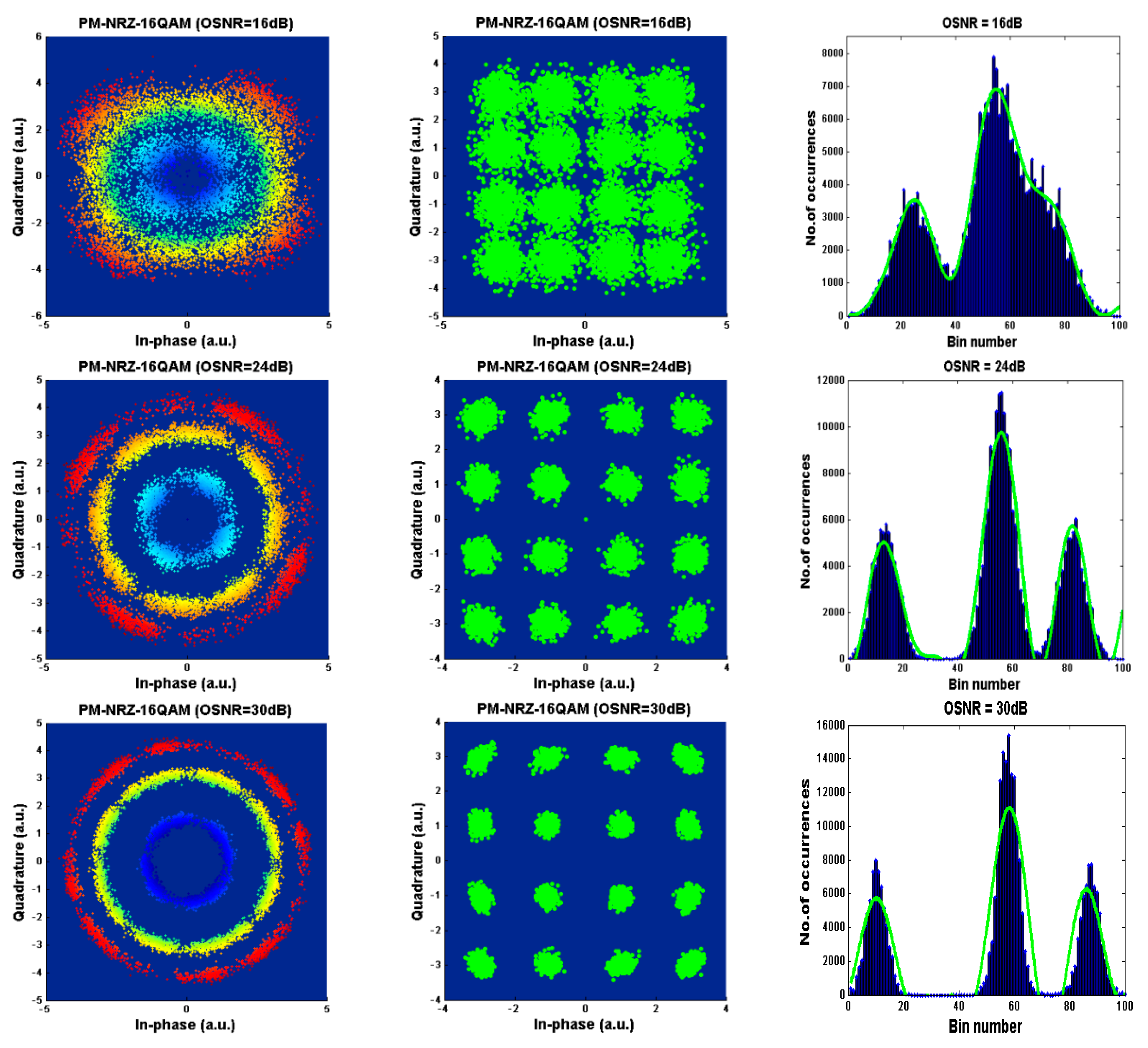

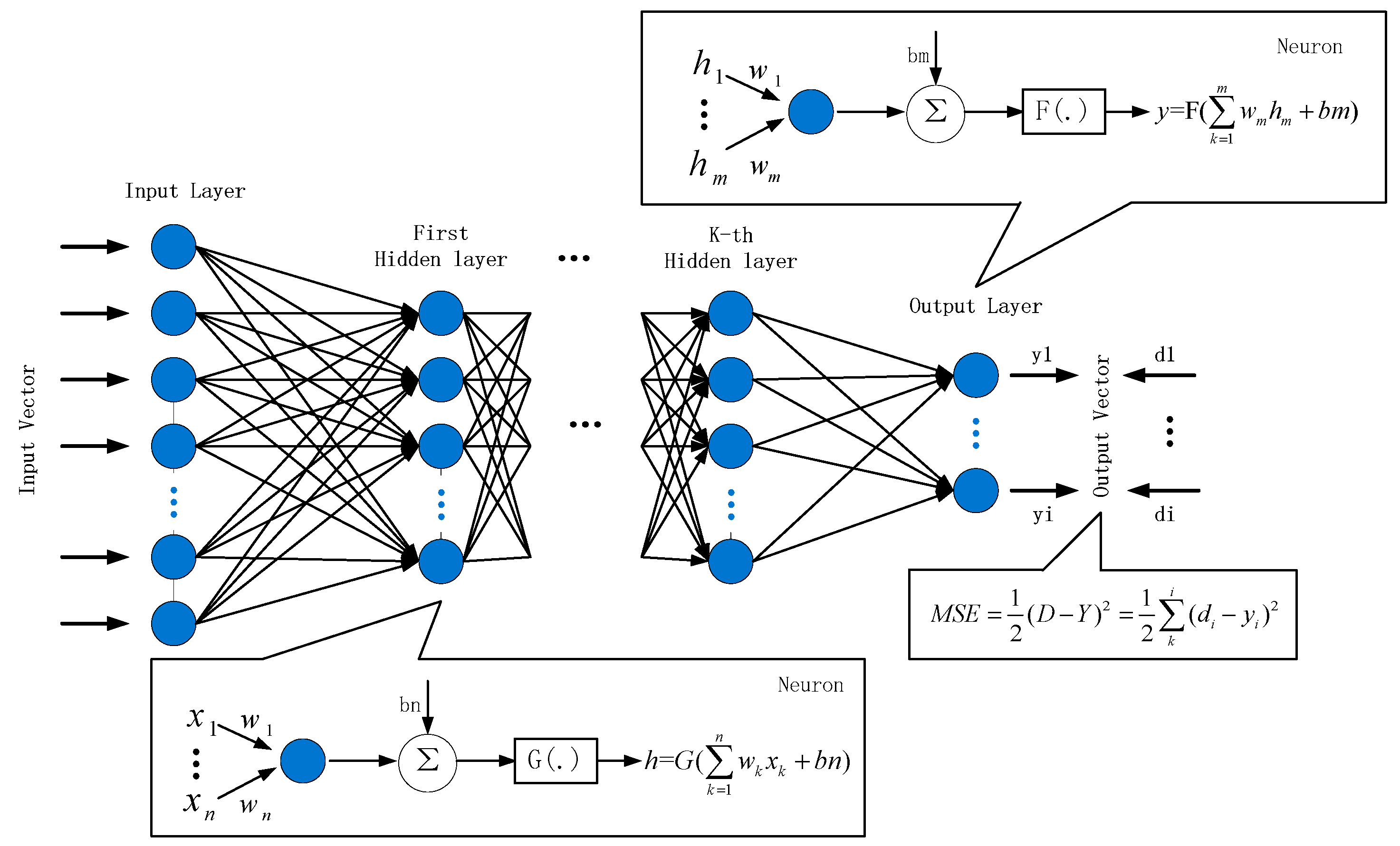

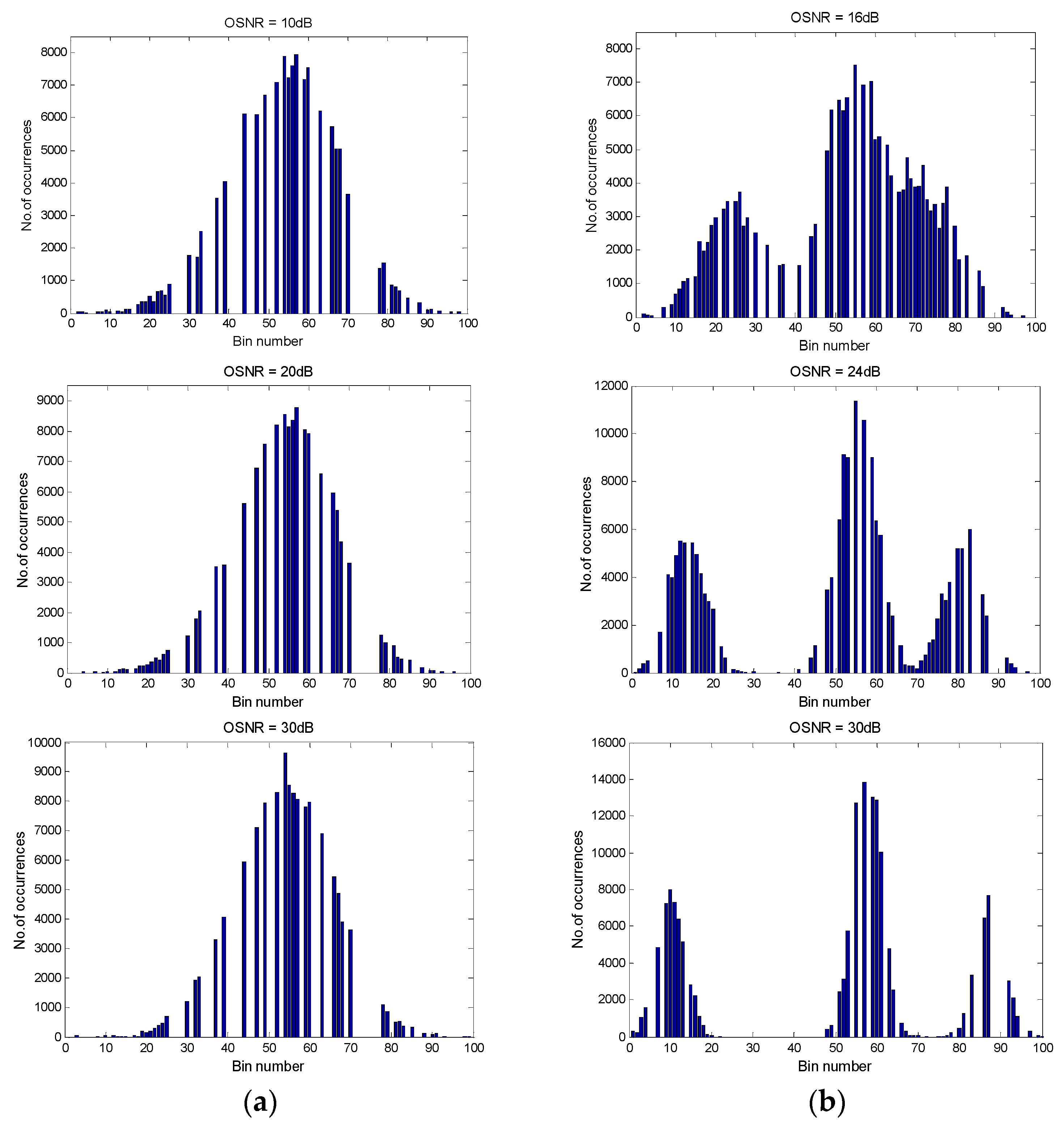

2.1. OSNR Monitoring Based on the Conventional DNN Trained with AHs

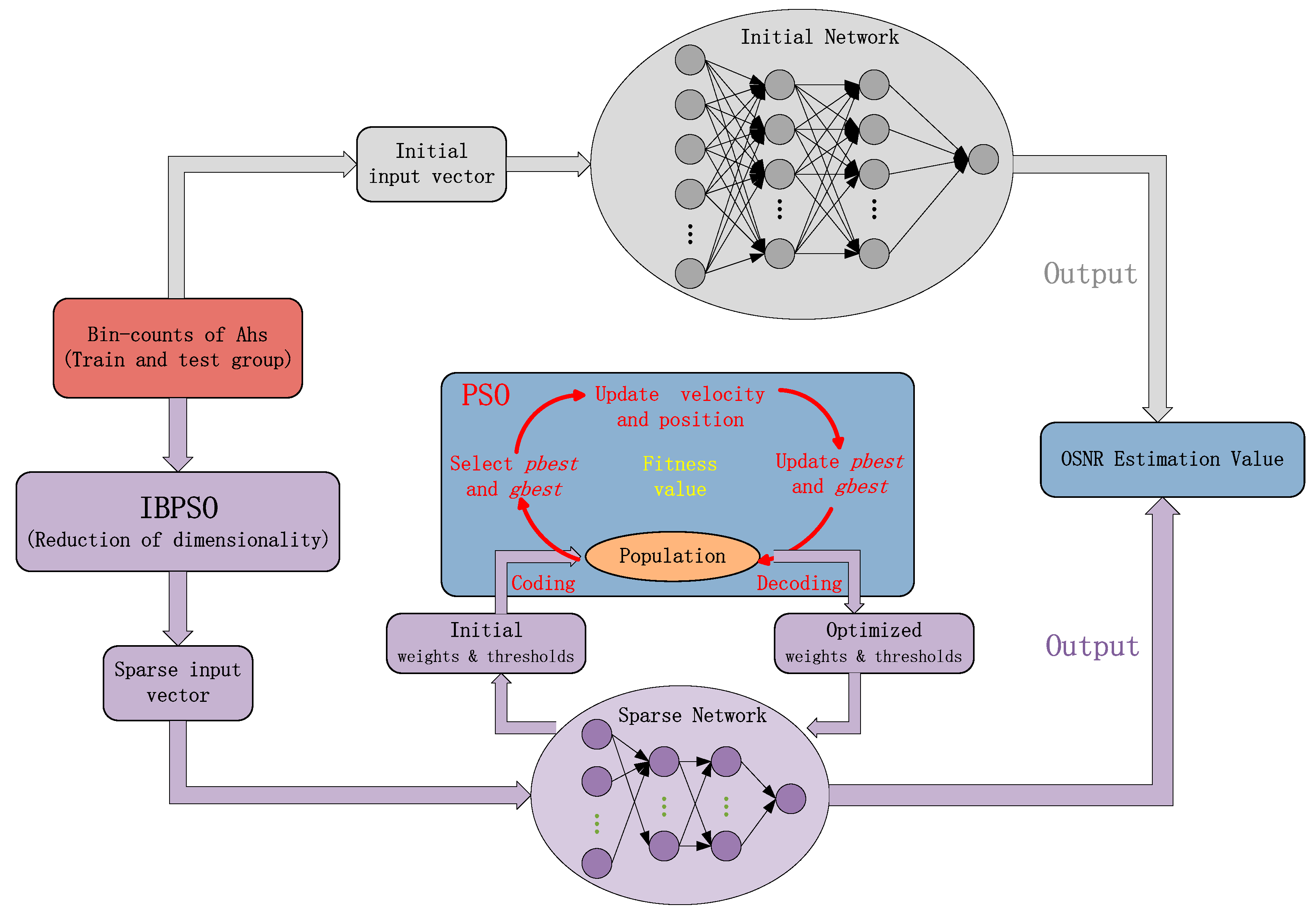
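Section 2.1 trains a conventional DNN on AHs (amplitude histograms). The paper's exact feature-extraction settings are in the figures and are not reproduced here. As a minimal sketch, assume an AH is the normalized histogram of received sample amplitudes |x| over a fixed number of bins. The 100-bin default and the `noisy_qpsk` test signal (unit-energy QPSK plus complex AWGN, with the noise level set by a per-symbol SNR for simplicity) are hypothetical, chosen only to show the shape of the input vector a DNN would receive.

```python
import math
import random
from typing import List, Sequence


def amplitude_histogram(samples: Sequence[complex], num_bins: int = 100) -> List[float]:
    """Normalized amplitude histogram (AH) of complex baseband samples.

    Amplitudes |x| are binned uniformly on [0, max|x|] and the counts are
    divided by the number of samples, so the bins sum to 1. The default of
    100 bins is an illustrative assumption, not a value from the paper.
    """
    if not samples:
        raise ValueError("need at least one sample")
    if num_bins < 1:
        raise ValueError("num_bins must be positive")
    amps = [abs(s) for s in samples]
    peak = max(amps)
    counts = [0] * num_bins
    for a in amps:
        # Scale to [0, num_bins]; the peak itself is folded into the last bin.
        idx = 0 if peak == 0 else min(int(a / peak * num_bins), num_bins - 1)
        counts[idx] += 1
    return [c / len(amps) for c in counts]


def noisy_qpsk(n: int, snr_db: float, seed: int = 0) -> List[complex]:
    """Hypothetical test signal: unit-energy QPSK plus complex AWGN at snr_db per symbol."""
    rng = random.Random(seed)
    sigma = math.sqrt(10 ** (-snr_db / 10) / 2)  # noise std per real dimension
    out = []
    for _ in range(n):
        sym = complex(rng.choice((-1, 1)), rng.choice((-1, 1))) / math.sqrt(2)
        out.append(sym + complex(rng.gauss(0, sigma), rng.gauss(0, sigma)))
    return out


# One AH per noise level; vectors like these would form the DNN input.
features_low = amplitude_histogram(noisy_qpsk(10_000, snr_db=8))
features_high = amplitude_histogram(noisy_qpsk(10_000, snr_db=20))
```

At low noise the amplitudes cluster near the constellation radius, so the histogram mass concentrates in a few bins; as noise grows the histogram spreads, which is the kind of shape change a regressor can map to OSNR.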

2.2. OSNR Monitoring Based on the IBPSO-Based DNN Trained with AHs

2.2.1. Principle of Particle Swarm Optimization (PSO)

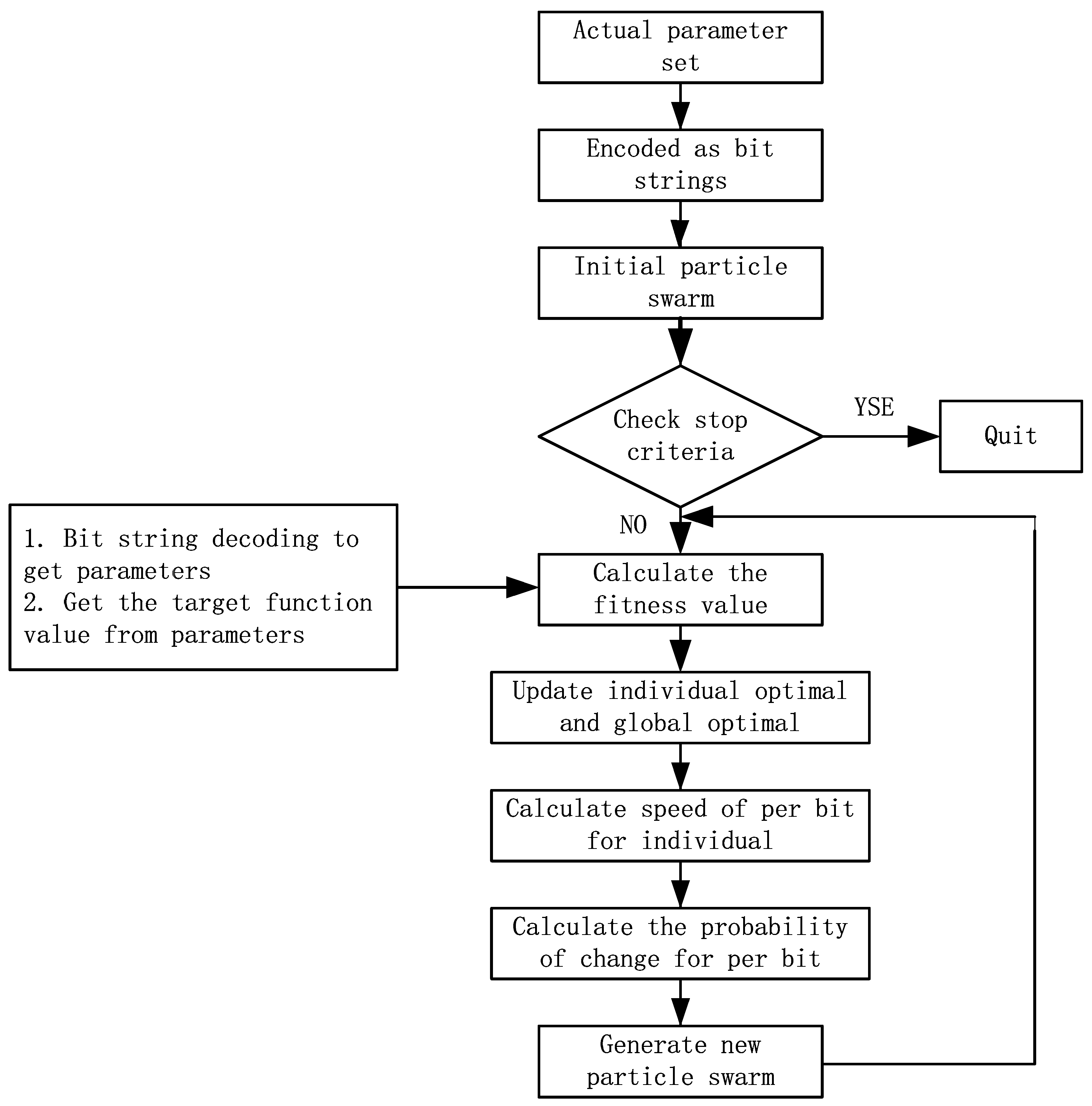
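The PSO principle in Section 2.2.1 is shown only as a figure. For orientation, here is a minimal sketch of a standard global-best PSO in its common inertia-weight form: each particle's velocity is pulled toward its own best position and the swarm's best position, v ← w·v + c1·r1·(pbest − x) + c2·r2·(gbest − x), then x ← x + v. The parameter values (w = 0.7, c1 = c2 = 1.5, 20 particles, 100 iterations) and the `sphere` test objective are illustrative assumptions, not the settings used in the paper.

```python
import random
from typing import Callable, List, Sequence, Tuple


def pso_minimize(
    f: Callable[[Sequence[float]], float],
    dim: int,
    lo: float,
    hi: float,
    n_particles: int = 20,
    iters: int = 100,
    w: float = 0.7,   # inertia weight (illustrative value)
    c1: float = 1.5,  # cognitive coefficient: pull toward the particle's own best
    c2: float = 1.5,  # social coefficient: pull toward the swarm's best
    seed: int = 0,
) -> Tuple[List[float], float]:
    rng = random.Random(seed)
    vmax = hi - lo  # velocity clamp so a particle cannot cross the box in one step
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_val = [f(x) for x in xs]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v = (w * vs[i][d]
                     + c1 * r1 * (pbest[i][d] - xs[i][d])
                     + c2 * r2 * (gbest[d] - xs[i][d]))
                vs[i][d] = max(-vmax, min(vmax, v))
                # Move, then keep the particle inside the search box.
                xs[i][d] = max(lo, min(hi, xs[i][d] + vs[i][d]))
            val = f(xs[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = xs[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = xs[i][:], val
    return gbest, gbest_val


def sphere(x: Sequence[float]) -> float:
    """Toy objective with its minimum 0 at the origin."""
    return sum(v * v for v in x)


best_x, best_val = pso_minimize(sphere, dim=3, lo=-5.0, hi=5.0)
```

This version updates the global best as soon as any particle improves on it, which is one common variant; others update it once per iteration.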

2.2.2. Principle of the IBPSO

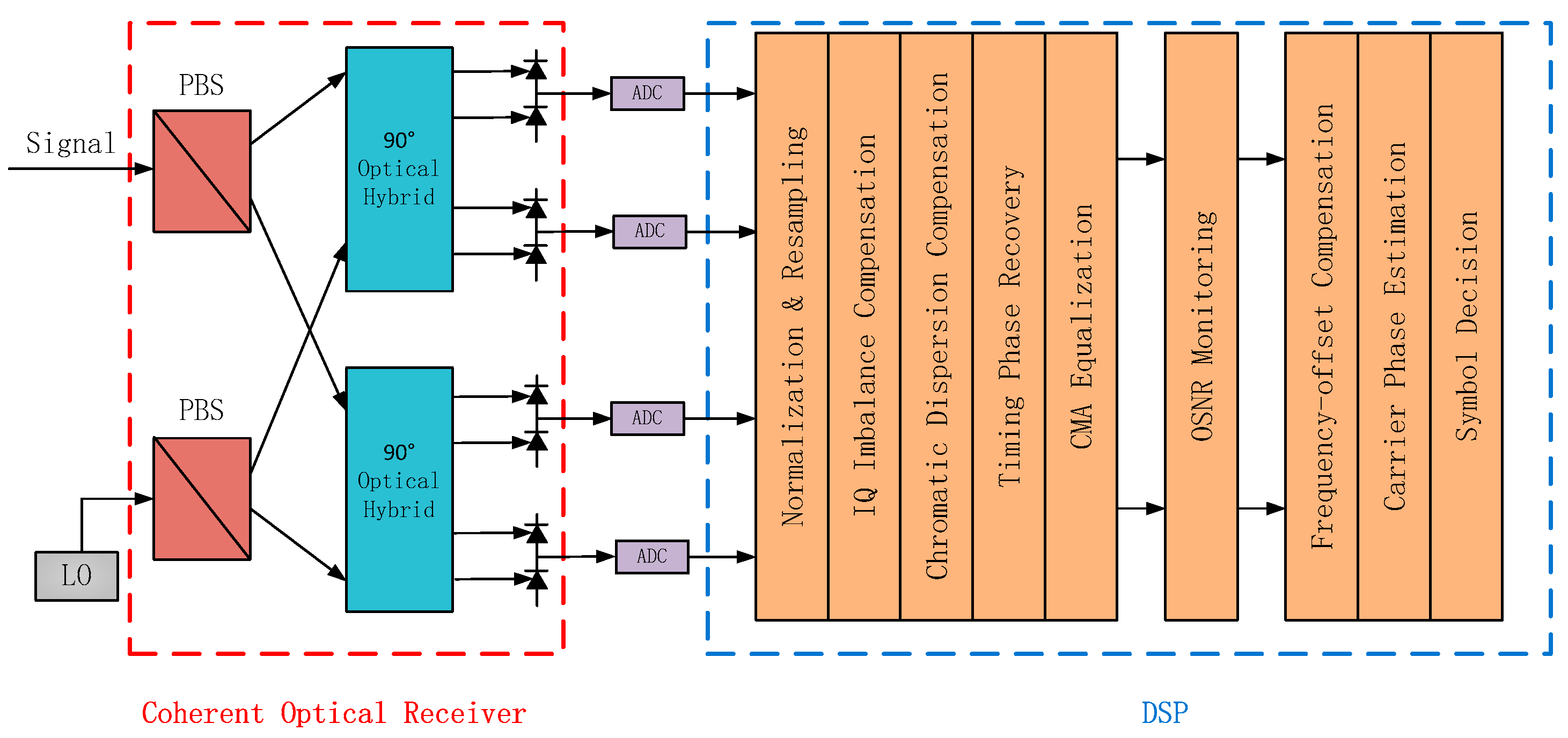

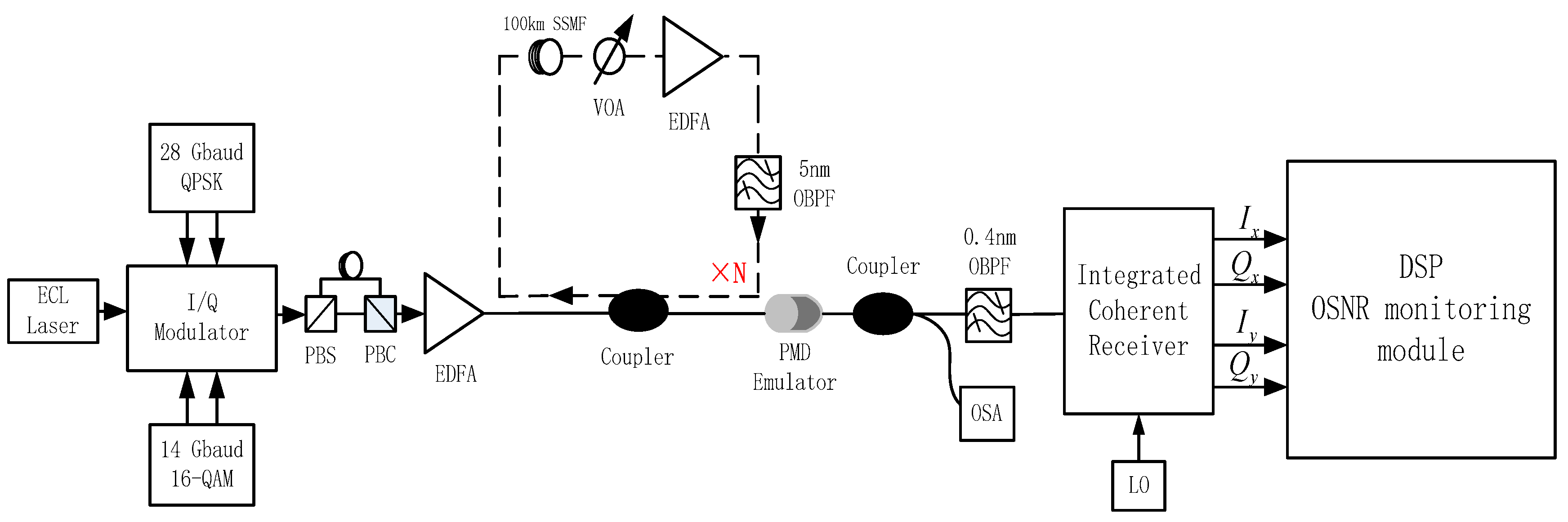
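The IBPSO in Section 2.2.2 builds on binary PSO, and its specific improvements are given only in the figures, so they are not reproduced here. The sketch below shows the standard binary PSO it starts from: the velocity update is unchanged, but each velocity is passed through a sigmoid and interpreted as the probability that the corresponding bit is 1. The velocity clamp `vmax = 4.0`, the coefficients, and the toy `matches` objective (count of bits equal to a hypothetical 16-bit `TARGET`) are assumptions for illustration only; in an optimizer for a DNN, the bits would instead encode whatever design choices the method searches over.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple


def sigmoid(v: float) -> float:
    return 1.0 / (1.0 + math.exp(-v))


def bpso_maximize(
    fitness: Callable[[Sequence[int]], float],
    n_bits: int,
    n_particles: int = 20,
    iters: int = 100,
    c1: float = 2.0,
    c2: float = 2.0,
    vmax: float = 4.0,  # clamp keeps sigmoid(v) away from exactly 0 or 1
    seed: int = 0,
) -> Tuple[List[int], float]:
    rng = random.Random(seed)
    xs = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(n_particles)]
    vs = [[0.0] * n_bits for _ in range(n_particles)]
    pbest = [x[:] for x in xs]
    pbest_fit = [fitness(x) for x in xs]
    g = max(range(n_particles), key=lambda i: pbest_fit[i])
    gbest, gbest_fit = pbest[g][:], pbest_fit[g]

    for _ in range(iters):
        for i in range(n_particles):
            for d in range(n_bits):
                # Velocity update as in continuous PSO (no inertia weight here) ...
                v = (vs[i][d]
                     + c1 * rng.random() * (pbest[i][d] - xs[i][d])
                     + c2 * rng.random() * (gbest[d] - xs[i][d]))
                vs[i][d] = max(-vmax, min(vmax, v))
                # ... but the velocity now sets the probability that the bit is 1.
                xs[i][d] = 1 if rng.random() < sigmoid(vs[i][d]) else 0
            fit = fitness(xs[i])
            if fit > pbest_fit[i]:
                pbest[i], pbest_fit[i] = xs[i][:], fit
                if fit > gbest_fit:
                    gbest, gbest_fit = xs[i][:], fit
    return gbest, gbest_fit


# Hypothetical toy objective: number of bits matching a fixed 16-bit target.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]


def matches(bits: Sequence[int]) -> int:
    return sum(int(b == t) for b, t in zip(bits, TARGET))


best_bits, best_fit = bpso_maximize(matches, n_bits=len(TARGET))
```

Because the sigmoid never reaches exactly 0 or 1 under the clamp, bits keep flipping with small probability even after the swarm converges; this residual randomness is one of the behaviors that improved binary PSO variants typically try to control.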

3. Experimental Setup

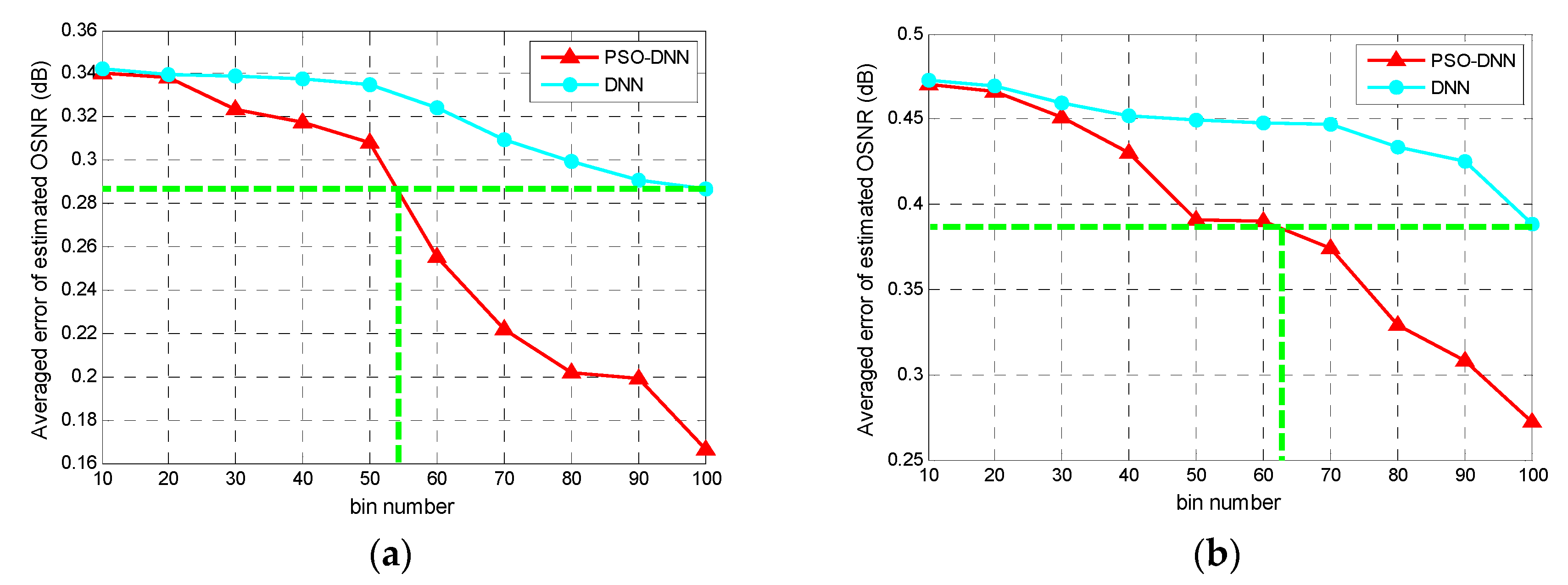

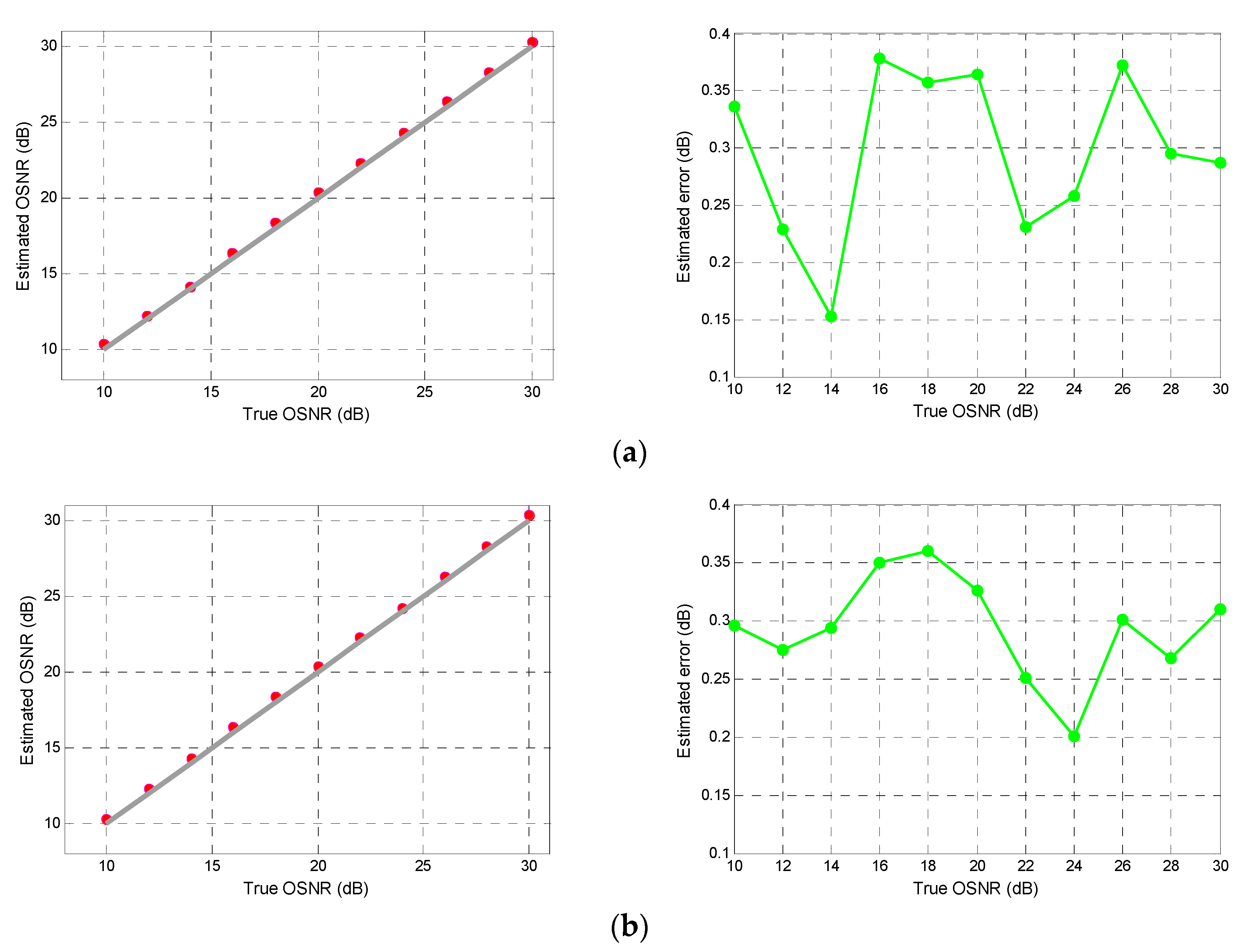

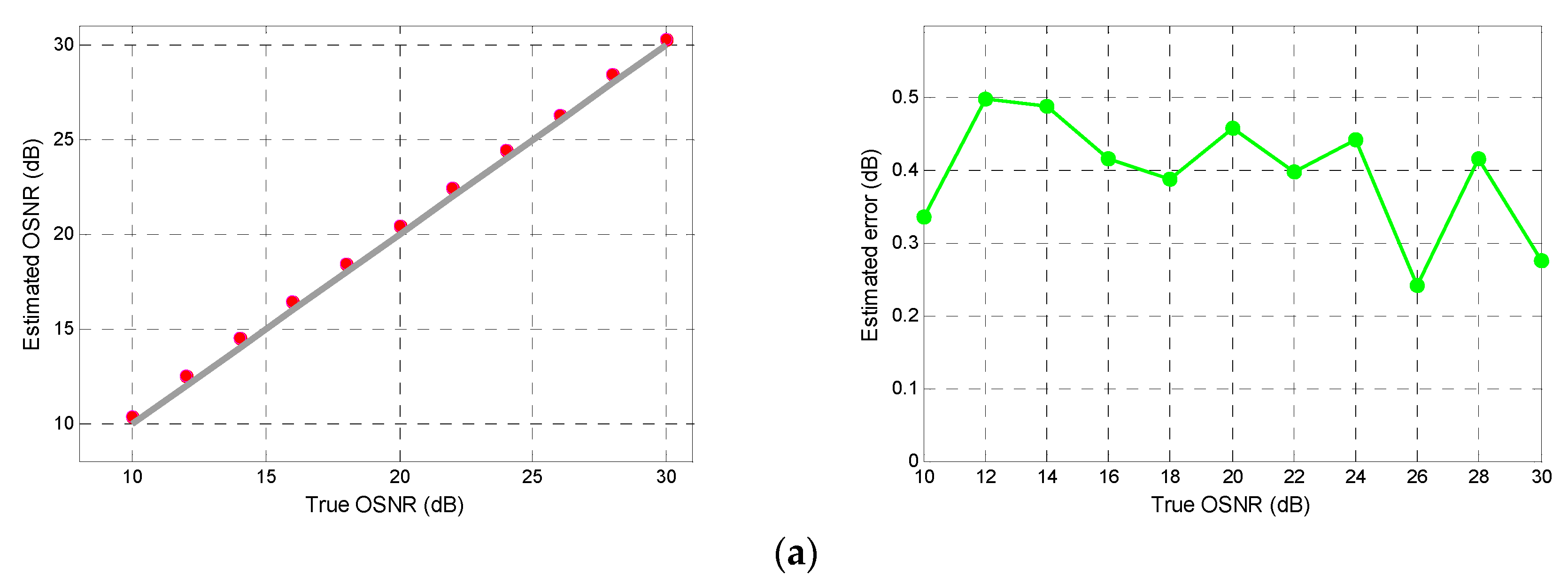

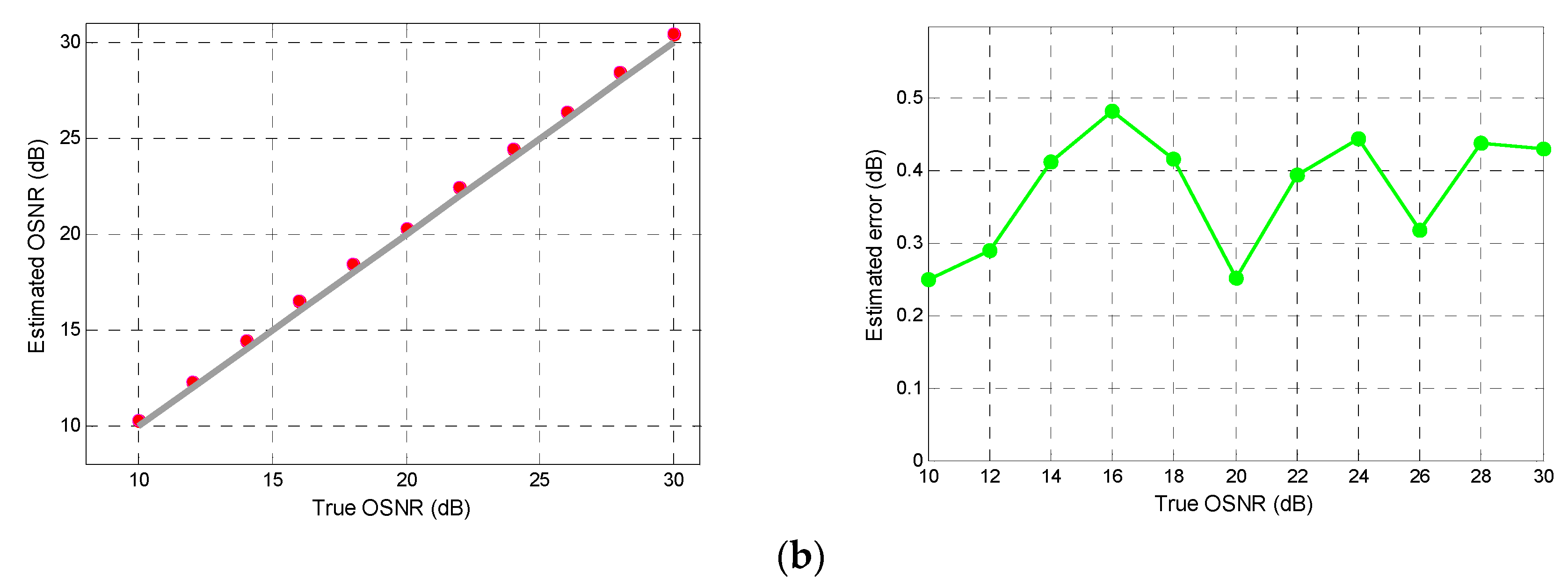
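OSNR is conventionally referenced to a 0.1 nm noise bandwidth (about 12.5 GHz near 1550 nm), whereas a coherent receiver naturally sees the electrical SNR within the signal bandwidth. For an AWGN-limited signal with p polarizations and symbol rate R_s, the two are related by OSNR = p·R_s·SNR / (2·B_ref). The sketch below applies that textbook relation; it is not taken from the paper's setup, and the default of two polarizations is an assumption matching the PM formats in the results table.

```python
import math


def osnr_db_from_snr_db(snr_db: float, symbol_rate_hz: float,
                        n_pol: int = 2, ref_bw_hz: float = 12.5e9) -> float:
    """OSNR (dB, in ref_bw_hz) from per-symbol SNR (dB), assuming only ASE noise.

    Implements OSNR = n_pol * Rs * SNR / (2 * B_ref) in logarithmic form.
    """
    return snr_db + 10 * math.log10(n_pol * symbol_rate_hz / (2 * ref_bw_hz))


def snr_db_from_osnr_db(osnr_db: float, symbol_rate_hz: float,
                        n_pol: int = 2, ref_bw_hz: float = 12.5e9) -> float:
    """Inverse of osnr_db_from_snr_db under the same assumptions."""
    return osnr_db - 10 * math.log10(n_pol * symbol_rate_hz / (2 * ref_bw_hz))


# Example: a polarization-multiplexed 28 GBd signal.
osnr_28g = osnr_db_from_snr_db(10.0, symbol_rate_hz=28e9)
```

For a dual-polarization signal at 12.5 GBd the correction term is 0 dB, so OSNR and SNR coincide; at higher symbol rates the same SNR corresponds to a higher OSNR.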

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


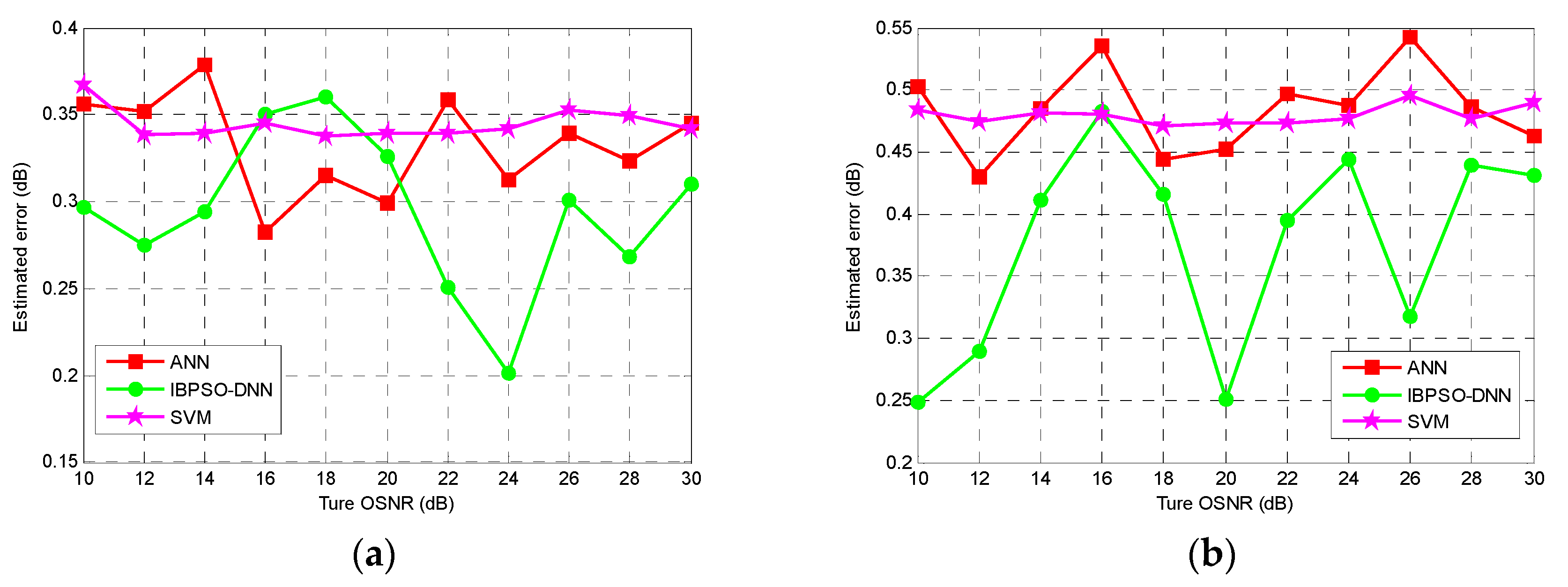

| Signal | ANN Average/Maximum Error (dB) | IBPSO-DNN Average/Maximum Error (dB) | SVM Average/Maximum Error (dB) |
|---|---|---|---|
| PM-RZ-QPSK | 0.33/0.54 | 0.29/0.37 | 0.34/0.61 |
| PM-NRZ-16QAM | 0.48/0.65 | 0.37/0.48 | 0.47/0.72 |
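The table compares methods by average and maximum OSNR estimation error in dB. Assuming these are the mean and the largest absolute deviation between estimated and reference OSNR over the test points (the exact definition is not restated here), the two figures can be computed as below. The input values are hypothetical and are not measurements from the paper.

```python
from typing import Sequence, Tuple


def avg_max_abs_error(estimated_db: Sequence[float],
                      reference_db: Sequence[float]) -> Tuple[float, float]:
    """Mean and maximum absolute OSNR estimation error, both in dB."""
    if len(estimated_db) != len(reference_db) or not estimated_db:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [abs(e - r) for e, r in zip(estimated_db, reference_db)]
    return sum(errors) / len(errors), max(errors)


# Hypothetical estimates against reference OSNR values (dB).
avg_err, max_err = avg_max_abs_error([10.2, 11.7, 14.4], [10.0, 12.0, 14.0])
```

Reporting both numbers matters for monitoring: the average shows typical accuracy, while the maximum bounds the worst case an operator might act on.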

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Sun, X.; Su, S.; Wei, J.; Guo, X.; Tan, X. Monitoring of OSNR Using an Improved Binary Particle Swarm Optimization and Deep Neural Network in Coherent Optical Systems. Photonics 2019, 6, 111. https://doi.org/10.3390/photonics6040111
