Research on Power Laser Inspection Technology Based on High-Precision Servo Control System

Abstract

1. Introduction

2. Theoretical Analyses

2.1. High-Precision Servo Control System and Multi-Equipment Coordination Mechanism

2.1.1. Hardware Architecture of Servo Control System

2.1.2. Control Strategy and Multi-Equipment Coordination Logic

- 1. PID + Feedforward Composite Control Strategy

- 2. Multi-Equipment Time Synchronization and Data Alignment

- 3. Attitude Compensation Based on IMU Data
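As a rough illustration of the first strategy listed above, the following minimal sketch combines a discrete PID feedback term with a velocity feedforward term. The plant model (a pure integrator driven by an angular-velocity command), the gains, the ramp reference, and the names `PIDFeedforward` and `max_tracking_error` are all assumptions made for this example; none of them are taken from the servo system described in this paper.

```python
class PIDFeedforward:
    """Discrete PID feedback plus velocity feedforward (illustrative gains only)."""

    def __init__(self, kp, ki, kd, kff, dt):
        self.kp, self.ki, self.kd, self.kff, self.dt = kp, ki, kd, kff, dt
        self.integral = 0.0
        self.prev_error = 0.0

    def update(self, reference, measurement, reference_rate):
        error = reference - measurement
        self.integral += error * self.dt
        derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        feedback = self.kp * error + self.ki * self.integral + self.kd * derivative
        feedforward = self.kff * reference_rate  # anticipates the known reference rate
        return feedback + feedforward


def max_tracking_error(kff, rate=10.0, dt=0.001, steps=2000):
    """Track a ramp reference (deg) on an assumed integrator plant; return the worst error."""
    controller = PIDFeedforward(kp=20.0, ki=5.0, kd=0.01, kff=kff, dt=dt)
    angle = 0.0
    worst = 0.0
    for n in range(steps):
        reference = rate * n * dt
        worst = max(worst, abs(reference - angle))
        command = controller.update(reference, angle, rate)
        angle += command * dt  # assumed plant: command is an angular velocity
    return worst


error_pid_only = max_tracking_error(kff=0.0)   # feedback alone lags the ramp
error_composite = max_tracking_error(kff=1.0)  # feedforward removes most of the lag
```

Under these assumptions the feedback-only loop lags the ramp during the transient, while the composite controller follows it almost exactly, which is the usual motivation for adding a feedforward path to a servo loop.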

2.1.3. Performance Verification of Servo Control System

2.2. Laser Point Cloud Transmission Line Extraction

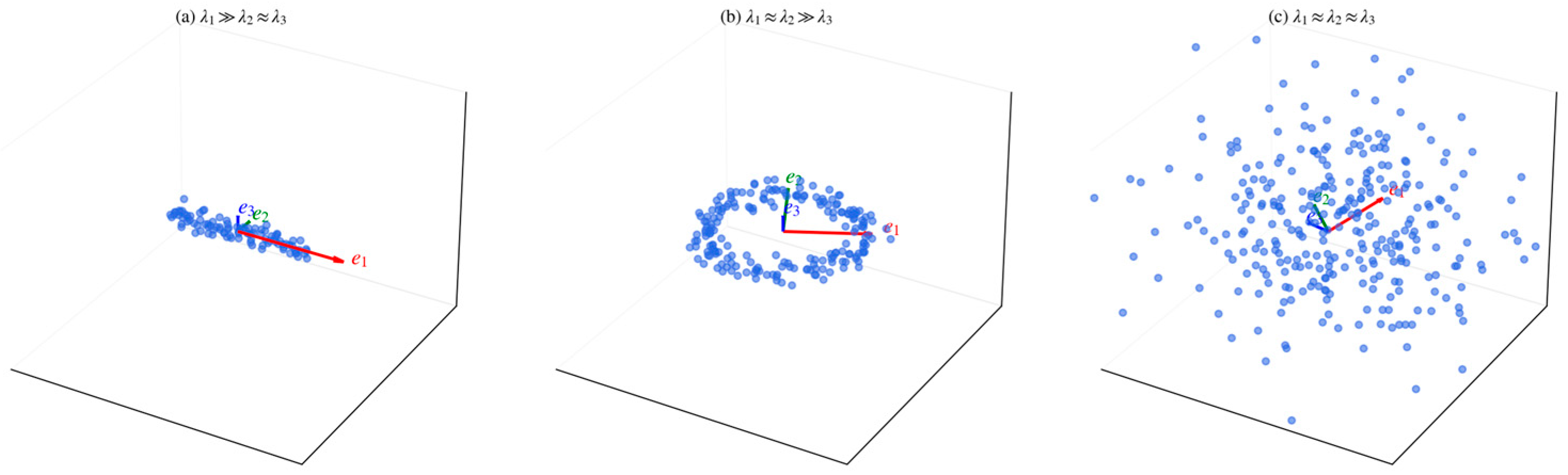

2.2.1. Transmission Line Extraction Based on Traditional Clustering Algorithm

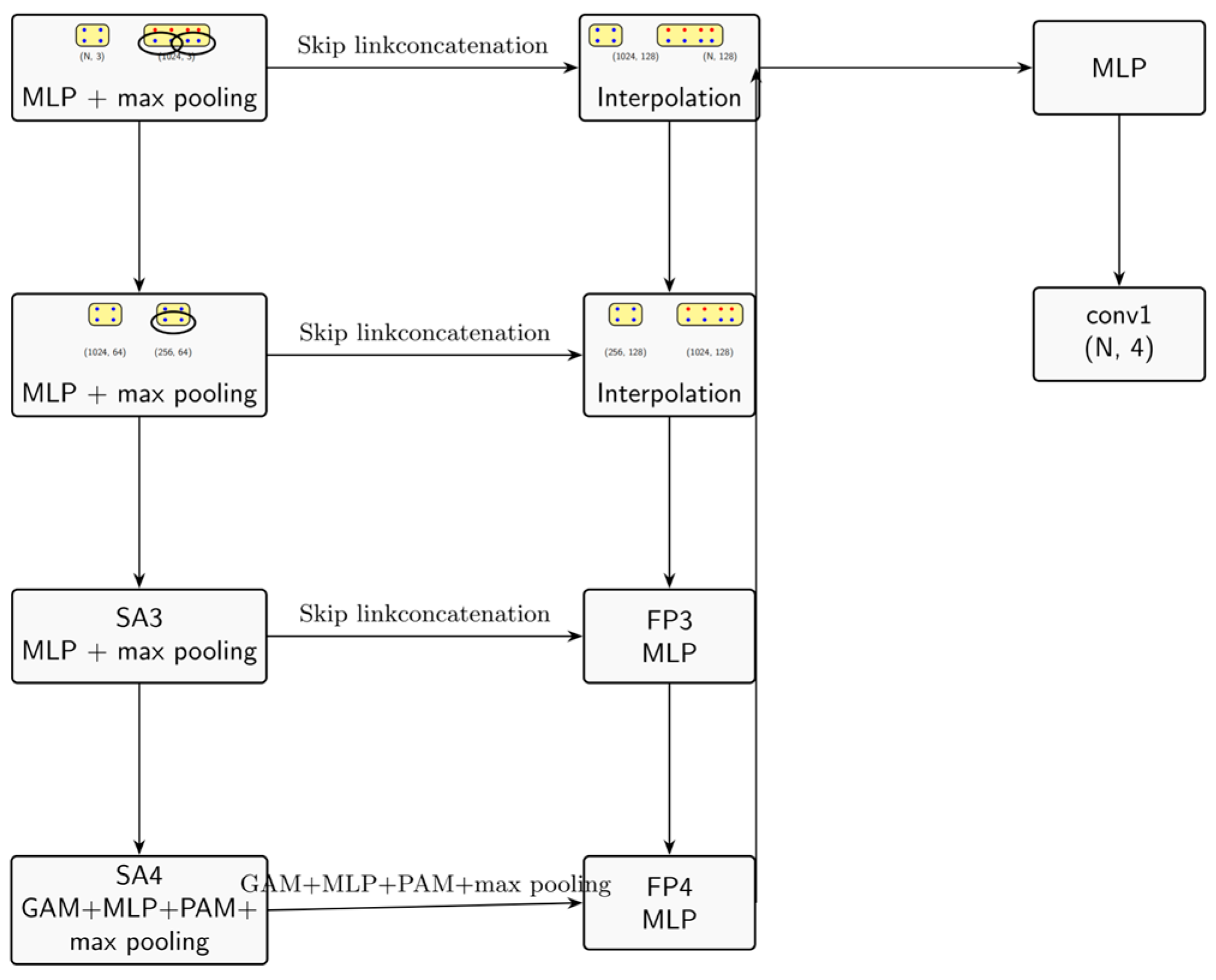

2.2.2. Deep Learning-Based Transmission Line Extraction

2.3. Point Cloud Scene Recovery

2.3.1. Principles of Power Line and Tower Point Cloud Filtering and Feature Retention

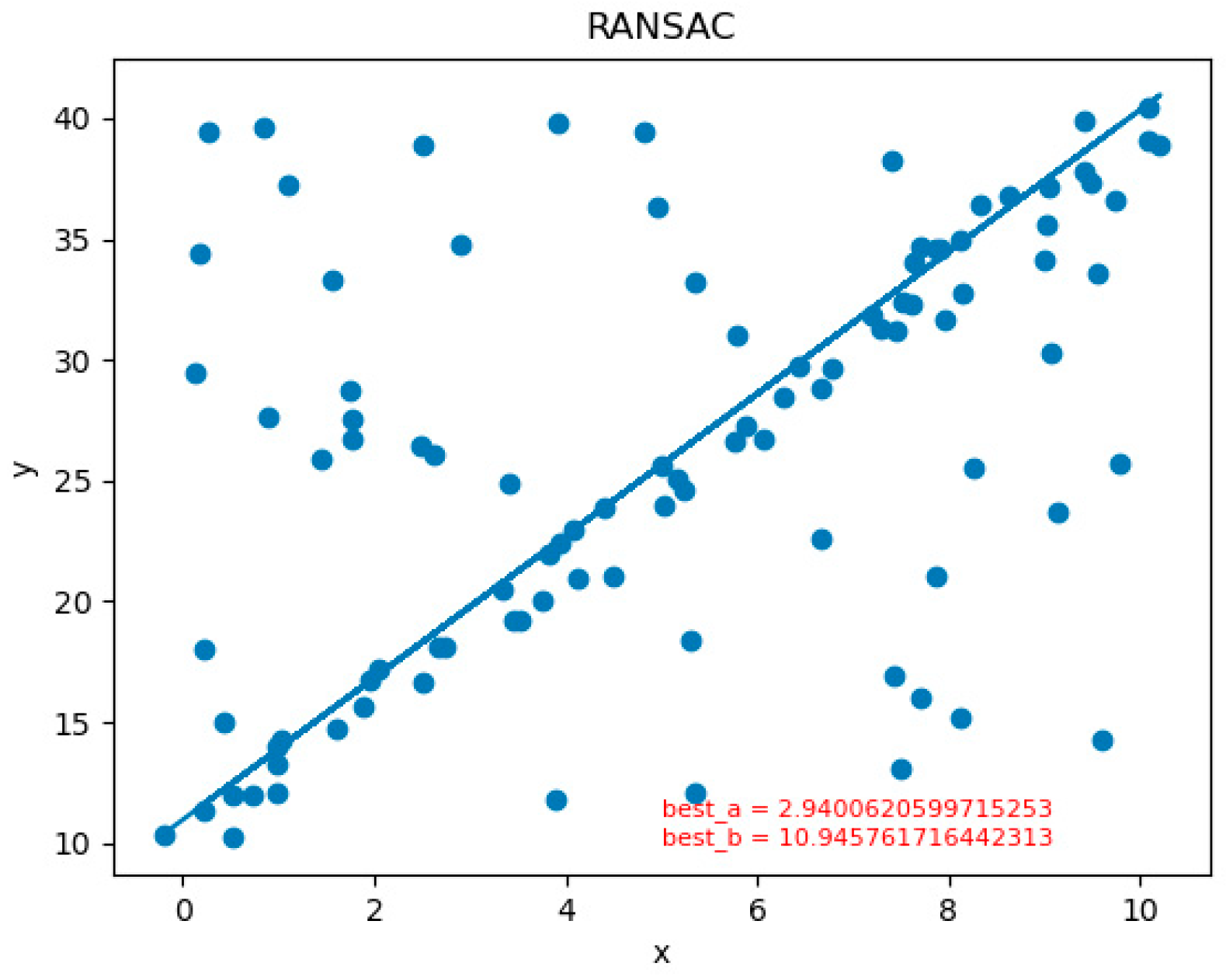

- Random sampling: Two points are randomly selected from the point cloud in each iteration.

- Model calculation: The straight-line parameters (ρ, θ) determined by these two points are calculated.

- Inlier verification: The distance from each point to the line is computed, and points with distances less than the threshold are marked as inliers.

- Iterative optimisation: The above process is repeated, and the model with the maximum number of inliers is recorded.

- Final fitting: The maximum consistent inlier set is used for least squares fitting to obtain optimal straight-line parameters.

- Effectively handles outliers in point cloud data (e.g., sensor noise, environmental interference points).

- Avoids falling into local optimal solutions via a probabilistic iterative mechanism.

- Supports custom geometric models, delivering strong adaptability.
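The five RANSAC steps listed above can be sketched for a 2D line in normal form, x·cos θ + y·sin θ = ρ. This is a minimal sketch, not the implementation used in the experiments: the function names, the threshold, the iteration count, and the toy data are assumptions, and the final fit uses orthogonal (total) least squares as one possible reading of "least squares fitting".

```python
import math
import random


def line_from_two_points(p, q):
    """(rho, theta) of the line through p and q: x*cos(theta) + y*sin(theta) = rho."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    theta = math.atan2(dx, -dy)  # angle of the normal vector (-dy, dx)
    rho = p[0] * math.cos(theta) + p[1] * math.sin(theta)
    return rho, theta


def distance_to_line(pt, rho, theta):
    return abs(pt[0] * math.cos(theta) + pt[1] * math.sin(theta) - rho)


def refit_least_squares(points):
    """Orthogonal least-squares line through `points`, returned as (rho, theta)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points)
    syy = sum((p[1] - my) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    direction = 0.5 * math.atan2(2.0 * sxy, sxx - syy)  # principal axis angle
    theta = direction + math.pi / 2.0                   # normal is perpendicular to it
    rho = mx * math.cos(theta) + my * math.sin(theta)
    return rho, theta


def ransac_line(points, threshold, iterations=200, seed=0):
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(iterations):
        p, q = rng.sample(points, 2)                 # random sampling
        if p == q:
            continue                                 # coincident points define no line
        rho, theta = line_from_two_points(p, q)      # model calculation
        inliers = [pt for pt in points               # inlier verification
                   if distance_to_line(pt, rho, theta) < threshold]
        if len(inliers) > len(best_inliers):         # keep the best model so far
            best_inliers = inliers
    if len(best_inliers) < 2:
        raise ValueError("no line model found")
    return refit_least_squares(best_inliers), best_inliers  # final fitting


# Toy data (assumed): 20 points on y = 2x + 1 plus three gross outliers.
line_pts = [(float(x), 2.0 * x + 1.0) for x in range(20)]
noise_pts = [(5.0, 40.0), (10.0, -30.0), (15.0, 60.0)]
(rho, theta), inliers = ransac_line(line_pts + noise_pts, threshold=0.1)
```

On this toy input the consensus set should contain only the collinear points, so the outliers do not influence the final fit. For real conductor spans the threshold and iteration count would need tuning to the point spacing and noise level.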

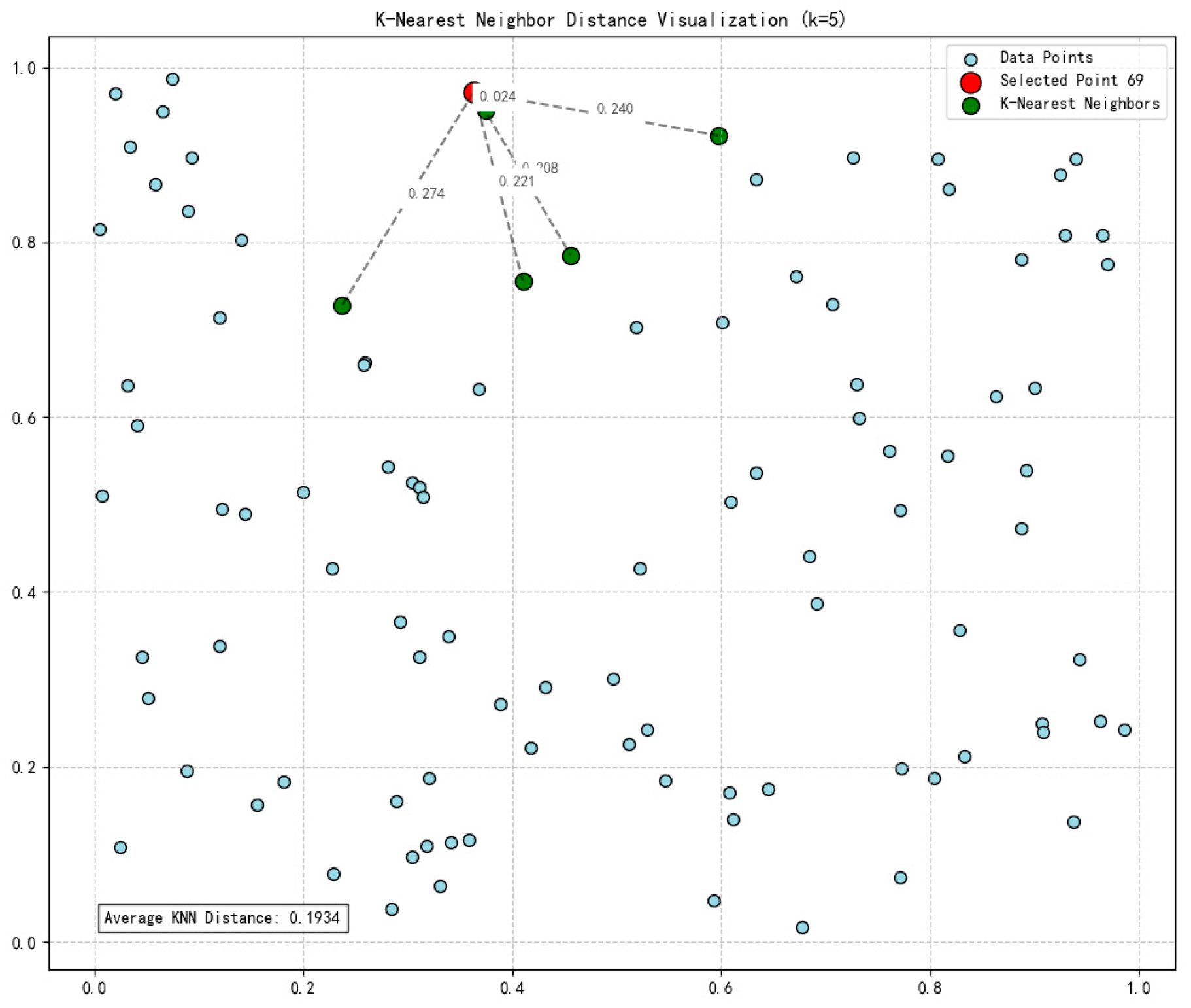

2.3.2. Principles of Vegetation Point Cloud Filtering and Feature Retention

- 1. Input the point cloud data classified as “vegetation”.

- 2. Construct a KD-Tree to accelerate neighbourhood search and improve computational efficiency.

- 3. For each point pᵢ, calculate the evaluation distance dᵢ of its K nearest neighbours using Equation (2). Aggregate all dᵢ values, set a density threshold T, and exclude points with dᵢ exceeding T.

- 4. The retained point cloud contains vegetation structure such as canopies and branches, with noise and isolated points effectively eliminated.
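The filtering steps above can be sketched with a small KD-Tree and a K-nearest-neighbour distance test. Equation (2) is not reproduced here, so this sketch assumes that dᵢ is the mean distance from a point to its K nearest neighbours and that T is set to the mean of all dᵢ plus `alpha` standard deviations. Both choices, along with the function names and parameter values, are assumptions for illustration only.

```python
import heapq
import math
import statistics


def build_kdtree(pts, indices, depth=0):
    """Build a KD-Tree as nested tuples (point_index, axis, left, right)."""
    if not indices:
        return None
    axis = depth % 3
    ordered = sorted(indices, key=lambda i: pts[i][axis])
    mid = len(ordered) // 2
    return (ordered[mid], axis,
            build_kdtree(pts, ordered[:mid], depth + 1),
            build_kdtree(pts, ordered[mid + 1:], depth + 1))


def knn_search(node, pts, query, k, heap):
    """Fill `heap` (max-heap via negated distances) with the k neighbours nearest to pts[query]."""
    if node is None:
        return
    idx, axis, left, right = node
    if idx != query:
        d = math.dist(pts[query], pts[idx])
        if len(heap) < k:
            heapq.heappush(heap, (-d, idx))
        elif d < -heap[0][0]:
            heapq.heapreplace(heap, (-d, idx))
    diff = pts[query][axis] - pts[idx][axis]
    near, far = (left, right) if diff < 0 else (right, left)
    knn_search(near, pts, query, k, heap)
    # Visit the far side only if the splitting plane is closer than the current k-th neighbour.
    if len(heap) < k or abs(diff) < -heap[0][0]:
        knn_search(far, pts, query, k, heap)


def density_filter(pts, k=4, alpha=1.0):
    """Drop points whose mean k-NN distance exceeds T = mean + alpha * std (assumed rule)."""
    if len(pts) <= k:
        raise ValueError("need more points than neighbours")
    tree = build_kdtree(pts, list(range(len(pts))))
    mean_dists = []
    for i in range(len(pts)):
        heap = []
        knn_search(tree, pts, i, k, heap)
        mean_dists.append(sum(-d for d, _ in heap) / k)
    threshold = statistics.mean(mean_dists) + alpha * statistics.pstdev(mean_dists)
    kept = [p for p, d in zip(pts, mean_dists) if d <= threshold]
    return kept, threshold


# Toy data (assumed): a dense 3 x 3 x 3 grid standing in for a canopy, plus one stray point.
grid = [(float(x), float(y), float(z)) for x in range(3) for y in range(3) for z in range(3)]
stray = (50.0, 50.0, 50.0)
kept, threshold = density_filter(grid + [stray], k=4, alpha=1.0)
```

The recursive tree is adequate for a demonstration. For point clouds of realistic size, a library KD-Tree would be the more practical choice.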

2.4. Transmission Line Operation Condition Monitoring

2.4.1. General

2.4.2. Condition Monitoring of Corridor Hazards

2.4.3. Arc Sag Condition Monitoring

3. Experimental Realisation

3.1. Experimental Environment and Experimental Data

3.1.1. Hardware and Software Configuration

3.1.2. Data Acquisition and Calibration Process

- 1. Data Source and Acquisition Parameters

- 2. Multi-Equipment Calibration

3.2. Experimental Flow

3.2.1. Transmission Line Extraction Experiments

- 1.

- Traditional clustering algorithm experimental process

- 2.

- Improvement in PointNet++ experimental process

- (i)

- Model Training and Improvement

- (ii)

- Ablation Experiment of Dual Attention Module

- (iii)

- Comparison with Advanced Methods

- (iv)

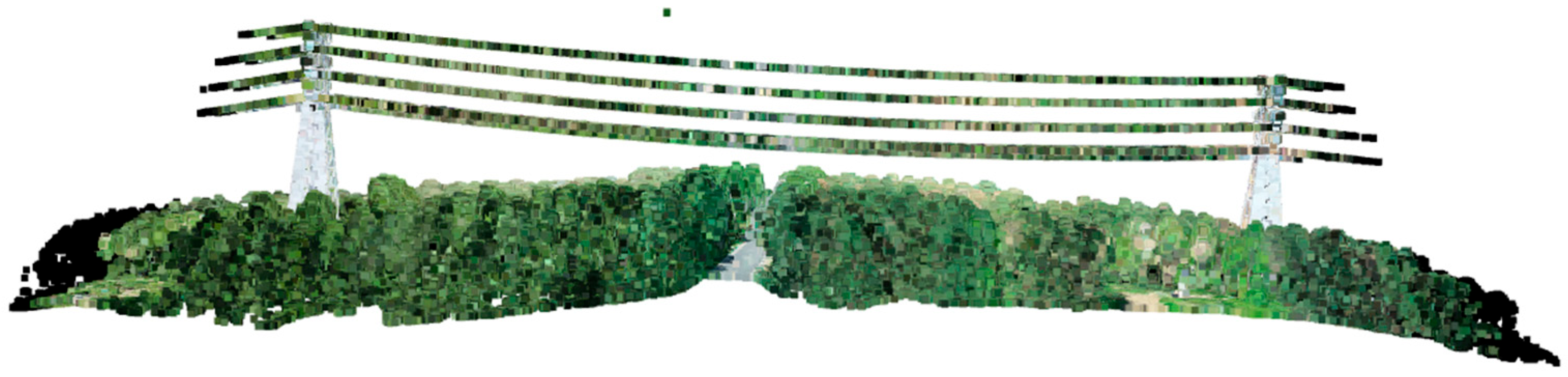

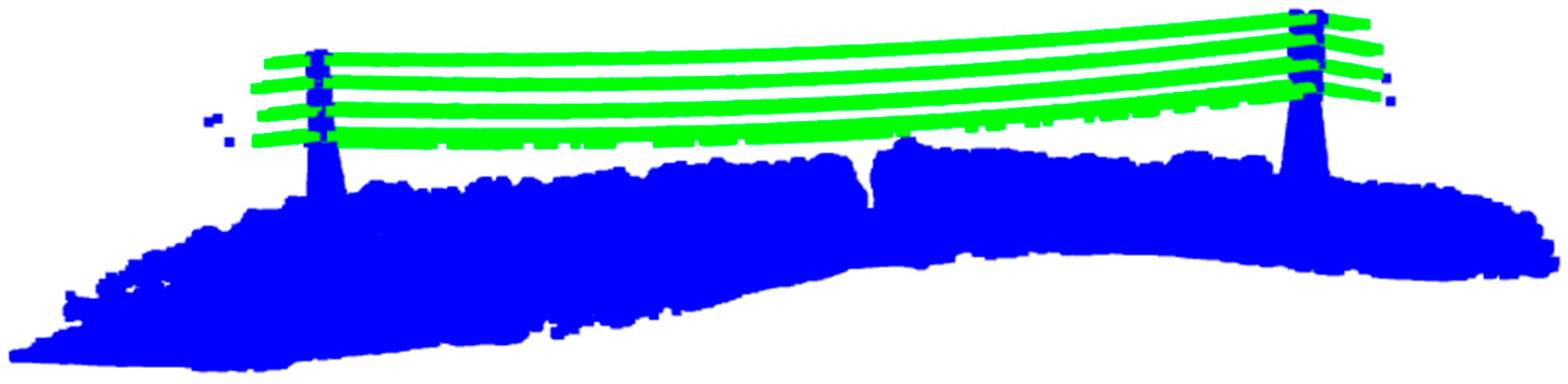

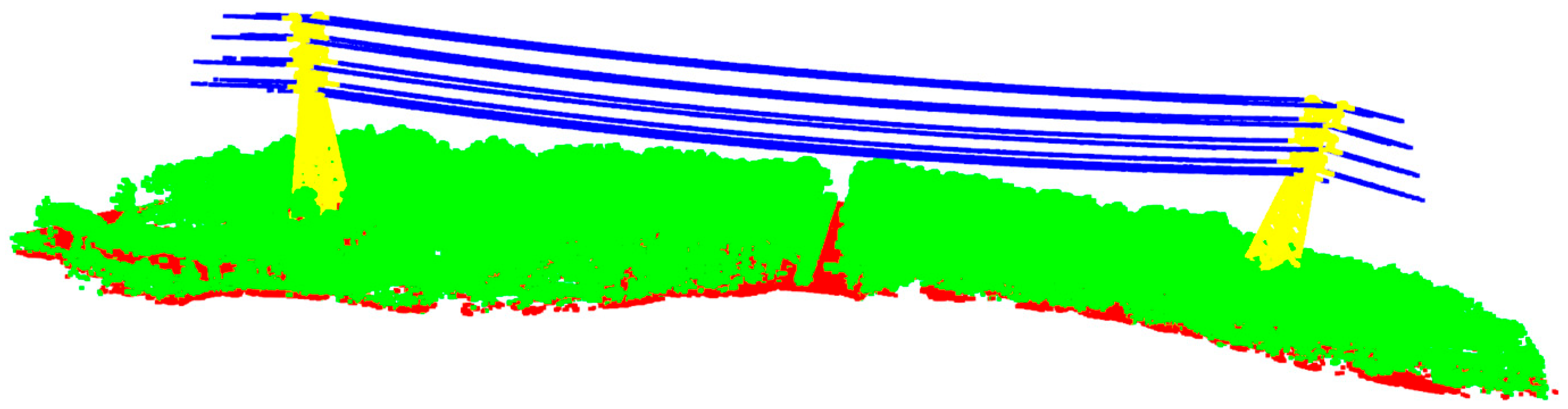

- Inference and Visualisation

- (v)

- Qualitative analysis

3.2.2. Scenario Recovery Validation

- 1.

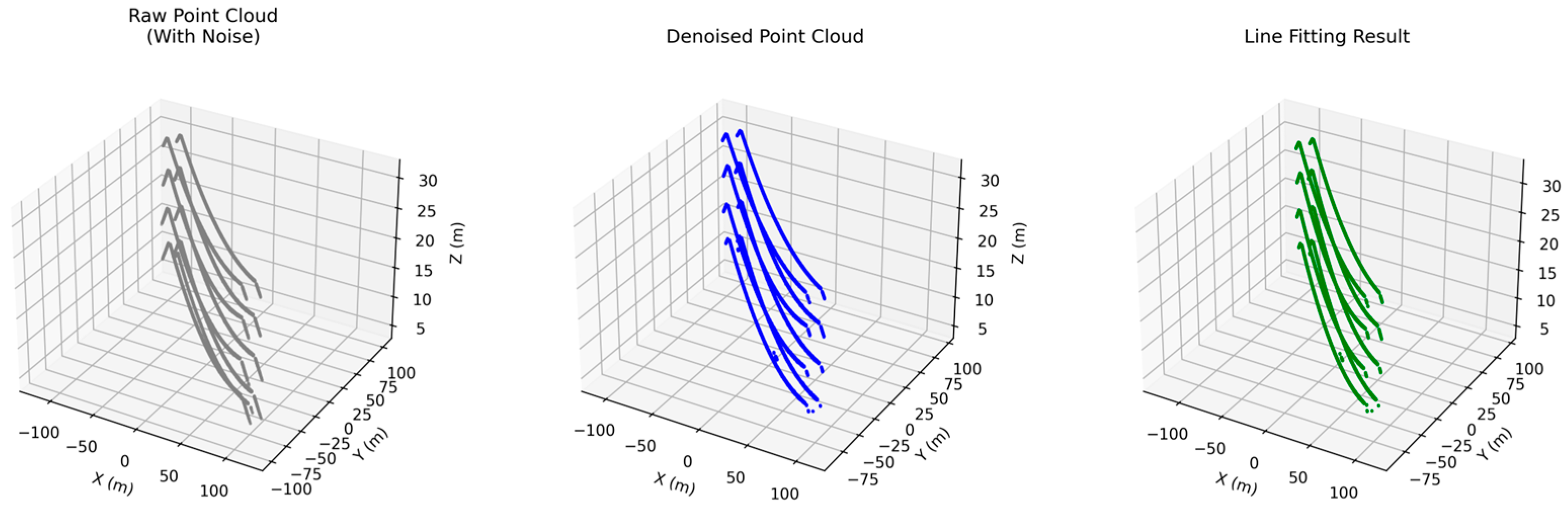

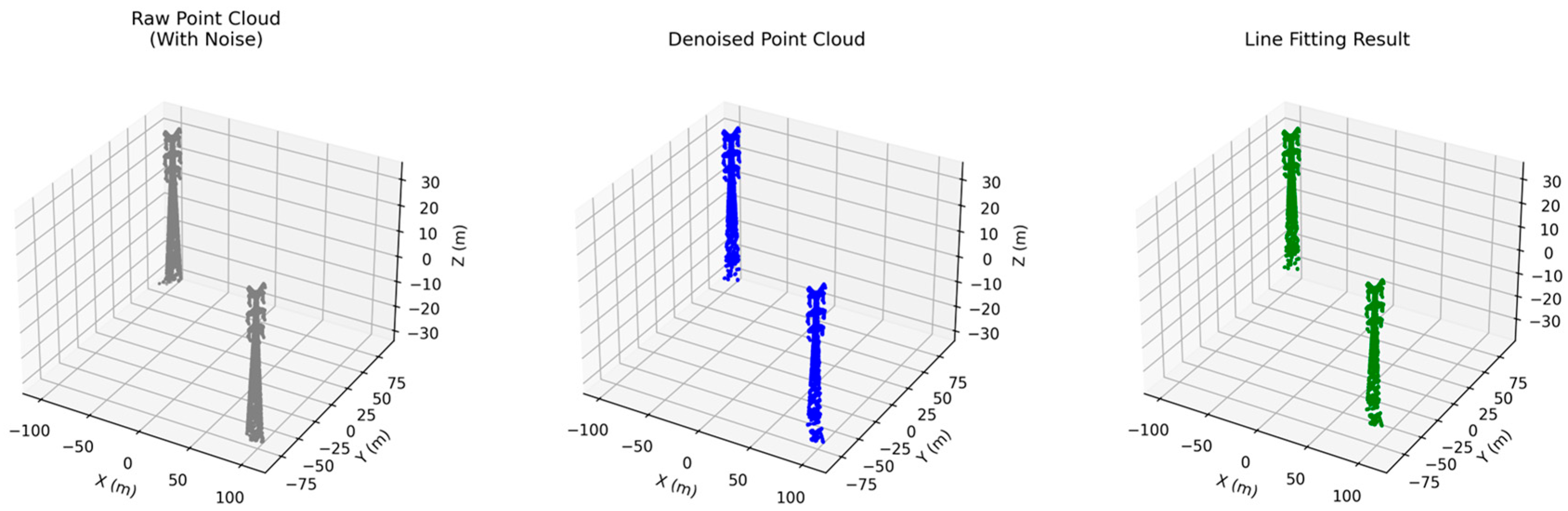

- Transmission line RANSAC straight-line fitting implementation

- (i)

- Preprocessing and parameter setting

- (ii)

- Fitting results

- 2.

- Pole tower RANSAC straight-line fitting realisation

- (i)

- Parameter adjustment strategy

- (ii)

- Fitting results

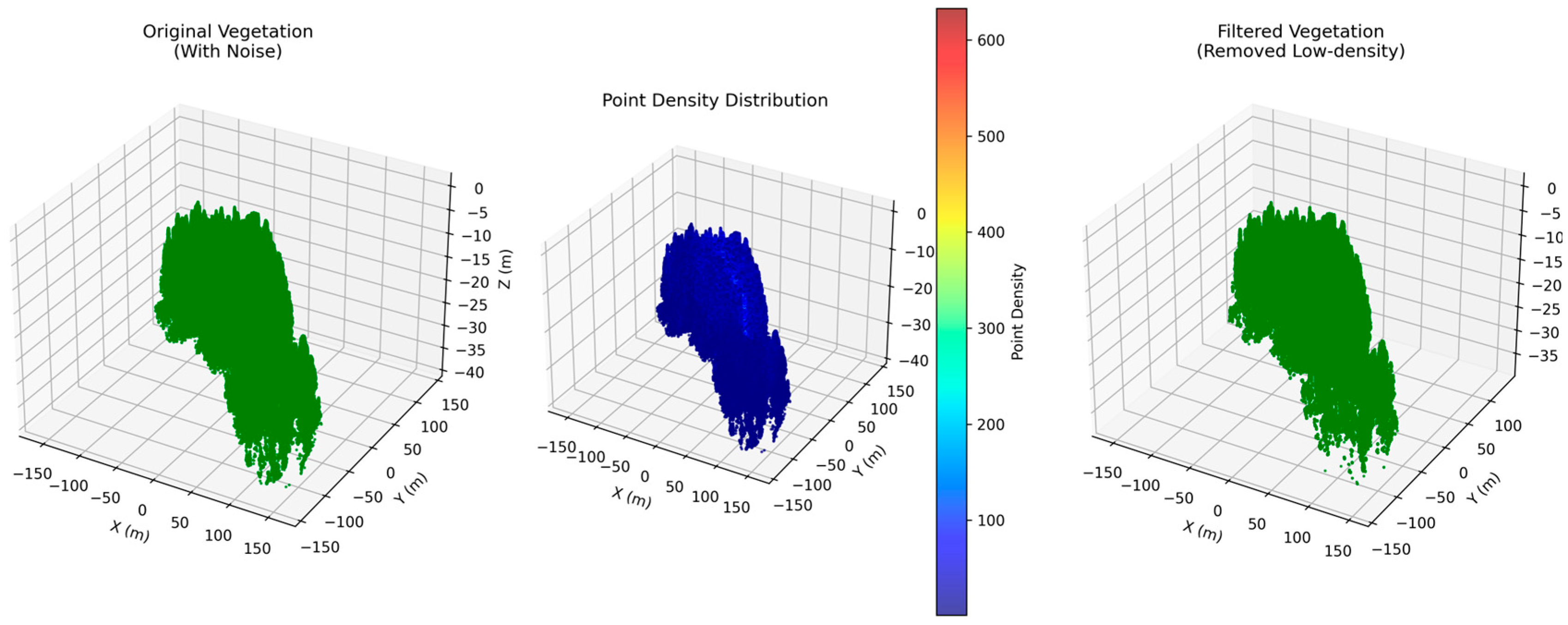

- 3.

- Realization of vegetation scenario recovery

- (i)

- Model Selection

- (ii)

- Core parameter setting

- (iii)

- Processing effect

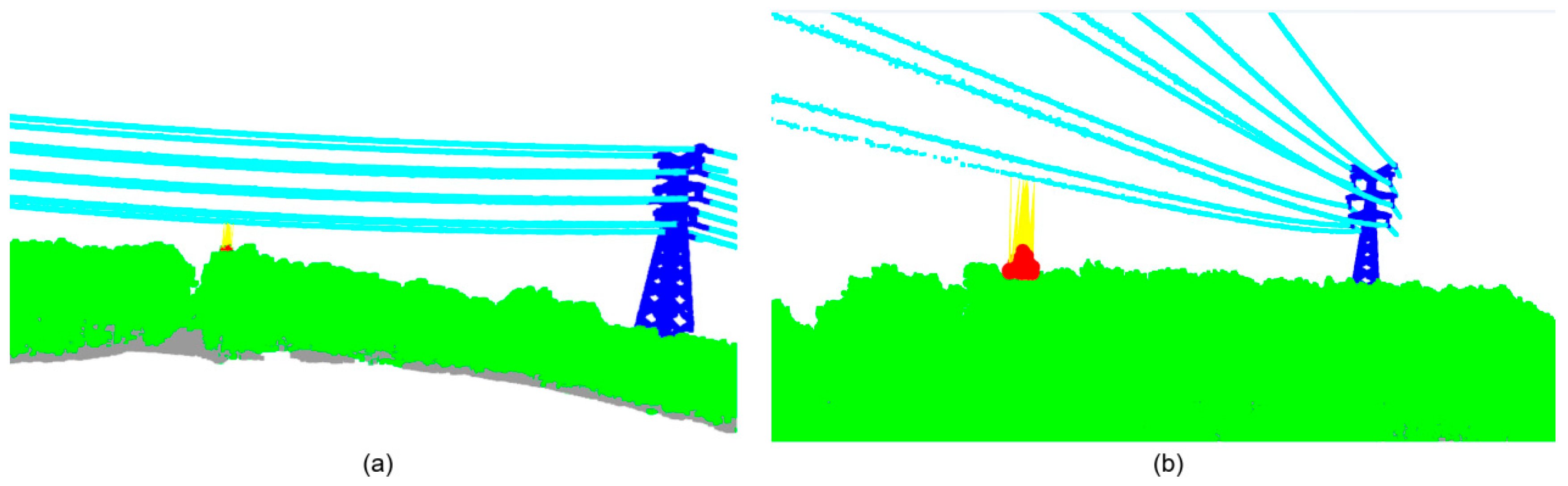

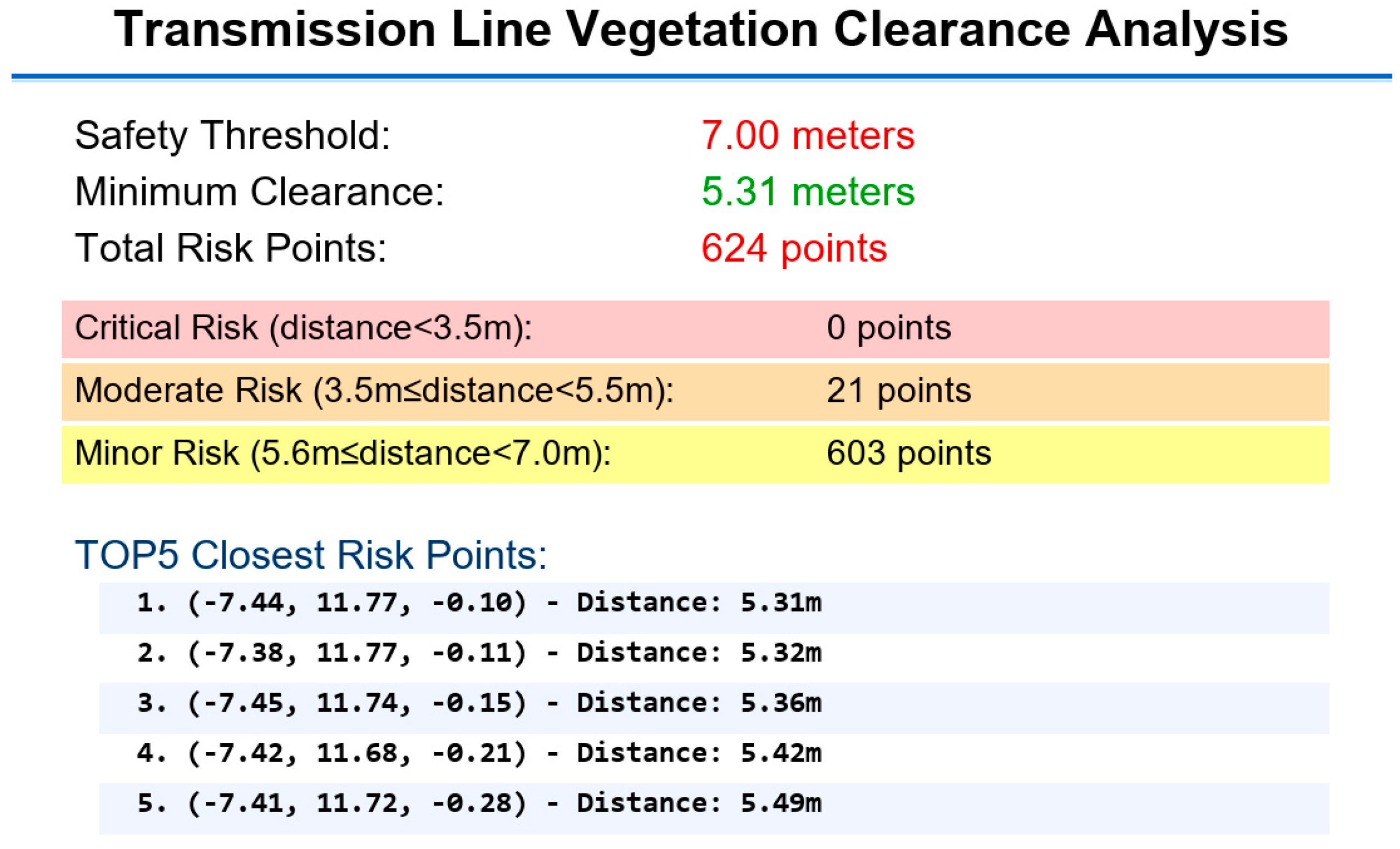

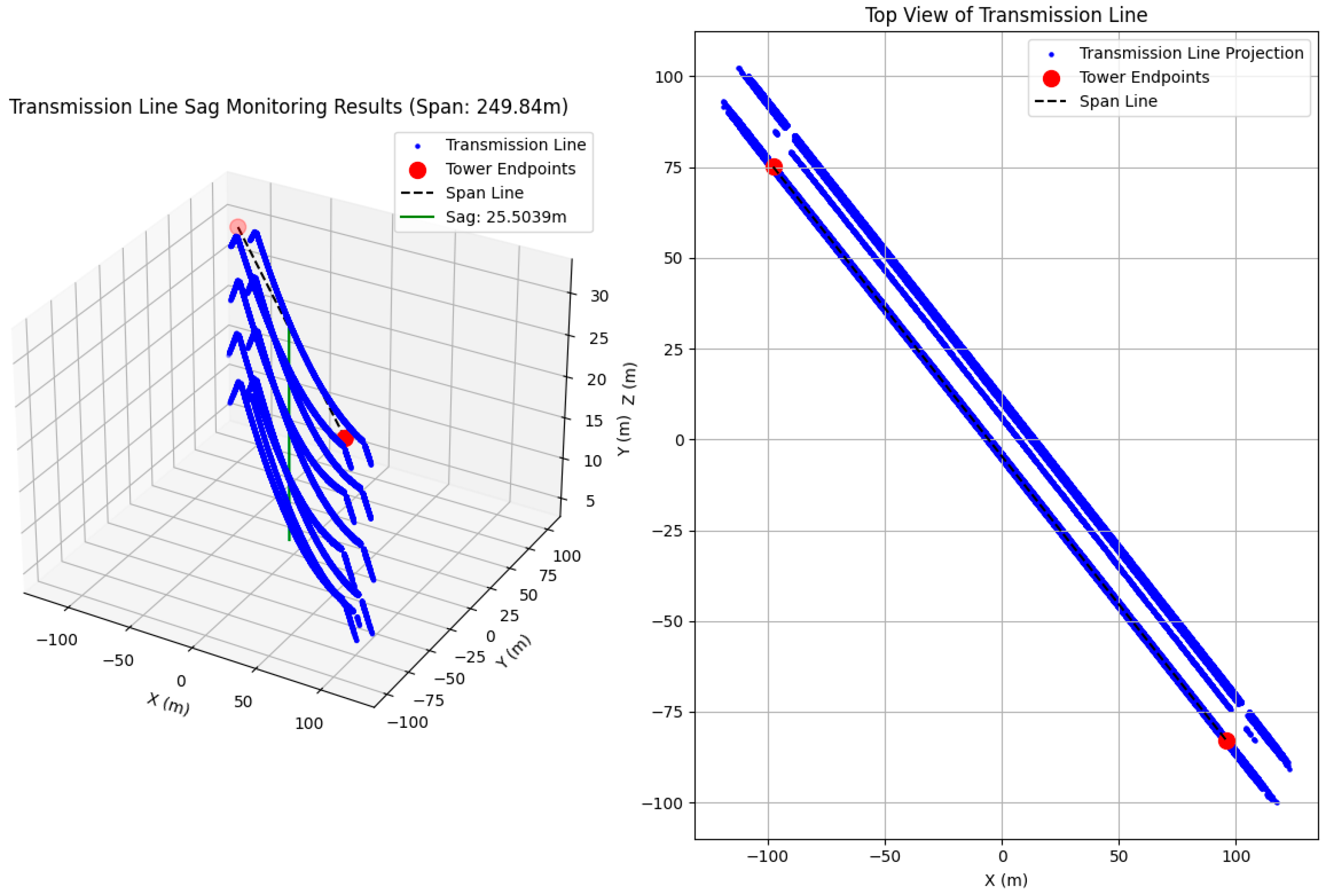

3.2.3. Operational Status Monitoring

- 1. Tree barrier risk monitoring

- 2. Arc sag state monitoring

- (i) Data acquisition and preprocessing

- (ii) Key parameters and algorithm flow

- (iii) Experimental results and analysis

4. Conclusions and Outlook

5. Limitations

Author Contributions

Funding

Conflicts of Interest

References

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Adv. Neural Inf. Process. Syst. 2017, 30, 5105–5114.

- Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337.

- Jiang, M.; Wu, Y.; Zhao, T.; Zhao, Z.; Lu, C. Pointsift: A sift-like network module for 3d point cloud semantic segmentation. arXiv 2018, arXiv:1807.00652.

- Shi, S.; Wang, X.; Li, H. Pointrcnn: 3D object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705.

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3dssd: Point-based 3D single stage object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11040–11048.

- Ye, M.; Xu, S.; Cao, T. Hvnet: Hybrid voxel network for lidar based 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1631–1640.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. Randla-net: Efficient semantic segmentation of large-scale point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11108–11117.

- Li, Z.; Yao, Y.; Quan, Z.; Xie, J.; Yang, W. SIENet: Spatial Information Enhancement Network for 3D Object Detection from Point Cloud. arXiv 2021, arXiv:2103.15396.

- Qian, R.; Lai, X.; Li, X. BADet: Boundary-aware 3D object detection from point clouds. Pattern Recognit. 2022, 125, 108524.

- Kolodiazhnyi, M.; Vorontsova, A.; Konushin, A.; Rukhovich, D. Oneformer3d: One transformer for unified point cloud segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 20943–20953.

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. Scannet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5828–5839.

- Zhao, W.; Zhang, R.; Wang, Q.; Cheng, G.; Huang, K. BFANet: Revisiting 3D Semantic Segmentation with Boundary Feature Analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 10–17 June 2025; pp. 29395–29405.

- Chen, C.; Mai, X.; Song, S.; Peng, X.; Xu, W.; Wang, K. Automatic power lines extraction method from airborne LiDAR point cloud. Geomat. Inf. Sci. Wuhan Univ. 2015, 40, 1600–1605.

- Guo, B.; Li, Q.; Huang, X.; Wang, C. An improved method for power-line reconstruction from point cloud data. Remote Sens. 2016, 8, 36.

- Feng, Z.; Wang, X.; Zhou, X.; Hu, D.; Li, Z.; Tian, M. Point Cloud Extraction of Tower and Conductor in Overhead Transmission Line Based on PointCNN Improved. In Proceedings of the 2023 3rd International Symposium on Computer Technology and Information Science (ISCTIS), Chengdu, China, 7–9 July 2023; IEEE: New York, NY, USA, 2023; pp. 1009–1014.

- Han, J.; Liu, K.; Li, W.; Zhang, F.; Xia, X.-G. Generating Inverse Feature Space for Class Imbalance in Point Cloud Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 5778–5793.

- Tian, Y.; Wu, Z.; Li, M.; Wang, B.; Zhang, X. Forest fire spread monitoring and vegetation dynamics detection based on multi-source remote sensing images. Remote Sens. 2022, 14, 4431.

| Equipment Type | Model | Key Parameters |
|---|---|---|
| Servo Motor | Panasonic A6 | Control accuracy: ±0.01°, Speed response frequency: 500 Hz |
| Controller | TI TMS320F28335 | Floating-point operation frequency: 150 MHz |
| LiDAR | Velodyne VLP-16 | Scanning frequency: 10 Hz, Point cloud density: 50 points/m², Vertical field of view: ±15° |
| IMU | Xsens MTI-100 | Attitude accuracy: 0.1°/h, Sampling rate: 200 Hz |
| GPS | Trimble R10 | Positioning mode: RTK, Plane accuracy: ±2 cm, Coordinate system: WGS84 |

| Evaluation Index | No Servo Control | Low-Precision Servo Control | High-Precision Servo Control (This Study) |
|---|---|---|---|
| Trajectory Drift (within 1 km) | ±15 cm | ±5 cm | ±2 cm |
| Roll Angle Attitude Error | ±2.5° | ±0.8° | ±0.1° |
| Point Cloud Density Uniformity | 60% | 85% | 98% |
| Transmission Line Geometric Fidelity | 75% | 88% | 96% |

| Model Configuration | OA (%) | Transmission Line mIoU (%) | Transmission Line F1 Score (%) | Recall Rate Under Vegetation Interference (%) |
|---|---|---|---|---|
| Original PointNet++ (No Attention) | 86.2 | 82.5 | 81.8 | 78.3 |
| PointNet++ with Only Gradient Attention | 88.5 | 85.7 | 84.9 | 83.1 |
| PointNet++ with Only Point Attention | 89.1 | 86.3 | 85.5 | 84.5 |
| PointNet++ with Dual Attention (This Study) | 92.3 | 89.8 | 89.2 | 91.7 |

| Method | Complex Scene Accuracy (%) | Inference Speed (1 Million Points, s) | Core Advantage Difference |
|---|---|---|---|
| Original PointNet++ | 86.2 | 10 | General point cloud processing, no power scene adaptation |
| RandLA-Net | 88.7 | 6 | Efficient processing of large-scale point clouds, low accuracy for small targets (transmission lines) |
| Feng et al. [19] | 85.6 | 12 | Density feature assistance, weak semantic feature capture |
| Improved PointNet++ (This Study) | 92.3 | 8 | Dual attention module + power scene loss function (cross-entropy + Dice), dual improvement of semantic and edge features |
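The last row of the table above refers to a loss that combines cross-entropy with Dice. As a hedged sketch of how such a combination might look for a binary "transmission line versus background" labelling, the example below sums a mean cross-entropy term and a soft Dice term. The equal weighting (`dice_weight=1.0`), the smoothing constants, the function names, and the toy probabilities are assumptions; the exact form used in this study is not given in this section.

```python
import math


def cross_entropy(probs, labels, eps=1e-7):
    """Mean binary cross-entropy; probs are predicted line probabilities in [0, 1]."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


def dice_loss(probs, labels, smooth=1e-6):
    """Soft Dice loss: 1 - 2|P ∩ Y| / (|P| + |Y|), computed on probabilities."""
    intersection = sum(p * y for p, y in zip(probs, labels))
    return 1.0 - (2.0 * intersection + smooth) / (sum(probs) + sum(labels) + smooth)


def combined_loss(probs, labels, dice_weight=1.0):
    """Cross-entropy plus weighted Dice (the weighting is an assumption)."""
    return cross_entropy(probs, labels) + dice_weight * dice_loss(probs, labels)


# Toy example (assumed): one line point among seven background points.
labels = [1, 0, 0, 0, 0, 0, 0, 0]
confident = [0.99, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01]
uninformative = [0.5] * 8
loss_confident = combined_loss(confident, labels)
loss_uninformative = combined_loss(uninformative, labels)
```

Because the Dice term is normalised by the size of the predicted and true line sets, it stays sensitive to the minority class even when background points dominate, which is a common reason for pairing it with cross-entropy on thin structures such as conductors.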


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

An, Z.; Pei, Y. Research on Power Laser Inspection Technology Based on High-Precision Servo Control System. Photonics 2025, 12, 944. https://doi.org/10.3390/photonics12090944
