A Novel Method for Water Surface Debris Detection Based on YOLOV8 with Polarization Interference Suppression

Abstract

1. Introduction

2. Related Work

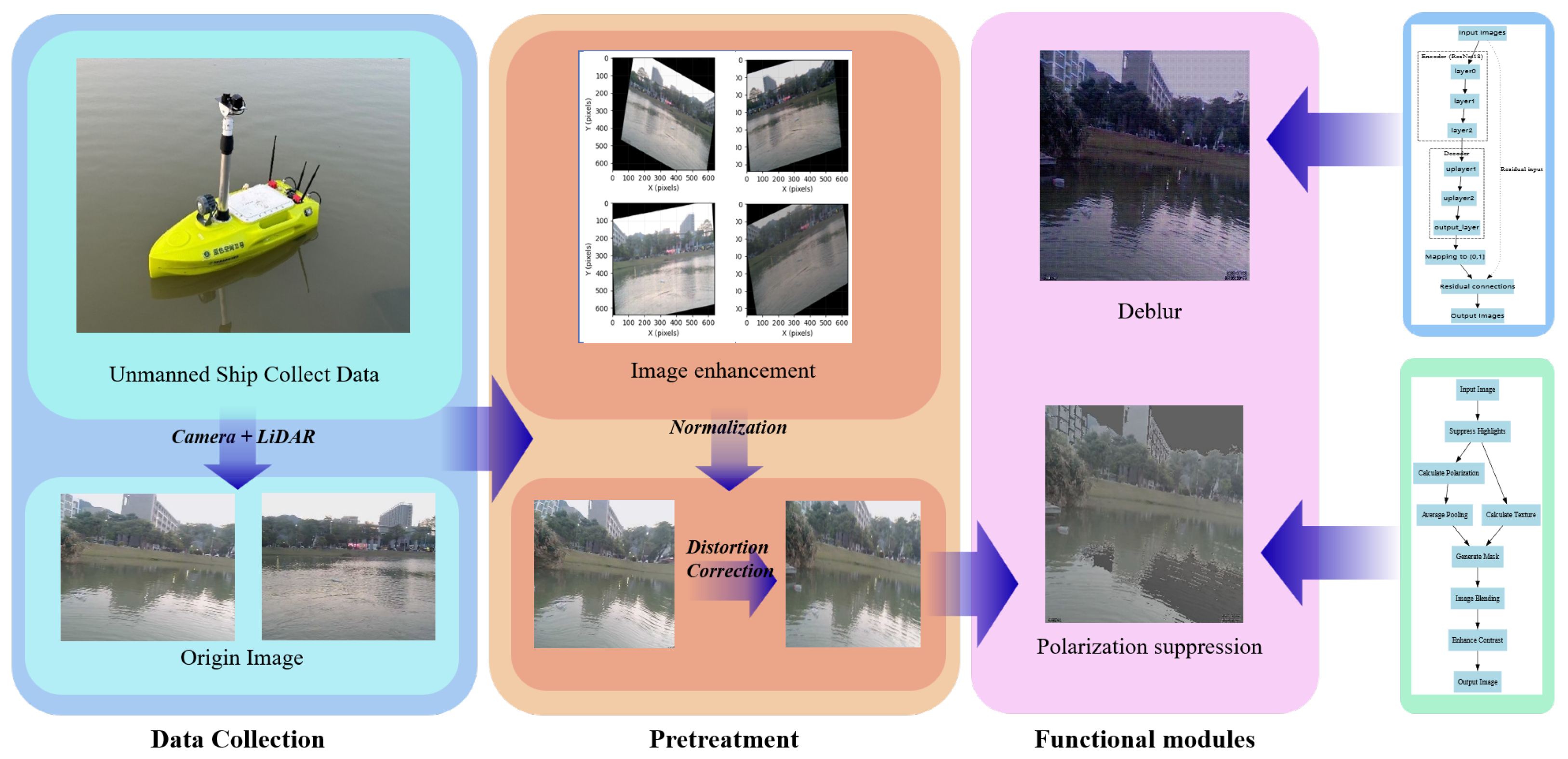

3. Methodology

3.1. Data Processing

3.2. Polarization Feature Enhancement Network

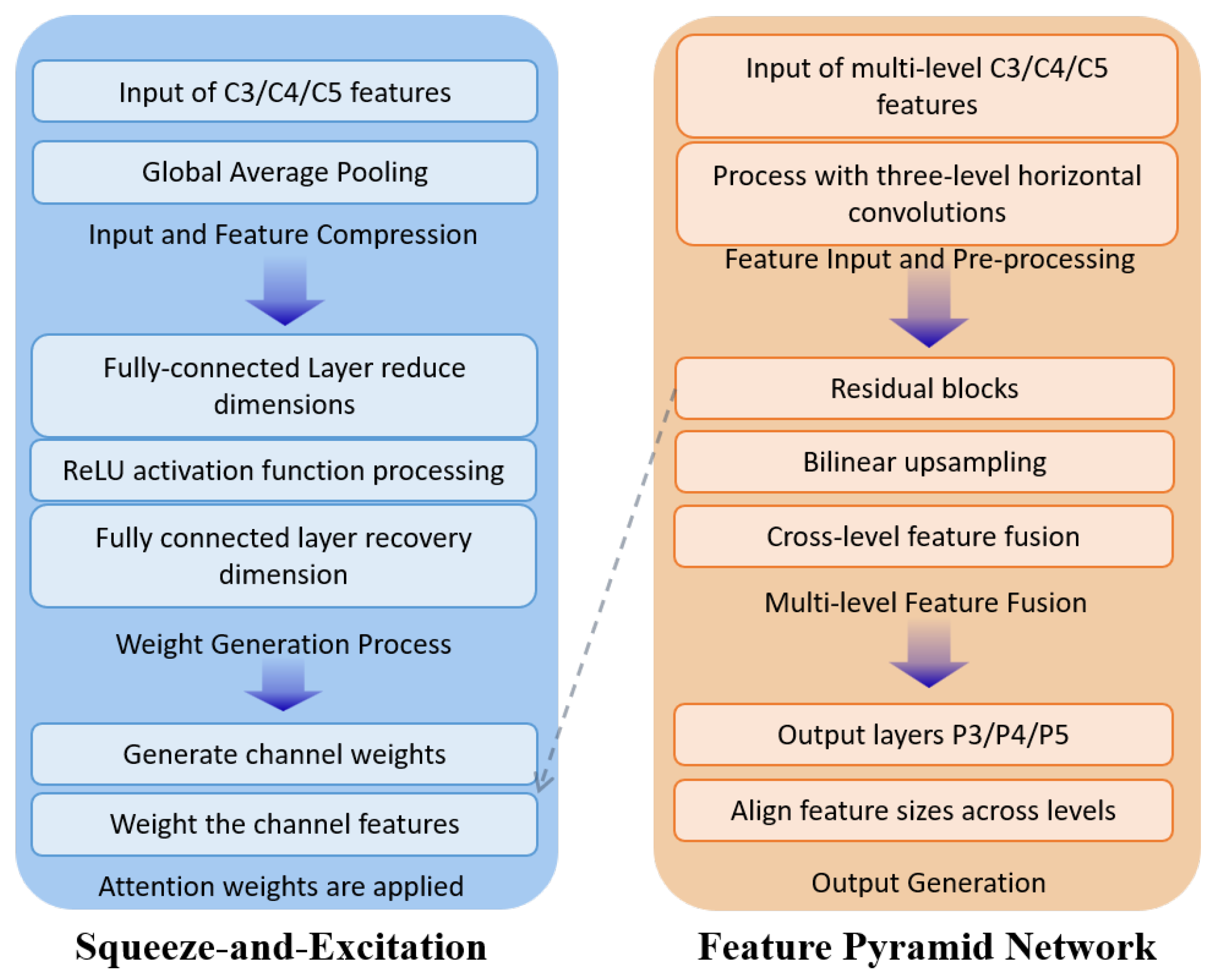

3.2.1. Feature Pyramid Network with Squeeze-and-Excitation

3.2.2. Polarization-Aware Feature Enhancement Module (PAFEM)

3.2.3. Deblurring Feature Compensation Network (DFCN)

3.2.4. Light Specular Reflection Processing Module (LSRP)

3.2.5. Module Integration and Collaborative Working

3.3. Learning Rate

3.4. Loss Function

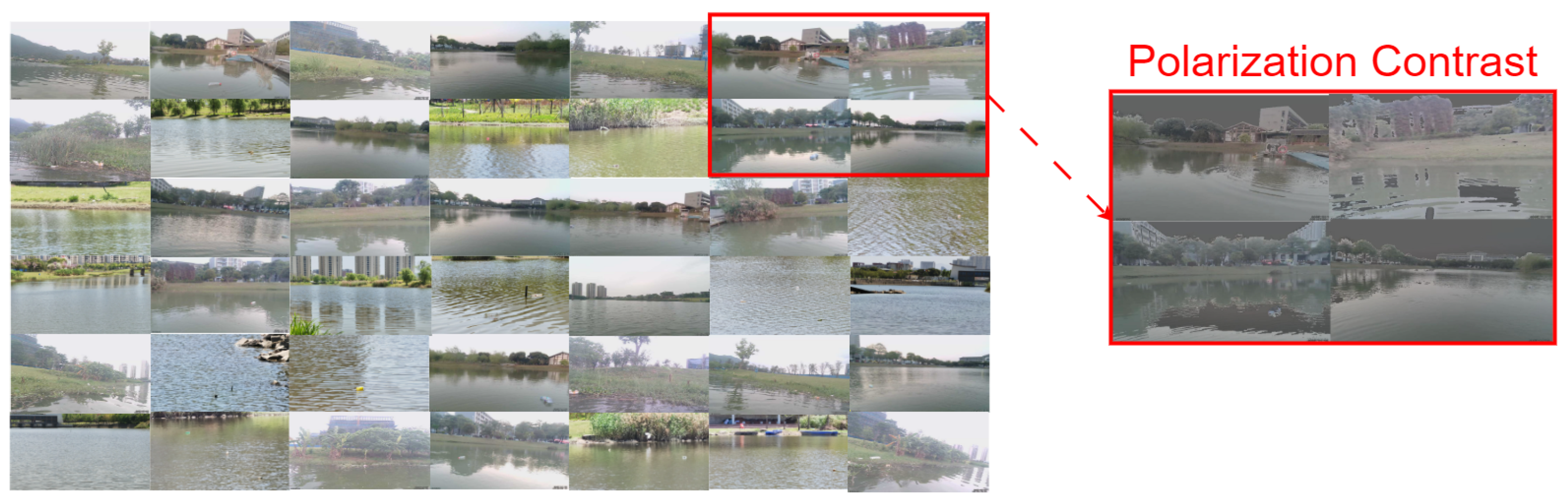

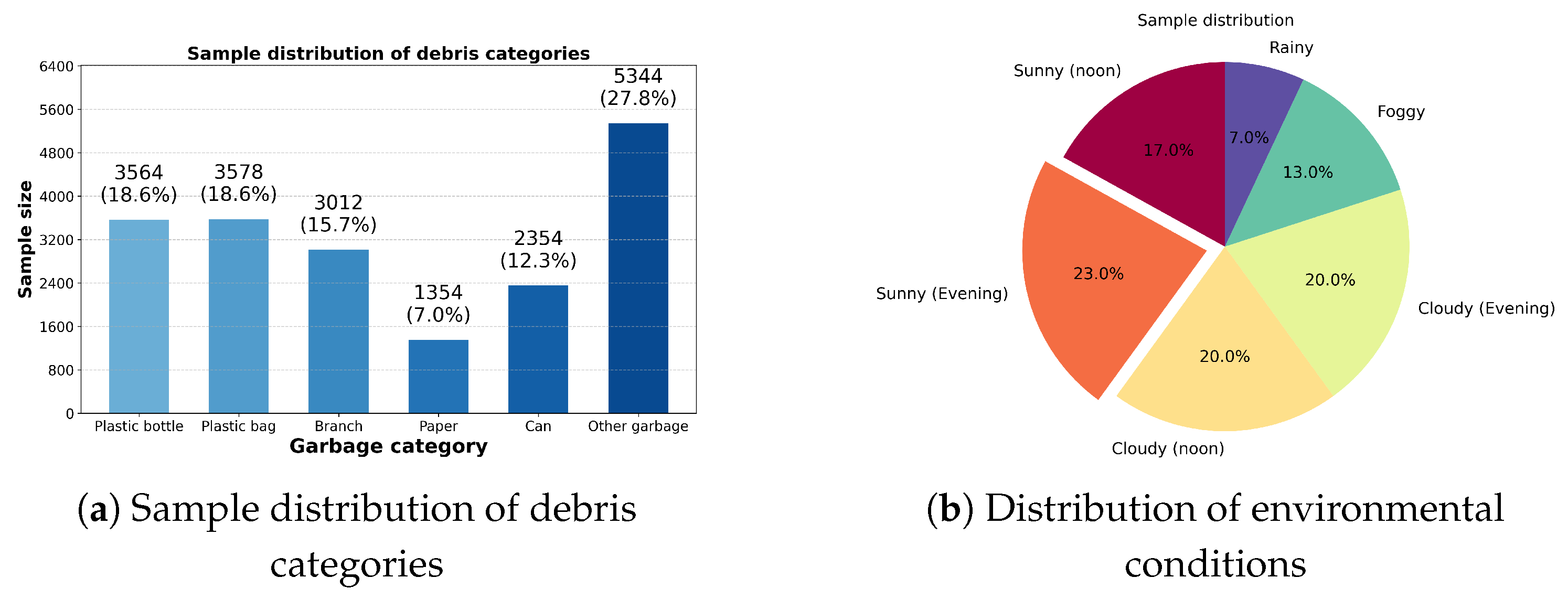

4. Description of PSF-IMG Dataset

Dataset Analysis

5. Experimental Results

Algorithmic Analysis

6. Discussion

6.1. Analysis of Key Performance Improvements

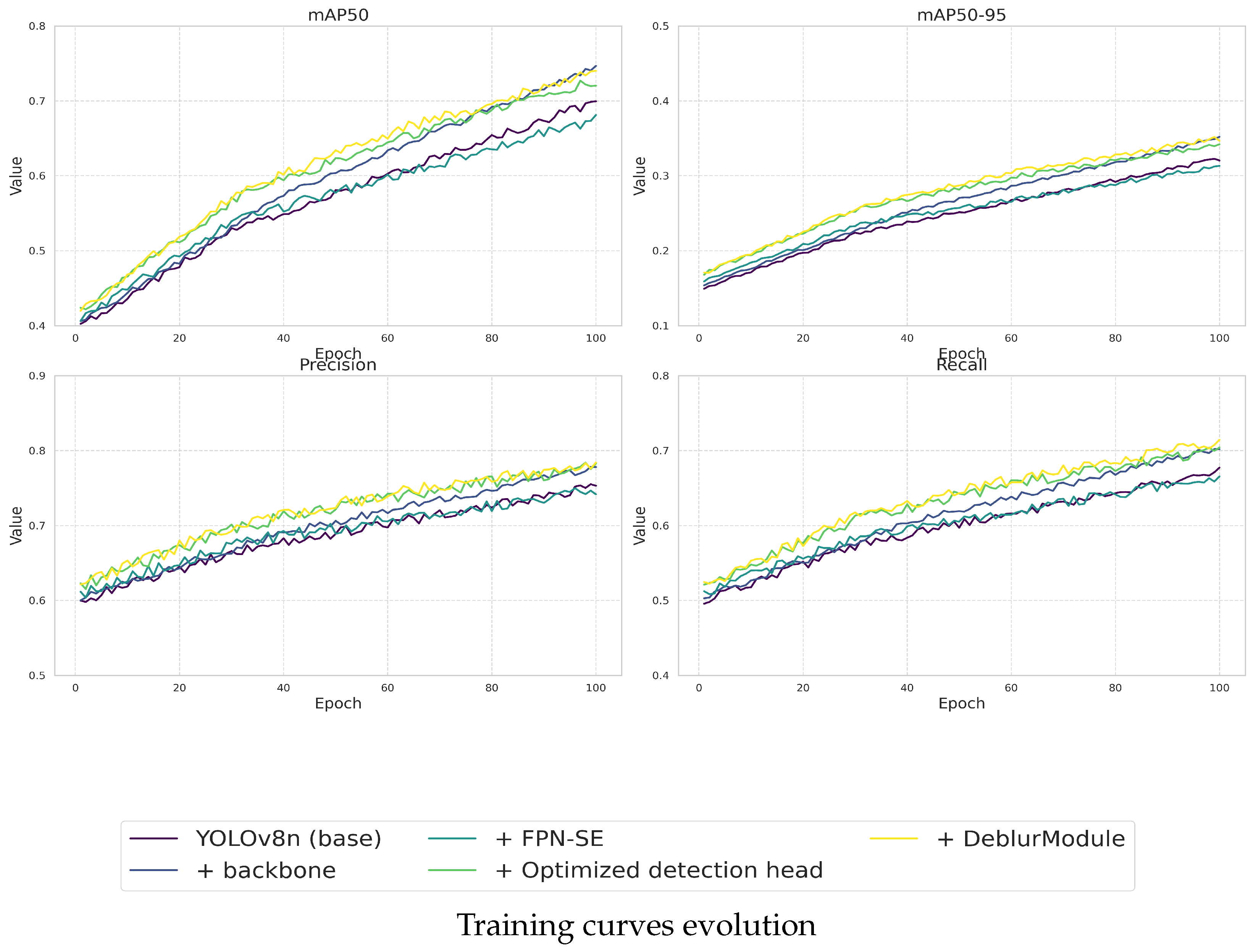

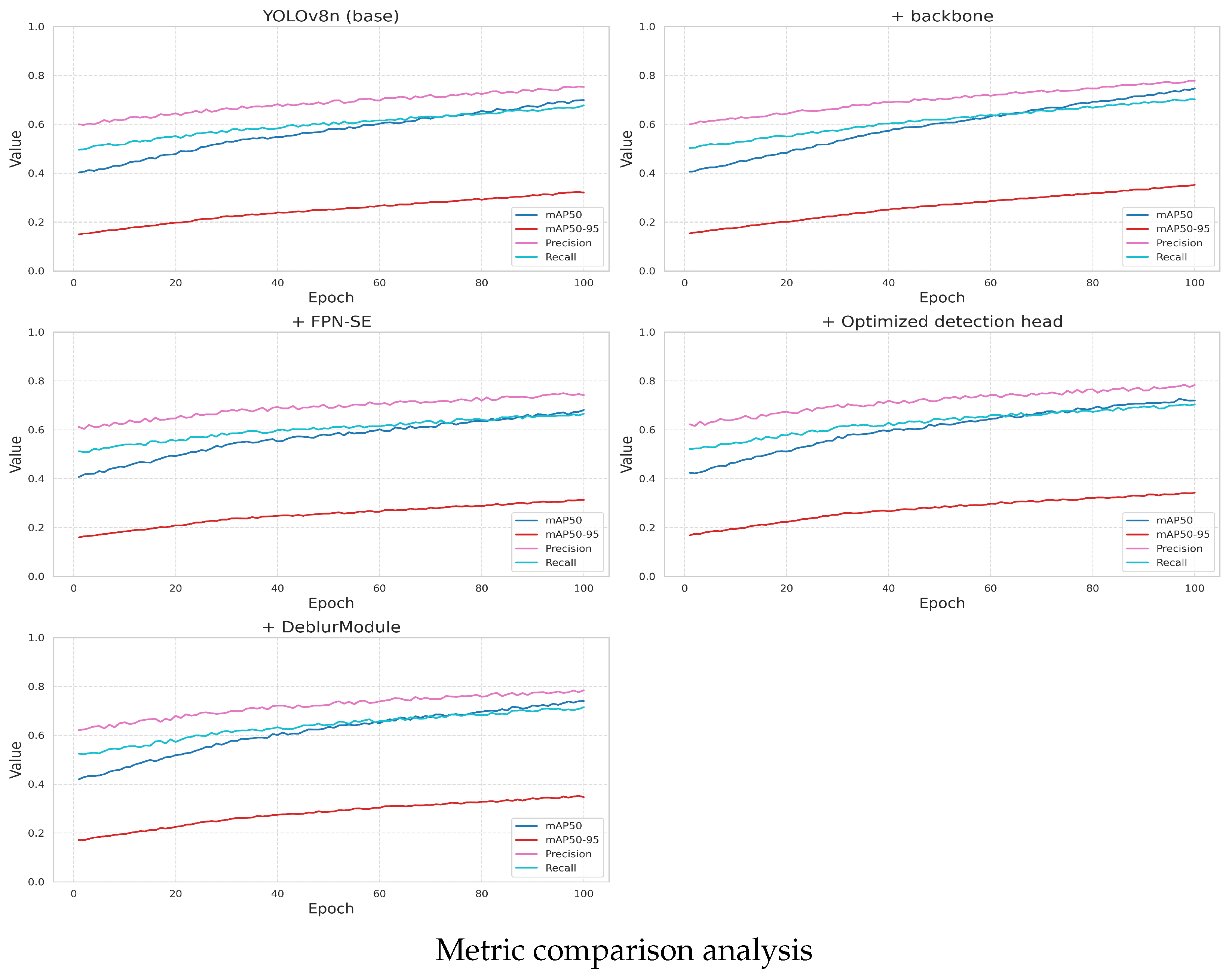

6.2. Module-Level Ablation Study

6.3. Comparison with Established Detection Frameworks

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Environmental Condition | Sample Size | Percentage (%) |
|---|---|---|
| Sunny (noon) | 2550 | 17.0 |
| Sunny (evening) | 3450 | 23.0 |
| Cloudy (noon) | 3000 | 20.0 |
| Cloudy (evening) | 3000 | 20.0 |
| Foggy | 1950 | 13.0 |
| Rainy | 1050 | 7.0 |
| Total | 15,000 | 100.0 |

| Debris Category | Sample Size | Percentage (%) |
|---|---|---|
| Plastic bottle | 1354 | 7.0 |
| Plastic bag | 2354 | 12.3 |
| Branch | 3012 | 15.7 |
| Paper | 3564 | 18.6 |
| Can | 3578 | 18.6 |
| Other debris | 5344 | 27.8 |
| Total | 19,206 | 100.0 |
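As a quick consistency check, the percentage column of the debris-category table can be recomputed from the sample sizes. The sketch below is not part of the original article; the names `debris_counts` and `percentage_shares` are hypothetical and introduced only for illustration, and the counts are copied from the table.

```python
# Counts copied from the debris-category table (hypothetical variable name).
debris_counts = {
    "Plastic bottle": 1354,
    "Plastic bag": 2354,
    "Branch": 3012,
    "Paper": 3564,
    "Can": 3578,
    "Other debris": 5344,
}


def percentage_shares(counts):
    """Return each category's share of the total in percent, rounded to one decimal."""
    total = sum(counts.values())
    return {name: round(100.0 * n / total, 1) for name, n in counts.items()}


total_instances = sum(debris_counts.values())
shares = percentage_shares(debris_counts)

# Print the recomputed table for comparison with the published one.
for name, pct in shares.items():
    print(f"{name:15s} {debris_counts[name]:6d} {pct:5.1f}")
print(f"{'Total':15s} {total_instances:6d}")
```

Under these assumptions the recomputed shares agree with the published column to one decimal place.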

| Model | AP | AP50 | AP75 | AP-S | AP-M | AP-L |
|---|---|---|---|---|---|---|
| Faster R-CNN | 0.18 | 0.35 | 0.08 | 0.03 | 0.30 | 0.85 |
| SSD | 0.20 | 0.42 | 0.10 | 0.05 | 0.25 | 0.75 |
| EfficientDet-D1 | 0.25 | 0.45 | 0.12 | 0.08 | 0.35 | 0.78 |
| Mask R-CNN | 0.22 | 0.38 | 0.11 | 0.04 | 0.28 | 0.88 |
| Cascade R-CNN | 0.16 | 0.39 | 0.10 | 0.04 | 0.22 | 0.89 |
| RetinaNet | 0.22 | 0.48 | 0.15 | 0.06 | 0.20 | 0.92 |
| YOLOv8 | 0.37 | 0.86 | 0.27 | 0.20 | 0.40 | 0.55 |
| YOLOv8-PDL | 0.41 | 0.84 | 0.45 | 0.25 | 0.42 | 0.55 |

| Model Configuration | mAP50 | mAP50-95 | Precision | Recall |
|---|---|---|---|---|
| Baseline | 0.761 | 0.337 | 0.679 | 0.649 |
| + Enhanced Backbone | 0.766 | 0.337 | 0.691 | 0.660 |
| + FPN-SE | 0.766 | 0.346 | 0.703 | 0.671 |
| + LSRP | 0.784 | 0.346 | 0.703 | 0.690 |
| Full Model (PDL) | 0.786 | 0.366 | 0.715 | 0.690 |
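The contribution of each configuration in the ablation table can be read off more easily as step-by-step differences. The following sketch is illustrative only and is not taken from the article: it assumes that the rows are cumulative (each "+" row adds one component to the previous row), which the table suggests but does not state, and the names `ablation`, `metrics`, and `stepwise_deltas` are hypothetical.

```python
# Rows copied from the ablation table: (configuration, mAP50, mAP50-95, precision, recall).
# Assumption: configurations are cumulative, so consecutive rows differ by one component.
ablation = [
    ("Baseline", 0.761, 0.337, 0.679, 0.649),
    ("+ Enhanced Backbone", 0.766, 0.337, 0.691, 0.660),
    ("+ FPN-SE", 0.766, 0.346, 0.703, 0.671),
    ("+ LSRP", 0.784, 0.346, 0.703, 0.690),
    ("Full Model (PDL)", 0.786, 0.366, 0.715, 0.690),
]
metrics = ("mAP50", "mAP50-95", "Precision", "Recall")


def stepwise_deltas(rows):
    """Return (configuration, per-metric change relative to the previous row)."""
    result = []
    for prev, curr in zip(rows, rows[1:]):
        deltas = tuple(round(c - p, 3) for p, c in zip(prev[1:], curr[1:]))
        result.append((curr[0], deltas))
    return result


deltas = stepwise_deltas(ablation)

# Overall change from the baseline to the full model, per metric.
total_gain = tuple(
    round(full - base, 3) for base, full in zip(ablation[0][1:], ablation[-1][1:])
)

for name, change in deltas:
    print(name, dict(zip(metrics, change)))
print("Total gain", dict(zip(metrics, total_gain)))
```

Read this way, the full model gains 0.025 in mAP50, 0.029 in mAP50-95, 0.036 in precision, and 0.041 in recall over the baseline.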

| Dataset | mAP@[50-95] | AP50 | AP75 | AP-S | AP-M | AP-L |
|---|---|---|---|---|---|---|
| FloW (ICCV 2021) | 0.39 | 0.82 | 0.42 | 0.23 | 0.40 | 0.53 |
| SeaDronesSee | 0.37 | 0.79 | 0.39 | 0.21 | 0.38 | 0.52 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Y.; Lin, H.; Xiao, L.; Zhang, M.; Zhang, P. A Novel Method for Water Surface Debris Detection Based on YOLOV8 with Polarization Interference Suppression. Photonics 2025, 12, 620. https://doi.org/10.3390/photonics12060620
