Abstract

With the rapid development of intelligent transportation systems, obtaining vehicle status information across large-scale road networks is essential for the coordinated management and control of traffic conditions. Distributed Acoustic Sensing (DAS) demonstrates considerable potential in vehicle status perception due to its characteristics such as high spatial resolution and robustness in complex sensing environments. This study first reviews the limitations of conventional vehicle detection technologies and introduces the operating principles and technical features of DAS. Secondly, it investigates the correlations between DAS sensing characteristics, deployment process, and driving behavior characteristics. The results indicate that both the intensity of driving behavior and the degree of deployment–process coupling are positively associated with DAS signal sensing characteristics. This study further examines the principles, advantages, limitations, and application scenarios of various DAS signal processing algorithms. Traditional methods are becoming less effective in handling massive data generated by numerous distributed nodes. Although deep learning achieves high classification accuracy and low latency, its generalization capability remains limited. Finally, this study discusses DAS-based traffic status perception frameworks and outlines key research frontiers in vehicle status monitoring using DAS technology.

1. Introduction

As of the end of 2023, China’s total highway mileage reached 5,436,800 km, with a vehicle population of 435 million. The transportation system is facing severe challenges due to the sharp increase in traffic flow and the widening gap between supply and demand. The establishment of a sustainable transportation system that is “safe, convenient, efficient, green, economical, inclusive, and resilient” is an important measure to support high-quality economic and social development and realize the aspiration of “people enjoying their travel and things flowing smoothly”, according to Projects for Developing China’s Transportation Strength. All provinces and municipalities across the country have successively promoted the construction of deeply integrated intelligent transportation; studied and established a multi-source data fusion technology system; accelerated the development of new transportation infrastructure; and vigorously promoted the construction of a multi-dimensional, intelligent, and accurate full-time domain perception system. Full-time domain perception in the transportation field encompasses full-time domain macro-traffic flow perception and micro-traffic vehicle detection. Accurate perception of micro-traffic objects is the prerequisite for observing macro-traffic flow. Domestic and foreign research institutions and scholars have conducted extensive research on traffic object perception. Most of the classical roadside vehicle perception methods use point-like roadside detectors such as visual cameras [1], lidar [2], and geomagnetic coils [3]. Among them, visual cameras are the most widely used vehicle detection sensors, but their accuracy is often compromised by complex weather and environmental factors, such as light and haze, yielding inconsistent results. 
Lidar is constrained by its high hardware costs and variations in algorithm performance, making it difficult to apply in complex heterogeneous traffic scenarios that require low latency and comprehensive perception [4]. Geomagnetic sensors cannot distinguish between closely following vehicles in the flow of traffic, resulting in a significant reduction in detection accuracy. Traditional vehicle perception methods primarily rely on fixed roadside sensors, which suffer from limited sensing range, high failure rates, and substantial deployment costs. These limitations hinder their ability to provide reliable, round-the-clock perception, especially under varying weather, lighting, and environmental conditions over 24 h. Therefore, establishing a full-time domain vehicle perception system has become a crucial technical challenge in the development of a sustainable transportation system.

Distributed acoustic sensing (DAS) over optical fiber has been widely applied in numerous fields, including the monitoring of energy and geological resources, building and transportation infrastructure, public safety, and natural disasters. Acoustic and vibrational events occurring along the deployed fiber alter its transmission properties, thereby changing the interference pattern of the Rayleigh backscattered light. By demodulating these changes, DAS reconstructs the dynamic acoustic and vibrational field along the fiber and thus perceives the state of the surrounding acoustic and vibrational events. DAS has therefore become an emerging research direction in full-time domain vehicle perception. After comparing the advantages and disadvantages of classical vehicle perception methods, this paper reviews the research progress and development directions of DAS technology in this field. It introduces the working principles and technical characteristics of DAS and analyzes the correlations among DAS signal sensing characteristics, deployment processes, and driving characteristics. It then elaborates on distributed optical fiber signal processing techniques, covering progress in both traditional signal processing and deep learning methods. Finally, it summarizes domestic and international application cases of DAS-based full-time domain vehicle perception and outlines the most prominent future research directions for DAS technology.

2. Classical Vehicle Perception Methods

A comprehensive and accurate vehicle perception system achieves precise and diverse traffic situation awareness and understanding by deeply analyzing the multidimensional feature data output from various sensors, thereby providing traffic management authorities with a highly effective, multi-faceted data foundation. Based on differing sensor detection principles, classical vehicle perception methods can be categorized into visual camera detection technology, lidar detection technology, and fusion-based perception detection technology.

2.1. Visual Camera Detection Technology

Visual cameras are the most commonly used sensors in various studies within the field of traffic perception, as they can capture rich perceptual information from the traffic environment (e.g., texture, color, and grayscale) and possess strong detail representation capabilities. Visual cameras offer advantages such as multi-lane traffic condition awareness and adaptable perception angles, making them a primary choice for vehicle detection by numerous research institutions and manufacturers, including Hikvision [5], Mobileye [6], and Tesla [7]. However, their detection performance is highly sensitive to variable weather conditions (e.g., fog, snow, rain, and haze). Moving vehicle detection serves as the foundation for analyzing traffic situation information, utilizing the identification of motion regions within traffic images to achieve vehicle perception. Common motion detection methods include frame differencing [8], optical flow [9], and background subtraction [10]. However, these methods for detecting moving vehicles often exhibit low robustness and real-time performance under complex environmental conditions. To enhance automatic feature extraction and adaptability in diverse scenarios, intelligent detection techniques, particularly those based on machine learning and deep learning, have gradually become a key research focus in the field of visual camera detection. Machine learning methods employ feature descriptors to extract vehicle characteristics and train classifiers for vehicle detection [11]. By generating candidate regions through multi-stage processing, these methods can improve detection accuracy to a certain extent, though they often incur high computational costs and exhibit limited robustness. Deep learning offers significant advantages over machine learning in feature extraction, data representation, and detection accuracy, with representative algorithms including the R-CNN and YOLO series. 
The Faster R-CNN detection algorithm performs well in small object detection and precise localization; however, it typically requires a two-stage process, involving candidate region generation and region regression, which results in a complex network structure and limited real-time performance. The YOLO series, in contrast, frames object detection as a regression task, representing a typical single-stage detection algorithm with improved real-time capabilities. Although YOLO is less effective than R-CNN for small object detection and precise bounding box localization, it efficiently handles multi-object detection and classification in densely populated scenes.
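Of the classical motion detection methods named above, frame differencing is the simplest to illustrate. The following is a minimal sketch, not an implementation from any cited work: it assumes two grayscale frames given as equally sized nested lists of pixel intensities, and the function name `frame_difference_mask` and the threshold value of 25 are hypothetical choices made for illustration.

```python
def frame_difference_mask(prev_frame, curr_frame, threshold=25):
    """Return a binary motion mask: 1 where |curr - prev| exceeds threshold.

    Frames are nested lists of grayscale intensities (an assumed format).
    The threshold is an illustrative value, not one taken from the literature.
    """
    same_rows = len(prev_frame) == len(curr_frame)
    if not same_rows or any(len(p) != len(c) for p, c in zip(prev_frame, curr_frame)):
        raise ValueError("frames must have the same shape")
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev_frame, curr_frame)
    ]


# Toy example: a bright object appears in the middle row of the second frame.
prev_frame = [[10, 10, 10, 10], [10, 10, 10, 10], [10, 10, 10, 10]]
curr_frame = [[10, 10, 10, 10], [10, 200, 210, 10], [10, 10, 12, 10]]
mask = frame_difference_mask(prev_frame, curr_frame)
```

The small intensity change from 10 to 12 stays below the threshold and is not flagged. This also hints at why such methods lose robustness when illumination changes: a global brightness shift can push every pixel over a fixed threshold.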

With the continued advancement of deep learning, more sophisticated algorithms have emerged. Among them, Transformer-based object detection algorithms [12,13], which leverage attention mechanisms, and BEV-based detection algorithms [14] have attracted significant attention due to their outstanding performance. The Transformer algorithm utilizes a self-attention mechanism to capture global information from the image without significantly increasing computational complexity. The Transformer algorithm demonstrates greater potential for performance improvement and better generalization performance in pre-training and large-scale data training scenarios [15,16]. The BEV (bird’s eye view)-based target-sensing algorithm obtains data about the vehicle and its surroundings through multiple sensors mounted on the vehicle or aerial photographs taken by drones and then algorithmically integrates these data into a bird’s eye view to obtain information about the target object and its surroundings [17,18]. Table 1 summarizes some classical vehicle object detection algorithms.

Table 1. Vehicle target detection algorithms based on vision.

2.2. Lidar Detection Technology

Lidar technology generates a three-dimensional point cloud of vehicles from reflected laser beams, enabling the extraction of vehicle features for accurate perception. Lidar systems can be categorized by detection principle into time-of-flight ranging, triangulation, and phase measurement, of which time-of-flight ranging is the most commonly used. The emitter fires a short laser pulse toward the target object, and a timing system measures the total flight time of the pulse from the emitter to the target and back to the receiver. Because the pulse covers the emitter-to-target distance twice, that distance equals the speed of light multiplied by half the measured flight time. Owing to its high ranging accuracy, strong anti-interference capability, and insensitivity to lighting conditions, lidar is widely employed in vehicle perception. However, its high hardware cost and substantial real-time data volume make low-latency perception and widespread deployment difficult.
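The time-of-flight ranging step can be sketched in a few lines. This is an illustrative calculation only: it assumes propagation at the vacuum speed of light and ignores atmospheric and timing corrections, and the function name `tof_distance` is hypothetical.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance(round_trip_time_s):
    """Distance to the target from the measured round-trip flight time.

    The pulse travels to the target and back, so the one-way
    distance is half of the total path length c * t.
    """
    if round_trip_time_s < 0:
        raise ValueError("flight time must be non-negative")
    return C * round_trip_time_s / 2.0


# A round-trip time of 1 microsecond corresponds to roughly 150 m.
distance_m = tof_distance(1e-6)
```

The same relation shows why timing precision matters: an error of 1 ns in the measured flight time shifts the estimated distance by about 15 cm.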

Scholars both domestically and internationally have proposed four families of solutions for lidar-based vehicle detection: grid mapping, model matching, feature classification, and deep learning. Zhou et al. [30] proposed a vehicle detection algorithm based on grid mapping within a Bayesian framework. The grid map is updated using the original Bayesian inference algorithm and Dempster’s combination rule, and the experimental results indicate that this filters out measurement noise from static obstacles and reduces false moving targets in blank areas. Deng et al. [31] proposed a vehicle detection algorithm based on multi-feature fusion, which uses raw lidar data to extract road surface points. Gridding and spatial clustering then identify several candidate targets, which are classified using support vector machines to achieve vehicle target detection. Given the significant impact of deep learning on image object detection, numerous researchers have adapted it to lidar vehicle detection with promising results. These methods can be categorized into one-stage and two-stage networks and include the PointNet series [32,33], VoxelNet [34], and PointPillars [35].

Box-based detectors have difficulties enumerating all orientations or fitting an axis-aligned bounding box to rotated objects. CenterPoint [36] instead proposes to represent, detect, and track 3D objects as points. The resulting detection and tracking algorithm is simple, efficient, and effective. Based on PointRCNN [37], Part-A^2 Net [38] consists of a part-aware stage and a part-aggregation stage. First, the part-aware stage, for the first time, makes full use of readily available part-level supervision derived from 3D ground-truth boxes to simultaneously generate high-quality 3D proposals and predict accurate intra-object part locations. Then, the part-aggregation stage learns to re-score the box and refine the box location by exploring the spatial relationship of the pooled intra-object part locations. To solve the problem of lidar point clouds only covering part of the underlying shape due to occlusion and signal misses, BtcDet [39] learns the object shape beforehand and estimates the complete object shapes that are partially occluded in point clouds. GLENet [40] formulates the 3D label uncertainty problem as the diversity of potentially plausible bounding boxes for objects, aiming to capture the one-to-many relationship between a typical 3D object and its potentially plausible ground-truth bounding boxes. It achieves the top rank among single-modal methods on the challenging KITTI test set.

Compared to vision-based methods, lidar point-cloud-based object detection approaches offer advantages such as high accuracy, wide range, and strong resistance to interference. However, the sparse and unordered nature of point clouds, along with the monochromatic characteristics of lidar, limits their ability to perceive the color and texture features of the surrounding environment and objects. Combining lidar with various sensors for perception can help mitigate the limitations of single-sensor approaches. Some vehicle detection methods based on lidar are summarized in Table 2.

Table 2. Vehicle detection methods based on lidar.

2.3. Detection Technology Based on Fusion Perception

Fusion-based perception refers to the comprehensive analysis and evaluation of raw data from multiple sources and structures to achieve standardized processing of data content and structure. This process involves extracting key and reliable information from heterogeneous multi-source data, enabling effective data processing and utilization. With the ongoing development of multi-source heterogeneous perception theory, multimodal fusion perception technology has gradually become an integral part of the vehicle perception domain. Numerous experts and scholars have introduced multimodal data fusion methods into vehicle object detection, resulting in significant improvements.

Wang et al. [51] present Frustum ConvNet (F-ConvNet), a dataset-agnostic method for amodal 3D object detection in an end-to-end and continuous fashion. The method employs a novel grouping mechanism, sliding frustums, to aggregate local point-wise features as inputs to a subsequent FCN. It performs well on datasets such as KITTI and can be applied to various applications, including autonomous driving and robotic object manipulation. To address 3D object detection in highly sparse LiDAR scenes, Cai et al. [52] propose a simple yet effective cascade architecture, termed 3D Cascade RCNN, which progressively improves detection performance by deploying multiple detectors on voxelized point clouds in a cascade paradigm. Its completeness-aware re-weighting design makes the cascade paradigm better suited to sparse input data. To comprehensively exploit image information and perform accurate and diverse feature interaction fusion, Xie et al. [53] propose a multimodal framework, Point-Pixel Fusion for Multimodal 3D Object Detection (PPF-Det). PPF-Det consists of three submodules: Multi-Pixel Perception, Shared Combined Point Feature Encoder, and Point-Voxel-Wise Triple Attention Fusion. The framework addresses these issues and shows excellent performance. Ye et al. [54] propose a camera–lidar fusion architecture called 3D Dual-Fusion, which is designed to mitigate the gap between the feature representations of camera and lidar data. This architecture has achieved competitive performance on the KITTI and nuScenes datasets.

To address the issues of low robustness, high rates of missed and false detections, and reduced detection accuracy associated with single-sensor systems in complex and variable environments, Qiu et al. [55] constructed a multimodal dataset comprising visible light, polarized visible light, short-wave infrared, and long-wave infrared data. They proposed a multi-scale local intensity invariant feature descriptor registration algorithm and established a multimodal YOLOv5 recognition network, which enhanced vehicle detection accuracy; however, the algorithm demonstrated excessive processing time and lacked real-time capability. Zhao et al. [56] introduced a 3D vehicle detection multi-level fusion network based on point clouds and images, performing a data-level fusion of image and point cloud features. They also proposed a novel coarse–fine detection head, which significantly improved performance in terms of occlusion and false detections of similar objects. Li et al. [57] developed a self-training multimodal vehicle detection network, which effectively handles missing sensor data during the inference process, thereby improving detection accuracy and robustness. While the aforementioned algorithms exhibit high detection accuracy in specific scenarios, their adaptability and generalization capabilities remain limited. To enhance the generalization capability of multimodal fusion modules, Zhang et al. [58] designed a multimodal adaptive feature fusion network, proposing two end-to-end trainable single-stage multimodal feature fusion methods: Point Attention Fusion and Dense Attention Fusion. These methods adaptively combine RGB and point cloud modalities, demonstrating significant improvements in filtering false positives compared to methods that rely solely on point cloud data. Roy et al. [59] employed a series of different fusion frameworks to integrate features from images, millimeter-wave radar, acoustics, and vibrations, significantly enhancing vehicle detection and tracking performance compared to unimodal networks. Wu et al. [60] introduced an adaptive multimodal feature fusion and cross-modal vehicle indexing model to improve vehicle detection accuracy under varying lighting conditions. This model detects vehicles by fusing image and thermal infrared features, with experimental results indicating that it outperforms other state-of-the-art multimodal object detection algorithms. Wang et al. [61] addressed the low robustness issues arising from modality loss in multi-sensor object detection systems by proposing an end-to-end multimodal 3D object detection framework, UniBEV, which enhances robustness against missing modalities and demonstrates superior performance compared to baseline models. Cao et al. [62] combined frame-based visual features with event-based features, proposing a fully convolutional neural network with a feature attention gate component for vehicle detection, achieving higher detection accuracy than methods that utilize only unimodal signals as inputs.

Multimodal fusion vehicle object detection algorithms effectively address the issues of low robustness and susceptibility to failure inherent in unimodal vehicle detection systems under complex environments. By integrating multiple features, these algorithms enhance the accuracy of vehicle target detection. However, they also face challenges related to excessive computational demands and limited real-time performance. Some vehicle detection methods based on fusion perception are summarized in Table 3.

Table 3. Vehicle detection methods based on multimodal fusion.

2.4. Other Perception Technologies

In addition to the aforementioned perception technologies, various sensing techniques are also applied in the field of vehicle perception. For instance, vibration sensing technology assesses the presence of vehicles based on vibrations caused by their passage, exhibiting minimal sensitivity to weather conditions [66]. Piezoelectric sensing technology detects impulse signals generated by vehicles impacting piezoelectric elements for vehicle perception; however, piezoelectric materials are prone to aging and require regular maintenance [67]. Infrared imaging technology employs infrared sensing principles to enable intelligent vehicle perception under low-visibility conditions by receiving infrared light reflected from vehicles via internal photosensitive chips [68]. Ultrasonic sensing technology facilitates vehicle perception by detecting reflected ultrasonic waves and is widely utilized in intelligent transportation systems for vehicle identification and speed detection [69]. However, all the aforementioned sensing methods employ a point-based deployment strategy, which limits the sensing range of single-point detection devices and results in high costs due to the dense deployment required for comprehensive coverage. Fixed roadside detectors are constrained by installation methods, and prolonged operation can lead to changes in external parameters and significant data errors, making system maintenance difficult and costly.

3. Vehicle Perception Method Based on DAS Technology

Distributed optical fiber sensing (DOFS) technology uses communication optical fibers as the sensing medium. By detecting changes in the parameters of the optical signal (e.g., intensity and phase) induced by external fields (temperature, stress, and acoustic vibration fields), it achieves continuous distributed sensing along the deployed fiber [70]. DOFS technology is commonly classified into two categories: backscatter-based and interferometric [71]. Backscatter-based systems exploit Rayleigh, Brillouin, or Raman scattering [72]. This paper focuses solely on DAS technology based on Rayleigh backscattered light and does not address the other types.

3.1. The Sensing Principle of DAS Technology

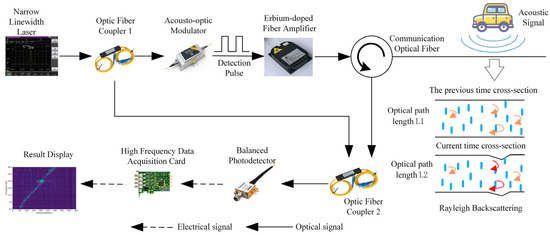

A DAS system is composed of a narrow-linewidth laser, an acousto-optic modulator, an erbium-doped fiber amplifier, a circulator, a high-frequency data acquisition card, and a communication optical fiber, as well as other components. Optical fiber coupler #1 splits the continuous optical signal emitted by the narrow-linewidth laser into two optical paths, an upper path and a lower path. The acousto-optic modulator modulates the upper-path optical signal into an optical pulse signal, which is then amplified by the erbium-doped fiber amplifier. The amplified optical pulse signal is transmitted into the optical fiber via the circulator. The optical fiber laid alongside the road is affected by vehicle vibration signals, causing minute deformations in the optical fiber that alter the optical path length of light propagation. This results in phase variations of the Rayleigh backscattered light from adjacent pulses at the same position, as described in Equation (1). The changed Rayleigh backscattered light passes through the circulator and enters optical fiber coupler #2. The lower-path light serves as a reference light and directly enters optical fiber coupler #2. The phase difference between the two optical paths causes a change in light intensity through the coherence effect. This change is then converted into an electrical signal by a balanced photodetector. The resulting signal is processed by the data processing module to produce the vehicle perception results. The sensing principle of DAS is illustrated in Figure 1.

$$\Delta\varphi = \frac{4\pi n \Delta L}{\lambda} \qquad (1)$$

where λ is the wavelength of the incident light, ∆L is the change in the optical path caused by the sound field, and n is the refractive index of the fiber core.
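To give a sense of scale, the phase change can be evaluated numerically. The sketch below assumes the standard round-trip relation ∆φ = 4πn∆L/λ in the symbols defined above. The default wavelength (1550 nm) and core refractive index (1.468) are typical telecom-fiber values chosen for illustration, not parameters reported for any system discussed here, and the function name `phase_change_rad` is hypothetical.

```python
import math


def phase_change_rad(delta_l_m, wavelength_m=1550e-9, n=1.468):
    """Phase change of Rayleigh backscattered light for a path change delta_l_m.

    Assumes the round-trip relation 4 * pi * n * dL / lambda.
    The default wavelength and refractive index are illustrative assumptions.
    """
    if wavelength_m <= 0:
        raise ValueError("wavelength must be positive")
    return 4.0 * math.pi * n * delta_l_m / wavelength_m


# A 10 nm path change yields a phase change of roughly 0.12 rad.
dphi = phase_change_rad(10e-9)
```

Under these assumptions, nanometer-scale deformations already produce phase changes of the order of a tenth of a radian, which is consistent with the text's point that minute vehicle-induced deformations are detectable.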

Figure 1. The sensing principle of DAS.

3.2. Analysis of DAS Signal Sensing Characteristics

Compared with traditional point-like sensors, DAS offers superior scalability and high-spatial-resolution measurement. DAS discretizes tens of kilometers of roadside optical fiber into tens of thousands of independent sensing points, enabling the state of vehicles in the vicinity of the fiber to be monitored. Exploring how DAS signal sensing characteristics relate to driving characteristics and to deployment processes is therefore of great significance for promoting DAS in full-time domain vehicle perception.
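The discretization of a fiber into sensing points can be illustrated with simple arithmetic. In this sketch the fiber length (40 km) and channel spacing (2 m) are assumed values picked only to match the orders of magnitude stated above, and the helper names `channel_count` and `channel_position_m` are hypothetical.

```python
def channel_count(fiber_length_m, channel_spacing_m):
    """Number of complete sensing channels along a fiber of the given length."""
    if channel_spacing_m <= 0:
        raise ValueError("channel spacing must be positive")
    return int(fiber_length_m // channel_spacing_m)


def channel_position_m(index, channel_spacing_m):
    """Distance along the fiber of the channel with the given zero-based index."""
    return index * channel_spacing_m


# Assumed example: 40 km of fiber sampled every 2 m.
n_channels = channel_count(40_000, 2.0)
last_position = channel_position_m(n_channels - 1, 2.0)
```

With these assumed values the fiber behaves like 20,000 sensors on a single cable, which is the scalability advantage over point-like roadside detectors that the paragraph describes.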

3.2.1. Correlation Between DAS Signal Sensing Characteristics and Driving Characteristics

DAS achieves distributed perception of road traffic conditions by acquiring the traffic noise generated by passing vehicles through sensing fibers deployed in the monitoring area. Traffic noise is generated by small and rapid pressure changes within individual vehicles, such as in the engine/transmission system and the exhaust system, and between individual vehicles and the environment, such as wind noise and tire/road interaction [73]. Driving characteristics (such as vehicle speed and mass) are decisive factors in the quality of traffic noise information. It is therefore of scientific and practical value to analyze the relationship between DAS signal sensing characteristics and driving characteristics in depth, and to reveal the underlying physical mechanisms and mathematical models, in order to improve the accuracy, reliability, and application efficiency of DAS in traffic monitoring.

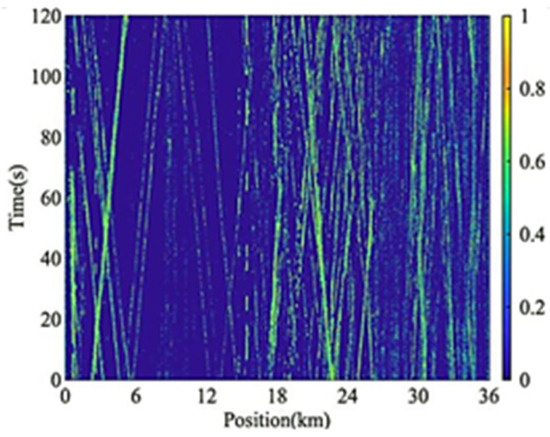

Driving speed is an important determinant of traffic noise information, because speed not only directly shapes the spectral characteristics of traffic noise but is also one of the core dimensions used to evaluate DAS signal quality. Although existing studies have examined the relationship between driving speed and DAS signal sensing characteristics, the underlying quantitative correlation remains insufficiently investigated. Wu et al. [74] pointed out that when a vehicle travels at high speed (up to 120 km/h), wind noise dominates traffic noise, but they did not quantitatively analyze the relationship between wind noise and vehicle speed. Xu et al. [75] collected two-dimensional DAS spatiotemporal signals from a section of an expressway in Guangdong Province, China, using a two-minute sample from 15:00 to 15:02. The analysis found that passing vehicles were mainly concentrated in three sections: 1–5 km, 18–27 km, and 29–35 km. The average vehicle speed was about 80 km/h in the 1–5 km and 18–27 km sections and about 56 km/h in the 29–35 km section. As shown in the waterfall plot in Figure 2, the vehicle trajectories in the 1–5 km and 18–27 km sections are clearer than those in the 29–35 km section, suggesting that, within a certain speed range, higher driving speed yields better DAS signal quality.

Figure 2. Two-dimensional spatio-temporal signal of a highway section in Guangdong [75].

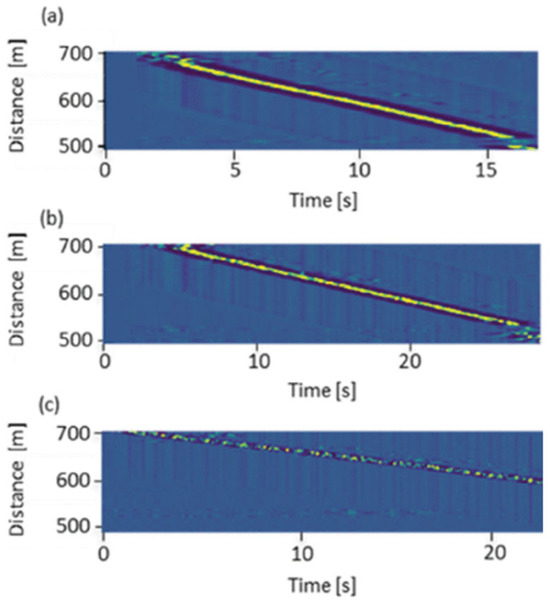

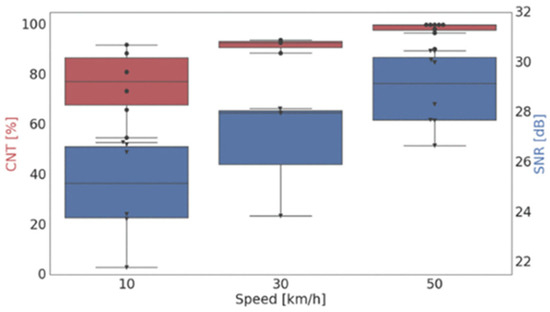

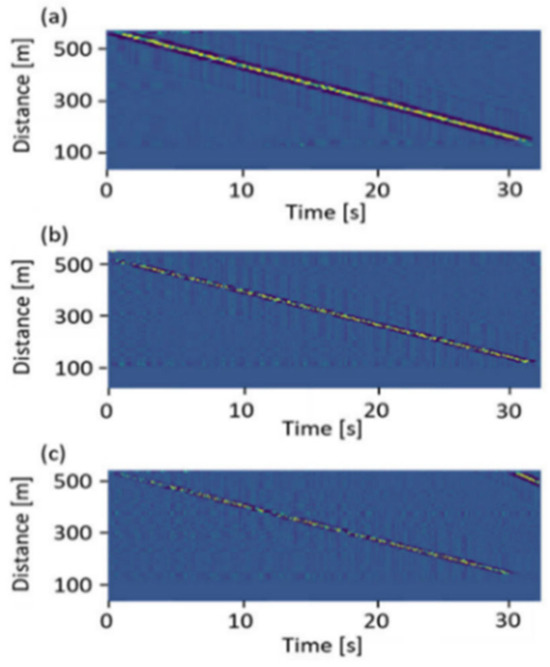

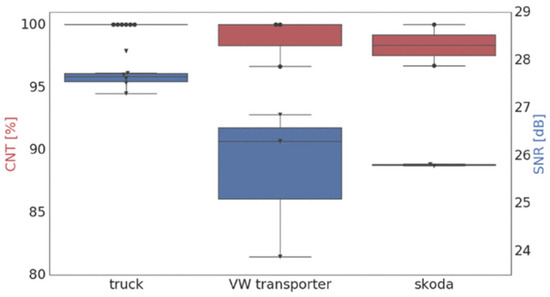

The aforementioned studies indicate a correlation between driving speed and the clarity of vehicle trajectories. However, a mathematical model that rigorously defines the logical relationship between these two variables is still lacking. Thomas et al. [76] experimentally investigated the performance of DAS signal quality at varying truck speeds. They introduced two DAS data quality indicators, namely, the signal-to-noise ratio (SNR) and continuity (CNT), to characterize the relationship between driving speed and DAS data quality. The definitions of the SNR and CNT are shown in Equations (2) and (3). The experimental findings reveal that when the truck speed was decreased from 50 km/h to 10 km/h, the SNR of DAS data decreased by an average of 4.0 ± 1.1 dB, and the CNT decreased by 23 ± 5.7%. The experimental results are shown in Figure 3. A box plot of the SNR and CNT is shown in Figure 4.

where s is the signal (the z-score associated with the track) and n is the background noise. E(·) denotes the expectation value, which is approximated by the average over a track for the signal and by the average over a track-free area for the noise.

$$\mathrm{CNT} = \frac{1}{L}\sum_{i} l_i \qquad (3)$$

where l_i is the length of the detected track segment i, and L is the expected total track length.
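The two quality indicators can be sketched in code using the symbols defined above. This is an illustrative sketch under stated assumptions rather than the exact definitions used in [76]: the SNR is assumed here to be the decibel ratio of mean-square signal to mean-square noise, and the CNT is taken as the summed length of detected track segments divided by the expected track length. The function names `snr_db` and `continuity` are hypothetical.

```python
import math


def snr_db(signal, noise):
    """SNR in dB, assumed here to be 10*log10(E(s^2) / E(n^2)).

    The expectations are approximated by sample means over the track
    (signal) and over a track-free area (noise).
    """
    if not signal or not noise:
        raise ValueError("signal and noise samples must be non-empty")
    signal_power = sum(x * x for x in signal) / len(signal)
    noise_power = sum(x * x for x in noise) / len(noise)
    return 10.0 * math.log10(signal_power / noise_power)


def continuity(segment_lengths, expected_length):
    """Fraction of the expected track length covered by detected segments."""
    if expected_length <= 0:
        raise ValueError("expected track length must be positive")
    return sum(segment_lengths) / expected_length


# Toy values: signal samples twice the noise amplitude, 90 m of a 100 m track detected.
example_snr = snr_db([2.0, 2.0], [1.0, 1.0])
example_cnt = continuity([40.0, 50.0], 100.0)
```

Multiplying the continuity by 100 gives the percentage form in which CNT values are reported in this section.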

Figure 3. Space–time visualization of DAS data. The approximate average speed of the truck was (a) 50 km/h, (b) 30 km/h, and (c) 10 km/h [76].

Figure 4. DAS SNR (blue) and CNT (red) corresponding to multiple truck passes [76].

These studies show that as vehicle speed increases, the noise generated by tire–road interaction grows, so vehicle speed has a significant impact on the quality of the vehicle’s spatiotemporal DAS signal. Under otherwise identical conditions, DAS signal quality is positively correlated with driving speed, a finding that lays a foundation for subsequent quantitative analysis. However, evaluating signal quality solely in terms of the SNR and CNT is too simplistic: evaluation standards for the actual performance of DAS signals are lacking, as is quantitative work relating driving speed to DAS signals.

In addition to vehicle speed, vehicle mass is an important factor determining traffic noise information. Most studies of traffic noise information use handcrafted feature extraction to classify DAS events, including wavelet packet transforms [77], Mel-spectrograms [78], and empirical mode decomposition [79]. Fewer studies address the correlation between vehicle mass and DAS signal characteristics. In general, different types of vehicles have different weights and therefore generate different traffic noise signals while driving, so vehicle types can in principle be classified by comparing the characteristic parameters of their signals. By analyzing the DAS signal sensing characteristics of vehicles of different sizes, Thomas et al. [76] found that when a truck, a van (a VW Transporter), and an estate car (a Skoda) passed through the deployment area at 50 km/h, the truck was the most clearly distinguishable, as shown in the waterfall plot in Figure 5. A box plot of the SNR and CNT is shown in Figure 6. The DAS SNR of the truck was 1.4 ± 0.6 dB higher than that of the van, and the SNR of the van was 0.5 ± 0.6 dB higher than that of the car. The data indicate that trucks exhibit the highest SNR and CNT, followed by vans and cars, partly due to their greater weight and increased contact with the road surface. The CNT values for the truck, van, and estate car at 50 km/h were 100%, [98.3, 100]%, and [97.5, 99.2]%, respectively, where the square brackets denote the interquartile range. These closely lying values indicate that the real-world performance of DAS is potentially good across a wide range of vehicle types travelling at 50 km/h.

Figure 5.

Spatio–temporal visualization data of DAS for vehicles of different masses traveling at the same speed: (a) trucks, (b) vans, (c) cars [76].

Figure 6.

SNR (blue) and CNT (red) corresponding to different vehicle types. All vehicles were driving at 50 km/h [76].
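The SNR comparison and the interquartile ranges quoted above can be illustrated with a minimal sketch. The exact SNR definition used in [76] is not given in this review, so the power-ratio definition below, the choice of a "vehicle" window and a "quiet" window, and the function names `snr_db` and `interquartile_range` are all assumptions made for illustration.

```python
import numpy as np

def snr_db(signal_window, noise_window):
    """SNR in dB as the ratio of mean power in a vehicle window to that in a quiet window.

    Assumption: a plain power-ratio definition; the cited study may define SNR differently.
    """
    p_signal = np.mean(np.square(signal_window))
    p_noise = np.mean(np.square(noise_window))
    return float(10.0 * np.log10(p_signal / p_noise))

def interquartile_range(values):
    """Return the (25th, 75th) percentiles, i.e. the bracketed range used in the text."""
    q1, q3 = np.percentile(values, [25, 75])
    return float(q1), float(q3)
```

Under this definition, a difference of 1.4 dB between two vehicles corresponds to a power ratio of about 1.38, which helps explain why the reported classes overlap once the ± 0.6 dB uncertainty is taken into account.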

In summary, work on driving characteristics and DAS signal quality shows that DAS signal sensing characteristics are positively correlated with driving characteristics (vehicle mass and speed), which is of great significance for building a correlation model. However, because quantitative analyses are lacking, the internal mechanism linking the two remains unclear. In the future, the evolution mechanism of DAS signals could be explored by combining vehicle kinematics theory with road geometric alignment analysis.

3.2.2. Correlation Between DAS Signal Sensing Characteristics and Deployment Process

In addition to being closely related to driving characteristics, the quality of traffic noise information is also closely related to the optical fiber’s method of deployment (such as the deployment form and burial technology). As the sensing unit of DAS, the optical fiber greatly affects the DAS signal sensing characteristics. Traffic noise signals interact with the multi-phase transitional road surface to form a complex acoustic–solid coupling field. Due to the non-uniform longitudinal response characteristics of the DAS channel and the distortion caused by mechanical wave propagation, the DAS signal changes significantly along the sensing optical fiber [80], making it impossible to effectively identify the vehicle status information. Therefore, exploring the deployment methods for optical fibers has extremely important academic value and application prospects for understanding the propagation characteristics of traffic noise signals in complex road structures.

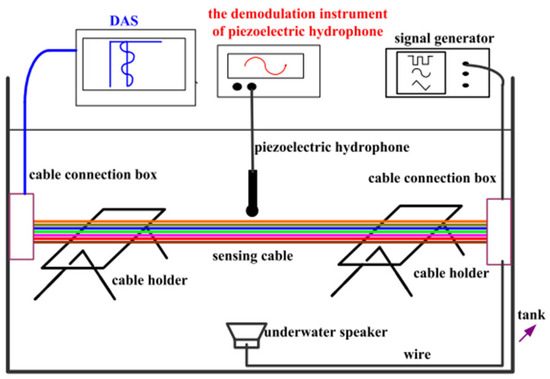

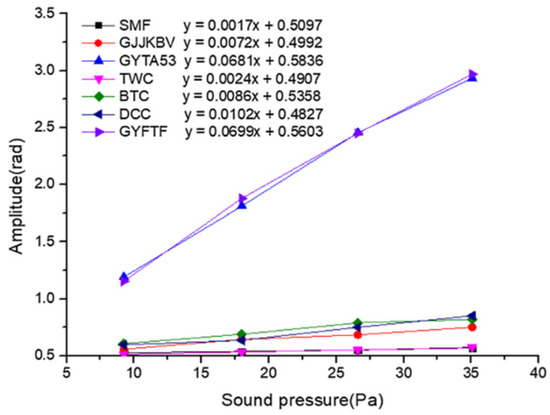

The internal structure and manufacturing process of optical fibers can also restrict the propagation of traffic noise information. Therefore, choosing a suitable sensing fiber is crucial to the performance of the DAS system. Shang et al. [81] designed an optical fiber sensitivity experimental device based on the DAS system, as shown in Figure 7, which can simultaneously measure the sensitivity of seven common optical fibers under the same conditions. The results of using different optical fiber types under different sound pressures are shown in Figure 8. Seven kinds of optical fibers were used for four sound pressure experiments of 9.24 Pa, 17.99 Pa, 26.59 Pa, and 35.07 Pa, respectively. The GYFTY non-metallic optical fibers had the highest sensitivity, with the highest value of 69.9 mrad/Pa.

Figure 7.

DAS detection system setup [81].

Figure 8.

Phase demodulation results of seven cables at different acoustic pressures [81].

Common optical fiber deployment methods include overhead, underground, and in-pipe installation [82]. The burial method used for in-ground installation affects the coupling between the optical fiber and the surrounding media, which, in turn, greatly affects signal quality [83]. To determine the impact of optical fiber coupling conditions on data quality, Papp et al. [84] designed an experiment to compare DAS signal quality under three coupling conditions: putty powder bonding, sandbag pressure, and steel nail fixation. The results were compared with the data recorded by the detector, and the recorded waveforms were found to differ greatly among the three methods. Putty powder bonding gave the best coupling, as shown in Figure 9.

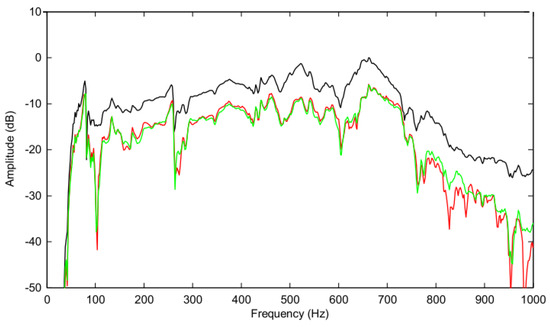

Figure 9.

Analysis results of different coupling methods: putty powder (black line), sandbag (red line), and steel nail (green line) [84].

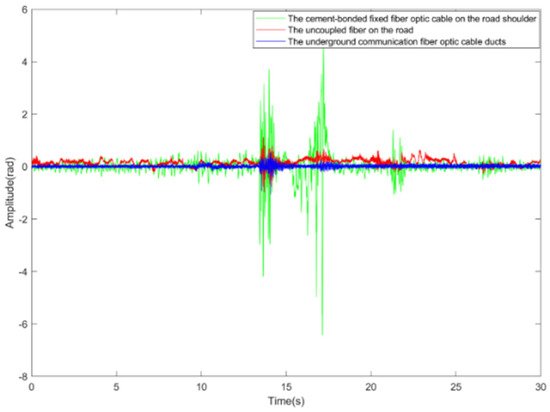

Li et al. [85] designed an experiment to compare two optical fiber burial technologies in a well: in-pipe suspension and cement fixation outside the casing. The experimental results showed that cement fixation outside the casing yielded a better coupling effect. An et al. [86] used three different buried-deployment methods to monitor vehicles on campus roads at Beijing Jiaotong University. The experimental results showed that the traffic noise information loss rate was lowest when the optical fiber was fixed through cement coupling on the shoulder. This conclusion is consistent with the installation guide of the Fiber Optic Sensing Association [87]. In that study, the root mean square of the signal-to-noise ratio and the signal energy were proposed as evaluation indices of DAS data quality, calculated as shown in Equations (4) and (5). The energy levels and SNRs of the various fiber deployments are presented in Table 4. Among them, the cement-bonded fiber optic cable on the road shoulder shows the highest values: 71.0034 dB for the SNR and 943.37 for the energy level. These results indicate that the degree of coupling between the fiber and the road surface is positively correlated with DAS data quality. An amplitude comparison of the data from the different deployment modes is shown in Figure 10.

where i indexes the fiber channels; j indexes the groups of signal data; SNRi,j denotes the signal-to-noise ratio of channel i of the fiber in the signal data of Group j; SNR1 denotes the root mean square of the signal-to-noise ratio of the signal data of Group j; and RMS denotes the root mean square of the signal-to-noise ratio of the fiber with different deployment methods.
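The two-stage root-mean-square index described by these definitions can be sketched as follows. Equations (4) and (5) are not reproduced in this text, so the code is only one plausible reading of the symbol definitions: an RMS over channels within each data group, followed by an RMS over groups. The function names, the array layout `snr[i, j]`, and the sum-of-squares definition of energy are assumptions, and whether the original work averages dB values or linear ratios is not stated.

```python
import numpy as np

def rms(values, axis=None):
    """Root mean square along the given axis."""
    return np.sqrt(np.mean(np.square(values), axis=axis))

def deployment_snr_index(snr):
    """snr[i, j] holds the SNR of channel i in data group j (hypothetical layout).

    Returns the per-group RMS over channels and the overall RMS over groups.
    """
    per_group = rms(snr, axis=0)      # one value per data group j
    overall = float(rms(per_group))   # single index for this deployment method
    return per_group, overall

def signal_energy(x):
    """Signal energy as the sum of squared samples (assumed definition)."""
    return float(np.sum(np.square(x)))
```

Computing `deployment_snr_index` once per deployment method would give one scalar per method, which is the kind of figure that a table such as Table 4 compares.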

Table 4.

Data quality of different deployments of fiber.

Figure 10.

Comparison of vibration data of three different deployment methods (single channel) [86].

The geometric form of the optical fiber deployment is also an important factor restricting the signal propagation characteristics of DAS. Zeng et al. [88] proposed a variable dimension analysis method for the temporal and spatial response characteristics and global frequency domain characteristics of cement concrete pavement vibration. Their experimental results show that, compared to the linearly deployed system, the optical fiber ring array provided higher spatial resolution. However, a mechanistic model of the association between deployment form and DAS signal sensing characteristics has not yet been explored. The above works analyzed the influence of deployment processes by comparing the signal-to-noise ratio and amplitude spectrum. The results show that the signal-to-noise ratio of traffic noise signals was higher under tight coupling conditions.

In summary, although extensive research has been conducted by domestic and international researchers on optimizing optical fiber deployment methods, the coupling mechanism of traffic noise propagation through the road surface remains insufficiently explored.

3.3. Signal Processing Algorithm for DAS

Distributed fiber optic acoustic sensing signals can be analyzed in multiple domains, including the time, frequency, and space–time domains. The road traffic status perception capability of the DAS system depends on the signal processing method. Currently, DAS signal processing algorithms are divided into two categories: those based on traditional methods and those based on deep learning.

3.3.1. Research on Signal Processing Algorithms Based on Traditional Methods

The DAS system signal can be represented in multiple domains and dimensions through the time domain, frequency domain, or space–time domain. The time domain represents the acoustic amplitude information of the perceived environment. The frequency domain represents the frequency distribution information of the perceived environment. The space–time domain represents the space–time location information of the perceived environment, and the traditional signal processing technology can process data in the corresponding domain. However, DAS data are easily mixed with environmental noise, and it is difficult to effectively identify the target state by simply relying on time domain signal processing technology. The data usually need to be combined with frequency domain signal processing technology for analysis. Therefore, traditional signal processing of DAS can be divided into two methods: time–frequency domain signal processing and space–time domain signal processing.

1. Signal processing method based on time–frequency domain information

Time domain signal processing applies amplitude thresholds to distinguish different types of events and, under certain conditions, can recognize, classify, and locate perceived events. However, time domain signals contain all observed signal components, and complex signals cannot be judged by thresholds alone. The DAS signal is a non-stationary, time-varying signal that carries abundant environmental noise and is subject to severe interference from both external sources and the system’s own noise. Time domain processing alone cannot effectively suppress this noise or enhance the signal-to-noise ratio, so the DAS system is unable to identify events accurately.
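The amplitude threshold method mentioned above can be illustrated for a single DAS channel with a minimal sketch. The function name `threshold_events` and the fixed scalar threshold are assumptions; practical systems typically adapt the threshold to the local noise floor.

```python
import numpy as np

def threshold_events(x, threshold):
    """Return (start, end) sample index pairs where |x| exceeds the threshold.

    The end index is exclusive. A fixed scalar threshold is assumed here.
    """
    active = np.abs(np.asarray(x)) > threshold
    # Pad with False on both sides so every run has a rising and a falling edge.
    padded = np.concatenate(([False], active, [False])).astype(int)
    edges = np.diff(padded)
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return list(zip(starts.tolist(), ends.tolist()))
```

When the noise amplitude approaches the threshold, this detector fragments one vehicle pass into many short events or fires on noise alone, which is the limitation the paragraph describes.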

Researchers usually combine frequency domain processing technologies for signal processing. To mitigate the challenges posed by the complex environment and extensive background noise of gas pipelines, Wang et al. [89] proposed an online monitoring technology for oil and gas pipeline leakage based on high-fidelity distributed optical fiber acoustic sensors to improve the signal-to-noise ratio of pipeline leakage signal detection and determine the time and location of pipeline leakage. The Haar wavelet denoising algorithm was used to improve the detection sensitivity of leakage events and realize the accurate monitoring and positioning of gas pipeline leakage. To provide real-time monitoring of the long-term jamming and breakage of rollers in belt conveyors, Liang et al. [90] proposed a roller monitoring method based on distributed optical fiber acoustic sensors. The short-time Fourier transform based on the Hamming window was used to perform time–frequency analysis on the collected signals, and the local features under different faults were extracted to realize fault identification. To address the low signal-to-noise ratio (SNR) caused by coherent noise in complex road environments and inherent white noise in DAS, Wang et al. [91] proposed a vehicle trajectory enhancement algorithm based on the S-transform. The proposed method first converts the vibration signal from each DAS channel into the S-domain, where the noise component is suppressed. Then, the vibration signal energy generated by vehicle motion is accumulated along the frequency dimension to improve the SNR of the DAS system and effectively enhance the vehicle trajectory signal. To address the limitations of the traditional dual-threshold method, which performs poorly in detecting vibration signals, Liu et al. [92] proposed an improved dual-threshold signal processing algorithm for vehicle detection. 
This enhanced approach significantly improves detection accuracy in high-noise environments and demonstrates strong robustness. Munoz et al. [80] proposed a fully blind DAS signal processing method based on near-field acoustic array processing, which takes into account the non-uniform response of the DAS channel and can be used for optical fibers laid out at any angle. In this method, the signal distortion is reduced, and the corresponding signal-to-noise ratio is improved by sparse beamforming spatial filtering without the location information of the signal source and the optical fiber.
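As an illustration of the time–frequency analysis used in several of the works above, the following sketch computes a short-time Fourier transform with a Hamming window for one DAS channel. It is not the implementation of any cited study; the function name, window length, and hop size are assumptions chosen for the example.

```python
import numpy as np

def stft_hamming(x, fs, win_len=256, hop=128):
    """Magnitude STFT of a 1D signal using a Hamming window.

    Returns (freqs, times, mag), where mag has shape (n_freqs, n_frames).
    The input must contain at least win_len samples.
    """
    x = np.asarray(x, dtype=float)
    window = np.hamming(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    # Slice overlapping frames and taper each with the window.
    frames = np.stack([x[k * hop : k * hop + win_len] * window for k in range(n_frames)])
    mag = np.abs(np.fft.rfft(frames, axis=1)).T
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, mag
```

The window length sets the trade-off between time and frequency resolution, and the cost grows with the number of channels and frames, which is consistent with the computational burden noted for time–frequency methods.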

In summary, time–frequency domain processing represents the signal jointly in time and frequency. This two-dimensional description characterizes the signal more fully, but its computational complexity is relatively high and it requires more computing resources and time, which makes it difficult to apply in real-time scenarios; it also transfers and generalizes poorly to new scenarios.

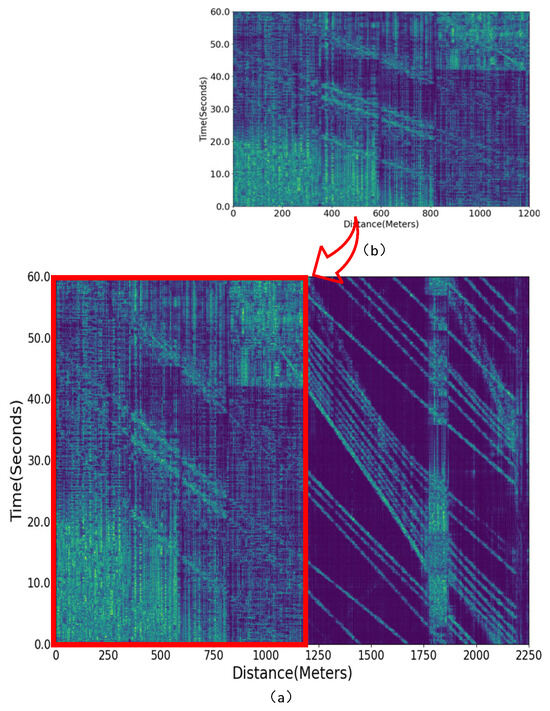

2. Signal processing algorithm based on space–time information

Space–time domain images (also called waterfall plots) output by DAS systems are not conventional visual images but space–time (ST) images in the kinematic sense. A waterfall plot can be used to obtain the change in a vehicle’s position over a continuous period and to deduce the vehicle’s motion state. However, random noise has a strong impact on the space–time domain image, and unprocessed noise seriously restricts vehicle recognition accuracy. Figure 11 shows a time domain image of DAS. Figure 11b shows the strongly noise-contaminated first 1200 m of Figure 11a. Judging from the actual deployment area, the interference in the first 1200 m was mainly caused by resonance of the bridge body as vehicles drove over the bridge. It can be observed from Figure 11b that, without effective noise suppression, the system fails to retain the state information of most vehicles.

Figure 11.

Time domain image of DAS system: (a) coverage length of 2250 m, (b) coverage length of the first 1200 m.
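Because a vehicle appears in a waterfall plot as a sloped trace, its speed follows from the slope of position against time. The sketch below assumes that the trace has already been extracted as a set of (time, position along the fiber) points, which is the hard part in noisy data; the function name and the least-squares line fit are illustrative choices rather than a method taken from the cited works.

```python
import numpy as np

def estimate_speed_kmh(times_s, positions_m):
    """Estimate speed from trajectory points picked on a waterfall plot.

    Fits position = slope * time + intercept by least squares and converts
    the slope from m/s to km/h. The sign indicates direction along the fiber.
    """
    slope_m_per_s, _intercept = np.polyfit(times_s, positions_m, deg=1)
    return float(slope_m_per_s * 3.6)
```

A constant-speed assumption underlies the straight-line fit; for accelerating vehicles the fit could be applied over short time windows instead.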

The strong coupling between the vehicle-induced vibrations and the road surface causes the space–time trajectory in the waterfall plot to blend with background noise, making it difficult to distinguish. To further extract the characteristics of the space–time trajectory, some researchers convert DAS data into a waterfall plot and use the resulting 2D space–time trajectory information to denoise the image and enhance the signal-to-noise ratio of the DAS system [93,94]. He et al. [95] proposed an adaptive image restoration algorithm based on 2D bilateral filtering to improve the signal-to-noise ratio for intrusion localization in a distributed optical fiber acoustic sensor system. By converting the spatial and temporal information of the waterfall trace into a 2D image, the noise was effectively smoothed while the useful signal was retained. An adaptive parameter setting method was proposed based on the relationship between the optimal grayscale standard deviation and the noise standard deviation. The experimental results show that this method could effectively maintain the spatial resolution after denoising and had high positioning accuracy. Wang et al. [96] proposed a 2D vibration event recognition method based on Prewitt edge detection. Edge detection is performed on a spatiotemporal image containing a vibration signal, the edges of the disturbance stripes and of the noise are detected, and the grayscale differences between adjacent pixels are smoothed to obtain the 2D spatiotemporal distribution of the vibration event. Sun et al. [97] conducted an in-depth analysis of multi-event data, such as driving, walking, and digging, and proposed a morphological feature extraction method to classify events. 
This method uses image processing technology to preprocess the signal, extracts the event area in the image as the research object, calculates the scattering matrix for feature selection, and reduces the false detection and missed detection rate of event detection and classification. Xu [98] proposed a signal processing method combining pattern recognition and image morphological features. This method employs pattern recognition to analyze the characteristics of single-point signals. It combines image morphological operations and the Hough transform to extract spatial distribution features and integrates multi-dimensional signal information to enhance the system’s recognition accuracy. Fontana et al. [99] proposed a novel method based on harmonic analysis to estimate vehicle trajectories from DAS data, addressing the challenge that high traffic volumes, multi-lane highway environments, and noise sources can degrade the vehicle detection capability of DAS sensors. The method is based on an iterative procedure that removes the contribution of the detected vehicle’s trajectories in the notched power, which is evaluated on a finite alphabet of parameters. The comparison of the algorithm performance with a detector based on the Hough transform shows greater accuracy in terms of localization, false targets, and missed targets with both synthetic and real datasets.
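The Prewitt edge detection step referred to above can be sketched on a waterfall image treated as a 2D array (time along one axis, fiber position along the other). This is a generic Prewitt operator written with plain array slicing, not the pipeline of [96]; the function names and the valid-mode border handling are assumptions.

```python
import numpy as np

# Prewitt kernels for horizontal and vertical intensity changes.
PREWITT_X = np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=float)
PREWITT_Y = PREWITT_X.T

def filter3x3(image, kernel):
    """Valid-mode 3x3 cross-correlation implemented with shifted slices."""
    h, w = image.shape
    out = np.zeros((h - 2, w - 2))
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * image[dy : dy + h - 2, dx : dx + w - 2]
    return out

def prewitt_magnitude(image):
    """Gradient magnitude of a 2D waterfall image; large values mark edges."""
    image = np.asarray(image, dtype=float)
    gx = filter3x3(image, PREWITT_X)
    gy = filter3x3(image, PREWITT_Y)
    return np.hypot(gx, gy)
```

Thresholding the returned magnitude gives a binary edge map in which vehicle traces appear as sloped lines, a form that line detectors such as the Hough transform can then operate on.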

In summary, these multi-domain and multi-dimensional feature extraction and analysis methods greatly improve the nonlinear mapping ability of signal features to event categories, but traditional signal processing methods cannot keep up with the changing patterns of massive data from widely distributed nodes, have poor environmental adaptability, have a long algorithm model update cycle, and are difficult to apply in complex traffic scenarios.

3.3.2. Signal Processing Algorithm Research Based on Deep Learning

Traditional DAS signal recognition methods have solved the target recognition problem to some extent, but they are hampered by issues such as a cumbersome process, low precision, and weak generalization ability. Deep learning algorithms are favored by many scholars in the field of signal processing for their high recognition accuracy, strong feature extraction ability, and end-to-end processing capabilities. Deep learning uses complex deep neural network structures to automatically extract key features from massive high-dimensional datasets, learn nonlinear mapping relationships between data, and improve the accuracy of classification and prediction tasks. At present, feature extraction and high-precision recognition of DAS signals using deep learning have become promising research directions [100,101,102,103]. Among them, supervised learning, semi-supervised learning, and unsupervised learning play an important role in the field of DAS intelligent signal processing.

1. Signal processing algorithms based on supervised learning.

Supervised learning is the process of learning a mapping between inputs (features) and outputs (labels or target values) by training on a labeled dataset. Most current deep learning methods are supervised networks, such as convolutional neural networks (CNNs) and long short-term memory (LSTM) networks, which can be extended and combined in different forms. CNNs are feed-forward artificial neural networks that, unlike fully connected multilayer perceptrons, use convolutional layers with shared weights. Their powerful feature extraction ability for images has led them to dominate the field of intelligent detection. LSTM networks alleviate the gradient vanishing and explosion problems that arise when training on long sequences and retain long-term features by controlling feature transmission through gated states. As a result, supervised learning has shown excellent performance in detection tasks.
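The gated state control that lets an LSTM retain long-term features can be made concrete with a single forward step written in plain NumPy. This is the standard LSTM cell formulation rather than a model from any cited work; the weight layout (gates stacked in the order input, forget, output, candidate) and the function names are assumptions for the example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    Shapes: x (D,), h_prev and c_prev (H,), W (4H, D), U (4H, H), b (4H,).
    Gate order inside the stacked parameters: input, forget, output, candidate.
    """
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much cell state to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate cell content
    c = f * c_prev + i * g       # additive update of the cell state
    h = o * np.tanh(c)
    return h, c
```

When the forget gate is close to one and the input gate close to zero, the cell state passes through almost unchanged, which is the mechanism by which information from early samples of a long DAS sequence can survive to later steps.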

In DAS signal recognition scenarios, researchers have focused on several problems: the diversity of noise in monitoring environments, the ease with which noise and target signals are confused, and the difficulty of identifying weak target signals submerged in noise. In addition, data scarcity, data imbalance, and uncertainty in the event types to be recognized pose challenges to anomaly detection. Huang et al. [104] proposed a decoupled parallel CNN (DPCNN) that achieves high-precision recognition of five events, including background noise, footsteps, and mining, on small datasets by feeding time–frequency and space–time features into parallel branches. Their experimental results show that DPCNN achieved an optimal trade-off between speed and accuracy. Wu et al. [105] proposed an intensity and phase superposition CNN (IP-CNN), which incorporates data augmentation by combining time domain stretching and shifting to enhance DAS data. The model separately extracts intensity and phase features using CNNs for feature fusion and final classification. The experimental results show that IP-CNN with data augmentation effectively improves the DAS signal pattern recognition accuracy. Wang et al. [106] proposed a data fusion method based on deep convolutional networks to solve the problem of weak acoustic signals near the track or small defects being difficult to identify. The method combined the spatiotemporal signals of optical fiber acoustics at three different time points into RGB images, classified the acoustic signals into those with events and those without events through a pre-classifier, and input the vibration signals with events into VGG-16Net for classification. The event recognition accuracy reached 98.04%. Xiao et al. [107] proposed a detection method based on Faster R-CNN, which normalized the collected signals in time and space dimensions into space–time images and detected five kinds of target events. The experimental results show that the detection accuracy of the system for target events was 89%. Liu et al. 
[108] proposed a DAS signal recognition method based on an end-to-end attention-enhanced ResNet model. The Euclidean distance between the posterior probabilities of correct and incorrect classification was used to compare the discriminability of STFT and MFCC input features. Based on the time–frequency domain features, the ResNet+CBAM model was used to recognize four kinds of events, and the test accuracy reached 99.014%. The proposed model had good performance in recognition accuracy, convergence speed, generalization ability, and computational efficiency. Wu et al. [109] proposed an acoustic source identification method based on an end-to-end mCNN-HMM combined model. mCNN extracts the multi-scale structural features and mutual relationships of the signal and inputs them into the HMM model for classification. The experimental results show that the proposed method was superior to the existing CNN-HMM model in both feature extraction capability and recognition accuracy. In addition to deep learning networks dominated by CNNs, the supervised learning of DAS also includes long short-term memory networks. Jiang et al. [110] proposed an MSCNN-LSTM combined model recognition method to improve the recognition rate of an ultra-weak fiber Bragg grating array. MSCNN-LSTM was used to extract multi-scale features from time domain signals. These features were fused using an attention mechanism, and their temporal relationships were modeled through LSTM. The recognition of five typical intrusion events was realized. Yang et al. [111] proposed an end-to-end alignment-free method for detecting multiple acoustic events. The approach employs a sliding window and CNN to extract time–frequency features, uses BiLSTM to capture the temporal dependencies of signals, and applies Connectionist Temporal Classification (CTC) to assign labels to unsegmented sequence data, enabling the efficient and accurate detection of multiple acoustic signals. Li et al. 
[112] proposed a DAS signal recognition framework that employs a CNN to extract spatial features from DAS multi-channel signals and LSTM to analyze their temporal dependencies, enabling real-time and high-precision intrusion detection for high-speed railways. Experiments conducted in a strong background noise environment showed that this method significantly improved intrusion detection accuracy and reduced system response time. Wang et al. [113] proposed a CDIL-CBAM-BiLSTM model based on feature fusion to address the low recognition accuracy obtained on the high-sampling-rate, long-sequence signals collected by DAS. CDIL-CNN was used to extract global features, and BiLSTM was used to extract temporal structure information. The CBAM spatial attention mechanism was introduced to mine the key features of the signal, and six interference events were accurately recognized. Compared with traditional methods, the model showed strong transferability and the highest recognition accuracy. Xie et al. [114] proposed a feature detection method based on a CNN combined with the Hough transform to address the issue of vehicle trajectory fragmentation in DAS-based traffic monitoring caused by severe noise. In their approach, vehicle-related features are first transformed using the Hough transform, and then a CNN is employed to detect these features. This method significantly improves the continuity, completeness, and noise robustness of vehicle trajectory reconstruction.
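The time domain stretching and shifting used for data augmentation in IP-CNN [105] can be illustrated with a minimal sketch for a single-channel signal. The exact augmentation procedure of that work is not described here, so the circular shift, the linear-interpolation resampling, the crop-or-zero-pad step, and the function names are all assumptions.

```python
import numpy as np

def time_shift(x, shift):
    """Circularly shift a 1D signal by `shift` samples."""
    return np.roll(x, shift)

def time_stretch(x, factor):
    """Scale the duration of x by `factor` using linear interpolation.

    The result is cropped (factor > 1) or zero-padded (factor < 1) so that
    the output has the same length as the input.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    new_len = max(2, int(round(n * factor)))
    stretched = np.interp(np.linspace(0, n - 1, new_len), np.arange(n), x)
    out = np.zeros(n)
    m = min(n, new_len)
    out[:m] = stretched[:m]
    return out
```

Applying a few random shifts and stretch factors to each labeled sample multiplies the size of a small training set while keeping the class label unchanged, which is the purpose augmentation serves in the studies above.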

Supervised learning has, to a certain extent, solved the problem of accurately identifying DAS target signals when the signals of interest are aliased. However, because DAS application scenarios are complex, it is difficult to obtain labeled data promptly for the large number of new situations that arise; model detection accuracy then drops significantly, which limits practical applicability.

2. Signal processing algorithms based on semi-supervised/unsupervised learning.

Supervised recognition models achieve high detection accuracy by relying on large amounts of labeled data, but collecting large labeled datasets is costly in time, labor, and material resources. In practical applications, labeled samples are insufficient in new scenarios, and achieving high-precision recognition of DAS signals from a small amount of labeled data and a large amount of unlabeled data is a new challenge. To improve the generalization ability of recognition models, unsupervised and semi-supervised learning methods for DAS have emerged.

He et al. [115] proposed a semi-supervised learning method based on a Generative Adversarial Network (GAN). The generator structure of the GAN was used to provide a large amount of data for the discriminator model to train, which solved the application limitations of the DAS supervised model when the amount of labeled data was fairly small. Yang et al. [116] used unlabeled data to train a sparse stacked auto-encoder to extract DAS signal features and used a small amount of labeled data for training and target positioning and recognition, which effectively improved the utilization rate of unlabeled data and the robustness of the model. Wang et al. [117] proposed a FixMatch-based semi-supervised learning method for high-speed rail track detection, which conducts supervised training on limited labeled data and boosts the performance of unsupervised learning on extensive unlabeled data through a pseudo-label generation mechanism. Xie et al. [118] proposed an unsupervised learning method that only learns normal data features from ordinary events. A convolutional auto-encoder is used to extract DAS signal features, and a clustering algorithm is used to locate the feature center of the normal signal. The distance between the signal and the normal data cluster center is used to determine whether it is an abnormal event. In specific scenarios, it has a higher detection rate, lower false alarm rate, and fewer model parameters than a supervised network. Wu et al. [119] proposed an unsupervised learning method based on a Spiking Neural Network (SNN). The SNN has a three-layer structure of an input layer, activation layer, and inhibition layer; the network structure is simple, and the computational cost is low. The results show that the performance of the unsupervised SNN is more stable than that of a supervised CNN on unbalanced datasets, and the generalization ability is significantly improved. Martijn et al. 
[120] proposed a blind denoising technique for distributed fiber sensing based on self-supervised learning. This method exploits the spatial density of DAS measurements to remove spatially incoherent noise with unknown characteristics. It makes no assumptions about the noise characteristics and therefore does not require noise-free ground truth. The experimental results show that this method can effectively separate spatially incoherent noise from real signals. To make DAS detection algorithms run stably and reliably in a real traffic environment, Ende et al. [121] proposed a self-supervised deep learning approach, referred to as a Deconvolution Auto-Encoder (DAE), that deconvolves the characteristic car impulse response from the DAS data. Deconvolving DAS data with the DAE led to better temporal resolution and detection performance than the original (non-deconvolved) data. The study subsequently applied the DAE to a 24 h traffic cycle, demonstrating the feasibility of processing large volumes of DAS data, potentially in near-real time. Overall, semi-supervised and unsupervised learning alleviate, to a certain extent, the problems of insufficient DAS training data and data imbalance and achieve effective event detection and classification.
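The distance-to-cluster-center test described for the unsupervised method of Xie et al. [118] can be sketched as follows. The sketch assumes that feature vectors have already been produced by some encoder, uses the mean of the normal features as a stand-in for the clustering step, and sets the decision threshold from a quantile of the training distances; these choices and the function names are assumptions, not details taken from [118].

```python
import numpy as np

def fit_normal_center(features, quantile=0.99):
    """Fit a center and distance threshold from features of normal events.

    features has shape (N, D). The threshold is the given quantile of the
    distances from the training features to their mean.
    """
    features = np.asarray(features, dtype=float)
    center = features.mean(axis=0)
    dists = np.linalg.norm(features - center, axis=1)
    return center, float(np.quantile(dists, quantile))

def is_anomalous(feature, center, threshold):
    """Flag a feature vector whose distance to the center exceeds the threshold."""
    return bool(np.linalg.norm(np.asarray(feature, dtype=float) - center) > threshold)
```

Only normal data are needed for fitting, so no labeled abnormal events are required; the quantile controls the trade-off between false alarms and missed detections.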

In summary, deep-learning-based DAS signal recognition methods play a leading role thanks to their high classification accuracy and fast response, but problems remain, including poor interpretability, insufficient generalization ability, limited real-time performance, and a lack of publicly available datasets.

4. Application of DAS Technology in Vehicle Status Information Perception

The construction of transportation infrastructure is an essential and strategically important component of the national economy [122]. Building advanced, ubiquitous vehicle perception systems is an important part of managing and controlling the transportation system and plays a key role in promoting the development of transportation. A DAS system offers global availability, low cost, strong timeliness, and durability, and thus provides feasible technical support and a realization path for ubiquitous vehicle perception. Popular DAS research topics include identifying the driving direction and types of traffic targets, analyzing the formation mode and type of congestion, and evaluating the health status of road infrastructure. By reusing the communication cables on both sides or in the center of the road as the sensing unit, a DAS system does not need to damage the main structure of the road and greatly reduces the sensing cost, which has made it a priority for road management authorities. Therefore, many scholars and research institutions at home and abroad have carried out a series of technical research projects, test verifications, and application demonstrations.

In 2016, OptaSense’s traffic monitoring solution was demonstrated on the I-29 highway in Fargo, North Dakota, USA, making it the first smart highway in the United States to use a DAS system to monitor and count traffic [123]. The solution used pre-laid roadside fiber as the sensing fiber to detect traffic objects on both sides of I-29 over 4.5 miles of test road. The project report indicates that each roadside DAS host could effectively cover up to 50 miles of highway. This was the first time that OptaSense proposed replacing multiple point-like sensors with a single roadside device, but details of the actual project and test results have not been released.

In 2021, the Nevada Department of Transportation cooperated with Dura-Line to test the OptaSense traffic monitoring solution (TMS) by converting existing roadside fiber into sensing fiber [124]. Noise and vibration signals on the monitored road section were detected through the roadside sensing fiber and converted into key traffic flow indicators (average traffic speed, congestion detection, queue detection, journey times, traffic count, and flow rate). The test section included a variety of fiber deployment processes, and many vehicles crossed the road each day. The Nevada Department of Transportation conducted the test without closing any roads or lanes and without considering the influence of weather conditions, ongoing maintenance, or road surface wear. The test results show that features that attenuate sound propagation, such as roadside walls and medians, restrict the perception results. When the optical fiber is well coupled, the system can accurately detect multi-lane data. In contrast, poor acoustic coupling caused by inconsistent road elevation yields little to no traffic data signal. Despite these challenges, the preliminary application results are encouraging.

5. Summary and Forecast

DAS technology, with its high sensitivity, low cost, and high precision, lays a solid foundation for full-time domain vehicle perception and provides a practical solution. By systematically comparing the strengths and limitations of DAS and traditional point-based sensors, this paper provides technical insights and theoretical support for building large-scale, real-time vehicle perception capabilities. At present, the research of domestic and foreign scholars focuses mainly on vehicle target detection and classification in typical traffic scenes; the application of vehicle perception in complex traffic scenes is still in the exploratory stage. The detection of complex and changeable heterogeneous traffic subjects and the use of vehicle perception technology in traffic scenes are the most promising future research directions for DAS, including the three specific areas outlined below.

5.1. Multi-Source Aliasing Detection in Complex Traffic Scenarios

In complex heterogeneous traffic scenarios, multiple acoustic vibration sources, such as pedestrians, vehicles, construction, and other urban noise, affect the perception results and cause multi-source aliasing in DAS sensing signals. The accuracy of algorithms designed for single-source detection decreases sharply under these conditions, and they fail to identify the target effectively, which seriously restricts large-scale application in urban traffic environments. Combining directional coherence enhancement through spatial array signal synthesis with physics-informed machine learning (ML) techniques offers a promising solution for decoupling and separating nonlinear aliasing signals from an unknown number of sources in complex scenarios. This approach is expected to significantly enhance the cross-scene generalization capability of distributed optical fiber sensing technology.
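
One classical form of spatial array signal synthesis is delay-and-sum stacking, shown here as a simplified illustration and not as the method envisaged above. The sketch treats neighbouring fiber channels as a linear array, advances each channel by the delay a source moving at a trial speed would produce, and sums the channels so that only the source matching that speed adds coherently. The function names, the 10 m channel spacing, the 100 Hz sampling rate, and the synthetic impulses are all assumptions made for illustration.

```python
def delay_and_sum(channels, delays):
    """Advance each channel by its delay (in samples) and sum across channels."""
    n = len(channels[0])
    stacked = [0.0] * n
    for trace, d in zip(channels, delays):
        for i in range(n):
            j = i + d
            if 0 <= j < n:  # samples shifted outside the record are dropped
                stacked[i] += trace[j]
    return stacked


def steer(channels, spacing_m, fs_hz, speed_mps):
    """Stack the array for a source moving along the fiber at speed_mps (nonzero)."""
    delays = [round(k * spacing_m / speed_mps * fs_hz) for k in range(len(channels))]
    return delay_and_sum(channels, delays)


def estimate_speed(channels, spacing_m, fs_hz, candidates):
    """Return the candidate speed whose stacked output has the highest energy."""
    def energy(speed):
        return sum(v * v for v in steer(channels, spacing_m, fs_hz, speed))
    return max(candidates, key=energy)


def make_synthetic(n_channels=8, n_samples=1000):
    """Two overlapping sources: a target at +20 m/s and a weaker one at -10 m/s."""
    channels = [[0.0] * n_samples for _ in range(n_channels)]
    for k in range(n_channels):
        channels[k][100 + 50 * k] += 1.0   # target: 50 samples later per channel
        channels[k][800 - 100 * k] += 0.5  # interferer: opposite direction
    return channels


channels = make_synthetic()
best = estimate_speed(channels, spacing_m=10.0, fs_hz=100.0,
                      candidates=[-10.0, 10.0, 20.0, 25.0])
stacked = steer(channels, 10.0, 100.0, best)
print(best, max(stacked))
```

Steering to the target speed aligns the eight target impulses into a single peak, while the impulses of the opposing source remain spread out in time. Real DAS records contain broadband, dispersive signals and noise, so this sketch only conveys the coherence idea and does not address nonlinear aliasing or an unknown number of sources.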

5.2. Evolution Mechanism of DAS Signals in Vehicle–Road Coupling Environments

On asphalt pavement with a non-uniform multi-phase structure, and in the presence of various tires and vehicles, the key characteristics and typical patterns of acoustic vibration signal generation and propagation induced by vehicle–road interactions remain unclear. To address this, a high-confidence dynamic evolution model of vehicle–road-coupled acoustic vibration signals has been developed based on vehicle dynamics modeling, acoustic wave equations, road geometry, and vehicle kinematics theory. Meanwhile, the investigation of the evolution mechanism of DAS signals continues to be a major focus of current research.

5.3. Heterogeneous Sensing Technology to Achieve Low-Cost and Reliable Sensing of the Full-Time and Spatial Domain

In addition to its high cost and dense deployment requirements, traditional subgrade optical fiber sensing technology is limited in its ability to capture rich, high-dimensional vehicle information, leading to a high risk of target misidentification in large-scale road networks. To alleviate this challenge, sparse subgrade sensing devices were deployed in key areas of the road network, and distributed optical fiber sensors were laid across the whole area. Space–time frequency coding and Siamese stereo network technology were fused to realize the space–time registration and feature fusion of heterogeneous sensor networks, and a multi-level fusion perception theory for heterogeneous sensor networks was constructed. This theory underpins a key technology that shows promise for advancing the trust evaluation of ubiquitous vehicle perception systems.
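
The space–time registration step can be illustrated with a much simpler stand-in than the coding and Siamese network techniques named above. The sketch below assumes, hypothetically, that DAS tracks are lists of (time, position) samples, that a point sensor at a known position reports (time, label) detections, and that the two clocks differ by a known offset. It uses nearest-in-time gating to attach each label to a track; all names and values are illustrative.

```python
def crossing_time(track, x_sensor):
    """Linearly interpolate the time at which a DAS track passes x_sensor."""
    for (t0, x0), (t1, x1) in zip(track, track[1:]):
        if x0 != x1 and min(x0, x1) <= x_sensor <= max(x0, x1):
            return t0 + (x_sensor - x0) * (t1 - t0) / (x1 - x0)
    return None  # the track never reaches the sensor position


def register(tracks, detections, x_sensor, clock_offset_s=0.0, gate_s=0.5):
    """Attach each point-sensor detection to the nearest-in-time DAS track."""
    labels = {}
    for det_time, det_label in detections:
        corrected = det_time - clock_offset_s  # bring sensor time onto DAS time
        best_id, best_gap = None, gate_s
        for track_id, track in tracks.items():
            t_cross = crossing_time(track, x_sensor)
            if t_cross is None or track_id in labels:
                continue
            gap = abs(t_cross - corrected)
            if gap <= best_gap:
                best_id, best_gap = track_id, gap
        if best_id is not None:
            labels[best_id] = det_label
    return labels


# Two DAS tracks over a 200 m section and a point sensor at the 100 m mark.
tracks = {
    "a": [(0.0, 0.0), (10.0, 200.0)],
    "b": [(4.0, 0.0), (12.0, 200.0)],
}
detections = [(5.3, "truck"), (8.2, "car"), (20.0, "car")]
labels = register(tracks, detections, x_sensor=100.0, clock_offset_s=0.2)
print(labels)
```

The third detection has no track within the 0.5 s gate and is left unmatched. A deployed system would need to estimate the clock offset and handle closely spaced vehicles, which this greedy matching does not attempt.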

Author Contributions

Conceptualization, W.D., X.C., J.Z., and C.H.; methodology, W.D., W.L., and J.G.; software, W.D.; validation, W.L. and J.G.; formal analysis, W.D.; investigation, W.L.; resources, X.C., J.Z., and C.H.; data curation, W.D.; writing—original draft preparation, W.D., W.L., and J.G.; writing—review and editing, X.C., J.Z., and C.H.; visualization, W.D. and J.G.; supervision, C.H. and X.Z.; project administration, W.D., X.C., and J.Z.; funding acquisition, X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under grant 52472337 and grant 52302491, in part by the 16th Batch of Special Support from China Postdoctoral Science Foundation under grant 2023T160129, and in part by the Key Research and Development Program of Shaanxi Province under grant 2023-YBGY-119.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| DAS | Distributed optical fiber acoustic sensing |
| DOFS | Distributed optical fiber sensing |
| CNN | Convolutional neural network |
| GAN | Generative adversarial network |
| SNN | Spiking neural network |

References

- Husain, A.A.; Maity, T.; Yadav, R.K. Vehicle detection in intelligent transport system under a hazy environment: A survey. IET Image Proc. 2020, 14, 1–10.

- Zhao, J.; Xu, H.; Liu, H.; Wu, J.; Zheng, Y.; Wu, D. Detection and tracking of pedestrians and vehicles using roadside LiDAR sensors. Transp. Res. Part C Emerg. Technol. 2019, 100, 68–87.

- Chen, X.; Kong, X.; Xu, M.; Sandrasegaran, K.; Zheng, J. Road Vehicle Detection and Classification Using Magnetic Field Measurement. IEEE Access 2019, 7, 52622–52633.

- Zhou, S.; Xu, H.; Zhang, G.; Ma, T.; Yang, Y. Leveraging Deep Convolutional Neural Networks Pre-Trained on Autonomous Driving Data for Vehicle Detection From Roadside LiDAR Data. IEEE Trans. Intell. Transp. Syst. 2022, 23, 22367–22377.

- Guan, E.; Zhang, Z.; He, M.; Zhao, X. Evaluation of classroom teaching quality based on video processing technology. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017.

- Hind, S.; Gekker, A. Automotive parasitism: Examining Mobileye’s ‘car-agnostic’ platformisation. New Media Soc. 2022, 26, 3707–3727.

- Tomzcak, K.; Pelter, A.; Gutierrez, C.; Stretch, T.; Hilf, D.; Donadio, B.; Tenhundfeld, N.L.; Visser, E.J.d.; Tossell, C.C. Let Tesla Park Your Tesla: Driver Trust in a Semi-Automated Car. In Proceedings of the 2019 Systems and Information Engineering Design Symposium (SIEDS), Charlottesville, VA, USA, 26 April 2019; pp. 1–6, 10.

- Gyujin, B.; In, C.S.; SukJu, K.; Hwan, K.Y. Dual-dissimilarity measure-based statistical video cut detection. J. Real-Time Image Process. 2019, 16, 1987–1997.

- Zhang, Y.; Zheng, J.; Zhang, C.; Li, B. An effective motion object detection method using optical flow estimation under a moving camera. J. Vis. Commun. Image Represent. 2018, 55, 215–228.

- Chen, Y.; Sun, Z.; Lam, K. An Effective Subsuperpixel-Based Approach for Background Subtraction. IEEE Trans. Ind. Electron. 2020, 67, 601–609.

- Kim, J.; Baek, J.; Kim, E. A Novel On-Road Vehicle Detection Method Using πHOG. IEEE Trans. Intell. Transp. Syst. 2015, 16, 3414–3429.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 4 May 2021.

- Philion, J.; Fidler, S. Lift, Splat, Shoot: Encoding Images from Arbitrary Camera Rigs by Implicitly Unprojecting to 3D. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; pp. 194–210.

- Li, F.; Zhang, H.; Liu, S.; Guo, J.; Ni, L.M.; Zhang, L. DN-DETR: Accelerate DETR Training by Introducing Query DeNoising. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2239–2251.

- Liu, X.; Peng, H.; Zheng, N.; Yang, Y.; Hu, H.; Yuan, Y. EfficientViT: Memory Efficient Vision Transformer with Cascaded Group Attention. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14420–14430.

- Li, Z.; Wang, W.; Li, H.; Xie, E.; Sima, C.; Lu, T.; Qiao, Y.; Dai, J. BEVFormer: Learning Bird’s-Eye-View Representation from Multi-camera Images via Spatiotemporal Transformers. In Proceedings of the Computer Vision—ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; pp. 1–18.

- Yang, C.; Chen, Y.; Tian, H.; Tao, C.; Zhu, X.; Zhang, Z. BEVFormer v2: Adapting Modern Image Backbones to Bird’s-Eye-View Recognition via Perspective Supervision. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 17830–17839.

- Song, H.; Liang, H.; Li, H.; Dai, Z.; Yun, X. Vision-based vehicle detection and counting system using deep learning in highway scenes. Eur. Transp. Res. Rev. 2019, 11, 51.

- He, Y.; Liu, Z. A Feature Fusion Method to Improve the Driving Obstacle Detection Under Foggy Weather. IEEE Trans. Transp. Electrif. 2021, 7, 2505–2515.

- Yi, K.; Luo, K.; Chen, T.; Hu, R. An Improved YOLOX Model and Domain Transfer Strategy for Nighttime Pedestrian and Vehicle Detection. Appl. Sci. 2022, 12, 12476.

- Reddy, N.D.; Vo, M.; Narasimhan, S.G. Occlusion-Net: 2D/3D Occluded Keypoint Localization Using Graph Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7318–7327.

- Zhang, W.; Zheng, Y.; Gao, Q.; Mi, Z. Part-Aware Region Proposal for Vehicle Detection in High Occlusion Environment. IEEE Access 2019, 7, 100383–100393.

- Dong, X.; Yan, S.; Duan, C. A lightweight vehicles detection network model based on YOLOv5. Eng. Appl. Artif. Intell. 2022, 113, 104914.

- Alam, M.K.; Ahmed, A.; Salih, R.; Al Asmari, A.F.S.; Khan, M.A.; Mustafa, N.; Mursaleen, M.; Islam, S. Faster RCNN based robust vehicle detection algorithm for identifying and classifying vehicles. J. Real-Time Image Process. 2023, 20, 93.

- Zarei, N.; Moallem, P.; Shams, M. Real-time vehicle detection using segmentation-based detection network and trajectory prediction. IET Comput. Vis. 2024, 18, 191–209.

- Liu, H.; Ding, Q.; Hu, Z.; Chen, X. Remote Sensing Image Vehicle Detection Based on Pre-Training and Random-Initialized Fusion Network. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

- Xiong, C.; Yu, A.; Yuan, S.; Gao, X. Vehicle detection algorithm based on lightweight YOLOX. Signal Image Video Process. 2023, 17, 1793–1800.

- Zhang, Y.; Sun, Y.; Wang, Z.; Jiang, Y. YOLOv7-RAR for Urban Vehicle Detection. Sensors 2023, 23, 1801.

- Zhou, J.; Duan, J. Moving object detection for intelligent vehicles based on occupancy grid map. Syst. Eng. Electron. 2015, 37, 436–442.

- Deng, Q.; Li, X. Vehicle detection algorithm based on multi-feature fusion. Transducer Microsyst. Technol. 2020, 39, 131–134.

- Charles, R.Q.; Su, H.; Kaichun, M.; Guibas, L.J. PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

- Qi, C.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5105–5114.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Lang, A.H.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. PointPillars: Fast Encoders for Object Detection From Point Clouds. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 12689–12697.

- Yin, T.; Zhou, X.; Krahenbuhl, P. Center-based 3d object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 11779–11788.

- Shi, S.; Wang, X.; Li, H. PointRCNN: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 770–779.

- Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From Points to Parts: 3D Object Detection From Point Cloud With Part-Aware and Part-Aggregation Network. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 2647–2664.

- Xu, Q.; Zhong, Y.; Neumann, U. Behind the curtain: Learning occluded shapes for 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 22 February–1 March 2022; pp. 2893–2901.

- Zhang, Y.; Zhang, Q.; Zhu, Z.; Hou, J.; Yuan, Y. Glenet: Boosting 3d object detectors with generative label uncertainty estimation. Int. J. Comput. Vis. 2023, 131, 3332–3352.

- Chen, T. 3D LIDAR-Based Dynamic Vehicle Detection and Tracking. Master’s Thesis, Graduate School of National University of Defense Technology, Hunan, China, 2017.

- Wang, Z.; Wang, X.; Fang, B.; Yu, K.; Ma, J. Vehicle detection based on point cloud intensity and distance clustering. J. Phys. Conf. Ser. 2021, 1748, 042053.