Intelligent Resource Allocation for Immersive VoD Multimedia in NG-EPON and B5G Converged Access Networks

Abstract

1. Introduction

2. Related Works

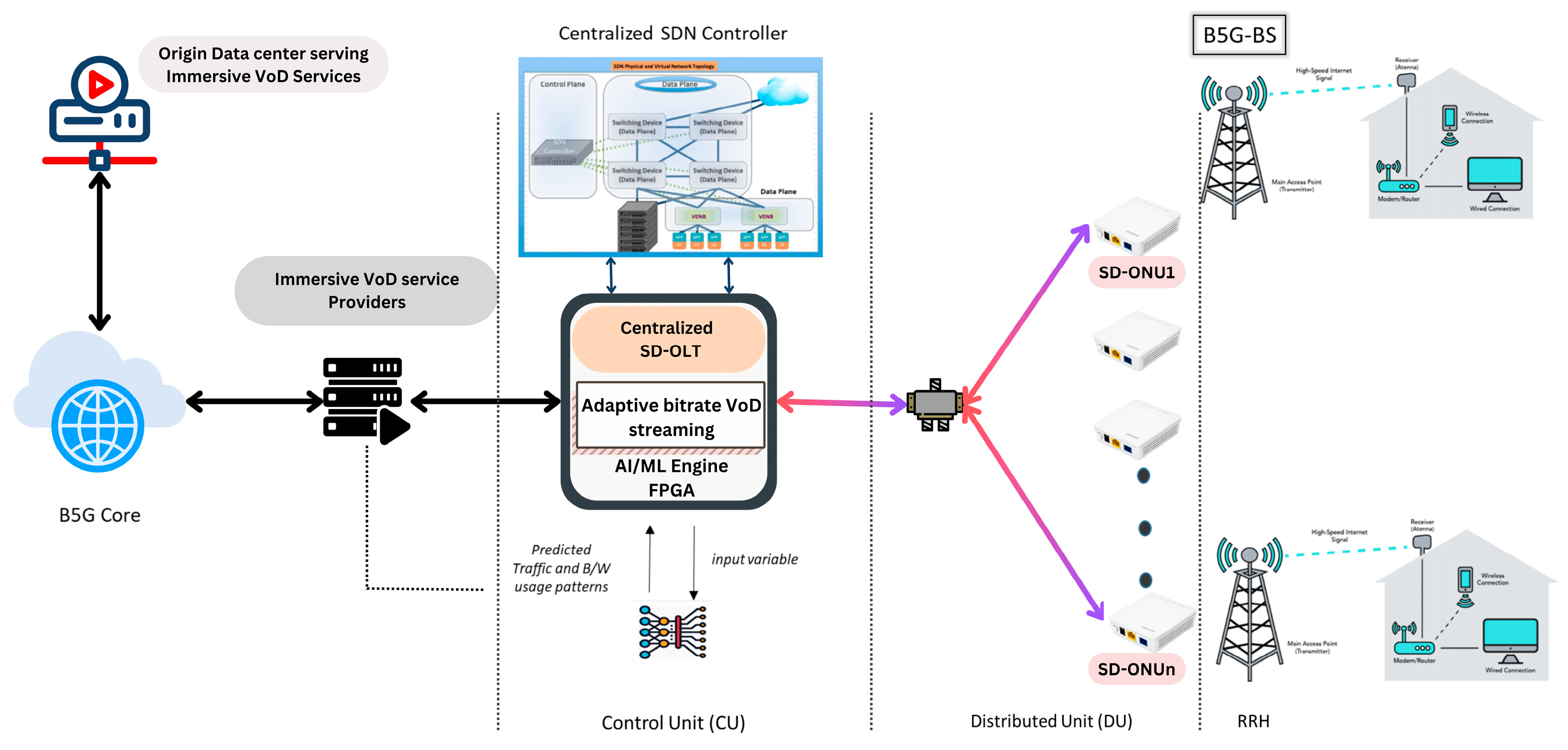

3. Framework for Centralized Storage and Delivery of Immersive VoD

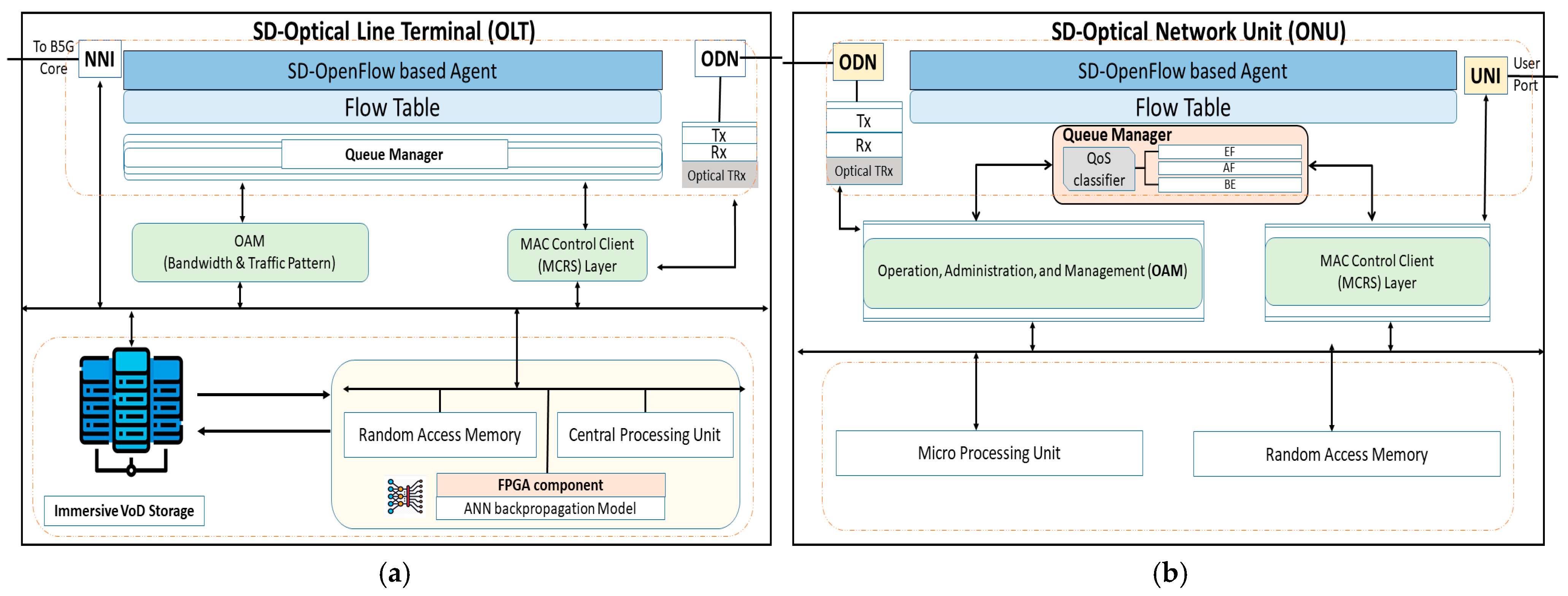

3.1. Enhanced SD-OLT with Immersive VoD Storage

3.2. SD-ONU Operations for Traffic Classification

4. Methodology

4.1. Problem Formulation

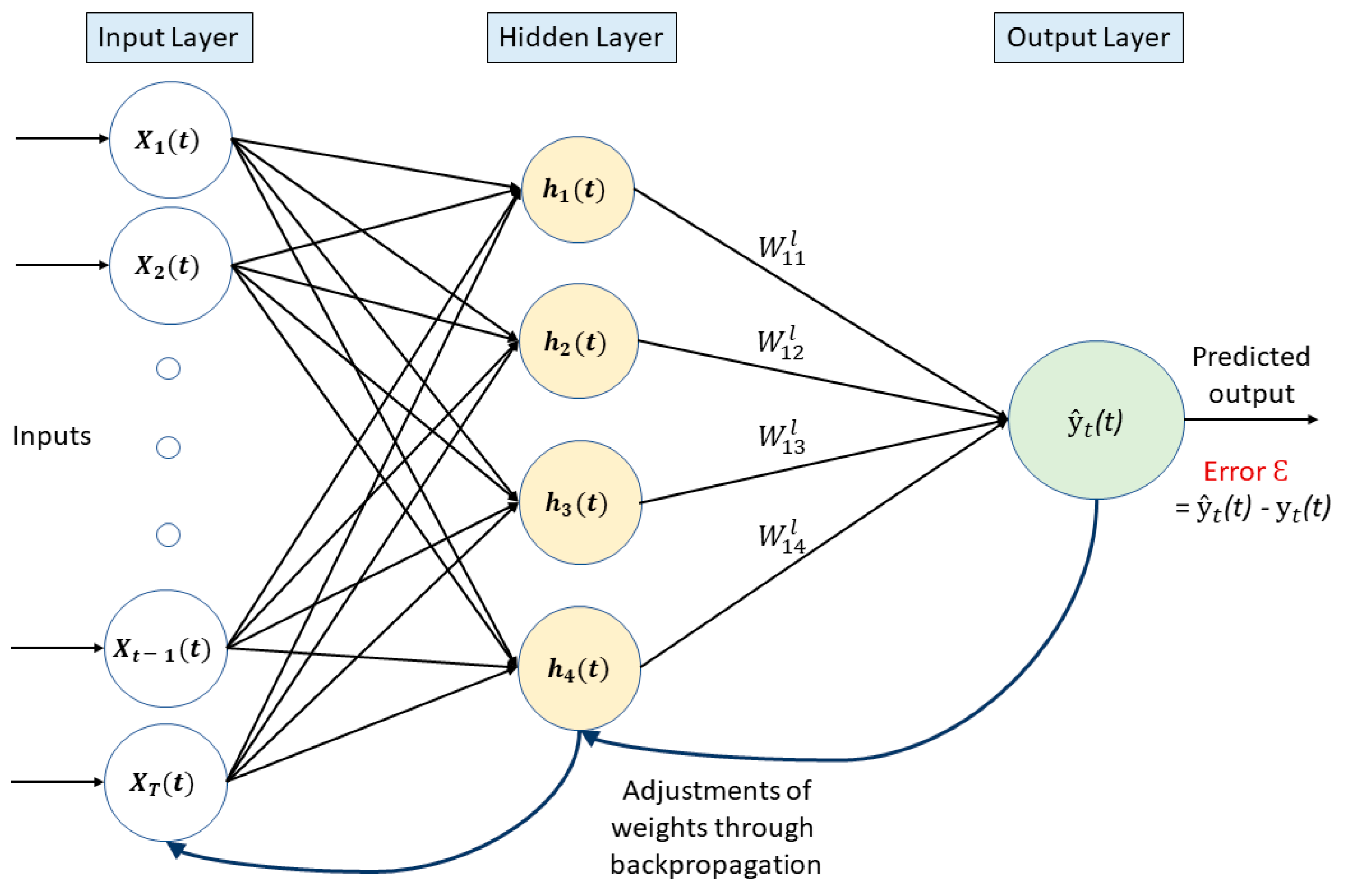

4.2. ANN Model Workflow

4.2.1. Sliding Window for Feature Preparation

4.2.2. ANN Model Formulation

- Forward propagation
  - Input layer: a set of historical data with sliding-window features.
  - Hidden layers: for each neuron in hidden layer l = 1, 2, …, L − 1.
- Loss function adjustment with thresholds
- Backpropagation
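As a rough illustration of the forward-propagation step listed above, the sketch below pushes one sliding-window feature vector through the hidden layers l = 1, …, L − 1 and a linear output layer, then evaluates a mean squared error loss with an L2 penalty. The ReLU activation, the layer sizes, the toy weights, and all function names are assumptions made for illustration; they are not taken from the paper.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, biases) of one fully connected layer


def relu(z: float) -> float:
    # Assumed hidden activation; the source does not name one here.
    return z if z > 0.0 else 0.0


def dense(x: Vector, weights: Matrix, bias: Vector, activate: bool) -> Vector:
    """One fully connected layer: a_j = f(sum_i w_ji * x_i + b_j)."""
    out = []
    for row, b in zip(weights, bias):
        z = sum(w * xi for w, xi in zip(row, x)) + b
        out.append(relu(z) if activate else z)
    return out


def forward(x: Vector, layers: List[Layer]) -> Vector:
    """Hidden layers 1..L-1 use ReLU; the last layer L is a linear output."""
    a = x
    for index, (weights, bias) in enumerate(layers):
        is_output = index == len(layers) - 1
        a = dense(a, weights, bias, activate=not is_output)
    return a


def mse_with_l2(pred: Vector, target: Vector, layers: List[Layer], lam: float) -> float:
    """Mean squared error plus lam times the sum of squared weights."""
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    penalty = sum(w * w for weights, _ in layers for row in weights for w in row)
    return mse + lam * penalty


# Toy network: 5 window features -> 2 hidden neurons -> 3 outputs (EF, AF, BE).
layers = [
    ([[0.1, 0.1, 0.1, 0.1, 0.1], [-1.0, 0.0, 0.0, 0.0, 0.0]], [0.0, 0.0]),
    ([[1.0, 1.0], [2.0, 0.0], [0.0, 1.0]], [0.0, 0.0, 0.5]),
]
x = [0.2, 0.4, 0.6, 0.8, 1.0]           # one normalized sliding window
pred = forward(x, layers)                # approximately [0.3, 0.6, 0.5]
loss = mse_with_l2(pred, [0.3, 0.6, 0.5], layers, lam=0.001)
```

Backpropagation would then differentiate this loss with respect to each weight; that step is left out of the sketch.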

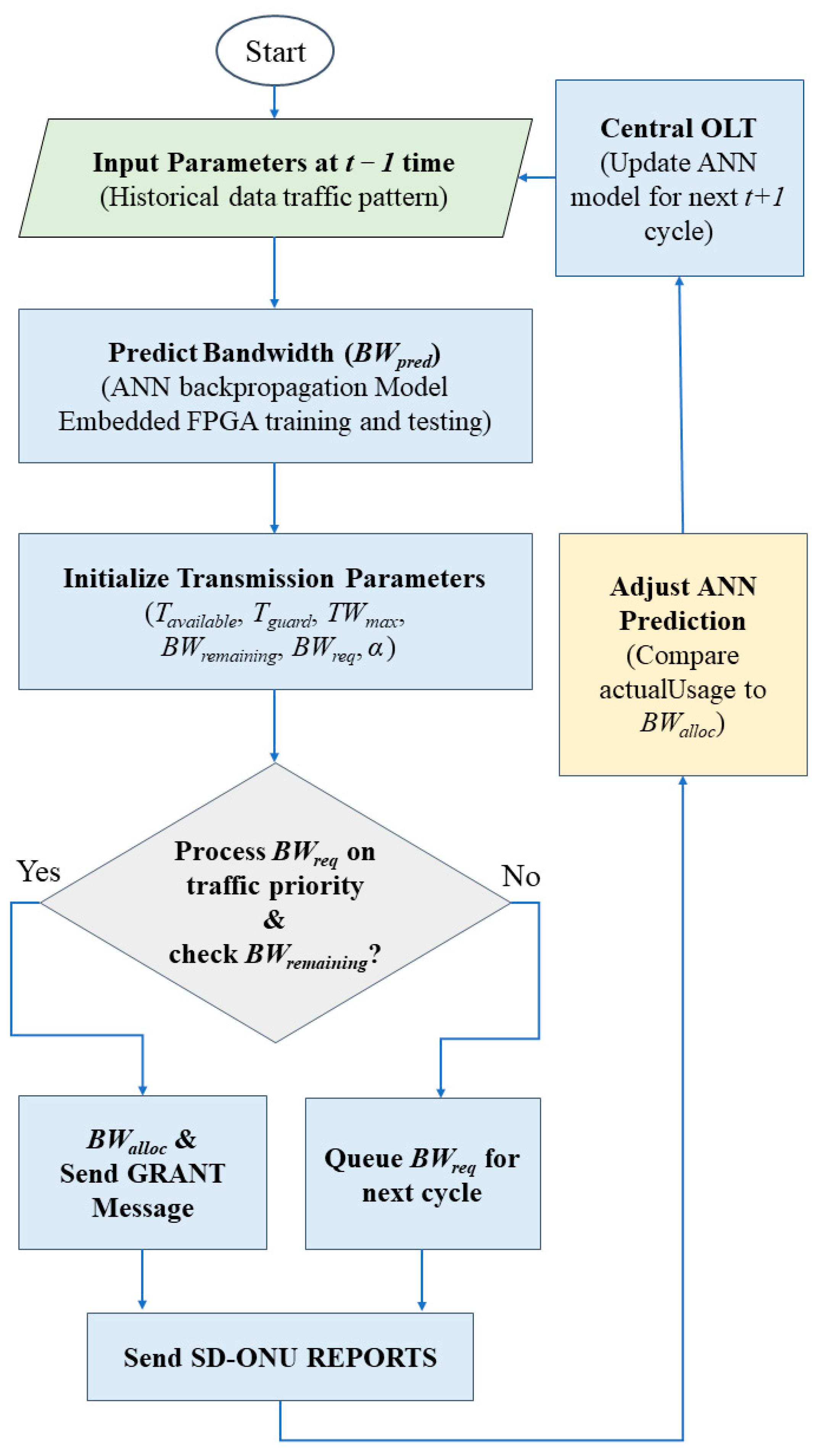

4.2.3. IMS-DBA for Immersive VoD Allocation

Algorithm 1 IMS-DBA

    For i = number of SD-ONUs
    Tavailable = upstream transmission scheduled time
    Tguard = guard time interval
    TWmax = maximum transmission timeslot of SD-ONUi
    BWremaining = total bandwidth for ONUi
    BWpred = bandwidth predicted value for all traffic classes through ANN
    α = dynamic threshold adjustment parameter
    For every received BWreq of SD-ONUi, BWreq ∈ (EF, AF, BE) // Bandwidth request REPORT from SD-ONUi
    do {
        startTime = Tavailable + Tguard
        // Process EF traffic (highest priority)
        if BWreq = EF and BWremaining > 0 then {
            BWalloc = min(BWreq + BWpred, TWmax)
            // Ensure BWalloc does not exceed BWremaining
            if BWalloc > BWremaining then { BWalloc = BWremaining }
            EF_GRANT = (startTime − RTTi, BWalloc)
            Send EF_GRANT message
            // Update remaining bandwidth and time
            BWremaining = BWremaining − BWalloc
            TWmax = BWremaining
            Tavailable = startTime + BWalloc
        }
        // Process AF traffic (second priority)
        else if BWreq = AF and BWremaining > 0 then {
            BWalloc = min(BWreq + BWpred, TWmax)
            // Ensure BWalloc does not exceed BWremaining
            if BWalloc > BWremaining then { BWalloc = BWremaining }
            AF_GRANT = (startTime − RTTi, BWalloc)
            Send AF_GRANT message
            // Update remaining bandwidth and time
            BWremaining = BWremaining − BWalloc
            TWmax = BWremaining
            Tavailable = startTime + BWalloc
        }
        // Process BE traffic (lowest priority)
        else if BWreq = BE and BWremaining > 0 then {
            BWalloc = min(BWreq + BWpred, TWmax)
            // Ensure BWalloc does not exceed BWremaining
            if BWalloc > BWremaining then { BWalloc = BWremaining }
            BE_GRANT = (startTime − RTTi, BWalloc)
            Send BE_GRANT message
            // Update remaining bandwidth and time
            BWremaining = BWremaining − BWalloc
            TWmax = BWremaining
            Tavailable = startTime + BWalloc
        }
        // Dynamic Prediction Adjustment for All Classes
        actualUsage = BWreq // SD-ONU feedback
        if actualUsage > BWalloc then {
            BWpred = BWpred + α * (actualUsage − BWalloc)
        }
        else if actualUsage < BWalloc then {
            BWpred = max(0, BWpred − α * (BWalloc − actualUsage))
        }
        // Update ANN model with feedback (SD-ONU REPORTS)
        ANN.update(Xt, actualUsage)
    }
    }
    End
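The grant loop of Algorithm 1 can be sketched in Python as follows. This is a minimal reading of the pseudocode, not the authors' implementation. Several points are assumptions: the three identical EF/AF/BE branches are folded into one loop over a priority tuple, bandwidth is expressed in the same units as time so that `startTime + BWalloc` is meaningful, the prediction is kept per class in a plain dictionary that stands in for the ANN output, and the `ANN.update` call is omitted. The names `OnuState` and `ims_dba_cycle` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

PRIORITY = ("EF", "AF", "BE")  # highest to lowest priority, as in Algorithm 1
Grant = Tuple[str, float, float]  # (class, grant start time, allocated size)


@dataclass
class OnuState:
    rtt: float                 # RTTi of this SD-ONU
    tw_max: float              # TWmax: maximum transmission timeslot
    bw_remaining: float        # BWremaining for this SD-ONU
    bw_pred: Dict[str, float]  # BWpred per class (stand-in for the ANN output)


def ims_dba_cycle(onu: OnuState, requests: Dict[str, float],
                  t_available: float, t_guard: float,
                  alpha: float) -> Tuple[List[Grant], float]:
    """Serve the EF, AF and BE requests of one SD-ONU in priority order."""
    grants: List[Grant] = []
    for cls in PRIORITY:
        bw_req = requests.get(cls, 0.0)
        bw_alloc = 0.0
        if bw_req > 0 and onu.bw_remaining > 0:
            start_time = t_available + t_guard
            # min(BWreq + BWpred, TWmax), then capped by BWremaining
            bw_alloc = min(bw_req + onu.bw_pred[cls], onu.tw_max, onu.bw_remaining)
            grants.append((cls, start_time - onu.rtt, bw_alloc))
            onu.bw_remaining -= bw_alloc
            onu.tw_max = onu.bw_remaining
            t_available = start_time + bw_alloc
        # Dynamic prediction adjustment; Algorithm 1 takes BWreq as actualUsage.
        actual_usage = bw_req
        if actual_usage > bw_alloc:
            onu.bw_pred[cls] += alpha * (actual_usage - bw_alloc)
        elif actual_usage < bw_alloc:
            onu.bw_pred[cls] = max(0.0, onu.bw_pred[cls] - alpha * (bw_alloc - actual_usage))
    return grants, t_available


onu = OnuState(rtt=200.0, tw_max=100.0, bw_remaining=100.0,
               bw_pred={"EF": 5.0, "AF": 10.0, "BE": 0.0})
grants, t_end = ims_dba_cycle(onu, {"EF": 20.0, "AF": 60.0, "BE": 40.0},
                              t_available=1000.0, t_guard=5.0, alpha=0.5)
# grants -> [("EF", 805.0, 25.0), ("AF", 835.0, 70.0), ("BE", 910.0, 5.0)]
# onu.bw_pred -> {"EF": 2.5, "AF": 5.0, "BE": 17.5}
```

In this toy run the EF and AF grants exceed their requests, so their predictions shrink, while the BE request is cut to the 5 units left over and its prediction grows for the next cycle.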

5. Proposed ANN-Based Predicted Bandwidth Allocation Scheme

6. System Performance Evaluation & Discussion

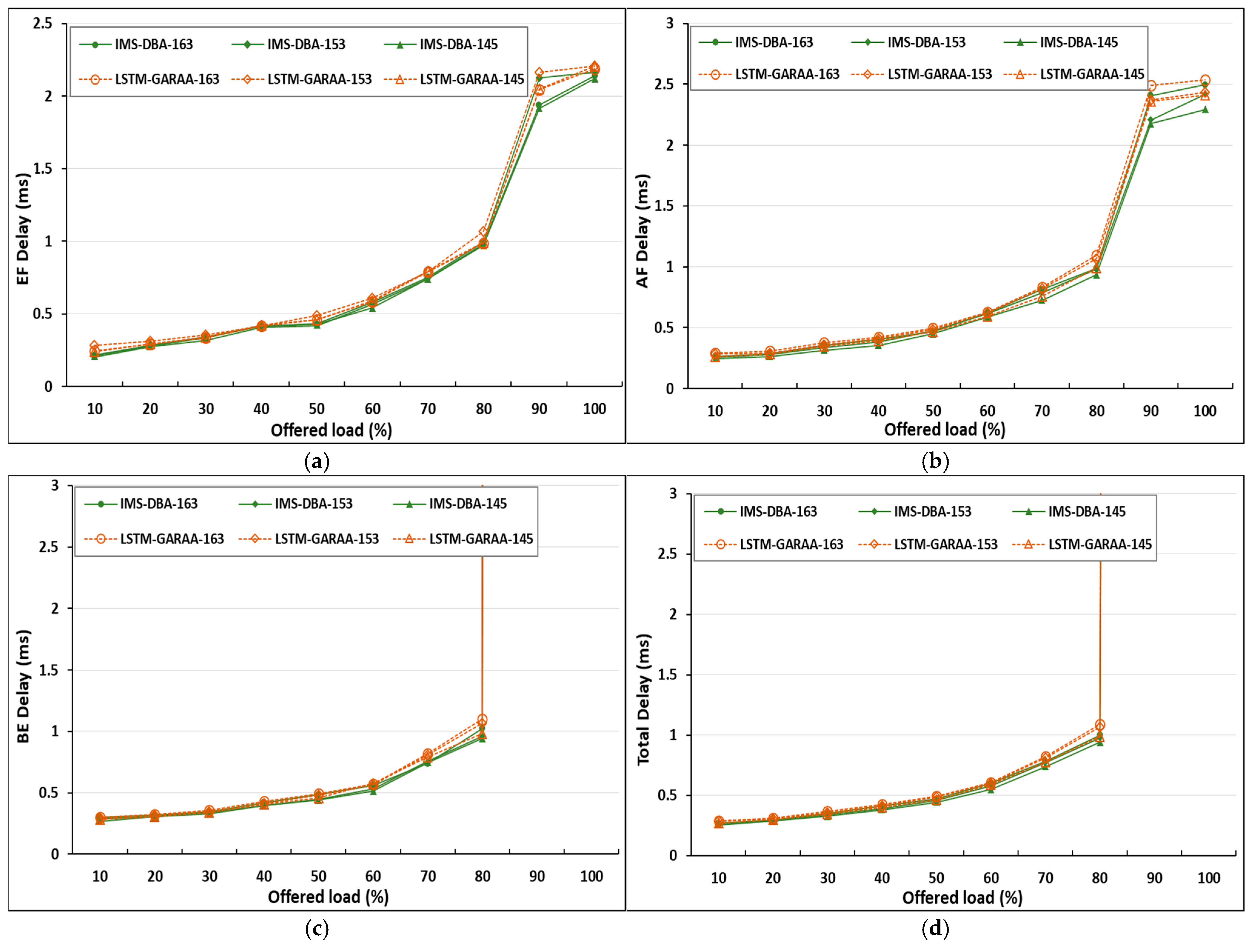

6.1. Mean Packet Delay

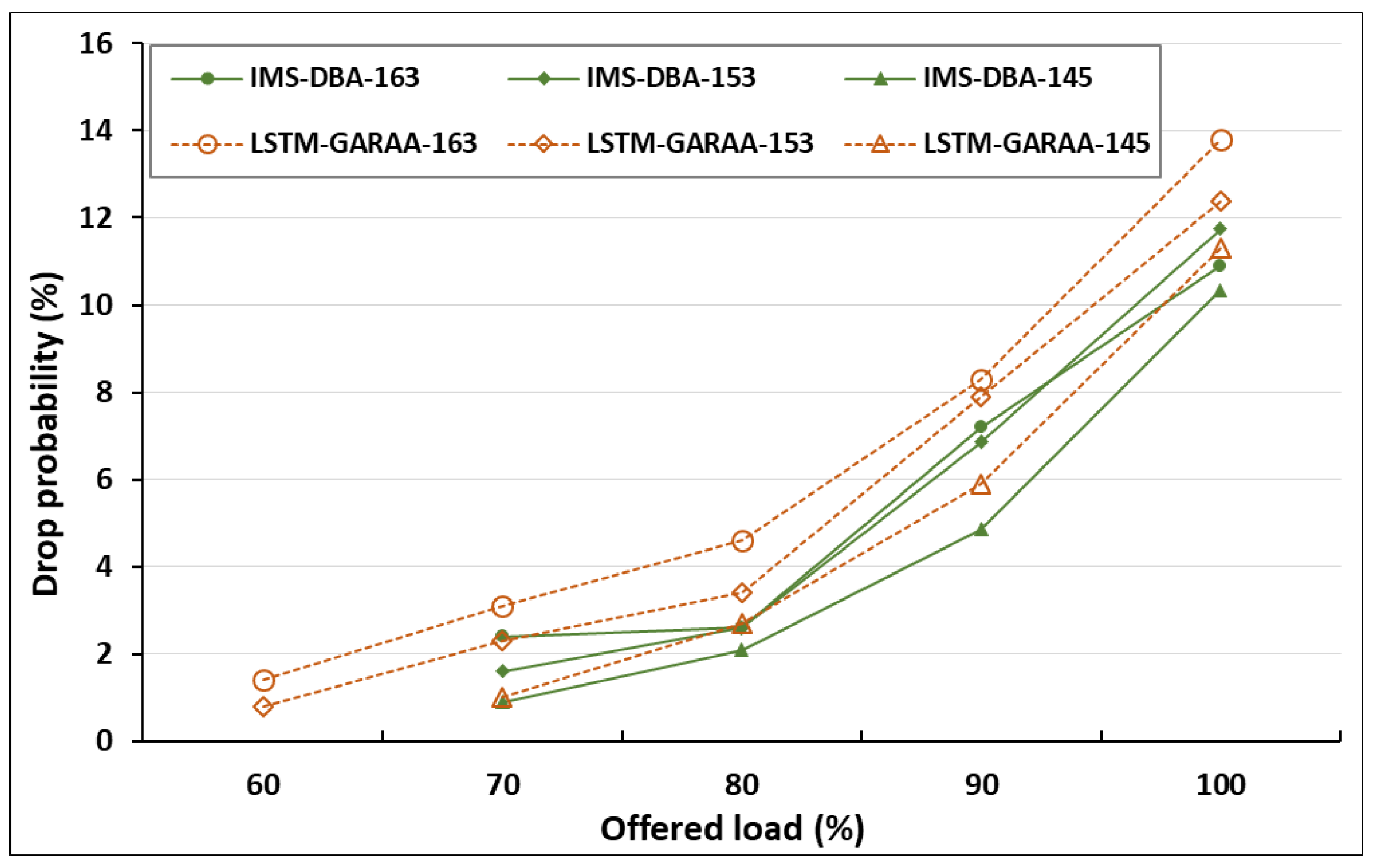

6.2. Packet Drop

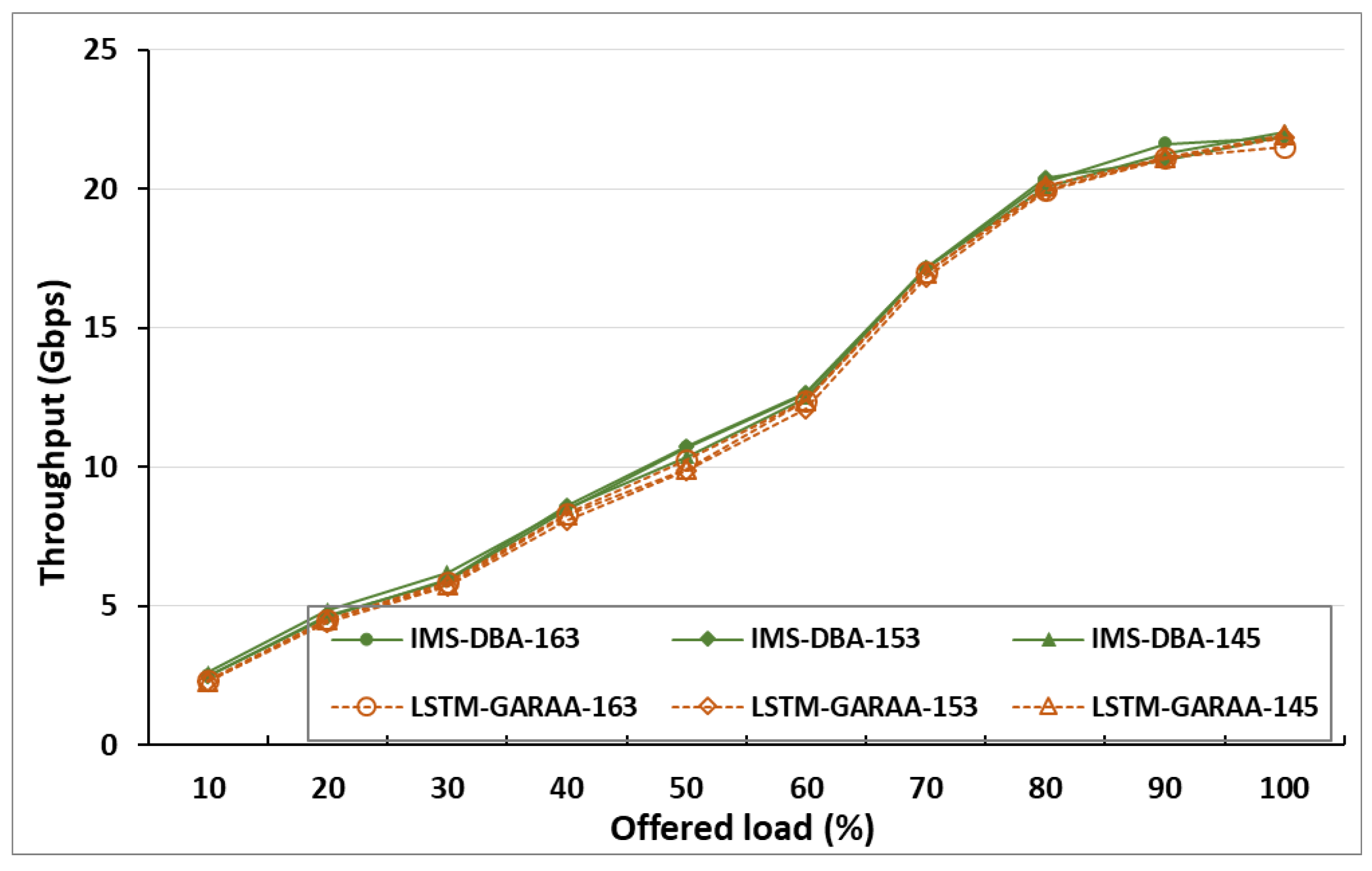

6.3. System Throughput

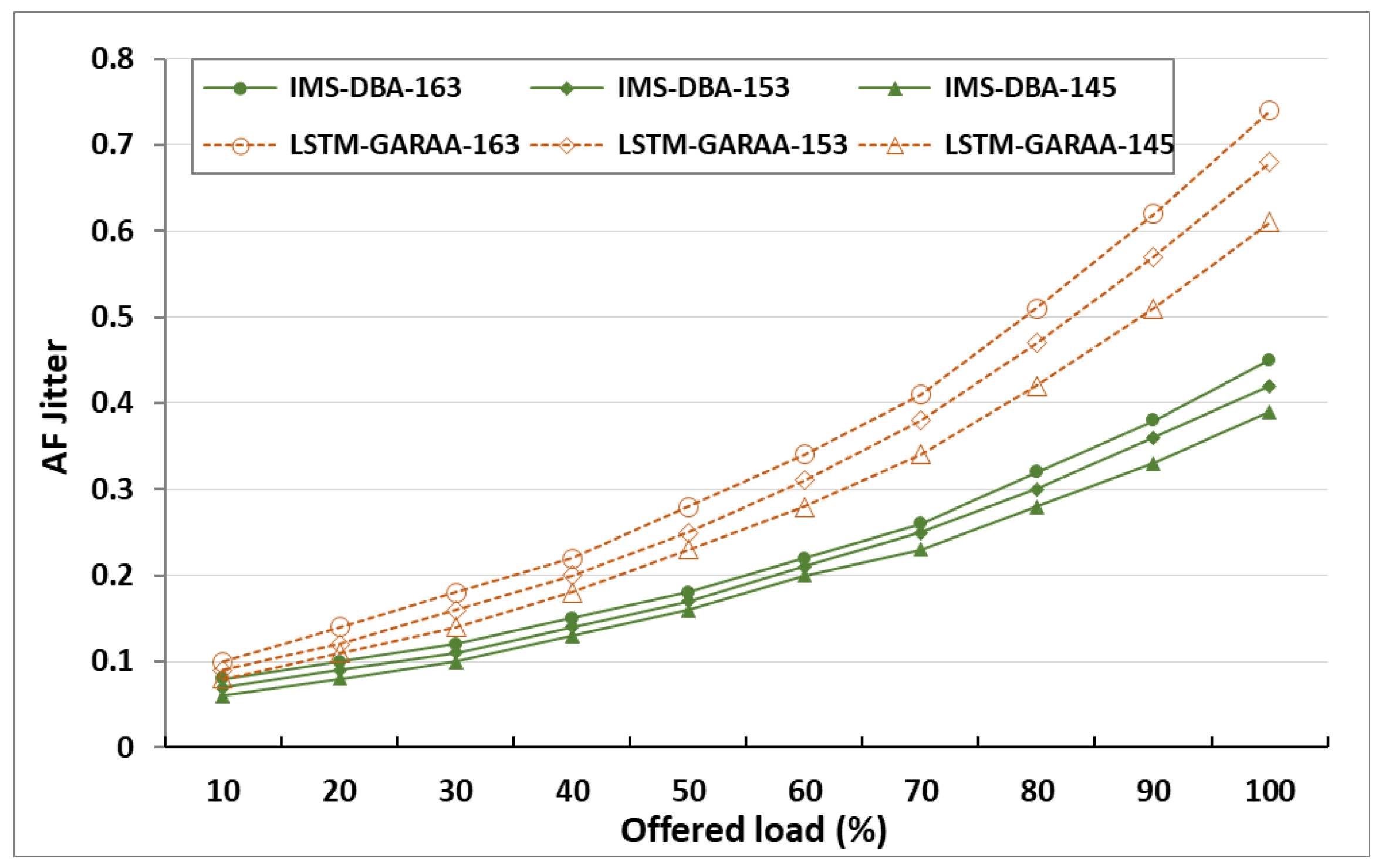

6.4. Jitter

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AF | Assured Forwarding |
| ANN | Artificial Neural Network |
| AR | Augmented Reality |
| B5G | Beyond 5G |
| B5G-BS | Beyond 5G Base Station |
| BE | Best Effort |
| DBA | Dynamic Bandwidth Allocation |
| EF | Expedited Forwarding |
| FPGA | Field-Programmable Gate Array |
| FWA | Fixed Wireless Access |
| HCI | Human–Computer Interaction |
| ICT | Information and Communication Technology |
| IM | Immersive Media |
| IVOD | Immersive VoD |
| LLID | Logical Link Identifier |
| MAC | Media Access Control |
| MCRS | Multi-Channel Reconciliation Sublayer |
| ML | Machine Learning |
| MPCP | Multi-Point Control Protocol |
| NG-EPON | Next-Generation EPON |
| NNI | Network-to-Network Interface |
| OAM | Operations, Administration, and Maintenance |
| OAN | Optical Access Network |
| ODN | Optical Distribution Network |
| ONU | Optical Network Unit |
| PSC | Passive Optical Splitter-Combiners |
| SDN | Software-Defined Networking |
| SD-OLT | Software-Defined Optical Line Terminal |
| SD-ONU | Software-Defined Optical Network Unit |
| TDMA | Time Division Multiple Access |
| TW | Transmission Window |
| UNI | User-to-Network Interface |
| VOD | Video on Demand |
| VR | Virtual Reality |
| XR | Extended Reality |

References

1. Partarakis, N.; Zabulis, X. A review of immersive technologies, knowledge representation, and AI for human-centered digital experiences. Electronics 2024, 13, 269.
2. The Brainy Insights. Spatial Computing Market Forecast 2024 to 2033. Market Research Report TBI-14188, March 2024. Available online: https://www.thebrainyinsights.com/report/spatial-computing-market-14188#:~:text=As%20per%20The%20Brainy%20Insights,USD%20815.2%20billion%20by%202033 (accessed on 19 August 2024).
3. Saravanan, M.; Ajayan, J.; Parthasarathy, E.; Ramkumar, V. Architecture and Future Trends on Next Generation Networks. In Resource Management in Advanced Wireless Networks; Scrivener Publishing LLC: Beverly, MA, USA, 2025; pp. 19–43.
4. Chanclou, P.; Simon, G.; Saliou, F.; Wang, M.; Bolloré, A. Optical access solutions in support of 5G and beyond. J. Opt. Commun. Netw. 2023, 15, C48–C53.
5. Ranaweera, C.; Lim, C.; Tao, Y.; Edirisinghe, S.; Song, T.; Wosinska, L.; Nirmalathas, A. Design and deployment of optical x-haul for 5G, 6G, and beyond: Progress and challenges. J. Opt. Commun. Netw. 2023, 15, D56–D66.
6. Hwang, I.-S.; Nikoukar, A.; Teng, C.-H.; Lai, K.R. Scalable architecture for VOD service enhancement based on a cache scheme in an Ethernet passive optical network. J. Opt. Commun. Netw. 2013, 5, 271–282.
7. Wang, J.; Qiao, C.; Li, Y.; Lu, K. On guaranteed VoD services in next generation optical access networks. IEEE J. Sel. Areas Commun. 2010, 28, 875–888.
8. Chitimalla, D.; Tornatore, M.; Lee, S.-S.; Lee, H.-H.; Park, S.; Chung, H.S.; Mukherjee, B. QoE enhancement schemes for video in converged OFDMA wireless networks and EPONS. J. Opt. Commun. Netw. 2018, 10, 229–239.
9. Shu, Z.; Deming, L.; Guangsheng, W. Research on Convergence Network of EPON and WiMAX Based on ROF. ZTE Commun. 2020, 8, 56–58.
10. Ruan, L.; Dias, M.P.; Wong, E. Enhancing latency performance through intelligent bandwidth allocation decisions: A survey and comparative study of machine learning techniques. J. Opt. Commun. Netw. 2020, 12, B20–B32.
11. Garfias, P.; Gutiérrez, L.; Sallent, S. Enhanced DBA to provide QoS to coexistent EPON and 10G-EPON networks. J. Opt. Commun. Netw. 2012, 4, 978–988.
12. Cao, B.; Zheng, X.; Yuan, K.; Qin, D.; Hong, Y. Dynamic bandwidth allocation based on adaptive predictive for low latency communications in changing passive optical networks environment. Opt. Fiber Technol. 2021, 64, 102556.
13. Fadlullah, Z.M.; Nishiyama, H.; Kato, N.; Ujikawa, H.; Suzuki, K.-I.; Yoshimoto, N. Smart FiWi networks: Challenges and solutions for QoS and green communications. IEEE Intell. Syst. 2013, 28, 86–91.
14. Hanaya, N.; Nakayama, Y.; Yoshino, M.; Suzuki, K.-I.; Kubo, R. Remotely controlled XG-PON DBA with linear prediction for flexible access system architecture. In Proceedings of the Optical Fiber Communication Conference, San Diego, CA, USA, 11–15 March 2018; p. Tu3L.1.
15. Nishimoto, K.; Tadokoro, M.; Fujiwara, T.; Yamada, T.; Tanaka, T.; Takeda, A.; Inoue, T. Predictive dynamic bandwidth allocation based on the correlation of the bi-directional traffic for cloud-based virtual PON-OLT. In Proceedings of the 2017 IEEE International Workshop Technical Committee on Communications Quality and Reliability (CQR), Naples, FL, USA, 16–18 May 2017; pp. 1–6.
16. Hernández, J.A.; Ebrahimzadeh, A.; Maier, M.; Larrabeiti, D. Learning EPON delay models from data: A machine learning approach. J. Opt. Commun. Netw. 2021, 13, 322–330.
17. Nikoukar, A.; Goudarzi, H.; Rezaei, H.; Hwang, I.-S. A predictive TDM-PON resource allocation using the ANN method based on equilibrium points of discrete dynamical systems. Opt. Fiber Technol. 2023, 81, 103587.
18. Ruan, L.; Dias, M.P.I.; Wong, E. Machine learning-based bandwidth prediction for low-latency H2M applications. IEEE Internet Things J. 2019, 6, 3743–3752.
19. IEEE 802.3ca-2020; IEEE Standard for Ethernet Amendment 9: Physical Layer Specifications and Management Parameters for 25 Gb/s and 50 Gb/s Passive Optical Network. IEEE: New York, NY, USA, 2020.
20. Chien, W.-C.; Lai, C.-F.; Chao, H.-C. Dynamic resource prediction and allocation in C-RAN with edge artificial intelligence. IEEE Trans. Ind. Inform. 2019, 15, 4306–4314.

| Reference Paper | Method/Technique | ML-Based |
|---|---|---|
| [13] | Constant bit rate | No |
| [14] | Packet arrival time | No |
| [15] | Regression model | No |
| [16] | Supervised learning | Yes |
| [17] | ANN through a discrete dynamical system | Yes |
| [18] | ANN through MLP | Yes |
| [Our technique] | ANN through backpropagation | Yes |

| Parameter | Value |
|---|---|
| Number of SD-ONUs | 32 |
| Number of wavelengths | 1 |
| Downstream and upstream data rate | 25 Gb/s and 10 Gb/s |
| Uniform distance from SD-OLT to SD-ONU | 20 km |
| Buffer size of ONU | 10 Mb |
| Max. transmission cycle time | 1.0 ms |
| Guard time | 5 µs |
| IMS-DBA computation time | 20 µs |
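To give a feel for what these parameters imply, the short calculation below estimates the upstream capacity available in one maximum-length cycle. It is a back-of-the-envelope sketch under assumptions that the table does not state: each of the 32 SD-ONUs is charged one guard interval per cycle, the single 10 Gb/s upstream wavelength is shared equally, and the IMS-DBA computation time is either fully hidden or fully subtracted from the cycle. Control-message overhead is ignored. Integer units (microseconds and bits per microsecond) keep the arithmetic exact.

```python
# Values from the simulation parameter table, expressed in integer units.
NUM_ONUS = 32
UPSTREAM_BITS_PER_US = 10_000   # 10 Gb/s = 10,000 bits per microsecond
CYCLE_US = 1_000                # 1.0 ms maximum transmission cycle time
GUARD_US = 5                    # guard time per SD-ONU transmission (assumed one per cycle)
DBA_COMPUTE_US = 20             # IMS-DBA computation time


def usable_cycle_us(num_onus: int, cycle_us: int, guard_us: int, dba_us: int = 0) -> int:
    """Cycle time left for payload after guard intervals and optional DBA time."""
    return cycle_us - num_onus * guard_us - dba_us


def per_onu_bits(usable_us: int, num_onus: int, bits_per_us: int) -> int:
    """Equal share of the usable upstream capacity, in bits per cycle."""
    return usable_us * bits_per_us // num_onus


best_case_us = usable_cycle_us(NUM_ONUS, CYCLE_US, GUARD_US)                    # 840
worst_case_us = usable_cycle_us(NUM_ONUS, CYCLE_US, GUARD_US, DBA_COMPUTE_US)   # 820
best_case_bits = per_onu_bits(best_case_us, NUM_ONUS, UPSTREAM_BITS_PER_US)     # 262500
worst_case_bits = per_onu_bits(worst_case_us, NUM_ONUS, UPSTREAM_BITS_PER_US)   # 256250
```

Under these assumptions, guard time alone consumes 160 µs of every 1 ms cycle, leaving each SD-ONU an equal share of roughly 0.26 Mb per cycle.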

| Scenario | EF (%) | AF (%) | BE (%) |
|---|---|---|---|
| IMS-DBA/LSTM-GARAA (case163) | 10 | 60 | 30 |
| IMS-DBA/LSTM-GARAA (case153) | 15 | 50 | 35 |
| IMS-DBA/LSTM-GARAA (case145) | 10 | 40 | 50 |

| Parameter | Value/Description |
|---|---|
| Dataset | A historical record of 10,000 transmission cycles |
| Dataset Partition | 70% for training, 30% for testing |
| Learning Rate | 0.001 |
| Batch Size | 64 |
| Epochs | 50 |
| Loss Function | Mean Squared Error (MSE) |
| Regularization | L2 regularization with a penalty coefficient of 0.001 |
| Sliding Window Size | 5 |
| Normalization | Scaled to [0, 1] |
| Sensitivity Factor (α) | Tunable parameter, varied from 0.1 to 0.5 |
| Threshold Updates | Every 20 cycles |
| Feedback Adjustment | Based on actualUsage and BWalloc |
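The data-preparation settings in this table (window size 5, scaling to [0, 1], 70%/30% partition) can be illustrated with a small sketch. It assumes min-max scaling, one-step-ahead targets, and a chronological split without shuffling; none of these details is stated in the table, and the function names are hypothetical.

```python
from typing import List, Tuple

Samples = Tuple[List[List[float]], List[float]]  # (feature windows, targets)


def min_max_normalize(series: List[float]) -> List[float]:
    """Scale a series to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(series), max(series)
    if hi == lo:
        return [0.0 for _ in series]
    return [(v - lo) / (hi - lo) for v in series]


def sliding_windows(series: List[float], window: int = 5) -> Samples:
    """Pair each run of `window` past values with the value that follows it."""
    features, targets = [], []
    for t in range(window, len(series)):
        features.append(series[t - window:t])
        targets.append(series[t])
    return features, targets


def chronological_split(features: List[List[float]], targets: List[float],
                        train_percent: int = 70) -> Tuple[Samples, Samples]:
    """Keep the first train_percent of samples for training, the rest for testing."""
    cut = len(features) * train_percent // 100
    return (features[:cut], targets[:cut]), (features[cut:], targets[cut:])


# Toy bandwidth history of 17 cycles (the paper's dataset has 10,000 cycles).
history = [float(v) for v in range(17)]
normalized = min_max_normalize(history)
features, targets = sliding_windows(normalized, window=5)
train, test = chronological_split(features, targets, train_percent=70)
# 12 samples in total: 8 for training and 4 for testing.
```

A chronological split is used here so that test windows never precede training windows in time; a shuffled split would be an equally plausible reading of the table.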


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kharga, R.; Nikoukar, A.; Hwang, I.-S. Intelligent Resource Allocation for Immersive VoD Multimedia in NG-EPON and B5G Converged Access Networks. Photonics 2025, 12, 528. https://doi.org/10.3390/photonics12060528
