Design of an Underwater Optical Communication System Based on RT-DETRv2

Abstract

1. Introduction

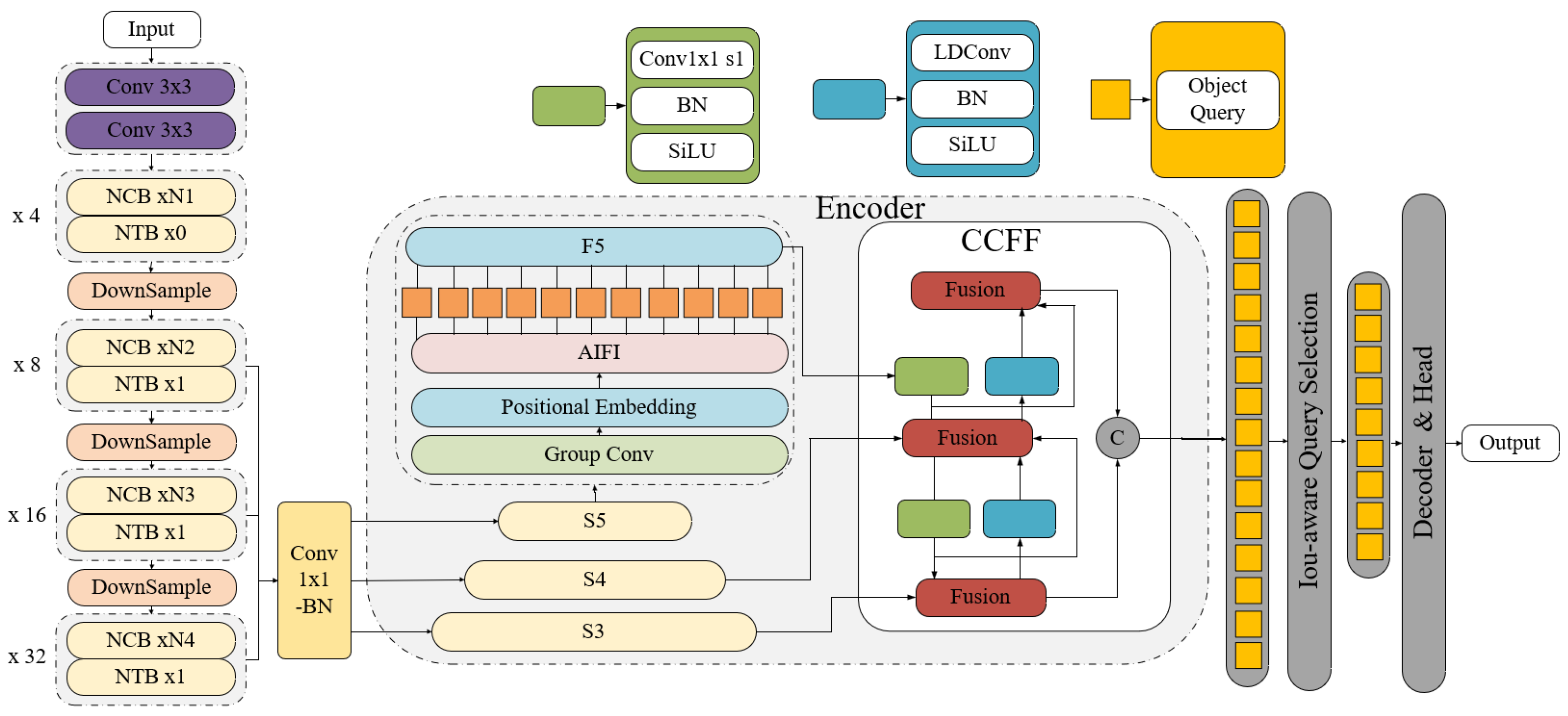

- An end-to-end detector retrofit for underwater wireless optical communication (UWOC) is proposed, in which the Next Vision Transformer (Next-ViT) model [18] replaces the Residual Neural Network (ResNet) [19] backbone. Under strong backscatter, local saturation, and small-spot conditions, this improves detection robustness and localization stability while maintaining end-to-end inference speed.

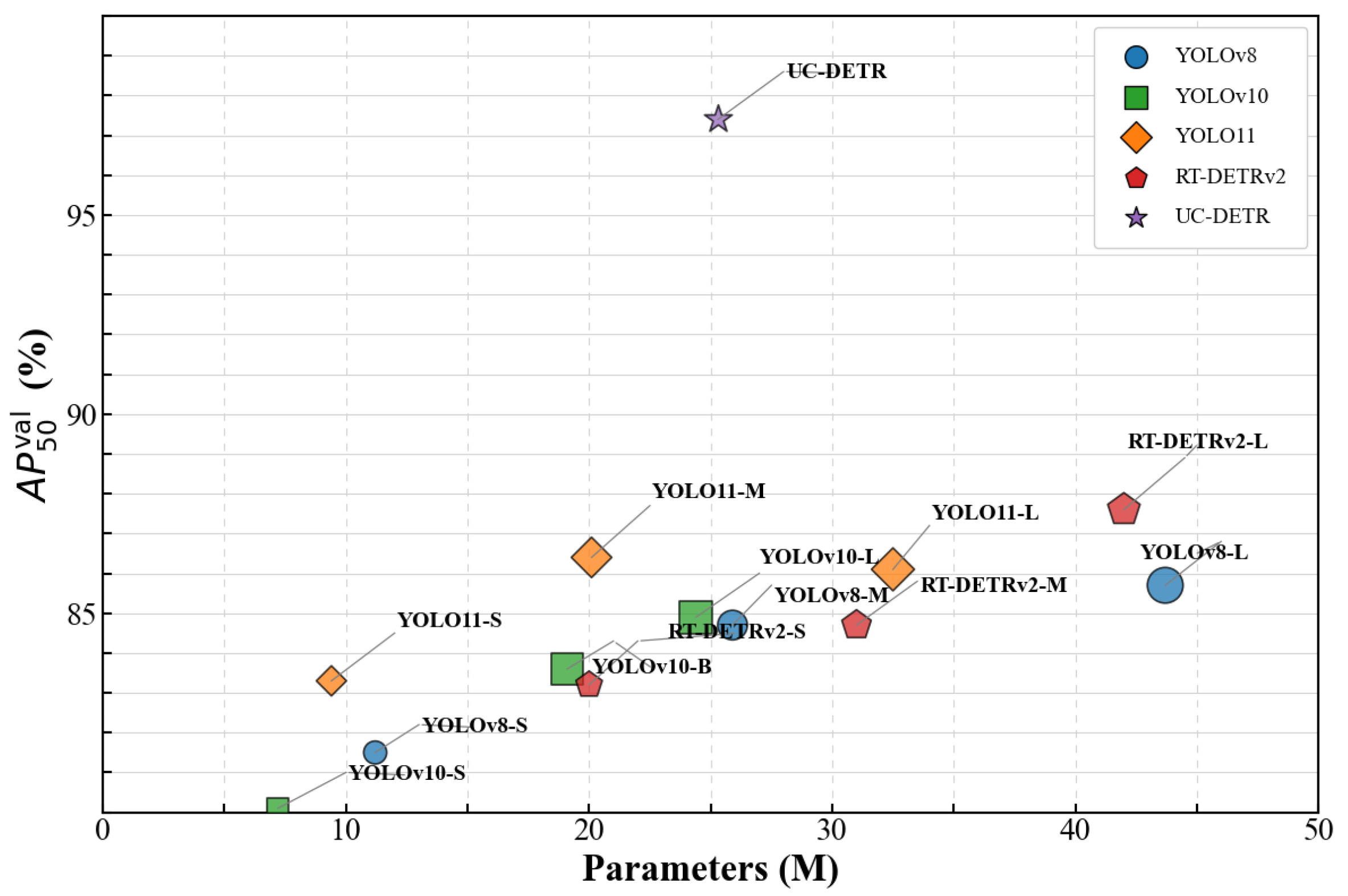

- A hybrid encoder enhanced with Linear Deformable Convolution (LDConv) [20] was developed, together with a dedicated underwater light-spot dataset (11,390 images covering a 15–40 m transmission range, ±45° deflection, and three illumination conditions: noon, evening, and late night). On the test set of this dataset, the improved model achieves AP50 = 97.4%, which is 12.7 percentage points above the RT-DETRv2-M baseline.

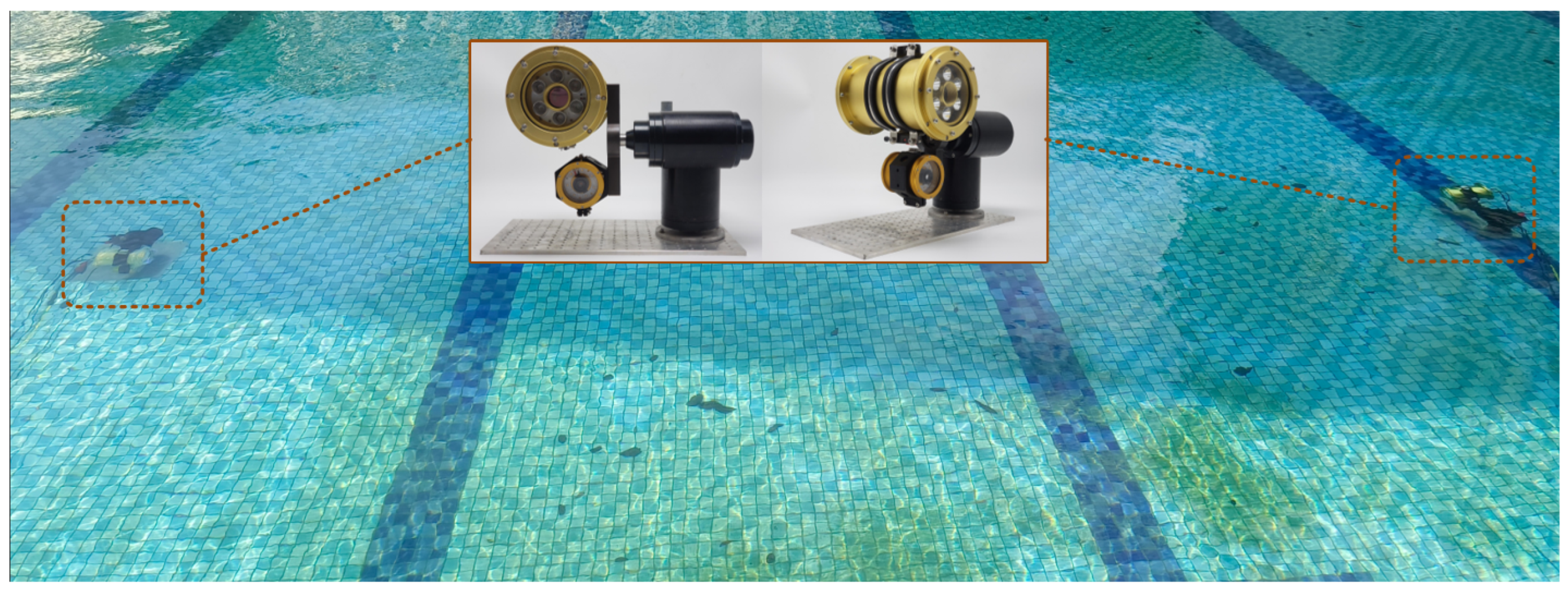

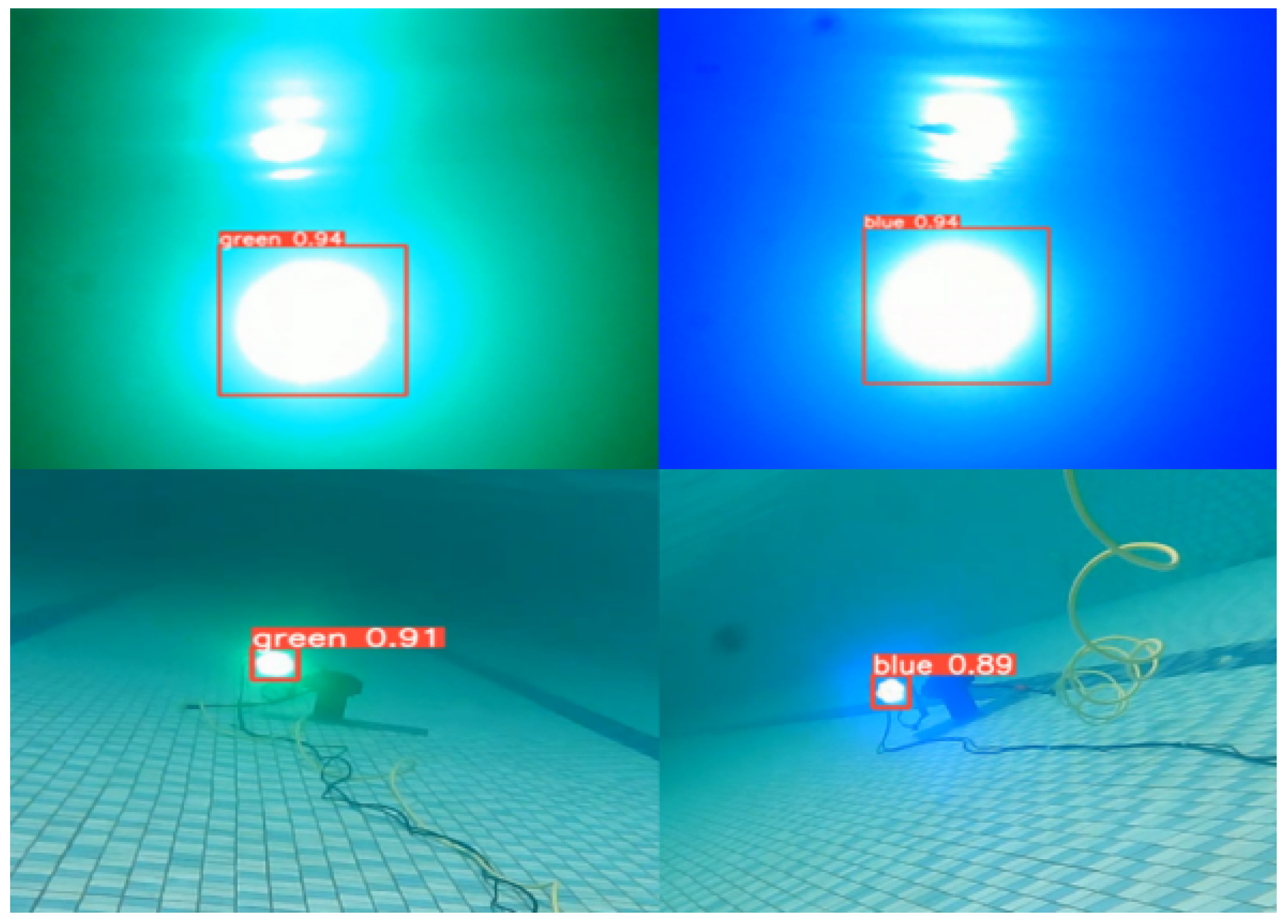

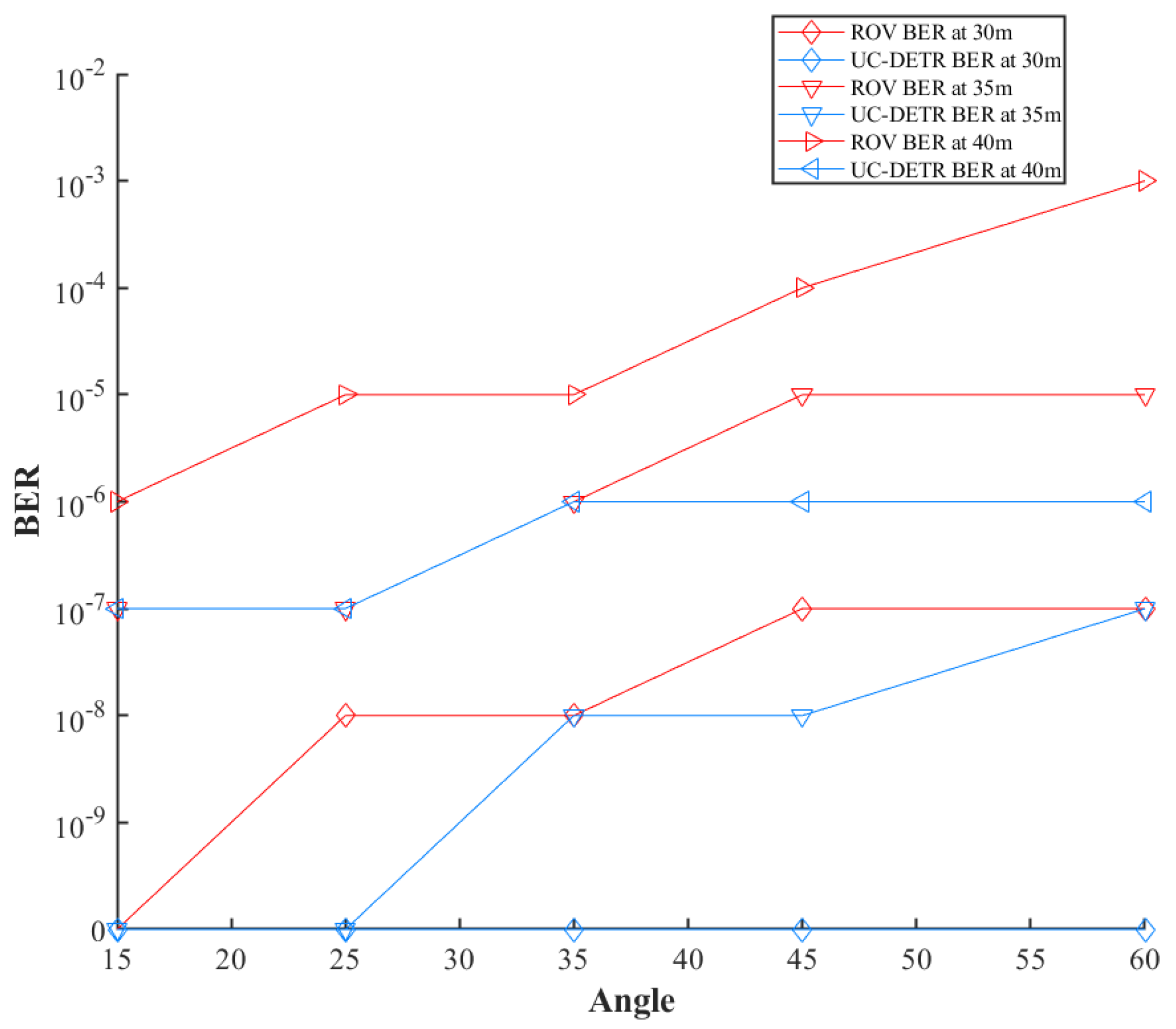

- A UWOC prototype with automatic alignment was built: a CCD camera acquires light-spot images in real time, and the improved RT-DETRv2 outputs the spot center online. The host converts the center offset to a deflection angle via perspective projection and, combined with real-time optical power readings, adjusts the attitude for closed-loop alignment. In multi-angle alignment experiments in a pool, error-free transmission was achieved over 30 m, and the bit error rate (BER) at 40 m remained in the 10⁻⁷ to 10⁻⁶ range.

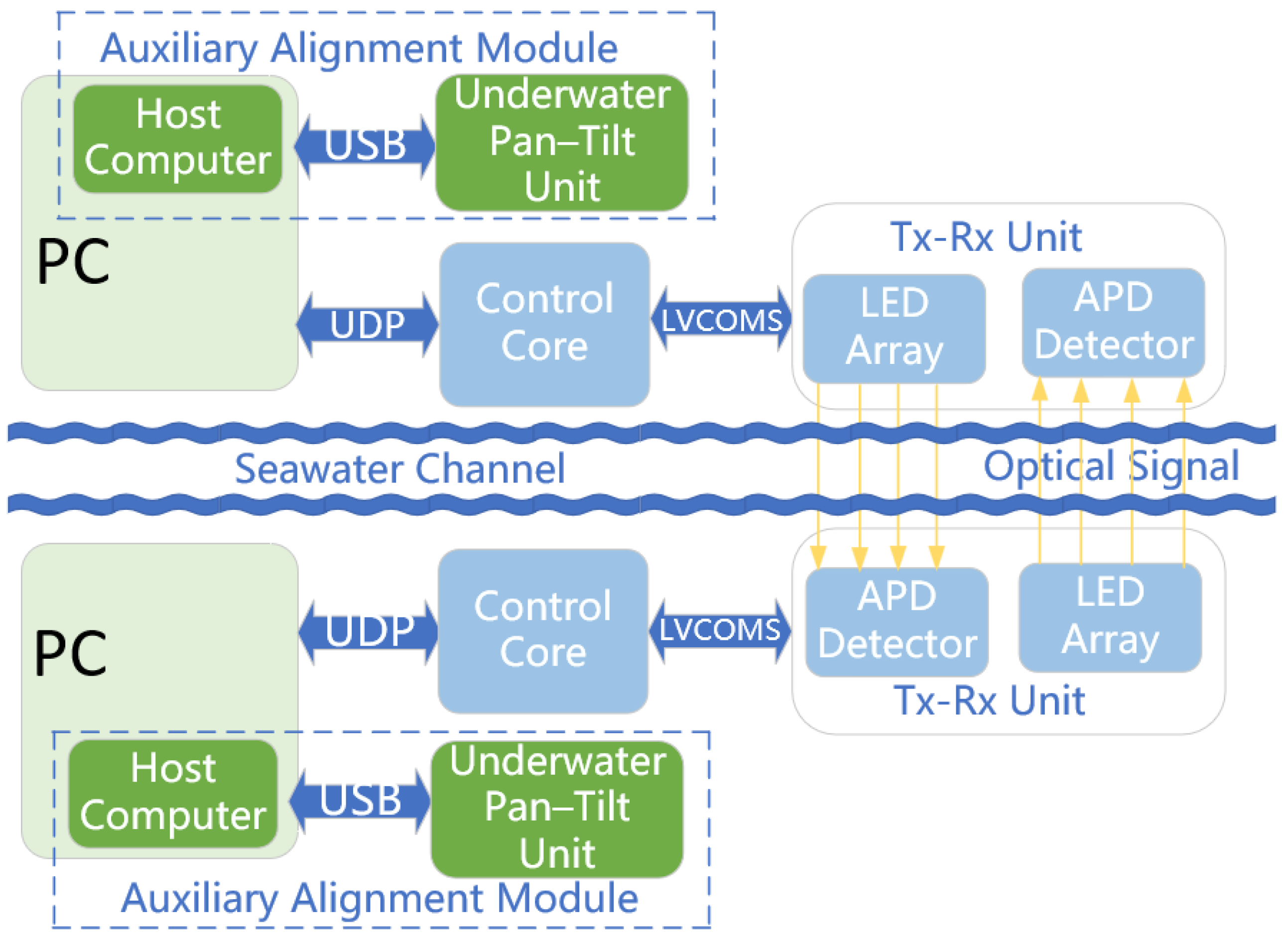
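The offset-to-angle conversion described in the last contribution can be sketched with an ideal pinhole (perspective projection) model. This is a minimal illustration, not the authors' implementation: the function name, the focal length, the pixel pitch, and the assumption that the principal point lies at the image center are all hypothetical, and refraction at the underwater housing window is ignored.

```python
import math


def offset_to_deflection(spot_center, image_size, focal_length_mm, pixel_pitch_um):
    """Convert a detected spot center (in pixels) to yaw and pitch angles (in degrees).

    Assumes an ideal pinhole camera whose principal point is the image center.
    All parameters are illustrative; none are taken from the paper.
    """
    u, v = spot_center
    width, height = image_size

    # Hypothetical principal point: the geometric center of the image.
    cx, cy = width / 2.0, height / 2.0

    # Pixel offset -> metric offset on the sensor plane.
    pitch_mm = pixel_pitch_um / 1000.0
    dx_mm = (u - cx) * pitch_mm
    dy_mm = (v - cy) * pitch_mm

    # Perspective projection: the offset on the sensor and the focal length
    # form a right triangle whose angle is the optical-axis deflection.
    yaw_deg = math.degrees(math.atan2(dx_mm, focal_length_mm))
    # Image rows grow downward, so negate to make "up" a positive pitch.
    pitch_deg = math.degrees(math.atan2(-dy_mm, focal_length_mm))
    return yaw_deg, pitch_deg


if __name__ == "__main__":
    # Hypothetical 4000 x 3000 sensor, 8 mm lens, 5 um pixels.
    print(offset_to_deflection((2400.0, 1500.0), (4000, 3000), 8.0, 5.0))
```

A spot detected at the image center yields zero deflection in both axes. The sign convention (positive yaw to the right, positive pitch upward) is a choice made for this sketch; a real system would also need a calibrated principal point and lens-distortion correction.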

2. Underwater Optical Communication System Design

2.1. Auxiliary Alignment Module

2.1.1. UC-DETR Model Design

2.1.2. Optical Axis Deflection Calculation

2.2. Control Core Module

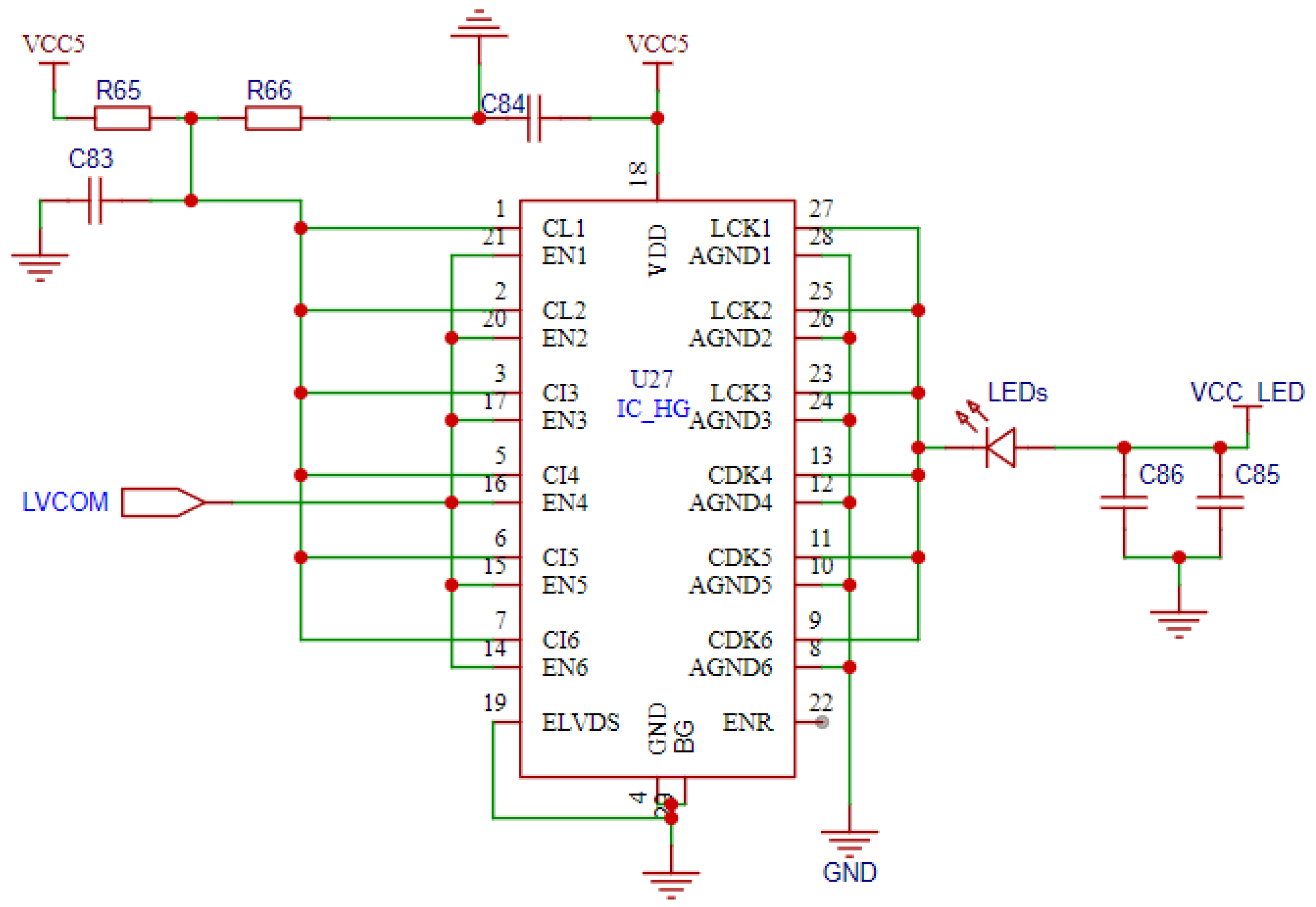

2.3. LED Array Module

2.4. APD Receiver Module

3. Experimental Test and Analysis

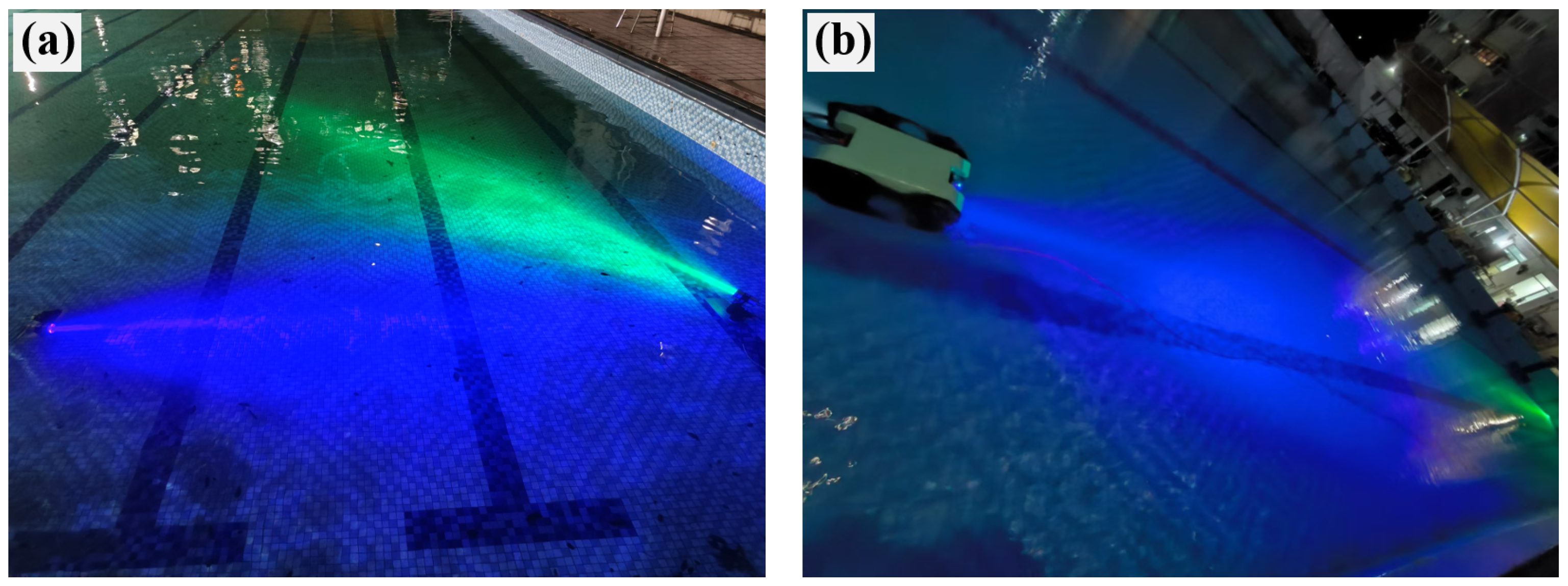

3.1. Data Set Construction

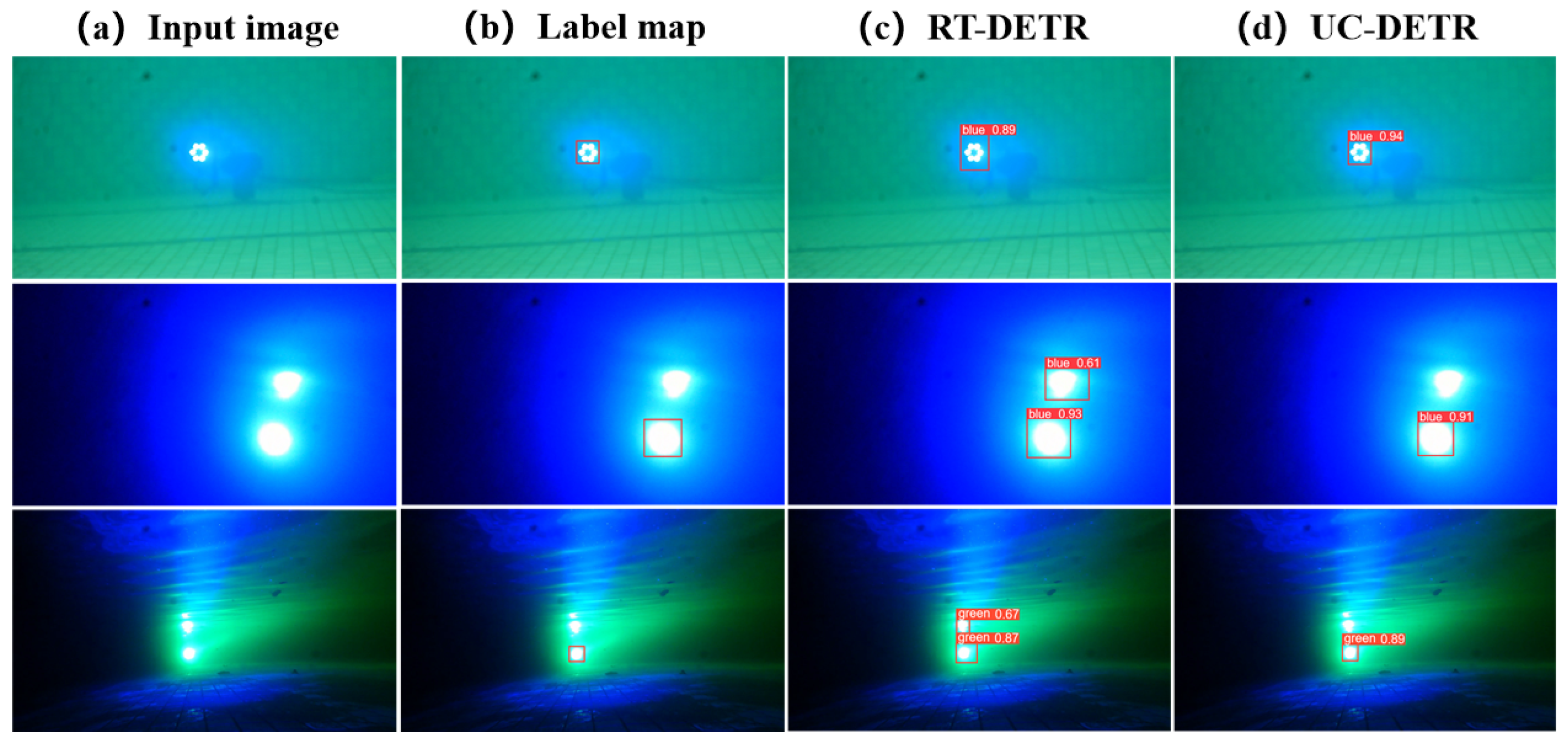

3.2. UC-DETR Comparative Analysis

3.3. UC-DETR Ablation Experiment

3.4. Underwater Optical Communication System Swimming Pool Experiment

3.5. Swimming Pool Communication Experiment Analysis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Zeng, Z.; Fu, S.; Zhang, H.; Dong, Y.; Cheng, J. A Survey of Underwater Optical Wireless Communications. IEEE Commun. Surv. Tutor. 2017, 19, 204–238.

- [2] Gabriel, C.; Khalighi, M.-A.; Bourennane, S.; Léon, P.; Rigaud, V. Monte-Carlo-Based Channel Characterization for Underwater Optical Communication Systems. J. Opt. Commun. Netw. 2013, 5, 1–12.

- [3] Lv, Z.; He, G.; Yang, H.; Chen, R.; Li, Y.; Zhang, W.; Qiu, C.; Liu, Z. The Investigation of Underwater Wireless Optical Communication Links Using the Total Reflection at the Air–Water Interface in the Presence of Waves. Photonics 2022, 9, 525.

- [4] Cui, N.; Liu, Y.; Chen, X.; Wang, Y. Active Disturbance Rejection Controller of Fine Tracking System for Free Space Optical Communication. Proc. SPIE 2013, 8906, 890613.

- [5] Ji, X.; Yin, H.; Jing, L.; Liang, Y.; Wang, J. Analysis of Aperture Averaging Effect and Communication System Performance of Wireless Optical Channels with Weak to Strong Turbulence in Natural Turbid Water. SSRN Electron. J. 2022.

- [6] Palitharathna, K.W.S.; Suraweera, H.A.; Godaliyadda, R.I.; Herath, V.R.; Thompson, J.S. Average Rate Analysis of Cooperative NOMA Aided Underwater Optical Wireless Systems. IEEE Open J. Commun. Soc. 2021, 2, 2292–2310.

- [7] Elamassie, M.; Al-Nahhal, M.; Kizilirmak, R.C.; Uysal, M. Transmit Laser Selection for Underwater Visible Light Communication Systems. In Proceedings of the 2019 IEEE 30th Annual International Symposium on Personal, Indoor and Mobile Radio Communications (PIMRC), Istanbul, Turkey, 8–11 September 2019; pp. 1–6.

- [8] Yousif, B.B.; Elsayed, E.E.; Alzalabani, M.M. Atmospheric Turbulence Mitigation Using Spatial Mode Multiplexing and Modified Pulse Position Modulation in Hybrid RF/FSO Orbital-Angular-Momentum Multiplexed Based on MIMO Wireless Communications System. Opt. Commun. 2019, 436, 197–208.

- [9] Zhang, D.; N’Doye, I.; Ballal, T.; Al-Naffouri, T.Y.; Alouini, M.-S.; Laleg-Kirati, T.-M. Localization and Tracking Control Using Hybrid Acoustic–Optical Communication for Autonomous Underwater Vehicles. IEEE Internet Things J. 2020, 7, 10048–10060.

- [10] Zhao, M.; Li, X.; Chen, X.; Tong, Z.; Lyu, W.; Zhang, Z.; Xu, J. Long-Reach Underwater Wireless Optical Communication with Relaxed Link Alignment Enabled by Optical Combination and Arrayed Sensitive Receivers. Opt. Express 2020, 28, 34450–34460.

- [11] Zheng, Z.; Yin, H.; Wang, J.; Jing, L. A Laser Spot Tracking Algorithm for Underwater Wireless Optical Communication Based on Image Processing. In Proceedings of the 2021 13th International Conference on Communication Software and Networks (ICCSN), Chongqing, China, 4–7 June 2021; pp. 192–198.

- [12] Li, Y.; Sun, K.; Han, Z.; Lang, J.; Liang, J.; Wang, Z.; Xu, J. Deep Learning-Based Docking Scheme for Autonomous Underwater Vehicles with an Omnidirectional Rotating Optical Beacon. Drones 2024, 8, 697.

- [13] Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

- [14] Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

- [15] Jia, B.; Ge, W.; Cheng, J.; Du, Z.; Wang, R.; Song, G.; Zhang, Y.; Cai, C.; Qin, S.; Xu, J. Deep Learning–Based Cascaded Light Source Detection for Link Alignment in Underwater Wireless Optical Communication. IEEE Photonics J. 2024, 16, 7801512.

- [16] Kong, M.; Pan, Y.; Zhou, H.; Yu, R.; Le, X.; Yuan, H.; Wang, R.; Yang, Q. Deep Learning-Based Acquisition Pointing and Tracking for Underwater Wireless Optical Communication. IEEE Photonics Technol. Lett. 2025, 37, 555–558.

- [17] Lv, W.; Zhao, Y.; Chang, Q.; Huang, K.; Wang, G.; Liu, Y. RT-DETRv2: Improved Baseline with Bag-of-Freebies for Real-Time Detection Transformer. arXiv 2024, arXiv:2407.17140.

- [18] Li, J.; Xia, X.; Li, W.; Li, H.; Wang, X.; Xiao, X.; Rao, R.; Wang, M.; Pan, X. Next-ViT: Next Generation Vision Transformer for Efficient Deployment in Realistic Industrial Scenarios. arXiv 2022, arXiv:2207.05501.

- [19] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [20] Zhang, X.; Song, Y.; Song, T.; Yang, D.; Ye, Y.; Zhou, J.; Zhang, L. LDConv: Linear Deformable Convolution for Improving Convolutional Neural Networks. Image Vis. Comput. 2024, 149, 105190.

- [21] Zhang, M.; Zhou, H. Real-Time Underwater Wireless Optical Communication System Based on LEDs and Estimation of Maximum Communication Distance. Sensors 2023, 23, 7649.

- [22] Duntley, S.Q. Light in the Sea. J. Opt. Soc. Am. 1963, 53, 214–233.

- [23] Shen, T.; Guo, J.; Liang, H.; Li, Y.; Li, K.; Dai, Y.; Ai, Y. Research on a Blue–Green LED Communication System Based on an Underwater Mobile Robot. Photonics 2023, 10, 1238.

- [24] He, J.; Li, J.; Zhu, X.; Xiong, S.; Chen, F. Design and Analysis of an Optical–Acoustic Cooperative Communication System for an Underwater Remote-Operated Vehicle. Appl. Sci. 2022, 12, 5533.

| Model | Params (M) | GFLOPs | Latency (ms) | mAP (%) | AP50 (%) |
|---|---|---|---|---|---|
| YOLOv8-S | 11.2 | 28.6 | 7.07 | 62.8 | 81.5 |
| YOLOv8-M | 25.9 | 78.9 | 9.50 | 64.2 | 84.7 |
| YOLOv8-L | 43.7 | 165.2 | 12.39 | 65.9 | 85.7 |
| YOLOv10-S | 7.2 | 21.6 | 2.49 | 59.9 | 80.1 |
| YOLOv10-B | 19.1 | 92.0 | 4.74 | 63.3 | 83.6 |
| YOLOv10-L | 24.4 | 120.3 | 7.28 | 65.1 | 84.9 |
| YOLO11-S | 9.4 | 21.5 | 2.46 | 63.3 | 82.3 |
| YOLO11-M | 20.1 | 68.0 | 4.70 | 66.6 | 85.4 |
| YOLO11-L | 25.3 | 86.9 | 6.16 | 67.2 | 86.1 |
| RT-DETRv2-S | 20.0 | 60.0 | 4.58 | 64.7 | 82.3 |
| RT-DETRv2-M | 31.0 | 92.0 | 9.20 | 65.2 | 84.7 |
| RT-DETRv2-L | 42.0 | 136.0 | 13.71 | 66.3 | 87.4 |
| UC-DETR | 25.3 | 72.7 | 4.98 | 81.1 | 97.4 |
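The comparison table can be read more directly by converting latency to throughput and by computing the AP50 margin of UC-DETR over each competitor. The sketch below copies the latency, mAP, and AP50 columns from the table; the helper names are hypothetical, and the frames-per-second figure assumes that the reported latency is the full per-image inference time at batch size 1, which the table itself does not state.

```python
# (latency in ms, mAP, AP50) copied from the model comparison table.
RESULTS = {
    "YOLOv8-S": (7.07, 62.8, 81.5),
    "YOLOv8-M": (9.50, 64.2, 84.7),
    "YOLOv8-L": (12.39, 65.9, 85.7),
    "YOLOv10-S": (2.49, 59.9, 80.1),
    "YOLOv10-B": (4.74, 63.3, 83.6),
    "YOLOv10-L": (7.28, 65.1, 84.9),
    "YOLO11-S": (2.46, 63.3, 82.3),
    "YOLO11-M": (4.70, 66.6, 85.4),
    "YOLO11-L": (6.16, 67.2, 86.1),
    "RT-DETRv2-S": (4.58, 64.7, 82.3),
    "RT-DETRv2-M": (9.20, 65.2, 84.7),
    "RT-DETRv2-L": (13.71, 66.3, 87.4),
    "UC-DETR": (4.98, 81.1, 97.4),
}


def throughput_fps(latency_ms: float) -> float:
    """Frames per second implied by a per-image latency (assumes batch size 1)."""
    return 1000.0 / latency_ms


def ap50_gain(model: str, reference: str) -> float:
    """AP50 difference in percentage points, rounded to one decimal place."""
    return round(RESULTS[model][2] - RESULTS[reference][2], 1)


if __name__ == "__main__":
    for name, (latency_ms, _map, _ap50) in RESULTS.items():
        fps = throughput_fps(latency_ms)
        gain = ap50_gain("UC-DETR", name)
        print(f"{name:12s} {fps:6.1f} FPS   UC-DETR AP50 margin: {gain:+.1f}")
```

Calling `ap50_gain("UC-DETR", "RT-DETRv2-M")` reproduces the 12.7-point margin quoted in the contributions, which confirms that the figure is an absolute difference in AP50 rather than a relative improvement.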

| Model | Backbone | Channels | Conv | Params (M) | GFLOPs | Latency (ms) | mAP (%) | AP50 (%) |
|---|---|---|---|---|---|---|---|---|
| UC-DETR | ResNet18 | [64, 128, 256, 512] | LDConv | 20.3 | 60.4 | 4.67 | 65.8 | 84.7 |
| UC-DETR | ResNet50 | [256, 512, 1024, 2048] | LDConv | 31.4 | 92.5 | 9.39 | 66.5 | 87.2 |
| UC-DETR | Next-ViT | [96, 192, 384, 768] | Conv2D | 25.0 | 73.2 | 4.71 | 79.8 | 95.9 |
| UC-DETR | Next-ViT | [96, 192, 384, 768] | LDConv | 25.3 | 72.7 | 4.98 | 81.1 | 97.4 |
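The contribution of each component in the ablation table can be isolated by differencing pairs of rows that differ in only one factor. The sketch below does this for the convolution type (with the Next-ViT backbone fixed) and for the backbone (with the convolution type fixed). The numbers are copied from the table; the class and helper names are hypothetical, and the convolution label is spelled LDConv as in reference [20].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    backbone: str
    conv: str
    params_m: float
    gflops: float
    latency_ms: float
    m_ap: float
    ap50: float


# Rows copied from the ablation table.
VARIANTS = [
    Variant("ResNet18", "LDConv", 20.3, 60.4, 4.67, 65.8, 84.7),
    Variant("ResNet50", "LDConv", 31.4, 92.5, 9.39, 66.5, 87.2),
    Variant("Next-ViT", "Conv2D", 25.0, 73.2, 4.71, 79.8, 95.9),
    Variant("Next-ViT", "LDConv", 25.3, 72.7, 4.98, 81.1, 97.4),
]

METRICS = ("params_m", "gflops", "latency_ms", "m_ap", "ap50")


def find(backbone: str, conv: str) -> Variant:
    """Return the table row with the given backbone and convolution type."""
    return next(v for v in VARIANTS if v.backbone == backbone and v.conv == conv)


def delta(after: Variant, before: Variant) -> dict:
    """Per-metric change (after minus before), rounded to two decimals."""
    return {m: round(getattr(after, m) - getattr(before, m), 2) for m in METRICS}


if __name__ == "__main__":
    full = find("Next-ViT", "LDConv")
    # Effect of swapping Conv2D for LDConv with the backbone held fixed.
    print("LDConv vs Conv2D:", delta(full, find("Next-ViT", "Conv2D")))
    # Effect of swapping ResNet50 for Next-ViT with LDConv held fixed.
    print("Next-ViT vs ResNet50:", delta(full, find("ResNet50", "LDConv")))
```

Read this way, most of the accuracy gain comes from the backbone swap (about 10.2 AP50 points over ResNet50 with LDConv), while LDConv adds a further 1.5 AP50 points on top of Next-ViT at a cost of 0.27 ms of latency.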

| Motor parameter | Value |
|---|---|
| Horizontal rotation angle | |
| Pitch angle | |
| Rotation speed | – |
| Rotation torque | |
| Operating temperature range | −10 °C to 40 °C |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liang, H.; Li, H.; Wu, M.; Zhang, J.; Ni, W.; Hu, B.; Ai, Y. Design of an Underwater Optical Communication System Based on RT-DETRv2. Photonics 2025, 12, 991. https://doi.org/10.3390/photonics12100991
