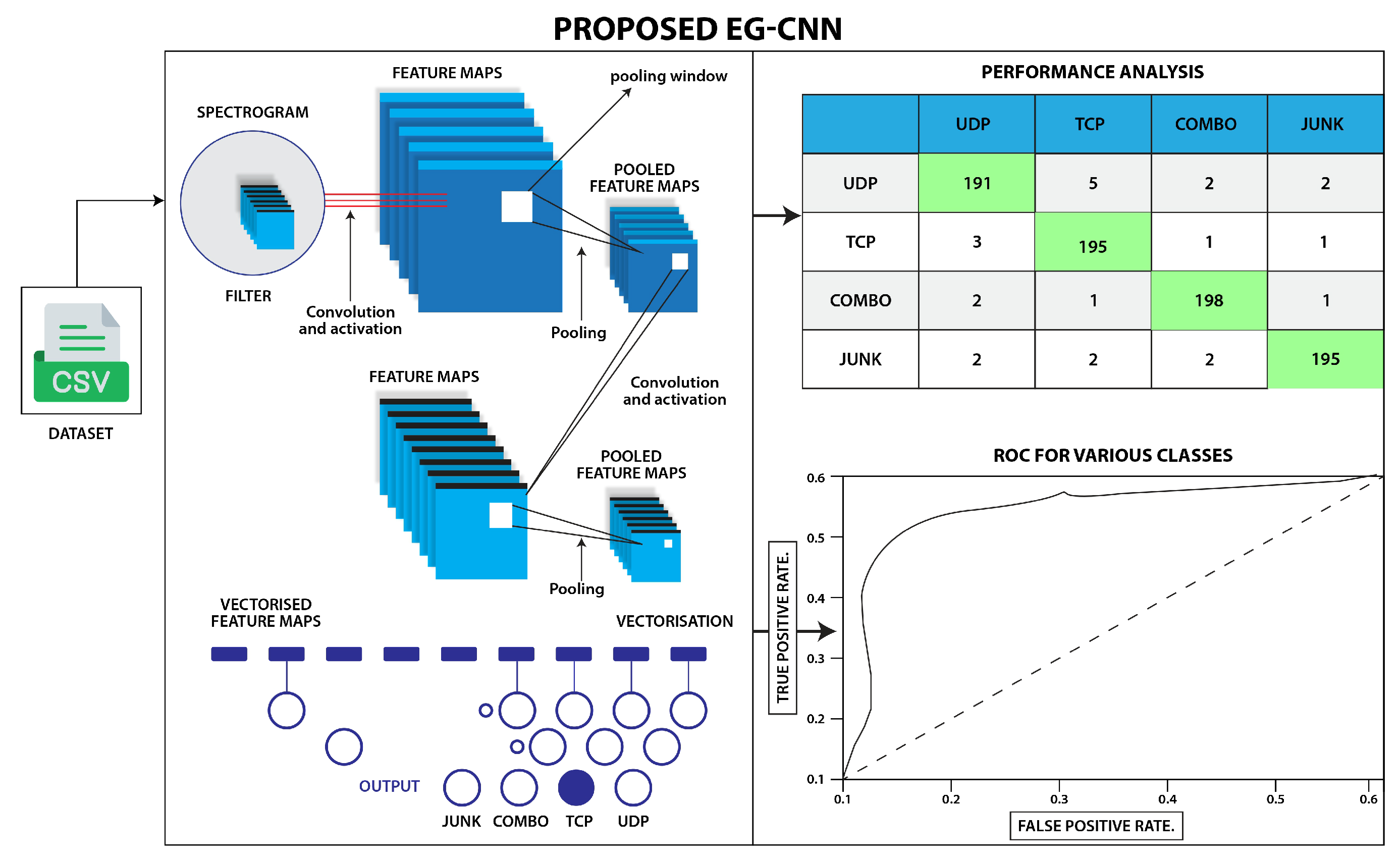

In response to these challenges, our research introduces the Explainable Internet of Things (XIoT) model, which is a novel detection system specifically designed for IoT environments. While applying Convolutional Neural Networks (CNNs) to IoT traffic for intrusion detection is a well-known technique, the XIoT model stands apart by introducing several key innovations. It harnesses the power of CNNs combined with Explainable AI (XAI) to provide deeper interpretability and transparency in the decision-making process, which is critical in real-world cybersecurity applications. This integration of CNNs with XAI ensures that both the spatial and temporal features of spectrogram images derived from IoT network traffic are efficiently analyzed, facilitating a more nuanced detection of cyber threats.

Beyond its technical capabilities, the XIoT model also improves upon existing models by enhancing interpretability, which is a critical aspect for cybersecurity practitioners. In complex IoT environments, understanding the nature and behavior of an attack is essential for implementing effective countermeasures. The XIoT model’s explainable intrusion detection decisions empower security analysts with actionable insights, facilitating faster and more accurate responses to evolving threats. This is a significant departure from the “black box” nature of many ML-based intrusion detection systems, which often leave analysts with limited understanding of the detection process.

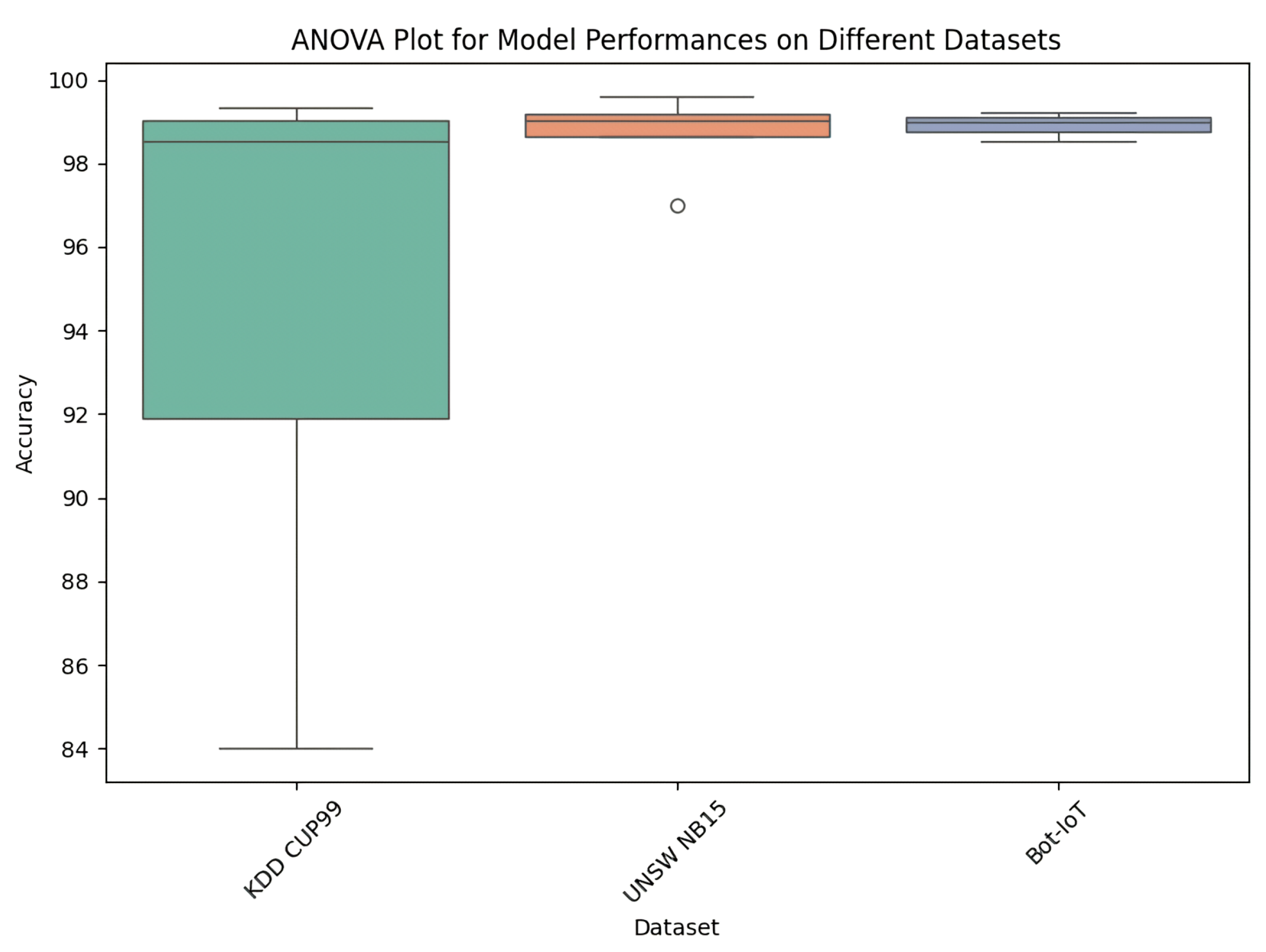

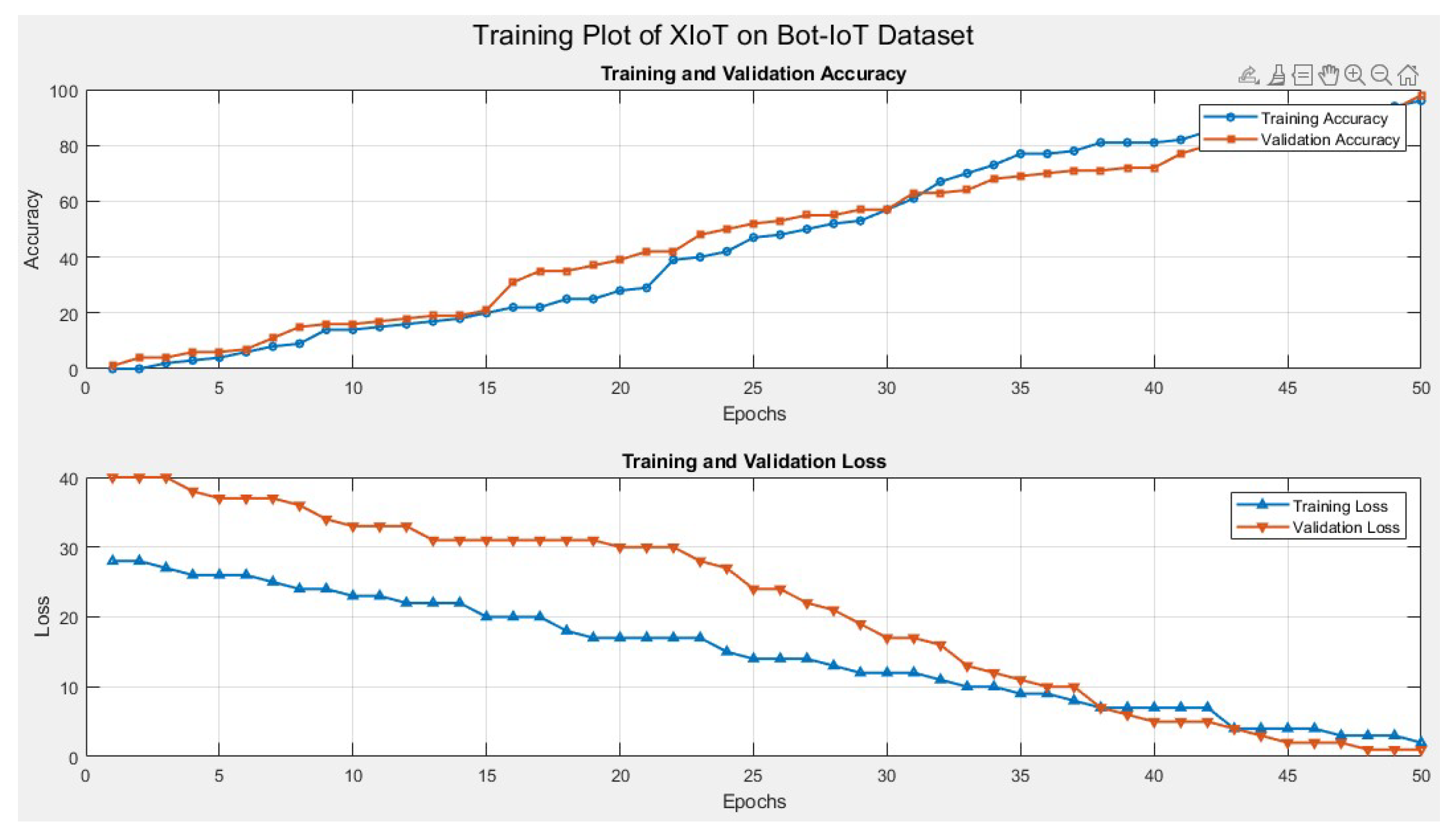

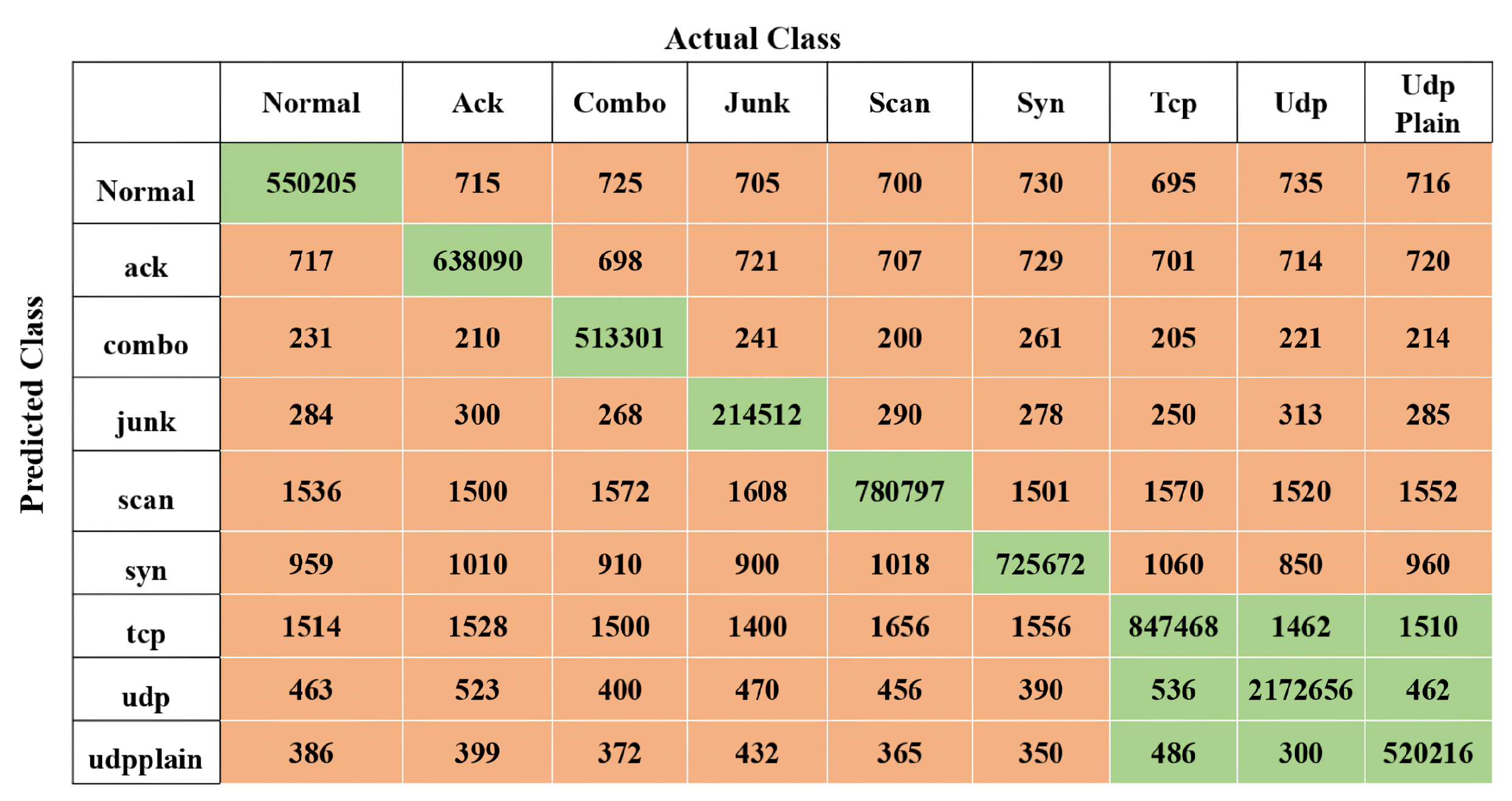

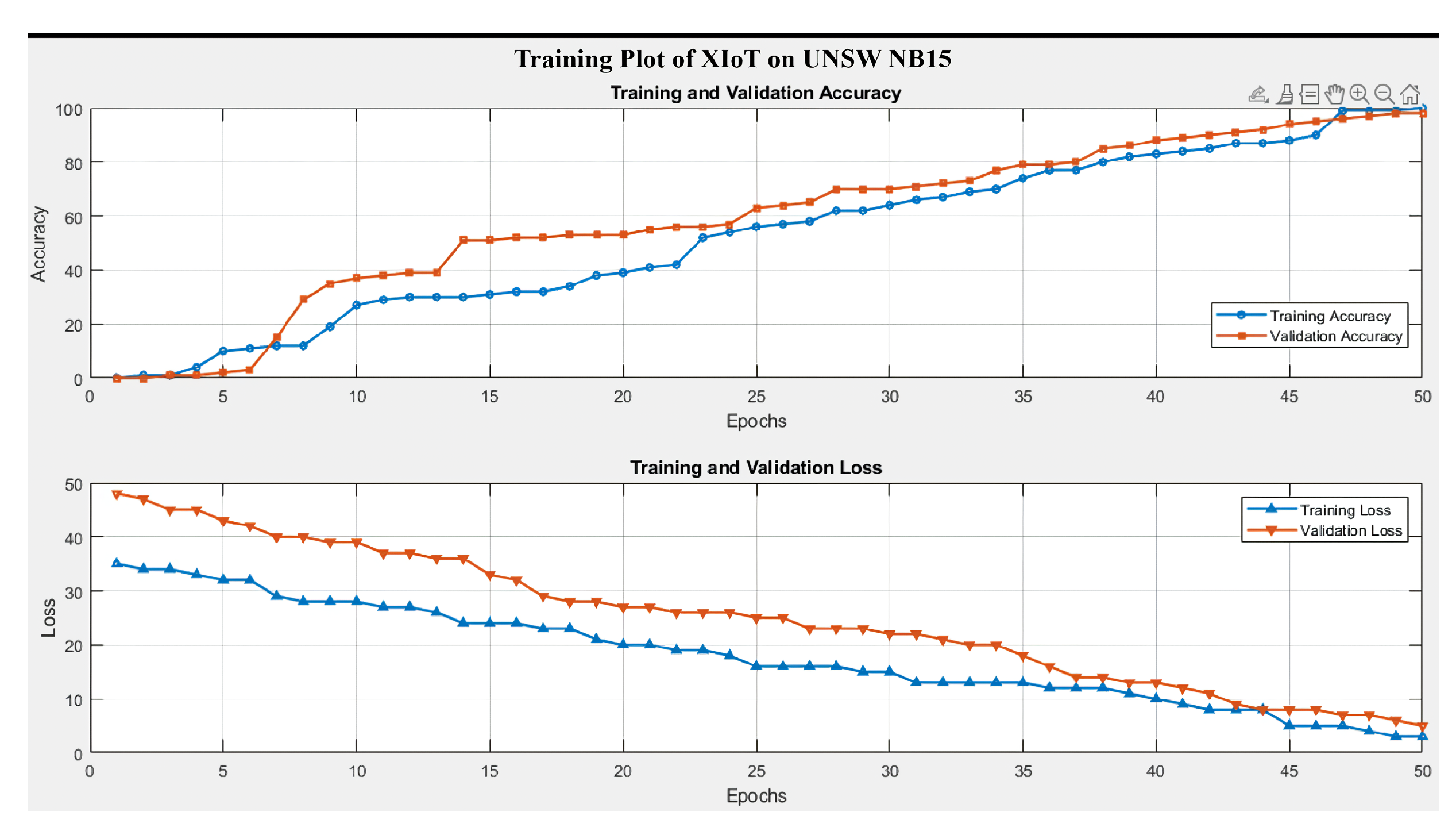

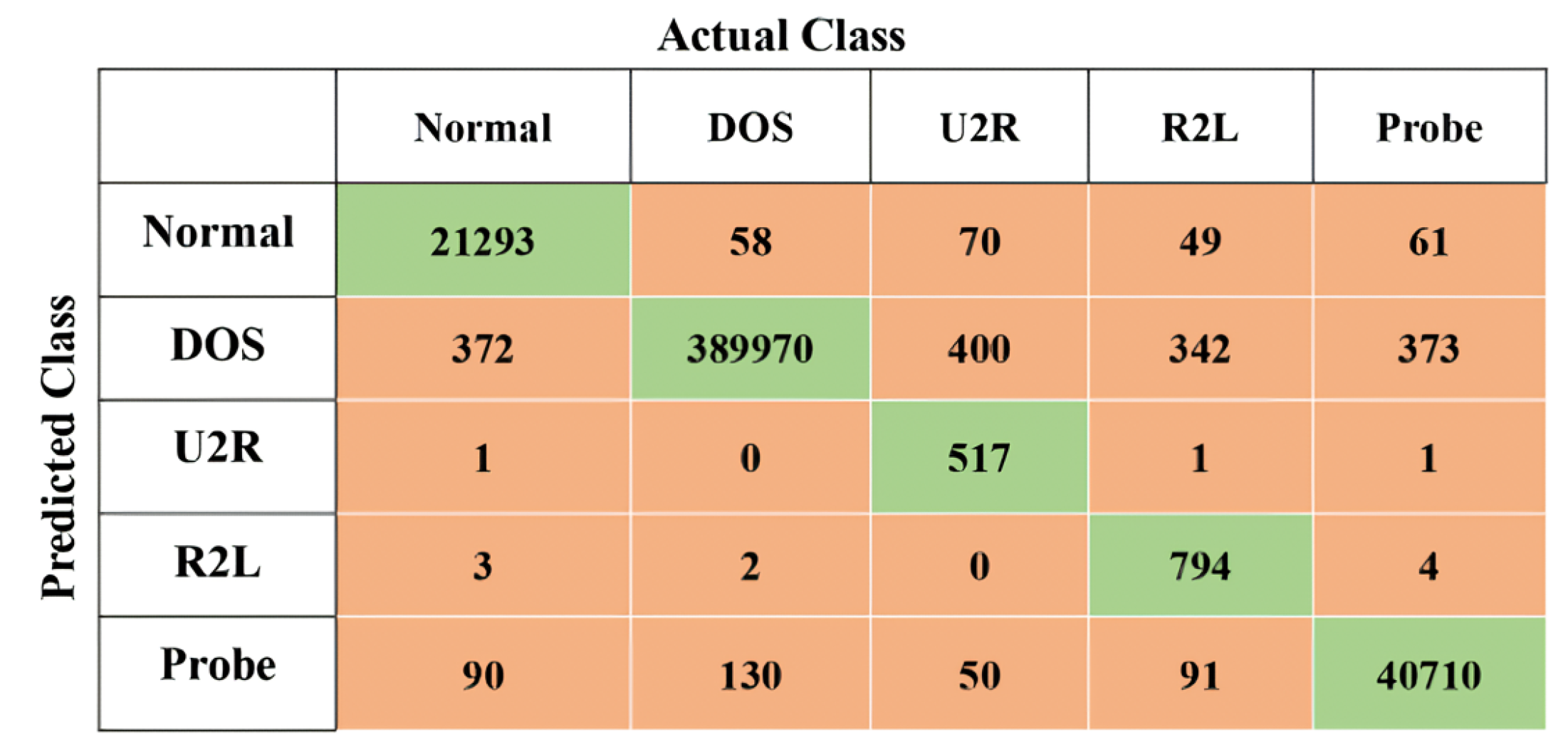

Furthermore, the rising interconnectivity of devices, often referred to as the Extended Internet of Things (also abbreviated XIoT), which integrates traditional IoT with operational technology (OT) and industrial control systems (ICSs), presents additional security challenges. Such environments extend the attack surface significantly, necessitating even more robust and scalable detection mechanisms. The XIoT model is specifically designed to meet these demands, offering advanced detection capabilities that align with the increasing complexity and scale of these interconnected systems. By rigorously evaluating the model across diverse datasets such as KDD Cup 99, UNSW-NB15, and Bot-IoT, we demonstrate its ability to generalize across different IoT environments, offering a novel solution that outperforms current intrusion detection models in terms of accuracy, precision, recall, and F1-score.
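The four evaluation metrics named above follow directly from the counts of a binary confusion matrix. The sketch below is illustrative only: the function name and the example counts are assumptions, not values reported in this work.

```python
def classification_metrics(tp, fp, fn, tn):
    """Return accuracy, precision, recall, and F1 from binary confusion counts.

    tp/fp/fn/tn are true positives, false positives, false negatives, and
    true negatives, with "attack" treated as the positive class.
    """
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for 1000 flows, 90 of which are attacks.
m = classification_metrics(tp=80, fp=20, fn=10, tn=890)
print(m)
```

With imbalanced traffic, accuracy is dominated by the benign class, which is why precision, recall, and F1 are reported alongside it.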

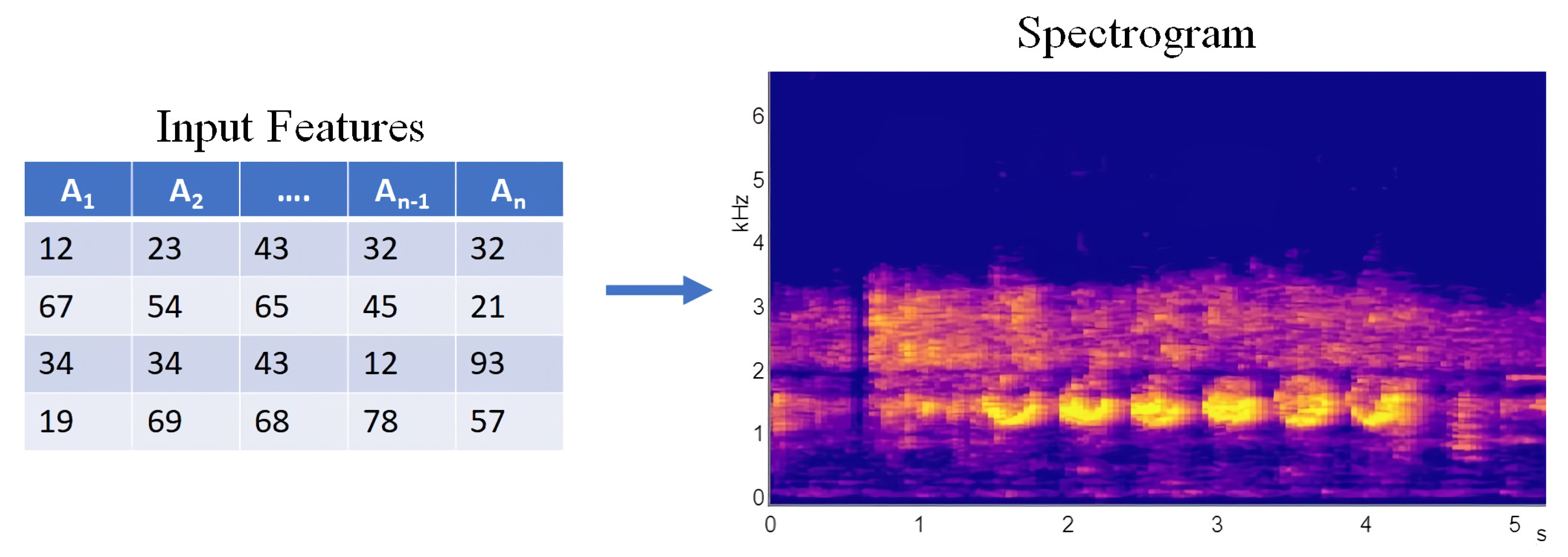

The proliferation of IoT devices connected through high-speed optical networks introduces unique challenges, such as managing heterogeneous data streams with minimal latency and ensuring robust security against increasingly sophisticated cyber threats. Existing IDSs lack the efficiency and interpretability required for real-time detection in such environments. This research aims to bridge this gap by leveraging spectrogram-based data transformation and CNNs to enhance both accuracy and explainability.

1.1. Related Work

ML techniques have been extensively employed to detect various types of cyber attacks, enabling network administrators to implement preventative measures against intrusions. Initially, traditional ML methods such as SVM [9], k-Nearest Neighbor (KNN) [10], RF [11], Naïve Bayes Network [12], and Self-Organizing Maps (SOMs) [13] were utilized in IDS and demonstrated promising results. Reference [14] assessed the efficacy of various ML classifiers using the NSL-KDD dataset. However, these traditional methods, characterized as shallow learning, primarily focus on feature engineering and selection, and they are often inadequate for managing the complexities of large-scale data classification in real network environments [15,16]. As datasets expand, the limitations of shallow learning become apparent, particularly in high-dimensional analysis required for intelligent forecasting.

Conversely, DL offers enhanced capabilities for extracting significant representations from data, thus improving model performance. Recent research has explored the application of DL in network intrusion detection, which is a relatively novel field. For instance, the three-layer RNN proposed in [17], with 41 input features and four output categories, signifies an advance in handling high-dimensional features despite its partial inter-layer connectivity. Additionally, Torres et al. [18] transformed feature data into character sequences to analyze temporal characteristics using an RNN. In [14], a specific RNN-IDS model was introduced for direct classification, comparing its performance against traditional methods such as J48, ANN, RF, and SVM on the NSL-KDD dataset in binary and multi-class scenarios. Wang et al. [19] integrated both CNN and RNN to maximize the deep neural network’s ability to learn spatial–temporal features from raw network traffic data.

As new viruses emerge and intrusion behaviors evolve, IDSs continue to innovate, integrating data mining and ML technologies to enhance detection capabilities [20]. For example, an adaptive chicken colony optimization algorithm for efficient clustering in selecting cluster heads was introduced in [21], along with a two-stage adaptive SVM classification to identify malicious sensor nodes, thereby reducing time consumption and enhancing network lifespan and scalability. Other authors developed an IDS model utilizing a double sparse convolution matrix framework which leverages the strong correlations in non-negative matrix decomposition to reveal hidden patterns and achieve high detection accuracy. However, despite these advancements, traditional ML-based IDSs still face significant challenges due to their reliance on complex mathematical calculations [22].

Various methods have been developed to enhance attack detection in IoT networks. In prior research, a feed-forward neural network achieved high accuracy using the BoT-IoT dataset, though with lower precision and recall in some categories [23]. Reference [24] introduced a hybrid IDS combining feature selection and ensemble learning, significantly improving accuracy to 99.9%. Reference [25] employed an LSTM autoencoder for dimensionality reduction, followed by a Bi-LSTM, which improved performance at the expense of increased computational time.

Reference [26] used a bi-directional LSTM, achieving high detection rates for DoS attacks in cloud networks, although it struggled with non-DoS traffic like reconnaissance attacks. Reference [27] proposed Deep-IFS, a forensic model enhanced with multi-head attention, outperforming centralized DL models but requiring numerous fog nodes for optimal performance. Reference [28] applied correlation-based feature selection with various ML algorithms, achieving high detection rates. Reference [29] developed a Deep Belief Network (DBN)-based IDS with strong accuracy, while other studies utilized CNN-LSTM combinations [30,31], achieving high detection rates across different attack types. Another novel IDS combined CNNs with stacked autoencoders for feature extraction, showing high performance. However, methods involving RNNs with self-attention mechanisms demanded extensive preprocessing time.

Research has also explored hybrid feature selection techniques like ant colony optimization and mutual information, which proved effective in improving detection with decision trees [29]. Metaheuristic algorithms, such as particle swarm optimization (PSO) and genetic algorithms (GAs), have been used for feature selection to enhance IDS performance [32,33,34,35,36,37]. Despite these advances, there remains a gap in integrating deep learning with metaheuristic approaches for further improvement in IoT-based IDSs.

One-class classification is a key anomaly detection method particularly suited for datasets where one class dominates, such as in intrusion detection, where normal network traffic far exceeds attack instances. It utilizes algorithms like Meta-Learning [38], Interpolated Gaussian Descriptor [39], One-Class Support Vector Machine (OCSVM) [40,41,42,43,44,45], and Autoencoders [46,47,48,49,50,51,52]. OCSVM is particularly effective with small datasets, as demonstrated by [45], who enhanced it with hyperparameter optimization, creating a scalable and distributed IDS for IoT, which was assessed with ensemble learning.

Autoencoders, such as the stacked self-encoder model from Song [50], are increasingly favored as datasets grow, offering stable performance with optimization through latent layer adjustments. Ensemble learning [53,54,55,56,57,58,59] has also shown promise, integrating multiple weak learners to improve overall accuracy. Reference [56] introduced an ensemble voting classifier for IoT intrusion detection, while [58] used a genetic algorithm for feature selection combined with SVM and DT classifiers. Reference [59] developed a two-layer soft-voting model using RF, LightGBM, and XGBoost, achieving superior accuracy in both binary and multi-class scenarios.
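To make the idea of soft voting concrete, the sketch below averages the class probabilities produced by several classifiers and picks the most probable class. It is a single-layer illustration only, not the two-layer model of [59]; the function name and the probability values are hypothetical, and the three arrays merely stand in for the outputs of RF, LightGBM, and XGBoost models.

```python
import numpy as np


def soft_vote(prob_list, weights=None):
    """Combine per-model class probabilities by (weighted) averaging.

    prob_list: list of arrays, each of shape (n_samples, n_classes).
    Returns the averaged probabilities and the predicted class indices.
    """
    probs = np.stack(prob_list, axis=0)          # (n_models, n_samples, n_classes)
    if weights is None:
        weights = np.ones(len(prob_list))
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()            # normalise so the average sums to 1
    avg = np.tensordot(weights, probs, axes=1)   # (n_samples, n_classes)
    return avg, avg.argmax(axis=1)


# Hypothetical probabilities for two flows over classes [benign, attack].
p_rf = np.array([[0.90, 0.10], [0.40, 0.60]])
p_lgbm = np.array([[0.60, 0.40], [0.55, 0.45]])
p_xgb = np.array([[0.70, 0.30], [0.30, 0.70]])

avg, labels = soft_vote([p_rf, p_lgbm, p_xgb])
print(avg, labels)
```

In the second flow, one model leans towards "benign", but the averaged probability still favours "attack". This shows how soft voting uses each model's confidence rather than only its hard decision.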

Reference [60] presented an ensemble IDS for IoT environments, mitigating botnet attacks using DNS, HTTP, and MQTT protocols. The method employed AdaBoost with DT, naive Bayes, and artificial neural networks, using the UNSW-NB15 dataset. Additionally, reference [61] proposed a Dew Computing as a Service model to improve the IDS performance in Edge of Things (EoT) systems, integrating Deep Belief Networks (DBNs) with restricted Boltzmann machines for real-time attack classification. Reference [62] introduced MemAE, a memory-augmented autoencoder that improves anomaly detection by guiding reconstruction toward normal data characteristics, enhancing detection accuracy. Furthermore, SVD and SMOTE were applied to improve feature condensation and balance, achieving 99.99% accuracy in binary classification and 99.98% in multi-class classification using the ToN_IoT dataset.

Finally, reference [63] introduced the Deep Random Neural Network, combining Particle Swarm Optimization (PSO) and Sequential Quadratic Programming to enhance attack detection in IIoT settings. The model demonstrated superior performance across both binary and multi-class scenarios using multiple IIoT datasets.

Deep learning (DL) models have become integral in intrusion detection due to their ability to automatically learn hierarchical representations from network traffic data. Unlike traditional ML models, DL methods like Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) do not rely on manual feature extraction, making them more effective in handling complex patterns in real-time attack data.

RNNs, particularly Long Short-Term Memory (LSTM) networks, have demonstrated significant improvements in detecting temporal attack patterns. For example, reference [14] applied an LSTM-based IDS to the NSL-KDD dataset, achieving notable accuracy for probe and DoS attacks but struggling with less frequent attack types like R2L and U2R. Despite this limitation, RNNs are particularly advantageous in capturing sequential dependencies in network traffic data. On the other hand, CNNs have been successfully applied to analyze network traffic represented as images or spectrograms. In this study, the novel XIoT model utilizes CNNs to examine spectrogram images of IoT traffic data, emphasizing the interpretability of attack detection. Unlike RNNs, CNNs are primarily used for spatial feature extraction, which makes them suitable for analyzing the static features of network traffic patterns [64]. While both RNNs and CNNs offer substantial improvements over traditional ML models, their specific use cases differ. RNNs excel in sequential data modeling, while CNNs are more effective for tasks requiring spatial feature extraction. Ensemble models combining these architectures have been explored for further performance gains.

To demonstrate the application and performance of various machine learning and deep learning models in the context of intrusion detection systems (IDSs), Table 1 provides a comparative analysis of these models across different datasets with classification accuracy as a key metric.

Traditional ML models, such as SVM, DT, and RF, have been widely applied in earlier studies. For instance, reference [65] achieved a high accuracy of 98.9% using a K-means clustering model on the KDD Cup 99 dataset. However, as noted in Section 2.12, these models often struggle with underrepresented attack classes. DL approaches have been increasingly adopted due to their ability to handle complex data representations. Reference [66] applied a CNN on the KDD Cup 99 dataset, achieving an accuracy of 97.1%, while [67] employed an LSTM, enhancing the accuracy to 97.8%. These results highlight the effectiveness of CNNs and LSTMs in detecting network intrusions with higher accuracy compared to traditional ML models.

Table 1. Former IDS models and their corresponding outcomes.


| Ref./Authors | Model | Dataset | Classification Accuracy (%) |
|---|---|---|---|
| [66] Zhang | CNN | KDD Cup 99 | 97.1 |
| [68] Gupta | RNN | KDD Cup 99 | 96.4 |
| [67] Mishra | LSTM | KDD Cup 99 | 97.8 |
| [69] Wang | Random Forest | DARPA | 82.6 |
| [65] Ahmed Hasan | K-means | KDD Cup 99 | 98.9 |
| [70] Raj Mukkamala | CNN + RNN + LSTM | DARPA | 99.9 |
| [71] Gupta | Random Forest + K-means | KDD Cup 99 | 98.8 |
| [72] Kwon | GAN | NSL-KDD | 92.3 |
| [73] Binbusayyis | K-means | UNSW-NB15 | 95.6 |
| [74] Alzahrani | CNN | CIC-IDS2017 | 97.2 |
| [75] Wang | LSTM | CIC-IDS2018 | 98.5 |
| [76] Zhang | Ensemble Learning | NSL-KDD | 94.8 |

Ensemble models, which combine multiple machine learning algorithms or deep learning architectures, have demonstrated even greater performance. Reddy et al. [29] integrated CNN, RNN, and LSTM networks on the DARPA dataset, achieving the highest accuracy of 99%, underscoring the potential of hybrid models in intrusion detection.

The development of an IDS model is heavily influenced by the insights gathered from a review of the current literature and prior sections. A critical takeaway is that DL techniques have consistently outperformed traditional ML approaches in handling high-dimensional data and improving classification accuracy in IDS applications. For instance, the paper by Yin et al. [77] demonstrated that DL models, particularly CNNs, are superior to traditional ML models such as SVM and decision trees (DTs) when it comes to processing complex datasets like NSL-KDD and KDD Cup 99.

One key advantage of DL over traditional ML lies in its ability to automatically extract features from raw data, eliminating the need for manual feature engineering, which is a limitation in shallow learning methods [78]. Furthermore, deep learning algorithms such as feed-forward DNNs and CNNs tend to outperform Recurrent Neural Networks (RNNs), including GRU and LSTM networks, in certain IDS applications. Although LSTMs excel in time-series data analysis, DNNs have been shown to be more effective in static intrusion detection tasks due to their simpler architecture and faster training times.

Recent research also suggests that ensemble learning models, which combine multiple ML or DL algorithms, can enhance prediction accuracy and reduce variability in IDS results. Ensemble models outperform individual models by leveraging the strengths of each algorithm to correct the weaknesses of others [79]. This finding highlights the growing importance of hybrid approaches in developing robust IDS solutions.

However, significant challenges remain in accurately detecting less frequent attack types, such as R2L and U2R attacks, due to their underrepresentation in common datasets like NSL-KDD [80]. As most IDS models achieve high accuracy in detecting DoS and probing attacks, further research is needed to improve the detection rates for R2L and U2R attacks to ensure more comprehensive IDS performance.

The IoT links networks with sensors and other devices using IP-based communications, and it is gaining popularity among people with Internet access. People who are institutionalized because of a disability or illness can benefit from the IoT through remote monitoring, timely intervention, and healthcare services. Sensors, actuators, radio frequency identification (RFID) tags, and other IoT components can be integrated into people’s bodies and into objects. For example, caregivers can operate the equipment at their disposal more easily with the help of connected accessories. IoT applications can read RFID tags attached to patients or medical devices in order to identify them, and they can also be used to monitor and regulate people’s activities.

Various approaches have been proposed to ensure that communication between nodes in the IoT network is secure and reliable, including a trust management mechanism that is dynamic and flexible in how it is implemented and operated [81]. Bao and Chen [82] have discussed this topic extensively.

The researchers developed a management framework for the layered IoT that is organized around the concept of services and built on the trustworthiness of its nodes. Because the IoT is composed of multiple layers, the framework is itself multi-layered, and its documentation describes it as “service-oriented”, meaning that it is designed to provide services. In addition to the core, sensor, and application layers, several other layers and components contribute to the system’s structure and function.

1.2. Motivation

The IoT is rapidly becoming an integral part of modern life with smart devices and sensors increasingly embedded in applications ranging from healthcare to transportation. The complexity and volume of IoT data present unique challenges for intrusion detection systems (IDSs), requiring robust, scalable, and interpretable machine learning solutions.

Recurrent Neural Networks (RNNs) and their variants, such as Long Short-Term Memory (LSTM) networks, are highly effective in dealing with sequential data patterns, including network traffic data. They have been extensively studied in recent intrusion detection research, with many papers demonstrating their effectiveness in learning temporal dependencies. However, while these models excel in processing time-series data, our research focuses on leveraging the unique properties of CNNs for the specific nature of the data used in this work, namely spectrogram images representing IoT network traffic.

The necessity for XIoT stems from the inability of existing IDSs to address critical challenges in IoT security. Traditional methods, including machine learning and some deep learning approaches, struggle to provide real-time detection, handle large-scale data, or offer interpretability. By transforming raw IoT traffic into spectrogram images, XIoT enables CNNs to extract nuanced spatial and temporal patterns, addressing these limitations effectively.

Spectrogram Data Representation: In this research, network traffic data are transformed into spectrogram images, which capture both time and frequency information along the two image axes. CNNs are particularly effective at extracting spatial features and patterns from images, making them an ideal choice for analyzing these spectrograms. The capability of CNNs to capture local patterns across the image allows them to detect subtle, localized anomalies in network traffic, which are critical for identifying cyber threats.
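As an illustration of this transformation, the following sketch turns a one-dimensional traffic series into a log-power spectrogram with a short-time Fourier transform. The choice of input (packet counts per interval), the frame length, the hop size, and the function name are all assumptions made for the example; the preprocessing used for XIoT may differ.

```python
import numpy as np


def traffic_spectrogram(signal, frame_len=64, hop=32):
    """Log-power spectrogram of a 1-D series, shaped (freq_bins, time_frames)."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < frame_len:
        raise ValueError("signal is shorter than one frame")
    # Remove the constant traffic level so it does not dominate the spectrum.
    signal = signal - signal.mean()
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop: i * hop + frame_len] * window
        for i in range(n_frames)
    ])                                                  # (n_frames, frame_len)
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2    # (n_frames, frame_len//2 + 1)
    return 10.0 * np.log10(power.T + 1e-12)             # small offset avoids log(0)


# Hypothetical input: packets per interval with a burst repeating every 16 intervals.
t = np.arange(1024)
counts = 50 + 20 * np.sin(2 * np.pi * t / 16)
spec = traffic_spectrogram(counts)
print(spec.shape)
```

Each column of `spec` is one time frame and each row one frequency bin, so the array can be rendered or fed to a CNN as a single-channel image. In this synthetic example, the periodic burst shows up as a bright horizontal band at frequency bin 4 (64 samples per frame divided by a period of 16).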

Computational Efficiency and Scalability: CNNs offer significant advantages in terms of computational efficiency, especially when processing large-scale data in real-time environments, which is crucial for IoT applications. RNNs and LSTMs, while powerful for sequential tasks, often suffer from higher computational costs and longer training times due to their sequential nature. In contrast, CNNs can process data in parallel, making them more scalable and efficient for the real-time analysis of vast IoT-generated traffic.

Robustness to Input Variations: IoT data are prone to a variety of attacks and perturbations, such as shifts in scale, rotation, and translation. Through weight sharing and pooling, CNNs tolerate translations of a pattern within the input, and the hierarchical features learned by their convolutional layers can be trained to tolerate other variations. This robustness is critical for ensuring reliable detection across diverse IoT environments, where data can be highly dynamic and variable.
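For the translation case, the source of this robustness can be shown with a toy example: a convolutional filter followed by global max pooling produces the same score wherever the pattern appears in the image. The 3×3 pattern, the 8×8 image, and the helper names below are hypothetical and chosen only for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv_max_response(image, kernel):
    """Valid cross-correlation followed by global max pooling.

    Returns the pooled score and the location of the strongest response.
    """
    windows = sliding_window_view(image, kernel.shape)    # (H-kh+1, W-kw+1, kh, kw)
    response = np.einsum("ijkl,kl->ij", windows, kernel)  # feature map
    peak = np.unravel_index(response.argmax(), response.shape)
    return response.max(), peak


def place(pattern, top, left, size=8):
    """Put the pattern on an otherwise empty size x size image."""
    img = np.zeros((size, size))
    h, w = pattern.shape
    img[top:top + h, left:left + w] = pattern
    return img


pattern = np.array([[0.0, 1.0, 0.0],
                    [1.0, 2.0, 1.0],
                    [0.0, 1.0, 0.0]])

score_a, peak_a = conv_max_response(place(pattern, 1, 1), pattern)
score_b, peak_b = conv_max_response(place(pattern, 4, 3), pattern)
print(score_a, peak_a, score_b, peak_b)
```

The peak location moves with the pattern, but the pooled score is unchanged. The same construction gives no such guarantee for rotation or scaling, which usually have to be learned from data or handled by augmentation.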

Explainability and Interpretability: The ability to integrate explainable AI (XAI) mechanisms into CNNs provides a significant advantage in security-critical applications like IDS. Transparency in decision making is vital for building trust among stakeholders in IoT security. The architectural properties of CNNs make them well suited for incorporating interpretability techniques, allowing the model’s predictions to be more easily understood and trusted by security analysts.
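One common family of interpretability techniques attributes a prediction to input pixels through the gradient of the output score with respect to the input. The sketch below computes such a saliency map for a deliberately tiny stand-in model, a single sigmoid unit over an 8×8 patch, whose gradient can be written in closed form. It is not the CNN used in this work: the weights, the patch, and the function name are hypothetical, and a real CNN would obtain the gradient through automatic differentiation.

```python
import numpy as np


def gradient_saliency(x, weights, bias=0.0):
    """Vanilla gradient saliency for score = sigmoid(sum(weights * x) + bias).

    Returns the score and |d(score)/d(x)|, which has the same shape as x.
    """
    z = float(np.sum(weights * x) + bias)
    score = 1.0 / (1.0 + np.exp(-z))
    grad = score * (1.0 - score) * weights   # chain rule through the sigmoid
    return score, np.abs(grad)


rng = np.random.default_rng(0)
spec_patch = rng.random((8, 8))              # hypothetical spectrogram patch
weights = np.zeros((8, 8))
weights[2:4, 5:7] = 1.5                      # toy model attends to one time-frequency region

score, saliency = gradient_saliency(spec_patch, weights)
print(round(score, 3))
print((saliency > 0).astype(int))
```

The saliency map is non-zero only in the region that influences the score. Overlaid on a spectrogram, a map of this kind indicates to an analyst which time-frequency region drove the detection.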

Thus, while acknowledging the effectiveness of RNNs and LSTMs for time-series data, CNNs were selected due to their superior performance in image-based analysis, scalability, and interpretability—key attributes that align with the requirements and goals of this research. This strategic choice enhances the practicality of the proposed IDS for real-time, scalable IoT security applications.

1.3. Problem Statement

With the rapid proliferation of Internet of Things (IoT) devices, the security of these networks has become a critical concern. Traditional intrusion detection systems are often ill suited to handle the unique challenges posed by IoT networks, such as their large scale, diverse device types, and evolving attack strategies. This paper proposes a novel deep learning-based approach, using explainable gradient-based Convolutional Neural Networks (EG-CNNs), to detect and classify IoT network attacks. Our aim is to develop a model that not only achieves high detection accuracy but also provides transparency in its decision-making process, which is crucial for cybersecurity professionals to understand and trust the model’s outputs. This research responds to the growing need for effective and interpretable IoT intrusion detection systems.

This proliferation has led to significant advancements across various sectors, but it has also introduced substantial cybersecurity vulnerabilities. One analysis revealed that 83% of interactions between IoT devices occur in plain text, and 41% of these interactions lack any form of secure communication, such as SSL. This widespread insecurity exposes IoT networks to cyberattacks, particularly wireless attacks, due to their interconnected nature. Consequently, these vulnerabilities result in frequent compromises of communication channels and component interfaces within large systems, leading to the propagation of failures across different locations.

Traditional security measures, such as access control and encryption, offer some protection but are insufficient. Many attacks exploit common vulnerabilities in IoT applications, often resulting from rushed development cycles. These attacks can significantly impact the reliability and availability of IoT services, especially in critical infrastructure, where IoT applications are heavily relied upon. Moreover, existing detection and mitigation strategies lack the robustness required to counter these evolving threats effectively.

Given the critical nature of these vulnerabilities, there is a pressing need for advanced detection and mitigation mechanisms that not only provide high accuracy but also offer explainability to enhance trust and decision making. This research introduces XIoT, an explainable deep learning-based IoT attack detection model, to comprehensively address these cybersecurity challenges.

Despite advancements in Intrusion Detection Systems (IDSs), current solutions fall short in handling large-scale IoT traffic in real time, adapting to rapidly evolving attack patterns, and offering transparent decision making. Specific challenges include the following:

- Latency in high-speed networks: Current models are unable to process extensive datasets in optical networks efficiently.
- Limited interpretability: Analysts lack actionable insights from existing ‘black-box’ models.
- Inadequate adaptability: Many IDSs fail to generalize to novel or evolving attack scenarios.

These gaps significantly compromise the security of IoT ecosystems, such as smart grids, healthcare systems, and industrial IoT, necessitating the development of a novel approach.