Enhanced Three-Axis Frame and Wand-Based Multi-Camera Calibration Method Using Adaptive Iteratively Reweighted Least Squares and Comprehensive Error Integration

Abstract

1. Introduction

2. Materials and Methods

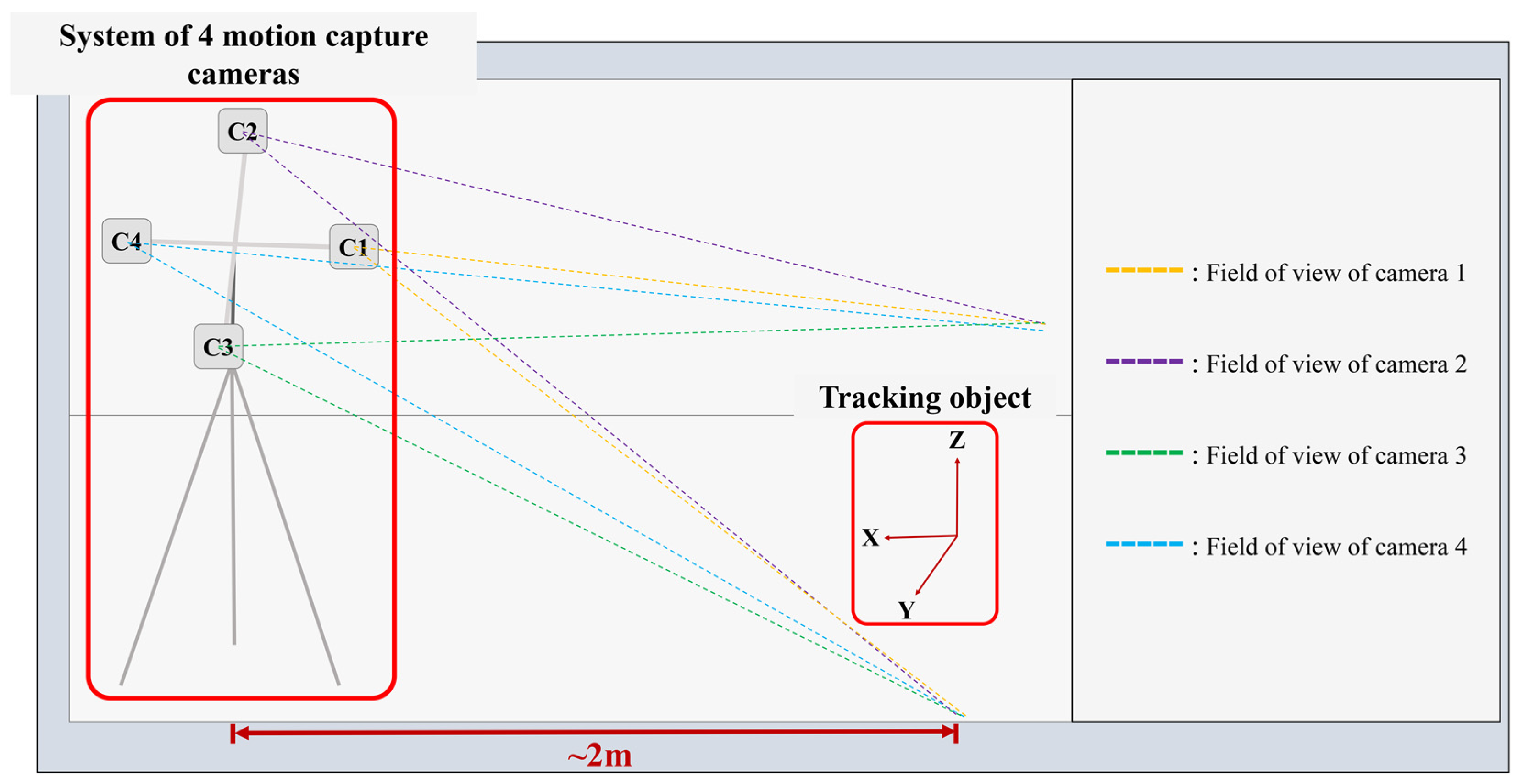

2.1. Experiments

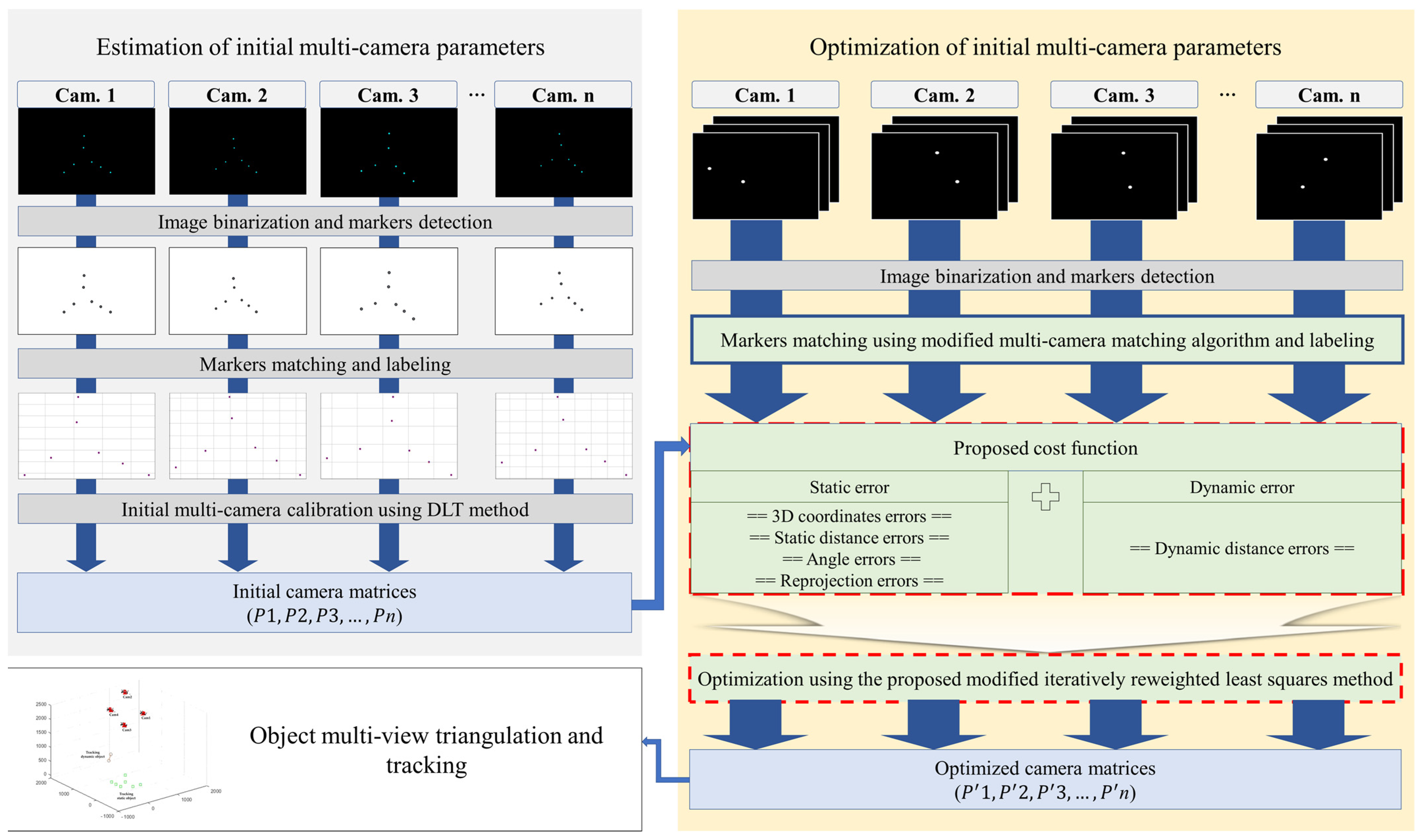

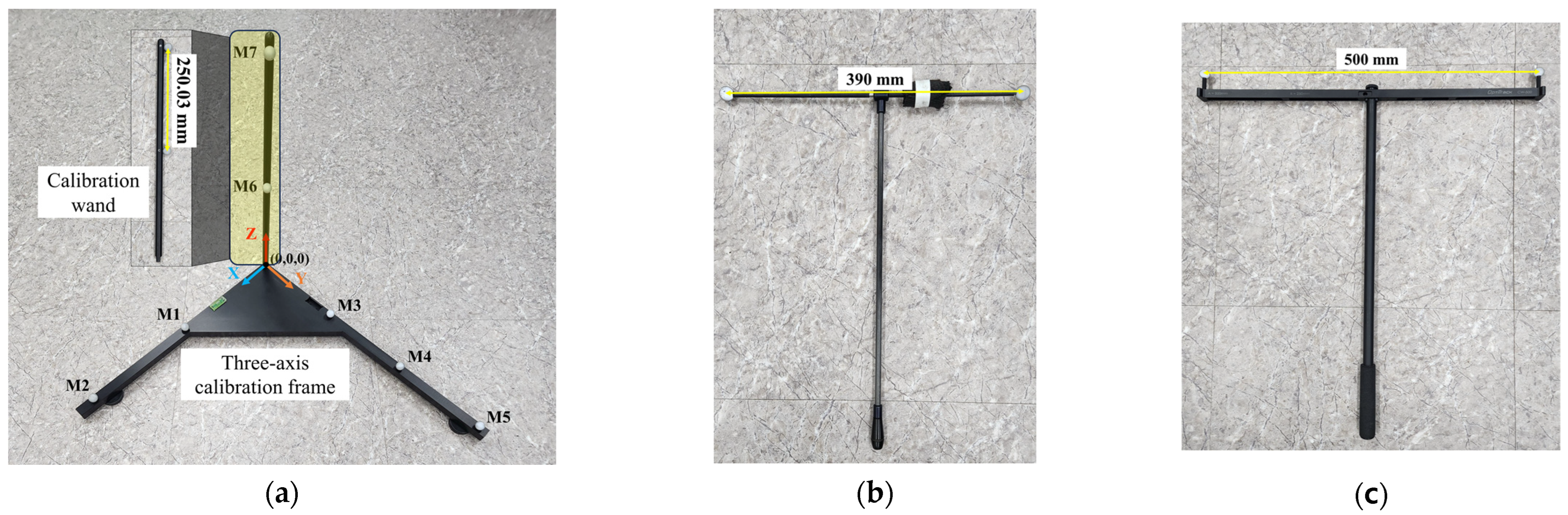

2.2. Estimation of Initial Multi-Camera Parameters

2.3. Fine-Tuning of Multi-Camera Parameters Using Optimization Technique

2.3.1. Collection and Preprocessing of Wanding Data

- Construct a cost matrix in which each element represents the cost of assigning a marker from one camera to a marker in another camera;

- Subtract the minimum value in each row from all elements within that row for the entire cost matrix;

- Subtract the minimum value in each column from all elements within that column for the entire cost matrix;

- Cover all zeros in the resulting matrix using a minimum number of horizontal and vertical lines;

- If the minimum number of covering lines equals the number of rows (or columns), an optimal assignment can be made among the covered zeros. If not, the matrix is adjusted and the process is repeated.

- Take the set of points detected at one time step and the set of points detected at the following time step;

- For each pair of points, one from each set, calculate the Euclidean distance between them;

- Identify the pairing for which the distance is minimized. This is typically achieved using the Hungarian algorithm to ensure an optimal assignment;

- Update the matches and proceed to the next frame.
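The matching steps above can be illustrated with a minimal sketch. It is not the authors' implementation: the point coordinates are hypothetical, the function names are chosen here for illustration, both frames are assumed to contain the same number of markers, and an exhaustive search over permutations stands in for the Hungarian algorithm (it returns the same optimal assignment but is only practical for a handful of markers). The sketch also shows that the row and column reduction steps leave the optimal assignment unchanged.

```python
from itertools import permutations
from math import dist


def cost_matrix(prev_pts, curr_pts):
    """Euclidean distance between every previous-frame and current-frame point."""
    return [[dist(p, q) for q in curr_pts] for p in prev_pts]


def reduce_rows_and_columns(cost):
    """Subtract each row's minimum, then each column's minimum."""
    rows = [[c - min(row) for c in row] for row in cost]
    col_min = [min(col) for col in zip(*rows)]
    return [[c - m for c, m in zip(row, col_min)] for row in rows]


def optimal_assignment(cost):
    """Minimum-cost one-to-one assignment by exhaustive search.

    Stand-in for the Hungarian algorithm: same optimum, but O(n!) time.
    Assumes a square cost matrix.
    """
    n = len(cost)
    best = min(permutations(range(n)),
               key=lambda perm: sum(cost[i][perm[i]] for i in range(n)))
    return list(best)


# Hypothetical 2D marker centroids (pixels) in two consecutive frames.
prev_pts = [(100.0, 100.0), (200.0, 120.0), (150.0, 300.0)]
curr_pts = [(203.0, 118.0), (149.0, 304.0), (101.0, 102.0)]

cost = cost_matrix(prev_pts, curr_pts)
matches = optimal_assignment(cost)  # matches[i] is the index in curr_pts
reduced_matches = optimal_assignment(reduce_rows_and_columns(cost))
print(matches, reduced_matches)
```

For larger marker sets, a polynomial-time solver such as SciPy's `linear_sum_assignment` would be the more usual choice; the exhaustive search is used here only to keep the example dependency-free.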

2.3.2. Proposed Cost Function and Optimization Method

2.4. Comparative Validation and Performance Evaluation of the Proposed Multi-Camera Calibration Method

3. Results and Discussion

3.1. Sensitivity Analysis of the Proposed Cost Function

3.2. Comparative Analysis of the Proposed AIRLS Optimization Method

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation or symbol | Definition |
|---|---|
| AIRLS | Adaptive iteratively reweighted least squares |
| DLT | Direct Linear Transformation |
| SBA | Sparse Bundle Adjustment |
| LM | Levenberg–Marquardt optimization method |
| P | Projection matrix |
| | Intrinsic matrix |
| R | Rotation matrix |
| T | Translation vector |
| F | Fundamental matrix |
| fx, fy | Focal lengths of the camera along the x and y axes |
| s | Skew coefficient |
| u0, v0 | Principal point coordinates |
| Rx, Ry, Rz | Rotation angles around the x, y, and z axes |
| tx, ty, tz | Translation vector along the x, y, and z axes |
| k1, k2, k3 | Radial distortion coefficients |
| p1, p2 | Tangential distortion coefficients |
| | Static 3D coordinate error |
| | Static distance error |
| | Static angle error |
| | Static reprojection error |
| | Dynamic wand distance error |

References

1. Wibowo, M.C.; Nugroho, S.; Wibowo, A. The use of motion capture technology in 3D animation. Int. J. Comput. Digit. Syst. 2024, 15, 975–987.
2. Yuhai, O.; Choi, A.; Cho, Y.; Kim, H.; Mun, J.H. Deep-Learning-Based Recovery of Missing Optical Marker Trajectories in 3D Motion Capture Systems. Bioengineering 2024, 11, 560.
3. Shin, K.Y.; Mun, J.H. A multi-camera calibration method using a 3-axis frame and wand. Int. J. Precis. Eng. Manuf. 2012, 13, 283–289.
4. Yoo, H.; Choi, A.; Mun, J.H. Acquisition of point cloud in CT image space to improve accuracy of surface registration: Application to neurosurgical navigation system. J. Mech. Sci. Technol. 2020, 34, 2667–2677.
5. Guan, J.; Deboeverie, F.; Slembrouck, M.; Van Haerenborgh, D.; Van Cauwelaert, D.; Veelaert, P.; Philips, W. Extrinsic Calibration of Camera Networks Using a Sphere. Sensors 2015, 15, 18985–19005.
6. Jiang, B.; Hu, L.; Xia, S. Probabilistic triangulation for uncalibrated multi-view 3D human pose estimation. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 14850–14860.
7. Abdel-Aziz, Y.I.; Karara, H.M. Direct Linear Transformation from Comparator Coordinates into Object Space Coordinates in Close-Range Photogrammetry. Photogramm. Eng. Remote Sens. 2015, 81, 103–107.
8. Cohen, E.J.; Bravi, R.; Minciacchi, D. 3D reconstruction of human movement in a single projection by dynamic marker scaling. PLoS ONE 2017, 12, e0186443.
9. Mitchelson, J.; Hilton, A. Wand-Based Multiple Camera Studio Calibration. Center Vision, Speech and Signal Process Technical Report. Guildford, England. 2003. Available online: http://info.ee.surrey.ac.uk/CVSSP/Publications/papers/vssp-tr-2-2003.pdf (accessed on 8 May 2024).
10. Pribanić, T.; Sturm, P.; Cifrek, M. Calibration of 3D kinematic systems using orthogonality constraints. Mach. Vis. Appl. 2007, 18, 367–381.
11. Petković, T.; Gasparini, S.; Pribanić, T. A note on geometric calibration of multiple cameras and projectors. In Proceedings of the 2020 43rd International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 28 September–2 October 2020; pp. 1157–1162.
12. Borghese, N.A.; Cerveri, P. Calibrating a video camera pair with a rigid bar. Pattern Recogn. 2000, 33, 81–95.
13. Uematsu, Y.; Teshima, T.; Saito, H.; Honghua, C. D-Calib: Calibration Software for Multiple Cameras System. In Proceedings of the 14th International Conference on Image Analysis and Processing, Modena, Italy, 10–14 September 2007; pp. 285–290.
14. Loaiza, M.E.; Raposo, A.B.; Gattass, M. Multi-camera calibration based on an invariant pattern. Comput. Graph. 2011, 35, 198–207.
15. Zhang, J.; Zhu, J.; Deng, H.; Chai, Z.; Ma, M.; Zhong, X. Multi-camera calibration method based on a multi-plane stereo target. Appl. Opt. 2019, 58, 9353–9359.
16. Zhou, C.; Tan, D.; Gao, H. A high-precision calibration and optimization method for stereo vision system. In Proceedings of the 2006 9th International Conference on Control, Automation, Robotics and Vision, Singapore, 5–8 December 2006; pp. 1–5.
17. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.
18. Pribanić, T.; Peharec, S.; Medved, V. A comparison between 2D plate calibration and wand calibration for 3D kinematic systems. Kinesiology 2009, 41, 147–155.
19. Siddique, T.H.M.; Rehman, Y.; Rafiq, T.; Nisar, M.Z.; Ibrahim, M.S.; Usman, M. 3D object localization using 2D estimates for computer vision applications. In Proceedings of the 2021 Mohammad Ali Jinnah University International Conference on Computing (MAJICC), Karachi, Pakistan, 15–17 July 2021; pp. 1–6.
20. Lourakis, M.I.A.; Argyros, A.A. SBA: A software package for generic sparse bundle adjustment. ACM Trans. Math. Softw. (TOMS) 2009, 2, 1–30.
21. Nutta, T.; Sciacchitano, A.; Scarano, F. Wand-based calibration technique for 3D LPT. In Proceedings of the 2023 15th International Symposium on Particle Image Velocimetry (ISPIV), San Diego, CA, USA, 19–21 June 2023.
22. Zheng, H.; Duan, F.; Li, T.; Li, J.; Niu, G.; Cheng, Z.; Li, X. A Stable, Efficient, and High-Precision Non-Coplanar Calibration Method: Applied for Multi-Camera-Based Stereo Vision Measurements. Sensors 2023, 23, 8466.
23. Zhang, S.; Fu, Q. Wand-Based Calibration of Unsynchronized Multiple Cameras for 3D Localization. Sensors 2024, 24, 284.
24. Hartley, R.I. In defense of the eight-point algorithm. IEEE Trans. Pattern Anal. Mach. Intell. 1997, 19, 580–593.
25. Fan, B.; Dai, Y.; Seo, Y.; He, M. A revisit of the normalized eight-point algorithm and a self-supervised deep solution. Vis. Intell. 2024, 2, 3.
26. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 4th ed.; Pearson Education: Rotherham, UK, 2002; ISBN 978-0-13-335672-4.
27. Otsu, N. A Threshold Selection Method from Gray-Level Histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.
28. Suzuki, S.; Abe, K. Topological structural analysis of digitized binary images by border following. Comput. Vis. Graph. Image Process. 1985, 30, 32–46.
29. Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.
30. Troiano, M.; Nobile, E.; Mangini, F.; Mastrogiuseppe, M.; Conati Barbaro, C.; Frezza, F. A Comparative Analysis of the Bayesian Regularization and Levenberg-Marquardt Training Algorithms in Neural Networks for Small Datasets: A Metrics Prediction of Neolithic Laminar Artefacts. Information 2024, 15, 270.
31. Bellavia, S.; Gratton, S.; Riccietti, E. A Levenberg-Marquardt method for large nonlinear least-squares problems with dynamic accuracy in functions and gradients. Numer. Math. 2018, 140, 791–825.
32. Wang, T.; Karel, J.; Bonizzi, P.; Peeters, R.L.M. Influence of the Tikhonov Regularization Parameter on the Accuracy of the Inverse Problem in Electrocardiography. Sensors 2023, 23, 1841.
33. Silvatti, A.P.; Salve Dias, F.A.; Cerveri, P.; Barros, R.M. Comparison of different camera calibration approaches for underwater applications. J. Biomech. 2012, 45, 1112–1116.

| | Initial Error | | | SBA Optimization [20,21] | | | LM Optimization [22,23] | | | Proposed AIRLS | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Static Error | Dynamic Error | Average Error | Static Error | Dynamic Error | Average Error | Static Error | Dynamic Error | Average Error | Static Error | Dynamic Error | Average Error |
| Average error (mm) | 0.36 | 2.47 | 1.42 | 0.59 | 0.25 | 0.42 | 0.48 | 0.23 | 0.36 | 0.28 | 0.25 | 0.27 |
| SD (mm) | 0.30 | 1.73 | 1.02 | 0.35 | 0.18 | 0.27 | 0.28 | 0.15 | 0.22 | 0.19 | 0.25 | 0.22 |
| Min error (mm) | 0.03 | 0.00 | 0.02 | 0.10 | 0.00 | 0.05 | 0.03 | 0.00 | 0.02 | 0.00 | 0.00 | 0.00 |
| Max error (mm) | 1.43 | 6.37 | 3.90 | 1.26 | 0.96 | 1.11 | 1.05 | 0.78 | 0.92 | 0.74 | 1.07 | 0.91 |

| Parameter | Camera 1 before Optimization | Camera 1 after Optimization | Camera 2 before Optimization | Camera 2 after Optimization | Camera 3 before Optimization | Camera 3 after Optimization | Camera 4 before Optimization | Camera 4 after Optimization |
|---|---|---|---|---|---|---|---|---|
| Intrinsic parameters | | | | | | | | |
| fx | 1232.63 | 1232.42 | 1270.59 | 1378.36 | 1278.43 | 1277.18 | 1223.13 | 1139.12 |
| fy | 1234.91 | 1240.25 | 1270.71 | 1371.16 | 1272.48 | 1280.75 | 1221.88 | 1149.17 |
| s | 11.3 | 11.6 | 2.4 | 5.7 | −18.9 | −9.8 | −4.29 | −7.78 |
| u0 | 637.0 | 598.52 | 590.84 | 722.38 | 607.11 | 620.52 | 595.02 | 592.75 |
| v0 | 529.83 | 510.28 | 481.85 | 389.02 | 556.06 | 473.49 | 513.53 | 578.96 |
| Extrinsic parameters | | | | | | | | |
| Rx | −0.6073 | −0.6307 | −0.7438 | −0.7053 | −0.8185 | −0.8134 | −0.8402 | −0.8358 |
| Ry | 0.7929 | 0.7758 | 0.664 | 0.7089 | 0.5744 | 0.5813 | 0.5216 | 0.5289 |
| Rz | 0.0492 | 0.0166 | −0.0767 | 0.0056 | 0.0134 | 0.0218 | −0.1483 | −0.1474 |
| tx | −67.74 | 15.25 | 51.0 | −261.34 | 46.77 | 22.49 | 79.61 | 82.05 |
| ty | 79.79 | 121.24 | 154.34 | 371.23 | 46.44 | 201.87 | 26.59 | −120.11 |
| tz | 2557.62 | 2568.86 | 2938.49 | 3193.72 | 2354.75 | 2365.91 | 2714.54 | 2535.75 |
| Distortion coefficients | | | | | | | | |
| k1 | 9.09 × 10⁻⁹ | 4.44 × 10⁻⁹ | −6.7 × 10⁻⁸ | 6.78 × 10⁻⁸ | −2.2 × 10⁻⁹ | 3.64 × 10⁻⁸ | −1.4 × 10⁻⁷ | 2.06 × 10⁻⁶ |
| k2 | −5.41 × 10⁻¹³ | −7.7 × 10⁻¹³ | 2.29 × 10⁻¹² | −2.37 × 10⁻¹² | 1.53 × 10⁻¹⁴ | −1.43 × 10⁻¹³ | 6.75 × 10⁻¹² | −1.14 × 10⁻¹⁰ |
| k3 | 5.37 × 10⁻¹⁸ | 7.33 × 10⁻¹⁸ | −1.72 × 10⁻¹⁷ | 1.13 × 10⁻¹⁷ | 1.26 × 10⁻¹⁹ | 3.51 × 10⁻¹⁹ | −6.84 × 10⁻¹⁷ | 1.47 × 10⁻¹⁵ |
| p1 | −1.91 × 10⁻⁷ | −1.08 × 10⁻⁶ | 8.39 × 10⁻⁷ | −3.58 × 10⁻⁶ | 2.69 × 10⁻⁹ | 4.44 × 10⁻⁷ | 1.27 × 10⁻⁶ | −1.4 × 10⁻⁵ |
| p2 | 5.96 × 10⁻⁸ | 1.31 × 10⁻⁷ | 1.65 × 10⁻⁷ | −1.14 × 10⁻⁶ | −1.51 × 10⁻⁷ | −7.37 × 10⁻⁶ | 2.57 × 10⁻⁷ | 1.76 × 10⁻⁵ |

| | Normalized DLT Calibration (1) [24,25] | Orthogonal Wand Triad Calibration (2) [10,11] | 3-Axis Frame and Wand Calibration (3) [2,3] | Proposed Calibration (4) | ANOVA Results | Post-Hoc Test |
|---|---|---|---|---|---|---|
| 390 mm wand (mm) | | | | | | |
| Average | 5.50 ± 1.80 | 3.45 ± 0.15 | 1.21 ± 0.17 | 0.42 ± 0.09 | F = 31.98, p = 0.00 | (1) > (2) > (3) > (4) |
| SD | 3.02 ± 0.56 | 2.06 ± 0.15 | 1.03 ± 0.23 | 0.32 ± 0.10 | F = 69.48, p = 0.00 | (1) > (2) > (3) > (4) |
| Min | 0.01 ± 0.01 | 0.01 ± 0.01 | 0.00 ± 0.00 | 0.00 ± 0.00 | F = 2.67, p = 0.08 | (1), (2), (3), (4) |
| Max | 11.89 ± 2.67 | 7.65 ± 0.51 | 3.88 ± 1.05 | 1.48 ± 0.64 | F = 46.39, p = 0.00 | (1) > (2) > (3) > (4) |
| 500 mm wand (mm) | | | | | | |
| Average | 6.73 ± 1.83 | 3.77 ± 0.44 | 1.74 ± 0.07 | 0.46 ± 0.13 | F = 42.01, p = 0.00 | (1) > (2) > (3) > (4) |
| SD | 3.77 ± 0.95 | 2.46 ± 0.27 | 1.43 ± 0.19 | 0.43 ± 0.12 | F = 39.76, p = 0.00 | (1) > (2) > (3) > (4) |
| Min | 0.36 ± 0.62 | 0.01 ± 0.01 | 0.02 ± 0.05 | 0.00 ± 0.00 | F = 1.56, p = 0.24 | (1), (2), (3), (4) |
| Max | 14.22 ± 3.27 | 9.29 ± 1.14 | 5.55 ± 0.48 | 1.82 ± 0.29 | F = 45.65, p = 0.00 | (1) > (2) > (3) > (4) |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuhai, O.; Cho, Y.; Choi, A.; Mun, J.H. Enhanced Three-Axis Frame and Wand-Based Multi-Camera Calibration Method Using Adaptive Iteratively Reweighted Least Squares and Comprehensive Error Integration. Photonics 2024, 11, 867. https://doi.org/10.3390/photonics11090867
