From Text to Hologram: Creation of High-Quality Holographic Stereograms Using Artificial Intelligence

Abstract

1. Introduction

2. Materials and Methods

2.1. Holographic Plates for CHIMERA Recordings

2.2. CHIMERA Recordings

2.3. Initial Image Generation Using Diffusion AI

2.4. Workflow for Perspective Image Generation

2.5. CHIMERA Printing

2.6. Computer Specifications

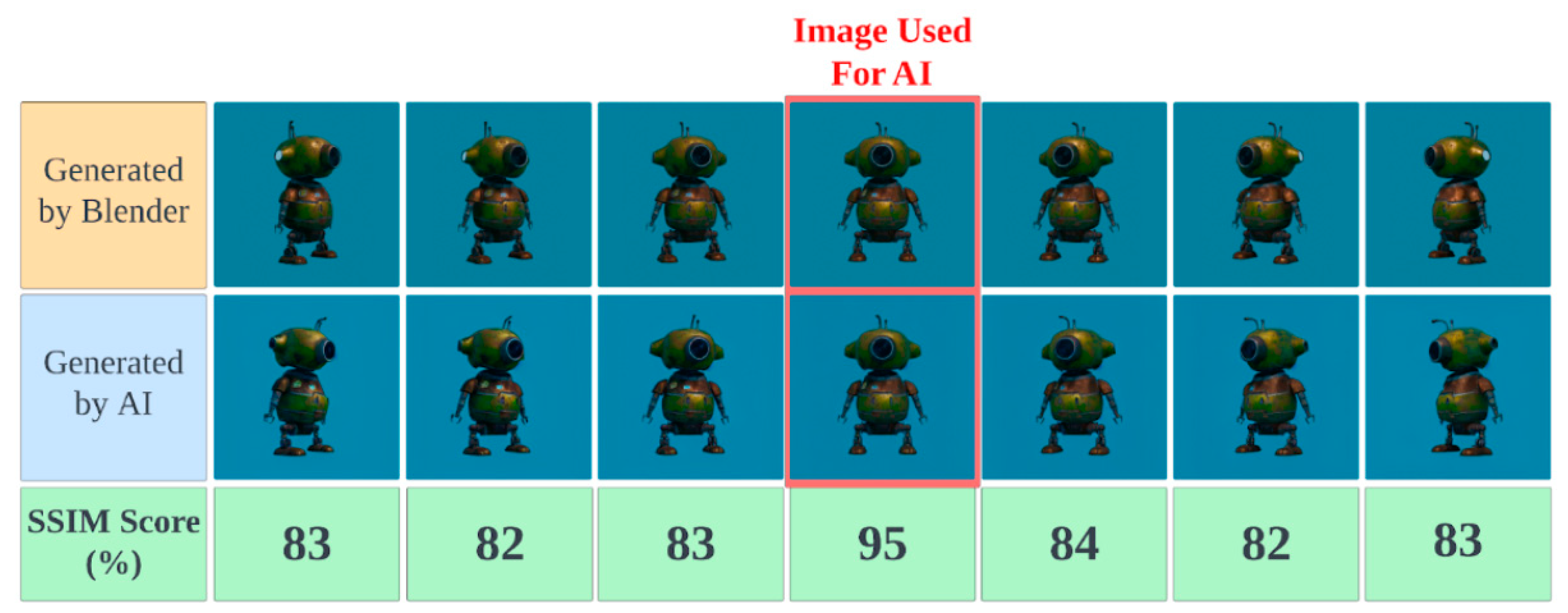

2.7. Evaluation of the Accuracy of the Reconstruction

3. Results

3.1. Testing the Viability of AI-Generated Perspective Views

3.2. Creation of the Initial Image

3.3. Perspective Image Generation and Detailed Upscaling Process

3.4. Final Holograms

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| SSIM Value | Quality | Description |
|---|---|---|
| SSIM > 90% | Very good quality | The differences are minimal and often imperceptible. |
| 80% < SSIM < 90% | Good quality | The differences are slight and may be perceptible but are not disturbing. |
| 70% < SSIM < 80% | Average quality | The differences are noticeable and can affect the visual experience. |
| 60% < SSIM < 70% | Fair quality | The differences are clearly visible and can be disturbing. |
| SSIM < 60% | Poor quality | The differences are significant, and the image is often unacceptable. |
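The quality bands in the table above can be applied programmatically. The following is a minimal sketch, not the authors' evaluation code: the function name `ssim_quality` is hypothetical, the score is assumed to be given as a fraction (0.85 rather than 85%), and because the table uses strict inequalities and leaves exact boundary values (60%, 70%, 80%, 90%) unassigned, the sketch assumes a boundary score falls into the higher band.

```python
def ssim_quality(ssim: float) -> str:
    """Map an SSIM score (as a fraction) to the quality label from the table.

    Assumption: a score exactly on a band boundary (e.g. 0.90) is placed in
    the higher band, since the table does not say where boundaries belong.
    """
    # SSIM is mathematically bounded to [-1, 1]; anything else is a caller error.
    if not -1.0 <= ssim <= 1.0:
        raise ValueError("SSIM must be a fraction between -1 and 1")

    # Lower bound of each band, checked from best to worst.
    bands = [
        (0.90, "Very good quality"),
        (0.80, "Good quality"),
        (0.70, "Average quality"),
        (0.60, "Fair quality"),
    ]
    for lower_bound, label in bands:
        if ssim >= lower_bound:
            return label
    return "Poor quality"


if __name__ == "__main__":
    for score in (0.95, 0.85, 0.72, 0.61, 0.40):
        print(f"SSIM = {score:.2f}: {ssim_quality(score)}")
```

A score reported as a percentage would need to be divided by 100 before being passed to this function.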

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gentet, P.; Coffin, M.; Gentet, Y.; Lee, S.H. From Text to Hologram: Creation of High-Quality Holographic Stereograms Using Artificial Intelligence. Photonics 2024, 11, 787. https://doi.org/10.3390/photonics11090787
