7.1. Simulation Results

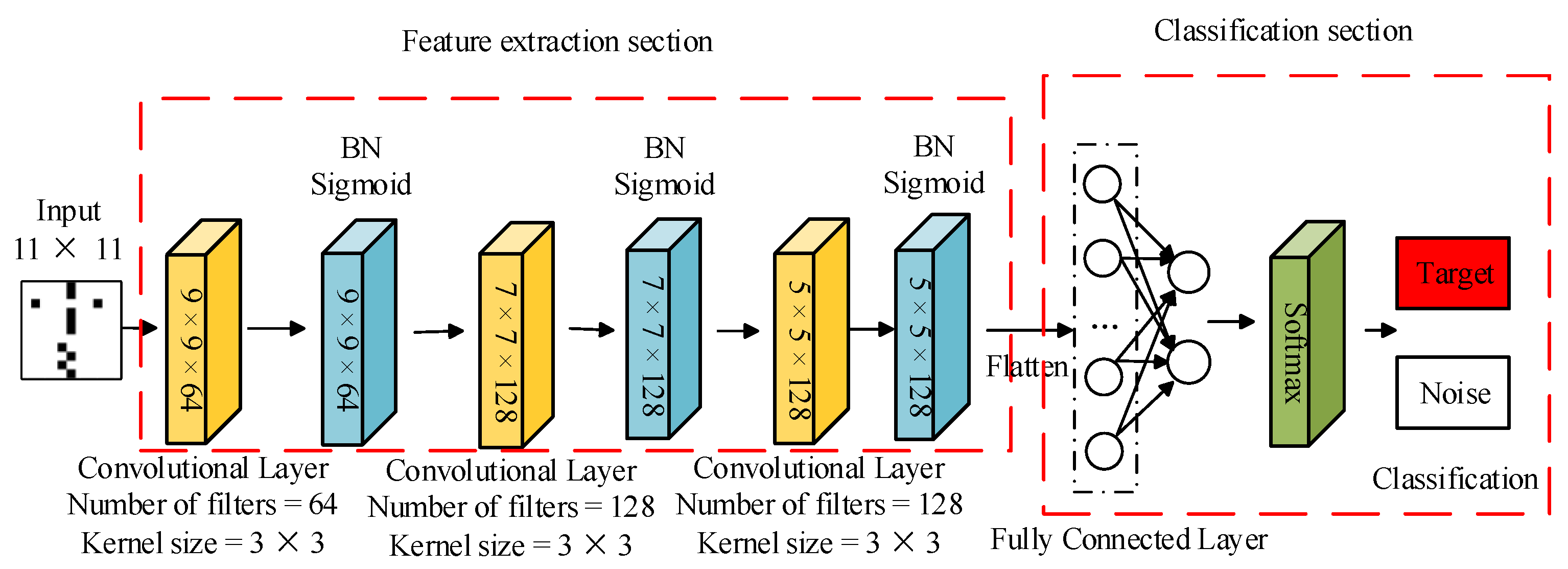

This section uses simulation experiments to analyze the target detection performance of the CNN and of the CNN-PC method.

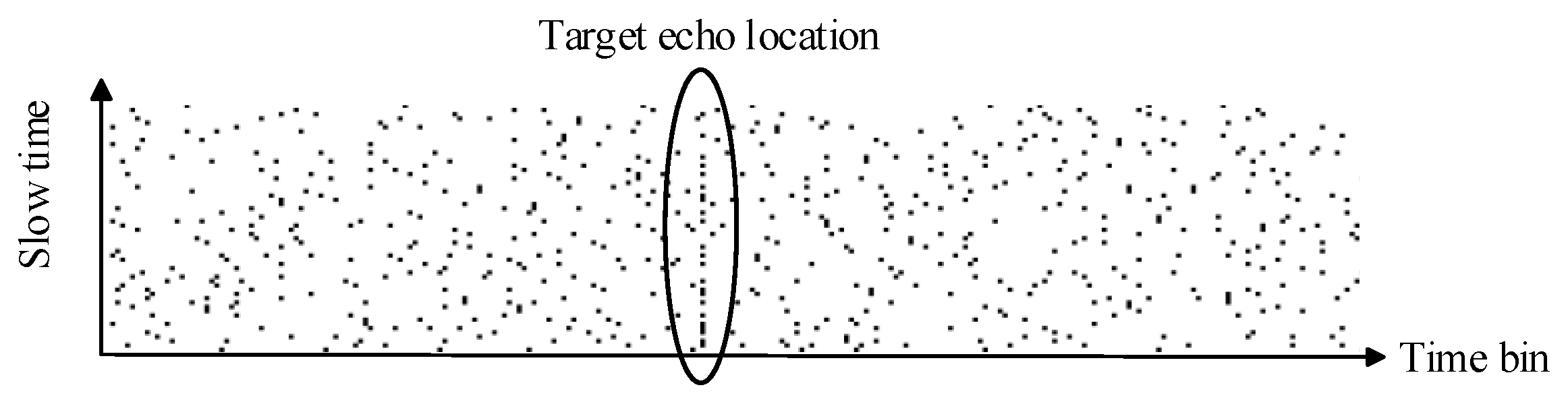

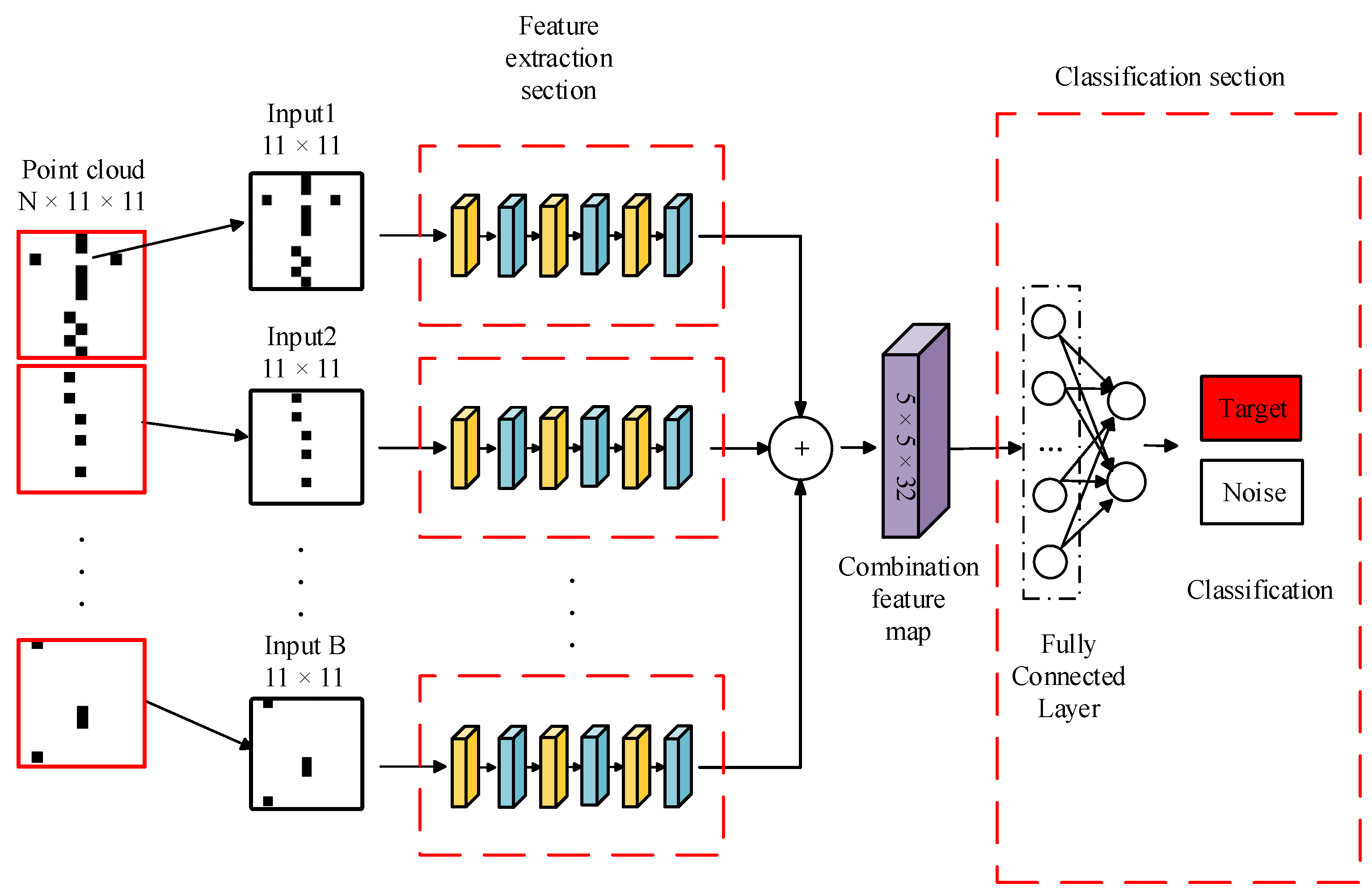

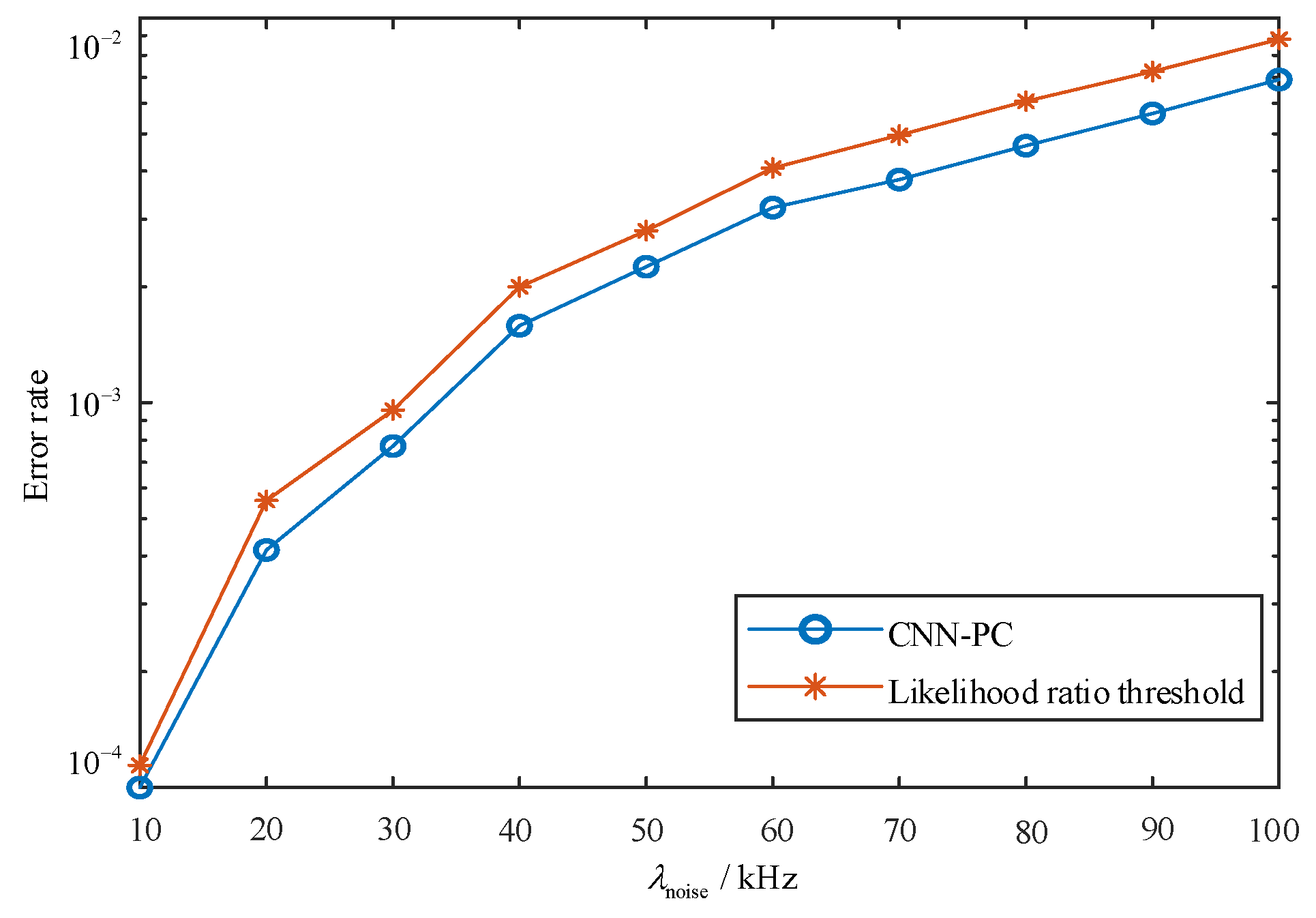

First, we analyze how the error rate of the CNN varies with the noise photon rate. The error rate is the proportion of incorrect decisions made by the CNN about whether a target is present in the detection bin of a detection slice. In surveillance applications, the prior probability that a target is present is typically low and unknown. Hence, this experiment considers only the proportion of erroneous decisions made by the CNN when no target is present in the detection bin.
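A minimal sketch of this error-rate metric, assuming the detector's decisions and the ground truth are stored as boolean arrays (the function and argument names are hypothetical). Note that slices with a target in a reference bin still have no target in the detection bin, so they count toward this error rate.

```python
import numpy as np

def false_decision_rate(predictions, target_in_detection_bin):
    """Fraction of slices without a target in the detection bin that are flagged anyway.

    predictions: True where the detector declares a target in the detection bin.
    target_in_detection_bin: ground truth for each slice (hypothetical layout).
    """
    predictions = np.asarray(predictions, dtype=bool)
    truth = np.asarray(target_in_detection_bin, dtype=bool)
    no_target = ~truth
    if not no_target.any():
        raise ValueError("no target-free slices to evaluate")
    # Only slices with an empty detection bin enter the statistic.
    return float(np.mean(predictions[no_target]))
```

For example, with decisions `[False, True, False, False, True]` and ground truth `[False, False, False, False, True]`, one of the four target-free slices is flagged, giving 0.25.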

Using the parameters specified in Section 5, we set the number of detection cycles N to 11 and the SNR to 15 dB. The noise photon rate is varied within [10 kHz, 100 kHz], and for each noise photon rate a total of 60,000 detection slices is generated: 10,000 pure-noise slices and 5000 slices for each reference-bin position of the target. These slices are processed with both the CNN and the likelihood ratio threshold method, and the resulting error rate as a function of the noise photon rate is shown in Figure 6. As Figure 6 illustrates, the error rates of both the CNN and the likelihood ratio threshold method increase with the noise photon rate, although the CNN achieves a marginally lower error rate than the likelihood ratio threshold method.
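The slices above come from the simulator of Section 5, which is not reproduced here. To illustrate how the noise photon rate enters a pure-noise slice, the following Monte Carlo sketch rests on assumptions that are not taken from the source: Poisson noise at a constant rate, a hypothetical bin width and slice length, and a detector that records at most the first photon in each detection cycle.

```python
import numpy as np

def simulate_noise_slice(noise_rate_hz, bin_width_s, n_bins, n_cycles, rng):
    """Accumulate a pure-noise TCSPC histogram over n_cycles detection cycles.

    Assumptions (hypothetical): Poisson noise with constant rate, and a
    detector that fires on the first photon and then stays blind for the
    rest of the cycle.
    """
    mean_per_bin = noise_rate_hz * bin_width_s  # mean noise photons per bin
    histogram = np.zeros(n_bins, dtype=np.int64)
    for _ in range(n_cycles):
        photons = rng.poisson(mean_per_bin, size=n_bins)
        fired = np.flatnonzero(photons > 0)
        if fired.size:
            histogram[fired[0]] += 1  # only the first photon is recorded
    return histogram
```

With, for instance, `rng = np.random.default_rng(0)`, a 10 ns bin width, 200 bins and 2000 cycles, a 100 kHz noise rate produces far more noise counts than 10 kHz, which is the mechanism behind the rising error rates in Figure 6.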

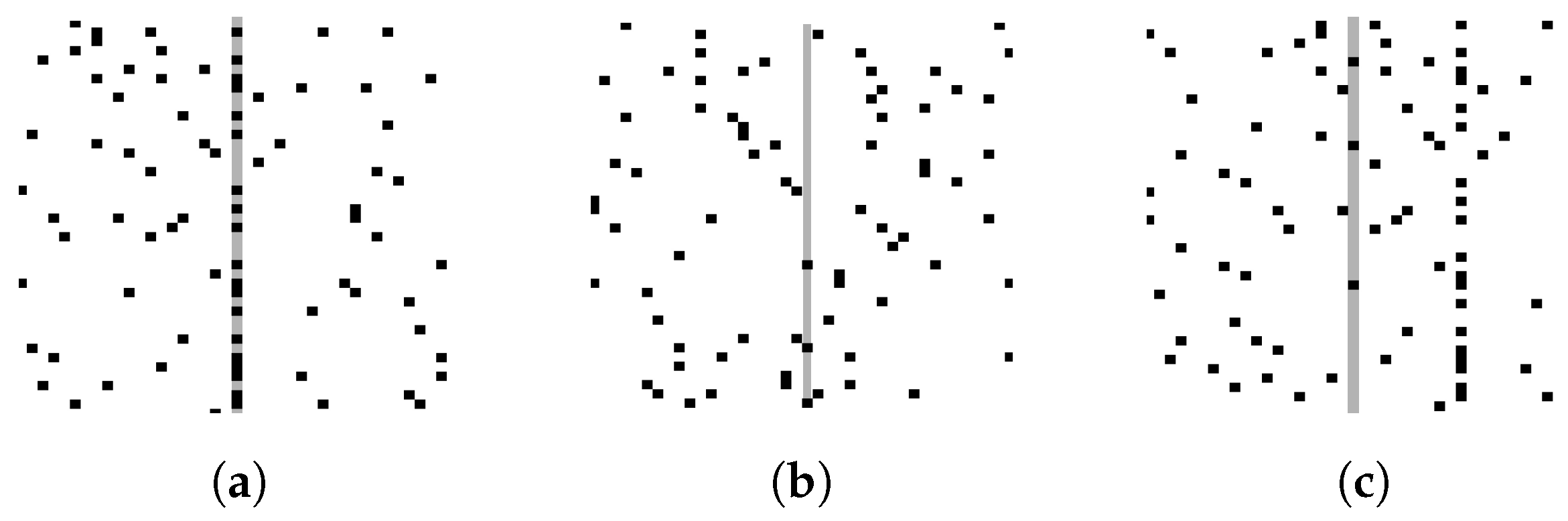

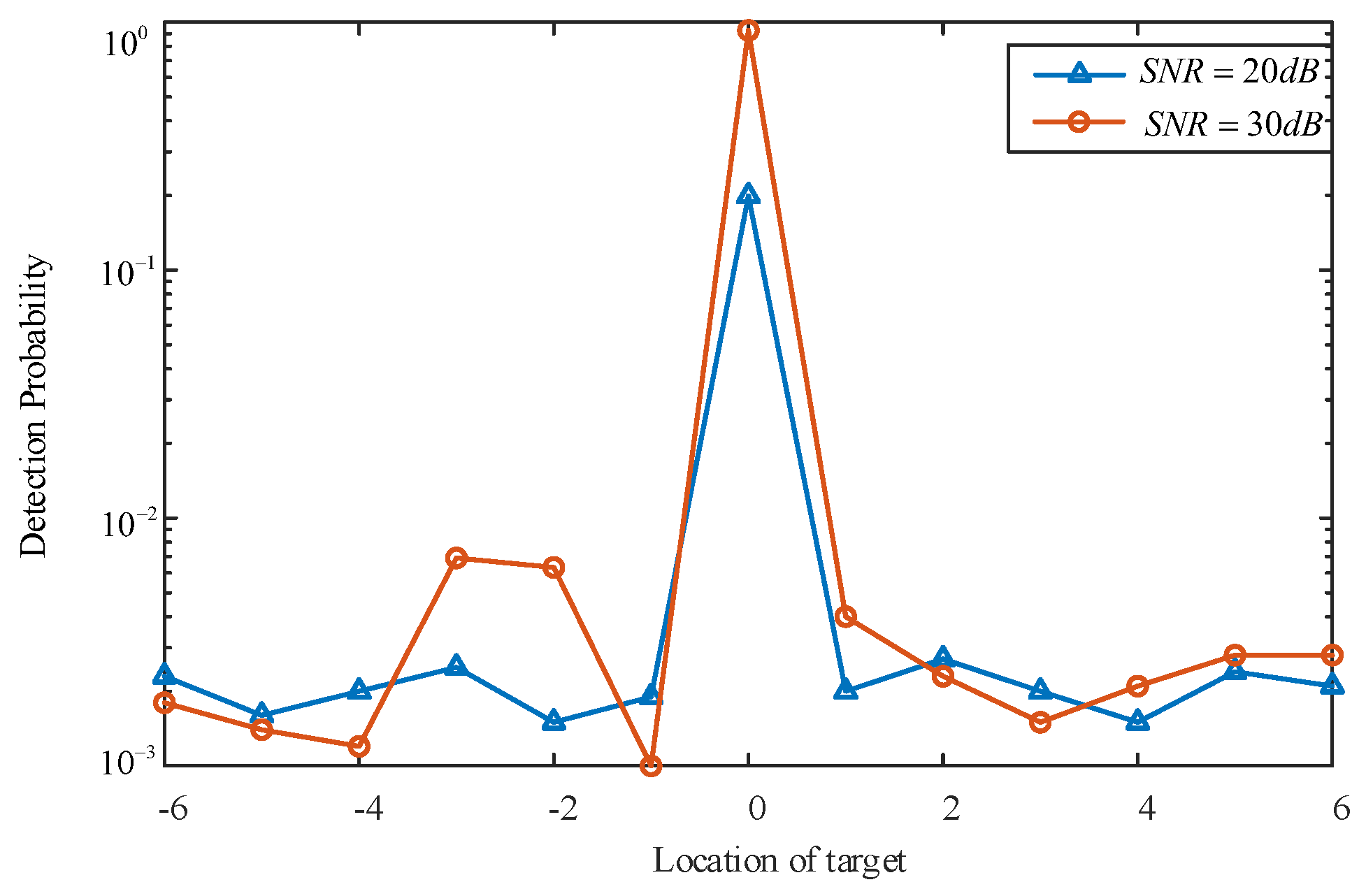

Second, we further verify that the CNN suppresses targets located in the reference bins. Using the parameters outlined in Section 5, we fix the noise photon rate at 50 kHz and collect 5000 detection slices with different SNRs by adjusting the peak photon number of the pulse. The window is then slid around the time bin containing the target, so that the target occupies different positions within the region of interest, and 5000 detection slices are generated for each position. The region of interest is the zone centered on the detection bin and flanked by the protection bins and the reference bins. The CNN trained in Section 5 is then used to process these slices, and the resulting detection probability is shown in Figure 7. In Figure 7, each value on the horizontal axis is the time bin index of the target relative to the detection bin, where 0 indicates that the target lies in the detection bin itself. This experiment also considers cases in which the target falls into the protection bins, denoted by . The range from to corresponds to the different reference bins. As Figure 7 shows, at every SNR the target is effectively detected only when it lies in the detection bin; when it lies in a reference or protection bin, the detection probability is extremely low. This outcome agrees with the original design objective of the CNN.
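The relative bin offsets on the horizontal axis of Figure 7 can be made concrete with a small helper. This is a sketch only: it assumes a symmetric window with `n_guard` protection bins and `n_ref` reference bins on each side of the detection bin, and both counts and all names are hypothetical. Sliding the window so that the detection bin sits at `target_bin - k` places the target at relative offset `k`.

```python
import numpy as np

def extract_region_of_interest(histogram, detection_idx, n_guard, n_ref):
    """Return (offsets, counts, labels) for the window centred on detection_idx.

    Assumed layout per side: n_guard protection bins adjacent to the
    detection bin, then n_ref reference bins further out.
    """
    half = n_guard + n_ref
    offsets = np.arange(-half, half + 1)
    idx = detection_idx + offsets
    if idx[0] < 0 or idx[-1] >= len(histogram):
        raise IndexError("window extends beyond the histogram")
    labels = np.where(offsets == 0, "detection",
                      np.where(np.abs(offsets) <= n_guard, "protection", "reference"))
    return offsets, np.asarray(histogram)[idx], labels
```

For example, `extract_region_of_interest(np.arange(20), 10, 1, 3)` returns offsets from -4 to 4, the counts of bins 6 to 14, and labels marking offset 0 as the detection bin and offsets of ±1 as protection bins.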

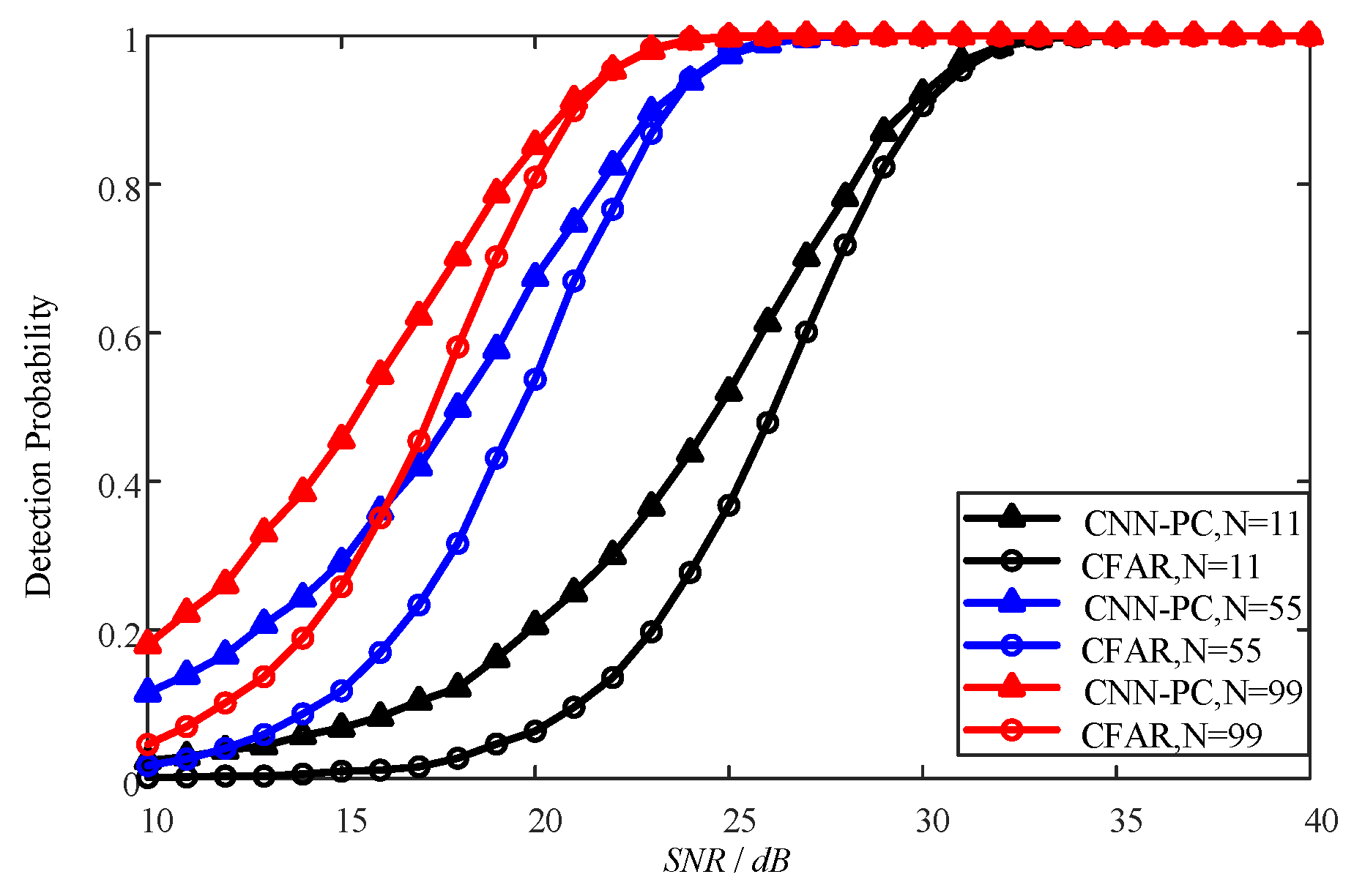

Next, the detection performance of the CNN-PC method is compared with that of the classical CFAR method. The parameters given in Section 5 are again used, except that the noise photon rate is fixed at 50 kHz. By varying the peak photon number of the pulse and the number of detection cycles N according to Algorithm 1, we obtain 5000 detection slices with different SNRs.

The CNN-PC method and the CFAR method, the latter with a false alarm rate of , are then used for target detection.
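The section does not specify which CFAR variant is used. For orientation only, here is a sketch of textbook cell-averaging CFAR (CA-CFAR) applied to a 1-D TCSPC histogram, with the protection bins acting as guard cells. The threshold factor is the standard value for exponentially distributed noise and is only an approximation for photon-count data; all names are hypothetical.

```python
import numpy as np

def ca_cfar(histogram, n_guard, n_ref, pfa):
    """Cell-averaging CFAR over a 1-D histogram.

    For each cell under test, the noise level is the mean of n_ref reference
    cells on each side, skipping n_guard guard (protection) cells. Cells too
    close to the edges are never declared detections.
    """
    x = np.asarray(histogram, dtype=float)
    n_total = 2 * n_ref
    # Textbook CA-CFAR factor for exponential noise (an assumption here).
    alpha = n_total * (pfa ** (-1.0 / n_total) - 1.0)
    detections = np.zeros(x.size, dtype=bool)
    half = n_guard + n_ref
    for i in range(half, x.size - half):
        left = x[i - half : i - n_guard]            # n_ref cells
        right = x[i + n_guard + 1 : i + half + 1]   # n_ref cells
        noise = np.concatenate((left, right)).mean()
        detections[i] = x[i] > alpha * noise
    return detections
```

On a flat histogram of 10 counts per bin with a single bin of 200 counts, `ca_cfar(hist, 1, 8, 1e-3)` flags only the strong bin, because its neighbours see an inflated noise estimate or fall below the threshold.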

Figure 8 shows the detection probabilities achieved by the two methods as a function of SNR and of the number of detection cycles N. As Figure 8 shows, the detection probabilities of both the CNN-PC method and the CFAR method increase with SNR, and at the same SNR the CNN-PC method achieves a higher detection probability. A larger number of detection cycles yields better detection performance at low SNRs; equivalently, for a given detection probability, a larger number of detection cycles allows that probability to be reached at a lower SNR. However, for both the CNN-PC and CFAR methods, the gain in detection probability from additional detection cycles gradually diminishes as N increases.

Next, we analyze the detection performance of the CNN-PC method for targets whose echo pulses straddle time-bin boundaries. To relate the target echo pulse to its position within a time bin, we define the detection time difference as the difference between the center moment of the echo pulse and the center of the corresponding time bin. A detection time difference of 0 ns means that the echo pulse is centered in its time bin. The parameters given in Section 5 are again used, except that the noise photon rate is set to 50 kHz and the number of detection cycles N is fixed at 44. Varying the peak photon number of the pulse yields detection slices with different SNRs for a target within the detection bin. Specifically, setting the target distance to , and respectively yields detection time differences of , and . For each combination of detection time difference and SNR, 5000 slices are generated and processed with both the CNN-PC method and the CFAR method. The percentage of slices in which the target is detected is computed, and the resulting detection probability as a function of SNR is shown in Figure 9.

As Figure 9 shows, when the target echo pulse is shifted forward relative to the detection bin (e.g., ), the detection probability no longer approaches 100% as the SNR increases; instead, it peaks at a certain SNR and then declines, as seen at 40 dB. Although the CNN-PC method still outperforms the CFAR method at the same SNR, both methods show a rapid drop in detection probability once the SNR exceeds a certain threshold. Because both methods are affected in the same way, this behavior is not attributable to either detection algorithm; rather, it stems from the inherent characteristics of the single-photon lidar system.
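The detection time difference can be computed from a target distance once the bin width and the start time of bin 0 are fixed. The sketch below assumes the echo centre arrives at the round-trip time 2R/c and that the "corresponding time bin" is the bin containing that centre; the function name and the `t0_s` offset are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def detection_time_difference(distance_m, bin_width_s, t0_s=0.0):
    """Return (bin_index, delta_t) for an echo from a target at distance_m.

    delta_t is the echo centre time minus the centre of the time bin that
    contains it, so it lies in [-bin_width/2, bin_width/2).
    t0_s is the assumed start time of bin 0.
    """
    t_echo = 2.0 * distance_m / C - t0_s
    k = math.floor(t_echo / bin_width_s)
    delta_t = t_echo - (k + 0.5) * bin_width_s
    return k, delta_t
```

Under these assumptions, a negative `delta_t` places the echo early in its bin, and `delta_t = -bin_width/2` puts a symmetric pulse exactly on the boundary with the preceding bin, so its energy is shared equally between the two bins, which is the situation examined next.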

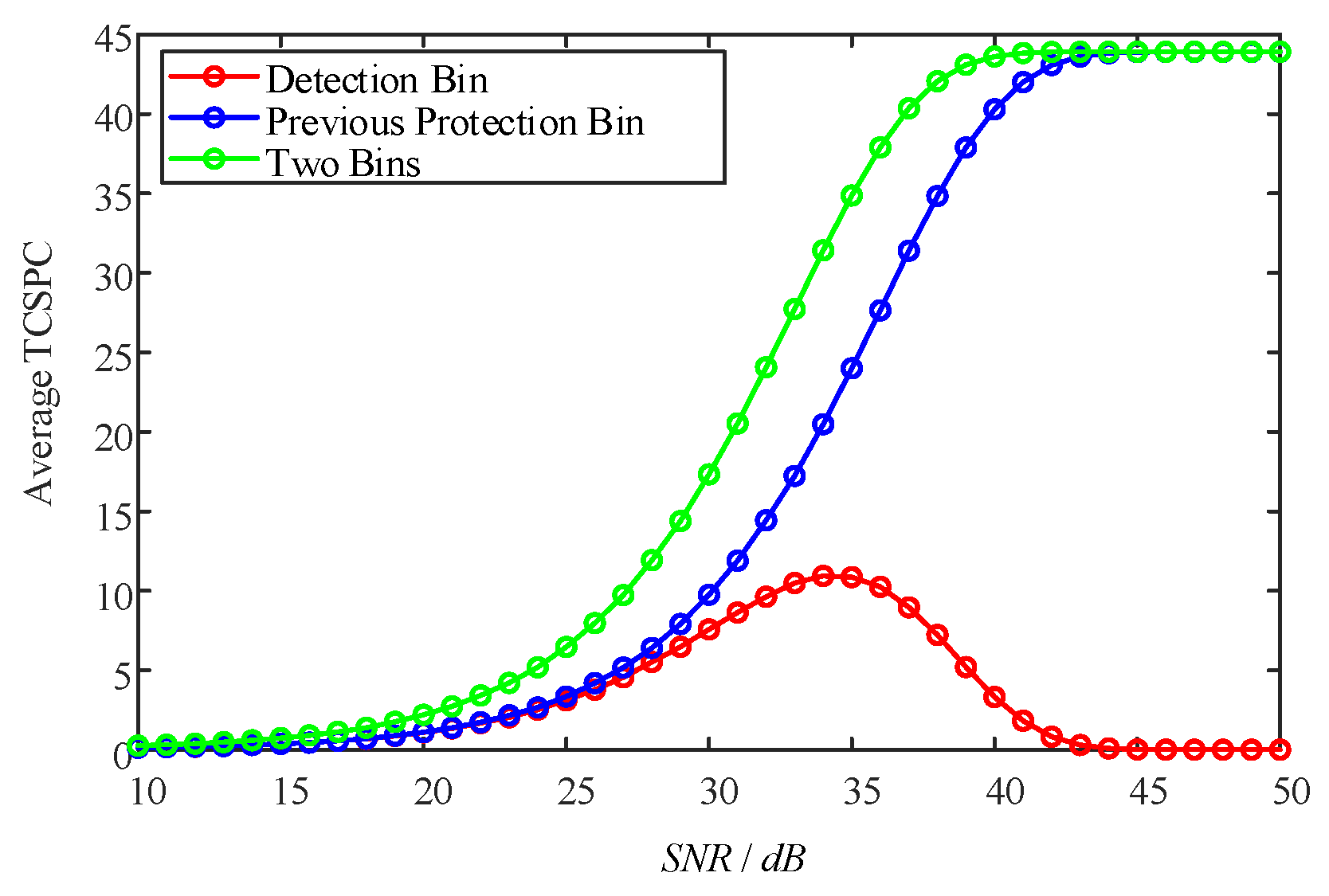

To analyze why the detection probability drops rapidly beyond a certain SNR when the target echo pulse is shifted forward relative to the detection bin, as shown in Figure 9, we computed the average TCSPC results of the detection bin and of the protection bin immediately preceding it, with N = 44 and , as illustrated in Figure 10. At a fixed noise level, a higher SNR corresponds to a higher target echo energy. For the target echo with , the echo energy in the detection bin equals that in the protection bin in front of it, so the average photon rate of the target is the same in both bins. As Figure 10 shows, at low SNRs (SNR < 25 dB) the mean TCSPC results of the two bins are nearly equal. As the SNR increases, however, they gradually diverge, with the protection bin showing higher values than the detection bin. Beyond 35 dB, the average TCSPC result of the protection bin continues to rise while that of the detection bin falls, even though the sum of the two keeps increasing. This indicates that the total target response in the TCSPC results still grows as expected, but the share recorded in the detection bin decreases. The average TCSPC result of the detection bin therefore depends not only on the average photon rate of the target but also on the shielding effect of trigger events in the protection bin: once the detector is triggered in the protection bin, it cannot respond to the detection bin in the same detection cycle. At low SNR this shielding effect is negligible, but as the SNR increases the average TCSPC result of the protection bin grows markedly, making the shielding of the detection bin more pronounced. This weakens the response of the detection bin to trigger events and lowers the target detection probability.
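The shielding effect can be reproduced qualitatively with the standard first-photon model. This is a sketch under assumptions not stated in the source: Poisson photon arrivals per bin, a detector that fires at most once per cycle, and no photons in earlier bins. Multiplying these per-cycle probabilities by N gives expected TCSPC counts.

```python
import math

def first_photon_trigger_probs(bin_means):
    """Per-cycle probability that the first detection lands in each bin.

    bin_means[k] is the mean number of photons (signal + noise) in bin k
    during one cycle. The detector triggers in bin k only if bins 0..k-1
    produced no trigger and bin k received at least one photon.
    """
    probs = []
    survive = 1.0  # probability that no earlier bin has fired
    for mean in bin_means:
        p_fire = 1.0 - math.exp(-mean)
        probs.append(survive * p_fire)
        survive *= 1.0 - p_fire
    return probs
```

With a noise mean of 0.01 photons per bin and a signal mean `s` split equally between the protection bin and the detection bin, `first_photon_trigger_probs([0.01 + s/2, 0.01 + s/2])` shows the detection-bin probability rising from `s = 0.1` to `s = 1` and then collapsing by `s = 20`, while the protection-bin probability and the sum of the two keep increasing, mirroring the trend in Figure 10.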

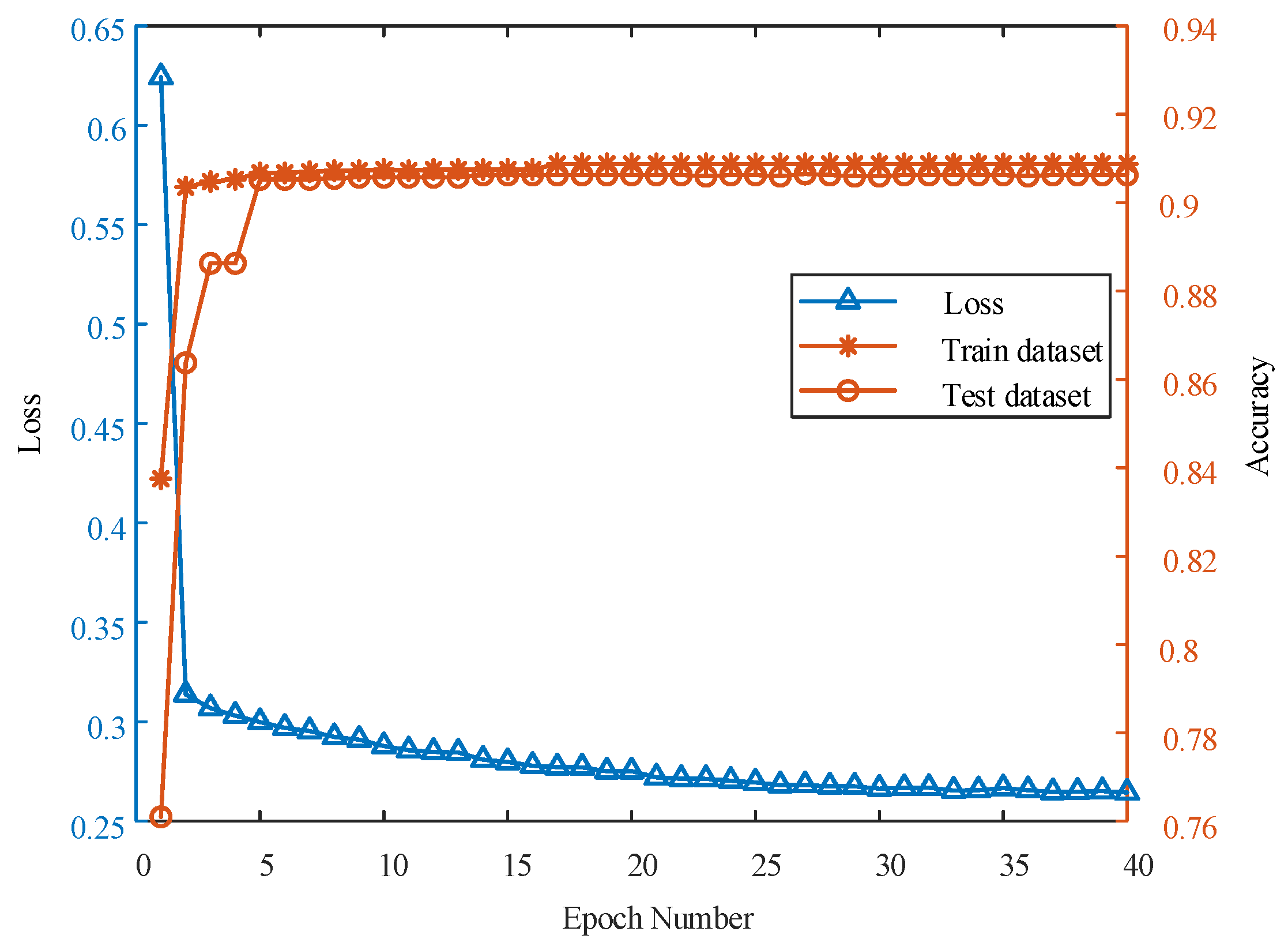

Finally, we discuss the error rate of the CNN-PC method. As mentioned earlier, the prior probability that a monitored target is present is typically very low and unknown. Therefore, the proportion of erroneous decisions on detection slices without a target in the detection bin approximates the error rate of the CNN-PC method. Using the parameters specified in Section 5, we set the noise photon rate to 50 kHz and the SNR to 15 dB. For each number of detection cycles N, we generated 10,000 pure-noise detection slices together with 5000 detection slices for each reference-bin position of the target, giving a total of 60,000 detection slices per value of N. Both the CNN-PC method and the likelihood ratio threshold method were applied to these slices, and the error rate of each method was computed as a function of N. The results are shown in Figure 11. As Figure 11 shows, the error rates of both methods increase with the number of detection cycles N and gradually converge as N grows. Although the error rate increases with N, it remains low throughout and has minimal impact on the performance of the lidar system.

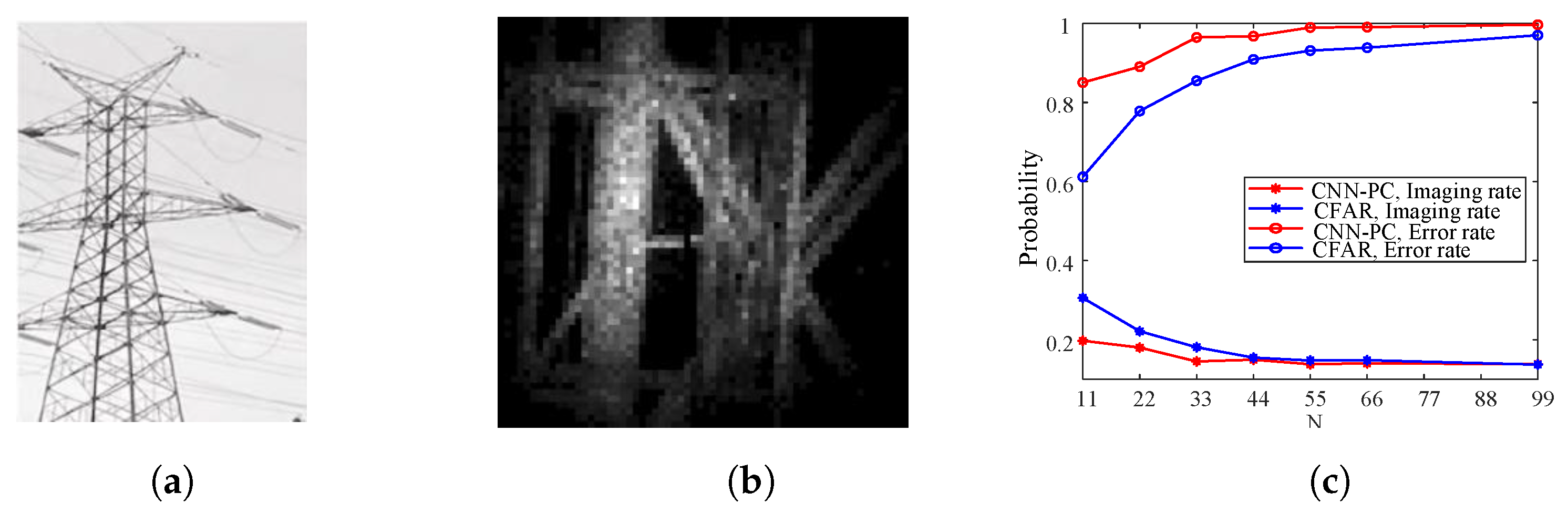

7.2. Experimental Results

In this section, real data collected by a single-photon lidar are used to validate the detection performance of the CNN-PC method. The single-photon lidar in the experiment employs a Gm-APD array, a parabolic laser pulse with a pulse repetition frequency of 20 kHz and a pulse duration of , and a time bin width of . Operating in a fixed gaze mode, it uses a single floodlight beam to detect a power line tower target located approximately above it.

Figure 12a shows the optical image of the target, and Figure 12b shows the result obtained by applying a fixed threshold of 50 to the TCSPC results accumulated over 1980 detection cycles. From the imaging result in Figure 12b, the average TCSPC result of the pixels in which no target is detected is taken as the noise intensity, the TCSPC result of the brightest pixel is taken as the maximum target intensity, and the fixed threshold is taken as the minimum target intensity. On this basis, the experimental SNR is estimated to vary within a range of .
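The SNR estimate above can be sketched as follows. The SNR definition used earlier in the paper is not repeated in this section, so the sketch assumes SNR in dB is 10·log10 of the target-to-noise intensity ratio and that pixels at or above the threshold are the target pixels; both assumptions, and the function name, are hypothetical.

```python
import numpy as np

def snr_range_db(tcspc_image, threshold):
    """Estimate (min, max) SNR in dB from a per-pixel TCSPC result image.

    Assumed: SNR_dB = 10*log10(target intensity / noise intensity), with
    noise intensity = mean of pixels below the threshold, maximum target
    intensity = brightest pixel, minimum target intensity = the threshold.
    """
    img = np.asarray(tcspc_image, dtype=float)
    target_mask = img >= threshold
    if target_mask.all():
        raise ValueError("no target-free pixels to estimate the noise intensity")
    noise = img[~target_mask].mean()
    if noise <= 0:
        raise ValueError("noise intensity must be positive")
    snr_min = 10.0 * np.log10(threshold / noise)
    snr_max = 10.0 * np.log10(img.max() / noise)
    return float(snr_min), float(snr_max)
```

For a toy image `[[5, 5], [50, 500]]` with threshold 50, the noise intensity is 5 and the sketch returns a range of 10 dB to 20 dB.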

The detection performance of the CNN-PC method at different numbers of detection cycles is evaluated by treating the pixels shown in Figure 12b as valid pixels. The imaging rate is defined as the ratio of the number of valid pixels in the imaging result to the total number of valid pixels in Figure 12b. Because this experiment evaluates the CNN-PC method on imaging results from real data, its error rate is defined as the proportion of erroneously detected pixels among all pixels in the image, where an erroneously detected pixel is one whose validity in the imaging result differs from that of the corresponding pixel in Figure 12b. Figure 12c shows how the imaging rates and error rates of the CNN-PC and CFAR methods vary with the number of detection cycles; the false alarm rate of the CFAR method is held constant at .
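Both metrics reduce to simple operations on boolean pixel masks. In this sketch, "valid pixels in the imaging result" is read as pixels that are valid in Figure 12b and also detected in the result, which is an interpretation; the function name is hypothetical, and the reference mask would come from thresholding the 1980-cycle TCSPC results at 50.

```python
import numpy as np

def imaging_and_error_rate(result_mask, reference_mask):
    """Compare a detection image with the reference image (both boolean).

    imaging rate: reference-valid pixels that are also detected in the
                  result, divided by the number of reference-valid pixels.
    error rate:   pixels whose validity differs from the reference,
                  divided by the total number of pixels.
    """
    result = np.asarray(result_mask, dtype=bool)
    reference = np.asarray(reference_mask, dtype=bool)
    if result.shape != reference.shape:
        raise ValueError("masks must have the same shape")
    imaging_rate = np.logical_and(result, reference).sum() / reference.sum()
    error_rate = np.mean(result != reference)
    return float(imaging_rate), float(error_rate)
```

Counting mismatches in both directions means that missed target pixels and false detections on the background both raise the error rate, while only missed target pixels lower the imaging rate.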

As Figure 12c shows, the CNN-PC method achieves an imaging rate of approximately when N = 11, significantly higher than that of the CFAR method, and its error rate at this point is around 20%, notably lower than that of the CFAR method. As the number of detection cycles increases, the imaging rates of both methods rise and their error rates gradually fall, so the gap between the two methods narrows. The CNN-PC method achieves a near-perfect imaging rate with an error rate of approximately 13% once the number of detection cycles exceeds 55, whereas at N = 99 the CFAR method attains an imaging rate of about 95% with an error rate of about 13%. Thus, for the same number of detection cycles, the CNN-PC method provides a higher imaging rate and a lower error rate, and it requires fewer detection cycles to reach a given imaging rate or error rate. It is therefore better suited to scenarios in which the number of detection cycles is limited.

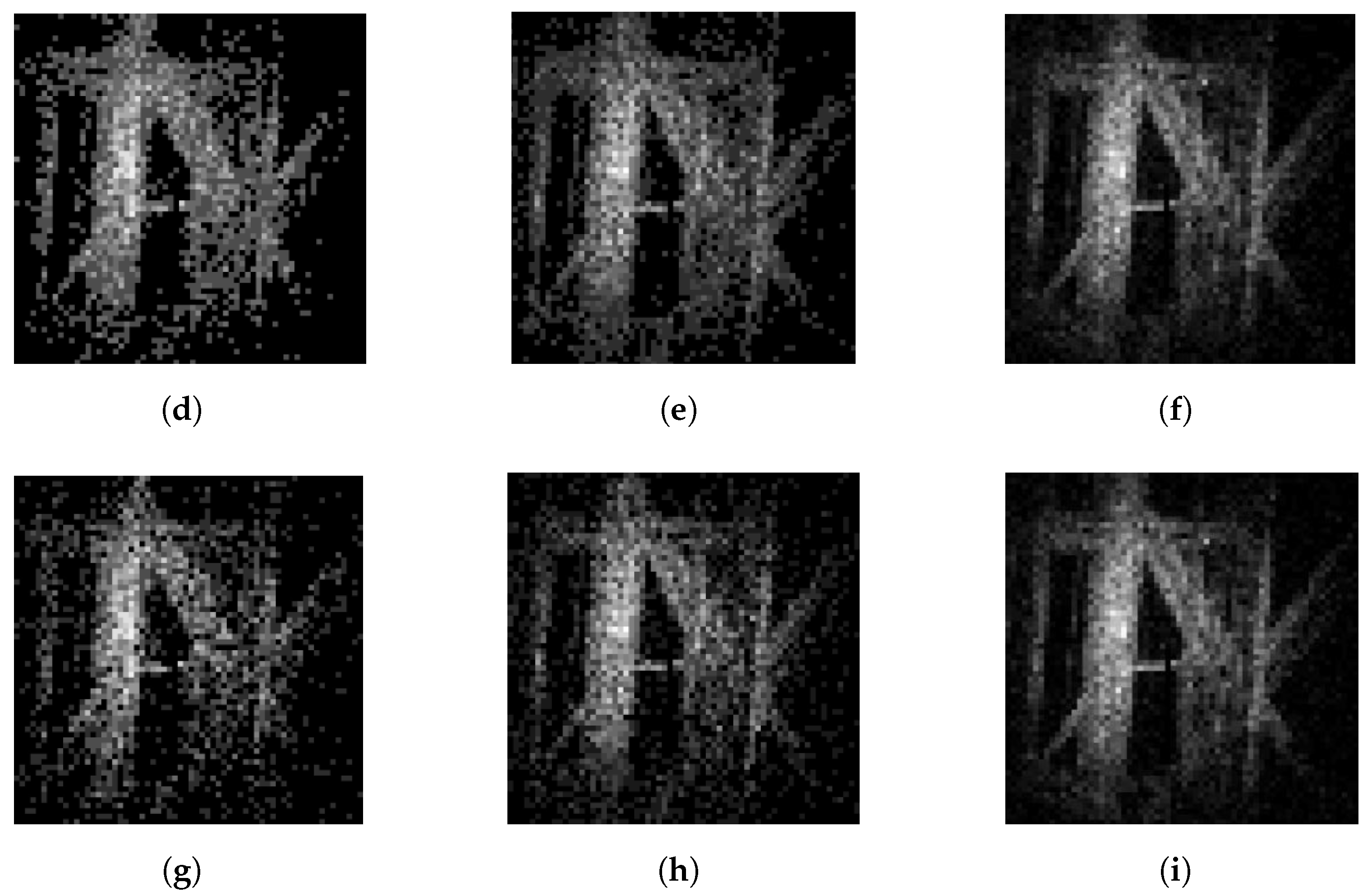

Figure 12d–i presents the imaging results of the CNN-PC method and the CFAR method for , , and . Comparing Figure 12d–f shows that, as the number of detection cycles increases, the CNN-PC method captures more valid pixels of the target, producing a smoother and clearer image; the CFAR results in Figure 12g–i lead to the same conclusion. Comparing Figure 12d,g shows that at the CNN-PC method detects more pixels than the CFAR method. As Figure 12f,i shows, at the CNN-PC method still improves the imaging quality relative to the CFAR method, but the difference is less pronounced. Therefore, the CNN-PC method offers its greatest advantage when the number of detection cycles is small.