Deep Learning and Adjoint Method Accelerated Inverse Design in Photonics: A Review

Abstract

1. Introduction

2. AM for Inverse Design

2.1. AM

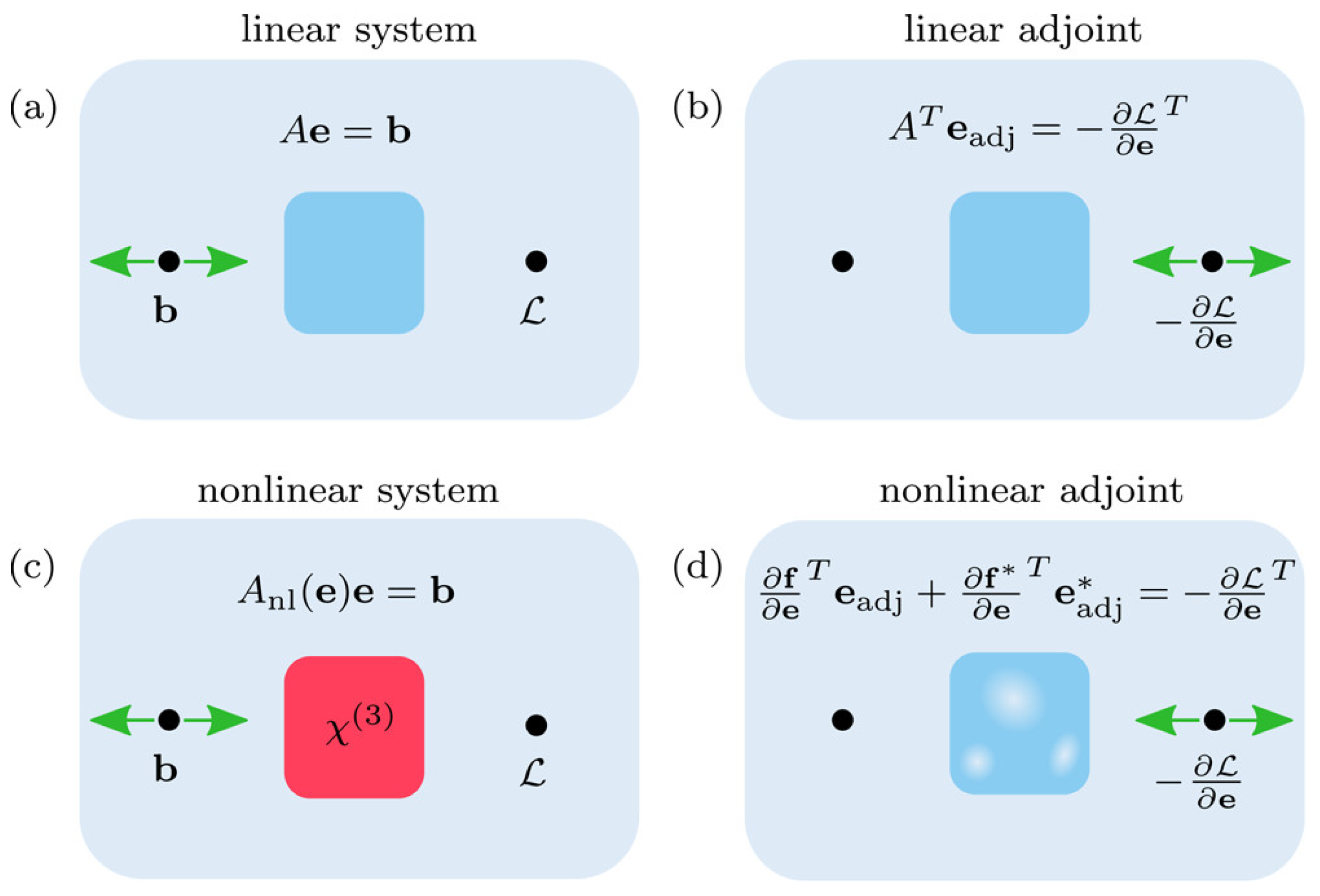

2.1.1. Linear AM

2.1.2. Nonlinear AM

2.2. Application of AM in Photonic Inverse Design

2.3. Limitations of AM in Photonic Inverse Design

3. DL for Photonic Inverse Design

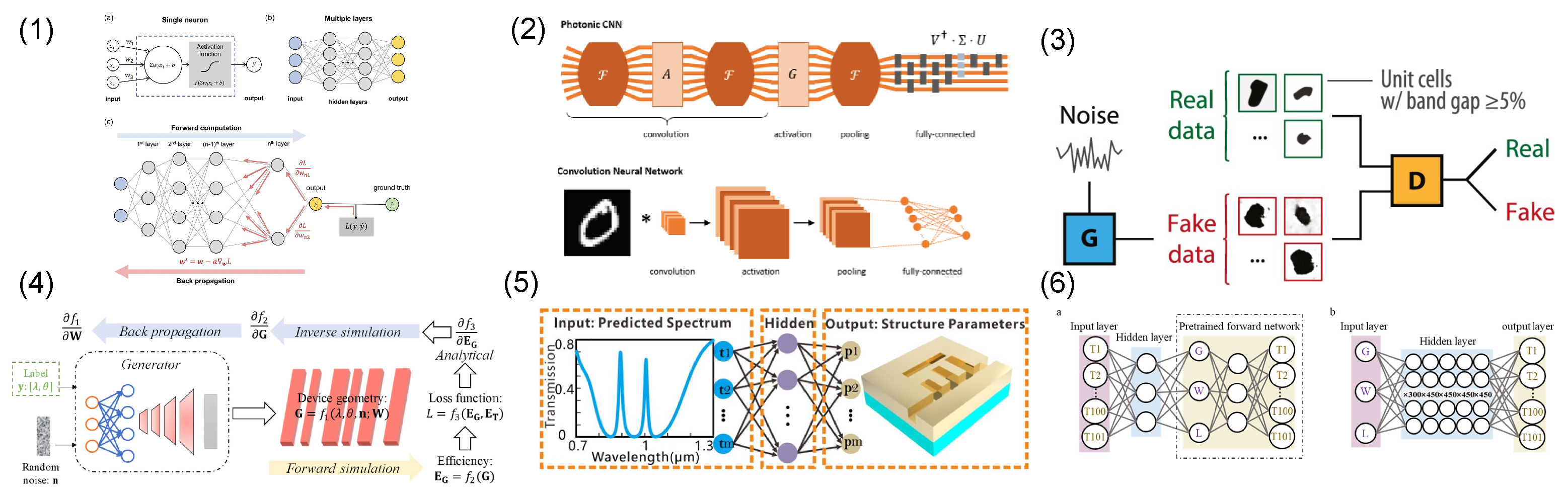

3.1. DL Networks Applied to Photonic Inverse Design

3.1.1. Fully Connected Networks (FCNs)

3.1.2. Convolutional Neural Networks (CNNs)

3.1.3. Recurrent Neural Networks (RNNs)

3.1.4. Deep Neural Networks (DNNs)

3.1.5. Deep Generative Models

3.2. Limitations of DL in Photonic Inverse Design

3.2.1. Degrees of Freedom

3.2.2. Training Dataset

3.2.3. Nonuniqueness Problem

3.2.4. Local Minimum

3.2.5. Generalization Ability

3.2.6. Problem of Rerunning

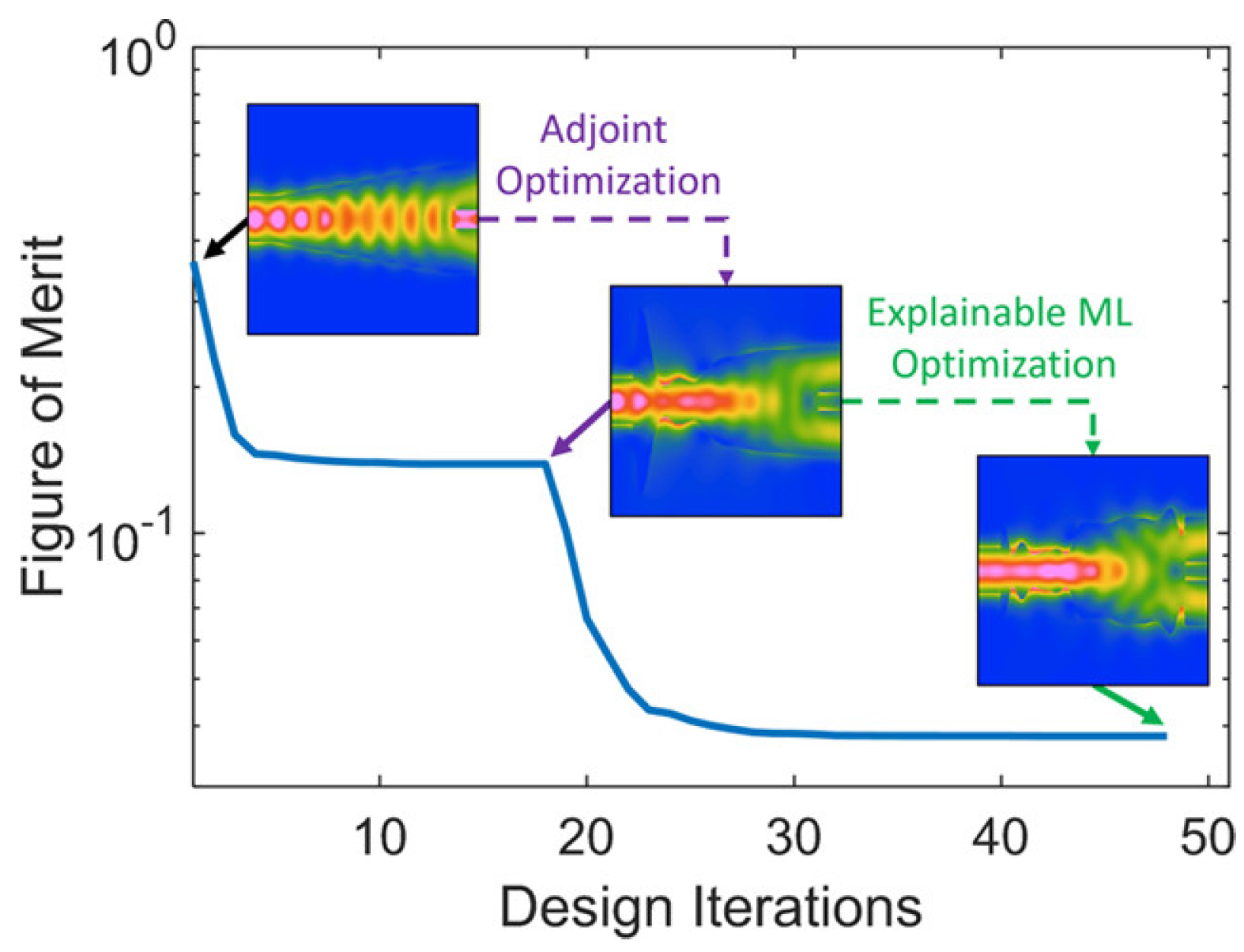

4. Hybridization of AM and DL for Inverse Design

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pan, Z.; Pan, X. Deep Learning and Adjoint Method Accelerated Inverse Design in Photonics: A Review. Photonics 2023, 10, 852. https://doi.org/10.3390/photonics10070852
