Isotropic Two-Dimensional Differentiation Based on Dual Dynamic Volume Holograms

Abstract

1. Introduction

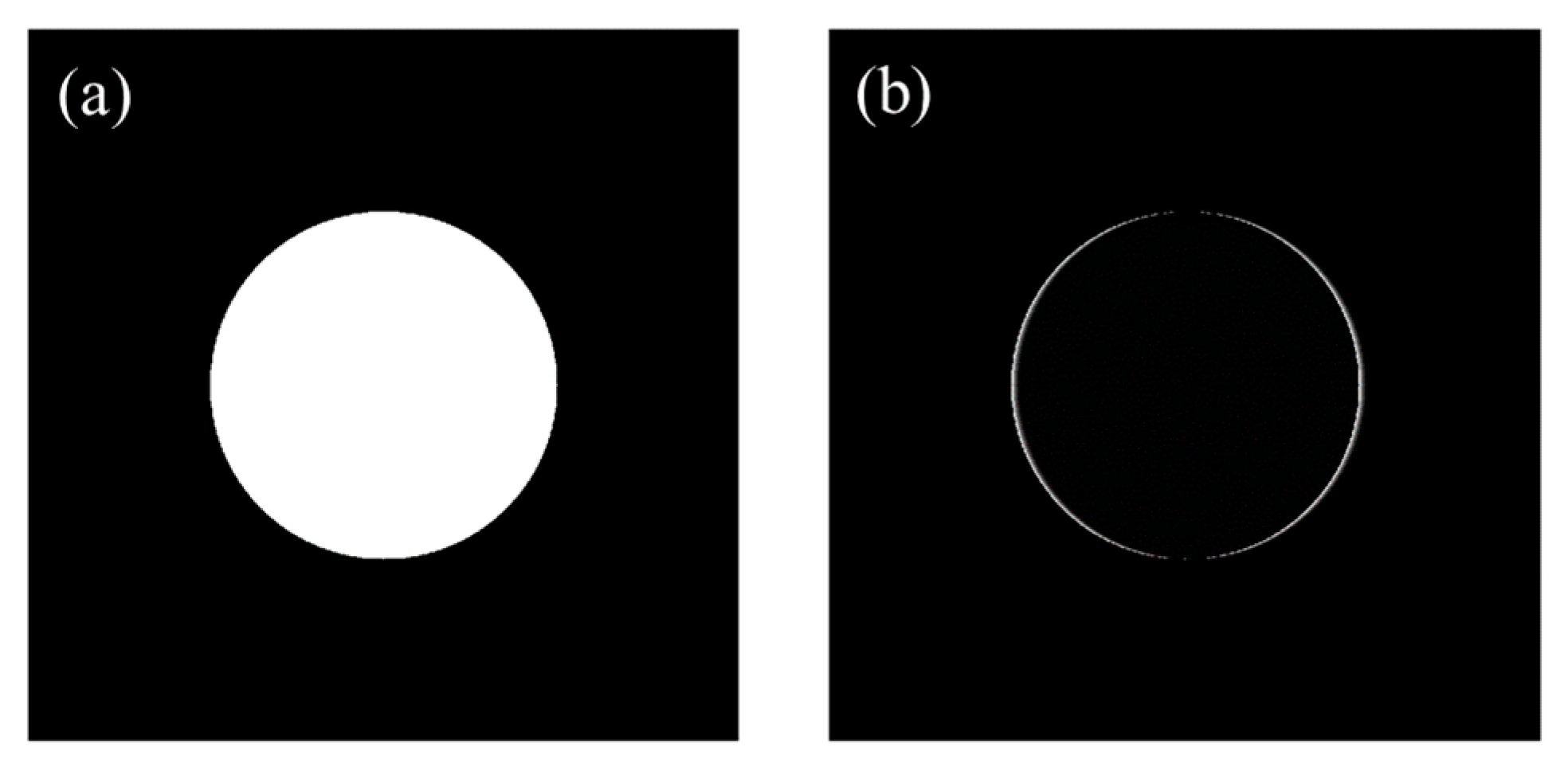

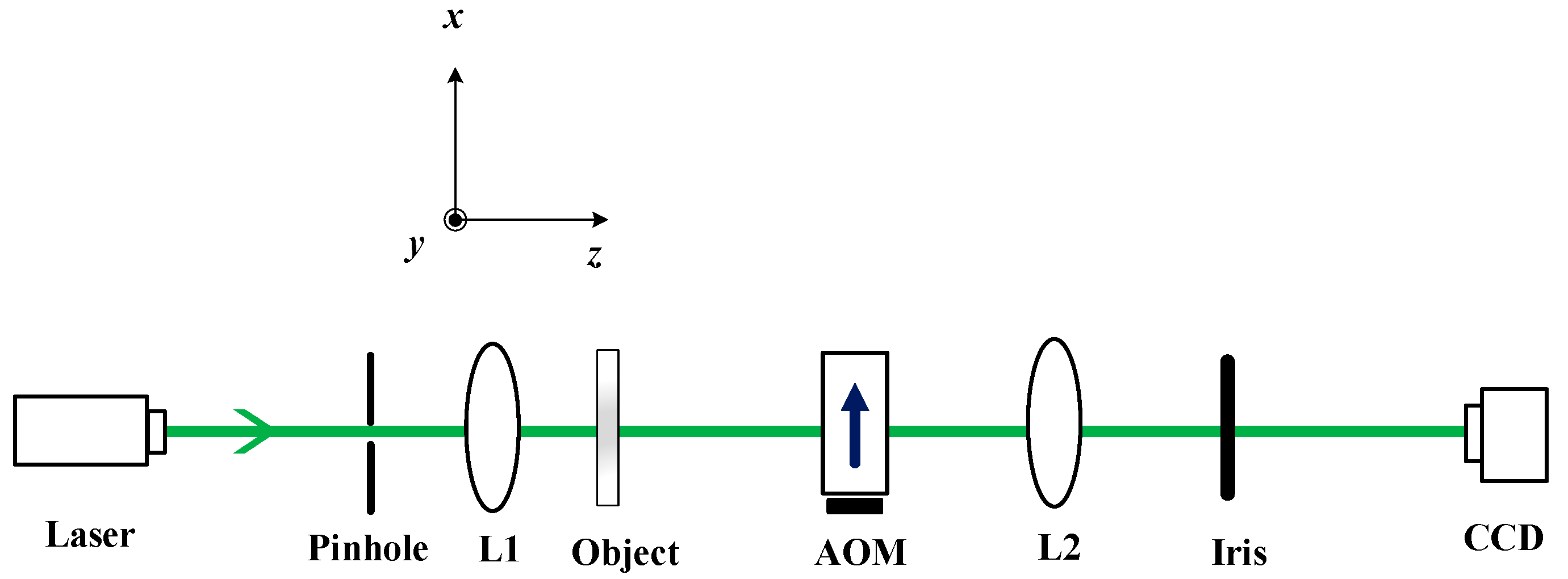

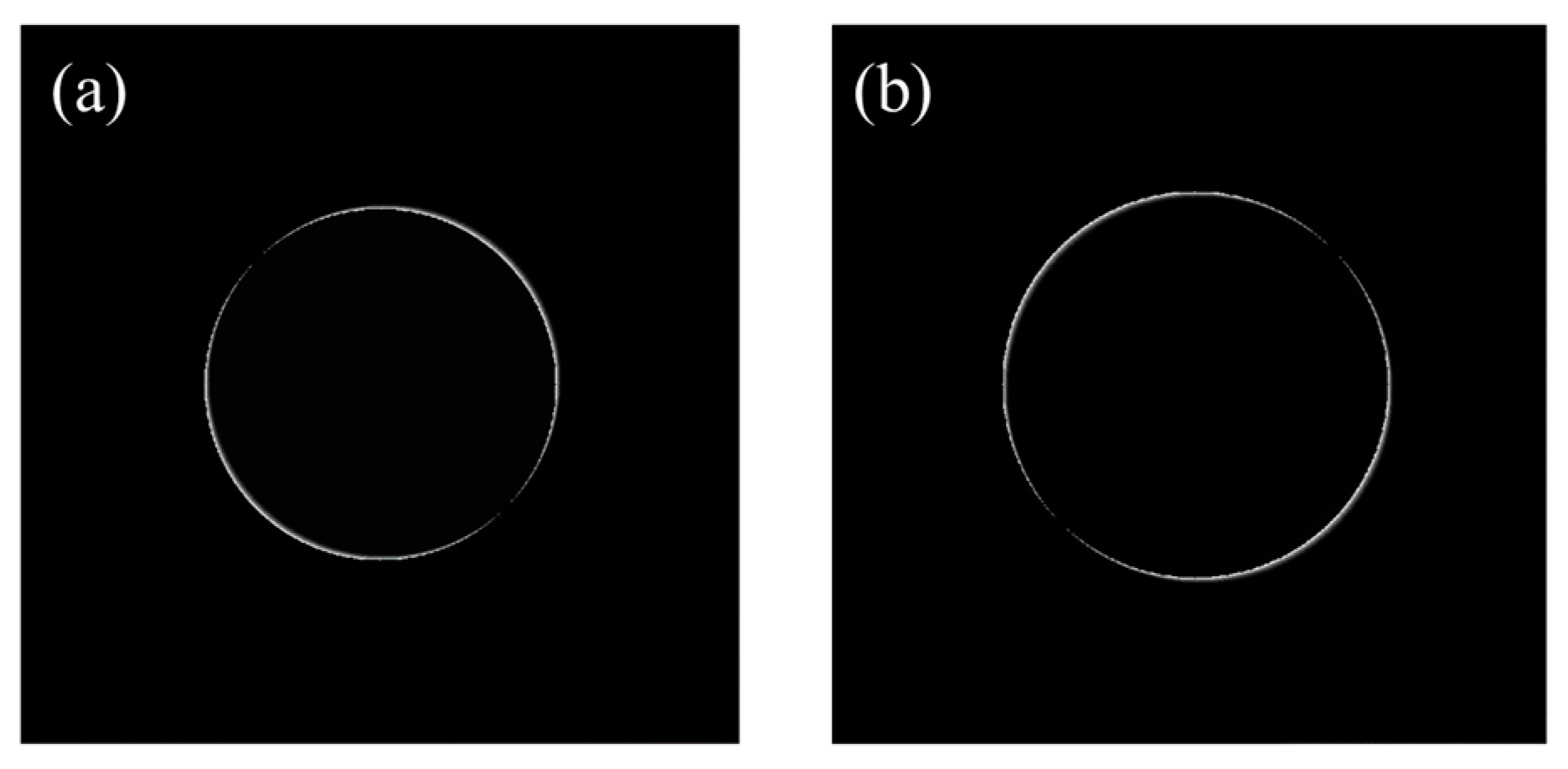

2. Image Processing with One AOM

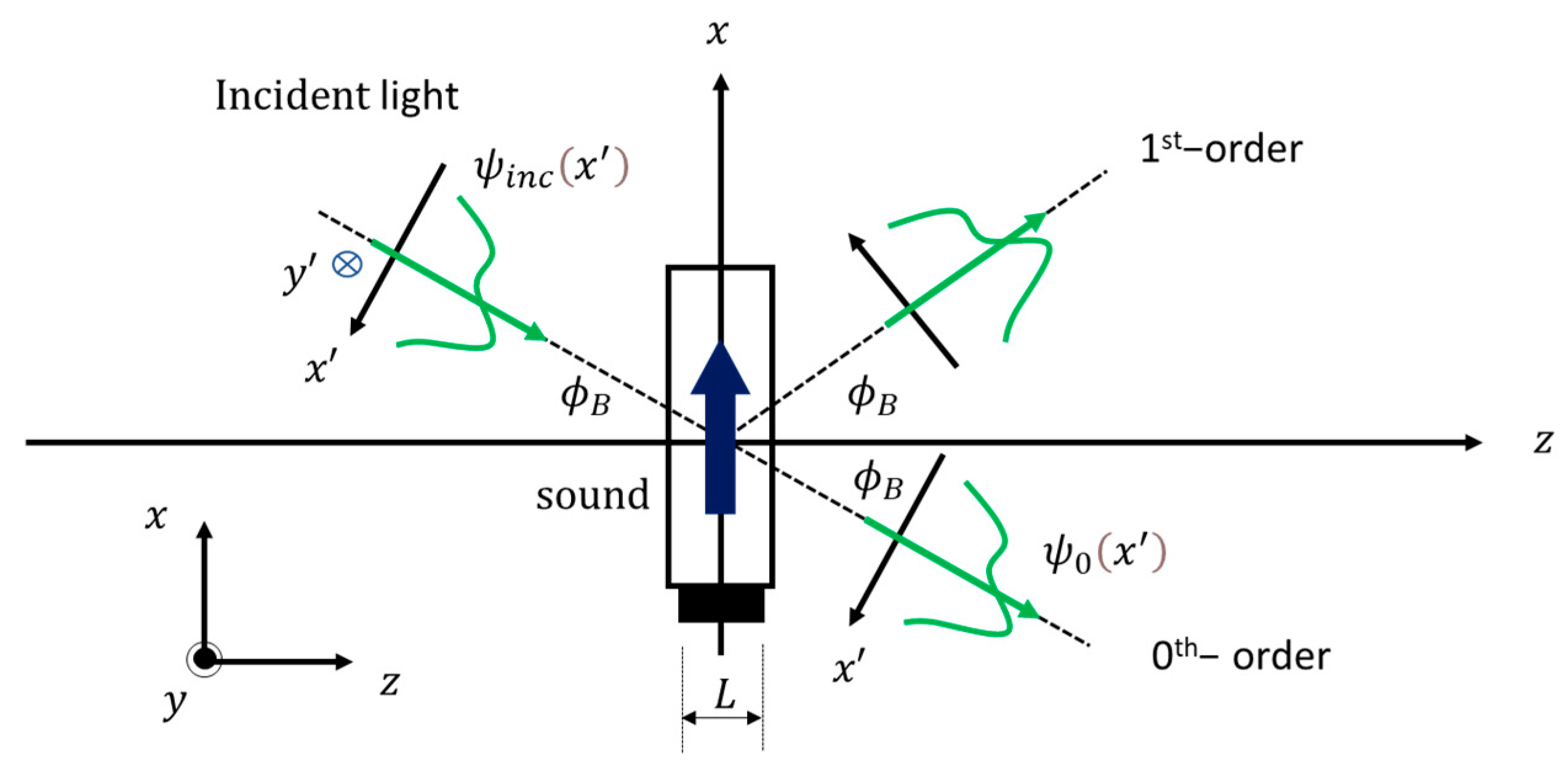

2.1. Principle of AOM and Acousto-Optic Transfer Function

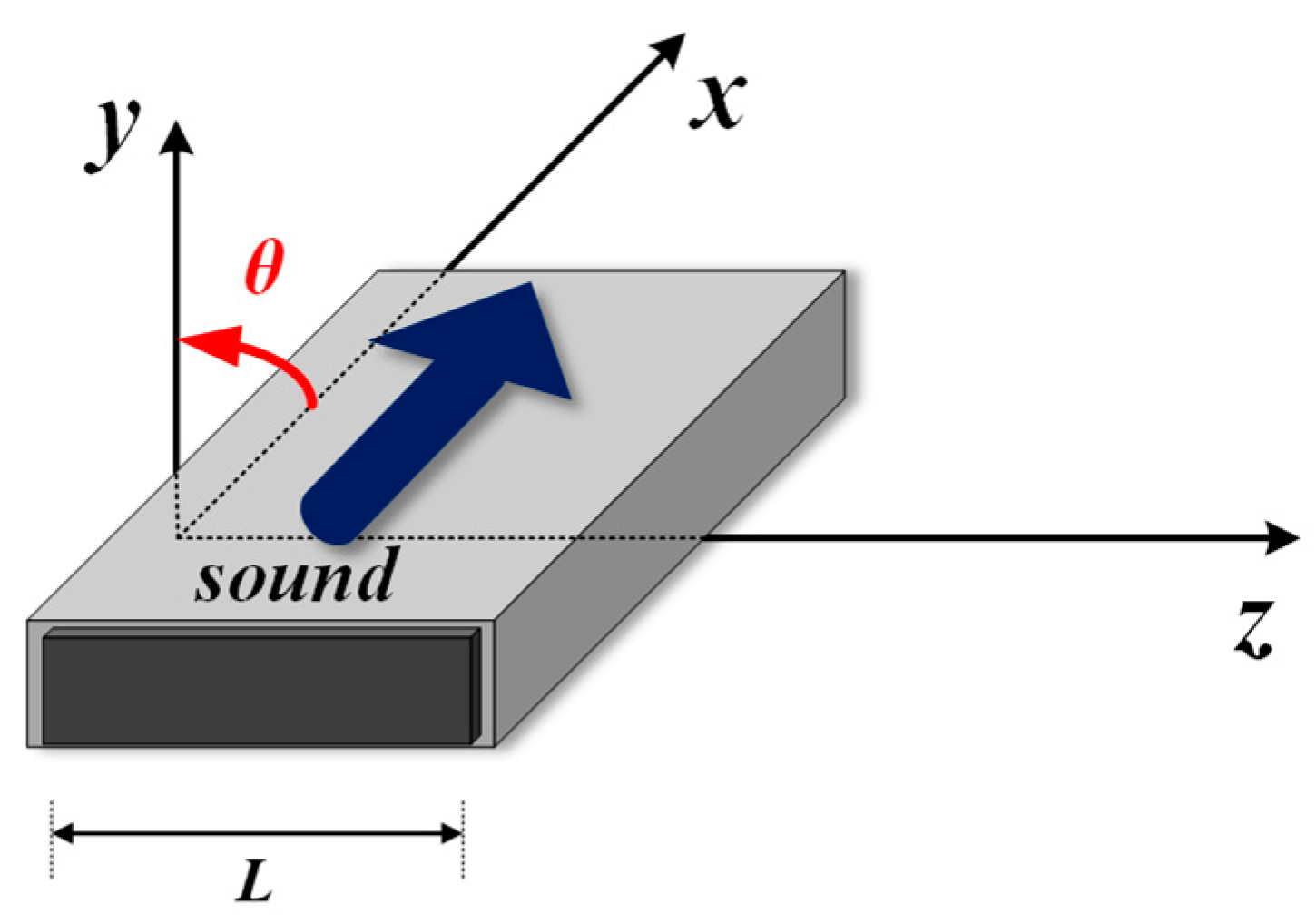

2.2. Rotation of AOM on the x′−y′ Plane

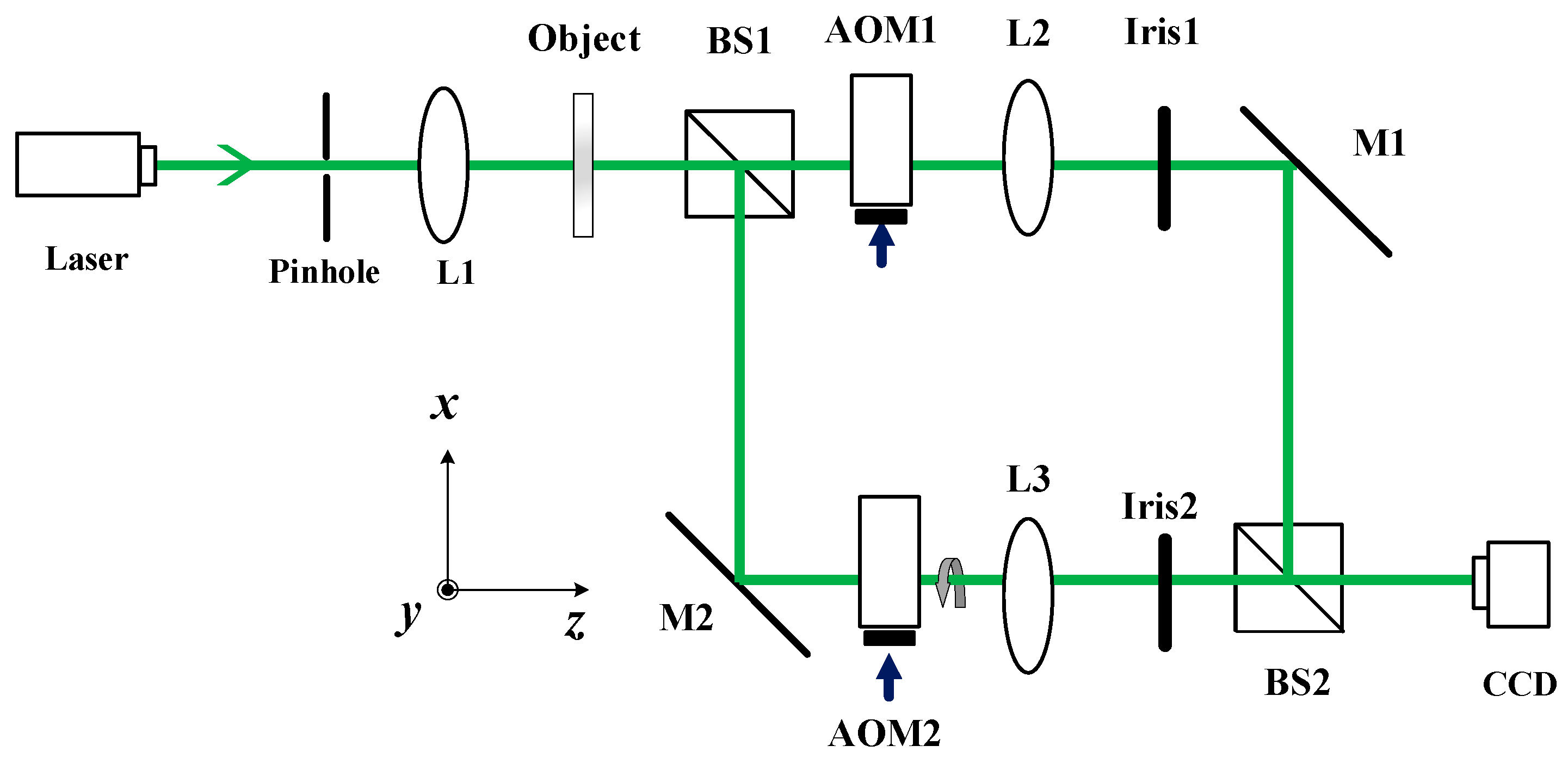

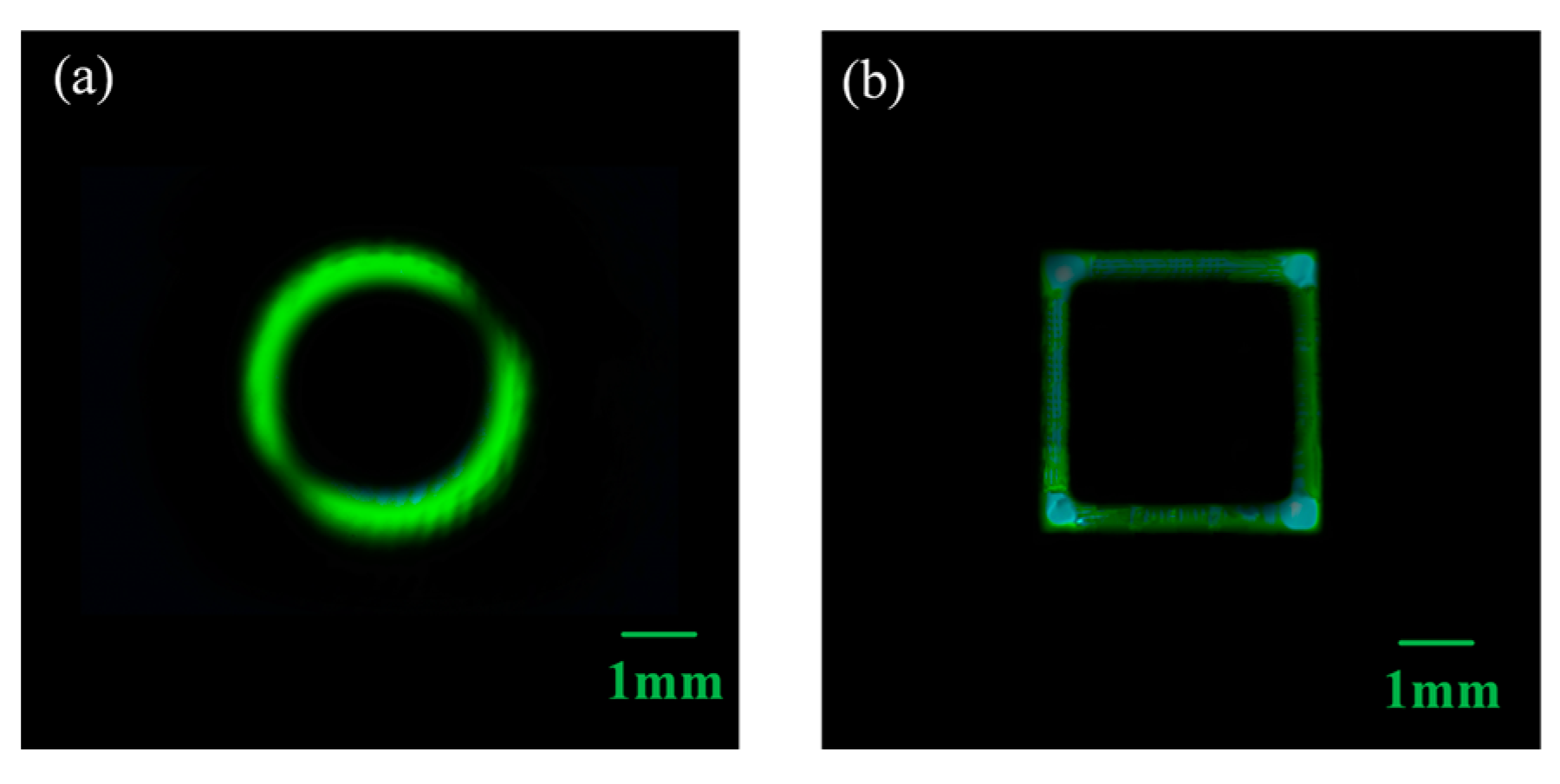

3. Mach–Zehnder Interferometer (MZI) with Dual Acousto-Optic Modulators

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, P.; Fan, H.; Zhang, Y.; Yao, Y.; Zhang, B.; Qin, W.; Poon, T.-C. Isotropic Two-Dimensional Differentiation Based on Dual Dynamic Volume Holograms. Photonics 2023, 10, 828. https://doi.org/10.3390/photonics10070828
