Design and Experimental Validation of an Optical Autofocusing System with Improved Accuracy

Abstract

1. Introduction

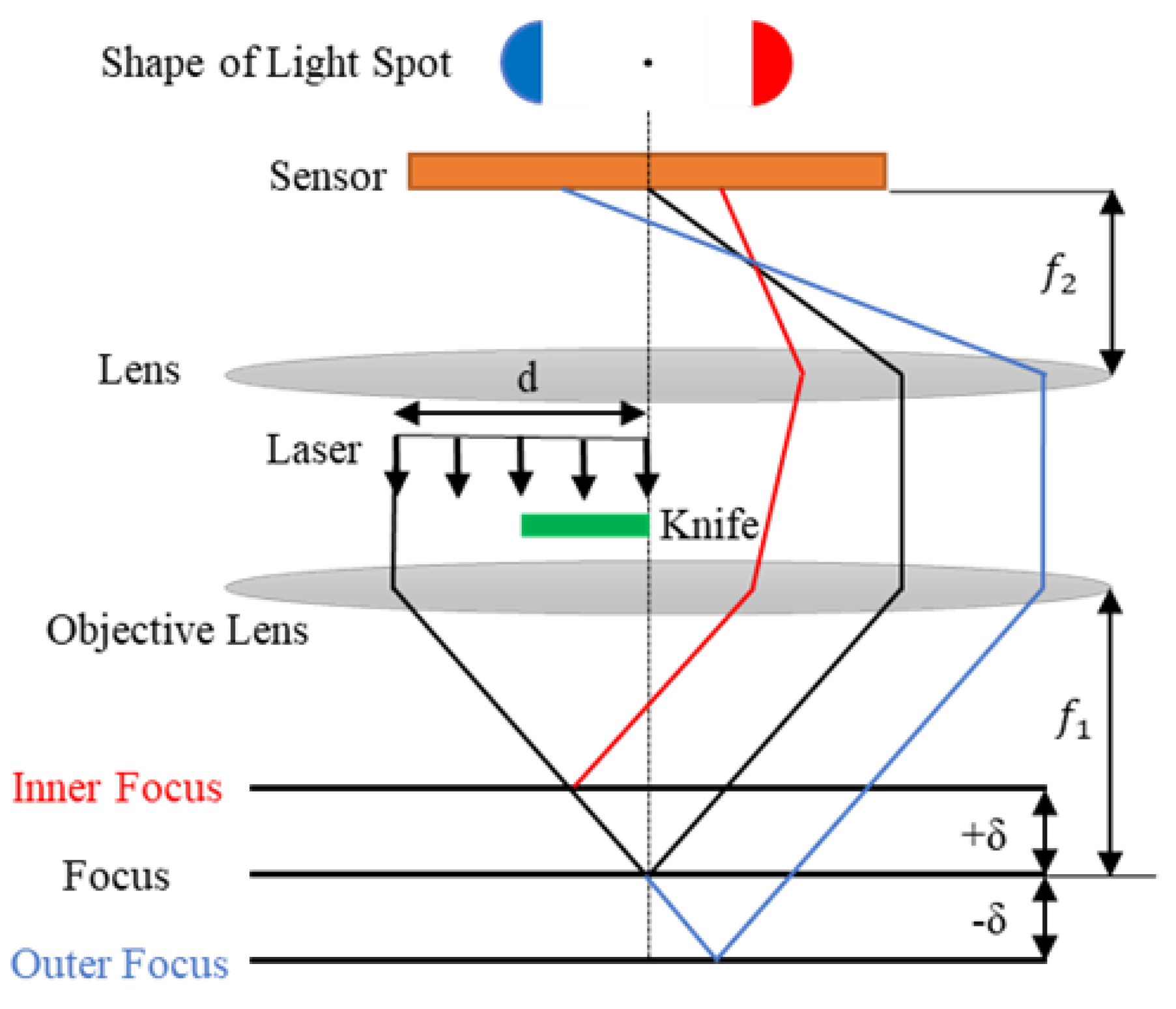

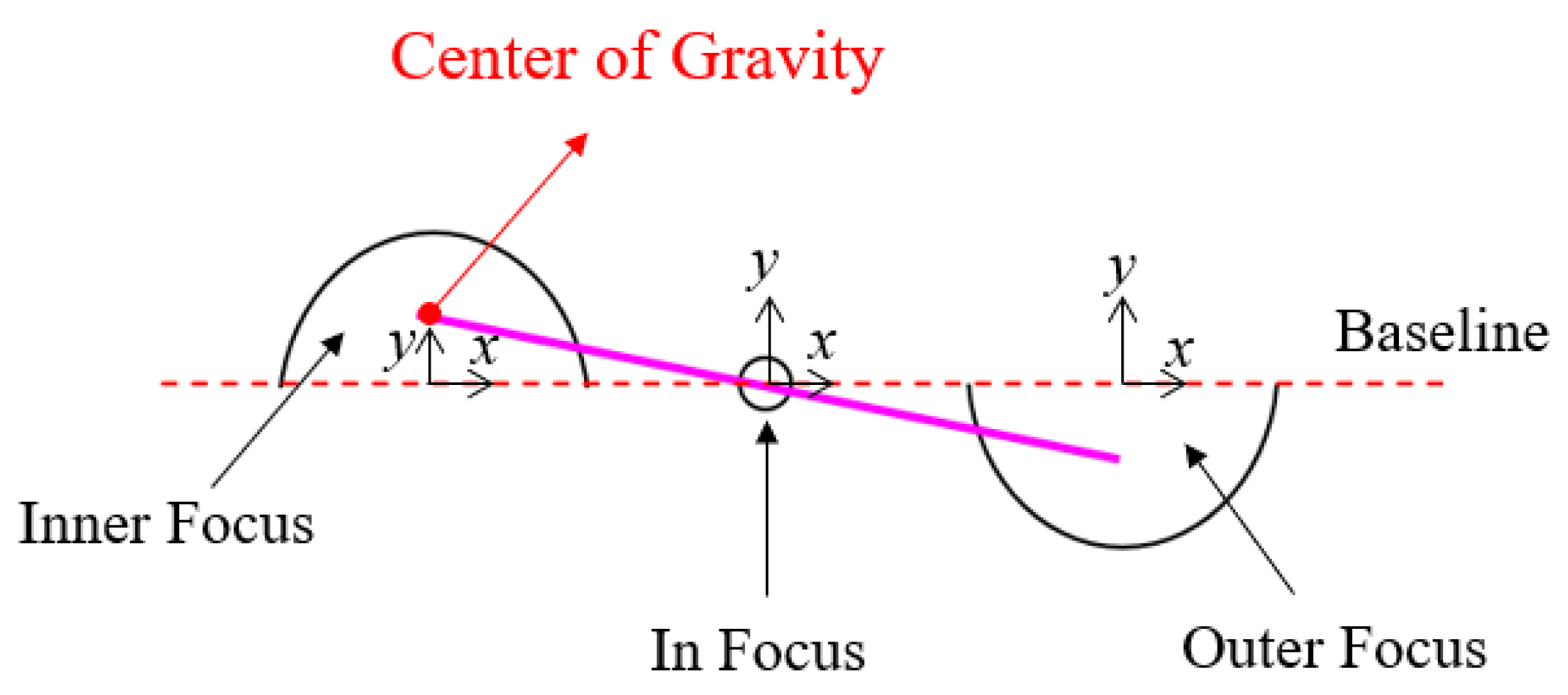

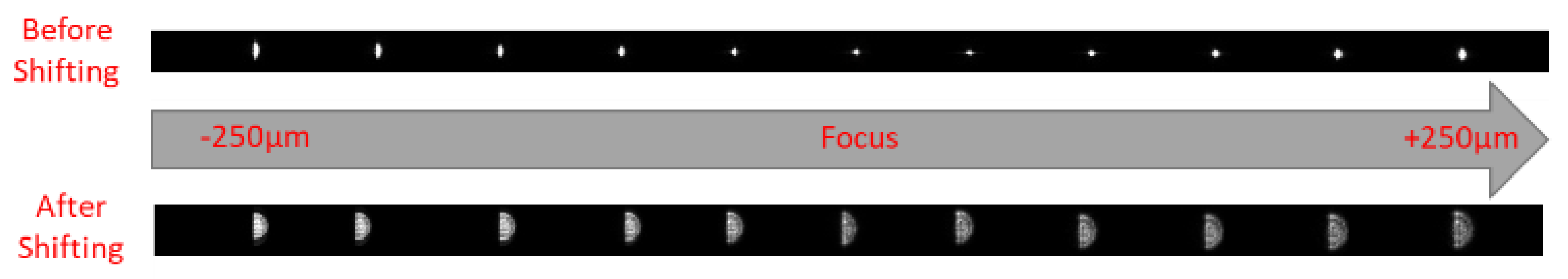

2. Traditional Optics-Based Autofocusing System with Centroid Method

2.1. Principle of Traditional Autofocusing System with Centroid Method

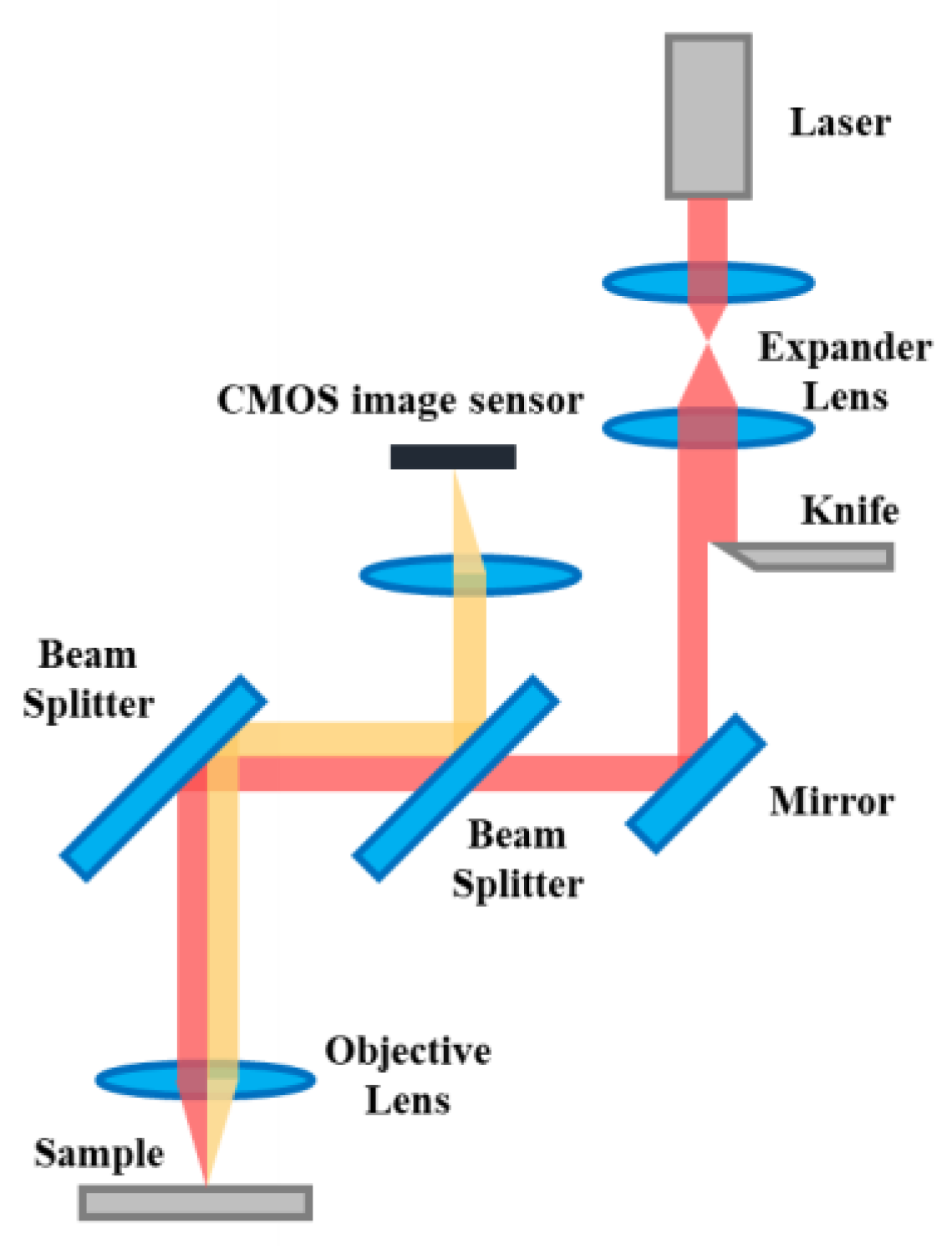

2.2. Structure of Traditional Autofocusing Method with Centroid Method

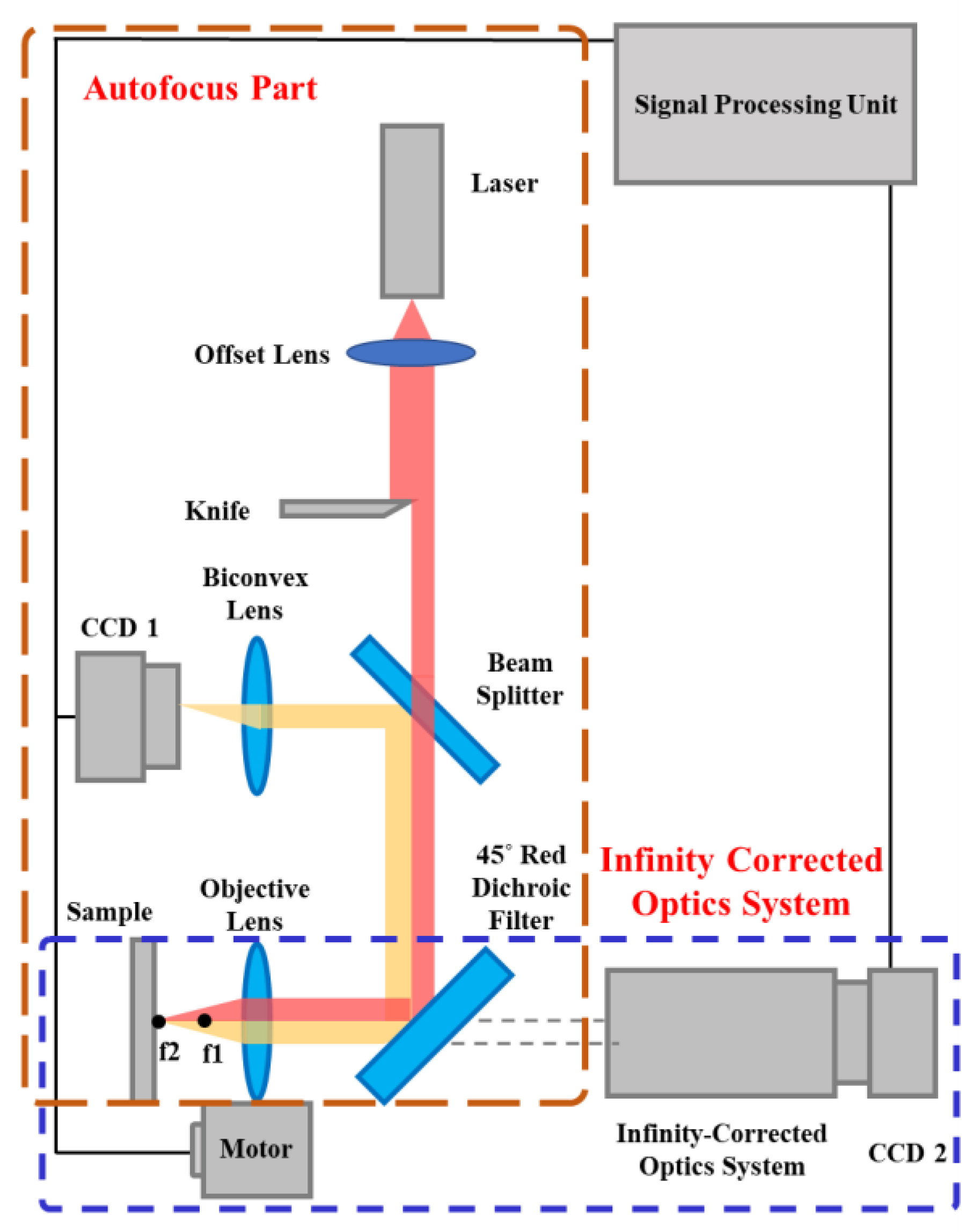

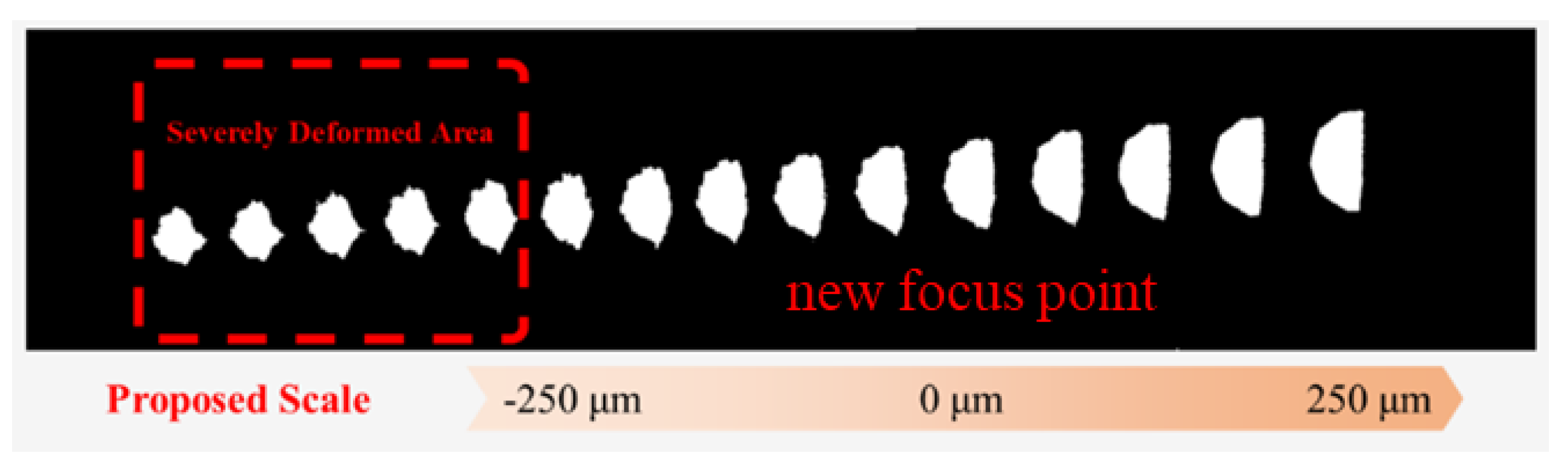

3. Proposed Autofocusing Structure and Prototype Model

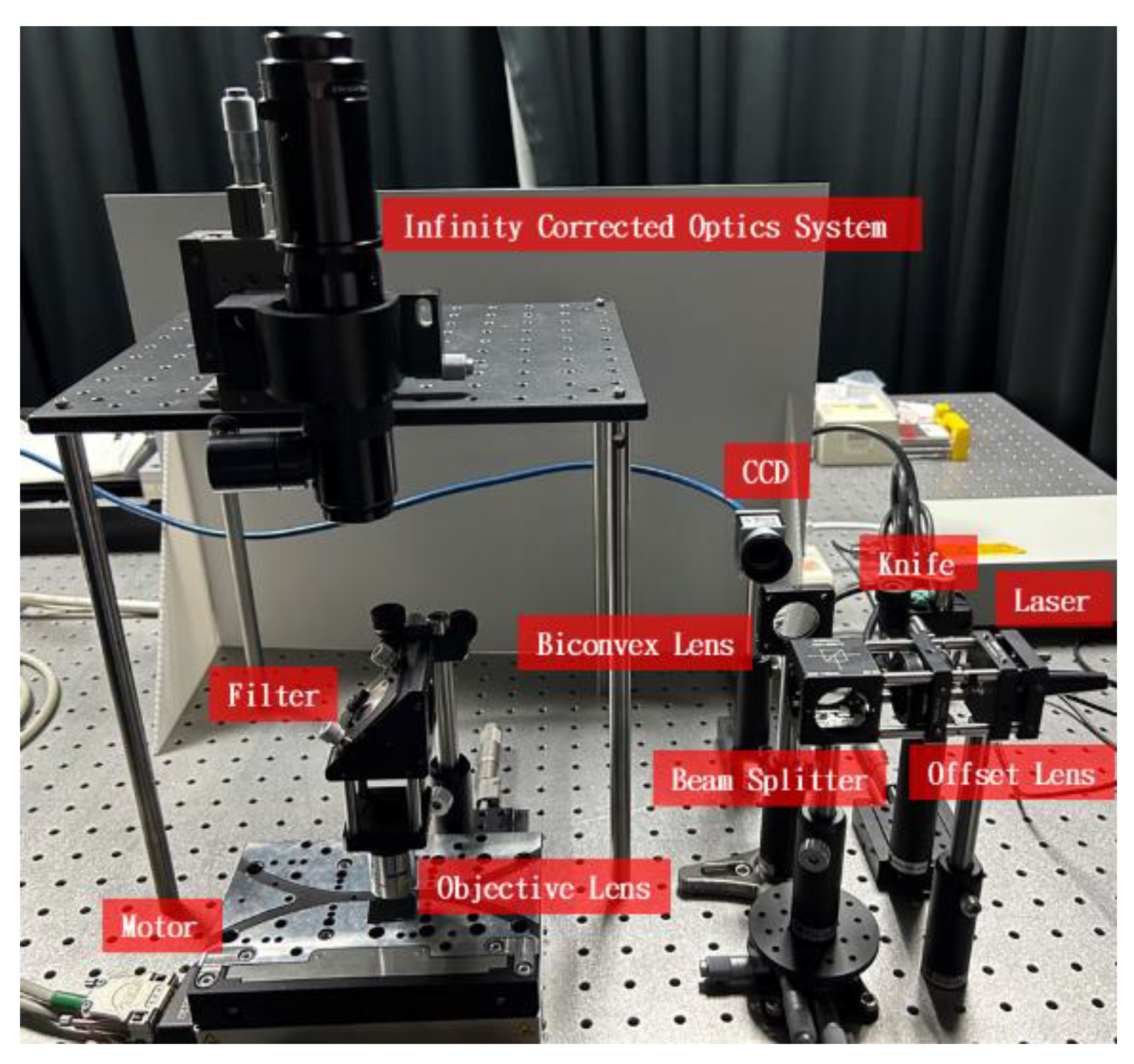

3.1. Structure of Proposed Autofocusing System

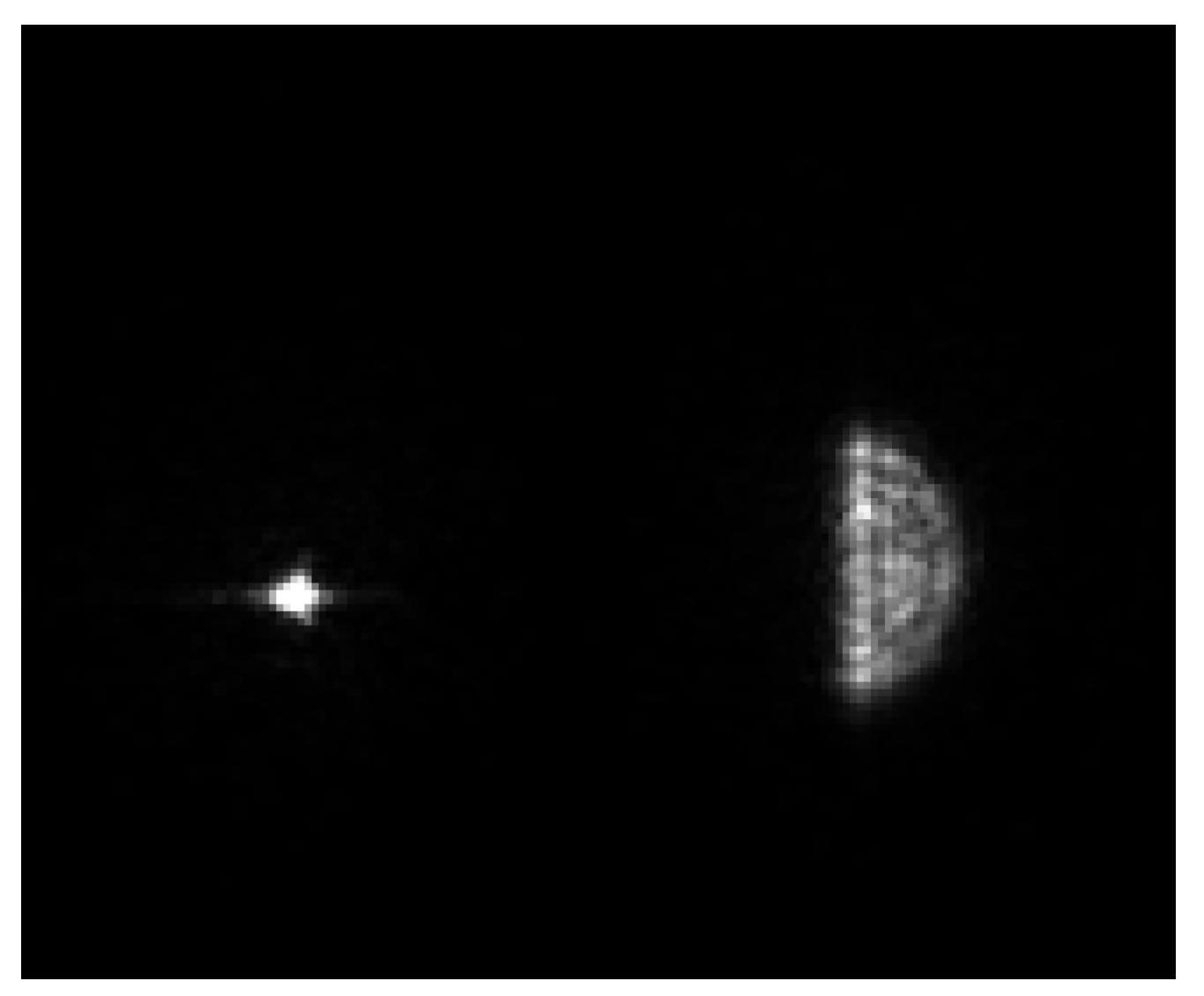

3.2. Experimental Prototype and Procedure of Proposed Autofocusing System

4. Experimental Results of Proposed System

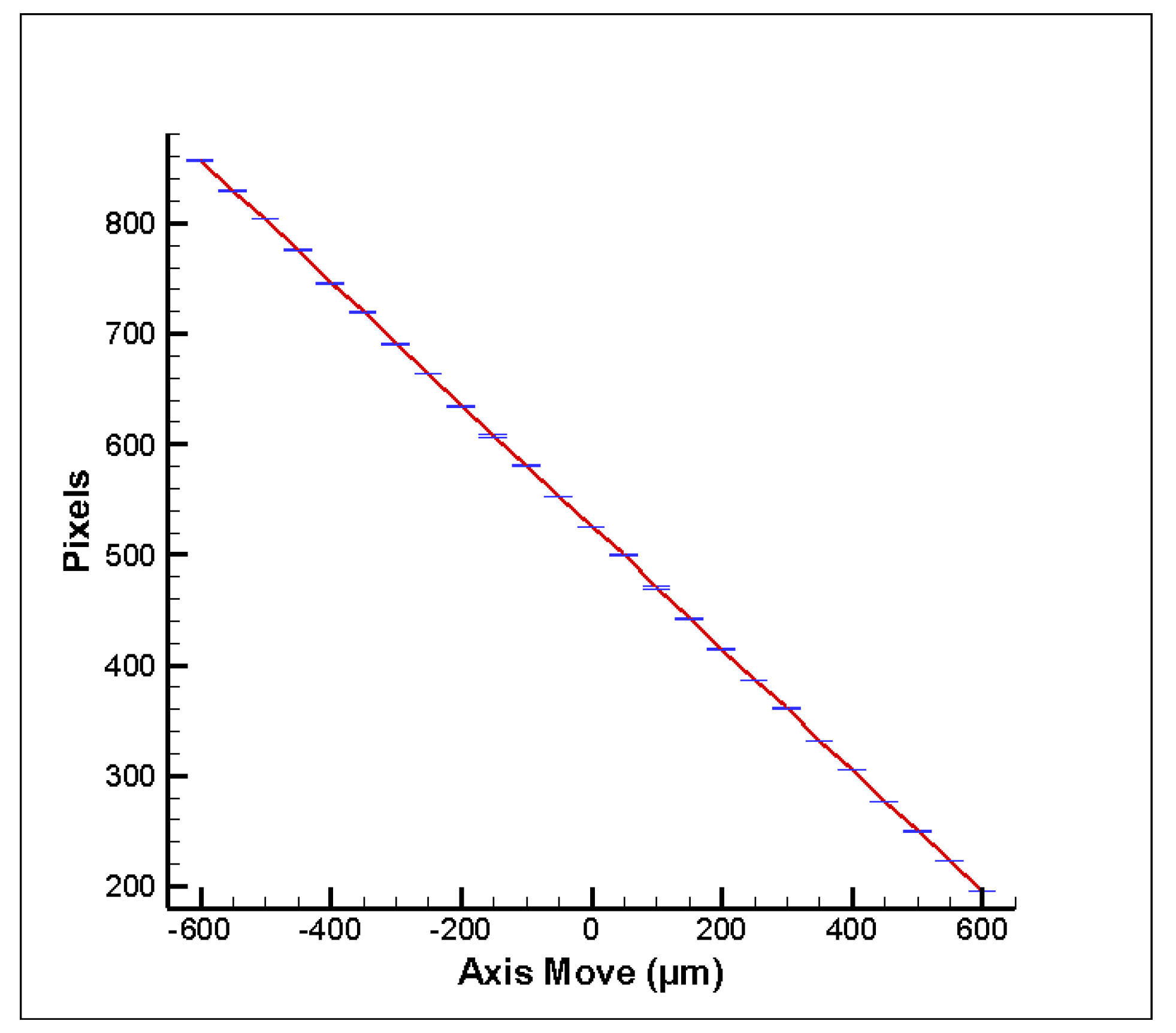

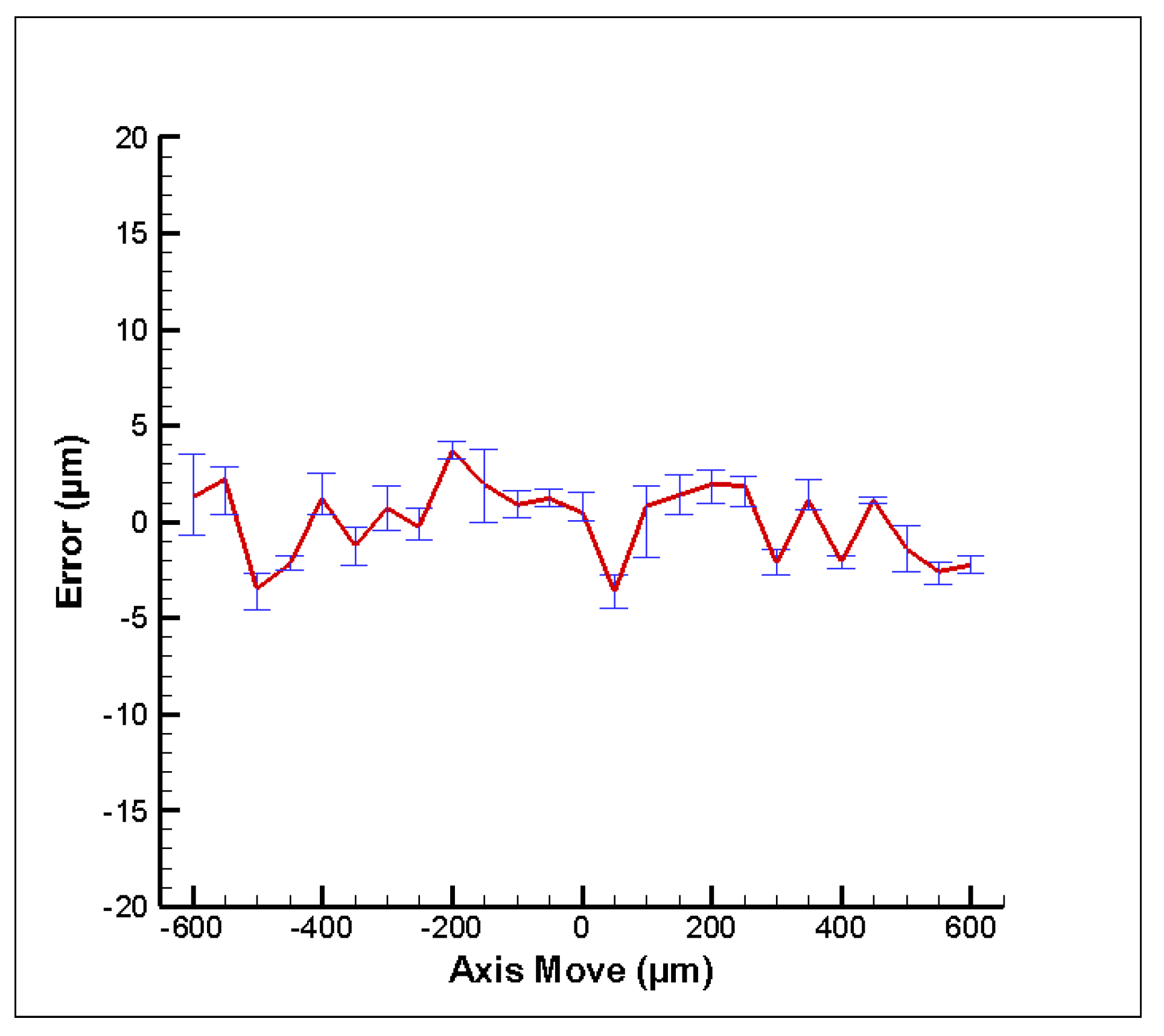

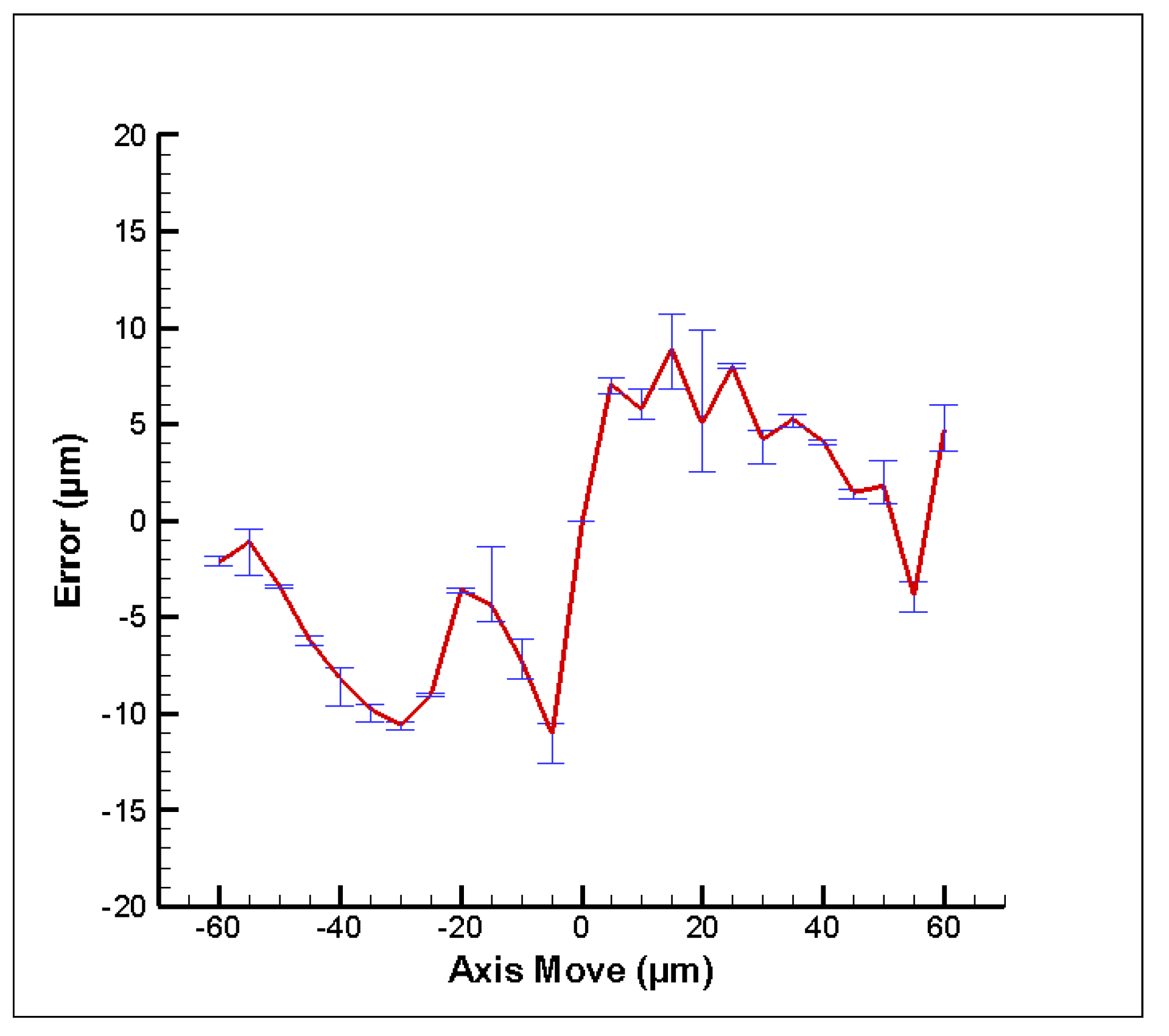

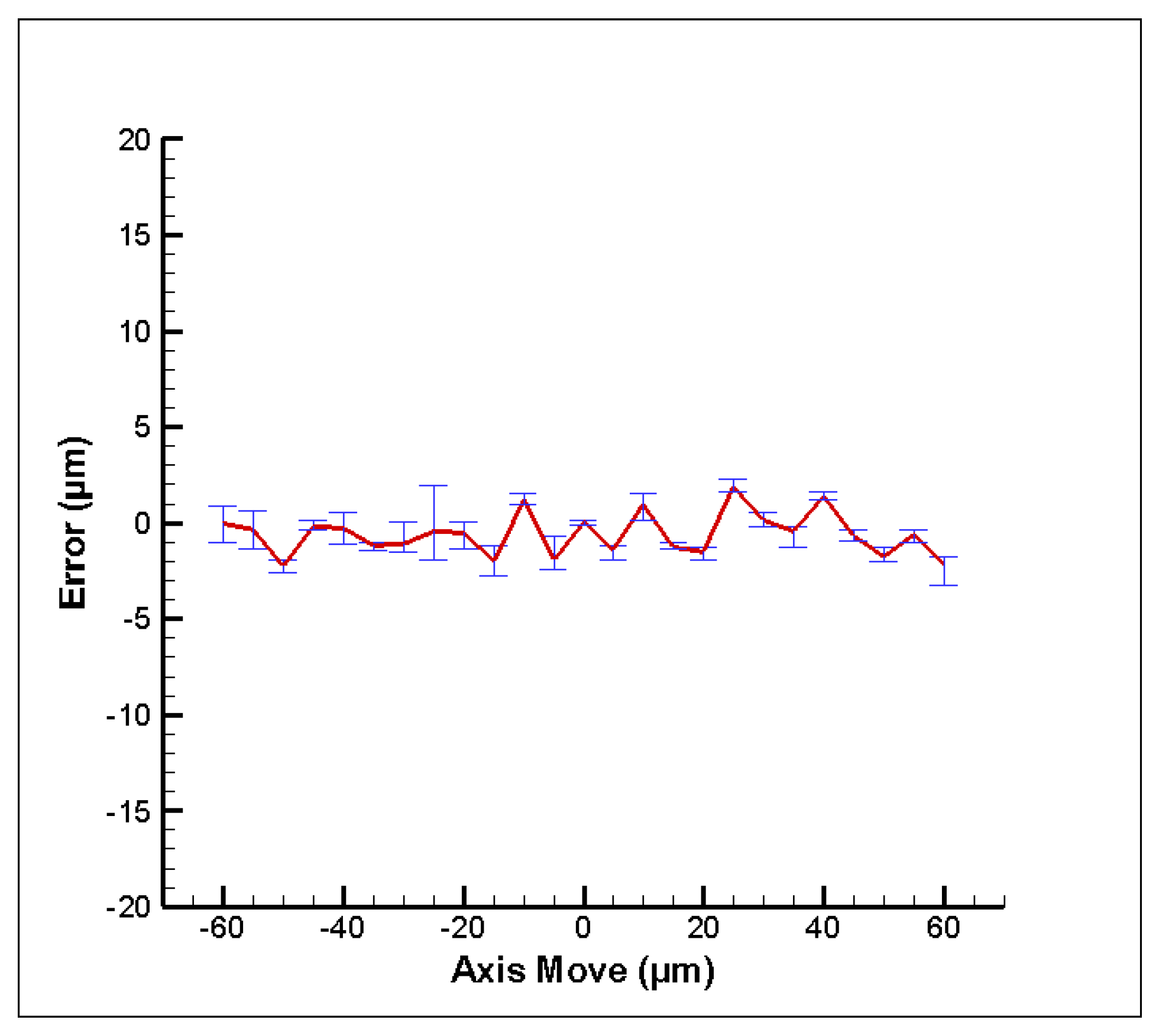

4.1. Experimental Results

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hung, J.-H.; Tu, H.-D.; Hsu, W.-H.; Liu, C.-S. Design and Experimental Validation of an Optical Autofocusing System with Improved Accuracy. Photonics 2023, 10, 1329. https://doi.org/10.3390/photonics10121329
