High-Flux Fast Photon-Counting 3D Imaging Based on Empirical Depth Error Correction

Abstract

1. Introduction

2. Depth Error Correction Method

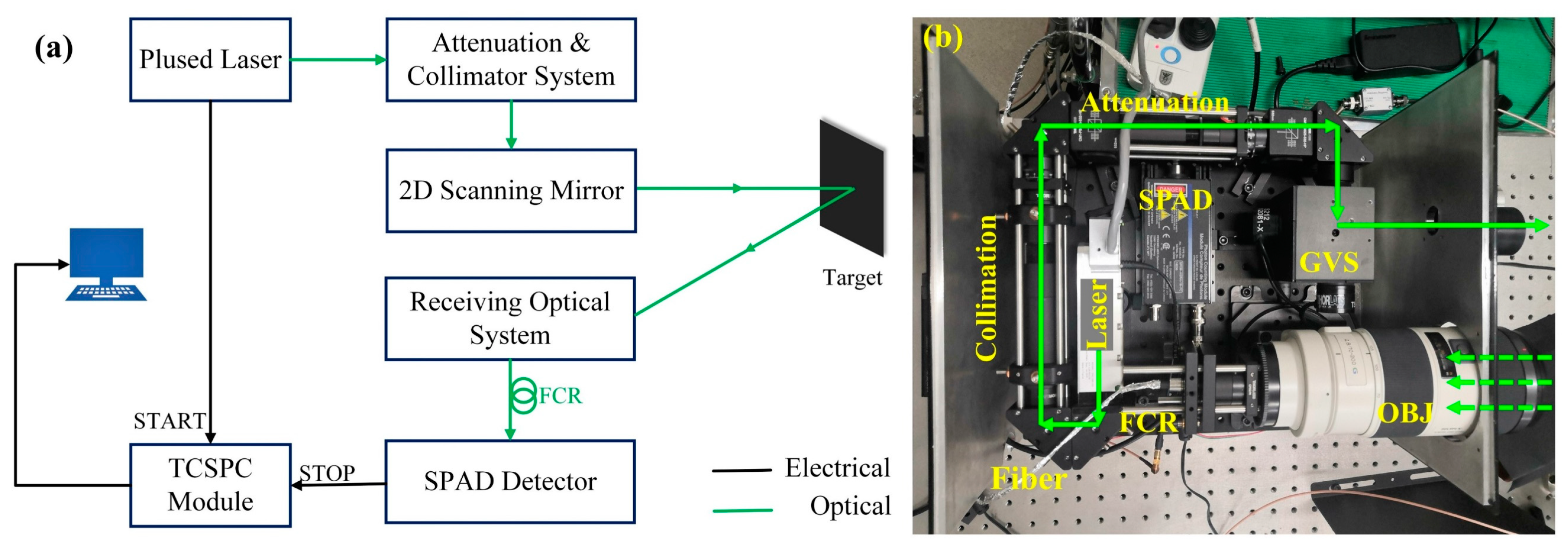

2.1. Experimental Setup

2.2. Probabilistic Detection Model and Derivation of Echo Photon Flux

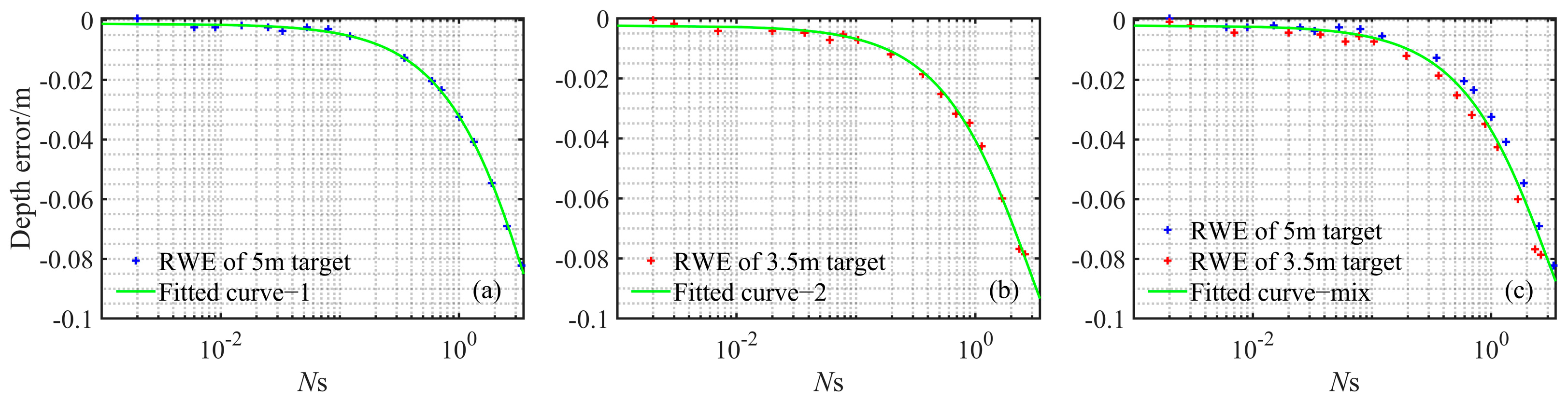

2.3. Depth Error Function Correction Model

3. Experimental Results and Analysis

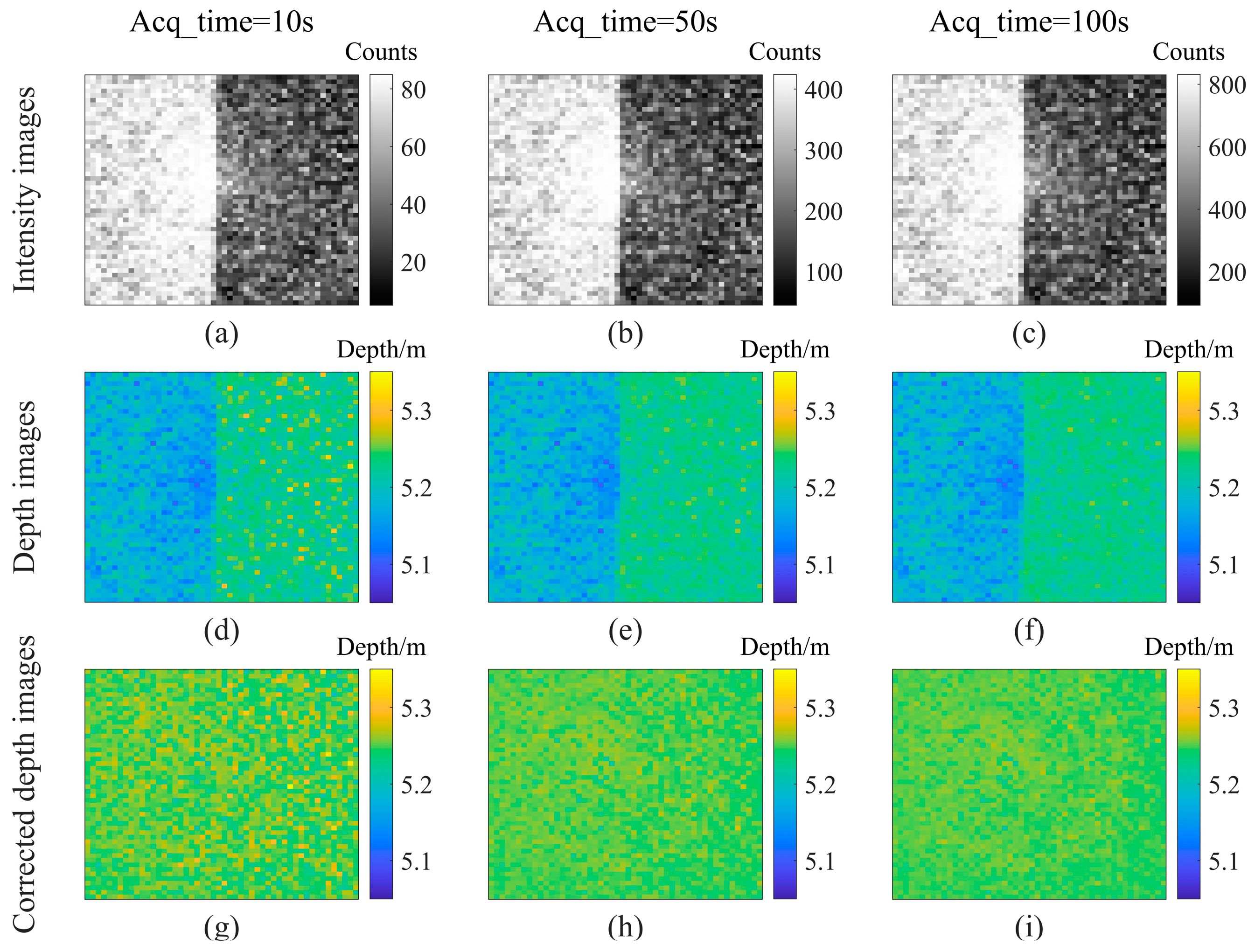

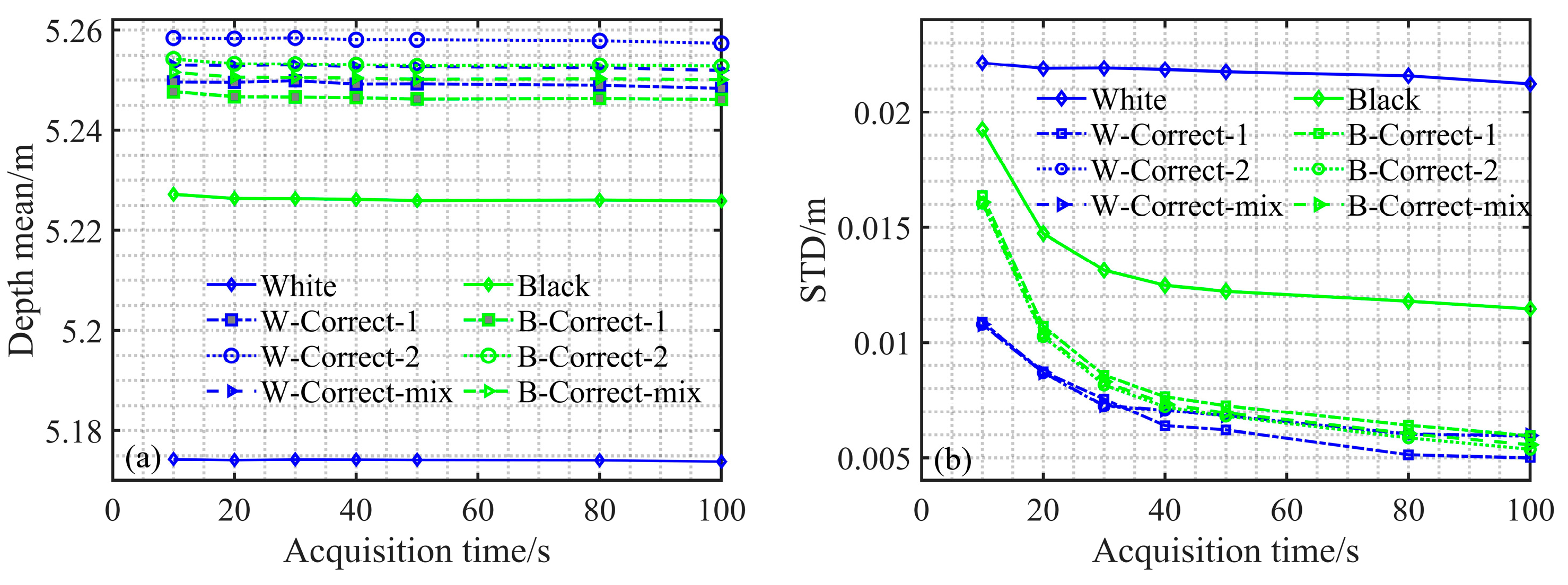

3.1. Depth Imaging Experiment for a White-Black Plate Target

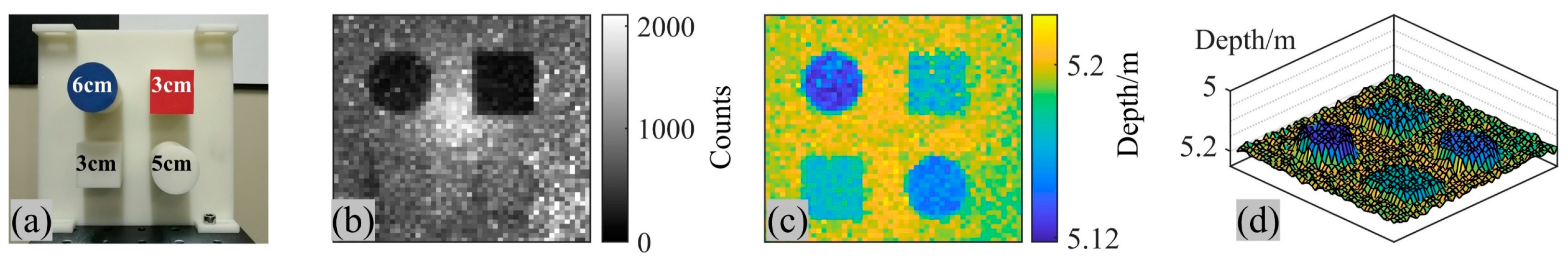

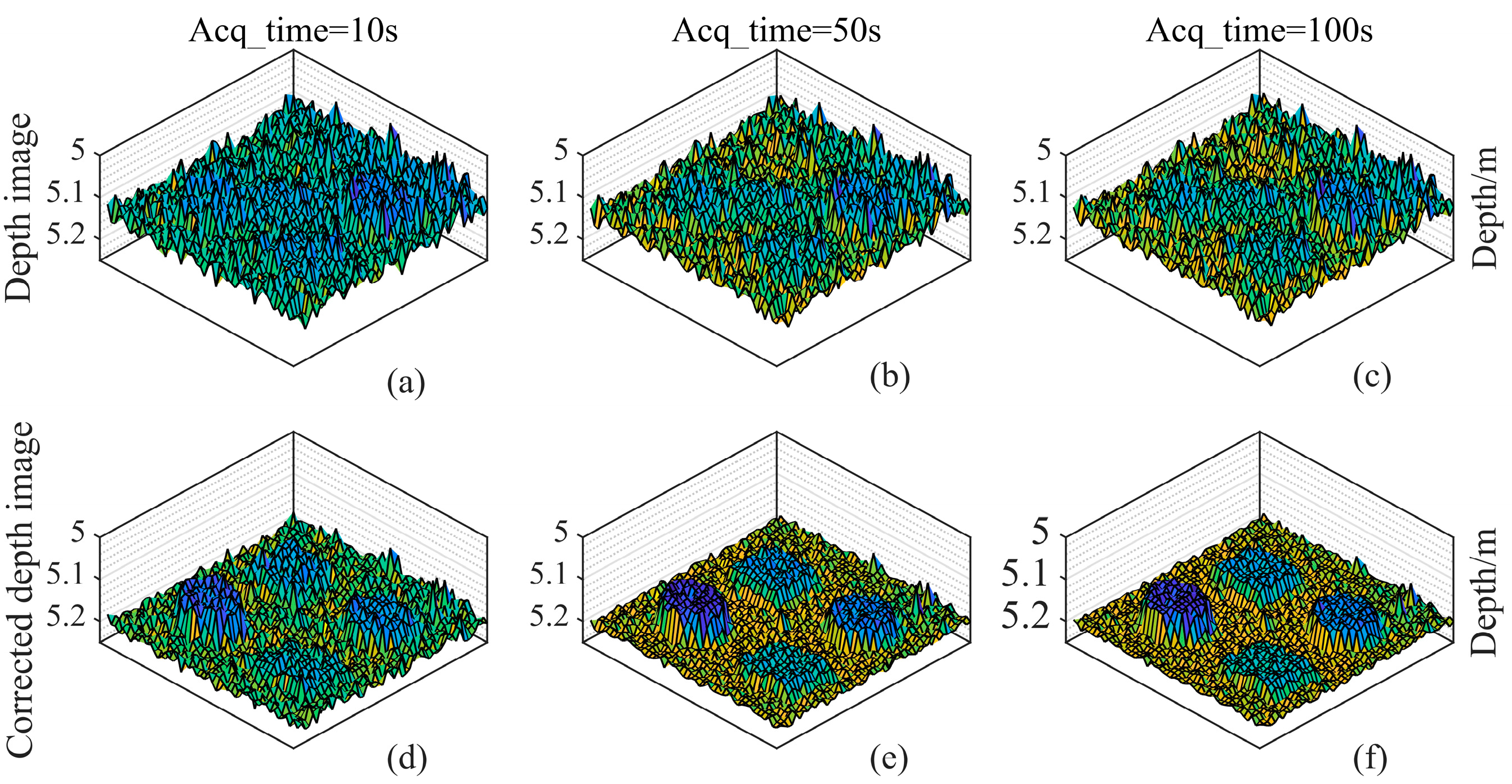

3.2. Depth Imaging Experiment for a Complex Geometry Target

3.3. Depth Imaging Experiment for Targets at a Longer Distance of 10 m

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Flux condition | Area | Depth mean | Time (depth mean) | ΔDepth (DB−DW) | STD | Time (STD) |
|---|---|---|---|---|---|---|
| Low flux | Black | 5.2446 m | 4000 s | 2.5 mm | 7.2 mm | 4000 s |
| Low flux | White | 5.2421 m | 4000 s | 2.5 mm | 5.4 mm | 1200 s |
| High flux | Black | 5.2259 m | 100 s | 52.1 mm | 11.5 mm | 100 s |
| High flux | White | 5.1738 m | 100 s | 52.1 mm | 21.2 mm | 100 s |
| High flux corrected | Black | 5.2501 m | 100 s | −1.8 mm | 5.6 mm | 100 s |
| High flux corrected | White | 5.2519 m | 100 s | −1.8 mm | 6.0 mm | 100 s |
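The ΔDepth (DB−DW) column in the table above can be re-derived from the reported depth means. The following is a minimal sketch of that arithmetic only, not the authors' processing code; the dictionary layout and the helper name `delta_depth_mm` are hypothetical and introduced purely for illustration.

```python
# Depth means in metres, transcribed from the white-black plate table above.
# The data layout and function name are illustrative assumptions.
depth_mean_m = {
    "Low flux": {"Black": 5.2446, "White": 5.2421},
    "High flux": {"Black": 5.2259, "White": 5.1738},
    "High flux corrected": {"Black": 5.2501, "White": 5.2519},
}


def delta_depth_mm(black_m: float, white_m: float) -> float:
    """Return DB - DW in millimetres, rounded to 0.1 mm."""
    return round((black_m - white_m) * 1000.0, 1)


# One ΔDepth value per flux condition (black-area mean minus white-area mean).
delta_mm = {
    condition: delta_depth_mm(areas["Black"], areas["White"])
    for condition, areas in depth_mean_m.items()
}

for condition, value in delta_mm.items():
    print(f"{condition}: {value:+.1f} mm")
```

The recomputed differences agree with the tabulated 2.5 mm, 52.1 mm, and −1.8 mm, so the ΔDepth column is consistent with the depth-mean column.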

| Flux condition | Depth mean ‘LT’ (m) | Depth mean ‘RT’ (m) | Depth mean ‘LB’ (m) | Depth mean ‘RB’ (m) | Time (s) | STD ‘LT’ (mm) | STD ‘RT’ (mm) | STD ‘LB’ (mm) | STD ‘RB’ (mm) | Time (s) |
|---|---|---|---|---|---|---|---|---|---|---|
| Low flux | 5.1368 | 5.1672 | 5.1686 | 5.1500 | 4000 | 15.8 | 11.1 | 6.1 | 11.3 | 1200 |
| High flux | 5.1022 | 5.1412 | 5.1053 | 5.0784 | 100 | 18.2 | 12.4 | 14.2 | 17.9 | 100 |
| High flux corrected | 5.1356 | 5.1652 | 5.1750 | 5.1542 | 100 | 12.4 | 7.3 | 5.2 | 9.0 | 100 |
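One way to read the table above is to compare each high-flux depth mean with the low-flux value for the same area. The sketch below does that subtraction. Treating the low-flux measurement as the reference is an assumption made here for illustration, and the variable and function names are hypothetical rather than taken from the paper.

```python
# Depth means in metres for the four areas, transcribed from the table above.
regions = ("LT", "RT", "LB", "RB")
low_flux_m = (5.1368, 5.1672, 5.1686, 5.1500)        # assumed reference
high_flux_m = (5.1022, 5.1412, 5.1053, 5.0784)
high_flux_corrected_m = (5.1356, 5.1652, 5.1750, 5.1542)


def offsets_mm(measured_m, reference_m):
    """Per-area offset (measured - reference) in millimetres, to 0.1 mm."""
    return [round((m - r) * 1000.0, 1) for m, r in zip(measured_m, reference_m)]


raw_offset_mm = offsets_mm(high_flux_m, low_flux_m)
corrected_offset_mm = offsets_mm(high_flux_corrected_m, low_flux_m)

for name, raw, corrected in zip(regions, raw_offset_mm, corrected_offset_mm):
    print(f"{name}: high flux {raw:+.1f} mm, corrected {corrected:+.1f} mm")
```

Under that assumption, the uncorrected high-flux means sit between 26.0 mm and 71.6 mm short of the low-flux values, while the corrected means lie within 6.4 mm of them.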

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Zhang, T.; Kang, Y.; Li, W.; Liang, J. High-Flux Fast Photon-Counting 3D Imaging Based on Empirical Depth Error Correction. Photonics 2023, 10, 1304. https://doi.org/10.3390/photonics10121304
