Human Activity Recognition from Accelerometry, Based on a Radius of Curvature Feature

Abstract

1. Introduction

1.1. Human Activity Recognition

1.2. Sensor Position

1.3. Related Works

1.4. Based Methodology

2. Materials and Methods

2.1. Dataset

2.2. System Architecture for HAR Process

2.3. Preprocessing Data

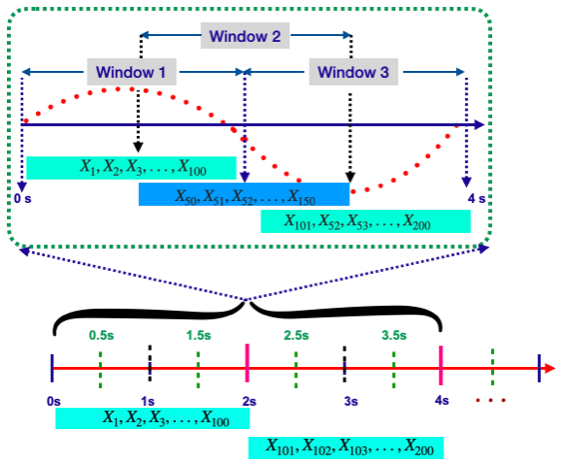

2.4. Data Segmentation

2.5. Feature Extraction

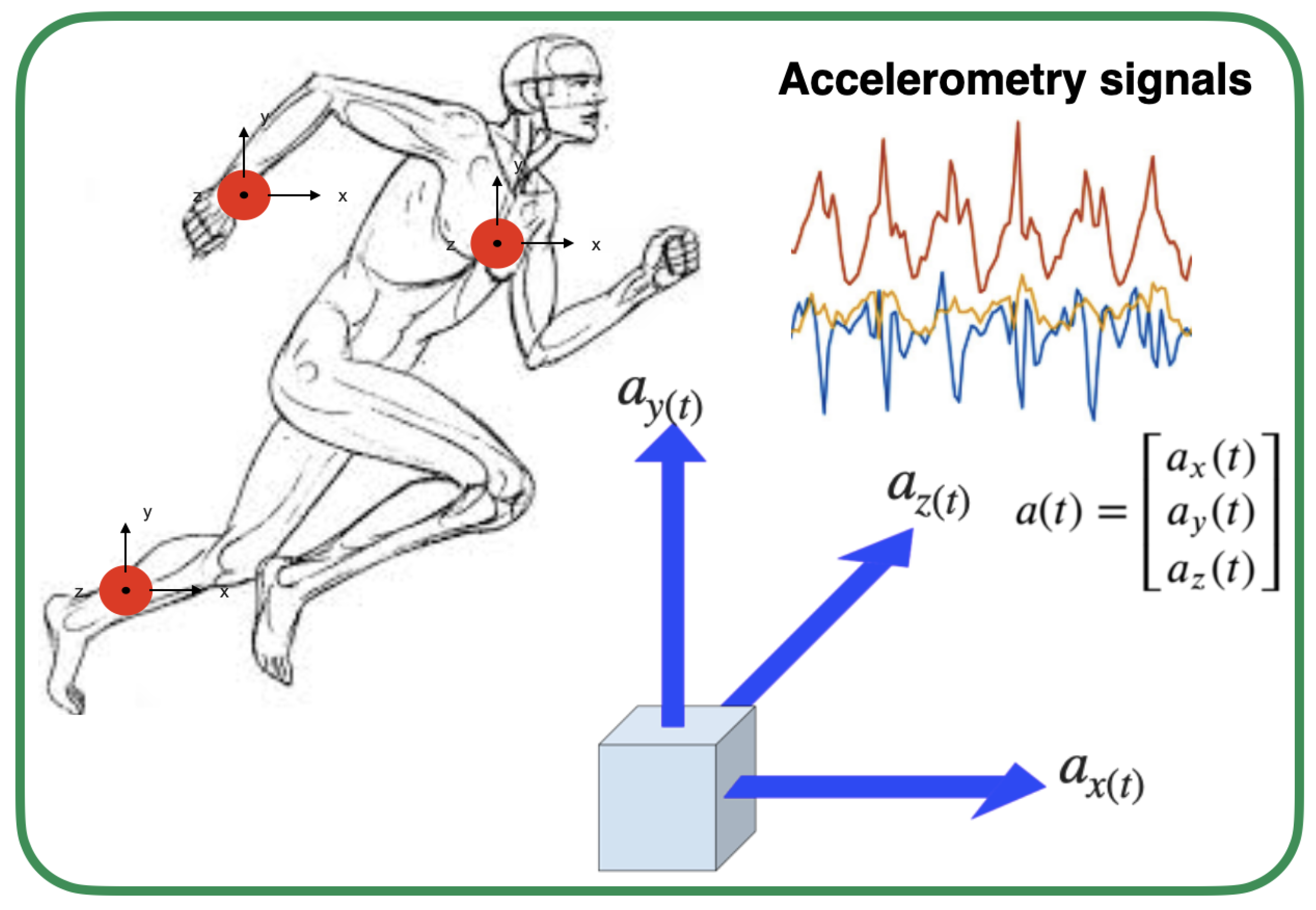

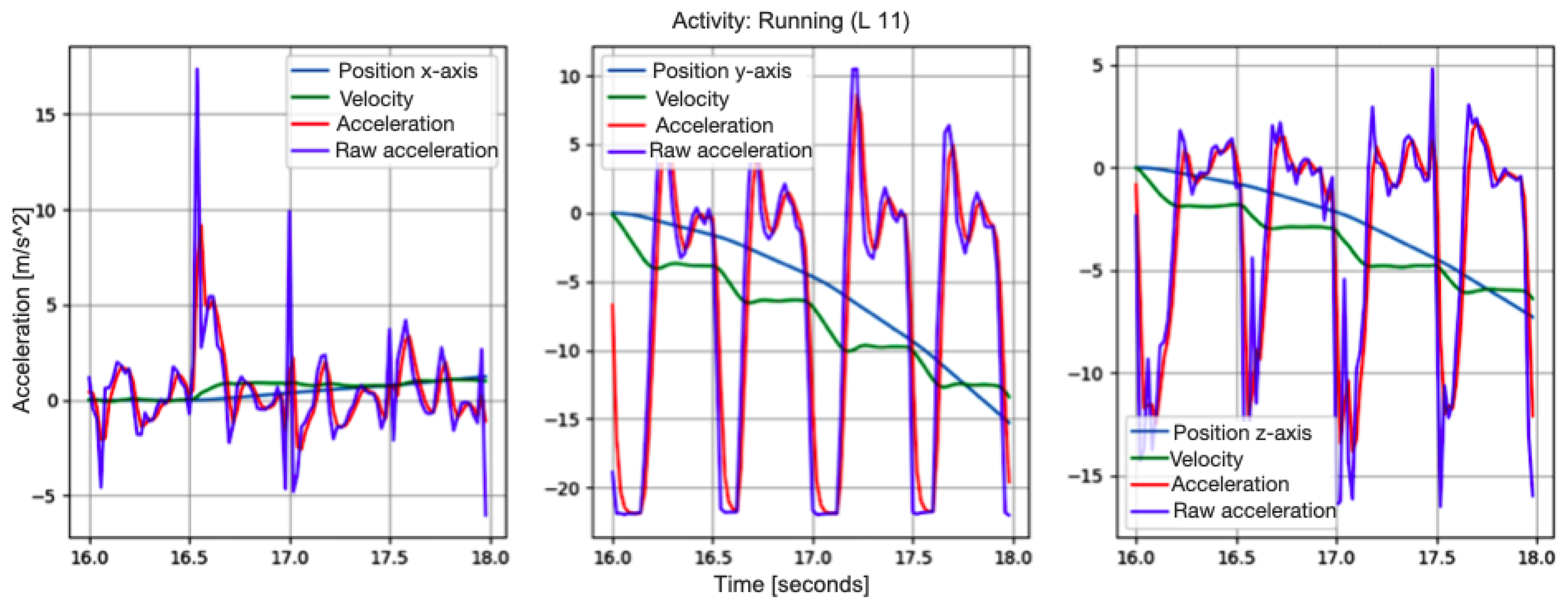

- X-axis component, in terms of the instantaneous acceleration, velocity and position:

- Y-axis component, in terms of the instantaneous acceleration, velocity and position:

- Z-axis component, in terms of the instantaneous acceleration, velocity and position:

- X-axis component:

- Y-axis component:

- Z-axis component:
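The per-axis components listed above relate acceleration, velocity and position. As a minimal sketch of that relation, the code below integrates each acceleration axis once to obtain velocity and a second time to obtain position. The trapezoidal rule, the zero initial conditions, the absence of drift correction, the 50 Hz sampling rate used in the example, and the function names are all assumptions made for illustration; they are not taken from the article.

```python
import numpy as np


def integrate_trapezoid(signal, dt):
    """Cumulative trapezoidal integral of a 1-D signal, starting from zero."""
    signal = np.asarray(signal, dtype=float)
    out = np.zeros_like(signal)
    # Area of each trapezoid between consecutive samples, accumulated over time.
    out[1:] = np.cumsum(0.5 * (signal[1:] + signal[:-1]) * dt)
    return out


def kinematics_from_acceleration(acc, fs):
    """Return (velocity, position) for an (n_samples, 3) acceleration array.

    Assumes zero initial velocity and position (hypothetical choice).
    """
    acc = np.asarray(acc, dtype=float)
    dt = 1.0 / fs
    vel = np.column_stack([integrate_trapezoid(acc[:, k], dt) for k in range(3)])
    pos = np.column_stack([integrate_trapezoid(vel[:, k], dt) for k in range(3)])
    return vel, pos


# Example: constant 2 m/s^2 on the x axis for 1 s, sampled at an assumed 50 Hz.
fs = 50.0
t = np.arange(0, 51) / fs
acc = np.zeros((t.size, 3))
acc[:, 0] = 2.0
vel, pos = kinematics_from_acceleration(acc, fs)
```

With real accelerometer data, double integration accumulates drift quickly, so some form of filtering or detrending would normally be applied before this step.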

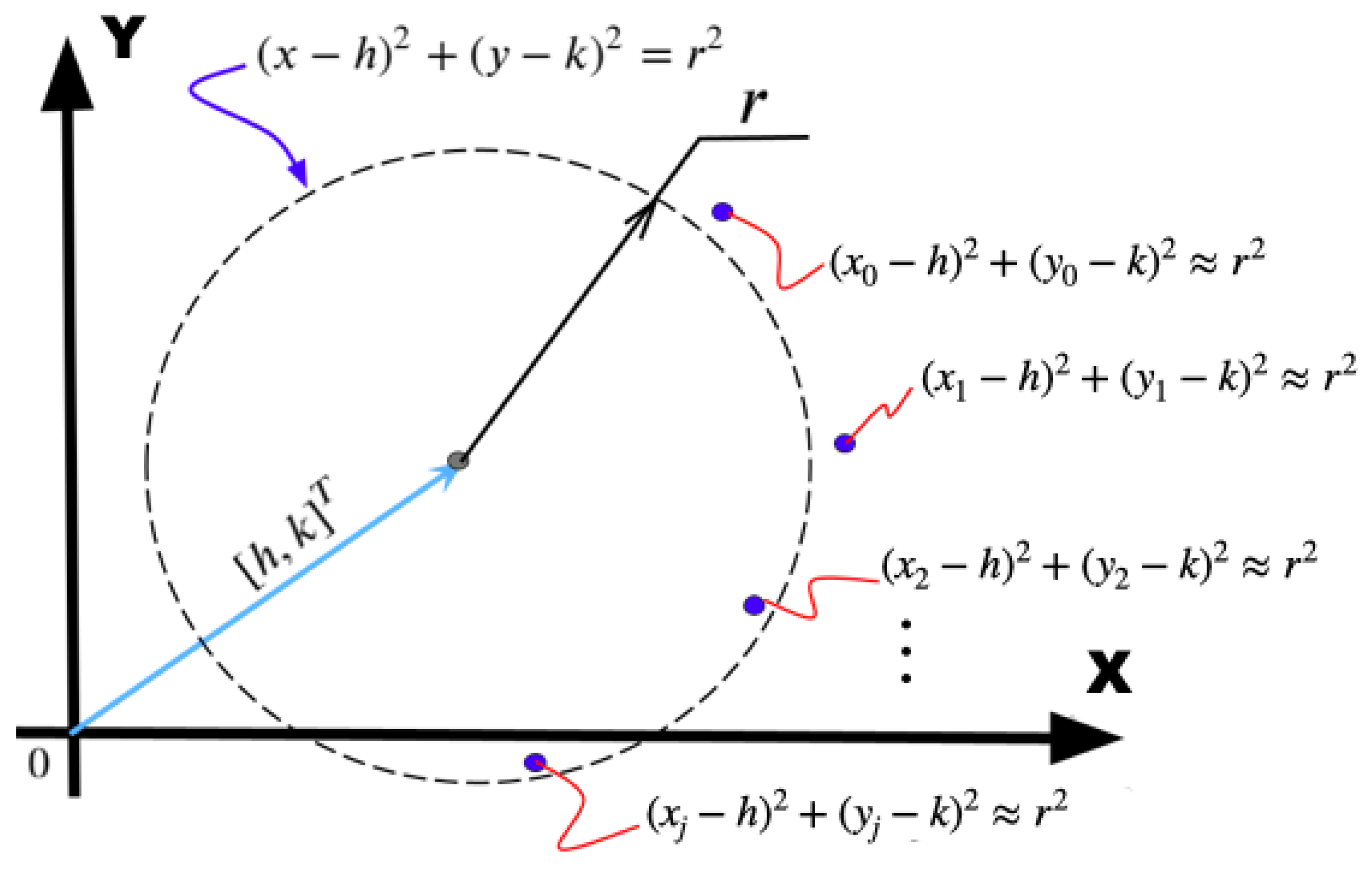

2.6. Proposed Estimating Model for Curvature Radius

2.7. Proposed Feature Vector

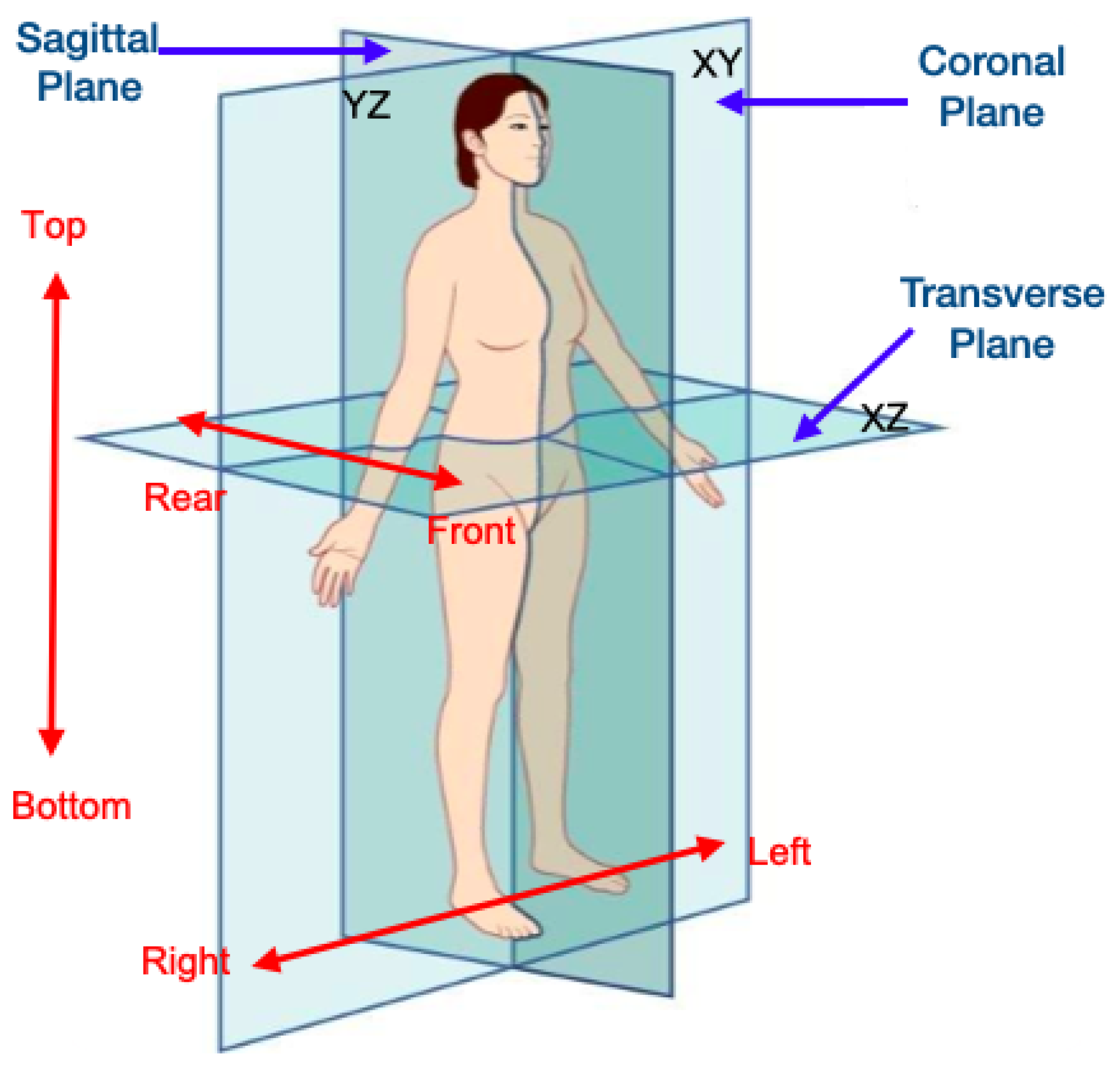

- Curvature radius in the sagittal plane, where the point corresponding to the center of the circle is obtained using a pseudo-inverse and the coordinates of the position points in the plane. Therefore, the curvature radius is obtained from the fitted circle.

- Curvature radius in the transverse plane, where the point corresponding to the center of the circle is obtained using a pseudo-inverse and the coordinates of the position points in the plane. Therefore, the curvature radius is obtained from the fitted circle.
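The circle-center estimate described above can be illustrated with a standard algebraic least-squares circle fit. The sketch below writes the circle equation as a linear system in the center coordinates and one auxiliary constant, solves it with `np.linalg.pinv`, and recovers the radius from the solution. This particular formulation, the function name `fit_circle_pinv` and the variable names are assumptions; the article's own matrix definition may differ.

```python
import numpy as np


def fit_circle_pinv(u, v):
    """Least-squares circle fit to planar points (u_i, v_i).

    The circle (u - a)^2 + (v - b)^2 = r^2 is rewritten as
    2*a*u + 2*b*v + c = u^2 + v^2, with c = r^2 - a^2 - b^2,
    which is linear in the unknowns (a, b, c).
    """
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    A = np.column_stack([2.0 * u, 2.0 * v, np.ones_like(u)])
    rhs = u**2 + v**2
    # Pseudo-inverse solution of the overdetermined system A @ [a, b, c] = rhs.
    a, b, c = np.linalg.pinv(A) @ rhs
    radius = np.sqrt(c + a**2 + b**2)
    return (float(a), float(b)), float(radius)


# Example: points on a quarter arc of a circle centered at (1, -2) with radius 3.
theta = np.linspace(0.0, np.pi / 2.0, 20)
u = 1.0 + 3.0 * np.cos(theta)
v = -2.0 + 3.0 * np.sin(theta)
center, radius = fit_circle_pinv(u, v)
```

For nearly straight trajectories the system becomes ill-conditioned and the estimated radius can be very large or unstable, so a practical implementation would likely need to guard against that case.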

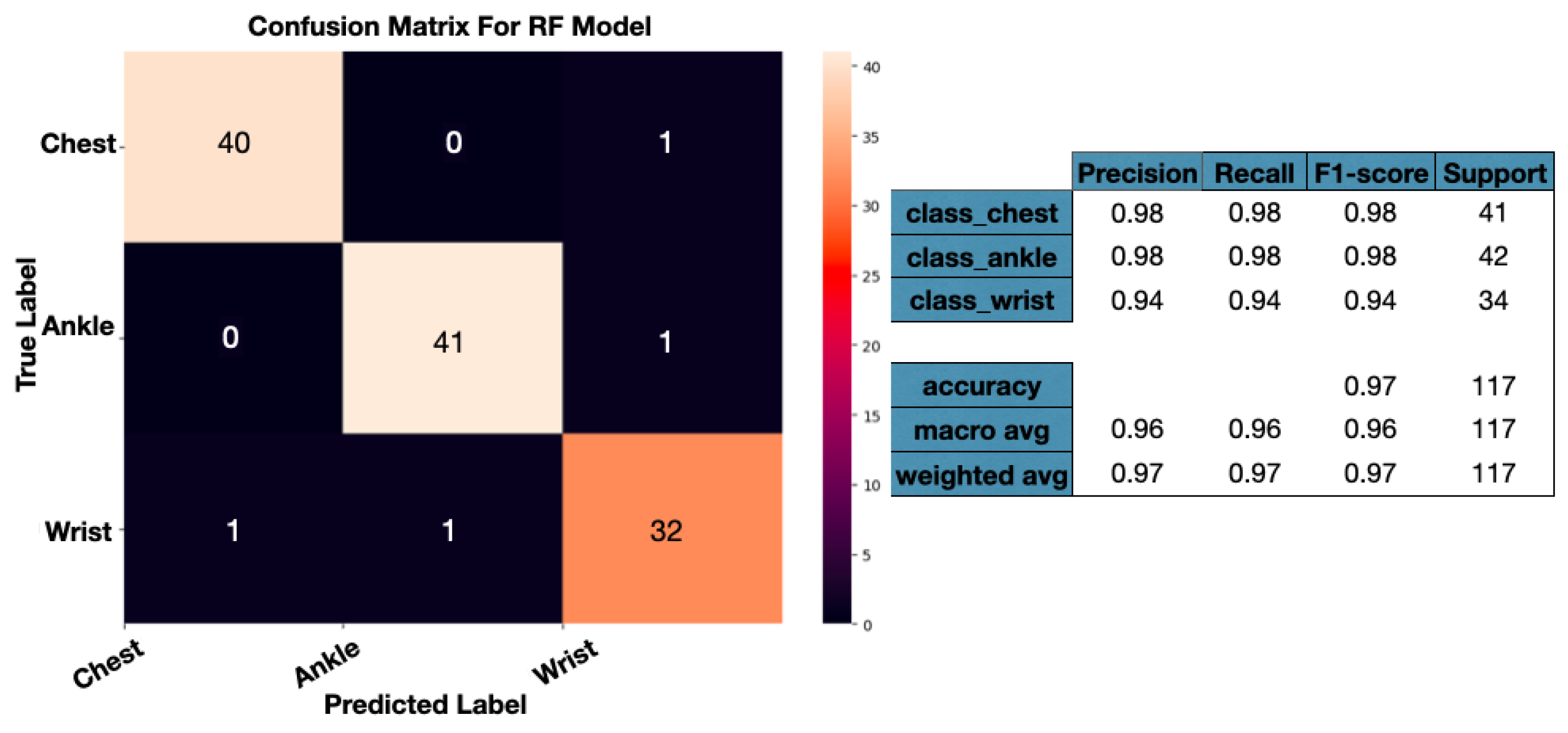

2.8. Accelerometer Position Detection

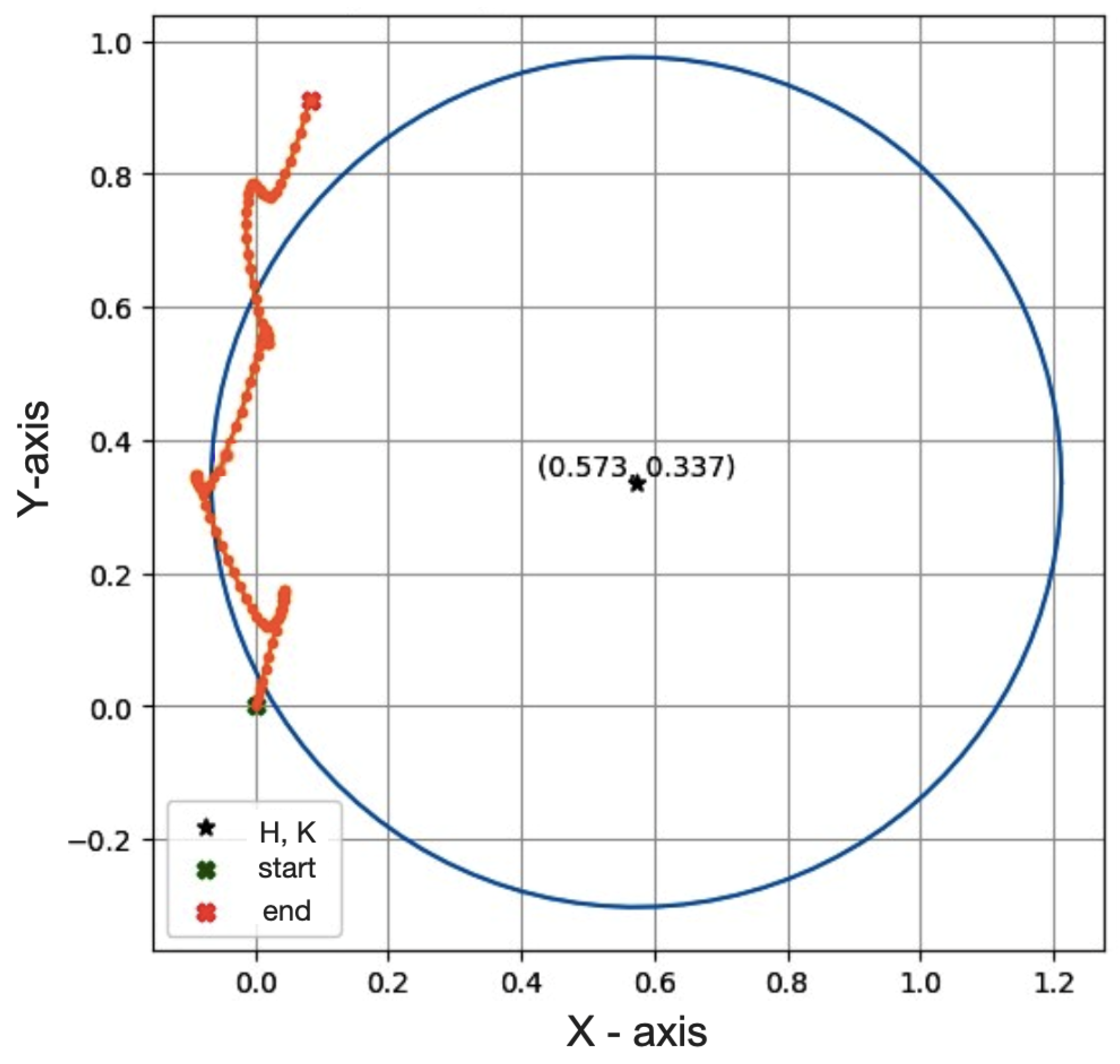

2.9. Measurement Validation

3. Results: Accelerometer Position Detection and Human Activity Recognition

3.1. Detection Position Model

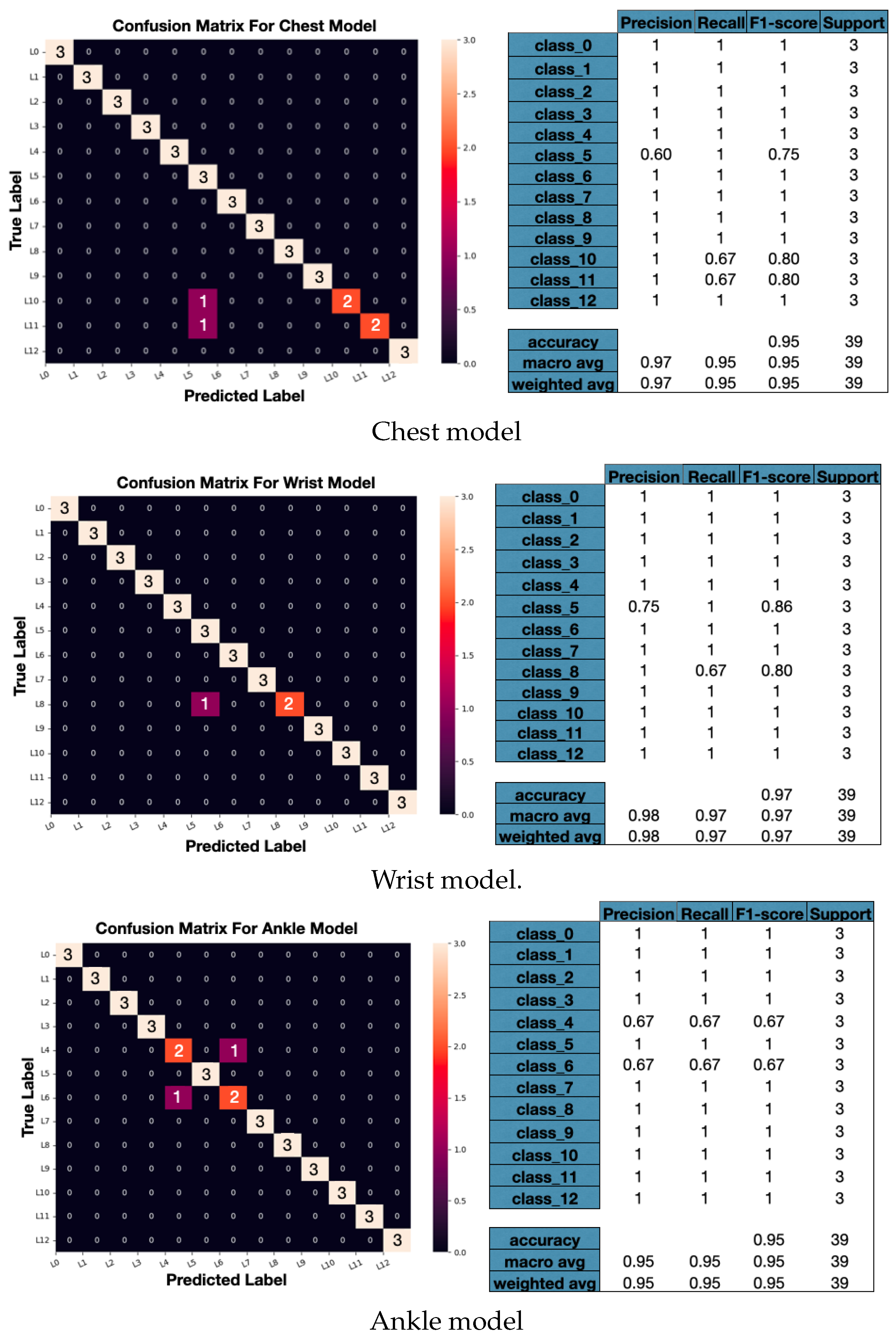

3.2. Physical Activity Classification Models

4. Conclusions

Discussion and Limitations

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Cao, Z.B. Physical activity levels and physical activity recommendations in Japan. In Physical Activity, Exercise, Sedentary Behavior and Health; Springer: Tokyo, Japan, 2015; pp. 3–15.

2. Black, N.; Johnston, D.W.; Propper, C.; Shields, M.A. The effect of school sports facilities on physical activity, health and socioeconomic status in adulthood. Soc. Sci. Med. 2019, 220, 120–128.

3. Atiq, F.; Mauser-Bunschoten, E.P.; Eikenboom, J.; van Galen, K.P.; Meijer, K.; de Meris, J.; Cnossen, M.H.; Beckers, E.A.; Laros-van Gorkom, B.A.; Nieuwenhuizen, L.; et al. Sports participation and physical activity in patients with von Willebrand disease. Haemophilia 2019, 25, 101–108.

4. Afshin, A.; Babalola, D.; Mclean, M.; Yu, Z.; Ma, W.; Chen, C.Y.; Arabi, M.; Mozaffarian, D. Information technology and lifestyle: A systematic evaluation of internet and mobile interventions for improving diet, physical activity, obesity, tobacco, and alcohol use. J. Am. Heart Assoc. 2016, 5, e003058.

5. Ungurean, L.; Brezulianu, A. An internet of things framework for remote monitoring of the healthcare parameters. Adv. Electr. Comput. Eng. 2017, 17, 11–16.

6. Ramanujam, E.; Perumal, T.; Padmavathi, S. Human activity recognition with smartphone and wearable sensors using deep learning techniques: A review. IEEE Sens. J. 2021, 21, 13029–13040.

7. Nweke, H.F.; Teh, Y.W.; Mujtaba, G.; Al-Garadi, M.A. Data fusion and multiple classifier systems for human activity detection and health monitoring: Review and open research directions. Inf. Fusion 2019, 46, 147–170.

8. Paraschiakos, S.; de Sá, S.; Okai, J.; Slagboom, E.; Beekman, M.; Knobbe, A. RNNs on Monitoring Physical Activity Energy Expenditure in Older People. arXiv 2020, arXiv:2006.01169. Available online: https://tinyurl.com/cfp7849a (accessed on 6 July 2021).

9. Trost, S.G.; Wong, W.K.; Pfeiffer, K.A.; Zheng, Y. Artificial neural networks to predict activity type and energy expenditure in youth. Med. Sci. Sport. Exerc. 2012, 44, 1801.

10. Jang, Y.; Song, Y.; Noh, H.W.; Kim, S. A basic study of activity type detection and energy expenditure estimation for children and youth in daily life using 3-axis accelerometer and 3-stage cascaded artificial neural network. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2860–2863.

11. Dimiccoli, M.; Cartas, A.; Radeva, P. Activity Recognition from Visual Lifelogs: State of the Art and Future Challenges; Elsevier Ltd.: Amsterdam, The Netherlands, 2018; pp. 121–134.

12. Kang, K.H.; Shin, S.H.; Jung, J.; Kim, Y.T. Estimation of a Physical Activity Energy Expenditure with a Patch-Type Sensor Module Using Artificial Neural Network; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2019; pp. 1–9.

13. Zeng, M.; Nguyen, L.T.; Yu, B.; Mengshoel, O.J.; Zhu, J.; Wu, P.; Zhang, J. Convolutional neural networks for human activity recognition using mobile sensors. In Proceedings of the 6th International Conference on Mobile Computing, Applications and Services, Austin, TX, USA, 6–7 November 2014; pp. 197–205.

14. Xu, T.; Zhou, Y.; Zhu, J. New advances and challenges of fall detection systems: A survey. Appl. Sci. 2018, 8, 418.

15. Sathyanarayana, S.; Satzoda, R.K.; Sathyanarayana, S.; Thambipillai, S. Vision-based patient monitoring: A comprehensive review of algorithms and technologies. J. Ambient Intell. Humaniz. Comput. 2018, 9, 225–251.

16. Sunny, J.T.; George, S.M.; Kizhakkethottam, J.J. Applications and Challenges of Human Activity Recognition using Sensors in a Smart Environment. IJIRST—Int. J. Innov. Res. Sci. Technol. 2015, 2, 50–57.

17. Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. (CSUR) 2014, 46, 1–33.

18. Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. Physical human activity recognition using wearable sensors. Sensors 2015, 15, 31314–31338.

19. Mannini, A.; Sabatini, A.M.; Intille, S.S. Accelerometry-based recognition of the placement sites of a wearable sensor. Pervasive Mob. Comput. 2015, 21, 62–74.

20. Fujinami, K.; Kouchi, S. Recognizing a Mobile Phone’s Storing Position as a Context of a Device and a User. In Mobile and Ubiquitous Systems: Computing, Networking, and Services, Proceedings of the International Conference on Mobile and Ubiquitous Systems: Computing, Networking, and Services, Beijing, China, 12–14 December 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 76–88.

21. Durmaz Incel, O. Analysis of movement, orientation and rotation-based sensing for phone placement recognition. Sensors 2015, 15, 25474–25506.

22. Kunze, K.; Lukowicz, P. Dealing with sensor displacement in motion-based onbody activity recognition systems. In Proceedings of the 10th International Conference on Ubiquitous Computing, Seoul, Republic of Korea, 21–24 September 2008; pp. 20–29.

23. Garnotel, M.; Simon, C.; Bonnet, S. Physical activity estimation from accelerometry. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6–10.

24. Kurban, O.C.; Yildirim, T. Daily motion recognition system by a triaxial accelerometer usable in different positions. IEEE Sens. J. 2019, 19, 7543–7552.

25. Clevenger, K.A.; Pfeiffer, K.A.; Montoye, A.H. Cross-generational comparability of hip-and wrist-worn ActiGraph GT3X+, wGT3X-BT, and GT9X accelerometers during free-living in adults. J. Sport. Sci. 2020, 38, 2794–2802.

26. Bao, L.; Intille, S.S. Activity recognition from user-annotated acceleration data. In Pervasive Computing, Proceedings of the International Conference on Pervasive Computing, Vienna, Austria, 21–23 April 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 1–17.

27. Altini, M.; Penders, J.; Vullers, R.; Amft, O. Estimating energy expenditure using body-worn accelerometers: A comparison of methods, sensors number and positioning. IEEE J. Biomed. Health Inform. 2014, 19, 219–226.

28. Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit. Lett. 2019, 119, 3–11.

29. Sharifani, K.; Amini, M. Machine learning and deep learning: A review of methods and applications. World Inf. Technol. Eng. J. 2023, 10, 3897–3904.

30. Kanjo, E.; Younis, E.M.; Ang, C.S. Deep learning analysis of mobile physiological, environmental and location sensor data for emotion detection. Inf. Fusion 2019, 49, 46–56.

31. Lara, O.D.; Labrador, M.A. A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutor. 2012, 15, 1192–1209.

32. Zhang, H.; Xiao, Z.; Wang, J.; Li, F.; Szczerbicki, E. A novel IoT-perceptive human activity recognition (HAR) approach using multihead convolutional attention. IEEE Internet Things J. 2019, 7, 1072–1080.

33. Hu, C.; Chen, Y.; Peng, X.; Yu, H.; Gao, C.; Hu, L. A Novel Feature Incremental Learning Method for Sensor-Based Activity Recognition. IEEE Trans. Knowl. Data Eng. 2019, 31, 1038–1050.

34. Kuncan, F.; Kaya, Y.; Kuncan, M. A novel approach for activity recognition with down-sampling 1D local binary pattern. Adv. Electr. Comput. Eng. 2019, 19, 35–44.

35. Wei, X.; Wang, Z. TCN-attention-HAR: Human activity recognition based on attention mechanism time convolutional network. Sci. Rep. 2024, 14, 7414.

36. Ray, L.S.S.; Geißler, D.; Liu, M.; Zhou, B.; Suh, S.; Lukowicz, P. ALS-HAR: Harnessing Wearable Ambient Light Sensors to Enhance IMU-based HAR. arXiv 2024, arXiv:2408.09527.

37. Liandana, M.; Hostiadi, D.P.; Pradipta, G.A. A New Approach for Human Activity Recognition (HAR) Using A Single Triaxial Accelerometer Based on a Combination of Three Feature Subsets. Int. J. Intell. Eng. Syst. 2024, 17, 235–250.

38. Geravesh, S.; Rupapara, V. Artificial neural networks for human activity recognition using sensor based dataset. Multimed. Tools Appl. 2023, 82, 14815–14835.

39. Hafeez, S.; Alotaibi, S.S.; Alazeb, A.; Al Mudawi, N.; Kim, W. Multi-Sensor-Based Action Monitoring and Recognition via Hybrid Descriptors and Logistic Regression. IEEE Access 2023, 11, 48145–48157.

40. Jantawong, P.; Jitpattanakul, A.; Mekruksavanich, S. Enhancement of Human Complex Activity Recognition using Wearable Sensors Data with InceptionTime Network. In Proceedings of the 2021 2nd International Conference on Big Data Analytics and Practices (IBDAP), Bangkok, Thailand, 26–27 August 2021.

41. Mekruksavanich, S.; Jitpattanakul, A.; Sitthithakerngkiet, K.; Youplao, P.; Yupapin, P. Resnet-se: Channel attention-based deep residual network for complex activity recognition using wrist-worn wearable sensors. IEEE Access 2022, 10, 51142–51154.

42. Lohit, S.; Wang, Q.; Turaga, P. Temporal transformer networks: Joint learning of invariant and discriminative time warping. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12426–12435.

43. Neverova, N.; Wolf, C.; Lacey, G.; Fridman, L.; Chandra, D.; Barbello, B.; Taylor, G. Learning human identity from motion patterns. IEEE Access 2016, 4, 1810–1820.

44. AlShorman, O.; Alshorman, B.; Masadeh, M.S. A review of physical human activity recognition chain using sensors. Indones. J. Electr. Eng. Inform. (IJEEI) 2020, 8, 560–573.

45. Karantonis, D.M.; Narayanan, M.R.; Mathie, M.; Lovell, N.H.; Celler, B.G. Implementation of a real-time human movement classifier using a triaxial accelerometer for ambulatory monitoring. IEEE Trans. Inf. Technol. Biomed. 2006, 10, 156–167.

46. Banos, O.; Garcia, R.; Holgado-Terriza, J.A.; Damas, M.; Pomares, H.; Rojas, I.; Saez, A. mHealthDroid: A novel framework for agile development of mobile health applications. In Proceedings of the International Workshop on Ambient Assisted Living, Belfast, UK, 2–5 December 2014; pp. 2–5.

47. Yang, J. Toward physical activity diary: Motion recognition using simple acceleration features with mobile phones. In Proceedings of the 1st International Workshop on Interactive Multimedia for Consumer Electronics, Beijing, China, 23 October 2009; pp. 1–10.

48. Fridolfsson, J.; Börjesson, M.; Buck, C.; Ekblom, Ö.; Ekblom-Bak, E.; Hunsberger, M.; Lissner, L.; Arvidsson, D. Effects of frequency filtering on intensity and noise in accelerometer-based physical activity measurements. Sensors 2019, 19, 2186.

49. Preece, S.J.; Goulermas, J.Y.; Kenney, L.P.; Howard, D. A comparison of feature extraction methods for the classification of dynamic activities from accelerometer data. IEEE Trans. Biomed. Eng. 2008, 56, 871–879.

50. Dehghani, A.; Sarbishei, O.; Glatard, T.; Shihab, E. A quantitative comparison of overlapping and non-overlapping sliding windows for human activity recognition using inertial sensors. Sensors 2019, 19, 5026.

51. San-Segundo, R.; Montero, J.M.; Barra-Chicote, R.; Fernández, F.; Pardo, J.M. Feature extraction from smartphone inertial signals for human activity segmentation. Signal Process. 2016, 120, 359–372.

52. Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J. A survey of online activity recognition using mobile phones. Sensors 2015, 15, 2059–2085.

53. Nweke, H.F.; Teh, Y.W.; Al-Garadi, M.A.; Alo, U.R. Deep learning algorithms for human activity recognition using mobile and wearable sensor networks: State of the art and research challenges. Expert Syst. Appl. 2018, 105, 233–261.

54. Bennasar, M.; Price, B.A.; Gooch, D.; Bandara, A.K.; Nuseibeh, B. Significant features for human activity recognition using tri-axial accelerometers. Sensors 2022, 22, 7482.

55. Gil-Martín, M.; San-Segundo, R.; Fernandez-Martinez, F.; Ferreiros-López, J. Improving physical activity recognition using a new deep learning architecture and post-processing techniques. Eng. Appl. Artif. Intell. 2020, 92, 103679.

56. Dua, N.; Singh, S.N.; Semwal, V.B. Multi-input CNN-GRU based human activity recognition using wearable sensors. Computing 2021, 103, 1461–1478.

57. Kutlay, M.A.; Gagula-Palalic, S. Application of machine learning in healthcare: Analysis on MHEALTH dataset. Southeast Eur. J. Soft Comput. 2016, 4.

58. Cosma, G.; Mcginnity, T.M. Feature extraction and classification using leading eigenvectors: Applications to biomedical and multi-modal mHealth data. IEEE Access 2019, 7, 107400–107412.

59. Sztyler, T.; Stuckenschmidt, H. On-body localization of wearable devices: An investigation of position-aware activity recognition. In Proceedings of the 2016 IEEE International Conference on Pervasive Computing and Communications (PerCom), Sydney, NSW, Australia, 14–19 March 2016; pp. 1–9.

60. Ciuti, G.; Ricotti, L.; Menciassi, A.; Dario, P. MEMS sensor technologies for human centred applications in healthcare, physical activities, safety and environmental sensing: A review on research activities in Italy. Sensors 2015, 15, 6441–6468.

61. Janidarmian, M.; Roshan Fekr, A.; Radecka, K.; Zilic, Z. A comprehensive analysis on wearable acceleration sensors in human activity recognition. Sensors 2017, 17, 529.

62. Coskun, D.; Incel, O.D.; Ozgovde, A. Phone position/placement detection using accelerometer: Impact on activity recognition. In Proceedings of the 2015 IEEE Tenth International Conference on Intelligent Sensors, Sensor Networks and Information Processing (ISSNIP), Singapore, 7–9 April 2015; pp. 1–6.

63. O’Halloran, J.; Curry, E. A Comparison of Deep Learning Models in Human Activity Recognition and Behavioural Prediction on the MHEALTH Dataset. In Proceedings of the Irish Conference on Artificial Intelligence and Cognitive Science, Galway, Ireland, 5–6 December 2019; pp. 212–223.

64. Nguyen, Q.H.; Ly, H.B.; Ho, L.S.; Al-Ansari, N.; Le, H.V.; Tran, V.Q.; Prakash, I.; Pham, B.T. Influence of data splitting on performance of machine learning models in prediction of shear strength of soil. Math. Probl. Eng. 2021, 2021, 4832864.

| Authors | Methods | Accuracy |
|---|---|---|
| Geravesh and Rupapara [38] (2023) | KNN | 94% |
| Hafeez et al. [39] (2023) | Logistic Regression (LR) | 93% |
| Jantawong et al. [40] (2021) | InceptionTime model | 88% |
| Zhang et al. [32] (2019) | Multi-head Convolutional Attention | 95% |
| Mekruksavanich et al. [41] (2022) | ResNet-SE model | 94–97% |
| Lohit et al. [42] (2019) | Temporal Transformer Networks | 78% |
| Neverova et al. [43] (2016) | Dense Clockwork RNN | 93% |
| Our proposed approach | FFNN | 95–97% |

| Authors | Dataset | Sensor | Model | Feature Extraction Domain | Accuracy |
|---|---|---|---|---|---|
| Bennasar et al. [54] (2022) | WISDM | Acc | SVM, KNN | Frequency, Time | 90.6–93.2% |
| Gil-Martín et al. [55] (2020) | PAMAP2 | Acc | CNNs | Frequency | 89.8–96.6% |
| Dua et al. [56] (2021) | PAMAP2 | Acc, Gyro, Mag | Multi-Input CNN-GRU | Time | 95.2% |
| Kutlay et al. [57] (2016) | mHealth | Acc, Gyro, Mag | SVM, MLP | Time | 91.7%, 83.2% |
| Cosma et al. [58] (2019) | mHealth | Acc, Gyro | KNN | Time, Frequency | 47.5–82.3% |
| Proposed Model | mHealth | Acc | FFNN | Time | 95–97% |

| Label | Activity | Duration |
|---|---|---|
| L1 | Standing | 1 min |
| L2 | Sitting and relaxing | 1 min |
| L3 | Lying down | 1 min |
| L4 | Walking | 1 min |
| L5 | Climbing stairs | 1 min |
| L6 | Forward waist bends | 20× |
| L7 | Frontal elevation of arms | 20× |
| L8 | Knee-bending (crouching) | 20× |
| L9 | Cycling | 1 min |
| L10 | Jogging | 1 min |
| L11 | Running | 1 min |
| L12 | Jump front–back | 20× |

| Features |
|---|
| Curvature radius in a coronal plane |
| Curvature radius in a sagittal plane |
| Curvature radius in a transverse plane |
| Mean acceleration in (x, y, z) axes |
| Mean velocity in (x, y, z) axes |
| Mean position in (x, y, z) axes |
| Variance of acceleration in (x, y, z) axes |
| Variance of velocity in (x, y, z) axes |
| Variance of position in (x, y, z) axes |
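As a rough illustration of how the features in the table above might be assembled for one data window, the sketch below concatenates three plane-wise radii with the per-axis means and variances of acceleration, velocity and position. The function name `window_features`, the ordering of the entries, the use of the population variance, and the resulting length of 21 values are assumptions inferred from the table rows, not details stated in the article.

```python
import numpy as np


def window_features(acc, vel, pos, radii):
    """Build one feature vector from a window of kinematic signals.

    acc, vel, pos: arrays of shape (n_samples, 3), one column per axis.
    radii: three curvature radii (coronal, sagittal, transverse), computed elsewhere.
    """
    signals = [np.asarray(s, dtype=float) for s in (acc, vel, pos)]
    means = [s.mean(axis=0) for s in signals]      # 3 signals x 3 axes
    variances = [s.var(axis=0) for s in signals]   # population variance (ddof=0)
    return np.concatenate([np.asarray(radii, dtype=float)] + means + variances)


# Example with synthetic data standing in for one window (hypothetical values).
rng = np.random.default_rng(0)
acc = rng.normal(size=(100, 3))
vel = rng.normal(size=(100, 3))
pos = rng.normal(size=(100, 3))
radii = [0.4, 0.7, 1.2]
features = window_features(acc, vel, pos, radii)
```

A vector built this way could be passed, one per window, to a classifier such as the FFNN named in the comparison tables.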

| Subject | Chest accuracy | Wrist accuracy | Ankle accuracy |
|---|---|---|---|
| Subject 1 | 96.34% | 98.12% | 94.72% |
| Subject 2 | 96.87% | 98.25% | 95.21% |
| Subject 3 | 97.21% | 98.08% | 94.85% |
| Subject 4 | 96.12% | 97.99% | 95.67% |
| Subject 5 | 97.85% | 98.43% | 94.18% |
| Subject 6 | 96.63% | 98.51% | 95.73% |
| Subject 7 | 95.21% | 97.92% | 95.49% |
| Subject 8 | 97.34% | 98.67% | 94.92% |
| Subject 9 | 97.58% | 97.84% | 93.67% |
| Subject 10 | 97.96% | 98.29% | 95.26% |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cavita-Huerta, E.; Reyes-Reyes, J.; Romero-Ugalde, H.M.; Osorio-Gordillo, G.L.; Escobar-Jiménez, R.F.; Alvarado-Martínez, V.M. Human Activity Recognition from Accelerometry, Based on a Radius of Curvature Feature. Math. Comput. Appl. 2024, 29, 80. https://doi.org/10.3390/mca29050080
