Psychometric Properties of the Science Self-Efficacy Scale for STEMM Undergraduates

Abstract

1. Introduction

2. Methods

2.1. Data Source

2.2. Science Self-Efficacy Scale (SSES)

2.3. Analyses

2.3.1. Descriptive Analysis

2.3.2. Construct Validity: Dimensionality, Reliability Coefficients, and Item Response Theory Modeling

2.3.3. Validity Based on Other Related Traits and Outcomes

3. Results

3.1. Descriptives and Response Percentages

3.2. Construct Validity

3.2.1. Dimensionality

3.2.2. Reliability Coefficients

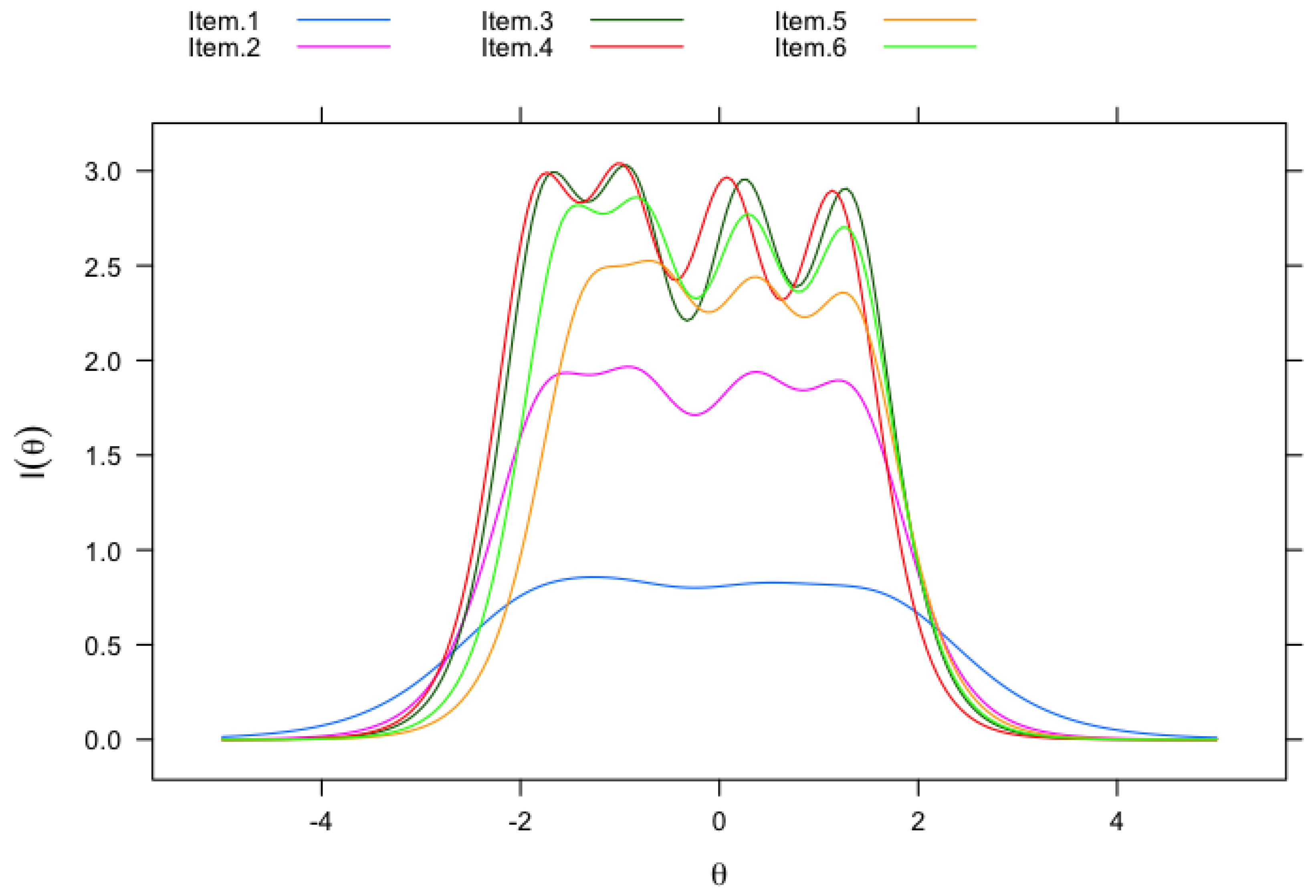

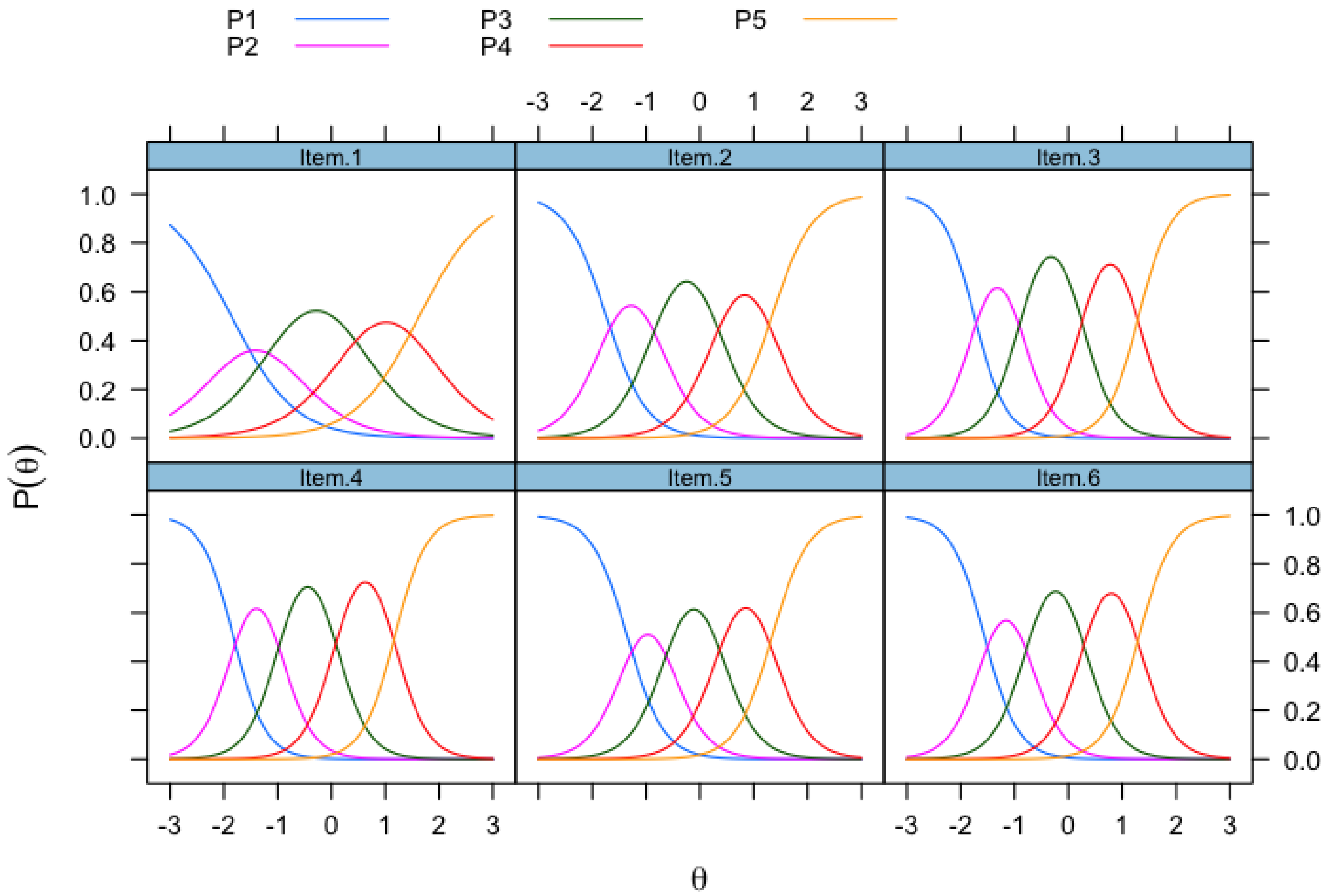

3.2.3. Item Response Theory Model: Model Parameters

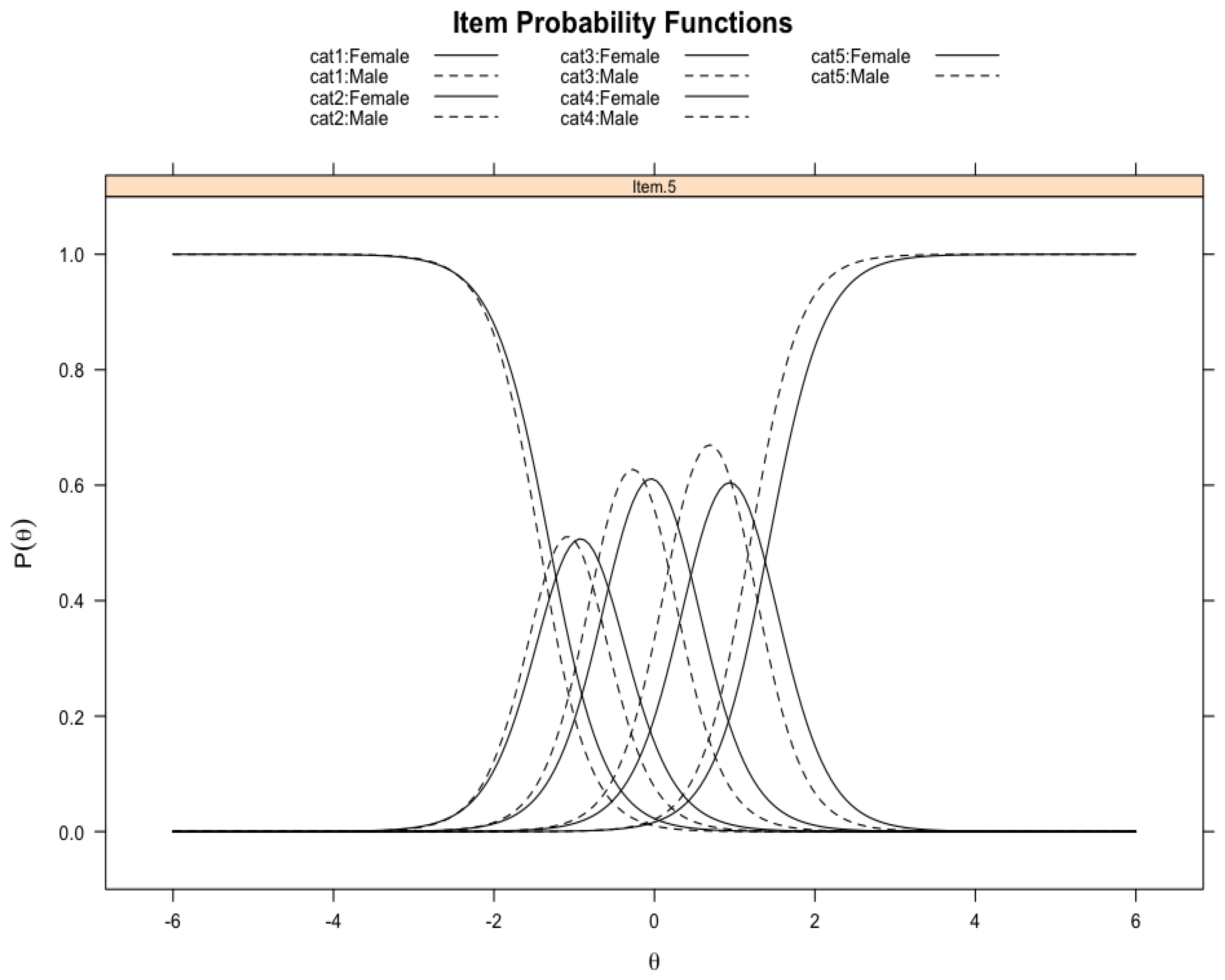

3.2.4. Item Response Theory Model: Differential Item Functioning

3.3. Validity Based on Related Traits and Outcomes: Science Identity and Graduation

4. Discussion

4.1. Limitations

4.2. Recommendations for STEMM Research and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Note 1: With respect to race/ethnicity, we had five groups: Asian, AIAN, Black/African American, Latine, and White.


| Variables | N (%) |
|---|---|
| Gender | |
| Women | 6030 (60.12) |
| Men | 2935 (29.27) |
| Race/Ethnicity | |
| AIAN | 388 (4.44) |
| Asian | 1906 (21.79) |
| Black/AA | 1389 (15.88) |
| Latine | 2899 (33.14) |
| NHPI | 78 (0.89) |
| White | 2087 (23.86) |
| Major | |
| Biomed Sciences and Engineering | 4281 (51.36) |
| Biomed Social/Behavioral Sciences | 973 (11.67) |
| Non-Biomedical | 3082 (36.97) |

| Item Number | Item Description | Not at all Confident (1), % | A little Confident (2), % | Somewhat Confident (3), % | Very Confident (4), % | Absolutely Confident (5), % |
|---|---|---|---|---|---|---|
| Item 1 | Use technical science skills (use of tools, instruments, and/or techniques) | 9.84 | 14.68 | 37.30 | 25.80 | 12.38 |
| Item 2 | Generate a research question | 7.44 | 16.50 | 37.23 | 26.15 | 12.67 |
| Item 3 | Determine how to collect appropriate data | 6.20 | 14.38 | 38.37 | 29.38 | 11.67 |
| Item 4 | Explain the results of a study | 5.49 | 13.28 | 33.95 | 33.14 | 14.13 |
| Item 5 | Use scientific literature to guide research | 12.15 | 17.76 | 33.35 | 24.99 | 11.75 |
| Item 6 | Integrate results from multiple studies | 8.46 | 15.95 | 36.00 | 27.73 | 11.87 |

| Items | Factor Loadings for the First Half of the Sample (EFA) | Factor Loadings for the Second Half of the Sample (CFA) |
|---|---|---|
| Item 1 | 0.65 | 0.66 |
| Item 2 | 0.78 | 0.78 |
| Item 3 | 0.84 | 0.84 |
| Item 4 | 0.83 | 0.84 |
| Item 5 | 0.81 | 0.80 |
| Item 6 | 0.83 | 0.82 |
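
As a rough illustration of how loadings like those in the table relate to scale reliability, the sketch below computes McDonald's omega for a one-factor model. It assumes the CFA loadings are standardized and the item residuals are uncorrelated, neither of which the table states. The function name `omega_total` is hypothetical, and the value it prints is an illustration rather than a coefficient reported by the authors.

```python
def omega_total(loadings):
    """McDonald's omega for one factor, assuming standardized loadings."""
    # Variance attributable to the common factor: (sum of loadings)^2.
    common = sum(loadings) ** 2
    # Unique variance per item is 1 - loading^2 under standardization (assumption).
    unique = sum(1.0 - loading ** 2 for loading in loadings)
    return common / (common + unique)


# CFA column of the factor-loading table (second half of the sample).
cfa_loadings = [0.66, 0.78, 0.84, 0.84, 0.80, 0.82]
omega = omega_total(cfa_loadings)
print(f"omega = {omega:.3f}")
```

Because every loading enters both the numerator and the unique-variance term, one weak item (here Item 1) lowers omega less than it would lower a simple average of the loadings.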

| Items | a (SE) | b1 (SE) | b2 (SE) | b3 (SE) | b4 (SE) |
|---|---|---|---|---|---|
| Item 1 | 1.69 (0.03) | −1.86 (0.03) | −0.97 (0.02) | 0.40 (0.02) | 1.63 (0.03) |
| Item 2 | 2.66 (0.04) | −1.74 (0.03) | −0.82 (0.02) | 0.32 (0.02) | 1.33 (0.02) |
| Item 3 | 3.36 (0.06) | −1.75 (0.03) | −0.89 (0.02) | 0.25 (0.01) | 1.30 (0.02) |
| Item 4 | 3.36 (0.06) | −1.82 (0.03) | −0.96 (0.02) | 0.08 (0.01) | 1.17 (0.02) |
| Item 5 | 2.99 (0.05) | −1.34 (0.02) | −0.59 (0.02) | 0.37 (0.01) | 1.34 (0.02) |
| Item 6 | 3.23 (0.06) | −1.56 (0.02) | −0.76 (0.02) | 0.28 (0.01) | 1.31 (0.02) |
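
To make the parameters in the table concrete, the sketch below turns one item's discrimination (a) and thresholds (b1 to b4) into response-category probabilities. It assumes the parameters come from a graded response model in the logistic a(θ − b) parameterization, which the five response categories and four ordered thresholds suggest but the table itself does not state. The function name `grm_category_probs` is hypothetical.

```python
import math


def grm_category_probs(theta, a, thresholds):
    """Category probabilities under a graded response model (assumed form)."""
    # Cumulative curves P(X >= k) for k = 2..K, bracketed by 1 (k = 1) and 0.
    cumulative = [1.0]
    cumulative += [1.0 / (1.0 + math.exp(-a * (theta - b))) for b in thresholds]
    cumulative.append(0.0)
    # Each category probability is the gap between adjacent cumulative curves.
    return [cumulative[k] - cumulative[k + 1] for k in range(len(cumulative) - 1)]


# Item 1 from the table: a = 1.69, b1..b4 = -1.86, -0.97, 0.40, 1.63.
probs = grm_category_probs(theta=0.0, a=1.69, thresholds=[-1.86, -0.97, 0.40, 1.63])
for category, p in enumerate(probs, start=1):
    print(f"P(response = {category} | theta = 0) = {p:.3f}")
```

Under these assumptions, a respondent at the average trait level (θ = 0) is most likely to choose category 3, "Somewhat Confident", on Item 1. Larger a values, as for Items 3 and 4, sharpen the transitions between adjacent categories.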

| Items | Gender: Wald Statistic | Gender: Adjusted p-Value | Race/Ethnicity: Wald Statistic | Race/Ethnicity: Adjusted p-Value |
|---|---|---|---|---|
| Item 1 | 1.338 | 0.412 | 1.007 | 0.631 |
| Item 2 | 3.816 | 0.152 | 0.017 | 0.898 |
| Item 3 | 1.195 | 0.412 | 0.207 | 0.898 |
| Item 4 | 0.017 | 0.927 | 1.346 | 0.631 |
| Item 5 | 7.206 | 0.044 | 0.03 | 0.898 |
| Item 6 | 0.008 | 0.927 | 1.411 | 0.631 |
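
The table does not say how the p-values were adjusted. As a hedged illustration, the sketch below assumes each Wald statistic is referred to a chi-square distribution with 1 degree of freedom and that the six p-values per grouping variable are adjusted with the Benjamini-Hochberg procedure. Both are assumptions inferred from the numbers, not methods confirmed by the source, and the function names are hypothetical.

```python
import math


def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square variable with 1 df."""
    return math.erfc(math.sqrt(x / 2.0))


def benjamini_hochberg(pvals):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(pvals)
    # Walk from the largest p-value down, enforcing monotone adjusted values.
    order = sorted(range(m), key=lambda i: pvals[i], reverse=True)
    adjusted = [0.0] * m
    running_min = 1.0
    for position, i in enumerate(order):
        rank = m - position  # 1-based rank in ascending order
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Gender Wald statistics for Items 1-6, copied from the table.
gender_wald = [1.338, 3.816, 1.195, 0.017, 7.206, 0.008]
raw_p = [chi2_sf_df1(w) for w in gender_wald]
adjusted_p = benjamini_hochberg(raw_p)
for item, (w, p) in enumerate(zip(gender_wald, adjusted_p), start=1):
    print(f"Item {item}: Wald = {w:.3f}, adjusted p = {p:.3f}")
```

Under these assumptions the output approximately reproduces the gender column; small differences in the third decimal are expected because the Wald statistics are rounded. Item 5 is the only item whose adjusted p-value falls below 0.05.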

| Model | df | BIC | RMSEA | ChiSq Difference | Pr(>ChiSq) |
|---|---|---|---|---|---|
| Model 1 | 18 | 114,028 | | | |
| Model 2 | 23 | 113,988 | 0.00314 | 5.194 | 0.3927 |
| Model 3 | 28 | 113,973 | 0.0355 | 29.792 | 1.621 × 10⁻⁵ *** |
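
The p-values in the model comparison table can be checked by hand. The sketch below assumes each "ChiSq Difference" is referred to a chi-square distribution whose degrees of freedom equal the difference in the df column between adjacent models (23 − 18 = 5 and 28 − 23 = 5). It uses a closed-form tail probability for 5 degrees of freedom so that only the standard library is needed; the function name `chi2_sf_df5` is hypothetical.

```python
import math


def chi2_sf_df5(x):
    """Upper-tail probability of a chi-square variable with 5 df."""
    # Closed form for odd degrees of freedom, specialised to df = 5.
    tail = math.erfc(math.sqrt(x / 2.0))
    correction = math.sqrt(2.0 * x / math.pi) * math.exp(-x / 2.0) * (1.0 + x / 3.0)
    return tail + correction


# Chi-square differences copied from the table.
p_model2_vs_1 = chi2_sf_df5(5.194)
p_model3_vs_2 = chi2_sf_df5(29.792)
print(f"Model 2 vs Model 1: p = {p_model2_vs_1:.4f}")
print(f"Model 3 vs Model 2: p = {p_model3_vs_2:.3e}")
```

Under this assumption the two values agree with the reported 0.3927 and 1.621 × 10⁻⁵. For other degrees of freedom, a general routine such as `scipy.stats.chi2.sf` would be the more convenient choice.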


© 2025 by the authors. Published by MDPI on behalf of the University Association of Education and Psychology. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Srinivasan, J.; Cobian, K.P.; Jeon, M. Psychometric Properties of the Science Self-Efficacy Scale for STEMM Undergraduates. Eur. J. Investig. Health Psychol. Educ. 2025, 15, 124. https://doi.org/10.3390/ejihpe15070124
