Abstract

To date, parallel simulation algorithms for spiking neural P (SNP) systems are based on a matrix representation. This way, the simulation is implemented with linear algebra operations, which can be easily parallelized on high performance computing platforms such as GPUs. Although this has been convenient for the first generation of GPU-based simulators, such as CuSNP, some bottlenecks remain. For example, the proposed matrix representations of SNP systems lead to very sparse matrices, where the majority of values are zero. Sparse matrices are known to compromise the performance of algorithms, since they waste both memory and time. This problem has been extensively studied in the parallel computing literature. In this paper, we analyze some of these ideas and apply them to represent some variants of SNP systems. We also provide a new simulation algorithm based on a novel compressed representation for sparse matrices. Finally, we determine which SNP system variant best suits our new compressed matrix representation.

1. Introduction

Membrane computing [,] is an interdisciplinary research area mainly at the intersection of computer science and cellular biology [], but also connected with many other fields such as engineering, neuroscience, systems biology, chemistry, etc. The aim is to study computational devices called P systems, taking inspiration from how living cells process information. Spiking neural P (SNP) systems [] are a type of P system composed of a directed graph inspired by how neurons are interconnected by axons and synapses in the brain. Neurons communicate through spikes, and the time difference between them plays an important role in the computation. Therefore, this model belongs to the so-called third generation of artificial neural networks, i.e., those based on spikes.

Aside from computing numbers, SNP systems can also compute strings, and hence, languages. More general ways to provide the input or receive the output include the use of spike trains, i.e., a stream or sequence of spikes entering or leaving the system. Further results and details on computability, complexity, and applications of spiking neural P systems can be found in [,,], in a dedicated chapter of the Handbook [], and in an extensive bibliography up to February 2016 []. Moreover, there is a wide range of SNP system variants: with delays, with weights [], with astrocytes [], with anti-spikes [], with dendrites [], with rules on synapses [], with scheduled synapses [], with stochastic firing [], numerical [], etc.

The research on applications and variants of SNP systems has required the development of simulators. The simulation of SNP systems was initially carried out through sequential simulators such as pLinguaCore []. In 2010, a matrix representation of SNP systems was introduced []. Since then, most simulation algorithms have been based on matrix and vector representations, and consist of a set of linear algebra operations. This way, parallel simulators can be efficiently implemented, since matrix-vector multiplications are easy to parallelize. Moreover, there are efficient algebra libraries that can be used out-of-the-box, although they have not been explored yet for this purpose. For instance, GPUs are parallel devices optimized for certain matrix operations [], and can handle them efficiently. These matrix representations of SNP systems therefore fit well the highly parallel architecture of these devices. This has already been harnessed by introducing CuSNP, a set of simulators for SNP systems implemented with CUDA [,,,]. Simulators for specific solutions have also been defined in the literature [,]. Moreover, this is not unique to SNP systems: many simulators for other P system variants have been accelerated on GPUs [,,].

However, this matrix representation can be sparse (i.e., having a majority of zero values) because the directed graph of SNP systems is not usually fully connected. A first approach to tackle this problem was presented in [], where some of the ideas described in this work were first outlined. Following these ideas, in [], the transition matrix was split to reduce the memory footprint of the SNP representation. In many disciplines, sparse vector-matrix operations are very common, and hence, many solutions based on compressed implementations have been proposed in the literature [].

In this paper, we introduce compressed representations for the simulation of SNP systems based on sparse vector-matrix operations. First, we provide two approaches to compress the transition matrix for the simulation of SNP systems with a static graph. Second, we extend these algorithms and data structures to SNP systems with dynamic graphs (division, budding, and plasticity). Finally, we carry out a complexity analysis and comparison of the algorithms to draw some conclusions.

The paper is structured as follows: Section 2 provides the concepts required for the methods and algorithms defined here; Section 3 defines the designs of the representations; Section 4 contains the detailed algorithms based on the compressed representations; Section 5 shows the results of the complexity analyses of the algorithms; Section 6 provides final conclusions, remarks, and plans for future work.

2. Preliminaries

In this section we briefly introduce the concepts employed in this work. First, we define the standard model of spiking neural P systems and three variants. Second, a matrix-based simulation algorithm for this model is revisited. Third, the fundamentals of compressed formats for sparse matrix-vector operations are given.

2.1. Spiking Neural P Systems

Let us first formally introduce the definition of spiking neural P system. This model was first introduced in [].

Definition 1.

A spiking neural P system of degree is a tuple

where:

- is the singleton alphabet (a is called spike);

- represents the arcs of a directed graph whose nodes are ;

- are neurons of the form where:

  - is the initial number of spikes within neuron labeled by i; and

  - is a finite set of rules associated to neuron labeled by i, of one of the following forms:

    - (1) , being E a regular expression over , (firing rules);

    - (2) for some , with the restriction that for each rule of type (1) from , we have (forgetting rules).

- such that , for any .

A spiking neural P system of degree can be viewed as a set of q neurons interconnected by the arcs of a directed graph , called synapse graph. There is a distinguished neuron label , called output neuron (), which communicates with the environment.

If a neuron contains k spikes at an instant t, and , then the rule can be applied. By the application of that rule, c spikes are removed from neuron and the neuron fires producing p spikes immediately. Thus, each neuron such that receives p spikes. For , the output neuron , the spikes are sent to the environment.

The rules of type are forgetting rules, and they are applied as follows: If neuron contains exactly s spikes, then the rule from can be applied. By the application of this rule all s spikes are removed from .
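The applicability conditions described above can be sketched in Python. This is a minimal illustration, not the simulator's code: it assumes that a firing rule with regular expression E and consumption c is enabled when the whole content of the neuron matches E and at least c spikes are present, and that a forgetting rule is enabled with exactly s spikes. The function names and the example rule are hypothetical.

```python
import re

def firing_rule_applicable(regex: str, c: int, spikes: int) -> bool:
    """Check whether a firing rule E/a^c -> a^p is enabled.

    Assumption: the rule is enabled when the whole content a^spikes
    matches the regular expression E and at least c spikes are present.
    """
    return spikes >= c and re.fullmatch(regex, "a" * spikes) is not None

def forgetting_rule_applicable(s: int, spikes: int) -> bool:
    """A forgetting rule a^s -> lambda needs exactly s spikes."""
    return spikes == s

def apply_firing_rule(spikes: int, c: int, p: int) -> tuple[int, int]:
    """Return (spikes left in the neuron, spikes sent along each synapse)."""
    return spikes - c, p

# Hypothetical rule a(aa)*/a -> a: enabled for an odd number of spikes.
print(firing_rule_applicable(r"a(aa)*", 1, 3))   # True
print(firing_rule_applicable(r"a(aa)*", 1, 4))   # False
print(apply_firing_rule(3, 1, 1))                # (2, 1)
```

Using `re.fullmatch` rather than `re.match` matters here, because membership in the language of E concerns the entire spike content, not a prefix of it.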

In spiking neural P systems, a global clock is assumed, marking the time for the whole system. Only one rule can be executed in each neuron at step t. As models of computation, spiking neural P systems are Turing complete, i.e., as powerful as Turing machines. On one hand, a common way to introduce the input (instance of the problem to solve) to the system is to encode it into some or all of the initial spikes ’s (inside each neuron i). On the other hand, a common way to obtain the output is by observing neuron : either by getting the interval where sent its first two spikes at times and (we say n is computed or generated by the system), or by counting all the spikes sent by to the environment until the system halts.

For the rest of the paper, we call this model spiking neural P systems with static structure, or just static SNP, given that the graph associated with it does not change along the computation. Next, we briefly introduce three variants with a dynamic graph: division, budding, and plasticity. A broader explanation of them and more variants are provided in [,,,].

Finally, let us introduce some notations and definitions:

- : for a neuron , the presynapses of this neuron is .

- : for a neuron , the out degree of this neuron is: .

- : for a neuron , the insynapses of this neuron is .

- : for a neuron , the in degree of this neuron is: .
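These notions can be computed directly from the set of synapses. The sketch below assumes that synapses are stored as pairs (source, target), that the presynapses of a neuron are the targets of its outgoing synapses, and that the insynapses are the sources of its incoming ones. The helper names `pres` and `ins` and the example graph are illustrative only.

```python
def pres(syn, i):
    """Neurons reached by the outgoing synapses of neuron i (assumed meaning)."""
    return {dst for (src, dst) in syn if src == i}

def ins(syn, i):
    """Neurons with a synapse going into neuron i (assumed meaning)."""
    return {src for (src, dst) in syn if dst == i}

# Hypothetical synapse graph over neurons 1, 2, 3.
syn = {(1, 2), (1, 3), (2, 3), (3, 1)}
neurons = (1, 2, 3)

out_degree = {i: len(pres(syn, i)) for i in neurons}
in_degree = {i: len(ins(syn, i)) for i in neurons}
max_out_degree = max(out_degree.values())

print(out_degree)      # {1: 2, 2: 1, 3: 1}
print(in_degree)       # {1: 1, 2: 1, 3: 2}
print(max_out_degree)  # 2
```

The maximum out degree is the quantity that later bounds the height of the compressed matrices.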

2.1.1. Spiking Neural P Systems with Budding Rules

Budding rules are an abstraction of neuronal budding, where a cell is divided into two new cells. In this process, the new neurons can differ in some aspects: their connections, contents, and shape. A budding rule has the following form:

where E is a regular expression and .

If a neuron contains s spikes, , and there is no neuron such that there exists a synapse in the system, then the rule is enabled and it can be executed. A new neuron is created, and both neurons and are empty after the execution of the rule. This neuron keeps all the synapses that were going in, and this inherits all the synapses that were going out of in the previous configuration. There is also a synapse between neurons and , and the rest of synapses of are given to the neuron depending on the synapses of .

2.1.2. Spiking Neural P Systems with Division Rules

Inspired by the process of mitosis, division rules have been widely used within the field of membrane computing. In SNP systems, a division rule can be defined as follows:

where E is a regular expression and .

If a neuron contains s spikes, , and there is no neuron such that the synapse or exists in the system, , then the rule is enabled and it can be executed. Neuron is then divided into two new cells, and . The new cells are empty at the time of their creation. The new neurons keep the synapses previously associated to the original neuron , that is, if there was a synapse from to , then a new synapse from to and a new one to are created, and if there was a synapse from to , then a new synapse from to and a new one from to are created. The rest of synapses of and are given by the ones defined in .

2.1.3. Spiking Neural P Systems with Plasticity Rules

It is known that new synapses can be created in the brain thanks to the process of synaptogenesis. We can recreate this process in the framework of spiking neural P systems defining plasticity rules in the following form:

where E is a regular expression, , , and (a.k.a. neuron set). Recall that is the set of presynapses of neuron .

If a neuron contains s spikes, , then the rule is enabled and can be executed. The rule consumes c spikes and, depending on the value of , it performs one of the following:

- If and , or if and , then there is nothing more to do.

- If and , deterministically create a synapse to every , . Otherwise, if , then non-deterministically select k neurons in and create one synapse to each selected neuron.

- If and , deterministically delete all synapses in . Otherwise, if , then non-deterministically select k neurons in and delete each synapse to the selected neurons.

- If , create (respectively, delete) synapses at time t and then delete (resp., create) synapses at time . Even when this rule is applied, neurons are still open, that is, they can continue receiving spikes.

Let us notice that if, for some , we apply a plasticity rule with , when a synapse is created, a spike is sent from to the neuron that has been connected. That is, when attaches to through this method, we have immediately transferring one spike to .

2.2. Matrix Representation for SNP Systems

Usually, parallel P system simulators make use of ad-hoc representations, tailored for a certain variant [,,]. In order to ease the simulation of static SNP systems and their deployment to parallel environments, a matrix representation was introduced []. By using a set of algebraic operations, it is possible to reproduce the transitions of a computation. Although the baseline representation only covers SNP systems without delays and with static structure, many extensions have followed, such as for enabling delays [,], handling non-determinism [], plasticity rules [], rules on synapses [], and dendrite P systems []. In this section we briefly introduce the definitions for the matrix representation of the basic model of spiking neural P systems without delays, as defined above. We also provide the pseudocode to simulate just one computation of any P system of the variant using this matrix representation. In our notation, we use capital letters for vectors and matrices, and for accessing values: is the value at position i of the vector V, and is the value at row i and column j of matrix M.

For an SNP system of degree (q neurons and m rules, where ), we define the following vectors and matrices:

Configuration vector: is the vector containing all spikes in every neuron on the k computation step/time, where denotes the initial configuration; i.e., , for neuron . It contains q elements.

Spiking vector: shows if a rule is going to fire at the transition step k (having value 1) or not (having value 0). Given the non-deterministic nature of SNP systems, there can be a set of valid spiking vectors, which is denoted as . However, for the computation of the next configuration vector, only one spiking vector is used. It contains m elements.

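Transition matrix: $M_\Pi$ is a matrix comprised of $m \cdot q$ elements $a_{ij}$ given as

$$a_{ij} = \begin{cases} -c & \text{if rule } r_i \text{ is in neuron } \sigma_j \text{ and consumes } c \text{ spikes when applied}, \\ p & \text{if rule } r_i \text{ is in neuron } \sigma_s\ (s \neq j,\ (s,j) \in syn) \text{ and produces } p \text{ spikes when applied}, \\ 0 & \text{otherwise}. \end{cases}$$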

In this representation, rows represent rules and columns represent neurons in the spiking transition matrix. Note also that a negative entry corresponds to the consumption of spikes. Thus, it is easy to observe that each row has exactly one negative entry, and each column has at least one negative entry [].

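Hence, to compute the transition k, it is enough to select a spiking vector $S_k$ from all possibilities and calculate $C_{k+1} = C_k + S_k \cdot M_\Pi$, where $C_k$ is the configuration vector at step k and $M_\Pi$ is the transition matrix.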
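As an illustration of this transition step, the following Python sketch builds an uncompressed transition matrix and applies one step as "configuration plus spiking vector times matrix". It assumes 0-based neuron indexes, rules given as hypothetical `(neuron, c, p)` tuples, and no synapse from a neuron to itself. The three-neuron system is made up for the example.

```python
def build_transition_matrix(rules, syn, q):
    """Build the m x q transition matrix (rows are rules, columns are neurons).

    rules: list of (neuron, c, p) tuples; syn: set of (source, target) pairs.
    Assumes there is no synapse from a neuron to itself.
    """
    matrix = []
    for neuron, c, p in rules:
        row = [0] * q
        row[neuron] = -c               # consumption in the rule's own neuron
        for src, dst in syn:
            if src == neuron:
                row[dst] = p           # production towards each connected neuron
        matrix.append(row)
    return matrix

def next_configuration(config, spiking, matrix):
    """Return config + spiking * matrix (vector-matrix product)."""
    nxt = list(config)
    for j, fired in enumerate(spiking):
        for i, value in enumerate(matrix[j]):
            nxt[i] += fired * value
    return nxt

# Hypothetical system: one rule per neuron, the last one a forgetting rule (p = 0).
rules = [(0, 1, 1), (1, 2, 1), (2, 1, 0)]
syn = {(0, 1), (0, 2), (1, 2)}
matrix = build_transition_matrix(rules, syn, q=3)

print(matrix)                                           # [[-1, 1, 1], [0, -2, 1], [0, 0, -1]]
print(next_configuration([1, 2, 1], [1, 1, 1], matrix)) # [0, 1, 2]
```

Six of the nine entries are non-zero here only because the example is tiny; with many neurons and few synapses per neuron, most entries of each row are zero.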

The pseudocode to simulate a computation of an SNP system is described in Algorithm 1. The selection of valid spiking vectors can be done in different ways, as in [,]. This returns a set of valid spiking vectors. In this work, we focus on just one computation, but non-determinism can be tackled by maintaining a queue of generated configurations [].

Algorithm 1. MAIN PROCEDURE: simulating one computation for static spiking neural P systems.

In this work we focus on compressing the representation, specifically the transition matrix, so the way the spiking vector is determined does not affect these designs. Therefore, we use a straightforward approach and select just one valid spiking vector randomly. The representations depicted here only affect how the computation of the next configuration is done (matrix-vector multiplication at line 6 in Algorithm 1).

2.3. Sparse Matrix-Vector Operations

Algebraic operations have been studied deeply in parallel computing solutions. Specifically, GPU computing provides large speedups when accelerating this kind of operation. This technology allows us to run scientific computations in parallel on the GPU, given that a GPU device typically contains thousands of cores and high memory bandwidth []. However, parallel computing on a GPU has more constraints than on a CPU: threads have to run in an SPMD fashion while accessing data in a coalesced way; that is, the best performance is achieved when the execution of threads is synchronized and they access contiguous data from memory. In fact, GPUs have been employed for P system simulations since the introduction of CUDA.

Matrix computation is a highly optimized operation in CUDA [], and there are many efficient libraries for algebra computations like cuBLAS. Large matrices are often almost “empty”, that is, with a majority of zero values. Such a matrix is known as a sparse matrix, and it degrades the runtime in two ways: a lot of memory is wasted, and a lot of operations are redundant.

Given the importance of linear algebra in many computational disciplines, sparse vector-matrix operations (SpMV) have been a subject of study in parallel computing (and so, on GPUs). Today there exist many approaches to tackle this problem []. Let us focus on two formats to represent sparse matrices in a compressed way, assuming that threads access rows in parallel:

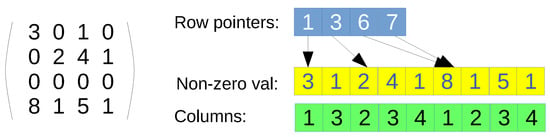

- CSR format. Only non-null values are represented by using three arrays: row pointers, non-zero values, and columns (see Figure 1 for an example). First, the row-pointers array is accessed, which contains a position per row of the original matrix. Each position gives the index where the row starts in the non-zero values and columns arrays. The non-zero values and the columns arrays can be seen as a single array of pairs, since every entry has to be accessed at the same time. Once a row is indexed, a loop over the values in that row has to be performed, so that the corresponding column is found, and therefore, the value. If the column is not present, then the value is assumed to be zero, since this data structure contains all non-zero values. The main advantage is that it is a full-compressed format if , where and are the number of non-zero and zero values in the original matrix, respectively. However, the drawback is that the search of elements in the non-zero values and columns arrays is not coalesced when using parallelism per row. Moreover, since it is a full-compressed format, there is no room for modifying the values, such as introducing new non-zero values.

Figure 1. CSR format example. Non-zero val array stores the non-null values, columns array stores the column indexes, and row pointers are the positions where each row starts in the previous arrays.

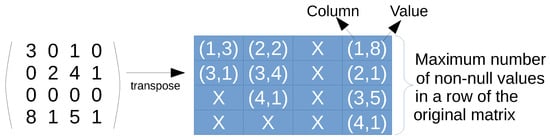

- ELL format. This representation aims at increasing the memory coalescing access of threads in CUDA. This is achieved by using a matrix of pairs, containing a trimmed, transposed version of the original matrix (see Figure 2 for an example). Each column of the ELL matrix is devoted to one row of the original matrix, even if that row is empty (all elements are zero). Every element is a pair, where the first position denotes the column and the second is the value, of only the non-zero elements in the corresponding row. However, the size of the matrix is fixed: the number of columns equals the number of rows of the original matrix, and the number of rows is the maximum length of a row in terms of non-zero values; in other words, the maximum amount of non-zero elements in a row of the original matrix. Rows containing fewer elements pad the difference with null elements. The main advantage of this format is that threads always access the elements of all rows in a coalesced way, and the null elements padded by short rows can be utilized to incorporate new data. However, there is a waste of memory, which is worse when the rows are unbalanced in terms of number of zeros.

Figure 2. ELL format example. Note that it represents the transpose of the original matrix to increase the coalesced access in GPU devices. It includes pairs of column and value for every row. The compressed matrix has a number of columns equal to the number of original rows, and a number of rows equal to the maximum amount of non-null values in an original row.
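The two formats can be contrasted with a small Python sketch. It is an illustrative, sequential rendering under a few assumptions: the row-pointers array has one extra trailing entry (a common CSR convention that marks the end of the last row), ELL padding is the pair `(0, 0)`, and the example matrix is made up. Function names are hypothetical.

```python
def to_csr(matrix):
    """Return (row_ptr, cols, vals) for a dense matrix given as a list of rows."""
    row_ptr, cols, vals = [0], [], []
    for row in matrix:
        for j, v in enumerate(row):
            if v != 0:
                cols.append(j)
                vals.append(v)
        row_ptr.append(len(vals))      # start of the next row
    return row_ptr, cols, vals

def to_ell(matrix):
    """Return the transposed ELL matrix: ell[k][i] is the k-th pair of row i."""
    compact = [[(j, v) for j, v in enumerate(row) if v != 0] for row in matrix]
    height = max((len(r) for r in compact), default=0)
    return [[r[k] if k < len(r) else (0, 0) for r in compact]
            for k in range(height)]

def spmv_csr(row_ptr, cols, vals, x):
    """Matrix-vector product; each original row could be handled by one thread."""
    return [sum(vals[k] * x[cols[k]] for k in range(row_ptr[i], row_ptr[i + 1]))
            for i in range(len(row_ptr) - 1)]

def spmv_ell(ell, n_rows, x):
    """Matrix-vector product over ELL; padding pairs contribute zero."""
    y = [0] * n_rows
    for ell_row in ell:                # threads read one contiguous ELL row at a time
        for i, (col, val) in enumerate(ell_row):
            y[i] += val * x[col]
    return y

dense = [[5, 0, 0, 1],
         [0, 0, 0, 0],
         [0, 2, 3, 0]]
x = [1, 2, 3, 4]

print(to_csr(dense))                     # ([0, 2, 2, 4], [0, 3, 1, 2], [5, 1, 2, 3])
print(to_ell(dense))                     # [[(0, 5), (0, 0), (1, 2)], [(3, 1), (0, 0), (2, 3)]]
print(spmv_csr(*to_csr(dense), x))       # [9, 0, 13]
print(spmv_ell(to_ell(dense), 3, x))     # [9, 0, 13]
```

In the ELL result, the empty second row of the original matrix still occupies a full column of padding pairs, which is the memory overhead mentioned above, but also the slack that allows new entries to be written later without reallocating.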


3. Methods

SNP systems in the literature typically do not contain fully connected graphs. This means that the transition matrix gets very sparse and, therefore, both computing time and memory are wasted. However, further optimizations based on SpMV can be applied. In the following subsections we discuss some approaches. Of course, if the graph inherent to an SNP system leads to a dense transition matrix (with few zero values), then the normal, uncompressed format (called sparse representation in this paper) can be employed, because using compressed formats would increase the memory footprint.

In this work, we focus on the basic model of spiking neural P systems without delays as defined above, as well as three variants with dynamic networks: budding, division and plasticity. The set of algorithms defined next are designed to take advantage of data parallelism, which is convenient for GPUs and vector co-processors. Their pseudocodes are detailed in Section 4 of this paper.

In Algorithm 2 we generalize the pseudocode given in Algorithm 1 to be able to handle both static and dynamic networks. This way, each variant only requires redefining some of the functions defined for the static SNP system variant using vectors and matrices without compression (i.e., sparse representation). In order to understand the algorithms, we present in this section the main new data structures and their behavior. The detailed pseudocodes are available in Section 4.

Algorithm 2. MAIN PROCEDURE: simulating one computation for spiking neural P systems.

As a convention, those vectors and matrices using subindex k are dynamic and can change during the simulation time, while those with subindex are constructed at the beginning and are invariant. Capital letters refer to vectors and matrices, and small letters are scalar numbers. A total order over the rules defined in the system is assumed, which is denoted as . For the sake of simplicity, we represent each rule , with , as a tuple , where i is the subindex of the set where belongs (i.e., the neuron where it is contained). Specifically, forgetting rules just have .

For static SNP systems using sparse representation, we use the following vectors and matrices:

- Preconditions vector is a vector storing the preconditions of the rules; that is, both the regular expression and the consumed spikes. Initially, , for each .

- Neuron-rule map vector is a vector that maps each neuron index to the indexes of its rules. Specifically, is the index of the first rule in the neuron. Given that rules have been ordered in R as mentioned above, rules belonging to the same neuron have contiguous indexes. Thus, it is enough to store just the first index. In this sense, the first rule in neuron i is and the last one is . In other words, contains elements, and it is initialized as follows: . Specifically, and , for .

- is the transition tuple, where . If the variant has a dynamic network, the transition matrix needs to be modified. Therefore, we start with . The following algorithms show how they are constructed.
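The neuron-rule map vector described in the list above behaves like the row-pointers array of CSR. The sketch below is one possible construction, assuming 0-based indexes, rules already sorted by neuron, and one extra trailing entry equal to the total number of rules so that the last rule of every neuron can be located. The names are hypothetical.

```python
def build_neuron_rule_map(rule_neuron, q):
    """Map each neuron to the index of its first rule.

    rule_neuron[j] is the neuron owning rule j (rules sorted by neuron).
    The result has q + 1 entries; the last one is the number of rules.
    """
    counts = [0] * q
    for neuron in rule_neuron:
        counts[neuron] += 1
    n_map = [0] * (q + 1)
    for i in range(q):
        n_map[i + 1] = n_map[i] + counts[i]   # prefix sum of rule counts
    return n_map

def rules_of(n_map, i):
    """Indexes of the rules that belong to neuron i."""
    return range(n_map[i], n_map[i + 1])

# Hypothetical system: neuron 0 has 2 rules, neuron 1 has 1, neuron 2 has 3.
n_map = build_neuron_rule_map([0, 0, 1, 2, 2, 2], q=3)

print(n_map)                       # [0, 2, 3, 6]
print(list(rules_of(n_map, 2)))    # [3, 4, 5]
```

A neuron without rules simply gets two equal consecutive entries, so its range of rule indexes is empty.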

Algorithm 2 can be easily transformed to Algorithm 1 by defining the INIT and COMPUTE_NEXT functions as in Algorithm 3. They work exactly as already specified in Section 2.2; that is, using the usual vector-matrix multiplication operation to calculate the next configuration vector. We will also detail how the selection of spiking vectors can be done. This is defined in Algorithm 4, and it is based on ideas already presented in [,]. First, the SPIKING_VECTORS function calculates the set of all possible spiking vectors by using a recursive function over neuron index i. It gathers all spiking vectors that can be generated for neurons and then, if neuron i contains applicable rules, it populates a spiking vector for each of these rules and for each of the spiking vectors generated from neurons . Finally, neuron i propagates these spiking vectors to the next neuron .
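The enumeration of spiking vectors can be sketched as follows. Note that this sketch replaces the recursive formulation with an iterative Cartesian product (`itertools.product`), which yields the same set: one applicable rule is chosen per neuron, and neurons without applicable rules contribute nothing. Rules are represented as hypothetical `(regex, c, p)` tuples, a forgetting rule being written with p = 0, and the neuron-rule map is assumed to carry a trailing entry with the number of rules.

```python
import re
from itertools import product

def applicable_rules(rules, n_map, config, i):
    """Indexes of the rules of neuron i enabled by its current spikes."""
    spikes = config[i]
    return [j for j in range(n_map[i], n_map[i + 1])
            if spikes >= rules[j][1]
            and re.fullmatch(rules[j][0], "a" * spikes)]

def spiking_vectors(rules, n_map, config):
    """All valid spiking vectors: at most one rule selected per neuron."""
    choices = []
    for i in range(len(config)):
        enabled = applicable_rules(rules, n_map, config, i)
        choices.append(enabled if enabled else [None])   # None: neuron stays idle
    vectors = []
    for combo in product(*choices):
        vec = [0] * len(rules)
        for j in combo:
            if j is not None:
                vec[j] = 1
        vectors.append(vec)
    return vectors

# Hypothetical system: neuron 0 owns rules 0 and 1, neuron 1 owns rule 2.
rules = [(r"a+", 1, 1), (r"aa", 2, 1), (r"a", 1, 1)]
n_map = [0, 2, 3]

print(spiking_vectors(rules, n_map, [2, 0]))   # [[1, 0, 0], [0, 1, 0]]
```

With two spikes, both rules of neuron 0 are enabled, so two spiking vectors are generated; a simulator following a single computation would pick one of them at random.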

Algorithm 3. Functions for static SNP systems with sparse matrix representation.

Algorithm 4. Spiking vectors selection with static SNP systems and sparse representation.

3.1. Approach with ELL Format

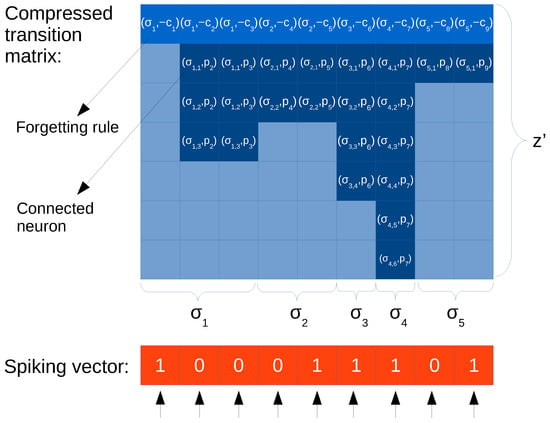

Our first approach to compress the representation of the transition matrix, , is to use the ELL format (see Figure 3 for an example). The reason for using ELL and not other compressed formats for sparse matrices (CSR, COO, BSR, …) is to enable extensions for dynamic networks, as seen later. ELL leaves some room for modifications without many memory reallocations, while CSR requires us to modify the whole matrix to add new elements.

Figure 3. Illustration of compressed representation based on the ELL format of a static spiking neural P (SNP) system. Light cells are empty values (0,0). The first column is a forgetting rule (there is no need to use p = 0). The rows below the spiking vector illustrate threads, showing the level of parallelism that can be achieved; i.e., each column and each position of the spiking vector can be processed in parallel.

The ELL format leads to the new compressed matrix . The following aspects have been taken into consideration:

- The ELL format represents the transpose of the original matrix, so now rows correspond to neurons and columns to rules. This is convenient for SIMD processors such as GPUs.

- The number of rows of equals the maximum amount of non-zero values in a row of , denoted by . It can be shown that , where z is the maximum output degree found in the neurons of the SNP system. Specifically, (see definition in Section 2.1). can be derived from the composition of the transition matrix, where row j, devoted to rule , contains the values for every neuron i (columns) connected through an output synapse with the neuron the rule belongs to (i.e., ), and a value for consuming the spikes in the neuron the rule belongs to (i.e., ).

- The values inside columns can be sorted, so that the consumption of spikes ( values) is placed at the first row. In this way, if implemented in parallel, all threads can start by doing the same task: consuming spikes.

- Every position of is a pair (as illustrated in Figure 3), where the first element is a neuron label, and the second is the number of spikes produced ().

A parallel code can be implemented with this design by assigning a thread to each rule, and so, one per position of the spiking vector and one per column of (rows of the original transition matrix). For the vector-matrix multiplication, it is enough to have a loop of steps at maximum down each column. In the loop of each column j, the corresponding value in the spiking vector (either 0 or 1) is multiplied by the value in the pair , and added to the position given by the neuron id in the configuration vector . In case the SNP network contains hubs (nodes with a high number of input synapses), we can opt for a parallel reduction per column. Since some threads might write to the same positions in the configuration vector at the same time, a solution would be to use atomic add operations, which are available on devices such as GPUs.
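A sequential Python sketch of this design is given below; the outer loop stands in for the threads, one per rule. It is only an approximation of the idea under stated assumptions: 0-based neuron ids, `None` as the padding value instead of the pair (0,0), rules given as hypothetical `(neuron, c, p)` tuples, and a matrix height of z + 1 (one consumption entry plus up to z production entries).

```python
def build_ell(rules, syn, q):
    """Build the ELL-style matrix: ell[r][j] is the r-th pair of rule j.

    Each pair is (neuron id, spikes added); the consumption pair comes first.
    """
    targets = {i: sorted(dst for (src, dst) in syn if src == i) for i in range(q)}
    z = max((len(t) for t in targets.values()), default=0)
    columns = []
    for neuron, c, p in rules:
        col = [(neuron, -c)]                      # first row: consume spikes
        if p > 0:                                 # forgetting rules produce nothing
            col += [(dst, p) for dst in targets[neuron]]
        col += [None] * (z + 1 - len(col))        # pad short columns
        columns.append(col)
    return [[col[r] for col in columns] for r in range(z + 1)]

def compute_next_ell(config, spiking, ell):
    """Next configuration; the loop over j plays the role of one thread per rule."""
    nxt = list(config)
    for j, fired in enumerate(spiking):
        for row in ell:
            entry = row[j]
            if entry is None:                     # padding: column finished
                break
            neuron, value = entry
            nxt[neuron] += fired * value          # would be an atomic add on a GPU
    return nxt

rules = [(0, 1, 1), (1, 2, 1), (2, 1, 0)]         # hypothetical rules
syn = {(0, 1), (0, 2), (1, 2)}
ell = build_ell(rules, syn, q=3)

print(ell[0])                                        # [(0, -1), (1, -2), (2, -1)]
print(compute_next_ell([1, 2, 1], [1, 1, 1], ell))   # [0, 1, 2]
```

Placing the consumption pair in the first row means every column has at least one entry, so all simulated threads perform the same first step, as noted in the list above.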

In order to use this representation in Algorithm 2, we only need to re-define functions INIT_RULE_MATRICES and COMPUTE_NEXT from Algorithm 3 (for sparse representation) as shown in Algorithm 5. The rest of the functions remain unchanged.

Algorithm 5. Functions for static SNP systems with ELL-based matrix representation.

3.2. Optimized Approach for Static Networks

If, in general, more than one rule is associated with each neuron, many of the iterations in the main loop of the COMPUTE_NEXT function are wasted. Indeed, if the loop is parallelized and each iteration is assigned to a thread, then many of them will be inactive (having a 0 in the spiking vector), causing performance drops such as branch divergence and non-coalesced memory access in GPUs. Moreover, note in Figure 3 that columns corresponding to rules belonging to the same neuron contain redundant information: the generation of spikes () is replicated for all synapses.

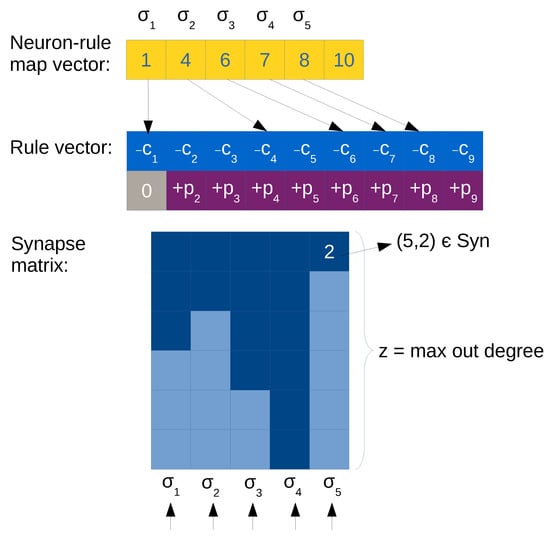

Therefore, a more efficient compressed matrix representation can be obtained by keeping the synapses separate from the rule information. This is called the optimized matrix representation, and it can be built with the following data structures:

- Rule vector, . By using a CSR-like format (see Figure 4 for an example), rules of the form (also forgetting rules are included, assuming ) can be represented by an array storing the values c and p in a pair. We can use the already defined neuron-rule map vector to relate the subset of rules associated to each neuron.

Figure 4. Illustration of optimized compressed matrix representation. Light cells in the synapse matrix are empty values (0), dark cells are positions with values greater than 0 (i.e., with neuron labels). The rows below illustrate threads, showing the level of parallelism that can be achieved (each column/neuron in parallel). The first column in the rule vector is a forgetting rule, where .

- Synapse matrix, . It is a transposed matrix as with ELL representation (to better fit to SIMD architectures such as GPU devices), but it has a column per neuron i and a row for every neuron j such that (there is a synapse). That is, every element of the matrix corresponds to a synapse (the neuron id) or a null value otherwise. Null values are employed for padding the columns, since the number of rows equals z (the maximum output degree in the neurons of the SNP system). See Figure 4 for an example.

- Spiking vector is modified, containing only q positions instead of m (i.e., one per neuron instead of one per rule), and states which rule is selected.

Note that we replace the transition matrix with a pair consisting of the rule vector and the synapse matrix: . In order to compute the next configuration, it is enough to loop over the neurons. Then, for each neuron i, we check which rule j is selected, according to the spiking vector at position . This is used to grab the pair from the rule vector, and therefore consume spikes in the neuron i and add spikes in the neurons at the column i of the synapse matrix. The loop over the column can end prematurely if the out degree of neuron i is not z (that is, when encountering a null value). This operation can be easily parallelized by assigning a thread to each column of the synapse matrix (requiring q threads, one per neuron).
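The computation just described can be sketched sequentially in Python, with the loop over neurons standing in for the q threads. The sketch assumes 0-based neuron ids, `None` as the null value in the synapse matrix and as the "no rule selected" mark in the spiking vector, and a rule vector of hypothetical `(c, p)` pairs.

```python
def build_synapse_matrix(syn, q):
    """Transposed synapse matrix: synapses[r][i] is the r-th target of neuron i."""
    targets = [sorted(dst for (src, dst) in syn if src == i) for i in range(q)]
    z = max((len(t) for t in targets), default=0)
    return [[t[r] if r < len(t) else None for t in targets] for r in range(z)]

def compute_next_optimized(config, spiking, rule_vector, synapses):
    """Next configuration; spiking[i] is the selected rule index of neuron i."""
    nxt = list(config)
    for i, j in enumerate(spiking):       # one thread per neuron on a GPU
        if j is None:                     # no rule selected in this neuron
            continue
        c, p = rule_vector[j]
        nxt[i] -= c                       # consume spikes
        if p == 0:                        # forgetting rule: nothing is sent
            continue
        for row in synapses:
            target = row[i]
            if target is None:            # out degree below z: stop early
                break
            nxt[target] += p              # would be an atomic add on a GPU
    return nxt

rule_vector = [(1, 1), (2, 1), (1, 0)]    # hypothetical (c, p) pairs
synapses = build_synapse_matrix({(0, 1), (0, 2), (1, 2)}, q=3)

print(synapses)                                                          # [[1, 2, None], [2, None, None]]
print(compute_next_optimized([1, 2, 1], [0, 1, 2], rule_vector, synapses))  # [0, 1, 2]
```

Compared with the ELL-based design, the synapse targets of a neuron are stored once rather than once per rule, and no simulated thread is spent on rules that were not selected.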

In order to use this optimized representation in Algorithm 2, we need to re-define the spiking selection function, since this vector works differently. To do this, it is enough to modify two lines in the definition of the COMBINATIONS function in Algorithm 4, in order to keep the spiking vector with size q and to store the rule id instead of just 1 or 0 (see Section 4 for more detail). Moreover, we need to define tailored INIT_RULE_MATRICES and COMPUTE_NEXT functions as shown in Algorithm 6, replacing those from Algorithm 3.

Algorithm 6. Functions for static SNP systems with optimized compressed matrix representation.

3.3. Optimized Approach for Dynamic Networks

The optimized compressed matrix representation discussed in Section 3.2 can be further extended to support rules that modify the network, such as budding, division, or plasticity.

3.3.1. Budding and Division Rules

We start by analyzing how to simulate dynamic SNP systems with budding and division rules. They are supported at the same time in order to unify the pseudocode, and also because both kinds of rules usually appear together in the model.

First of all, the synapse matrix has to be flexible enough to host new neurons. This can be accomplished by allocating a matrix large enough to accommodate new neurons (possibly filling all the available memory). We denote as the maximum amount of neurons that the simulator is able to support, and the amount of neurons in a given step k. The formula to calculate is in Section 5. It is important to point out that the simulator needs to differentiate between neuron label and neuron id []. The reason for this separation is that we can have more than one neuron (with different ids) with the same label (and hence, the same rules).

In order to achieve this separation, it is enough to have a vector to map each neuron id to its label. We will call this new vector, neuron-id map vector , and the following holds at step k: . That is, neuron-id map vector, configuration vector and spiking vector have a size of as well. Once the label of a neuron is obtained, the information of its corresponding rules can be accessed as usual, like the neuron-rule map vector . For simplicity, we attach the neuron-id map vector to the transition tuple. Moreover, the synapse matrix becomes dynamic, thus using k sub-index: ; hence, the transition matrix is also dynamic. Let us now introduce this new notation for transition tuple and transition matrix:

- The transition matrix is now a dynamic pair: .

- The transition tuple is extended as follows: .
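The separation between neuron id and neuron label can be illustrated with a short Python sketch. It assumes, for the sake of the example, that the neuron-rule map vector is stored CSR-style (entry `l` holds the index of the first rule of label `l`, with one extra closing entry); the function name `rules_of_neuron` is hypothetical.

```python
def rules_of_neuron(neuron_id, id_map, neuron_rule_map):
    """Return the range of rule indexes available to a neuron.

    id_map          -- neuron-id map vector: neuron id -> label
    neuron_rule_map -- assumed CSR-style offsets: rules of label l are
                       neuron_rule_map[l] .. neuron_rule_map[l + 1] - 1
    """
    label = id_map[neuron_id]      # first hop: id -> label
    return range(neuron_rule_map[label], neuron_rule_map[label + 1])
```

Two neurons with different ids but the same label resolve to the same rule range, which is the property the neuron-id map vector is meant to provide.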

We use the following encoding for each type of rule. Spiking and forgetting rules remain unchanged:

- For a budding rule as . Given that all pairs in the rule vector are of the form , and c is always greater than or equal to 1, we can encode a budding rule as a pair .

- For a division rule as . Given that all pairs in the rule vector are of the form , and c is always greater than or equal to 1, we can encode a division rule as a pair .

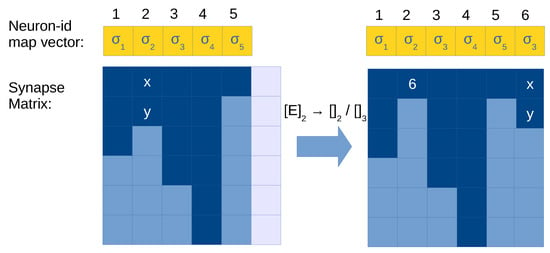

The execution of a budding rule requires the following operations (see Figure 5 for an illustration):

- Let be the neuron id executing this rule.

- Allocate a column to the synapse matrix for the new neuron, and use this index as its neuron id.

- Add an entry to the neuron-id map vector at position with the label l.

- Copy column to the new column in .

- Delete the content of column and add only one element at the first row with the id .

Figure 5.

Illustration of application of a budding rule in the synapse matrix with compressed representation. Light blue cells in the synapse matrix are empty values (0), dark cells are positions with values greater than 0 (i.e., with neuron id), and light cells are empty columns allocated in memory (a total of ). Neuron 1 is applying budding, and its content is copied to an empty column (5) and replaced by a single synapse to the created neuron.
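The budding operations listed above can be sketched in Python as follows. The function name `apply_budding`, the sentinel `NULL = -1`, and the choice of taking the next unused column as the new neuron id are assumptions of this example, not part of the original pseudocode.

```python
NULL = -1  # assumed sentinel for an empty cell


def apply_budding(synapses, id_map, q_k, i, new_label):
    """Apply a budding rule in neuron i and return the new neuron count.

    synapses  -- z x q_max matrix (list of rows); column = out-synapses
    id_map    -- neuron-id map vector of size q_max (neuron id -> label)
    q_k       -- number of neurons in use at the current step
    new_label -- label of the neuron created by the rule
    """
    if q_k >= len(id_map):
        raise MemoryError("no free column left for a new neuron")
    new_id = q_k                   # next free column becomes the new id
    id_map[new_id] = new_label
    for row in synapses:
        row[new_id] = row[i]       # child inherits the parent's out-synapses
        row[i] = NULL              # clear the parent's column
    synapses[0][i] = new_id        # parent keeps a single synapse to the child
    return q_k + 1
```

Only two columns are touched, so this operation is local to the neuron applying the rule.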

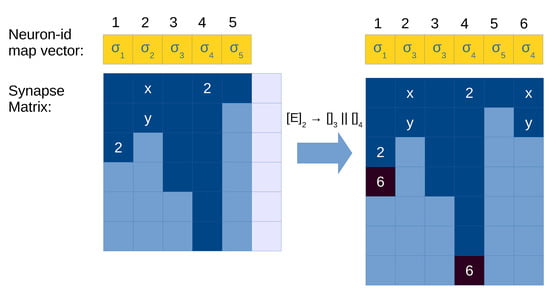

For a division rule , the following operations have to be performed (see Figure 6 for an example):

- Let be the neuron id executing this rule.

- Allocate a new column for the created neuron l in the synapse matrix .

- Modify the neuron-id map vector as follows: replace the value at position for label j, and add a new entry for to associate it with label k.

- Copy column to in (the generated neuron gets the out synapses of the parent).

- Find all occurrences of in the synapse matrix, and add to the columns where it is found.

Figure 6.

Illustration of application of a division rule in the synapse matrix with compressed representation. Light blue cells in the synapse matrix are empty values (0), dark cells are positions with values greater than 0 (i.e., with neuron id), and light cells are empty columns allocated in memory (a total of ). Neuron 1 is being divided, and its content is copied to an empty column (5). Columns 0 and 3 represent neurons with a synapse to the neuron being divided (1), so we need to update them as well with the synapse to the created neuron (5). Neuron 3 has reached its limit of maximum out degree, therefore we need to expand the matrix with a new row, or use a COO-like system to store these exceeded elements.

The last operation can be very expensive if the amount of neurons is large, since it requires looping over the whole synapse matrix. Moreover, when adding in all the columns containing , the predetermined size z may be exceeded. For this situation, a special array of overflows is needed, like the ELL + COO format for SpMV []. For simplicity, we will assume that this situation is rare, and the algorithm will allocate a new row for the synapse matrix.
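A Python sketch of the division operations, including the row expansion used when a column is already full, is given next. The names `apply_division`, `label_j`, `label_k` and the sentinel `NULL = -1` are assumptions of this example; the sketch expands the matrix by one row instead of using an ELL + COO overflow array.

```python
NULL = -1  # assumed sentinel for an empty cell


def apply_division(synapses, id_map, q_k, i, label_j, label_k):
    """Divide neuron i and return the new neuron count.

    Neuron i is relabelled label_j; a new neuron labelled label_k is
    created in the next free column. synapses is a list of rows and may
    grow by one row when a full column needs an extra synapse.
    """
    if q_k >= len(id_map):
        raise MemoryError("no free column left for a new neuron")
    new_id = q_k
    id_map[i] = label_j
    id_map[new_id] = label_k
    for row in synapses:
        row[new_id] = row[i]       # new neuron gets the parent's out-synapses
    # Expensive part: scan every used column for a synapse to the parent.
    for col in range(q_k):
        column = [row[col] for row in synapses]
        if i not in column:
            continue
        if NULL in column:
            free = column.index(NULL)
        else:                      # column is full: expand by one row
            synapses.append([NULL] * len(id_map))
            free = len(synapses) - 1
        synapses[free][col] = new_id
    return q_k + 1
```

The scan over all used columns is what makes division costly compared with budding, as discussed above.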

Some functions in the pseudocode are re-defined to support dynamic networks with division and budding:

- INIT functions as in Algorithm 7. They now take into account the initialization of structures at its maximum amount , including the new neuron-id map vector.

- SPIKING_VECTORS function, as defined in Algorithm 4 and modified in Section 3.2 for optimized matrix representation, is slightly modified (just two lines) to support the neuron-id map vector.

- APPLICABLE function as in Algorithm 8. This function, when dealing with division rules, has to check whether there are existing synapses for the neurons involved. If they exist, the division rule does not apply.

- COMPUTE_NEXT function as in Algorithm 9, to include the operations described above. It now needs to expand the synapse matrix either by columns (when new neurons are created) or by rows, if a synapse to the new neuron has to be created from a neuron that already has the maximum out degree z. In this case, we need to re-allocate the synapse matrix in order to extend it by one row (this is written in the pseudocode with the function EXPAND_MATRIX). Finally, let us remark that we can easily detect the type of a rule from its associated value: if it is 0, it is a budding rule; if it is a positive number, a spiking rule; otherwise (negative value), it is a division rule.

Algorithm 7.

Initialization functions for dynamic SNP systems with budding and division rules over optimized compressed matrix representation.

Algorithm 8.

Applicable functions for dynamic SNP systems with budding rules over optimized compressed matrix representation.

Algorithm 9.

Compute next function for dynamic SNP systems with budding and division rules using optimized compressed matrix representation.

3.3.2. Plasticity Rules

For dynamic SNP systems with plasticity rules, the synapse matrix can be allocated in advance to the exact size q, since no new neurons are created. Thus, there is no need to use a neuron-id map vector as before. However, enough rows (value z) in the synapse matrix have to be pre-established to support the maximum amount of synapses. Fortunately, this can be pre-computed by looking at the initial out degrees of the neurons and the size of the neuron sets in the plasticity rules adding synapses. We encode a plasticity rule , with as follows: . Next, we define the value of for SNP systems with plasticity rules: , where . In other words, is the maximum out degree (z) that a neuron can have initially plus those new connections that can be created with plasticity rules inside that neuron. This result can be refined for plasticity rules having , because we know up to k new synapses can be created at a time. However, for simplicity, we will use the formula above.

First, we need to represent plasticity rules as vectors. We assume that is the total amount of plasticity rules in the system, and that there is a total order between these rules. Given a plasticity rule, we can initialize the neuron-map and the precondition vector as with spiking rules. But in this case, we need a couple of new vectors and modify existing ones in order to represent all plasticity rules , with (following the imposed total order):

- Rule vector stores the following pair for a plasticity rule : , that is, the consumed spikes and the unique index of the plasticity rule j. This index is used to access the following vector, and it is stored as a negative value in order to detect that this is a plasticity rule.

- Plasticity rule vector, of size , contains a tuple for each plasticity rule of the form . The values and are used as indexes, from (start) to (end) for the following vector.

- Plasticity neuron vector, of size , represents all neuron sets of plasticity rules. Thus, the elements of are stored, in an ordered way, between to .

- Time vector is used to prevent neurons from applying rules during one step if the plasticity rule applied was of the type . It contains binary (0 or 1) values.

- Transition matrix is therefore . Note that the Synapse matrix can be modified at each step, so we use sub-index k.
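The relation between the plasticity rule vector and the plasticity neuron vector can be sketched in Python as a flat packing with start and end offsets. The tuple layout `(alpha, k, start, end)`, the use of an exclusive end index, and the function name `pack_plasticity_rules` are assumptions made for this example.

```python
def pack_plasticity_rules(rules):
    """Flatten plasticity rules into two vectors.

    rules -- list of (alpha, k, neuron_set) following the total order;
             alpha is the rule type and k the number of synapses.
    Returns (rule_vec, neuron_vec), where rule_vec[j] is the tuple
    (alpha, k, start, end) and neuron_vec[start:end] is the sorted
    neuron set of rule j.
    """
    rule_vec, neuron_vec = [], []
    for alpha, k, neuron_set in rules:
        start = len(neuron_vec)
        neuron_vec.extend(sorted(neuron_set))  # keep each chunk ordered
        end = len(neuron_vec)                  # exclusive end offset
        rule_vec.append((alpha, k, start, end))
    return rule_vec, neuron_vec
```

Storing each neuron set as a sorted chunk is what later allows the set operations against a sorted synapse column to run as a single merge pass.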

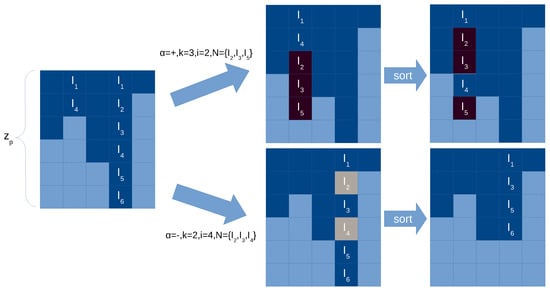

The following operations have to be performed to reproduce the behavior of plasticity rules (see Figure 7 for an illustration):

- For each column in the synapse matrix executing a plasticity rule deleting x synapses:

  - (a) If the intersection of the rule’s neuron set and the current synapses in is larger than x, then randomly select x synapses.

  - (b) Loop through the rows (up to iterations) to search the selected neurons and set them to null. Given that holes might appear in the column, its values can be sorted (or compacted).

- For each column in the synapse matrix executing a plasticity rule adding x synapses:

  - (a) If the difference of the rule’s neuron set and the current synapses in is larger than x, then randomly select x neurons.

  - (b) Loop through the rows (up to iterations) to insert the selected new synapses while keeping the order.

Figure 7.

Illustration of application of a plasticity rule in the synapse matrix with compressed representation. Light blue cells in the synapse matrix are empty values (0), dark cells are positions with values greater than 0 (i.e., with neuron label). Two examples are given: adding new synapses (top) and deleting synapses (bottom). We sort the synapses per column for more efficiency.
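The column updates illustrated in Figure 7 can be sketched in Python as follows. The helper names (`read_column`, `write_column`, `delete_synapses`, `add_synapses`) and the sentinel `NULL = -1` are assumptions of this example; the random selection of the x synapses is assumed to have been done beforehand.

```python
import bisect

NULL = -1  # assumed sentinel for an empty cell


def read_column(synapses, i):
    """Return the synapses of neuron i, stopping at the first NULL."""
    col = []
    for row in synapses:
        if row[i] == NULL:
            break
        col.append(row[i])
    return col


def write_column(synapses, i, values):
    """Store values in column i, padding the remaining rows with NULL."""
    for r, row in enumerate(synapses):
        row[i] = values[r] if r < len(values) else NULL


def delete_synapses(synapses, i, targets):
    """Remove the selected targets from column i and compact the column."""
    kept = [t for t in read_column(synapses, i) if t not in targets]
    write_column(synapses, i, kept)


def add_synapses(synapses, i, targets):
    """Insert the selected targets into column i, keeping it sorted."""
    col = read_column(synapses, i)
    for t in targets:
        bisect.insort(col, t)
    if len(col) > len(synapses):
        raise ValueError("column exceeds the pre-computed number of rows z")
    write_column(synapses, i, col)
```

Compacting after a deletion keeps the "stop at the first null" convention valid for later reads of the same column.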

Checking the applicability of plasticity rules is much simpler than for division rules, given that the preconditions only affect the local neuron and we do not need to know if there are existing synapses. However, for a plasticity rule r in a neuron i, and in order to create new or delete existing synapses, we need to check which neurons declared in r are already in column i of the synapse matrix. This search can be , being the length of the neuron set in r. Nevertheless, by always keeping the column sorted, this search can be done easily in .

Given that it is not usual to have budding and division rules together with plasticity rules, the pseudocode is based on the optimized matrix representation for static SNP systems (and not for division and budding) in Section 3.2. Algorithm 10 shows the re-definition of INIT_RULE_MATRICES and COMPUTE_NEXT functions, replacing those from Algorithm 3. For COMPUTE_NEXT, the implementation is very similar to the original one, but it calls a new function, PLASTICITY, which actually modifies the synapses of the neuron (by just modifying its corresponding column in the synapse matrix). This function and its auxiliaries are defined in Algorithms 11 and 12, respectively.
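When both the synapse column and the neuron set of the rule are sorted, the check can be done with a single merge-style pass. The Python sketch below shows one way to do it; the function names `sorted_intersection` and `sorted_difference` are hypothetical, and each function visits every element of both inputs at most once.

```python
def sorted_intersection(a, b):
    """Elements present in both sorted lists a and b."""
    out, i, j = [], 0, 0
    while i < len(a) and j < len(b):
        if a[i] == b[j]:
            out.append(a[i])
            i += 1
            j += 1
        elif a[i] < b[j]:
            i += 1
        else:
            j += 1
    return out


def sorted_difference(a, b):
    """Elements of sorted list a that do not appear in sorted list b."""
    out, j = [], 0
    for x in a:
        while j < len(b) and b[j] < x:  # advance b up to the first value >= x
            j += 1
        if j == len(b) or b[j] != x:
            out.append(x)
    return out
```

For a deleting rule, the intersection gives the synapses that can be removed; for an adding rule, the difference gives the neurons that can still be connected.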

Algorithm 10.

Functions for dynamic SNP systems with plasticity rules using optimized compressed matrix representation.

4. Algorithms

In this section we define the algorithms implementing the methods described in Section 3.

Let us first define a generic function to create a new, empty (all values set to 0) vector of size s as follows: EMPTY_VECTOR(s). In order to create an empty matrix with f rows and c columns, we will use the following function: EMPTY_MATRIX(f, c). Next, the pseudocode for simulating static SNP systems with sparse representation is given. Algorithm 3 shows the INIT and COMPUTE_NEXT functions, while Algorithm 4 shows the selection of spiking vectors.

For ELL-based matrix representation for static SNP systems, we need to re-define only two functions (INIT_RULE_MATRICES and COMPUTE_NEXT) from Algorithm 3 (static SNP systems with sparse representation) as shown in Algorithm 5.

For our optimized matrix representation for static SNP systems, we need to re-define only two functions (INIT_RULE_MATRICES and COMPUTE_NEXT) from Algorithm 3 (static SNP systems with sparse representation) as shown in Algorithm 6. Moreover, the following two changes to the definition of the COMBINATIONS function in Algorithm 4 are required, in order to support a spiking vector of size q:

- Line 13 at Algorithm 4: EMPTY_VECTOR(q)

- Line 22 at Algorithm 4:

For dynamic SNP systems with budding and division rules, the following functions are redefined: INIT functions as in Algorithm 7, APPLICABLE function as in Algorithm 8, and COMPUTE_NEXT function as in Algorithm 9. The SPIKING_VECTORS function, as defined in Algorithm 4 and modified in Section 3.2 for optimized matrix representation, is slightly modified (just two lines) to support the neuron-id map vector as follows:

- Line 6 at Algorithm 4:

- Line 18 at Algorithm 4: for

For dynamic SNP systems with plasticity rules, the pseudocode is based on the optimized matrix representation for static SNP systems (and not for division and budding) in Section 3.2. Algorithm 10 shows the re-definition of INIT_RULE_MATRICES and COMPUTE_NEXT functions, replacing those from Algorithm 3. As for line 17, we assume that the function SORT exists, which takes a set of neurons, sorts them by id, and generates a vector. Moreover, we can copy vectors directly from one position with just one assignment. The new PLASTICITY function is defined in Algorithm 11, and its auxiliaries are defined in Algorithm 12.

Algorithm 11.

Function for plasticity mechanism using optimized compressed matrix representation.

Algorithm 12.

Auxiliary functions for plasticity mechanism using optimized compressed matrix representation.

In order to keep Algorithm 12 simple, we assume that the functions INTERSEC, DIFF, and DELETE_RANDOM are already defined. As mentioned above, INTERSEC and DIFF can be implemented with algorithms of complexity , given that the vectors (a column of the synapse matrix and a chunk of the plasticity neuron vector) are already sorted. We also assume that DELETE_RANDOM is a function that randomly selects k elements from a total of n while keeping the order between elements. This can be done with an algorithm of complexity .
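One possible way to select k elements at random while keeping their relative order is sketched below in Python. The function name `select_random_ordered` and the optional `rng` parameter are assumptions of this example, and the sketch is not claimed to match the complexity stated for DELETE_RANDOM.

```python
import random


def select_random_ordered(items, k, rng=random):
    """Pick k elements of items at random, preserving their order.

    items -- sorted list of candidate neurons
    k     -- number of elements to keep
    rng   -- source of randomness (the random module or a random.Random)
    """
    if k >= len(items):
        return list(items)         # nothing to discard
    positions = sorted(rng.sample(range(len(items)), k))
    return [items[p] for p in positions]
```

Sampling positions rather than values and sorting them afterwards guarantees that the result stays ordered whenever the input is ordered.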

5. Results

In this section we conduct a complexity analysis (for both time and memory) of the algorithms. In order to define the formulas, we need to introduce a set of descriptors for a spiking neural P system . These are described in Table 1. Moreover, Table 2 summarizes the vectors and matrices employed by each representation, and their corresponding sizes defined according to the descriptors. We use the following short names for the representations: Sparse (original sparse representation as in Section 3), ELL (ELL compressed representation as in Section 3.1), optimized static (optimized static compressed representation as in Section 3.2), division and budding (optimized dynamic compressed representation for division and budding as in Section 3.3.1), and plasticity (optimized dynamic compressed representation for plasticity as in Section 3.3.2).

Table 1.

Descriptors of an SNP system.

Table 2.

Size of matrices employed in the representations for SNP systems. Those whose names are in bold were used for the total calculation, which assumes just one spiking vector.

According to Table 2, we can limit the value of for dynamic SNP systems with division and budding with the following formula: , where is the maximum amount of memory in the system (measured in the word size employed to encode the elements of all the matrices and vectors; e.g., 4 Bytes). Moreover, we can infer when the matrix representation will be smaller for static SNP systems: ELL is better than sparse when ; optimized is better than ELL when ; optimized is better than sparse when . In other words, our optimized compressed representation is worthwhile when the maximum out degree of the neurons is less than the total number of rules minus 2.

For dynamic SNP systems, we can say that a solution to a problem using an SNP system with plasticity rules is better than a solution based on division and budding if ; in other words, if we can know the maximum amount of neurons to generate, and this number is greater than a formula based on the number of initial neurons, the number of rules, the number of elements in the neuron set, and the max out degree, then the solution will need less memory using plasticity.

Finally, Table 3 shows the order of complexity of each function as defined for each representation. We can see that the complexity of COMPUTE_NEXT is also reduced when using the optimized static representation compared with ELL and sparse, given that we expect that , and also . However, we can see that implementing division and budding greatly increases the complexity of the algorithms, since they need to loop over all the neurons checking for in-synapses. This also depends on the total amount of generated neurons in a given step. This is also the case for the generation of spiking vectors, because the applicability function also needs to loop over all existing neurons. However, for dynamic networks, plasticity keeps the complexity as a function of the amount of neurons, the value z, and the descriptors of plasticity rules (max value of k and amount of neurons in a neuron set ).

Table 3.

Algorithmic order of complexity of the main functions employed in the simulation loop (i.e., excluding init functions) for each representation.

Therefore, we can see that using our compressed representations, both the memory footprint of the simulators and their complexity are reduced, as long as the maximum out degree of neurons is a low number. Furthermore, we can see that for dynamic networks, plasticity is an option that keeps the complexity balanced, since we know in advance the amount of neurons and synapses.

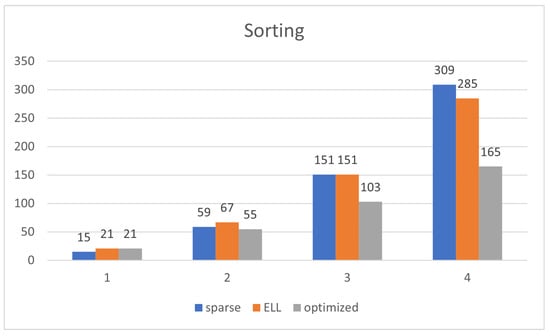

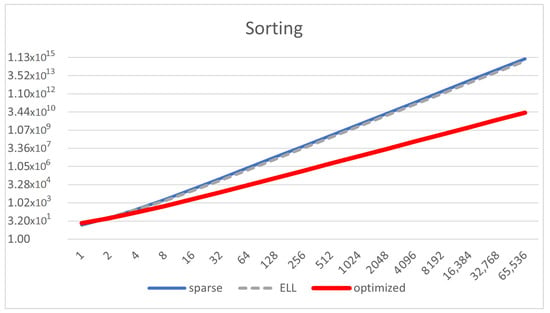

Let us make a simple comparison using an example from the literature. If we take the SNP system for sorting natural numbers as defined in [], then we have that , and , where n is the amount of natural numbers to sort. Thus:

- The size of the sparse representation is and the complexity of COMPUTE_NEXT is .

- The size of the ELL representation is and the complexity of COMPUTE_NEXT is .

- The size of the optimized representation is and the complexity of COMPUTE_NEXT is .

The optimized representation drastically decreases the order of complexity and amount of memory spent for the algorithms, going from orders of to . ELL has a similar order of complexity to that of sparse, and the amount of memory is only slightly decreased. Figure 8 shows that the reduction of the memory footprint achieved with the compressed representations takes effect after . Figure 9 shows that the optimized representation scales better than ELL and sparse. ELL is only a bit better than the sparse representation, demonstrating the need for the optimized one, which scales much better.

Figure 8.

Memory size of the matrix representation (Y-axis) depending on the amount of natural numbers (n, X-axis) for the model of natural number sorting in [], using sparse, ELL and optimized representation, for only .

Figure 9.

Memory size of the matrix representation (Y-axis in log scale) depending on the amount of natural numbers (n, X-axis in log scale) for the model of natural number sorting, using sparse, ELL, and optimized representation, for .

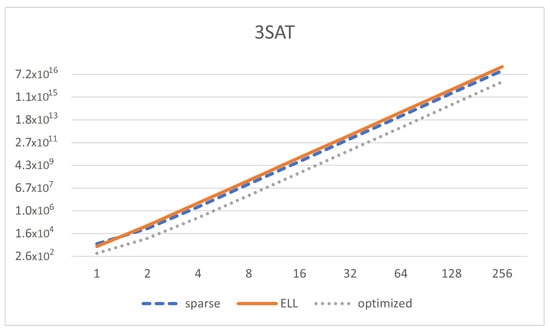

Finally, we also analyze a uniform solution to 3SAT with SNP systems without delays as in [] (Figure 10). We can see that , and , where n is the amount of variables in the 3SAT instance. We can see that , so our optimized implementation will be able to save some memory. Therefore:

- The size of the sparse representation is and the complexity of COMPUTE_NEXT is .

- The size of the ELL representation is and the complexity of COMPUTE_NEXT is .

- The size of the optimized representation is and the complexity of COMPUTE_NEXT is .

Figure 10.

Memory size of the matrix representation (Y-axis in log scale) depending on the number of variables in the SAT formula (, X-axis in log scale) for the model of 3SAT, using sparse, ELL, and optimized representation.

We can see that the memory footprint is decreased but it is still of the same order of magnitude (), and the same happens with the computing complexity. Thus, our representation helps to reduce memory, although not significantly for this specific solution. This is mainly due to having a high value of z. We can see in Figure 10 how the reduction of memory takes place only for the optimized representation as n increases. It is interesting to see that the ELL representation is even worse than just using the sparse representation.

Finally, let us analyze the size of the uniform solution to subset sum with plasticity rules in []. The descriptors for the matrix representation of a dynamic SNP system with plasticity rules are the following: , , , , where n is the number of sets V. Therefore, the memory footprint is described as: . If we were using a sparse representation where the transition matrix is of order , then the amount of memory would be of order .

6. Conclusions

In this paper, we addressed the problem of having very sparse matrices in the matrix representation of SNP systems. Usually, the graph defined for an SNP system is not fully connected, leading to sparse matrices. This drastically degrades the performance of the simulators. However, sparse matrices are a known issue in other disciplines, and efficient representations have been introduced in the literature. There are even solutions tailored for parallel architectures such as GPUs.

We propose two efficient compressed representations for SNP systems, one based on the classic format ELL, and an optimized one based on a combination of CSR and ELL. This representation gives room to support rules for dynamic networks: division, budding, and plasticity. The representation for plasticity poses more advantages than the one for division and budding, since the synapse matrix size can be pre-computed. Thus, no label mapping nor empty columns to host new neurons are required. Moreover, simulating the creation of new neurons in parallel can damage the performance of the simulator significantly, because this operation can be sequential. Plasticity rules do not create new neurons, so this is avoided.

As future work, we plan to provide implementations of these designs within the cuSNP [] and P-Lingua [] frameworks to provide high performance simulations with real examples from the literature. We believe that these concepts will help to bring efficient tools to simulate SNP systems on GPUs, enabling the simulation of large networks in parallel. Specifically, we will use these designs to develop a new framework for automatically designing SNP systems using genetic algorithms []. Visual tools for SNP systems [] could also benefit from this new type of representation. Moreover, our optimized designs will enable the effective usage of spiking neural P systems in industrial processes such as [,,,], and in optimization applications such as [,]. SNP systems have been used in many applications [], and in order to be used in industrial applications we need efficient simulators where compressed representations of sparse matrices can help.

Numerical SNP systems (or NSNP systems) [,] are SNP system variants which are largely dissimilar to many variants of SNP systems, especially to the variants considered in this paper, for at least two main reasons: (1) rules in NSNP systems do not use regular expressions, and instead use linear functions, so that rules are applied when certain values or threshold of the variables in such functions are satisfied, and (2) the variables in the functions are real-valued, unlike the natural numbers associated with strings and regular expressions. One of the main goals in [] for introducing NSNP systems is to create an SNP system variant, which in a future work may be more feasible for use with training algorithms in traditional neural networks []. For these reasons, we plan to extend our algorithms and compressed data structures for NSNP systems. We think that simulators for this variant can be effectively accelerated on GPUs. Specifically, GPUs are devices designed for floating point operations and not for integer arithmetic, although the latter is supported.

We also plan to include more models and ingredients into these new methods, such as delays, weights, dendrites, rules on synapses, and scheduled synapses, among others. Moreover, a recent work on SNP systems with plasticity shows that having the same set of rules in all neurons leads to Turing complete algorithms []. This means that the m descriptor can be common to all neurons, leading to smaller representations for this kind of system. We plan to study this in more depth and combine it with our representations. Our focus on plasticity is also related to other results involving this ingredient in other fields such as machine learning [].

Author Contributions

Conceptualization, M.Á.M.-d.-A., and F.G.C.C.; methodology, M.Á.M.-d.-A. and D.O.-M.; validation, M.Á.M.-d.-A., F.G.C.C. and D.O.-M.; formal analysis, D.O.-M., I.P.-H. and H.N.A.; investigation, M.Á.M.-d.-A. and F.G.C.C.; resources, D.O.-M., F.G.C.C. and I.P.-H.; writing—original draft preparation, M.Á.M.-d.-A., D.O.-M., I.P.-H., F.G.C.C., H.N.A.; writing—review and editing, M.Á.M.-d.-A., D.O.-M., I.P.-H., F.G.C.C., H.N.A.; supervision, I.P.-H. and H.N.A.; All authors have read and agreed to the published version of the manuscript.

Funding

Funded by: FEDER/Ministerio de Ciencia e Innovación - Agencia Estatal de Investigación/Project (TIN2017-89842-P). F.G.C. Cabarle is supported in part by the ERDT program of the DOST-SEI, Philippines, and the Dean Ruben A. Garcia PCA from UP Diliman. H.N. Adorna is supported by Semirara Mining Corp Professorial Chair for Computer Science, RLC grant from UPD OVCRD, and ERDT-DOST research grant.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Păun, G. Computing with membranes. J. Comput. Syst. Sci. TUCS Rep. No 208 2000, 61, 108–143.

- Song, B.; Li, K.; Orellana-Martín, D.; Pérez-Jiménez, M.J.; Pérez-Hurtado, I. A Survey of Nature-Inspired Computing: Membrane Computing. ACM Comput. Surv. 2021, 54.

- Arteta Albert, A.; Díaz-Flores, E.; López, L.F.D.M.; Gómez Blas, N. An In Vivo Proposal of Cell Computing Inspired by Membrane Computing. Processes 2021, 9, 511.

- Ionescu, M.; Păun, G.; Yokomori, T. Spiking Neural P Systems. Fundam. Inform. 2006, 71, 279–308.

- Fan, S.; Paul, P.; Wu, T.; Rong, H.; Zhang, G. On Applications of Spiking Neural P Systems. Appl. Sci. 2020, 10, 7011.

- Păun, G.; Pérez-Jiménez, M.J. Spiking Neural P Systems. Recent Results, Research Topics. Algorithmic Bioprocess. 2009, 273–291.

- Rong, H.; Wu, T.; Pan, L.; Zhang, G. Spiking neural P systems: Theoretical results and applications. In Enjoying Natural Computing; Springer: Berlin/Heidelberg, Germany, 2018; pp. 256–268.

- Ibarra, O.; Leporati, A.; Păun, A.; Woodworth, S. Spiking Neural P Systems. In The Oxford Handbook of Membrane Computing; Păun, G., Rozenberg, G., Salomaa, A., Eds.; Oxford University Press: Oxford, UK, 2010; pp. 337–362.

- Pan, L.; Wu, T.; Zhang, Z. A Bibliography of Spiking Neural P Systems; Technical Report; Bulletin of the International Membrane Computing Society: Sevilla, Spain, 2016.

- Wang, J.; Hoogeboom, H.J.; Pan, L.; Păun, G.; Pérez-Jiménez, M.J. Spiking Neural P Systems with Weights. Neural Comput. 2010, 22, 2615–2646.

- Pan, L.; Wang, J.; Hoogeboom, H.J. Spiking Neural P Systems with Astrocytes. Neural Comput. 2012, 24, 805–825.

- Song, X.; Wang, J.; Peng, H.; Ning, G.; Sun, Z.; Wang, T.; Yang, F. Spiking neural P systems with multiple channels and anti-spikes. Biosystems 2018, 169–170, 13–19.

- Peng, H.; Bao, T.; Luo, X.; Wang, J.; Song, X.; Nez, A.R.N.; Pérez-Jiménez, M.J. Dendrite P systems. Neural Netw. 2020, 127, 110–120.

- Song, T.; Pan, L.; Păun, G. Spiking neural P systems with rules on synapses. Theor. Comput. Sci. 2014, 529, 82–95.

- Cabarle, F.G.C.; Adorna, H.N.; Jiang, M.; Zeng, X. Spiking neural P systems with scheduled synapses. IEEE Trans. Nanobiosci. 2017, 16, 792–801.

- Lazo, P.P.L.; Cabarle, F.G.C.; Adorna, H.N.; Yap, J.M.C. A return to stochasticity and probability in spiking neural P systems. J. Membr. Comput. 2021, 1–13.

- Wu, T.; Pan, L.; Yu, Q.; Tan, K.C. Numerical Spiking Neural P Systems. IEEE Trans. Neural Netw. Learn. Syst. 2020.

- Macías-Ramos, L.F.; Pérez-Hurtado, I.; García-Quismondo, M.; Valencia-Cabrera, L.; Pérez-Jiménez, M.J.; Riscos-Núñez, A. A P-Lingua Based Simulator for Spiking Neural p Systems. In Proceedings 12th International Conference on Membrane Computing; Springer: Berlin/Heidelberg, Germany, 2011; Volume 7184, pp. 257–281.

- Zeng, X.; Adorna, H.; Martínez-del-Amor, M.A.; Pan, L.; Pérez-Jiménez, M.J. Matrix Representation of Spiking Neural P Systems. In Proceedings of the 11th International Conference on Membrane Computing, Jena, Germany, 24–27 August 2010; Volume 6501, pp. 377–391.

- Fatahalian, K.; Sugerman, J.; Hanrahan, P. Understanding the Efficiency of GPU Algorithms for Matrix-Matrix Multiplication. In Proceedings ACM SIGGRAPH/EUROGRAPHICS Conference on Graphics Hardware; Association for Computing Machinery: New York, NY, USA, 2004; pp. 133–137.

- Carandang, J.; Villaflores, J.; Cabarle, F.; Adorna, H.; Martínez-del-Amor, M. CuSNP: Spiking Neural P Systems Simulators in CUDA. Rom. J. Inf. Sci. Technol. 2017, 20, 57–70.

- Carandang, J.; Cabarle, F.; Adorna, H.; Hernandez, N.; Martínez-del-Amor, M.A. Nondeterminism in Spiking Neural P Systems: Algorithms and Simulations. In Proceedings of the 6th Asian Conference on Membrane Computing, Chengdu, China, 21–25 September 2017.

- Cabarle, F.G.C.; Adorna, H.N.; Martínez-del Amor, M.Á.; Pérez Jiménez, M.d.J. Improving GPU simulations of spiking neural P systems. Rom. J. Inf. Sci. Technol. 2012, 15, 5–20.

- Carandang, J.P.; Cabarle, F.G.C.; Adorna, H.N.; Hernandez, N.H.S.; Martínez-del-Amor, M.Á. Handling Non-determinism in Spiking Neural P Systems: Algorithms and Simulations. Fundam. Inform. 2019, 164, 139–155.

- Ochirbat, O.; Ishdorj, T.O.; Cichon, G. An error-tolerant serial binary full-adder via a spiking neural P system using HP/LP basic neurons. J. Membr. Comput. 2020, 2, 42–48.

- Martínez-del-Amor, M.A.; García-Quismondo, M.; Macías-Ramos, L.F.; Valencia-Cabrera, L.; Riscos-Núñez, A.; Pérez-Jiménez, M.J. Simulating P systems on GPU devices: A survey. Fundam. Inform. 2015, 136, 269–284.

- Muniyandi, R.C.; Maroosi, A. A Representation of Membrane Computing with a Clustering Algorithm on the Graphical Processing Unit. Processes 2020, 8, 1199.

- Martínez-del-Amor, M.; Pérez-Hurtado, I.; Orellana-Martín, D.; Pérez-Jiménez, M.J. Adaptative parallel simulators for bioinspired computing models. Future Gener. Comput. Syst. 2020, 107, 469–484.

- Martínez-del-Amor, M.Á.; Orellana-Martín, D.; Cabarle, F.G.C.; Pérez-Jiménez, M.J.; Adorna, H.N. Sparse-matrix representation of spiking neural P systems for GPUs. In Proceedings of the 15th Brainstorming Week on Membrane Computing, Sevilla, Spain, 31 January–5 February 2017; pp. 161–170.

- Aboy, B.C.D.; Bariring, E.J.A.; Carandang, J.P.; Cabarle, F.G.C.; de la Cruz, R.T.A.; Adorna, H.N.; Martínez-del-Amor, M.Á. Optimizations in CuSNP Simulator for Spiking Neural P Systems on CUDA GPUs. In Proceedings of the 17th International Conference on High Performance Computing & Simulation, Dublin, Ireland, 15–19 July 2019; pp. 535–542.

- AlAhmadi, S.; Mohammed, T.; Albeshri, A.; Katib, I.; Mehmood, R. Performance Analysis of Sparse Matrix-Vector Multiplication (SpMV) on Graphics Processing Units (GPUs). Electronics 2020, 9, 1675.

- Adorna, H.; Cabarle, F.; Macías-Ramos, L.; Pan, L.; Pérez-Jiménez, M.; Song, B.; Song, T.; Valencia-Cabrera, L. Taking the pulse of SN P systems: A Quick Survey. In Multidisciplinary Creativity; Spandugino: Bucharest, Romania, 2015; pp. 1–16.

- Cabarle, F.G.; Adorna, H.N.; Pérez-Jiménez, M.J.; Song, T. Spiking Neural P Systems with Structural Plasticity. Neural Comput. Appl. 2015, 26, 1905–1917.

- Cabarle, F.G.C.; Hernandez, N.H.S.; Martínez-del-Amor, M.Á. Spiking neural P systems with structural plasticity: Attacking the subset sum problem. In International Conference on Membrane Computing; Springer: Berlin/Heidelberg, Germany, 2015; pp. 106–116.

- Pan, L.; Păun, G.; Pérez-Jiménez, M. Spiking neural P systems with neuron division and budding. Sci. China Inf. Sci. 2011, 54, 1596–1607.

- Jimenez, Z.; Cabarle, F.; de la Cruz, R.T.; Buño, K.; Adorna, H.; Hernandez, N.; Zeng, X. Matrix representation and simulation algorithm of spiking neural P systems with structural plasticity. J. Membr. Comput. 2019, 1, 145–160.

- Cabarle, F.G.C.; de la Cruz, R.T.A.; Cailipan, D.P.P.; Zhang, D.; Liu, X.; Zeng, X. On solutions and representations of spiking neural P systems with rules on synapses. Inf. Sci. 2019, 501, 30–49.

- Orellana-Martín, D.; Martínez-del-Amor, M.; Valencia-Cabrera, L.; Pérez-Hurtado, I.; Riscos-Núñez, A.; Pérez-Jiménez, M.J. Dendrite P Systems Toolbox: Representation, Algorithms and Simulators. Int. J. Neural Syst. 2021, 31, 2050071.

- Kirk, D.B.; Hwu, W.W. Programming Massively Parallel Processors: A Hands-on Approach, 3rd ed.; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2016.

- NVIDIA CUDA C Programming Guide. Available online: https://docs.nvidia.com/cuda/cuda-c-programming-guide/index.html (accessed on 15 February 2021).

- Bell, N.; Garland, M. Efficient Sparse Matrix-Vector Multiplication on CUDA; NVIDIA Technical Report NVR-2008-004; NVIDIA Corporation: Santa Clara, CA, USA, 2008. [Google Scholar]

- Ionescu, M.; Sburlan, D. Some Applications of Spiking Neural P Systems. Comput. Inform. 2008, 27, 515–528. [Google Scholar]

- Leporati, A.; Mauri, G.; Zandron, C.; Păun, G.; Pérez-Jiménez, M.J. Uniform Solutions to SAT and Subset Sum by Spiking Neural P Systems. Nat. Comput. Int. J. 2009, 8, 681–702. [Google Scholar] [CrossRef]

- Pérez-Hurtado, I.; Orellana-Martín, D.; Zhang, G.; Pérez-Jiménez, M.J. P-Lingua in two steps: Flexibility and efficiency. J. Membr. Comput. 2019, 1, 93–102. [Google Scholar] [CrossRef]

- Casauay, L.J.P.; Cabarle, F.G.G.; Macababayao, I.C.H.; Adorna, H.N.; Zeng, X.; Martínez-del-Amor, M.Á. A Framework for Evolving Spiking Neural P Systems. Int. J. Unconv. Comput. 2021, 16, 121–139. [Google Scholar]

- Fernandez, A.D.C.; Fresco, R.M.; Cabarle, F.G.C.; de la Cruz, R.T.A.; Macababayao, I.C.H.; Ballesteros, K.J.; Adorna, H.N. Snapse: A Visual Tool for Spiking Neural P Systems. Processes 2021, 9, 72. [Google Scholar] [CrossRef]

- Lin, H.; Zhao, B.; Liu, D.; Alippi, C. Data-based fault tolerant control for affine nonlinear systems through particle swarm optimized neural networks. IEEE/CAA J. Autom. Sin. 2020, 7, 954–964. [Google Scholar] [CrossRef]

- Zerari, N.; Chemachema, M.; Essounbouli, N. Neural network based adaptive tracking control for a class of pure feedback nonlinear systems with input saturation. IEEE/CAA J. Autom. Sin. 2019, 6, 278–290. [Google Scholar] [CrossRef]

- Gao, S.; Zhou, M.; Wang, Y.; Cheng, J.; Yachi, H.; Wang, J. Dendritic Neuron Model With Effective Learning Algorithms for Classification, Approximation, and Prediction. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 601–614. [Google Scholar] [CrossRef] [PubMed]

- Shang, M.; Luo, X.; Liu, Z.; Chen, J.; Yuan, Y.; Zhou, M. Randomized latent factor model for high-dimensional and sparse matrices from industrial applications. IEEE/CAA J. Autom. Sin. 2019, 6, 131–141. [Google Scholar] [CrossRef]

- Liu, W.; Luo, F.; Liu, Y.; Ding, W. Optimal Siting and Sizing of Distributed Generation Based on Improved Nondominated Sorting Genetic Algorithm II. Processes 2019, 7, 955. [Google Scholar] [CrossRef]

- Pan, J.S.; Hu, P.; Chu, S.C. Novel Parallel Heterogeneous Meta-Heuristic and Its Communication Strategies for the Prediction of Wind Power. Processes 2019, 7, 845. [Google Scholar] [CrossRef]

- Yin, X.; Liu, X.; Sun, M.; Ren, Q. Novel Numerical Spiking Neural P Systems with a Variable Consumption Strategy. Processes 2021, 9, 549. [Google Scholar] [CrossRef]

- de la Cruz, R.T.A.; Cabarle, F.G.C.; Macababayao, I.C.H.; Adorna, H.N.; Zeng, X. Homogeneous spiking neural P systems with structural plasticity. J. Membr. Comput. 2021. [Google Scholar] [CrossRef]

- Spiess, R.; George, R.; Cook, M.; Diehl, P.U. Structural plasticity denoises responses and improves learning speed. Front. Comput. Neurosci. 2016, 10, 93. [Google Scholar] [CrossRef] [PubMed]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).