Quadratic Interpolation Based Simultaneous Heat Transfer Search Algorithm and Its Application to Chemical Dynamic System Optimization

Abstract

1. Introduction

- (1) A new variant of the HTS algorithm, named QISHTS, is presented. It combines SHTS, the QI method, and a population regeneration mechanism; together, these three modifications are intended to give a more efficient HTS method with well-balanced exploration and exploitation capabilities.

- (2) The performance of the QISHTS algorithm is evaluated on a set of 18 well-defined benchmark functions, and the results are compared with those of other well-established MHAs.

- (3) The QISHTS algorithm is applied to four real-world chemical DOPs: two dynamic parameter estimation problems and two optimal control problems. To the best of our knowledge, HTS-based algorithms have not previously been used for chemical DOPs, and this work is the first attempt to do so.

- (4) The effectiveness of the QISHTS algorithm in solving chemical DOPs is compared with that of twelve well-established MHAs from the literature.

2. Dynamic Optimization Problems Formulation

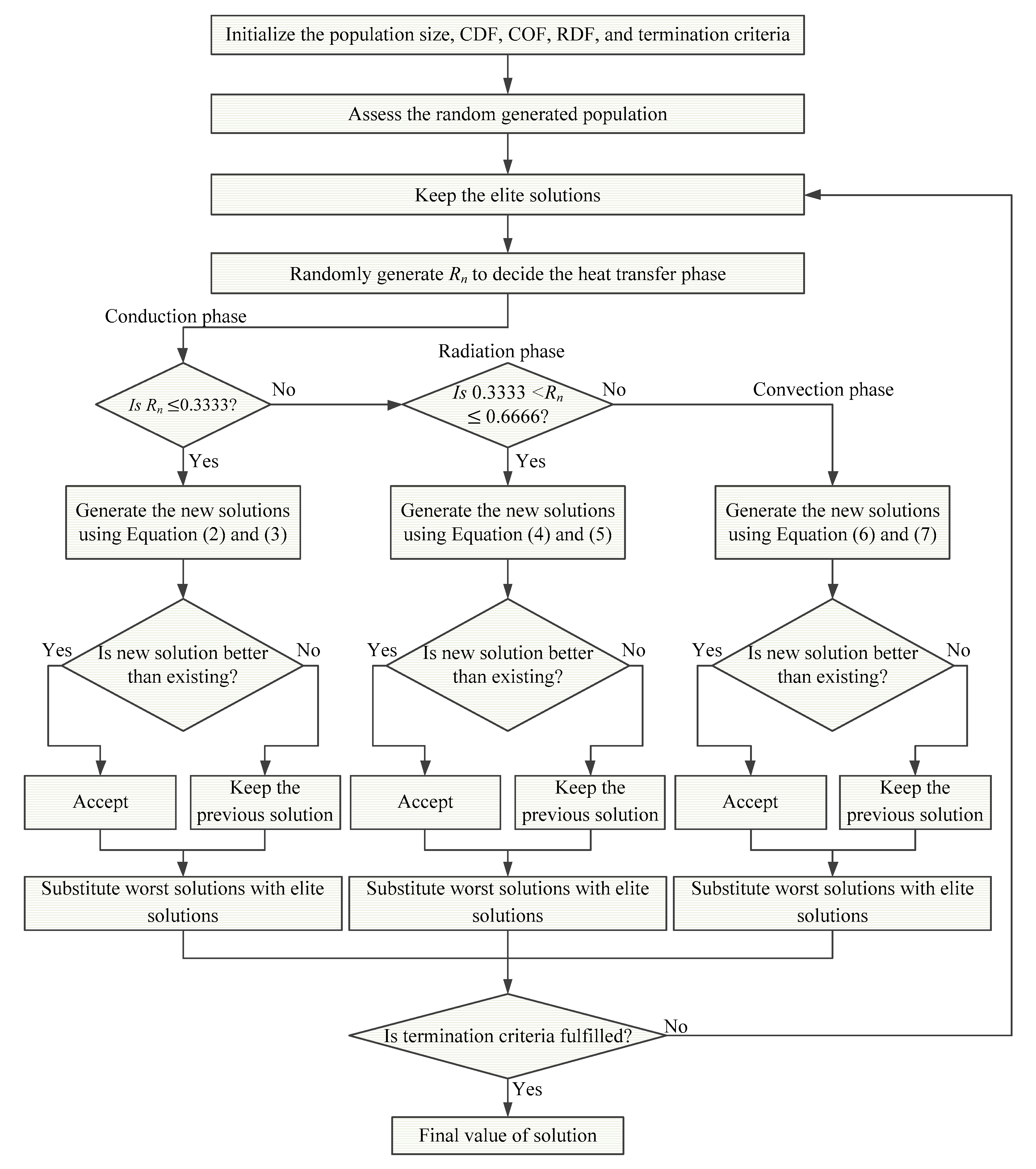

3. Heat Transfer Search (HTS) Algorithm

3.1. Conduction Phase

3.2. Convection Phase

3.3. Radiation Phase

4. Quadratic Interpolation Based Simultaneous Heat Transfer Search (QISHTS) Algorithm

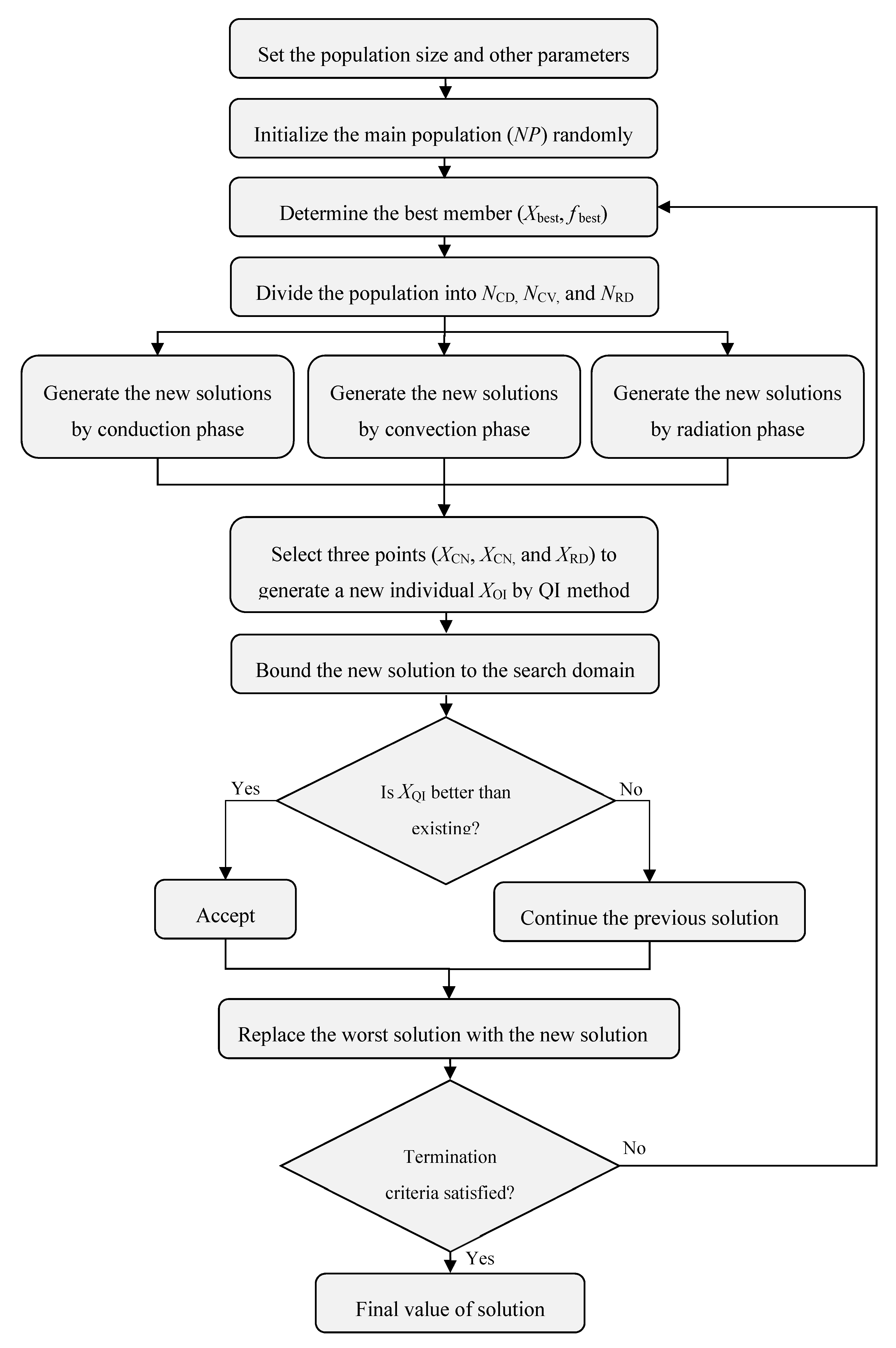

4.1. Simultaneous Heat Transfer Search (SHTS)

4.2. Quadratic Interpolation Method

4.3. Population Regeneration Mechanism

4.4. The Overall Process of QISHTS Algorithm

- Step 1. Define the population size, the function optimization goal, the maximum number of function evaluations, the values of the two control parameters, the current number of function evaluations, and the stopping criterion.

- Step 2. Randomly generate the main population within the lower and upper bounds of the decision variables, and calculate the objective function values.

- Step 3. Determine the best individual in the population according to fitness.

- Step 4. Randomly divide the population into three sub-populations and assign each sub-population to one of the three phases: conduction, convection, and radiation.

- Step 5. Generate new solutions in the conduction, convection, and radiation phases.

- Step 6. Select the best member obtained by each of the three phases (conduction, convection, and radiation) and use these three members to generate a new member by the QI method according to Equations (8) and (9).

- Step 7. Bound the new solution to the search domain.

- Step 8. Evaluate the new solution; if it is superior to the existing one, accept it and use it to replace the worst one.

- Step 9. If the stopping criterion is met, output the final solution. Otherwise, return to Step 3.
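The bookkeeping in Steps 2, 4, 6, 7, and 8 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the QI step uses the standard three-point quadratic interpolation formula (applied per dimension), which is assumed here to correspond to Equations (8) and (9); the fallback for a degenerate parabola, the reading of Step 8 as "replace the worst member if the new one is better than it", and all function names are choices made for this sketch. The conduction, convection, and radiation updates of Step 5 are deliberately left out.

```python
import random


def init_population(n, lower, upper, rng):
    """Step 2: sample n points uniformly inside the box [lower, upper]."""
    return [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
            for _ in range(n)]


def split_into_three(n, rng):
    """Step 4: random partition of the indices 0..n-1 into three groups."""
    idx = list(range(n))
    rng.shuffle(idx)
    return idx[0::3], idx[1::3], idx[2::3]


def quadratic_interpolation(a, b, c, fa, fb, fc, eps=1e-12):
    """Step 6: per-dimension stationary point of the parabola through
    (a, fa), (b, fb), (c, fc), using the standard three-point formula."""
    child = []
    for aj, bj, cj in zip(a, b, c):
        num = (bj**2 - cj**2) * fa + (cj**2 - aj**2) * fb + (aj**2 - bj**2) * fc
        den = (bj - cj) * fa + (cj - aj) * fb + (aj - bj) * fc
        # Degenerate parabola: keep the first parent's coordinate.
        # This fallback is an assumption of the sketch, not from the paper.
        child.append(aj if abs(den) < eps else 0.5 * num / den)
    return child


def clip(x, lower, upper):
    """Step 7: pull every coordinate back into the search domain."""
    return [min(max(xj, lo), hi) for xj, lo, hi in zip(x, lower, upper)]


def replace_worst_if_better(pop, fit, child, f_child):
    """Step 8 (minimisation): overwrite the worst member if the child beats it."""
    worst = max(range(len(pop)), key=lambda i: fit[i])
    if f_child < fit[worst]:
        pop[worst], fit[worst] = child, f_child
        return True
    return False


if __name__ == "__main__":
    rng = random.Random(0)
    sphere = lambda x: sum(v * v for v in x)  # hypothetical test objective
    lower, upper = [-5.0, -5.0], [5.0, 5.0]
    pop = init_population(9, lower, upper, rng)
    fit = [sphere(x) for x in pop]
    groups = split_into_three(len(pop), rng)
    # The best member of each group stands in for the best of each phase.
    bests = [min(g, key=lambda i: fit[i]) for g in groups]
    a, b, c = (pop[i] for i in bests)
    child = quadratic_interpolation(a, b, c, *(fit[i] for i in bests))
    child = clip(child, lower, upper)
    print(replace_worst_if_better(pop, fit, child, sphere(child)))
```

For a one-dimensional parabola the formula recovers the exact minimiser, which is why QI is usually described as a cheap local refinement step; in higher dimensions it is only a heuristic, so the bounding in Step 7 and the acceptance test in Step 8 are what keep a poor interpolated point from harming the population.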

5. Numerical Experiments and Discussions

5.1. Comparison of QISHTS with MHAs

5.2. Comparison of QISHTS with State-of-the-Art DEs

6. Application to Chemical Dynamic System Optimization

6.1. Dynamic Parameter Estimation Problems

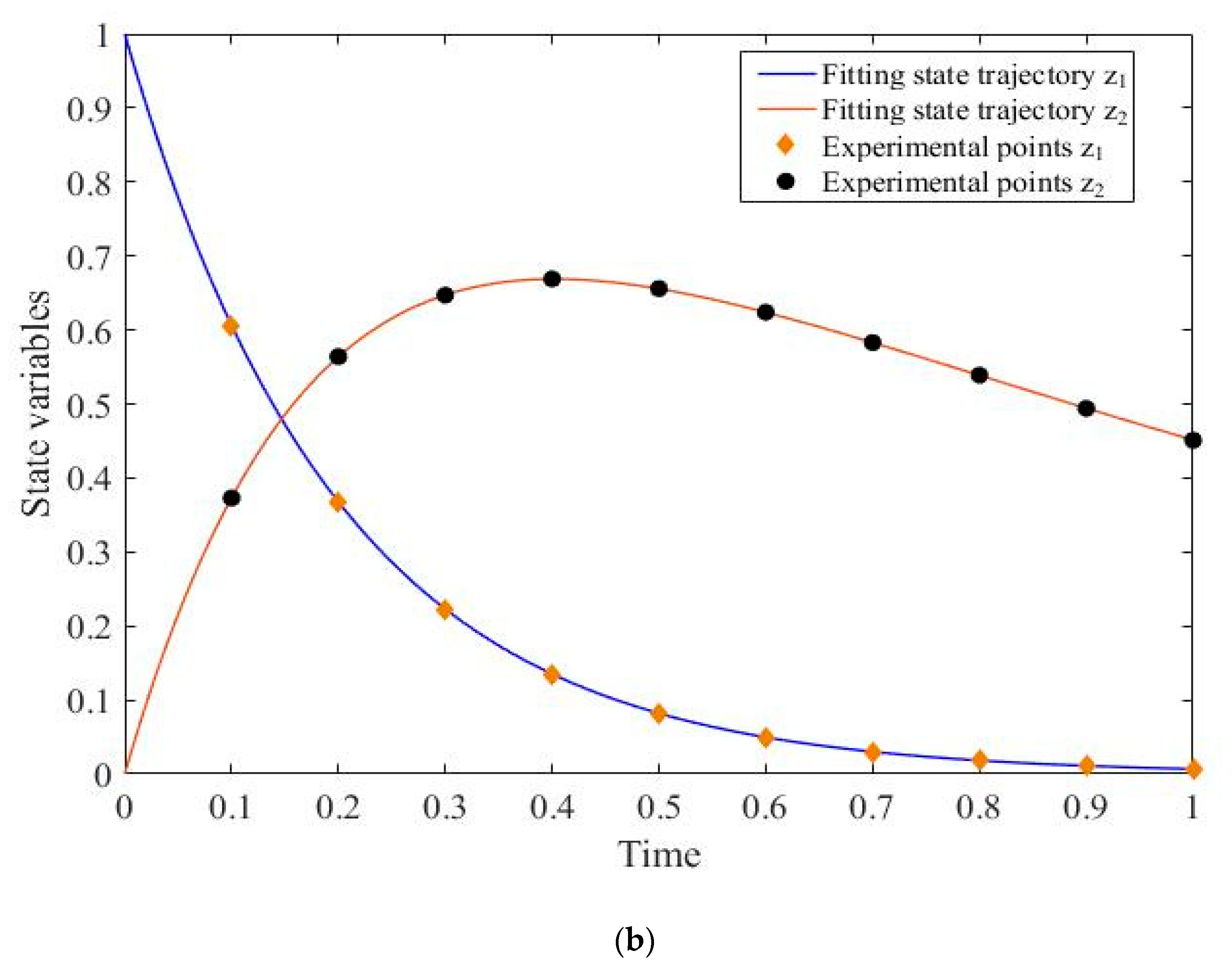

6.1.1. First-Order Reversible Series Reaction Problem

6.1.2. Catalytic Cracking of Gas Oil Problem

6.2. Optimal Control Problems

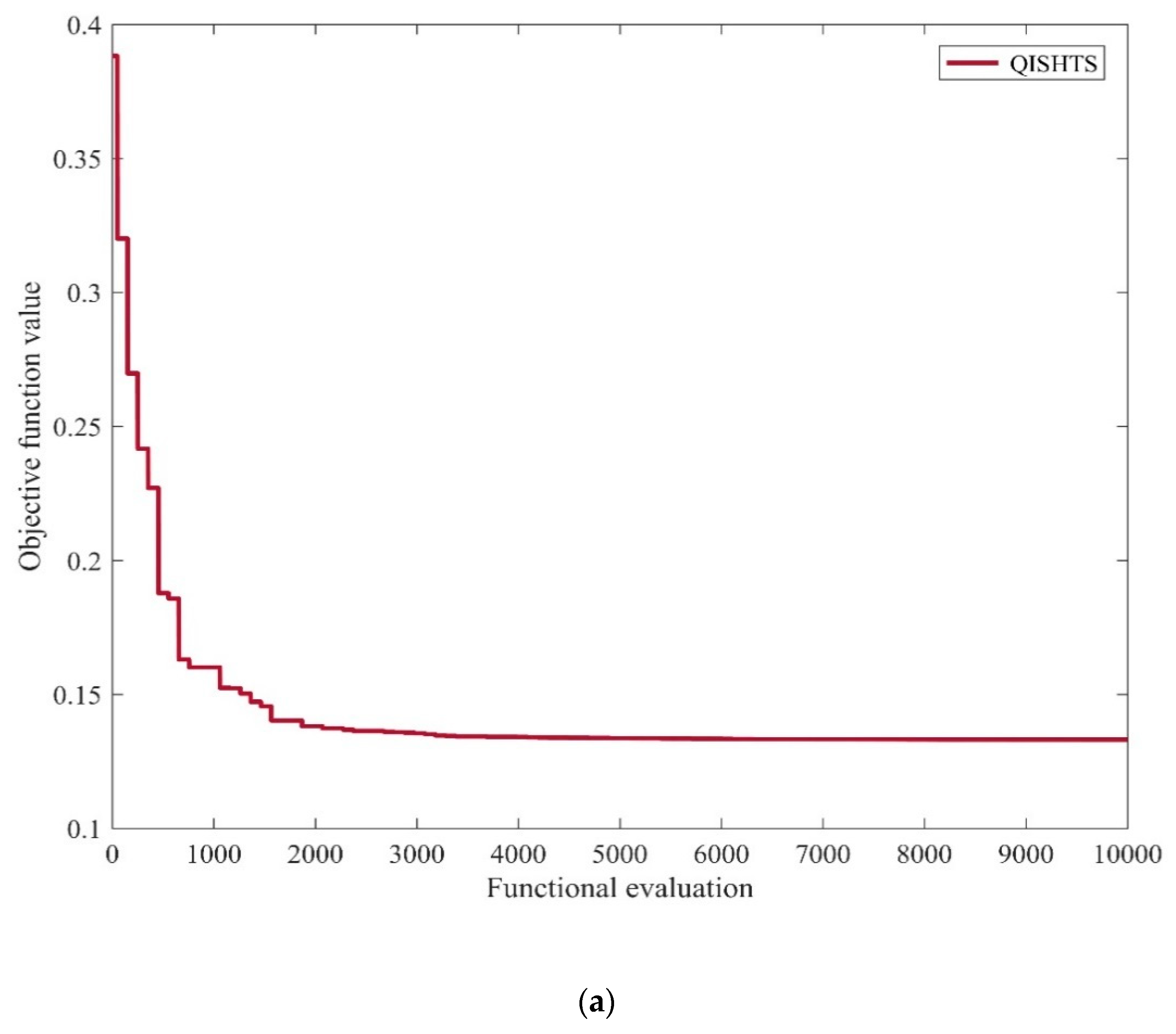

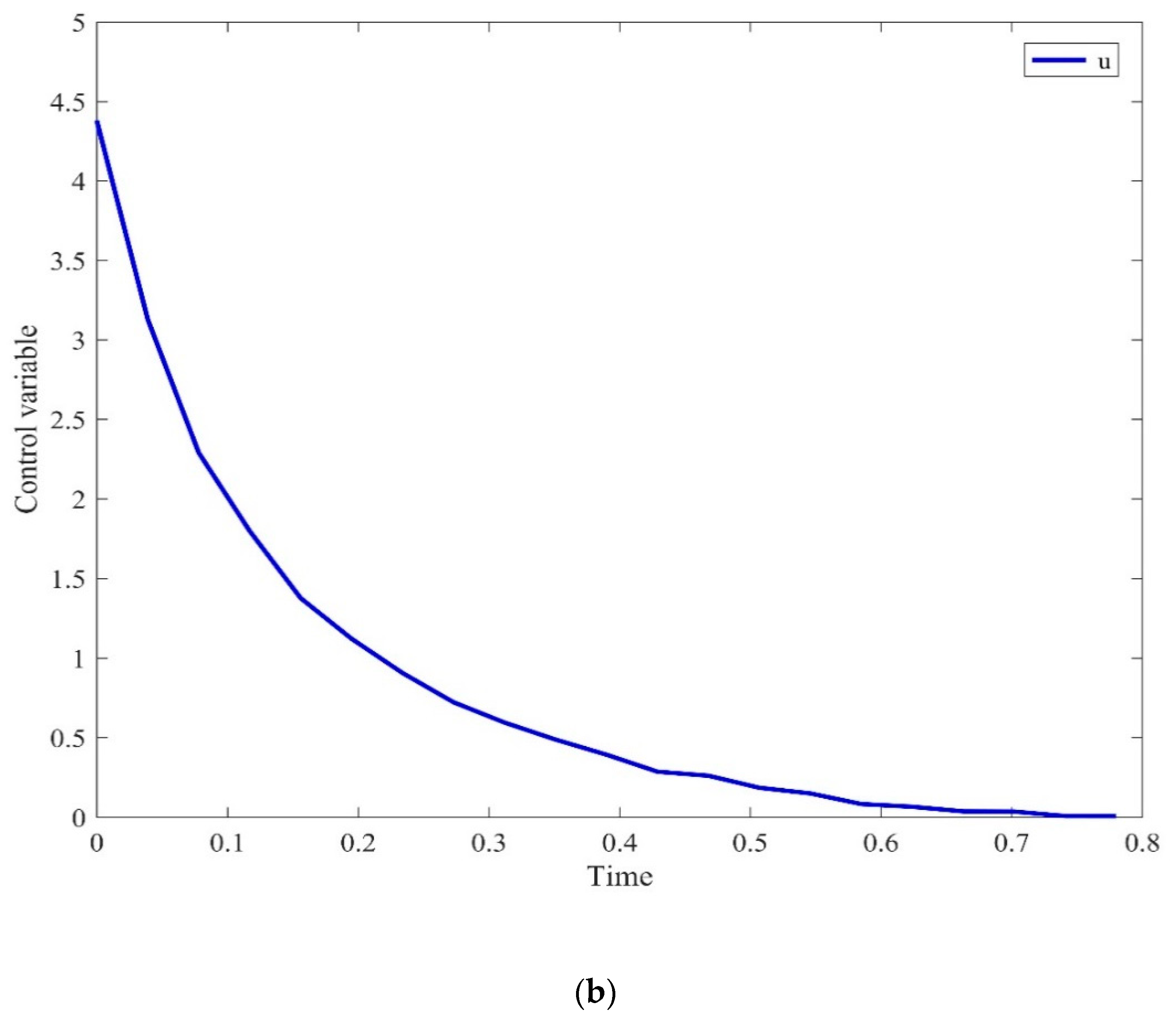

6.2.1. Multi-Modal Continuous Stirred Tank Reactor (CSTR) Problem

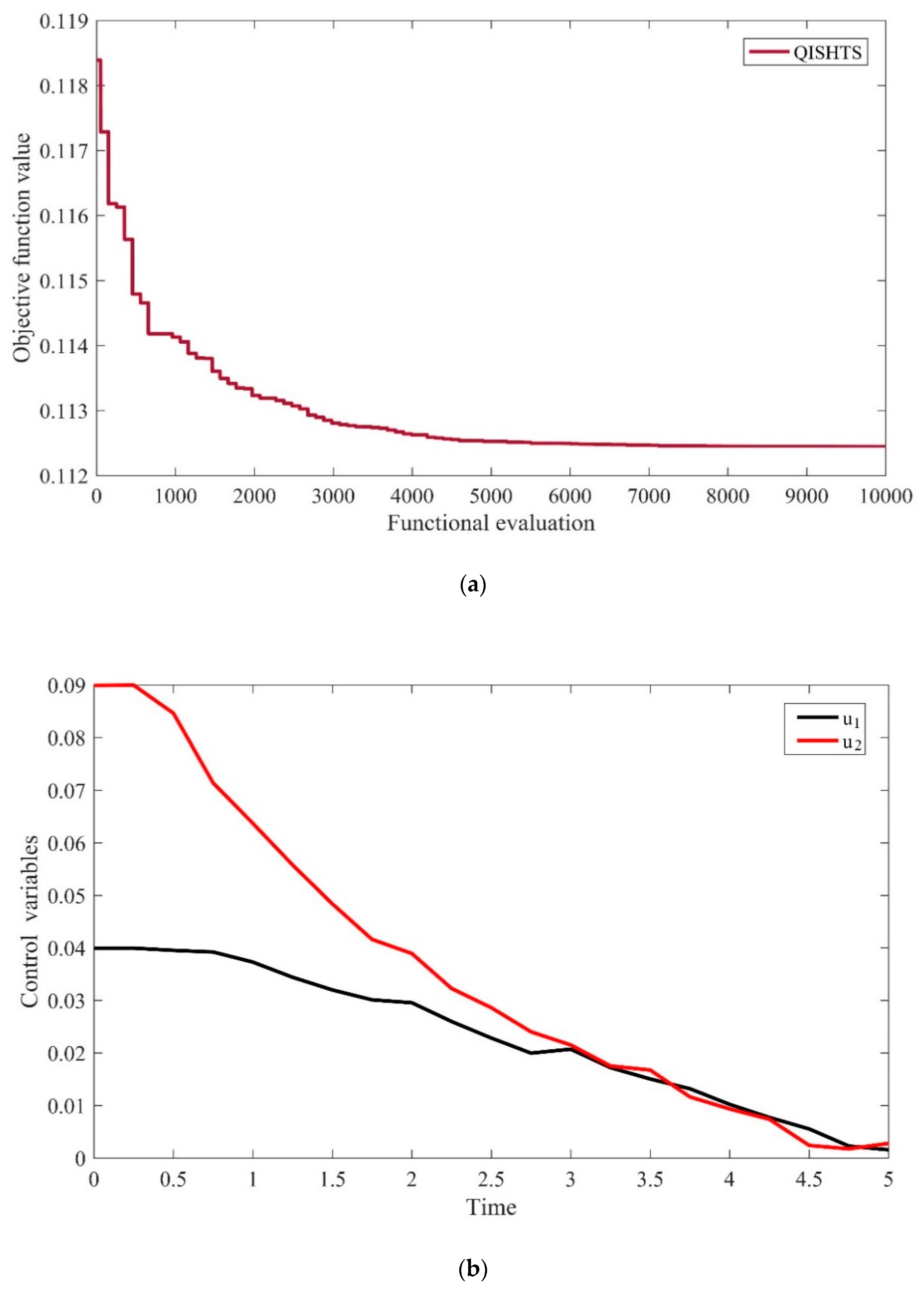

6.2.2. Six-Plate Gas Absorption Tower Problem

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Test Function | Dimension | Range | Optimum |
|---|---|---|---|
| f1 | 30 | [−100, 100] | 0 |
| f2 | 30 | [−10, 10] | 0 |
| f3 | 30 | [−100, 100] | 0 |
| f4 | 30 | [−100, 100] | 0 |
| f5 | 30 | [−100, 100] | 0 |
| f6 | 30 | [−1.28, 1.28] | 0 |
| f7 | 30 | [−30, 30] | 0 |
| f8 | 30 | [−500, 500] | −418.98 × 105 |
| f9 | 30 | [−5.12, 5.12] | 0 |
| f10 | 30 | [−32, 32] | 0 |
| f11 | 30 | [−600, 600] | 0 |
| f12 | 2 | [−65, 65] | 9.9800 × 10^−1 |
| f13 | 4 | [−5, 5] | 3.0000 × 10^−4 |
| f14 | 2 | [−5, 5] | −1.0316 |
| f15 | 2 | [−5, 5] | 3.9800 × 10^−1 |
| f16 | 2 | [−2, 2] | 3 |
| f17 | 3 | [1, 3] | −3.86 |
| f18 | 6 | [0, 1] | −3.322 |

| Function | Metric | CS | GSA | ABC | AMO | HTS | IHTS | QISHTS |
|---|---|---|---|---|---|---|---|---|
| f1 | Mean | 5.6565 × 10^−6 | 3.3748 × 10^−18 | 2.9860 × 10^−20 | 8.6464 × 10^−40 | 0.0000 | 0.0000 | 0.0000 |
| | STD | 2.8611 × 10^−6 | 8.0862 × 10^−19 | 2.1455 × 10^−20 | 1.0435 × 10^−39 | 0.0000 | 0.0000 | 0.0000 |
| | Rank | 7 | 6 | 5 | 4 | 1 | 1 | 1 |
| f2 | Mean | 2.0000 × 10^−3 | 8.9212 × 10^−9 | 1.4213 × 10^−15 | 8.2334 × 10^−32 | 0.0000 | 0.0000 | 0.0000 |
| | STD | 8.0959 × 10^−4 | 1.3340 × 10^−9 | 5.5340 × 10^−16 | 3.4120 × 10^−32 | 0.0000 | 0.0000 | 0.0000 |
| | Rank | 7 | 6 | 5 | 4 | 1 | 1 | 1 |
| f3 | Mean | 1.1400 × 10^−3 | 1.1260 × 10^−1 | 2.4027 × 10^3 | 8.8904 × 10^−4 | 0.0000 | 2.5658 × 10^−36 | 0.0000 |
| | STD | 6.0987 × 10^−4 | 1.2660 × 10^−1 | 6.5696 × 10^2 | 8.7256 × 10^−4 | 0.0000 | 7.3407 × 10^−36 | 0.0000 |
| | Rank | 5 | 6 | 7 | 4 | 1 | 3 | 1 |
| f4 | Mean | 3.2388 | 9.9302 × 10^−10 | 1.8523 × 10^1 | 2.8622 × 10^−5 | 7.8547 × 10^−43 | 2.3219 × 10^−33 | 0.0000 |
| | STD | 6.6440 × 10^−1 | 1.1899 × 10^−10 | 4.2477 | 2.3468 × 10^−5 | 2.1944 × 10^−42 | 4.9053 × 10^−33 | 0.0000 |
| | Rank | 6 | 4 | 7 | 5 | 2 | 3 | 1 |
| f5 | Mean | 5.4332 × 10^−6 | 3.3385 × 10^−18 | 3.0884 × 10^−20 | 0.0000 | 1.3920 × 10^−1 | 1.3906 × 10^−7 | 0.0000 |
| | STD | 2.2446 × 10^−6 | 5.6830 × 10^−19 | 4.0131 × 10^−20 | 0.0000 | 4.5100 × 10^−1 | 2.7467 × 10^−7 | 0.0000 |
| | Rank | 6 | 4 | 3 | 1 | 7 | 5 | 1 |
| f6 | Mean | 9.6000 × 10^−3 | 3.900 × 10^−3 | 3.2400 × 10^−2 | 1.7000 × 10^−3 | 1.7370 × 10^−3 | 1.5430 × 10^−3 | 1.0744 × 10^−4 |
| | STD | 2.8000 × 10^−3 | 1.3000 × 10^−3 | 5.9000 × 10^−3 | 4.7058 × 10^−4 | 7.5904 × 10^−4 | 6.3685 × 10^−4 | 8.9991 × 10^−5 |
| | Rank | 6 | 5 | 7 | 3 | 4 | 2 | 1 |
| Mean Rank | | 6.16 | 5.16 | 5.66 | 3.50 | 2.66 | 2.50 | 1.00 |
| Final Rank | | 7 | 5 | 6 | 4 | 3 | 2 | 1 |
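The Rank, Mean Rank, and Final Rank rows in the table above are consistent with standard competition ranking on the mean value (ties share the lowest rank, so three zeros are ranked 1, 1, 1 and the next value is ranked 4), followed by a column-wise average of the ranks. The sketch below reproduces those rows under that assumption; the function names are hypothetical, and other tables may apply extra tie-breaks that this sketch does not model.

```python
def competition_ranks(values):
    """Rank values in ascending order (smaller is better).
    Tied values share the lowest rank: [0, 0, 5] -> [1, 1, 3]."""
    return [1 + sum(other < v for other in values) for v in values]


def mean_ranks(rank_rows):
    """Average each algorithm's rank over all functions (column-wise mean)."""
    n = len(rank_rows)
    return [sum(col) / n for col in zip(*rank_rows)]


# Mean values of f3 for CS, GSA, ABC, AMO, HTS, IHTS, QISHTS (from the table).
f3_means = [1.1400e-3, 1.1260e-1, 2.4027e3, 8.8904e-4, 0.0, 2.5658e-36, 0.0]
f3_ranks = competition_ranks(f3_means)

# Rank rows of f1..f6 as printed in the table.
rank_rows = [
    [7, 6, 5, 4, 1, 1, 1],
    [7, 6, 5, 4, 1, 1, 1],
    [5, 6, 7, 4, 1, 3, 1],
    [6, 4, 7, 5, 2, 3, 1],
    [6, 4, 3, 1, 7, 5, 1],
    [6, 5, 7, 3, 4, 2, 1],
]
averages = mean_ranks(rank_rows)
final = competition_ranks(averages)
```

The printed mean ranks appear to be truncated rather than rounded to two decimals: 37/6 is listed as 6.16 for CS, not 6.17.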

| Function | Metric | CS | GSA | ABC | AMO | HTS | IHTS | QISHTS |
|---|---|---|---|---|---|---|---|---|
| f7 | Mean | 8.0092 | 2.0082 × 10^1 | 4.4100 × 10^−2 | 4.1817 | 2.6156 × 10^1 | 2.2876 × 10^1 | 2.0024 × 10^1 |
| | STD | 1.9188 | 1.7220 × 10^−1 | 7.0700 × 10^−2 | 2.1618 | 3.0311 | 4.3741 | 8.1310 × 10^−1 |
| | Rank | 3 | 5 | 1 | 2 | 7 | 6 | 4 |
| f8 | Mean | −9.1492 × 10^3 | −3.0499 × 10^3 | −1.2507 × 10^4 | −1.2569 × 10^4 | −1.2569 × 10^4 | −1.2569 × 10^4 | −5.9959 × 10^4 |
| | STD | 2.5314 × 10^2 | 3.3886 × 10^2 | 6.1118 × 10^1 | 1.2384 × 10^−7 | 5.7799 × 10^−1 | 3.7130 × 10^−12 | 1.1102 |
| | Rank | 6 | 7 | 5 | 4 | 3 | 2 | 1 |
| f9 | Mean | 5.1220 × 10^1 | 7.2831 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| | STD | 8.1069 | 1.8991 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| | Rank | 7 | 6 | 1 | 1 | 1 | 1 | 1 |
| f10 | Mean | 2.3750 | 1.4717 × 10^−9 | 1.1946 × 10^−9 | 4.4409 × 10^−15 | 2.8741 × 10^−14 | 2.8599 × 10^−14 | 8.8818 × 10^−16 |
| | STD | 1.1238 | 1.4449 × 10^−10 | 5.0065 × 10^−10 | 0.0000 | 5.6806 × 10^−15 | 4.3511 × 10^−15 | 0.0000 |
| | Rank | 7 | 6 | 5 | 2 | 4 | 3 | 1 |
| f11 | Mean | 4.4900 × 10^−5 | 1.265 × 10^−2 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| | STD | 8.9551 × 10^−5 | 2.1600 × 10^−2 | 0.0000 | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| | Rank | 6 | 7 | 1 | 1 | 1 | 1 | 1 |
| Mean Rank | | 5.80 | 6.20 | 2.60 | 2.00 | 3.20 | 2.60 | 1.60 |
| Final Rank | | 6 | 7 | 3 | 2 | 5 | 3 | 1 |

| Function | Metric | CS | GSA | ABC | AMO | HTS | IHTS | QISHTS |
|---|---|---|---|---|---|---|---|---|
| f12 | Mean | 9.9810 × 10^−1 | 5.9533 | 9.9800 × 10^−1 | 9.9800 × 10^−1 | 9.9800 × 10^−1 | 9.9800 × 10^−1 | 9.9800 × 10^−1 |
| | STD | 4.8277 × 10^−4 | 3.4819 | 3.7921 × 10^−16 | 3.3858 × 10^−12 | 3.3993 × 10^−16 | 3.3993 × 10^−16 | 3.3993 × 10^−16 |
| | Rank | 6 | 7 | 4 | 5 | 1 | 1 | 1 |
| f13 | Mean | 5.0310 × 10^−4 | 4.8000 × 10^−3 | 7.4715 × 10^−4 | 3.9738 × 10^−4 | 5.3397 × 10^−4 | 3.4416 × 10^−4 | 3.1988 × 10^−4 |
| | STD | 1.1180 × 10^−4 | 3.3000 × 10^−3 | 2.1481 × 10^−4 | 4.4503 × 10^−5 | 3.9577 × 10^−4 | 1.8313 × 10^−4 | 3.0497 × 10^−5 |
| | Rank | 4 | 7 | 6 | 3 | 5 | 2 | 1 |
| f14 | Mean | −1.03163 | −1.03163 | −1.0316 | −1.0316 | −1.03163 | −1.03163 | −1.03163 |
| | STD | 1.5821 × 10^−7 | 4.7536 × 10^−16 | 1.1269 × 10^−14 | 5.2006 × 10^−11 | 4.5325 × 10^−16 | 4.5325 × 10^−16 | 6.5323 × 10^−8 |
| | Rank | 7 | 3 | 4 | 5 | 1 | 1 | 6 |
| f15 | Mean | 3.9790 × 10^−1 | 3.9790 × 10^−1 | 3.9790 × 10^−1 | 3.9790 × 10^−1 | 3.9789 × 10^−1 | 3.9789 × 10^−1 | 3.9790 × 10^−1 |
| | STD | 3.2449 × 10^−6 | 0.0000 | 5.3819 × 10^−8 | 0.0000 | 5.6656 × 10^−17 | 5.6656 × 10^−17 | 5.5872 × 10^−17 |
| | Rank | 7 | 1 | 6 | 1 | 4 | 4 | 3 |
| f16 | Mean | 3.0013 | 3.7403 | 3.0000 | 3.0018 | 3.0000 | 3.0000 | 3.0000 |
| | STD | 2.6000 × 10^−3 | 1.6055 | 2.6164 × 10^−5 | 5.5000 × 10^−3 | 0.0000 | 0.0000 | 3.7456 × 10^−4 |
| | Rank | 5 | 7 | 3 | 6 | 1 | 1 | 4 |
| f17 | Mean | −3.8628 | −3.8625 | −3.8628 | −3.8628 | −3.8628 | −3.8628 | −3.8628 |
| | STD | 1.4043 × 10^−5 | 3.8767 × 10^−4 | 1.3654 × 10^−10 | 1.3669 × 10^−15 | 9.0649 × 10^−16 | 9.0649 × 10^−16 | 1.6997 × 10^−16 |
| | Rank | 6 | 7 | 5 | 4 | 2 | 2 | 1 |
| f18 | Mean | −3.321 | −3.3220 | −3.3220 | −3.3220 | −3.2837 | −3.2935 | −3.3228 |
| | STD | 7.5186 × 10^−4 | 4.7967 × 10^−16 | 8.5733 × 10^−10 | 5.085 × 10^−6 | 5.6553 × 10^−2 | 5.1824 × 10^−2 | 4.8300 × 10^−2 |
| | Rank | 4 | 1 | 2 | 3 | 7 | 6 | 5 |
| Mean Rank | | 5.57 | 4.71 | 4.28 | 3.85 | 3.00 | 2.42 | 3.00 |
| Final Rank | | 7 | 6 | 5 | 4 | 2 | 1 | 2 |

| F | FEs | Metric | ODE | SaDE | MoDE | CoDE | MDE-pBX | QISHTS |
|---|---|---|---|---|---|---|---|---|
| f1 | 150,000 | Mean | 2.24 × 10^−27 | 1.62 × 10^−48 | 6.51 × 10^−4 | 2.28 × 10^−7 | 1.24 × 10^−3 | 0.00 |
| | | STD | 2.72 × 10^−27 | 4.46 × 10^−48 | 2.81 × 10^−3 | 8.29 × 10^−8 | 4.83 × 10^−3 | 0.00 |
| | | Rank | 3 | 2 | 5 | 4 | 6 | 1 |
| f2 | 150,000 | Mean | 1.52 × 10^−11 | 6.11 × 10^−45 | 2.89 × 10^−3 | 1.16 × 10^−7 | 4.17 × 10^−8 | 0.00 |
| | | STD | 1.06 × 10^−11 | 1.54 × 10^−44 | 1.23 × 10^−2 | 3.79 × 10^−8 | 1.85 × 10^−7 | 0.00 |
| | | Rank | 3 | 2 | 6 | 5 | 4 | 1 |
| f5 | 150,000 | Mean | 2.32 × 10^4 | 1.00 × 10^4 | 6.05 × 10^2 | 6.25 × 10^4 | 8.12 × 10^3 | 0.00 |
| | | STD | 1.76 × 10^3 | 1.65 × 10^3 | 5.43 × 10^2 | 1.91 × 10^3 | 1.18 × 10^4 | 0.00 |
| | | Rank | 5 | 4 | 2 | 6 | 3 | 1 |
| f7 | 500,000 | Mean | 2.52 × 10^1 | 1.28 × 10^1 | 4.51 | 3.33 | 4.70 × 10^1 | 0.00 |
| | | STD | 1.1000 | 5.86 | 5.30 | 1.96 | 3.15 × 10^1 | 0.00 |
| | | Rank | 5 | 4 | 3 | 2 | 6 | 1 |
| f8 | 300,000 | Mean | 6.99 × 10^3 | 3.55 × 10^1 | 4.14 × 10^3 | 1.21 × 10^−12 | 1.22 × 10^3 | 3.74 × 10^−4 |
| | | STD | 3.08 × 10^2 | 6.34 × 10^1 | 6.51 × 10^2 | 8.72 × 10^−13 | 4.34 × 10^2 | 0.00 |
| | | Rank | 6 | 3 | 5 | 1 | 4 | 2 |
| f9 | 300,000 | Mean | 3.60 × 10^1 | 1.43 | 6.01 × 10^1 | 1.02 × 10^−11 | 7.80 | 0.00 |
| | | STD | 1.91 × 10^1 | 1.13 | 1.41 × 10^1 | 1.73 × 10^−11 | 2.25 | 0.00 |
| | | Rank | 5 | 3 | 6 | 2 | 4 | 1 |
| f10 | 150,000 | Mean | 3.53 × 10^−14 | 4.02 × 10^−15 | 7.70 | 1.31 × 10^−4 | 1.77 × 10^−2 | 8.88 × 10^−16 |
| | | STD | 2.04 × 10^−14 | 6.49 × 10^−16 | 1.82 | 3.74 × 10^−5 | 9.30 × 10^−2 | 0.00 |
| | | Rank | 3 | 2 | 6 | 4 | 5 | 1 |
| f11 | 200,000 | Mean | 2.47 × 10^−4 | 2.79 × 10^−3 | 2.49 × 10^−1 | 4.19 × 10^−9 | 4.92 × 10^−3 | 0.00 |
| | | STD | 1.35 × 10^−3 | 6.61 × 10^−3 | 2.33 × 10^−1 | 3.72 × 10^−9 | 1.24 × 10^−2 | 0.00 |
| | | Rank | 3 | 4 | 6 | 2 | 5 | 1 |
| Mean Rank | | | 4.12 | 3 | 4.87 | 3.25 | 4.62 | 1.12 |
| Final Rank | | | 4 | 2 | 6 | 3 | 5 | 1 |

| Dynamic Optimization Problem | maxFEs | Ap | PDNFES | PV |
|---|---|---|---|---|
| First-order reversible series reaction problem | 1000 | 0.000002 | 10 | 0.1 |
| Catalytic cracking of gas oil problem | 1000 | 0.0030 | 10 | 0.1 |
| Multi-modal CSTR problem | 10,000 | 0.1400 | 100 | 0.3 |
| Six-plate gas absorption tower problem | 10,000 | 0.1125 | 100 | 0.3 |
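The SR column in the result tables that follow is not defined in this section. One reading that is consistent with the reported numbers, and that is assumed here rather than taken from the paper, is that Ap is an acceptable-precision threshold and that a run counts as successful when its final objective value does not exceed Ap. Under that assumption, SR can be computed as in the sketch below; the function name and the four run values are hypothetical and serve only as an illustration.

```python
def success_rate(final_values, threshold):
    """Percentage of independent runs whose final objective value is at or
    below the threshold (minimisation). Assumed reading of the SR column."""
    if not final_values:
        raise ValueError("at least one run is required")
    hits = sum(v <= threshold for v in final_values)
    return 100.0 * hits / len(final_values)


# Hypothetical final objective values from four runs (not data from the paper),
# checked against the Ap value listed for the multi-modal CSTR problem.
runs = [0.1331, 0.1335, 0.1479, 0.1390]
sr = success_rate(runs, threshold=0.14)
```

With these made-up values, three of the four runs fall at or below 0.14, giving an SR of 75%.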

| Method | Best | Mean | STD | Worst | SR | T (s) | Rank |
|---|---|---|---|---|---|---|---|
| LDWPSO | 1.18620 × 10^−6 | 1.25679 × 10^−6 | 8.30596 × 10^−8 | 1.47490 × 10^−6 | 100% | 18.03 | 11 |
| CLPSO | 3.73979 × 10^−6 | 1.98549 × 10^−4 | 1.54809 × 10^−4 | 5.19505 × 10^−4 | 0.00% | 17.97 | 12 |
| jDE | 1.18585 × 10^−6 | 1.18586 × 10^−6 | 1.43989 × 10^−11 | 1.18589 × 10^−6 | 100% | 15.60 | 5 |
| ABC | 1.18584 × 10^−6 | 1.18585 × 10^−6 | 2.52200 × 10^−11 | 1.18597 × 10^−6 | 100% | 17.52 | 4 |
| SaDE | 1.18585 × 10^−6 | 1.18643 × 10^−6 | 1.11590 × 10^−9 | 1.19024 × 10^−6 | 100% | 17.90 | 8 |
| RCBBO | 9.85416 × 10^−6 | 1.68216 × 10^−3 | 2.87773 × 10^−3 | 9.61824 × 10^−3 | 0.00% | 18.23 | 13 |
| DEBBO | 1.18585 × 10^−6 | 1.18664 × 10^−6 | 1.55322 × 10^−9 | 1.19254 × 10^−6 | 100% | 15.46 | 10 |
| TLBO | 1.18585 × 10^−6 | 1.18598 × 10^−6 | 2.84734 × 10^−10 | 1.18703 × 10^−6 | 100% | 15.62 | 6 |
| NIWTLBO | 1.18584 × 10^−6 | 1.18585 × 10^−6 | 5.14840 × 10^−11 | 1.18613 × 10^−6 | 100% | 18.92 | 3 |
| BLPSO | 1.18585 × 10^−6 | 1.18650 × 10^−6 | 1.13912 × 10^−9 | 1.19090 × 10^−6 | 100% | 18.06 | 9 |
| GOTLBO | 1.18585 × 10^−6 | 1.18611 × 10^−6 | 5.20662 × 10^−10 | 1.18805 × 10^−6 | 100% | 15.29 | 7 |
| QITLBO | 1.18584 × 10^−6 | 1.18585 × 10^−6 | 2.06093 × 10^−12 | 1.18586 × 10^−6 | 100% | 17.48 | 2 |
| QISHTS | 1.18584 × 10^−6 | 1.18584 × 10^−6 | 0.00000 | 1.18585 × 10^−6 | 100% | 17.26 | 1 |

| Method | Best | Mean | STD | Worst | SR | T (s) | Rank |
|---|---|---|---|---|---|---|---|
| LDWPSO | 2.65569 × 10^−3 | 2.65663 × 10^−3 | 1.15296 × 10^−6 | 2.66061 × 10^−3 | 100% | 32.10 | 6 |
| CLPSO | 2.71167 × 10^−3 | 3.54925 × 10^−3 | 7.98382 × 10^−4 | 6.06821 × 10^−3 | 37% | 35.17 | 13 |
| jDE | 2.65567 × 10^−3 | 2.65702 × 10^−3 | 2.73064 × 10^−6 | 2.66749 × 10^−3 | 100% | 30.18 | 7 |
| ABC | 2.65674 × 10^−3 | 3.13821 × 10^−3 | 1.33151 × 10^−3 | 8.51756 × 10^−3 | 83% | 32.53 | 11 |
| SaDE | 2.65567 × 10^−3 | 2.65587 × 10^−3 | 2.91411 × 10^−7 | 2.65698 × 10^−3 | 100% | 32.41 | 4 |
| RCBBO | 2.68559 × 10^−3 | 3.47463 × 10^−3 | 1.00151 × 10^−3 | 6.97048 × 10^−3 | 53% | 32.35 | 12 |
| DEBBO | 2.65582 × 10^−3 | 2.78029 × 10^−3 | 4.26505 × 10^−4 | 4.85929 × 10^−3 | 90% | 29.82 | 10 |
| TLBO | 2.65567 × 10^−3 | 2.65578 × 10^−3 | 1.32328 × 10^−7 | 2.65626 × 10^−3 | 100% | 30.59 | 3 |
| NIWTLBO | 2.65567 × 10^−3 | 2.73768 × 10^−3 | 4.49100 × 10^−4 | 5.11551 × 10^−3 | 97% | 36.61 | 9 |
| BLPSO | 2.65568 × 10^−3 | 2.65901 × 10^−3 | 7.55110 × 10^−6 | 2.68741 × 10^−3 | 100% | 34.17 | 8 |
| GOTLBO | 2.65568 × 10^−3 | 2.65649 × 10^−3 | 1.54447 × 10^−6 | 2.66225 × 10^−3 | 100% | 30.22 | 5 |
| QITLBO | 2.65567 × 10^−3 | 2.65570 × 10^−3 | 8.30733 × 10^−8 | 2.65607 × 10^−3 | 100% | 34.31 | 2 |
| QISHTS | 2.65567 × 10^−3 | 2.65568 × 10^−3 | 1.81904 × 10^−9 | 2.65599 × 10^−3 | 100% | 32.14 | 1 |

| Method | Best | Mean | STD | Worst | SR | T (s) | Rank |
|---|---|---|---|---|---|---|---|
| LDWPSO | 1.3311 × 10^−1 | 1.3317 × 10^−1 | 3.89 × 10^−5 | 1.3329 × 10^−1 | 100% | 458.99 | 4 |
| CLPSO | 1.4920 × 10^−1 | 1.6569 × 10^−1 | 8.35 × 10^−3 | 1.7957 × 10^−1 | 0.00% | 478.32 | 12 |
| jDE | 1.3315 × 10^−1 | 1.3327 × 10^−1 | 6.96 × 10^−5 | 1.3341 × 10^−1 | 100% | 456.75 | 6 |
| ABC | 1.3968 × 10^−1 | 1.4788 × 10^−1 | 4.51 × 10^−3 | 1.5592 × 10^−1 | 3% | 464.76 | 11 |
| SaDE | 1.3315 × 10^−1 | 1.3338 × 10^−1 | 2.32 × 10^−4 | 1.3421 × 10^−1 | 100% | 458.89 | 7 |
| RCBBO | 1.3346 × 10^−1 | 1.3550 × 10^−1 | 2.07 × 10^−3 | 1.4314 × 10^−1 | 97% | 463.42 | 9 |
| DEBBO | 1.3317 × 10^−1 | 1.3324 × 10^−1 | 4.37 × 10^−5 | 1.3333 × 10^−1 | 100% | 457.52 | 5 |
| TLBO | 1.3311 × 10^−1 | 1.3700 × 10^−1 | 2.03 × 10^−2 | 2.4445 × 10^−1 | 97% | 466.63 | 10 |
| NIWTLBO | 1.3383 × 10^−1 | 2.3744 × 10^−1 | 2.75 × 10^−2 | 2.4515 × 10^−1 | 7% | 419.09 | 13 |
| BLPSO | 1.3310 × 10^−1 | 1.3311 × 10^−1 | 1.03 × 10^−5 | 1.3314 × 10^−1 | 100% | 448.59 | 1 |
| GOTLBO | 1.3311 × 10^−1 | 1.3392 × 10^−1 | 4.30 × 10^−3 | 1.5669 × 10^−1 | 97% | 460.76 | 8 |
| QITLBO | 1.3311 × 10^−1 | 1.3314 × 10^−1 | 2.39 × 10^−5 | 1.3320 × 10^−1 | 100% | 460.91 | 3 |
| QISHTS | 1.3310 × 10^−1 | 1.3312 × 10^−1 | 1.98 × 10^−5 | 1.3318 × 10^−1 | 100% | 455.86 | 2 |

| Method | Best | Mean | STD | Worst | SR | T (s) | Rank |
|---|---|---|---|---|---|---|---|
| LDWPSO | 1.1244 × 10^−1 | 1.1245 × 10^−1 | 6.86 × 10^−6 | 1.1246 × 10^−1 | 100% | 552.45 | 6 |
| CLPSO | 1.1321 × 10^−1 | 1.1390 × 10^−1 | 2.07 × 10^−4 | 1.1420 × 10^−1 | 0.00% | 554.22 | 13 |
| jDE | 1.1243 × 10^−1 | 1.1243 × 10^−1 | 1.14 × 10^−6 | 1.1244 × 10^−1 | 100% | 548.63 | 2 |
| ABC | 1.1286 × 10^−1 | 1.1326 × 10^−1 | 1.85 × 10^−4 | 1.1357 × 10^−1 | 0.00% | 588.13 | 12 |
| SaDE | 1.1244 × 10^−1 | 1.1245 × 10^−1 | 5.95 × 10^−6 | 1.1247 × 10^−1 | 100% | 549.59 | 6 |
| RCBBO | 1.1244 × 10^−1 | 1.1247 × 10^−1 | 1.55 × 10^−5 | 1.1251 × 10^−1 | 93% | 584.34 | 8 |
| DEBBO | 1.1243 × 10^−1 | 1.1244 × 10^−1 | 1.17 × 10^−6 | 1.1244 × 10^−1 | 100% | 583.57 | 4 |
| TLBO | 1.1244 × 10^−1 | 1.1248 × 10^−1 | 1.97 × 10^−5 | 1.1253 × 10^−1 | 87% | 581.01 | 10 |
| NIWTLBO | 1.1257 × 10^−1 | 1.1280 × 10^−1 | 1.68 × 10^−4 | 1.1333 × 10^−1 | 0.00% | 549.69 | 11 |
| BLPSO | 1.1243 × 10^−1 | 1.1243 × 10^−1 | 2.96 × 10^−8 | 1.1243 × 10^−1 | 100% | 618.02 | 2 |
| GOTLBO | 1.1244 × 10^−1 | 1.1247 × 10^−1 | 2.74 × 10^−5 | 1.1254 × 10^−1 | 90% | 582.16 | 8 |
| QITLBO | 1.1243 × 10^−1 | 1.1244 × 10^−1 | 5.14 × 10^−6 | 1.1246 × 10^−1 | 100% | 561.71 | 4 |
| QISHTS | 1.1243 × 10^−1 | 1.1243 × 10^−1 | 2.45 × 10^−8 | 1.1243 × 10^−1 | 100% | 558.96 | 1 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Alnahari, E.; Shi, H.; Alkebsi, K. Quadratic Interpolation Based Simultaneous Heat Transfer Search Algorithm and Its Application to Chemical Dynamic System Optimization. Processes 2020, 8, 478. https://doi.org/10.3390/pr8040478
