Mixing in Turbulent Flows: An Overview of Physics and Modelling

Abstract

1. Introduction

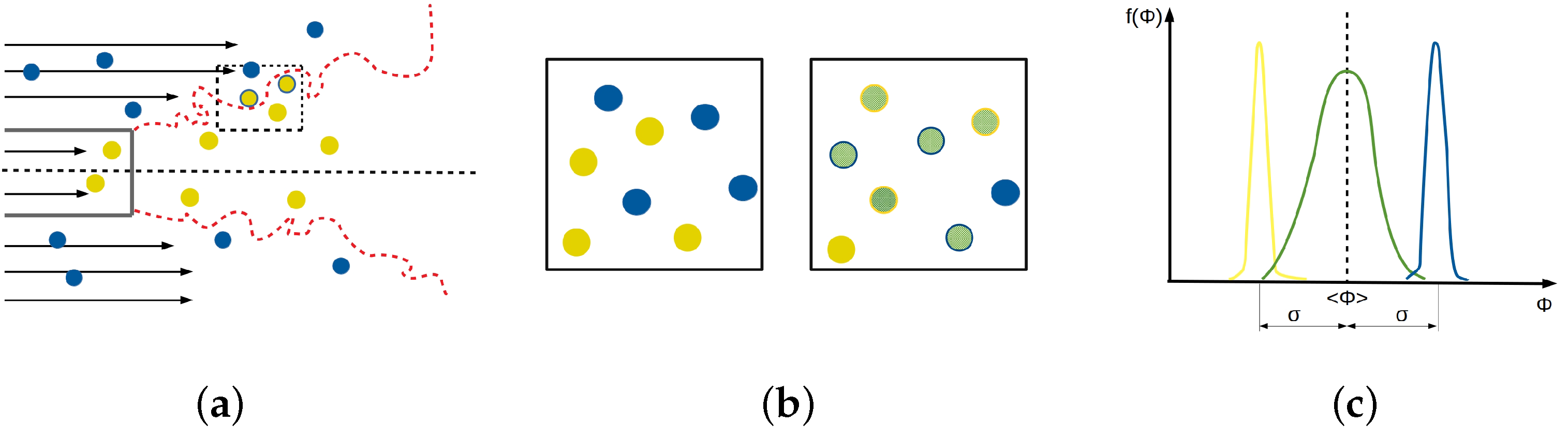

2. Mixing Processes

3. Turbulent Flows with Scalar Variables: Statistical Modelling

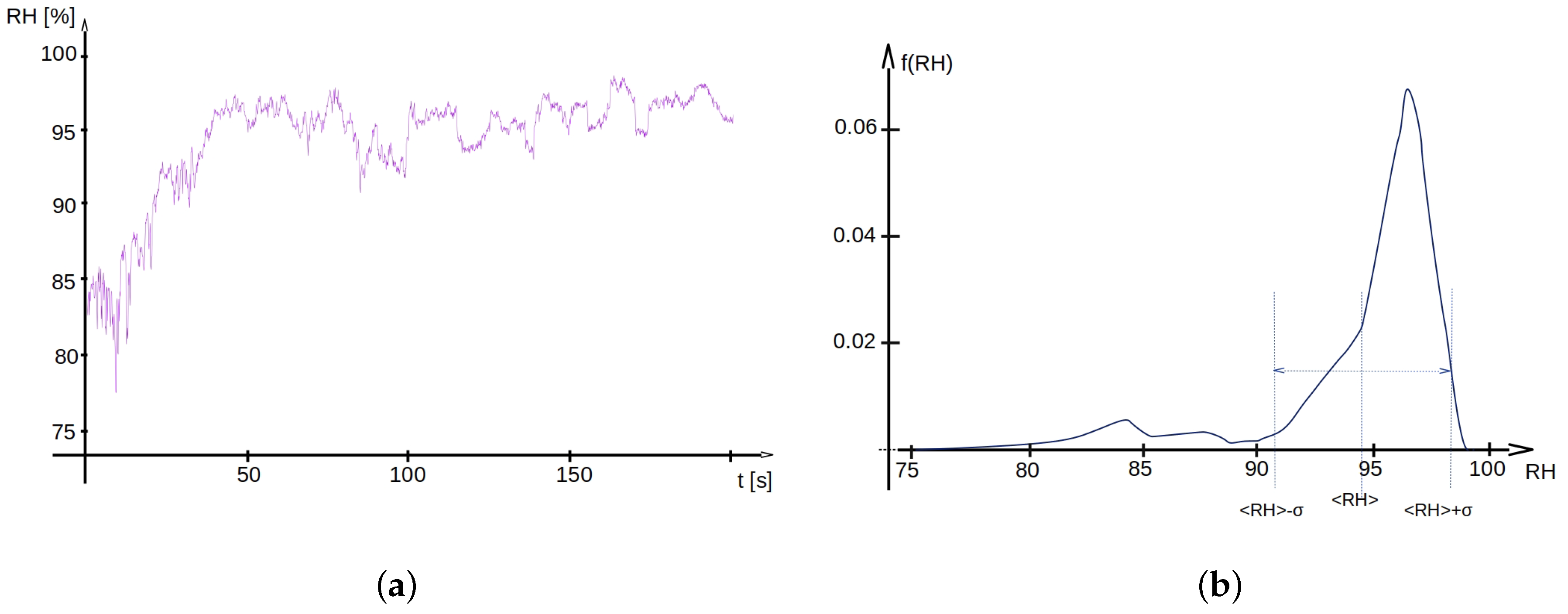

3.1. The PDF and Statistical Description

3.2. The PDF Equation of Scalar Transport

3.3. A Note on Moment Closures for Scalar Transport

4. Lagrangian Modelling of Scalar Micromixing

4.1. Generalities

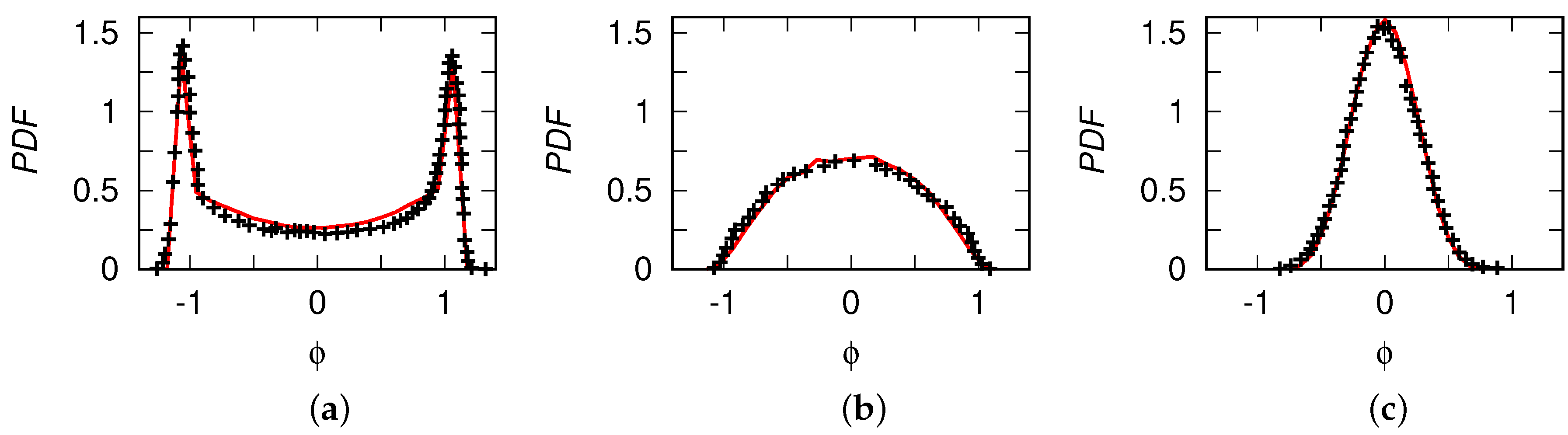

4.2. One-Point Scalar Mixing Models: IEM and Langevin

4.3. Binomial Langevin Model for Scalar Mixing Revisited: Bounded Langevin Model

4.4. More Models of Scalar Mixing

5. Filtered Density Function Approach to Scalars in Turbulence

5.1. The FDF Basics

5.2. The FDF Equation of Scalar Transport

6. Conclusions and Perspectives

Author Contributions

Funding

Conflicts of Interest

In Memoriam

Abbreviations

| Abbreviation | Meaning |
|---|---|
| N–S | Navier–Stokes |
| DNS | direct numerical simulation |
| PDF | probability density function |
| FDF | filtered density function |
| RANS | Reynolds-averaged Navier–Stokes |
| LES | large eddy simulation |
| CFD | computational fluid dynamics |
| IEM | interaction by exchange with the mean |
| LMSE | linear mean square estimation |
| CD | coalescence-dispersion |
| MC | mapping closure |
| BLM | bounded Langevin model |
| SDE | stochastic differential equation |
| EMST | Euclidean minimum spanning tree |
| MMC | multiple mapping conditioning |
| TS | time series |
| GPU | graphics processing units |
| QA | quantum algorithms |

References

- Pope, S.B. Turbulent Flows; Cambridge University Press: Cambridge, UK, 2000. [Google Scholar]

- Fox, R.O. Computational Methods for Turbulent Reacting Flows; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Dimotakis, P.E. Turbulent mixing. Annu. Rev. Fluid Mech. 2005, 37, 329–356. [Google Scholar] [CrossRef]

- Klimenko, A.Y. What is mixing and can it be complex? Phys. Scr. 2013, T155, 014047. [Google Scholar] [CrossRef]

- Gregg, M.C.; D’Asaro, E.A.; Riley, J.J.; Kunze, E. Mixing efficiency in the ocean. Annu. Rev. Mar. Sci. 2018, 10, 443–473. [Google Scholar] [CrossRef]

- Horner-Devine, A.R.; Hetl, R.D.; MacDonald, D.G. Mixing and transport in coastal river plumes. Annu. Rev. Fluid Mech. 2015, 47, 569–594. [Google Scholar] [CrossRef]

- Honnert, R.; Masson, V.; Couvreux, F. A diagnostic for evaluating the representation of turbulence in atmospheric models at the kilometric scale. J. Atmos. Phys. 2011, 68, 3112–3131. [Google Scholar] [CrossRef]

- Bakosi, J.; Franzese, P.; Boybeyi, Z. Joint PDF modelling of turbulent flow and dispersion in an urban street canyon. Bound. Layer Met. 2009, 131, 245–261. [Google Scholar] [CrossRef][Green Version]

- Kristóf, G.; Papp, B. Application of GPU-based large eddy simulation in urban dispersion studies. Atmosphere 2018, 9, 442. [Google Scholar] [CrossRef]

- Malinowski, S.P.; Zawadzki, I.; Banat, P. Laboratory observations of cloud–clear air mixing at small scales. J. Atmos. Ocean. Technol. 1997, 15, 1060–1065. [Google Scholar] [CrossRef]

- Andrejczuk, M.; Grabowski, W.W.; Malinowski, S.P.; Smolarkiewicz, P.K. Numerical simulation of cloud–clear air interfacial mixing. J. Atmos. Sci. 2004, 61, 1726–1739. [Google Scholar] [CrossRef]

- Korczyk, P.M.; Kowalewski, T.A.; Malinowski, S.P. Turbulent mixing of clouds with the environment: Small scale two phase evaporating flow investigated in a laboratory by particle image velocimetry. Physics D 2012, 241, 288–296. [Google Scholar] [CrossRef]

- Sherwood, S.C.; Bony, S.; Dufresne, J.L. Spread in model climate sensitivity traced to atmospheric convective mixing. Nature 2014, 505, 37–42. [Google Scholar] [CrossRef]

- Baranowski, D.B.; Flatau, P.J.; Malinowski, S.P. Tropical cyclone turbulent mixing as observed by autonomous oceanic profilers with the high repetition rate. J. Phys. Conf. Ser. 2011, 318, 072001. [Google Scholar] [CrossRef]

- Bałdyga, J.; Bourne, J.R. Turbulent Mixing and Chemical Reactions; Wiley: Hoboken, NJ, USA, 1999. [Google Scholar]

- Ferrarotti, M.; Li, Z.; Parente, A. On the role of mixing models in the simulation of MILD combustion using finite-rate chemistry combustion models. Proc. Combust. Inst. 2019, 37, 4531–4538. [Google Scholar] [CrossRef]

- Lewandowski, M.T.; Li, Z.; Parente, A.; Pozorski, J. Generalised Eddy Dissipation Concept for MILD combustion regime at low local Reynolds and Damköhler numbers. Part 2: Validation of the model. Fuel 2020, 278, 117773. [Google Scholar] [CrossRef]

- Eswaran, V.; Pope, S.B. Direct numerical simulations of the turbulent mixing of a passive scalar. Phys. Fluids 1988, 31, 506–520. [Google Scholar] [CrossRef]

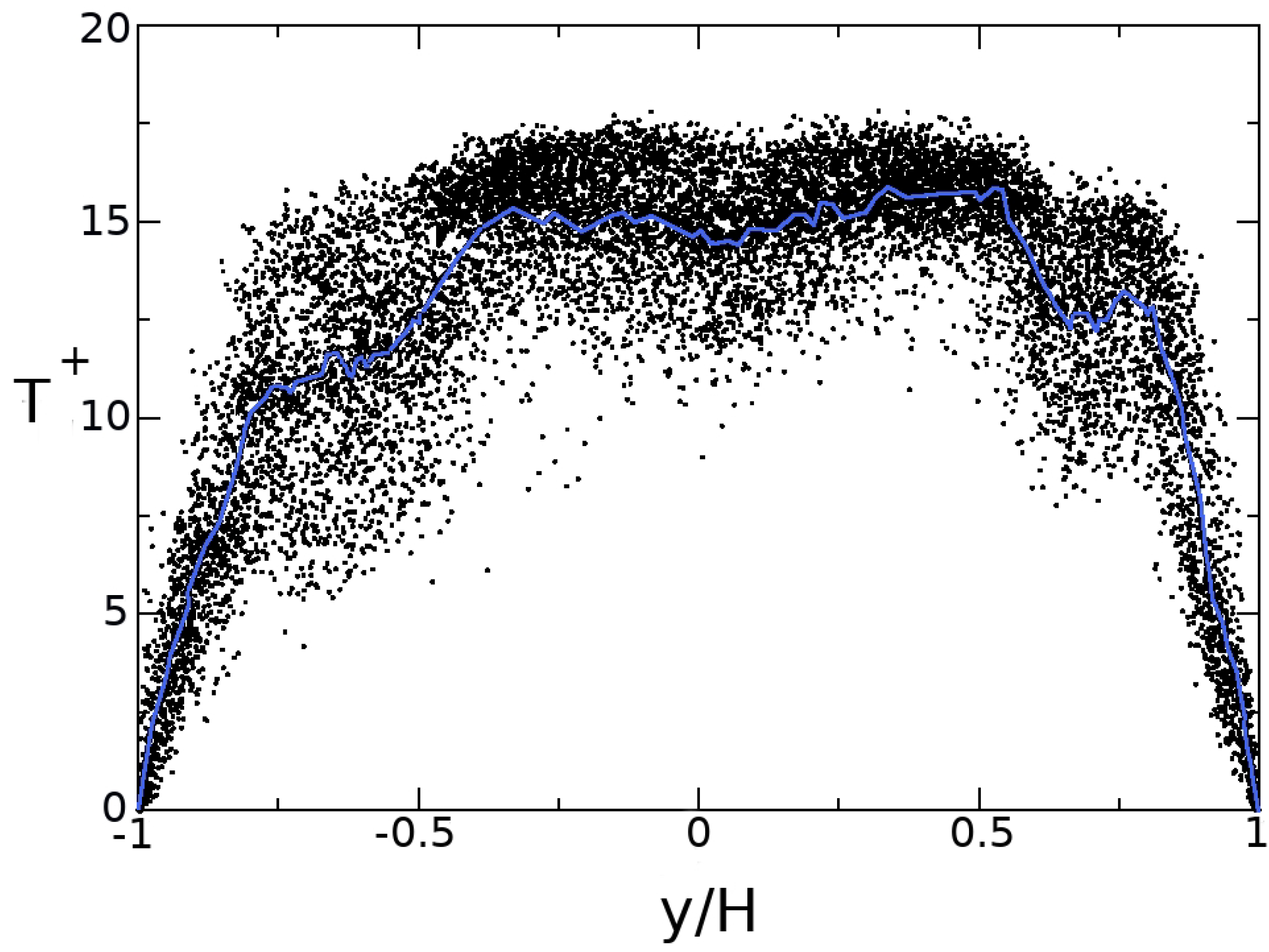

- Kim, J.; Moin, P. Transport of passive scalars in a turbulent channel flow. In Turbulent Shear Flows VI; André, J.C., Cousteix, J., Durst, F., Launder, B.E., Schmidt, F.W., Whitelaw, J.H., Eds.; Springer: Berlin/Heidelberg, Germany, 1989; pp. 85–96. [Google Scholar]

- Kasagi, N.; Tomita, Y.; Kuroda, A. Direct numerical simulation of passive scalar field in a turbulent channel flow. J. Heat Transf. 1992, 114, 598–606. [Google Scholar] [CrossRef]

- Kawamura, H.; Abe, H.; Matsuo, Y. DNS of turbulent heat transfer in channel flow with respect to Reynolds and Prandtl number effects. Int. J. Heat Fluid Flow 1999, 20, 196–207. [Google Scholar] [CrossRef]

- Na, Y.; Papavassiliou, D.V.; Hanratty, T.J. Use of direct numerical simulation to study the effect of Prandtl number on temperature fields. Int. J. Heat Fluid Flow 1999, 20, 187–195. [Google Scholar] [CrossRef]

- Heinz, S. A review of hybrid RANS-LES methods for turbulent flows: Concepts and applications. Prog. Aeronaut. Sci. 2020, 114, 100597. [Google Scholar] [CrossRef]

- Pitsch, H. Large-eddy simulation of turbulent combustion. Annu. Rev. Fluid Mech. 2006, 38, 453–482. [Google Scholar] [CrossRef]

- Haworth, D.C. Progress in probability density function methods for turbulent reacting flows. Prog. Energy Combust. Sci. 2010, 36, 168–259. [Google Scholar] [CrossRef]

- Pope, S.B. Small scales, many species and the manifold challenges of turbulent combustion. Proc. Combust. Inst. 2013, 34, 1–31. [Google Scholar] [CrossRef]

- Raman, V.; Hassanaly, M. Emerging trends in numerical simulations of combustion systems. Proc. Combust. Inst. 2019, 37, 2083–2089. [Google Scholar] [CrossRef]

- Abarzi, S.I.; Mauel, M.E.; Weitzner, H. Mixing in fusion plasmas. Phys. Scr. 2015, 90, 030201. [Google Scholar] [CrossRef][Green Version]

- Jaszczur, M.; Młynarczykowska, A. A general review of the current development of mechanically agitated vessels. Processes 2020, 8, 982. [Google Scholar] [CrossRef]

- Malecha, Z.M.; Malecha, K. Numerical analysis of mixing under low and high frequency pulsations at serpentine micromixers. Chem. Process Eng. 2014, 35, 369–385. [Google Scholar] [CrossRef]

- Bayareh, M.; Ashani, M.N.; Usefian, A. Active and passive micromixers: A comprehensive review. Chem. Eng. Process. Process Intensif. 2020, 147, 107771. [Google Scholar] [CrossRef]

- Raza, W.; Hossain, S.; Kim, K.Y. A review of passive micromixers with a comparative analysis. Micromachines 2020, 11, 455. [Google Scholar] [CrossRef]

- Ito, Y.; Watanabe, T.; Nagata, K.; Sakai, Y. Turbulent mixing of a passive scalar in grid turbulence. Phys. Scr. 2016, 91, 074002. [Google Scholar] [CrossRef]

- Nedic, J.; Tavoularis, S. Measurements of passive scalar diffusion downstream of regular and fractal grids. J. Fluid Mech. 2016, 800, 358–386. [Google Scholar] [CrossRef]

- Başbuğ, S.; Papadakis, G.; Vassilicos, J.C. Reduced mixing time in stirred vessels by means of irregular impellers. Phys. Rev. Fluids 2018, 3, 084502. [Google Scholar] [CrossRef]

- Łaniewski-Wołłk, Ł.; Rokicki, J. Adjoint Lattice Boltzmann for topology optimization on multi-GPU architecture. Comput. Math. Appl. 2016, 71, 833–848. [Google Scholar] [CrossRef]

- Shu, Z.; Zhu, S.; Zhang, J.; Zhao, W.; Ye, Z. Optimization design and analysis of polymer high efficiency mixer in offshore oil field. Processes 2020, 8, 110. [Google Scholar] [CrossRef]

- Villermaux, E. Mixing versus stirring. Annu. Rev. Fluid Mech. 2019, 51, 245–273. [Google Scholar] [CrossRef]

- Meyer, D.W.; Jenny, P. Micromixing models for turbulent flows. J. Comput. Phys. 2009, 228, 1275–1293. [Google Scholar] [CrossRef]

- Kraichnan, R. Anomalous Scaling of a Randomly Advected Passive Scalar. Phys. Rev. Lett. 1994, 72, 1016–1019. [Google Scholar] [CrossRef]

- Warhaft, Z. Passive scalars in turbulent flows. Annu. Rev. Fluid Mech. 2000, 32, 203–240. [Google Scholar] [CrossRef]

- Sreenivasan, K.R. Turbulent mixing: A perspective. Proc. Nat. Acad. Sci. USA 2019, 116, 18175–18183. [Google Scholar] [CrossRef]

- Schumacher, J.; Sreenivasan, K.R.; Yeung, P.K. Very fine structures in scalar mixing. J. Fluid Mech. 2005, 531, 113–122. [Google Scholar] [CrossRef]

- Sreenivasan, K.R. Fractals and multifractals in fluid turbulence. Annu. Rev. Fluid Mech. 1991, 23, 539–600. [Google Scholar] [CrossRef]

- Scotti, A.; Meneveau, C. A fractal interpolation model for large eddy simulation of turbulent flows. Physica D 1999, 127, 198–232. [Google Scholar] [CrossRef]

- Akinlabi, E.O.; Wacławczyk, M.; Malinowski, S.P.; Mellado, J.P. Fractal reconstruction of sub-grid scales for Large Eddy Simulation. Flow Turbul. Combust. 2019, 103, 293–322. [Google Scholar] [CrossRef]

- Pope, S.B. PDF methods for turbulent reactive flows. Prog. Energy Combust. Sci. 1985, 11, 119–192. [Google Scholar] [CrossRef]

- Van Kampen, N.G. Stochastic Processes in Physics and Chemistry, 2nd ed.; North-Holland: Amsterdam, The Netherlands, 1992. [Google Scholar]

- Jonsson, H. CIRPAS Twin Otter 10-Hz Navigation, State Parameter, and Microphysics Flight-Level Data—ASCII Format. Version 1.0. UCAR/NCAR—Earth Observing Laboratory. 2009. Available online: https://data.eol.ucar.edu/dataset/111.035 (accessed on 21 August 2020).

- Dopazo, C. Recent developments in PDF methods. In Turbulent Reacting Flows; Libby, P.A., Williams, F.A., Eds.; Academic Press: New York, NY, USA, 1994; pp. 375–474. [Google Scholar]

- Jones, W.P. The joint scalar probability density function. In Closure Strategies for Turbulent and Transitional Flows; Launder, B.E., Sandham, N.D., Eds.; Cambridge University Press: Cambridge, UK, 2002; pp. 582–625. [Google Scholar]

- Wouters, H.A.; Peeters, T.W.J.; Roekaerts, D. Joint velocity-scalar PDF methods. In Closure Strategies for Turbulent and Transitional Flows; Launder, B.E., Sandham, N.D., Eds.; Cambridge University Press: Cambridge, UK, 2002; pp. 626–655. [Google Scholar]

- Wacławczyk, M.; Pozorski, J.; Minier, J.P. PDF computation of turbulent flows with a new near-wall model. Phys. Fluids 2004, 16, 1410–1422. [Google Scholar] [CrossRef]

- Pozorski, J.; Wacławczyk, M.; Minier, J.P. Full velocity-scalar probability density function computation of heated channel flow with wall function approach. Phys. Fluids 2003, 15, 1220–1232. [Google Scholar] [CrossRef]

- Pozorski, J.; Minier, J.-P. Stochastic modelling of conjugate heat transfer in near-wall turbulence. Int. J. Heat Fluid Flow 2006, 27, 867–877. [Google Scholar] [CrossRef]

- Minier, J.-P. On Lagrangian stochastic methods for turbulent polydisperse two-phase reactive flows. Prog. Energy Combust. Sci. 2015, 50, 1–62. [Google Scholar] [CrossRef]

- Muradoglu, M.; Jenny, P.; Pope, S.B.; Caughey, D. A consistent hybrid finite-volume/particle method for the PDF equations of turbulent reactive flows. J. Comput. Phys. 1999, 154, 342–371. [Google Scholar] [CrossRef]

- Tolpadi, A.K.; Hu, I.Z.; Correa, S.M.; Burrus, D.L. Coupled Lagrangian Monte Carlo PDF-CFD computation of gas turbine combustor flowfields with finite-rate chemistry. J. Eng. Gas Turbines Power 1997, 119, 519–526. [Google Scholar] [CrossRef]

- Anand, M.S.; Hsu, A.T.; Pope, S.B. Calculations of swirl combustors using joint velocity-scalar probability density function method. AIAA J. 1997, 35, 1143–1150. [Google Scholar]

- Cho, H.; Vanturi, D.; Karniadakis, G.E. Numerical methods for high-dimensional probability density function equations. J. Comput. Phys. 2016, 305, 817–837. [Google Scholar] [CrossRef]

- Kays, W.M. Turbulent Prandtl number—Where are we? J. Heat Transf. 1994, 116, 285–295. [Google Scholar] [CrossRef]

- Craske, J.; Salizzoni, P.; van Reeuwijk, M. The turbulent Prandtl number in a pure plume is 3/5. J. Fluid Mech. 2017, 822, 774–790. [Google Scholar] [CrossRef]

- Forster, D.; Nelson, D.R.; Stephen, M.J. Large-distance and long-time properties of a randomly stirred fluid. Phys. Rev. A 1977, 16, 732–749. [Google Scholar] [CrossRef]

- Nagano, Y. Modelling heat transfer in near-wall flows. In Closure Strategies for Turbulent and Transitional Flows; Launder, B.E., Sandham, N.D., Eds.; Cambridge University Press: Cambridge, UK, 2002; pp. 188–247. [Google Scholar]

- Chen, H.; Chen, S.; Kraichnan, R.H. Probability distribution of stochastically advected scalar field. Phys. Rev. Lett. 1989, 63, 2657–2660. [Google Scholar] [CrossRef]

- Villermaux, J.; Falk, L. Recent advances in modelling micromixing and chemical reaction. Revue de l’Institut Français du Pétrole 1996, 51, 205–213. [Google Scholar] [CrossRef]

- Celis, C.; da Figueira, S.L.F. Lagrangian mixing models for turbulent combustion: Review and prospects. Flow Turbul. Combust. 2015, 94, 643–689. [Google Scholar] [CrossRef]

- Dopazo, C.; Cifuentes, L.; Hierro, J.; Martin, J. Micro-scale mixing in turbulent constant density reacting flows and premixed combustion. Flow Turbul. Combust. 2016, 96, 547–571. [Google Scholar] [CrossRef]

- Merci, B.; Roekaerts, D.; Naud, B.; Pope, S.B. Comparative study of micromixing models in transported scalar PDF simulations of turbulent nonpremixed bluff body flames. Combust. Flame 2006, 146, 109–130. [Google Scholar] [CrossRef]

- Ren, Z.; Pope, S.B. An investigation of the performance of turbulent mixing models. Combust. Flame 2004, 136, 208–216. [Google Scholar] [CrossRef]

- Ren, Z.Y.; Kuron, M.; Zhao, X.Y.; Lu, T.F.; Hawkes, E.; Kolla, H.; Chen, J.H. Micromixing models for PDF simulations of turbulent premixed flames. Combust. Sci. Technol. 2019, 191, 1430–1455. [Google Scholar] [CrossRef]

- Pozorski, J.; Minier, J.P. Modeling scalar mixing process in turbulent flow. In Proceedings of the 1st International Symposium “Turbulence and Shear Flow Phenomena”, Santa Barbara, CA, USA, 12–15 September 1999; pp. 1357–1362. [Google Scholar]

- Bukasa, T.; Pozorski, J.; Minier, J.P. PDF computation of thermal mixing layer in grid turbulence. In Advances in Turbulence VIII; Dopazo, C., Ed.; CIMNE: Barcelona, Spain, 2000; pp. 219–222. [Google Scholar]

- Curl, R.L. Dispersed phase mixing. I. Theory and effects of simple reactors. AIChE J. 1963, 9, 175–181. [Google Scholar] [CrossRef]

- Pope, S.B. Mapping closures for turbulent mixing and reaction. Theor. Comput. Fluid Dyn. 1991, 2, 255–270. [Google Scholar] [CrossRef]

- Valiño, L.; Dopazo, C. A binomial sampling model for scalar turbulent mixing. Phys. Fluids A 1990, 2, 1204–1212. [Google Scholar] [CrossRef][Green Version]

- Valiño, L.; Dopazo, C. A binomial Langevin model for turbulent mixing. Phys. Fluids A 1991, 3, 3034–3037. [Google Scholar] [CrossRef]

- Pozorski, J.; Wacławczyk, M.; Minier, J.P. Probability density function computation of heated turbulent channel flow with the bounded Langevin model. J. Turbul. 2003, 4, 011. [Google Scholar] [CrossRef]

- Gardiner, C.W. Handbook of Stochastic Methods for Physics, Chemistry and the Natural Sciences, 2nd ed.; Springer: Berlin, Germany, 1990. [Google Scholar]

- Ma, B.K.; Warhaft, Z. Some aspects of the thermal mixing layer in grid turbulence. Phys. Fluids 1986, 29, 3114–3120. [Google Scholar] [CrossRef]

- Subramanian, S.; Pope, S.B. A mixing model for turbulent reactive flows based on Euclidean minimum spanning trees. Combust. Flame 1998, 115, 487–514. [Google Scholar] [CrossRef]

- Soulard, O.; Sabelnikov, V.; Gorokhovski, M. Stochastic scalar mixing models accounting for turbulent frequency multiscale fluctuations. Int. J. Heat Fluid Flow 2004, 25, 875–883. [Google Scholar] [CrossRef]

- Kerstein, A.R.; Ashurst, W.T.; Wunsch, S.; Nilsen, V. One-dimensional turbulence: Vector formulation and application to free shear flows. J. Fluid Mech. 2001, 447, 85–109. [Google Scholar] [CrossRef]

- Pope, S.B. A model for turbulent mixing based on shadow-position conditioning. Phys. Fluids 2013, 25, 110803. [Google Scholar] [CrossRef]

- Yang, T.W.; Zhou, H.; Ren, Z.Y. A particle mass-based implementation for mixing models with differential diffusion. Combust. Flame 2020, 214, 116–120. [Google Scholar] [CrossRef]

- Richardson, E.S.; Chen, J.H. Application of PDF mixing models to premixed flames with differential diffusion. Combust. Flame 2012, 159, 2398–2414. [Google Scholar] [CrossRef]

- Kerstein, A.R. Linear-eddy modelling of turbulent transport. Part 3. Mixing and differential molecular diffusion in round jets. J. Fluid Mech. 1990, 216, 411–435. [Google Scholar] [CrossRef]

- Klimenko, A.Y.; Pope, S.B. The modeling of turbulent reactive flows based on multiple mapping conditioning. Phys. Fluids 2003, 15, 1907–1925. [Google Scholar] [CrossRef]

- Wandel, A.P.; Lindstedt, R.P. A mixture-fraction-based hybrid binomial Langevin-multiple mapping conditioning model. Proc. Combust. Inst. 2019, 37, 2151–2158. [Google Scholar] [CrossRef]

- Perry, B.A.; Mueller, M.E. Joint probability density function models for multiscalar turbulent mixing. Combust. Flame 2018, 193, 344–362. [Google Scholar] [CrossRef]

- Gao, F.; O’Brien, E.E. A large-eddy simulation scheme for turbulent reacting flows. Phys. Fluids A 1993, 5, 1282–1284. [Google Scholar] [CrossRef]

- Colucci, P.J.; Jaberi, F.A.; Givi, P.; Pope, S.B. The filtered density function for large-eddy simulation of turbulent reactive flows. Phys. Fluids 1998, 10, 499–515. [Google Scholar] [CrossRef]

- Gicquel, L.Y.M.; Givi, P.; Jaberi, F.A.; Pope, S.B. Velocity filtered density function for large eddy simulation of turbulent flows. Phys. Fluids 2002, 14, 1196–1213. [Google Scholar] [CrossRef]

- Suciu, N.; Schüler, L.; Attinger, S.; Knabner, P. Towards a filtered density function approach for reactive transport in groundwater. Adv. Water Res. 2016, 90, 83–98. [Google Scholar] [CrossRef]

- Wacławczyk, M.; Pozorski, J.; Minier, J.-P. New molecular transport model for FDF/LES of turbulence with passive scalar. Flow Turbul. Combust. 2008, 81, 235–260. [Google Scholar] [CrossRef]

- Wacławczyk, M.; Pozorski, J.; Minier, J.-P. Filtered density function modelling of near-wall scalar transport with POD velocity modes. Int. J. Heat Fluid Flow 2009, 30, 77–87. [Google Scholar] [CrossRef]

- Pozorski, J.; Wacławczyk, T.; Łuniewski, M. LES of turbulent channel flow and heavy particle dispersion. J. Theor. Appl. Mech. 2007, 45, 643–657. [Google Scholar]

- Pozorski, J. Models of turbulent flows and particle dynamics. In Particles in Wall-Bounded Turbulent Flows: Deposition, Re-Suspension and Agglomeration; Minier, J.-P., Pozorski, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2017; pp. 97–150. [Google Scholar]

- Béghein, C.; Allery, C.; Wacławczyk, M.; Pozorski, J. Application of POD-based dynamical systems to dispersion and deposition of particles in turbulent channel flow. Int. J. Multiph. Flow 2014, 58, 97–113. [Google Scholar]

- Abade, G.C.; Grabowski, W.W.; Pawlowska, H. Broadening of cloud droplet spectra through eddy hopping: Turbulent entraining parcel simulations. J. Atmos. Sci. 2018, 75, 3365–3379. [Google Scholar] [CrossRef]

- Li, Z.; Banaeizadeh, A.; Jaberi, F.A. Two-phase filtered mass density function for LES of turbulent reacting flows. J. Fluid Mech. 2014, 760, 243–277. [Google Scholar] [CrossRef]

- De Frahan, H.M.T.; Yellapantula, S.; King, R.; Day, M.S.; Grout, R.W. Deep learning for presumed probability density function models. Combust. Flame 2019, 208, 436–450. [Google Scholar] [CrossRef]

- Raissi, M.; Babaee, H.; Givi, P. Deep learning of turbulent scalar mixing. Phys. Rev. Fluids 2019, 4, 124501. [Google Scholar] [CrossRef]

- Kajzer, A.; Pozorski, J. Application of the entropically damped artificial compressibility model to direct numerical simulation of turbulent channel flow. Comput. Math. Appl. 2018, 76, 997–1013. [Google Scholar] [CrossRef]

- Clay, M.P.; Buaria, D.; Yeung, P.K.; Gotoh, T. GPU acceleration of a petascale application for turbulent mixing at high Schmidt number using OpenMP 4.5. Comput. Phys. Commun. 2018, 228, 100–114. [Google Scholar] [CrossRef]

- Gaitan, F. Finding flows of a Navier–Stokes fluid through quantum computing. NPJ Quantum Inf. 2020, 6, 61. [Google Scholar] [CrossRef]

- Xu, G.; Daley, A.J.; Givi, P.; Somma, R.D. Turbulent mixing simulation via a quantum algorithm. AIAA J. 2018, 56, 1–13. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pozorski, J.; Wacławczyk, M. Mixing in Turbulent Flows: An Overview of Physics and Modelling. Processes 2020, 8, 1379. https://doi.org/10.3390/pr8111379