1. Introduction

The rapid advancement of artificial intelligence (AI) technologies has led to their growing prevalence in industrial production and everyday life [1]. In industrial heat treatment, especially for aluminum heating systems, temperature-control precision and fault-diagnosis capability are considered essential indicators of overall system performance. For temperature regulation, conventional methods based on a single proportional-integral-derivative (PID) algorithm, valued for its simple structure, easy tuning, and flexibility, have been widely implemented in diverse temperature-control systems. For fault diagnosis, temperature anomalies often arise under high-temperature conditions. In semiconductor manufacturing and related high-precision electronic processes in particular, temperature fluctuations can have a pronounced impact on equipment, greatly increasing the risk of device degradation or catastrophic failure. Accurate localization and diagnosis of temperature-related faults are therefore especially critical. Furthermore, prolonged heater operation may lead to anomalies such as open-circuit conditions, potentially causing abrupt temperature drops and system instability. By efficiently monitoring and diagnosing temperature anomalies, heater-related faults can be identified quickly, enabling appropriate measures to avert the risks associated with temperature excursions [2,3,4,5].

A multi-point aluminum-block heating platform typically requires consistent temperature regulation. However, the plant's nonlinearity, long response time, and substantial time delay considerably complicate temperature control [6]. Conventional PID control algorithms frequently fail to achieve the necessary precision and responsiveness under complex operating conditions. Researchers are therefore increasingly using system identification methods to model the plant and improve control strategies for better temperature precision. The step-response method, an effective and commonly used system-identification technique, has been extensively employed in modeling temperature-control systems [7].

Once a mathematical model of the plant is available, researchers have introduced diverse methods to refine traditional PID control algorithms and improve the performance of temperature-control systems. Lam et al. [8] used fuzzy logic to optimize PID, reducing temperature overshoot from 3 °C to 1 °C, constraining the steady-state error to ±0.5 °C, and attaining an overall accuracy of ±2 °C. Machado et al. [9] proposed a distributed framework that combines various control strategies to ensure stable regulation of temperature and energy-storage capacity in multi-production-area heating systems. Ohnishi et al. [10] improved temperature-control precision and reduced energy consumption in semiconductor manufacturing by integrating virtually combined heaters and coolers with feedforward–feedback composite control and a frequency-domain-optimized PID. Wang et al. [11] integrated PID control with a state machine that dynamically switches the control strategy according to the temperature deviation, markedly improving the performance of thermoelectric cooling (TEC) systems. Zhao et al. [12] combined a Smith predictor, residual-integral prediction, and fuzzy adaptive PID to achieve fast, precise temperature regulation with minimal overshoot. Yu et al. [13] matched the temperature coefficients of the PID and the AC bridge circuit to achieve ultra-precise temperature regulation of a superconducting gravimeter's vacuum chamber. Despite these advances, most PID-related studies rely on fixed or slowly updated parameters and a single-loop view of the plant, which degrades performance under the time-varying heat loads, strong thermal coupling across multiple points, and long dead time typical of aluminum-block heating.

In the fault diagnosis of a multi-point aluminum-block heating platform, conventional methods predominantly depend on threshold alarms and expert systems, which detect heater disconnection faults by setting fixed temperature-fluctuation ranges [14]. In aluminum-block heating platforms characterized by significant nonlinearity and high thermal inertia, temperature signals frequently exhibit dynamic hysteresis, making fixed-threshold methods prone to false and missed alarms.

In response, researchers have begun investigating various data-driven diagnostic techniques. Jin et al. [15] proposed a model- and data-driven fault diagnosis method capable of concurrently identifying lithium-battery sensor faults and internal-resistance faults. Zhang [16] used on-state voltage measurement and state-detection methods to diagnose open-circuit faults in three-level T-type inverters. Dong [17] performed intelligent identification of multi-sensor faults in high-speed train traction converters using long short-term memory (LSTM) networks. Sun [18] integrated DFD with local outlier factor (LOF) anomaly detection to enable multidimensional dynamic monitoring of lithium-battery voltage and temperature. Wang et al. [19] combined Mask R-CNN instance segmentation with temperature-rule analysis to enable automatic diagnosis of multiple insulators in infrared images. Piardi [20] proposed a collaborative diagnosis method for cyber-physical systems (CPS) based on a multi-agent system, improving overall fault-identification performance. Schmid et al. [21] detected and localized faults through data-driven principal component analysis (PCA). Cheng [22] used the DSFA-BRB algorithm, which integrates deep slow feature analysis with a belief rule base, to identify faults in high-speed rail operating mechanisms. Jin [23] achieved robust estimation of sensor faults through adaptive Kalman filtering. Li [24] fused voltage, current, and temperature data and applied the MMSE method to diagnose early internal short circuits in lithium batteries. Qiu [25] integrated TRNSYS simulation with state-space models to develop an analytical model-based fault diagnosis framework.

To address inadequate control precision and slow fault diagnosis in multi-point aluminum-block heating temperature-control platforms operating under complex conditions [26], recent work has increasingly applied intelligent algorithms in industrial systems, including predictive modeling [27], improvements to comfort indices [28], and optimization of critical stages such as data computation and compression [29,30,31,32,33]. With the emergence of Industry 4.0, digital twin platforms have become a new framework for real-time monitoring and collaborative optimization.

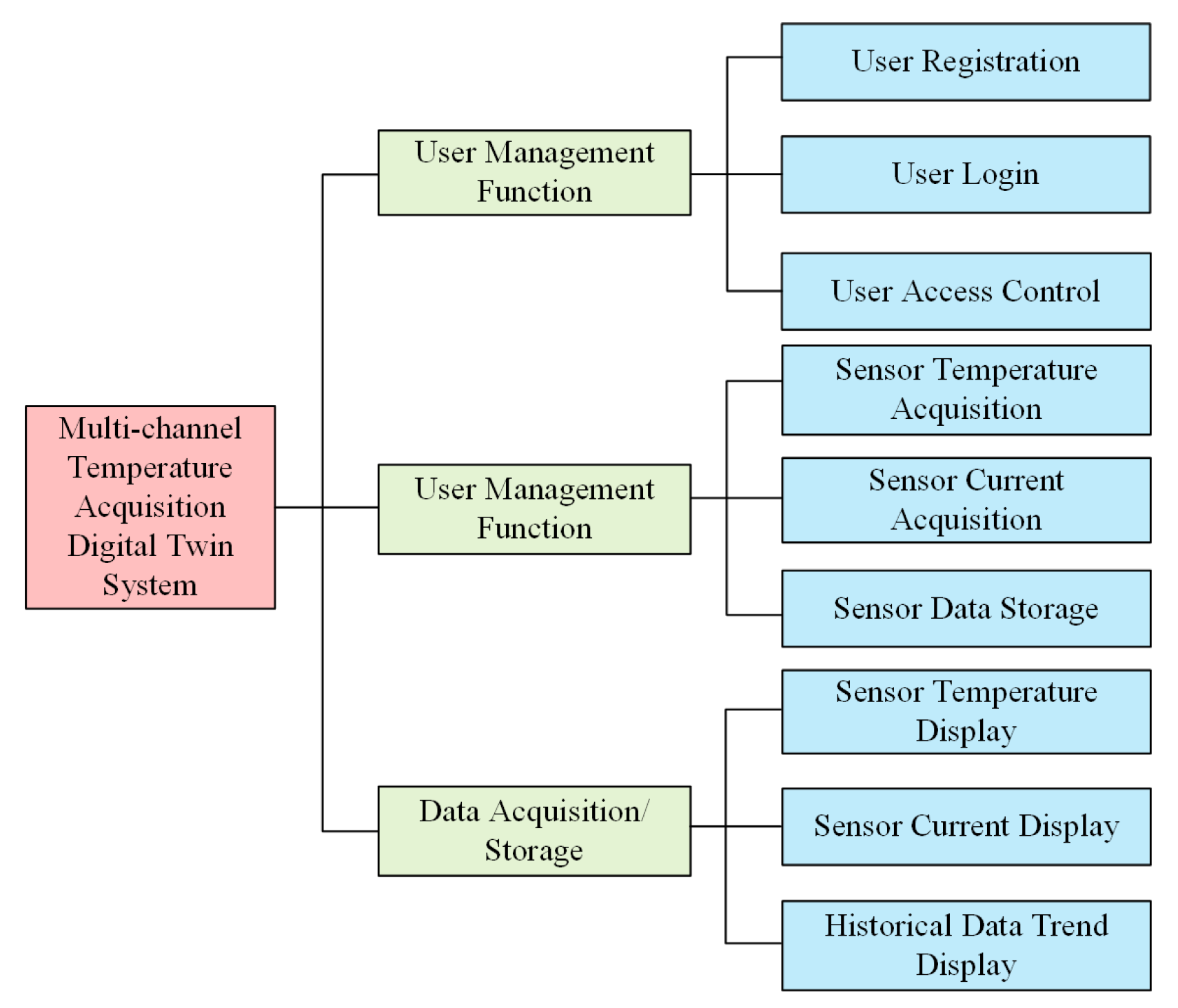

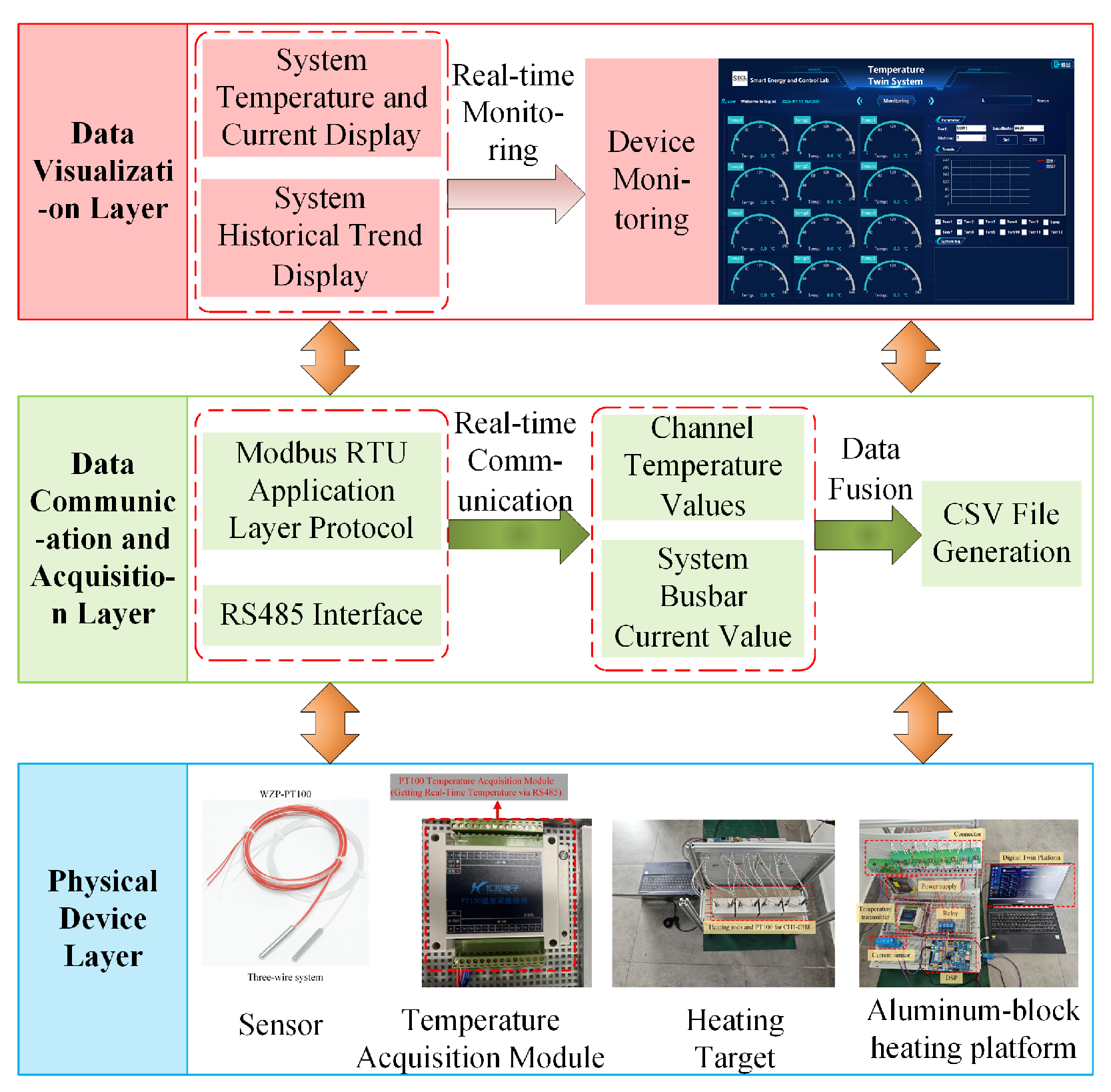

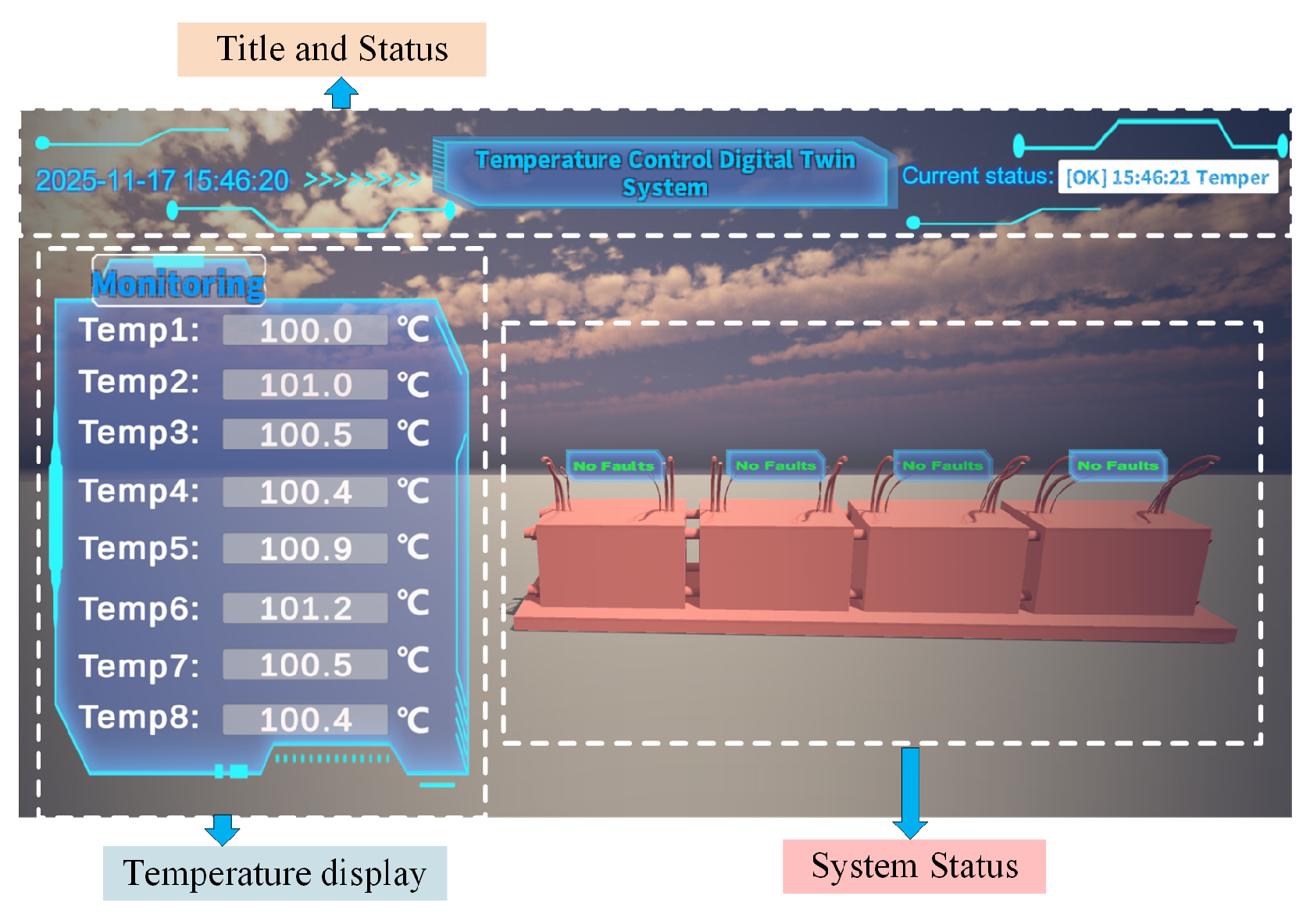

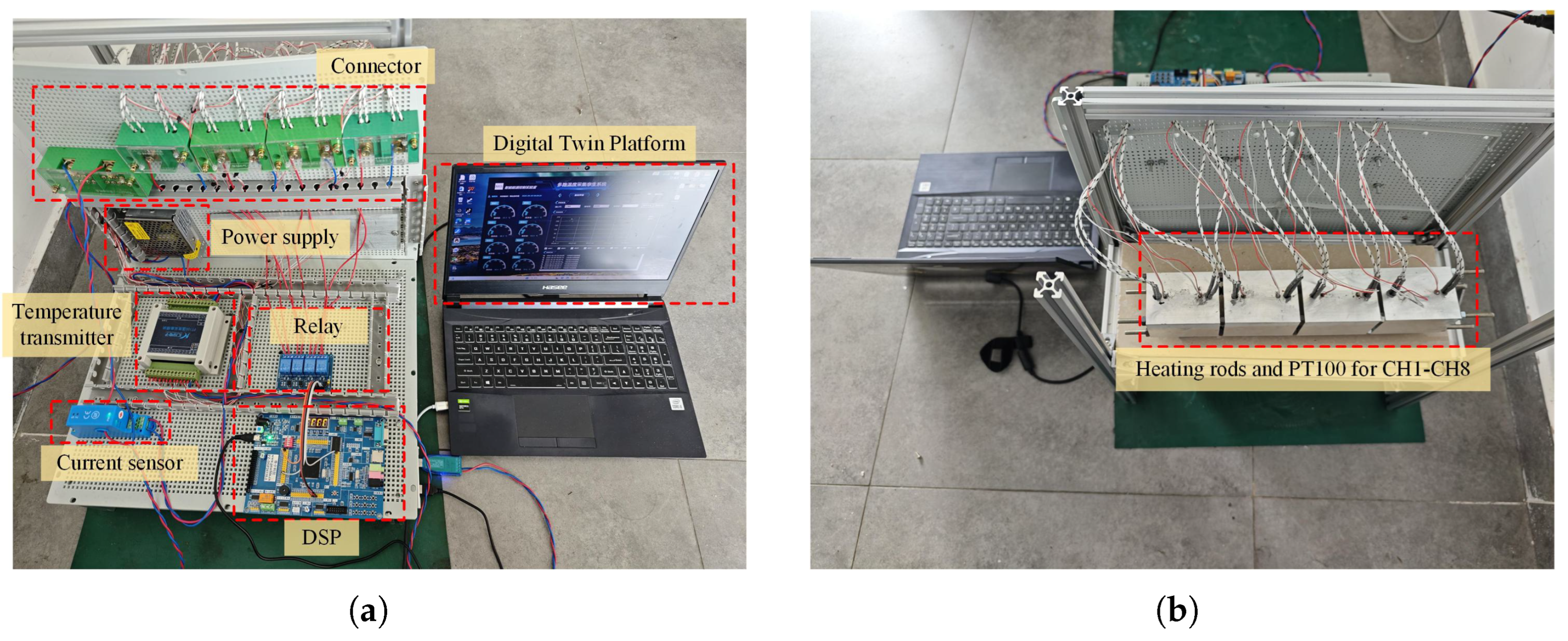

This study combines temperature regulation and fault diagnosis in a dual-AI-driven approach. On the one hand, a Levenberg–Marquardt-optimized back-propagation (LM-BP) neural-network controller and a PID controller are implemented on the physical control unit to regulate the temperature. On the other hand, the proposed cyber-physical monitoring platform synchronizes real-time temperature and current measurements with a virtual representation for visualization, data logging, and offline analysis. The collected time-series data are further used to construct high-quality spatiotemporal datasets for benchmarking one-dimensional convolutional neural networks (1D-CNNs) and other learning-based models in the fast detection and localization of heater-channel anomalies. The main contributions of this article are summarized as follows:

1. A digital-twin-inspired monitoring framework is developed to synchronize multi-source measurements with a virtual representation, supporting real-time visualization, safe data collection, and offline what-if analysis for intelligent heating processes.
2. A dynamic interaction strategy combining an LM-optimized BP neural network with conventionally tuned PID control is proposed, enabling adaptive online learning and precise temperature regulation in nonlinear multi-point heating systems with large time constants and large time delays.
3. A relay-injected open-circuit fault mechanism and a spatiotemporal dataset construction scheme are developed. Using these datasets, a 1D-CNN-based diagnostic model achieves fast detection and channel-level localization of channel anomalies induced by heater open circuits.

The rest of this article is organized as follows. Sections 2 and 3 introduce the digital-twin-inspired monitoring system and the temperature-control algorithms in detail. Section 4 discusses the fault diagnosis methodology and compares it with alternative approaches, while Section 5 presents the simulation setup and experimental validation on the multi-point aluminum-block heating platform. Finally, Section 6 concludes this article.

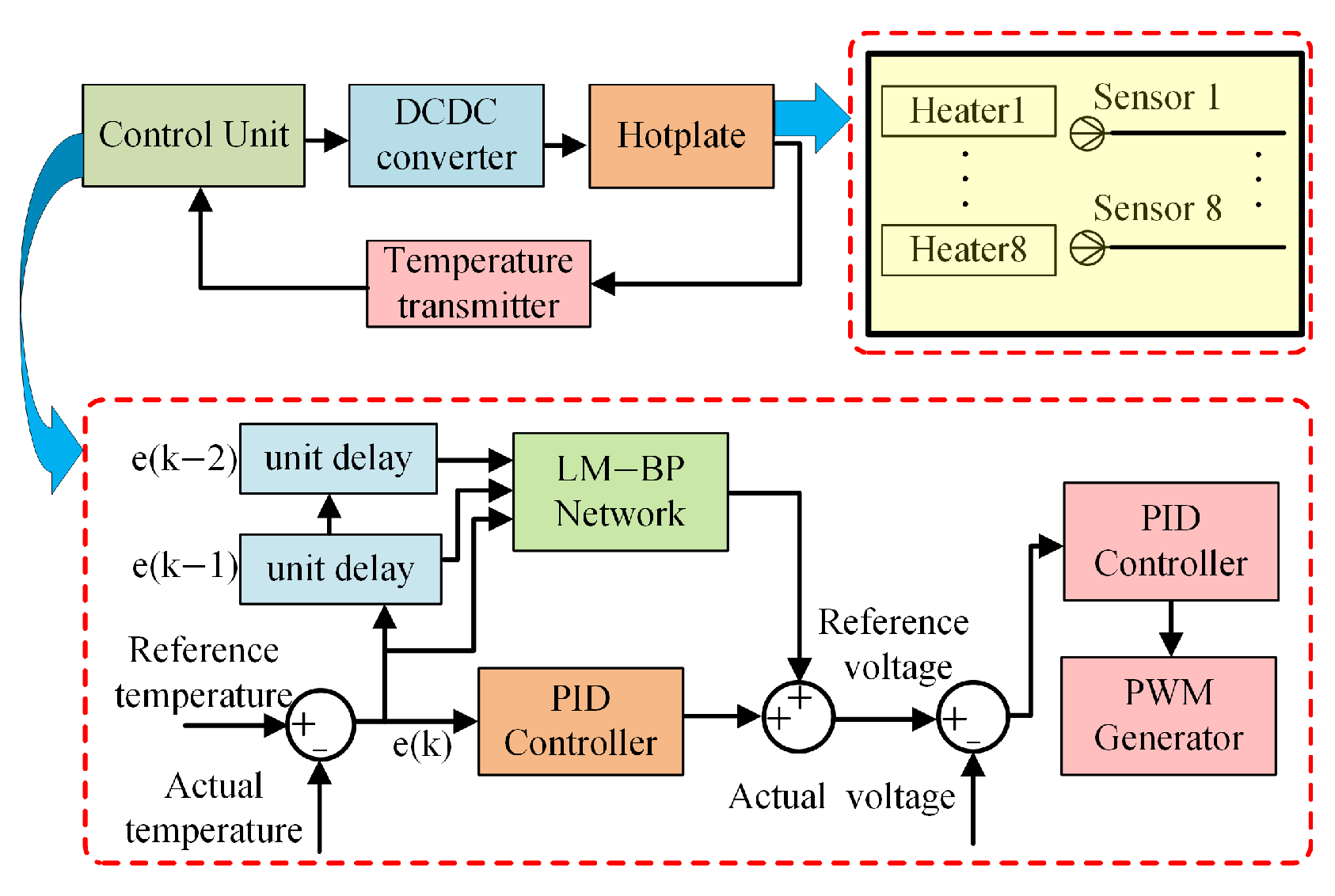

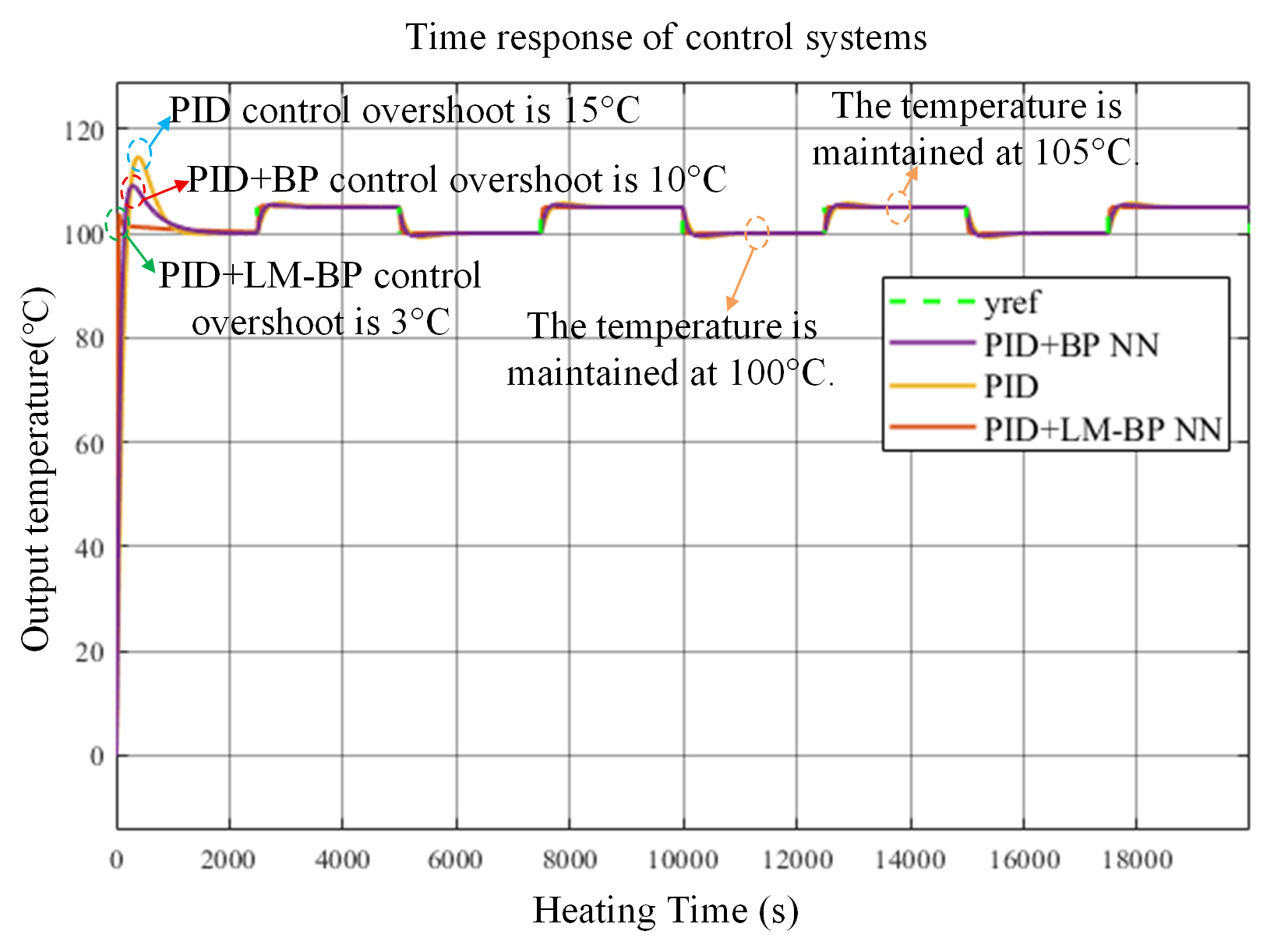

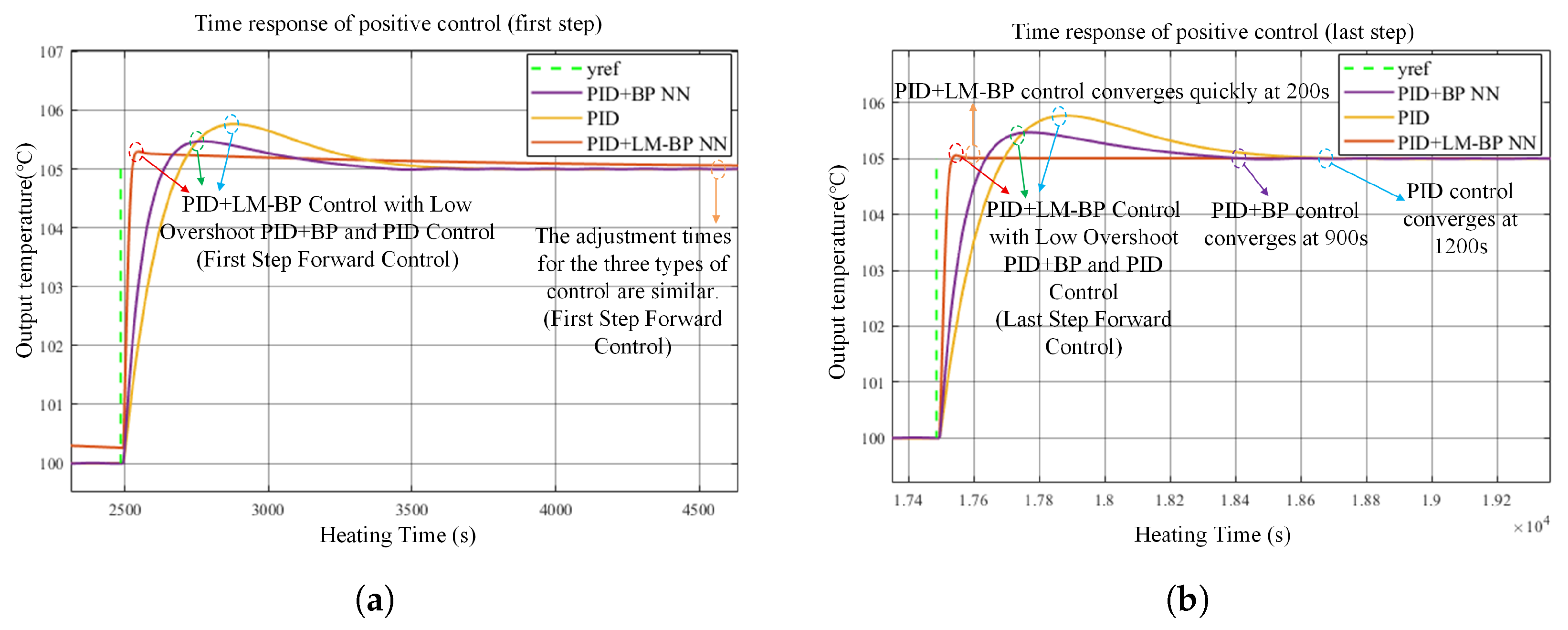

3. High-Precision Temperature Control System Based on LM-Optimized BP Neural Network

The digital-twin-inspired monitoring system provides real-time feedback on temperature fluctuations and supports the prediction of operating conditions and the evaluation of optimization adjustments in a simulated environment. Through continuous monitoring and data collection, it supports real-time temperature regulation. This study combines a BP neural network trained with the Levenberg–Marquardt algorithm and a PID controller to achieve high-precision temperature control.

Figure 4 illustrates a comprehensive block diagram of the temperature control system for the multi-point aluminum block heating platform.

The complete block diagram of the control system comprises a control module, a power-conversion module, and a controlled-object module with temperature feedback. The system uses eight low-voltage heating rods to heat the aluminum block. The control scheme adopts a two-loop strategy, with a temperature outer loop and a voltage inner loop. The system acquires real-time temperature data using a three-wire platinum resistance thermometer (RTD) and a temperature transmitter, compares the measurement with the setpoint, and generates a target voltage signal through the control algorithm. The target voltage is then compared with the measured voltage; the inner-loop PID controller processes this error, and its output drives the PWM generator to produce the required PWM waveform. The PWM signal controls the power switching device that sets the input voltage of the heating rods, thereby achieving the desired temperature.

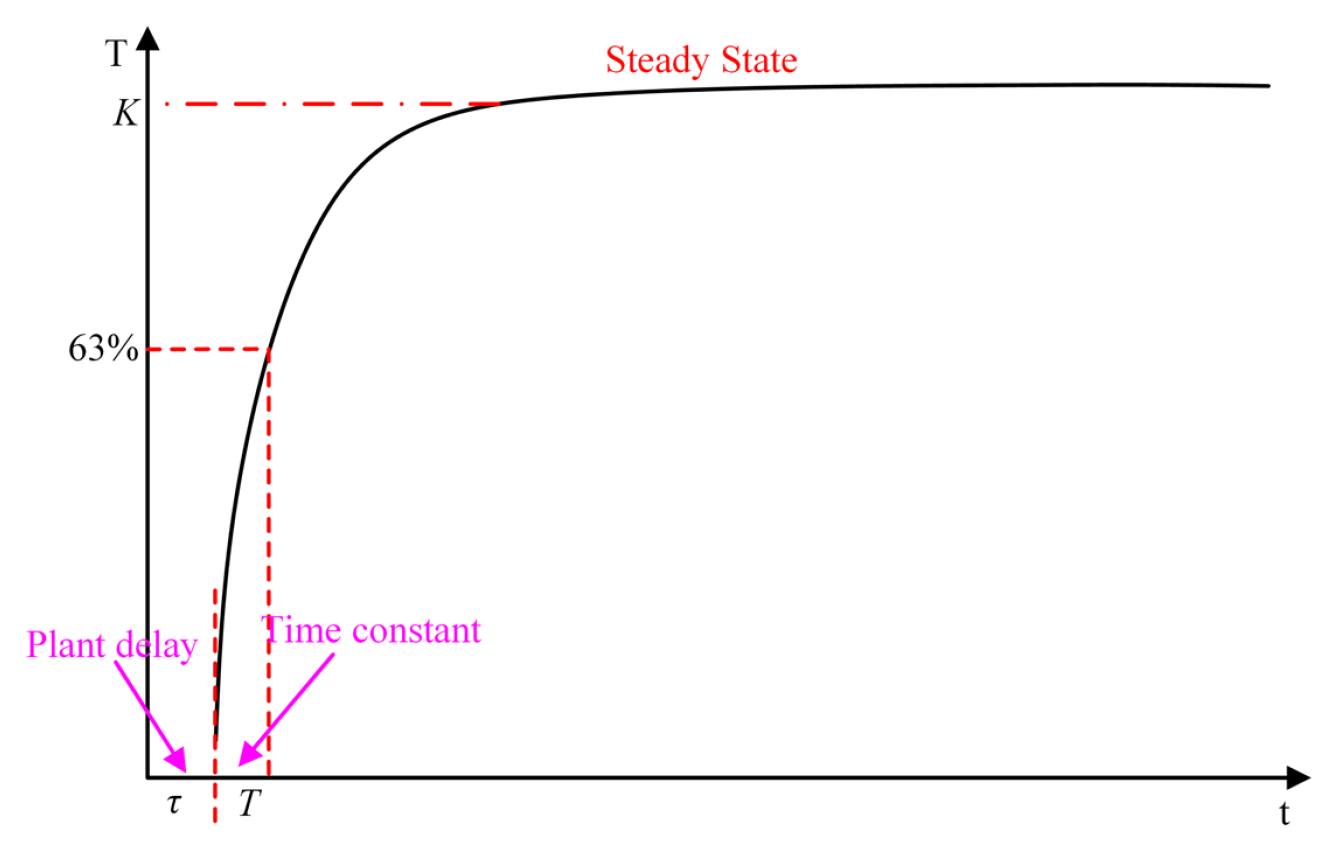

3.1. Controlled Object with Time Delay

The controlled object is a thermal processing system that can be modeled as a first-order plus time-delay (FOPTD) system. Its transfer function is given in Equation (1), where $K$ is the steady-state gain relating output temperature to input current, $T$ is the plant time constant, and $\tau$ is the response delay.

$$G(s) = \frac{K e^{-\tau s}}{T s + 1} \tag{1}$$

When a step input of magnitude $A$ is applied to the system, the output response, measured relative to its initial value, is given by Equation (2):

$$y(t) = \begin{cases} 0, & t < \tau, \\ K A \left(1 - e^{-(t-\tau)/T}\right), & t \ge \tau. \end{cases} \tag{2}$$

The time-domain response $y(t)$ can be divided into two stages. The first stage is a pure delay, during which the system has not yet begun to respond and the output remains at its initial value. The second stage is a first-order rise: after the delay, the output follows a first-order exponential transient and approaches its steady-state value $KA$ (i.e., $K$ for a unit step). The step response of the system is shown in Figure 5.
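The step-response identification mentioned above can be illustrated with a short NumPy sketch. It simulates Equation (2) for hypothetical parameters ($K = 2.5$, $T = 600$ s, $\tau = 120$ s, $A = 10$) and then recovers $K$, $T$, and $\tau$ with the classical two-point method, which uses the instants at which the response reaches 28.3% and 63.2% of its final value (for an FOPTD plant, $\tau + T/3$ and $\tau + T$). This is one common variant of the step-response method, assumed here for illustration; the function names are hypothetical and the study does not state which identification formulas it used.

```python
import numpy as np


def foptd_step(t, K, T, tau, A=1.0):
    """Step response of K*exp(-tau*s)/(T*s + 1) to a step of magnitude A (Eq. 2)."""
    t = np.asarray(t, dtype=float)
    rise = K * A * (1.0 - np.exp(-(t - tau) / T))
    return np.where(t < tau, 0.0, rise)  # pure delay, then first-order rise


def identify_two_point(t, y, A=1.0):
    """Estimate (K, T, tau) from a settled, noise-free step response.

    For an FOPTD plant, y reaches 28.3% of its final value at tau + T/3
    and 63.2% at tau + T.
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    y_inf = y[-1]                      # assumes the record has reached steady state
    K = y_inf / A
    # np.interp needs y to be non-decreasing, which holds for a clean step response
    t1 = np.interp((1.0 - np.exp(-1.0 / 3.0)) * y_inf, y, t)
    t2 = np.interp((1.0 - np.exp(-1.0)) * y_inf, y, t)
    T = 1.5 * (t2 - t1)                # t2 - t1 = 2T/3
    tau = t2 - T
    return K, T, tau


# Hypothetical plant sampled at 1 s for long enough to settle
t = np.arange(0.0, 8000.0, 1.0)
y = foptd_step(t, K=2.5, T=600.0, tau=120.0, A=10.0)
K_hat, T_hat, tau_hat = identify_two_point(t, y, A=10.0)
print(f"K={K_hat:.3f}, T={T_hat:.1f} s, tau={tau_hat:.1f} s")
```

With measured RTD data, the record would normally be filtered first, because sensor noise breaks the monotonicity that the interpolation step relies on.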

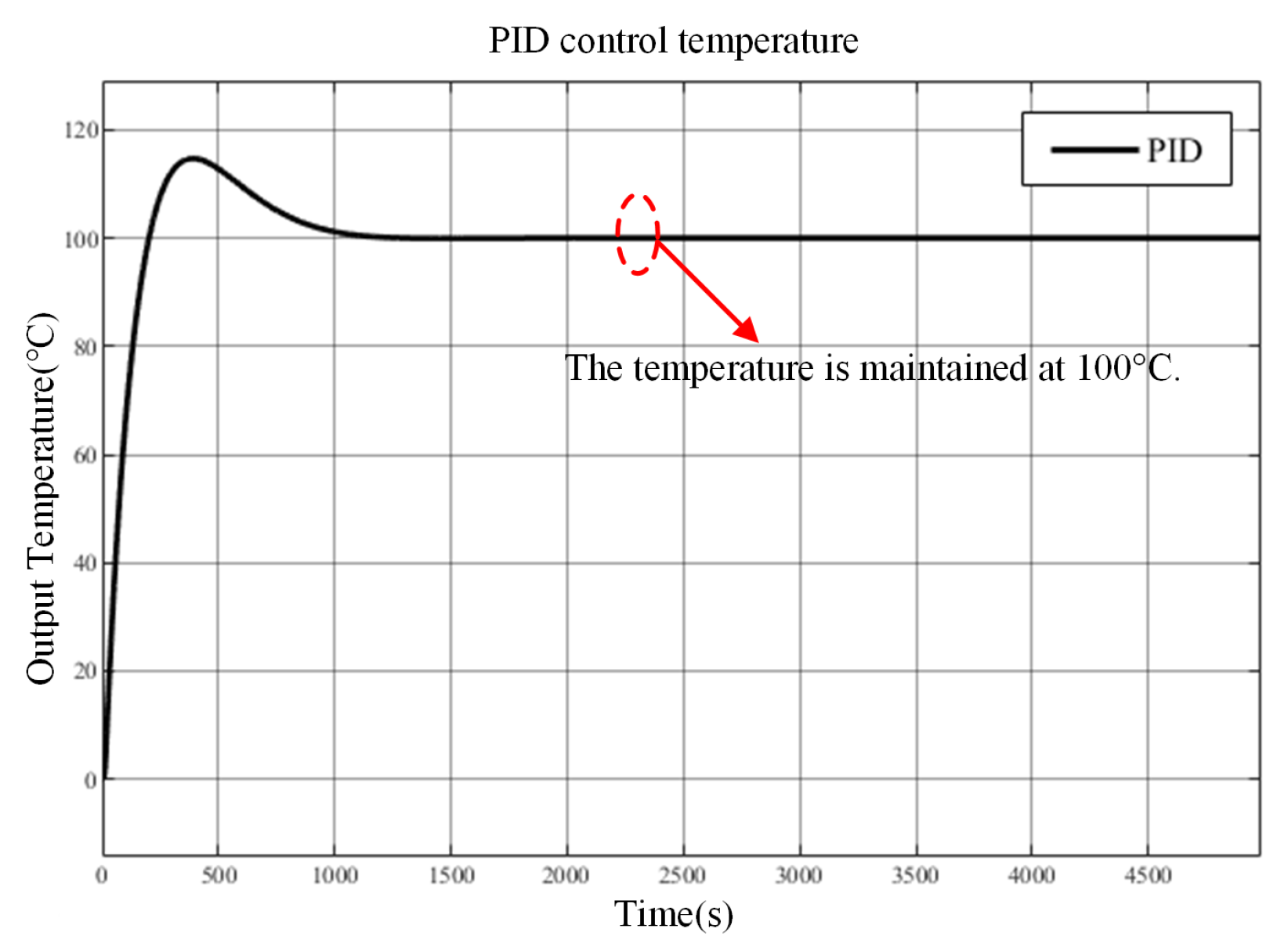

3.2. Traditional PID Control

During the startup phase, the PID controller regulates the system, because the neural network needs time to learn and for its weights to stabilize; once training has converged, the neural network controller takes over the principal control role. The fundamental PID control law is expressed in Equation (3), where $K_p$ denotes the proportional gain, $T_i$ the integral time constant, and $T_d$ the derivative time constant.

$$u(t) = K_p\left[e(t) + \frac{1}{T_i}\int_0^t e(\sigma)\,d\sigma + T_d\,\frac{de(t)}{dt}\right] \tag{3}$$
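A minimal discrete-time counterpart of Equation (3) is sketched below. It assumes a fixed sampling period $T_s$, rectangular integration, a backward-difference derivative, and simple clamping anti-windup; these discretization choices and the class name `DiscretePID` are illustrative assumptions, not details taken from the study.

```python
from dataclasses import dataclass, field


@dataclass
class DiscretePID:
    """Positional discrete form of u = Kp*(e + (1/Ti)*integral(e) + Td*de/dt)."""
    Kp: float
    Ti: float                 # integral time constant (s)
    Td: float                 # derivative time constant (s)
    Ts: float                 # sampling period (s)
    u_min: float = float("-inf")
    u_max: float = float("inf")
    _integral: float = field(default=0.0, init=False, repr=False)
    _e_prev: float = field(default=0.0, init=False, repr=False)

    def step(self, e: float) -> float:
        """Return the saturated control output for the current error e(k)."""
        integral = self._integral + e * self.Ts            # rectangular integration
        derivative = (e - self._e_prev) / self.Ts          # backward difference
        self._e_prev = e
        u = self.Kp * (e + integral / self.Ti + self.Td * derivative)
        u_sat = min(max(u, self.u_min), self.u_max)
        if u == u_sat:
            # Clamping anti-windup: keep the new integral only when not saturated
            self._integral = integral
        return u_sat


pid = DiscretePID(Kp=2.0, Ti=10.0, Td=0.5, Ts=1.0)
u1 = pid.step(1.0)   # proportional + integral + derivative kick
u2 = pid.step(1.0)   # derivative term vanishes for a constant error
```

The output limits would correspond to the admissible range of the downstream actuator command; they default to unbounded here.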

Stability is an essential requirement in control systems. A PID controller designed via the step-response method can be tuned to yield a stable closed loop for the identified plant model. The PID parameters $K_p$, $T_i$, and $T_d$ are derived from $\tau$, $K$, and $T$ in Equation (1) using the formulas given in Equations (4)–(6). The PID tuning parameters adopted in this study and the key simulation constraints are summarized in Table 1.
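Equations (4)–(6) are not reproduced in this text. To illustrate how $(K, T, \tau)$ can be mapped to $(K_p, T_i, T_d)$, the sketch below uses the classical Ziegler–Nichols open-loop (reaction-curve) rule for a PID controller, $K_p = 1.2T/(K\tau)$, $T_i = 2\tau$, $T_d = 0.5\tau$. This rule and the function name are assumptions for illustration and may differ from the formulas actually used in the study.

```python
def ziegler_nichols_pid(K: float, T: float, tau: float) -> tuple[float, float, float]:
    """Classical Ziegler-Nichols reaction-curve PID settings for an FOPTD model.

    Returns (Kp, Ti, Td) for the ideal PID form of Equation (3).
    """
    if K == 0.0:
        raise ValueError("process gain K must be non-zero")
    if T <= 0.0 or tau <= 0.0:
        raise ValueError("time constant T and delay tau must be positive")
    Kp = 1.2 * T / (K * tau)
    Ti = 2.0 * tau
    Td = 0.5 * tau
    return Kp, Ti, Td


# Hypothetical identified model: K = 2.5, T = 600 s, tau = 120 s
Kp, Ti, Td = ziegler_nichols_pid(2.5, 600.0, 120.0)   # Kp about 2.4, Ti = 240 s, Td = 60 s
```

Ziegler–Nichols settings are known to be fairly aggressive (roughly quarter-amplitude decay), so in practice they are often detuned before deployment.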

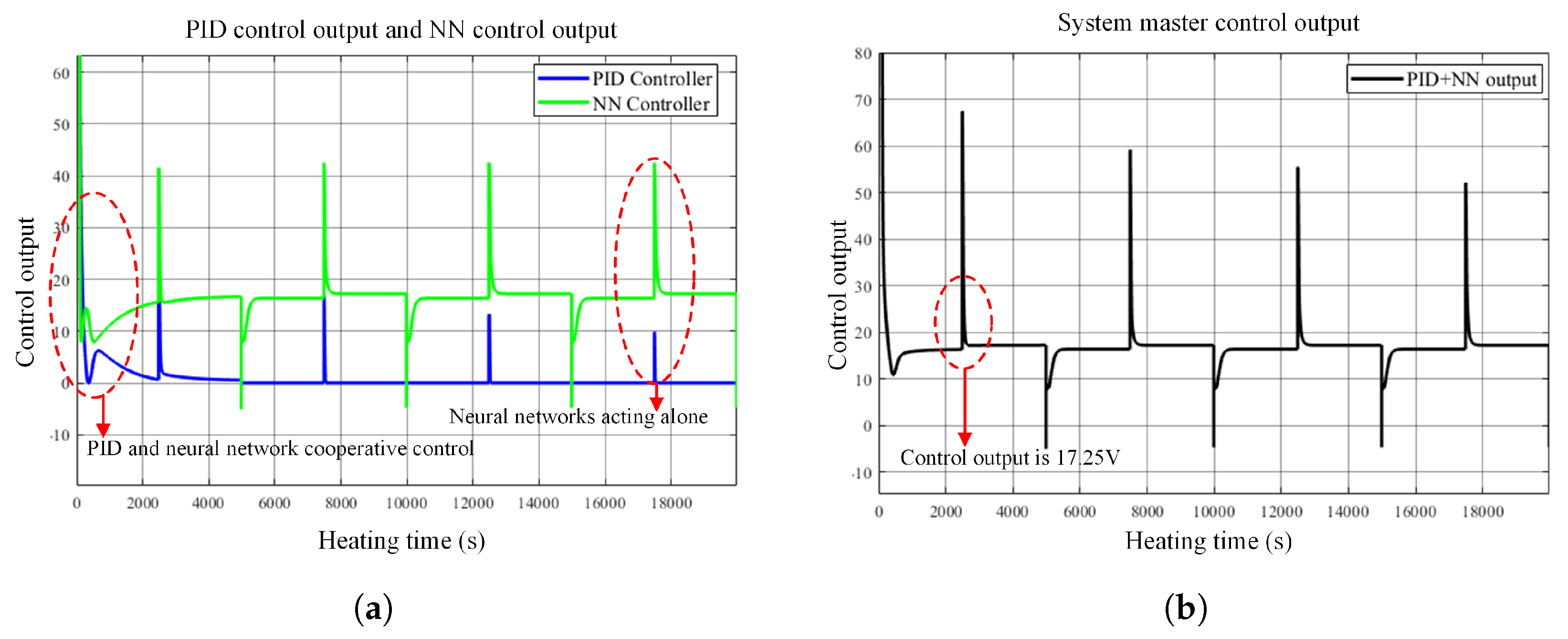

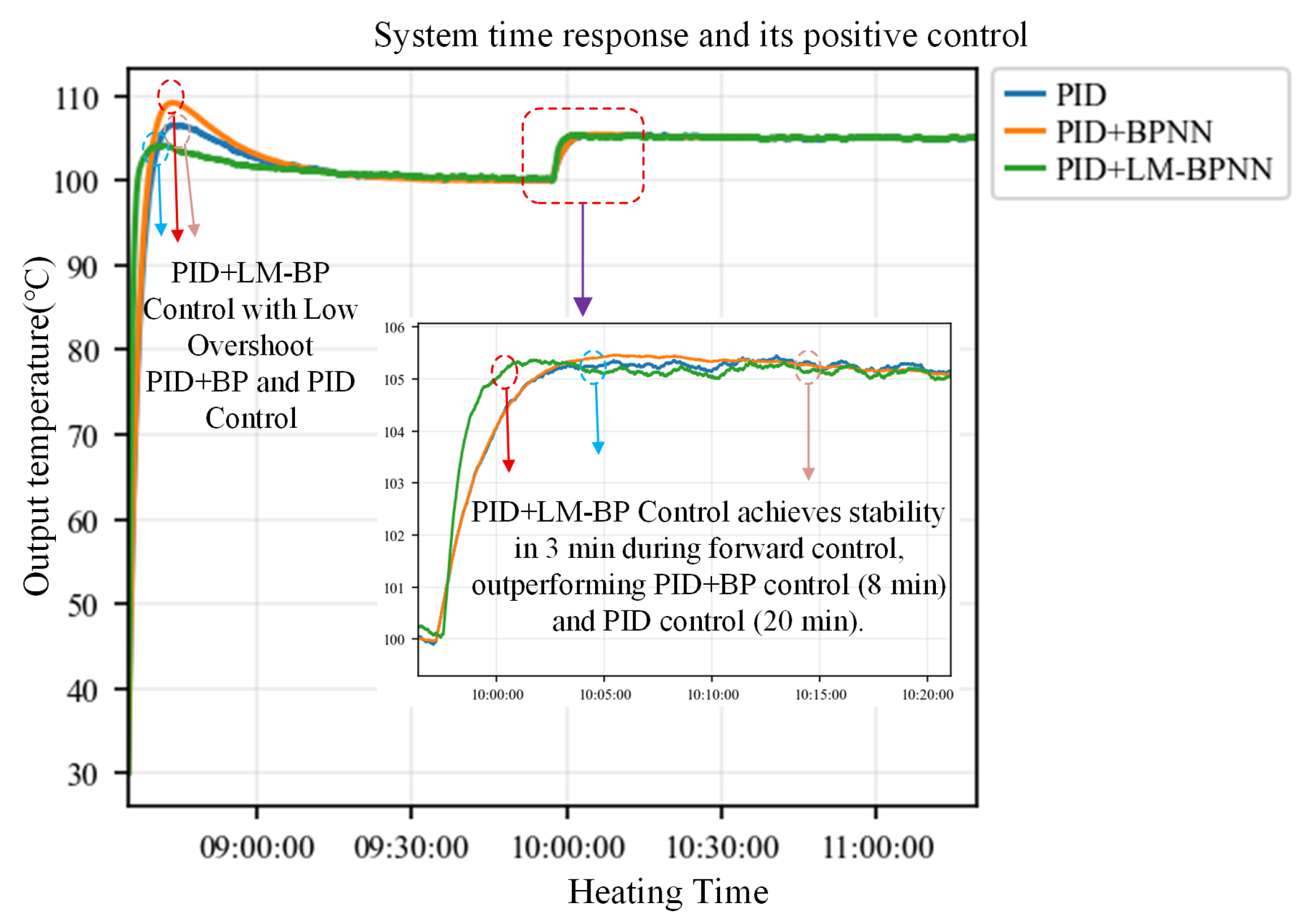

Nonetheless, because of the plant's nonlinearity and significant time delay, a PID controller alone yields suboptimal control performance. A neural network is therefore added to improve temperature regulation and overall control performance. In this study, the final control command is the direct sum of the two controller outputs, $u(k) = u_{\mathrm{PID}}(k) + u_{\mathrm{NN}}(k)$. Initially, the PID output provides a robust baseline, while the neural-network output is continuously refined through Levenberg–Marquardt-based online updating. As the neural network improves its compensation capability and reduces the tracking error, the magnitude of the PID correction required to maintain performance decreases correspondingly.

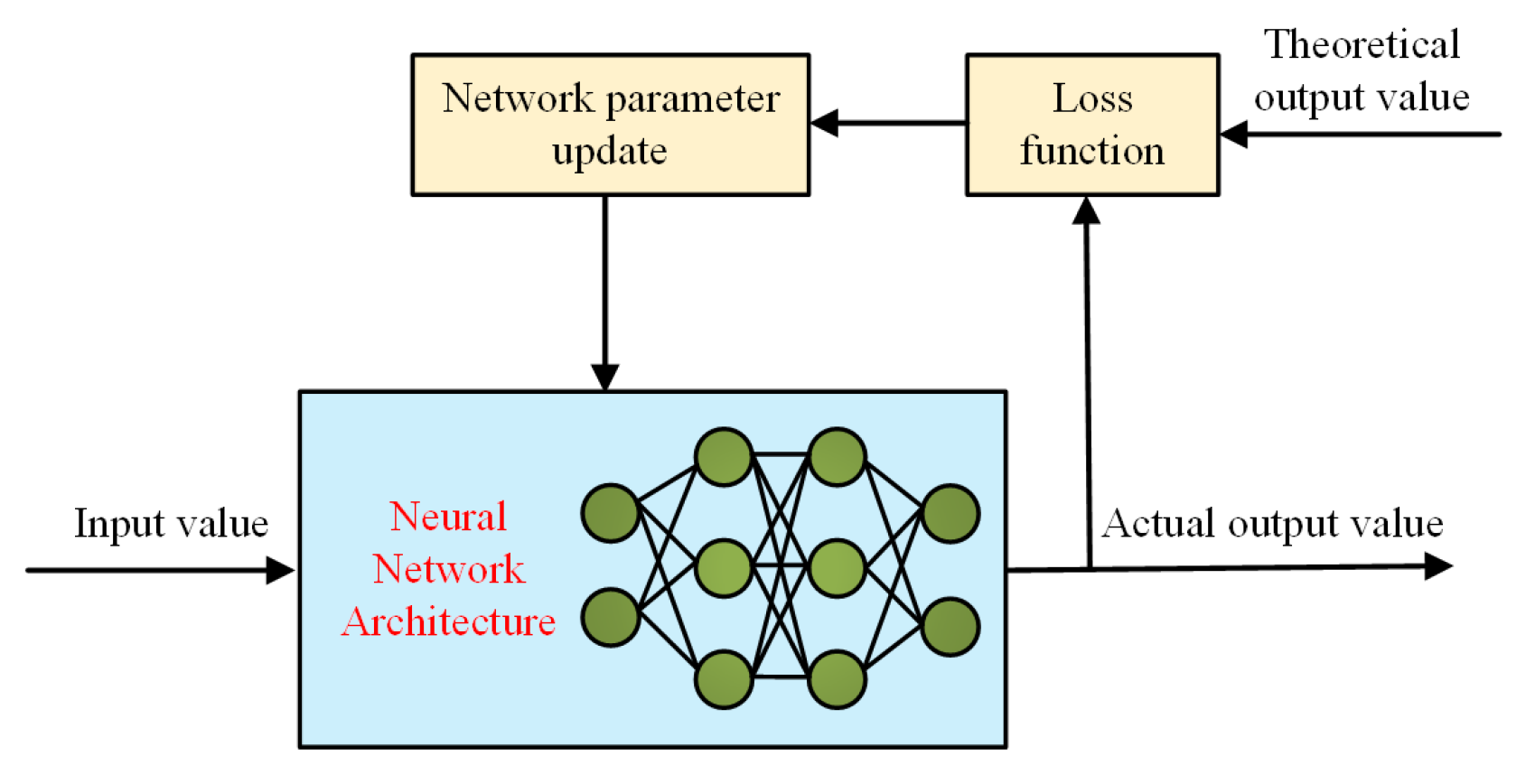

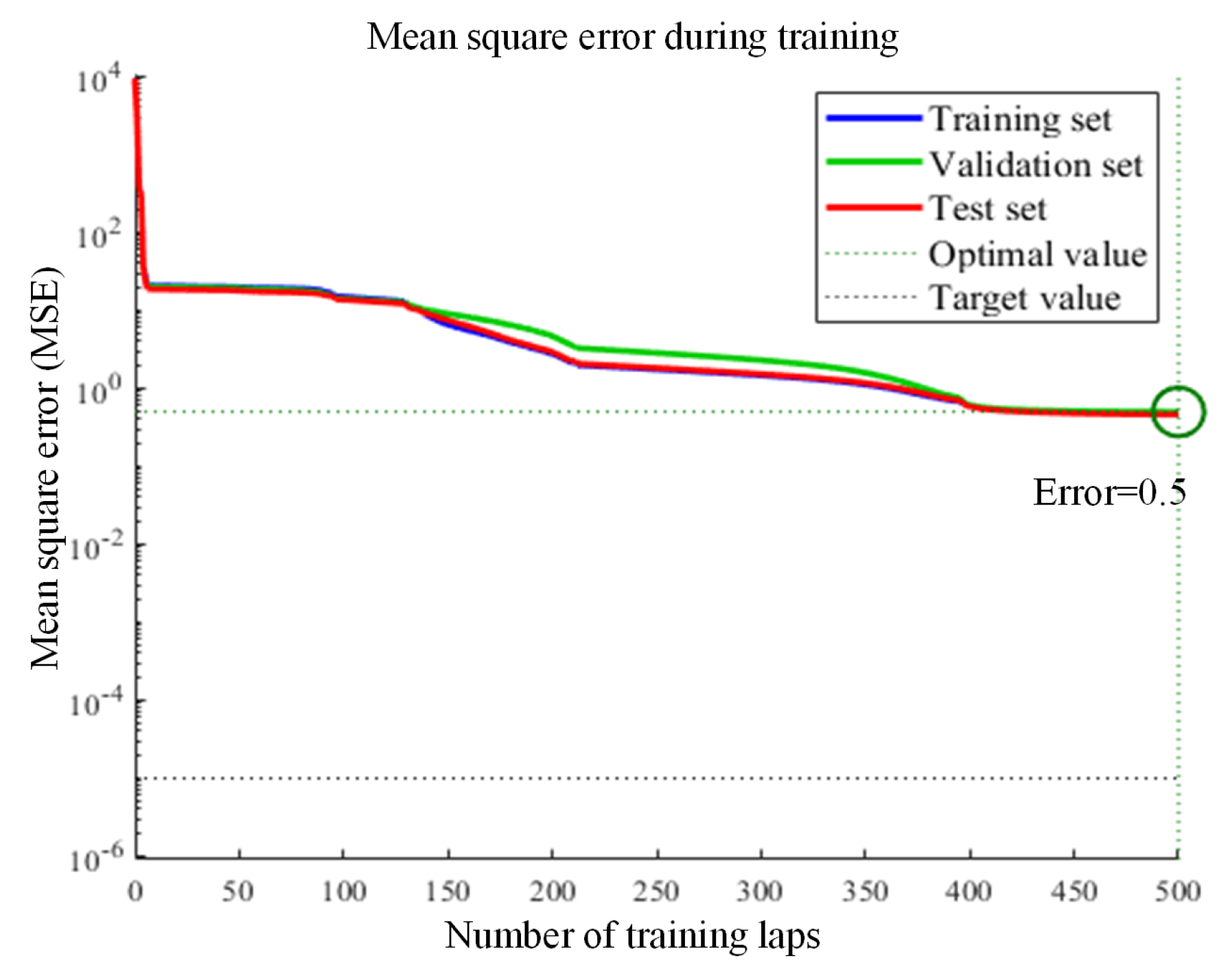

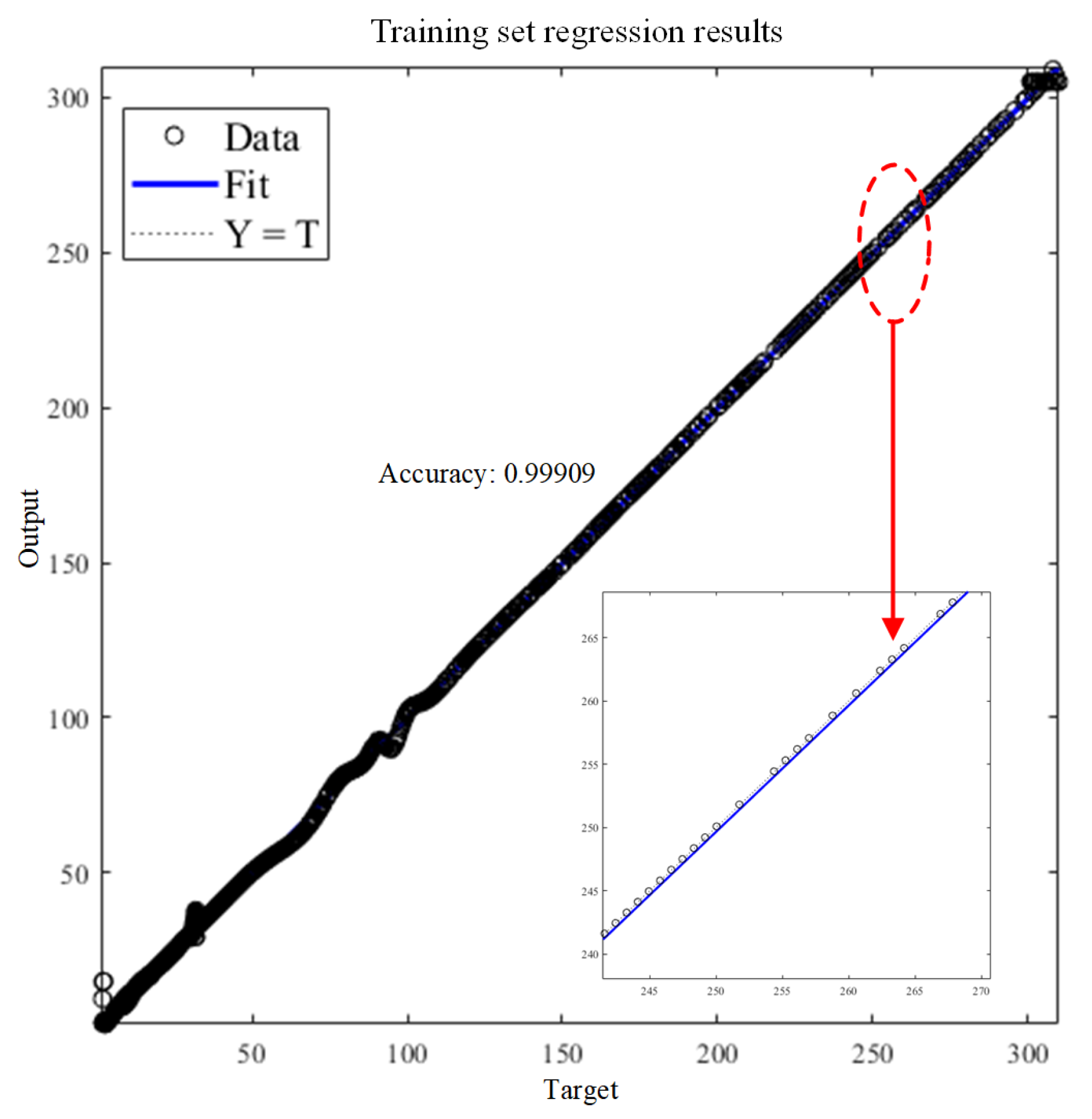

3.3. BP Neural Network

Artificial neural networks (ANNs) are machine learning models inspired by the behavior of biological neurons. By loosely emulating the information processing of the human brain, they enable modeling and decision-making on complex datasets. The BP neural network is a standard ANN architecture, typically consisting of an input layer, one or more hidden layers, and an output layer. Neurons transform signals through activation functions. Training generally involves forward propagation, error calculation, and backward propagation. Backpropagation is fundamental to BP networks: the gradients of a loss function with respect to the network parameters are computed and used by gradient descent to optimize the network.

Figure 6 illustrates the fundamental architecture of a BP neural network. These networks can approximate nonlinear relationships. The loss function is derived from the difference between the predicted outputs and the target outputs. The weights and biases are updated to minimize the loss by calculating the partial derivatives of the loss with respect to the parameters.

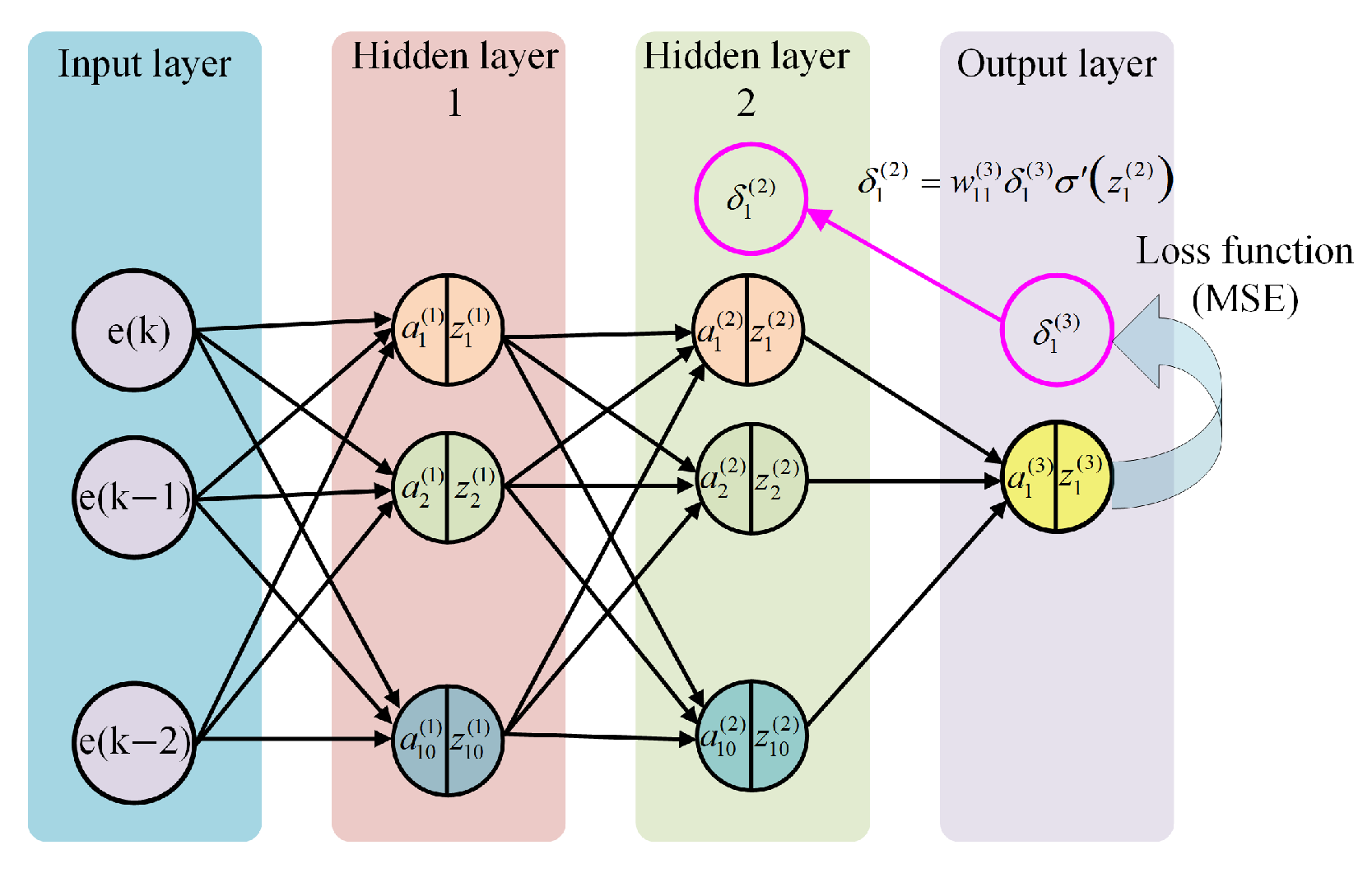

To achieve high-precision temperature control, a multilayer BP neural network is introduced alongside the PID controller. The network architecture, shown in Figure 7, consists of one input layer, two hidden layers, and one output layer; each hidden layer contains 10 neurons. The inputs to the network are the current temperature-tracking error $e(k) = r(k) - y(k)$ and the two lagged errors $e(k-1)$ and $e(k-2)$, where $r(k)$ denotes the temperature setpoint and $y(k)$ is the measured temperature.

3.3.1. BP Neural Network Forward Propagation

The BP neural network takes the error signals $e(k)$, $e(k-1)$, and $e(k-2)$ from a conventional PID temperature-control system as inputs and uses the PID controller output as the target signal. The hidden layers use the hyperbolic tangent (tanh) activation function, and the output layer is linear. The weight and bias matrices from the input layer to the first hidden layer are given in Equation (7), and the input vector and element-wise computations in Equations (8) and (9). The weight and bias matrices from the first to the second hidden layer, with the corresponding element-wise computations, are given in Equations (10) and (11), and those from the second hidden layer to the output layer in Equations (12) and (13). In compact vector form, with $W^{(l)}$ and $\mathbf{b}^{(l)}$ the weight matrix and bias vector of layer $l$, the forward pass reads

$$\begin{aligned}
\mathbf{x} &= \left[e(k),\ e(k-1),\ e(k-2)\right]^{\top},\\
\mathbf{h}^{(1)} &= \tanh\!\left(W^{(1)}\mathbf{x} + \mathbf{b}^{(1)}\right),\\
\mathbf{h}^{(2)} &= \tanh\!\left(W^{(2)}\mathbf{h}^{(1)} + \mathbf{b}^{(2)}\right),\\
o &= W^{(3)}\mathbf{h}^{(2)} + \mathbf{b}^{(3)}.
\end{aligned}$$

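In these expressions, $W^{(1)}$, $W^{(2)}$, and $W^{(3)}$ denote the weight matrices for layers 1–3, and $\mathbf{b}^{(1)}$, $\mathbf{b}^{(2)}$, and $\mathbf{b}^{(3)}$ denote the corresponding bias vectors. Specifically, the BP network consists of an input layer, two hidden layers, and an output layer. Let $n_0$ be the input dimension, and let $n_1$ and $n_2$ be the numbers of neurons in the first and second hidden layers, respectively (here $n_0 = 3$ and $n_1 = n_2 = 10$). Then $W^{(1)} \in \mathbb{R}^{n_1 \times n_0}$ and $\mathbf{b}^{(1)} \in \mathbb{R}^{n_1}$ map the input layer to the first hidden layer, $W^{(2)} \in \mathbb{R}^{n_2 \times n_1}$ and $\mathbf{b}^{(2)} \in \mathbb{R}^{n_2}$ map the first hidden layer to the second hidden layer, and $W^{(3)} \in \mathbb{R}^{1 \times n_2}$ and $\mathbf{b}^{(3)} \in \mathbb{R}$ map the second hidden layer to the output layer. The element $w^{(l)}_{ij}$ denotes the weight connecting the $j$-th neuron in layer $l-1$ to the $i$-th neuron in layer $l$, and $b^{(l)}_i$ denotes the bias of the $i$-th neuron in layer $l$.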

The activation functions $f_1$ and $f_2$ of the two hidden layers are hyperbolic tangent (tanh) functions with output range $(-1, 1)$, which introduce nonlinearity and improve the representation capability of the hidden layers. The output-layer activation $f_3$ is linear, producing an unconstrained real-valued output that suits regression and avoids output saturation, thereby facilitating stable gradient-based training during backpropagation.
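The forward pass of Equations (7)–(13) can be sketched in NumPy for the 3–10–10–1 network (tanh hidden layers, linear output). The Gaussian initialization scale, the example error values, and the function names are illustrative assumptions; the study does not specify its initialization.

```python
import numpy as np


def init_params(rng, sizes=(3, 10, 10, 1), scale=0.5):
    """Return [(W1, b1), (W2, b2), (W3, b3)] with W_l of shape (n_l, n_{l-1})."""
    return [(rng.normal(0.0, scale, size=(n_out, n_in)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    """Forward propagation for one input x = [e(k), e(k-1), e(k-2)]."""
    (W1, b1), (W2, b2), (W3, b3) = params
    h1 = np.tanh(W1 @ x + b1)      # first hidden layer, f1 = tanh
    h2 = np.tanh(W2 @ h1 + b2)     # second hidden layer, f2 = tanh
    o = W3 @ h2 + b3               # linear output layer, f3(z) = z
    return o, h1, h2


rng = np.random.default_rng(0)
params = init_params(rng)
x = np.array([0.8, 1.0, 1.3])      # hypothetical tracking errors in degC
o, h1, h2 = forward(params, x)
```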

3.3.2. BP Neural Network Error Calculation

For nonlinear regression problems, neural networks typically use the mean squared error (MSE) as the loss function. For the $k$-th sample with target $d_k$ (the PID controller output) and predicted output $o_k$, the sample error and the mean squared error are defined in Equations (14) and (15).

In these expressions, $\varepsilon_k = d_k - o_k$ denotes the error between the target and predicted outputs, and $E$ denotes the mean squared error. For a dataset with $N$ samples, the overall loss is given in Equation (16):

$$L = \frac{1}{N}\sum_{n=1}^{N}\left(d_n - o_n\right)^2 \tag{16}$$

In this expression, $L$ denotes the overall loss of the prediction model, $N$ is the number of samples, and $d_n$ and $o_n$ denote the true value and the predicted value of the $n$-th sample, respectively.

3.3.3. Backpropagation in BP Neural Networks

Backpropagation computes the gradients of the loss with respect to the parameters using the chain rule, propagating error signals from the output layer of the BP neural network controller back to the input layer. The network parameters are then optimized by gradient descent. For each sample and each layer, the error terms and parameter gradients are computed to determine the update direction, and the weights and biases are adjusted using a learning rate $\eta$. Because the output layer is linear, its gradient follows directly from the chain rule, which simplifies the computation.

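For the output layer, the error term $\delta^{(3)}$, the weight gradient $\partial L/\partial W^{(3)}$, and the bias gradient $\partial L/\partial \mathbf{b}^{(3)}$ are given by Equations (17)–(19).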

The first and second hidden layers both use the hyperbolic tangent (tanh) activation; the corresponding error terms, weight gradients, and bias gradients are given in Equations (20)–(25).

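In these expressions, the error terms for the second and first hidden layers are denoted by $\delta^{(2)}$ and $\delta^{(1)}$, respectively; the corresponding weight and bias gradients are $\partial L/\partial W^{(2)}$ and $\partial L/\partial \mathbf{b}^{(2)}$ for the second hidden layer, and $\partial L/\partial W^{(1)}$ and $\partial L/\partial \mathbf{b}^{(1)}$ for the first hidden layer. After the gradients of each layer are obtained, the parameters are updated by gradient descent with learning rate $\eta$, $W^{(l)} \leftarrow W^{(l)} - \eta\,\partial L/\partial W^{(l)}$ and $\mathbf{b}^{(l)} \leftarrow \mathbf{b}^{(l)} - \eta\,\partial L/\partial \mathbf{b}^{(l)}$, as given in Equations (26) and (27).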
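The backpropagation steps of Equations (17)–(27) can be sketched for a mini-batch as follows. The sketch assumes the loss of Equation (16), $L = \frac{1}{N}\sum_n (d_n - o_n)^2$, and a synthetic, hypothetical PID-like target; the learning rate, data, and helper names are illustrative and are meant only to show the structure of the error terms $\delta^{(l)}$, not the study's training setup.

```python
import numpy as np


def init_params(rng, sizes=(3, 10, 10, 1), scale=0.5):
    return [(rng.normal(0.0, scale, size=(n_out, n_in)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(params, X):
    """Batched forward pass; X has shape (N, 3)."""
    (W1, b1), (W2, b2), (W3, b3) = params
    H1 = np.tanh(X @ W1.T + b1)
    H2 = np.tanh(H1 @ W2.T + b2)
    O = H2 @ W3.T + b3                                  # linear output, shape (N, 1)
    return O, H1, H2


def mse(params, X, d):
    O, _, _ = forward(params, X)
    return float(np.mean((d - O[:, 0]) ** 2))


def gradients(params, X, d):
    """Backpropagation for L = mean((d - o)^2); returns [(dL/dW_l, dL/db_l)]."""
    (W1, _), (W2, _), (W3, _) = params
    N = X.shape[0]
    O, H1, H2 = forward(params, X)
    delta3 = (2.0 / N) * (O[:, 0] - d)[:, None]         # output layer (linear)
    delta2 = (delta3 @ W3) * (1.0 - H2 ** 2)            # tanh'(z) = 1 - tanh(z)^2
    delta1 = (delta2 @ W2) * (1.0 - H1 ** 2)
    return [(delta1.T @ X, delta1.sum(axis=0)),
            (delta2.T @ H1, delta2.sum(axis=0)),
            (delta3.T @ H2, delta3.sum(axis=0))]


def gd_step(params, grads, eta):
    """Equations (26)-(27): W <- W - eta*dL/dW, b <- b - eta*dL/db."""
    return [(W - eta * gW, b - eta * gb) for (W, b), (gW, gb) in zip(params, grads)]


rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 3))               # hypothetical e(k), e(k-1), e(k-2)
d = 2.0 * X[:, 0] - 1.5 * X[:, 1] + 0.5 * X[:, 2]       # hypothetical PID-like target
params = init_params(rng)
loss_start = mse(params, X, d)
for _ in range(3000):
    params = gd_step(params, gradients(params, X, d), eta=0.02)
loss_end = mse(params, X, d)
```

A finite-difference comparison on a single weight is a cheap way to confirm that the analytic gradients match the loss before trusting them in an online controller.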

Through repeated iterations of this process, the neural network progressively adjusts all network weights and biases on the given training data, thereby minimizing the mean squared error (MSE) and completing the regression fit.

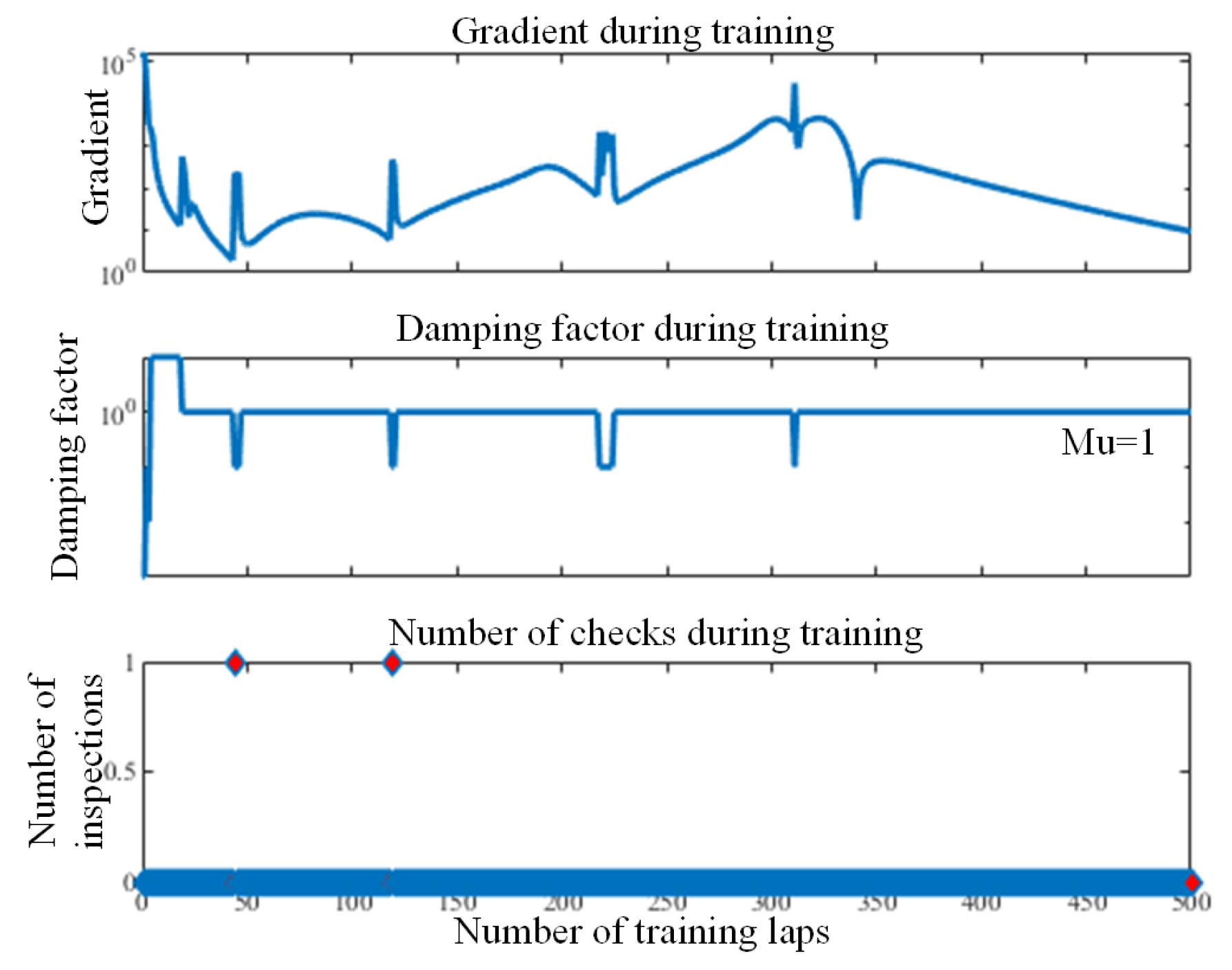

3.4. BP Neural Network Improved by the Levenberg–Marquardt (LM) Algorithm

Conventional back-propagation (BP) neural networks use only first-order (gradient) information during optimization. Second-order (Hessian) information can be incorporated to achieve faster and more stable minimization. However, because of the high dimensionality and computational cost, explicitly constructing the Hessian and solving the corresponding Newton system is generally impractical for neural networks. The Gauss–Newton (GN) approximation of the Hessian is used to resolve this issue; the resulting approximation is given in Equation (28):

$$H \approx J^{\top} J \tag{28}$$

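In this expression, $J$ denotes the Jacobian matrix of the residual vector $\boldsymbol{\varepsilon} = [\varepsilon_1, \ldots, \varepsilon_N]^{\top}$ with respect to the network parameters $\mathbf{w}$. With this approximation, second-order derivatives need not be computed; all required first derivatives can be obtained by backpropagation, enabling construction of $J^{\top}J$ and hence an approximation of the Hessian. On this basis, the standard Gauss–Newton step is given in Equation (29):

$$\Delta \mathbf{w} = -\left(J^{\top} J\right)^{-1} J^{\top} \boldsymbol{\varepsilon} \tag{29}$$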

The Gauss–Newton method uses an approximate second-order model and generally converges faster than standard gradient descent; however, the matrix $J^{\top}J$ is not guaranteed to be strictly positive definite, so $(J^{\top}J)^{-1}$ may not exist or may be ill-conditioned, making the method sensitive to noise. The Levenberg–Marquardt (LM) algorithm improves on Gauss–Newton by introducing a damping factor and adding a scaled identity matrix to the Hessian approximation, giving $J^{\top}J + \mu I$. This modification alleviates the deficiencies of Gauss–Newton and yields more reliable parameter updates. The update step is given in Equation (30):

$$\Delta \mathbf{w} = -\left(J^{\top} J + \mu I\right)^{-1} J^{\top} \boldsymbol{\varepsilon} \tag{30}$$

In this expression, $I$ denotes the identity matrix and $\mu > 0$ the damping factor. When $\mu$ is large, the update approaches a gradient-descent step with a small step size of about $1/\mu$; when $\mu$ is small, it reduces to the Gauss–Newton (GN) step, which converges rapidly near a minimum.
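A compact sketch of the damped update in Equation (30) is given below. It uses the common heuristic of dividing $\mu$ by 10 after a successful step and multiplying it by 10 after a rejected one; that schedule is an assumption, since the study does not state its own. To keep the example short, it fits the first-order rise $o(t) = w_0\,(1 - e^{-t/w_1})$ to synthetic, hypothetical data rather than training the full network; for the BP network, $J$ would instead collect the derivatives of every residual $\varepsilon_n$ with respect to all weights and biases.

```python
import numpy as np


def levenberg_marquardt(residual, jacobian, w0, mu=1e-2, max_iter=200, tol=1e-10):
    """Minimise ||eps(w)||^2 with dw = -(J^T J + mu*I)^{-1} J^T eps (Eq. 30).

    residual(w) returns eps = d - o(w); jacobian(w) returns J = d eps / d w.
    """
    w = np.asarray(w0, dtype=float)
    eps = residual(w)
    cost = float(eps @ eps)
    for _ in range(max_iter):
        J = jacobian(w)
        A, g = J.T @ J, J.T @ eps
        while True:
            dw = -np.linalg.solve(A + mu * np.eye(w.size), g)
            eps_new = residual(w + dw)
            cost_new = float(eps_new @ eps_new)
            if cost_new < cost:                  # accept: move towards Gauss-Newton
                w, eps, cost = w + dw, eps_new, cost_new
                mu = max(mu / 10.0, 1e-15)
                break
            mu *= 10.0                           # reject: move towards gradient descent
            if mu > 1e15:
                return w, cost                   # no further progress possible
        if np.linalg.norm(dw) < tol:
            break
    return w, cost


# Hypothetical post-delay step data with K*A = 25 and T = 600 s
t = np.linspace(0.0, 3000.0, 301)
d = 25.0 * (1.0 - np.exp(-t / 600.0))


def residual(w):
    return d - w[0] * (1.0 - np.exp(-t / w[1]))


def jacobian(w):
    e = np.exp(-t / w[1])
    # d eps/d w0 = -(1 - e);  d eps/d w1 = +w0 * t * e / w1^2
    return np.column_stack([-(1.0 - e), w[0] * t * e / w[1] ** 2])


w_hat, final_cost = levenberg_marquardt(residual, jacobian, w0=[20.0, 400.0])
```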

4. Breakpoint Fault Diagnosis Based on a Digital Twin Aluminum-Block Heating Platform

The heating system of the aluminum-block heating platform, a continuous and energy-intensive core industrial process, is susceptible to failures that frequently cause cascading production interruptions, significant economic losses, and safety hazards. Conventional fault-diagnosis techniques, which depend on human expertise and threshold-based detection, suffer from response delays and high error rates, making real-time, precise diagnosis difficult. A virtual-physical (digital twin) interaction model of the aluminum-block heating platform is therefore developed to visualize and acquire key parameters in real time, enabling fault diagnosis from live data provided by the digital twin platform.

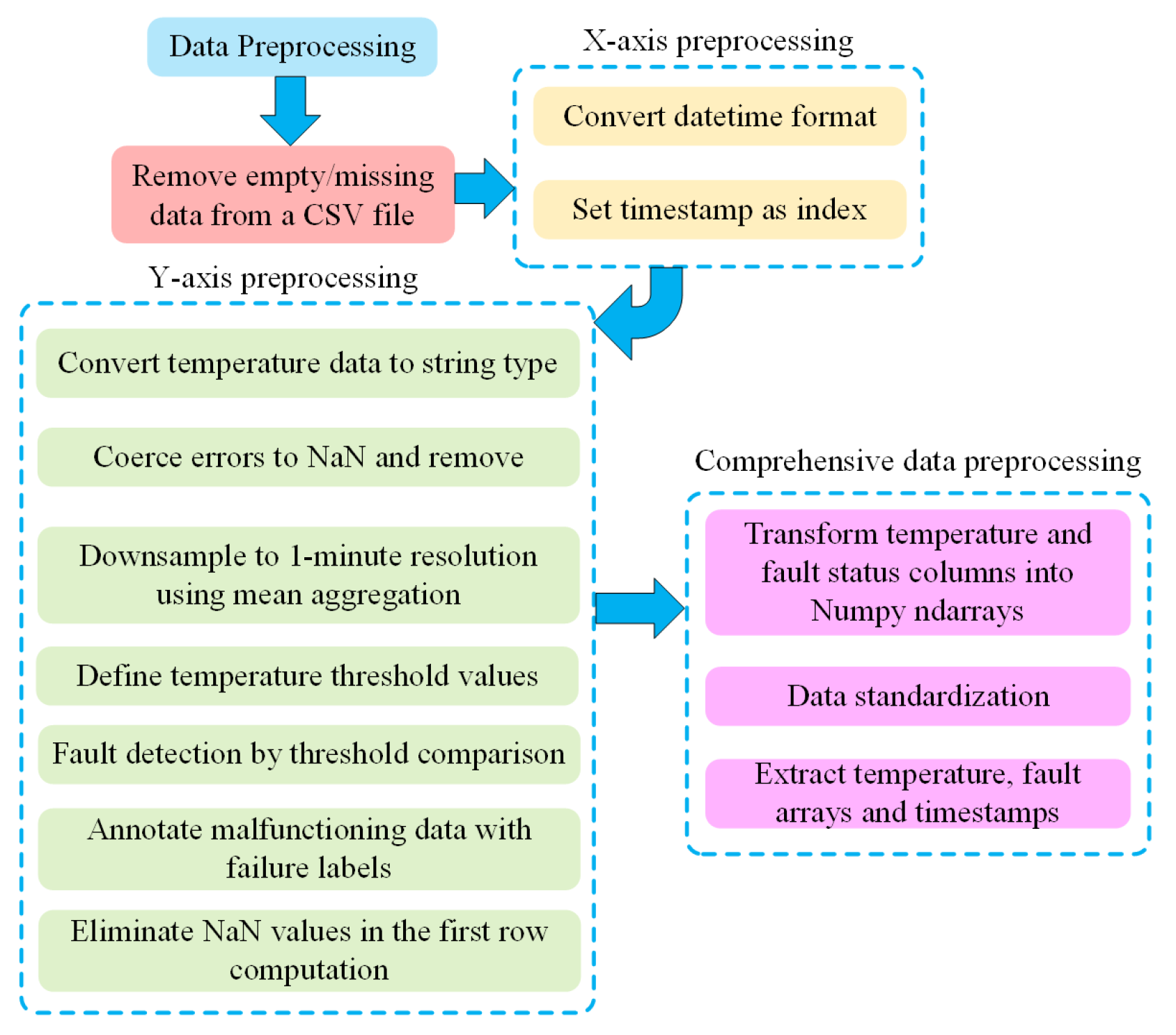

4.1. Data Preprocessing for Fault Diagnosis Based on Aluminum-Block Heating Platform

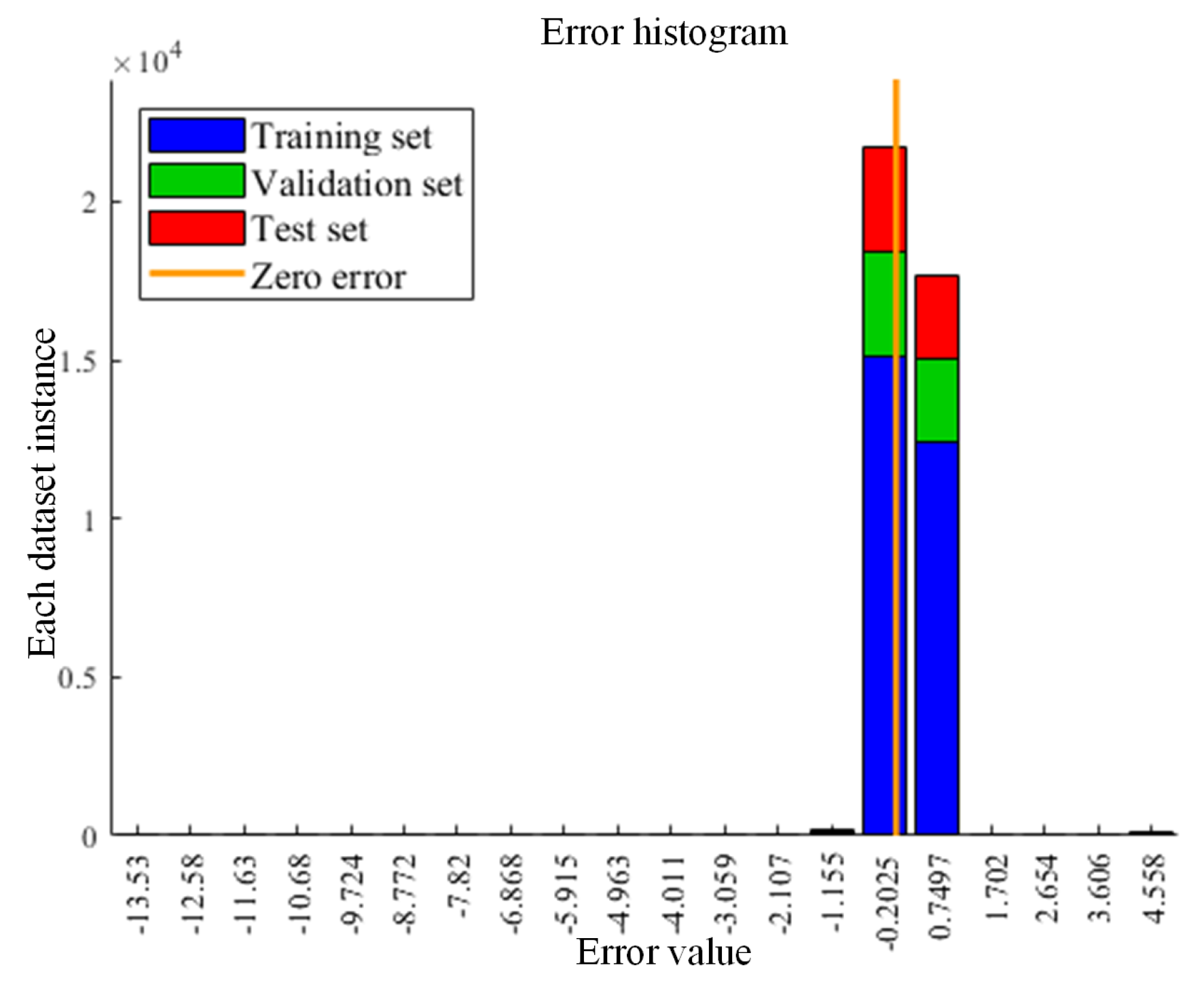

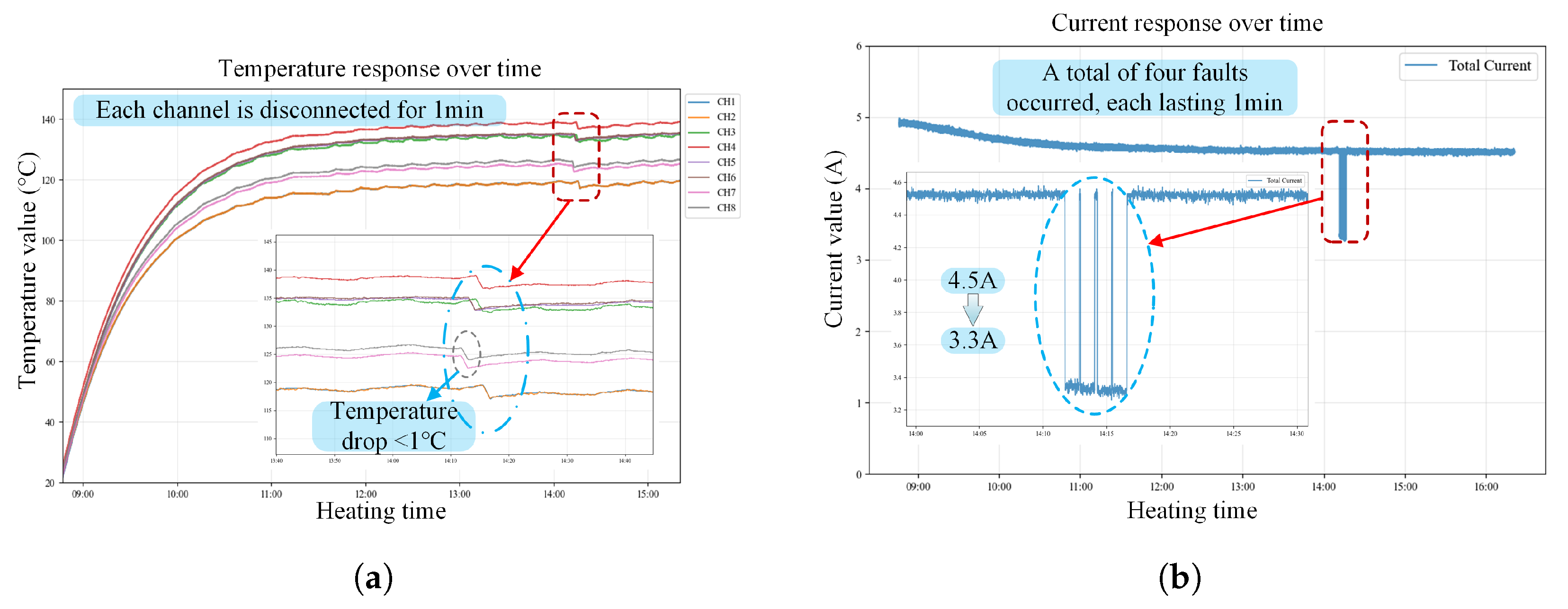

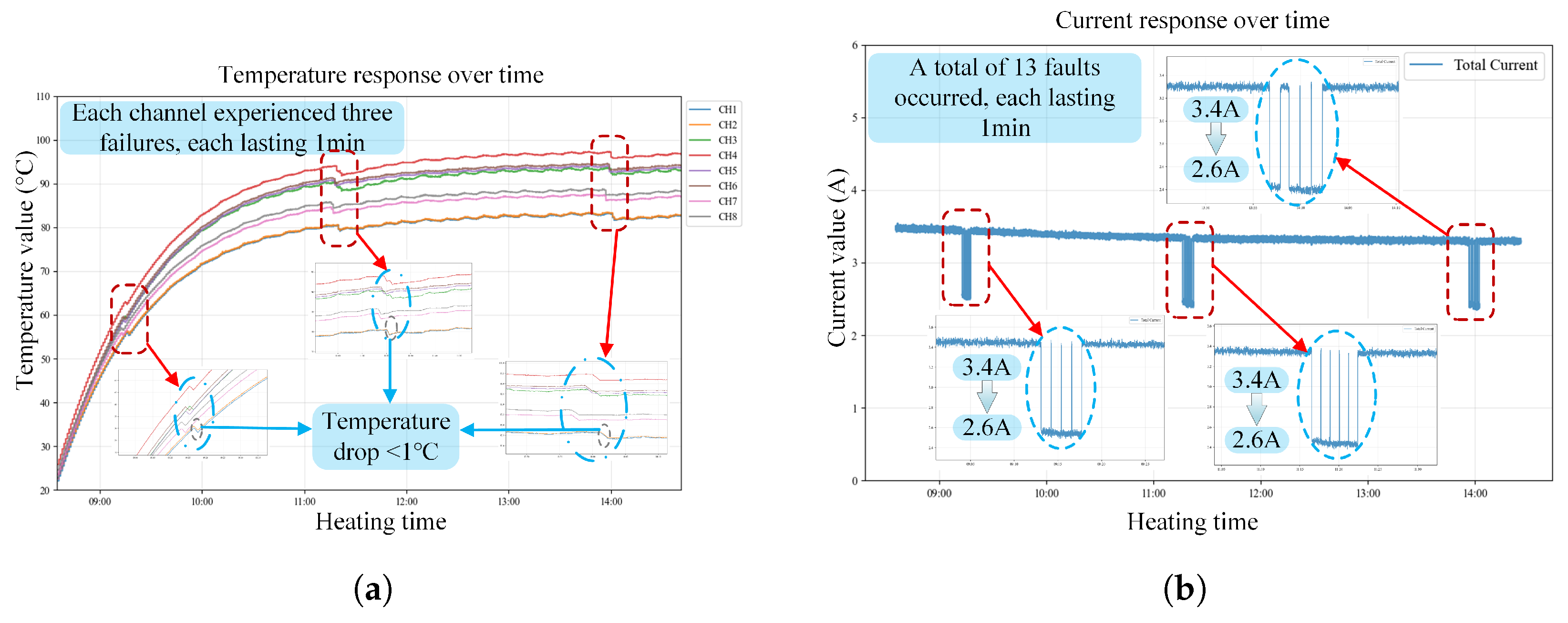

This study constructed a spatiotemporal fusion dataset using time-series temperature and current data obtained from the digital-twin platform. The initial step is to preprocess the entire dataset. Preprocessing transforms raw data into a format appropriate for model training, rectifies data-quality concerns, and enhances generalization, thereby improving model accuracy and reliability.

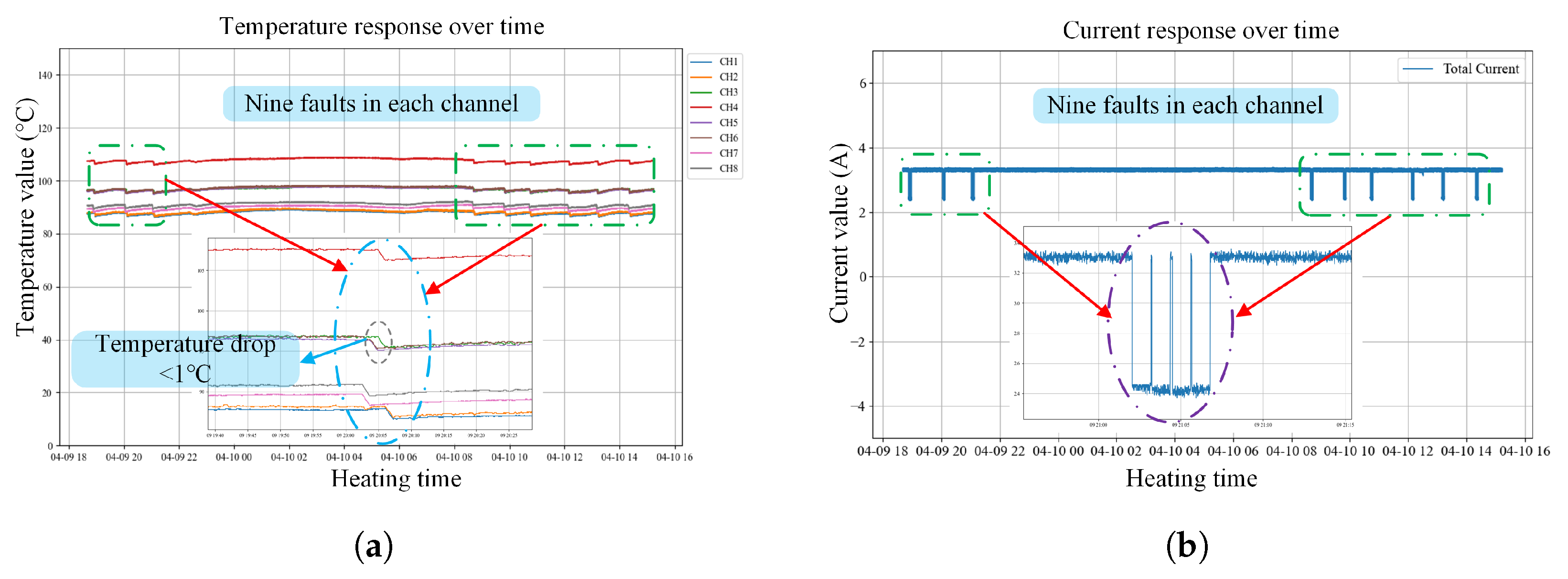

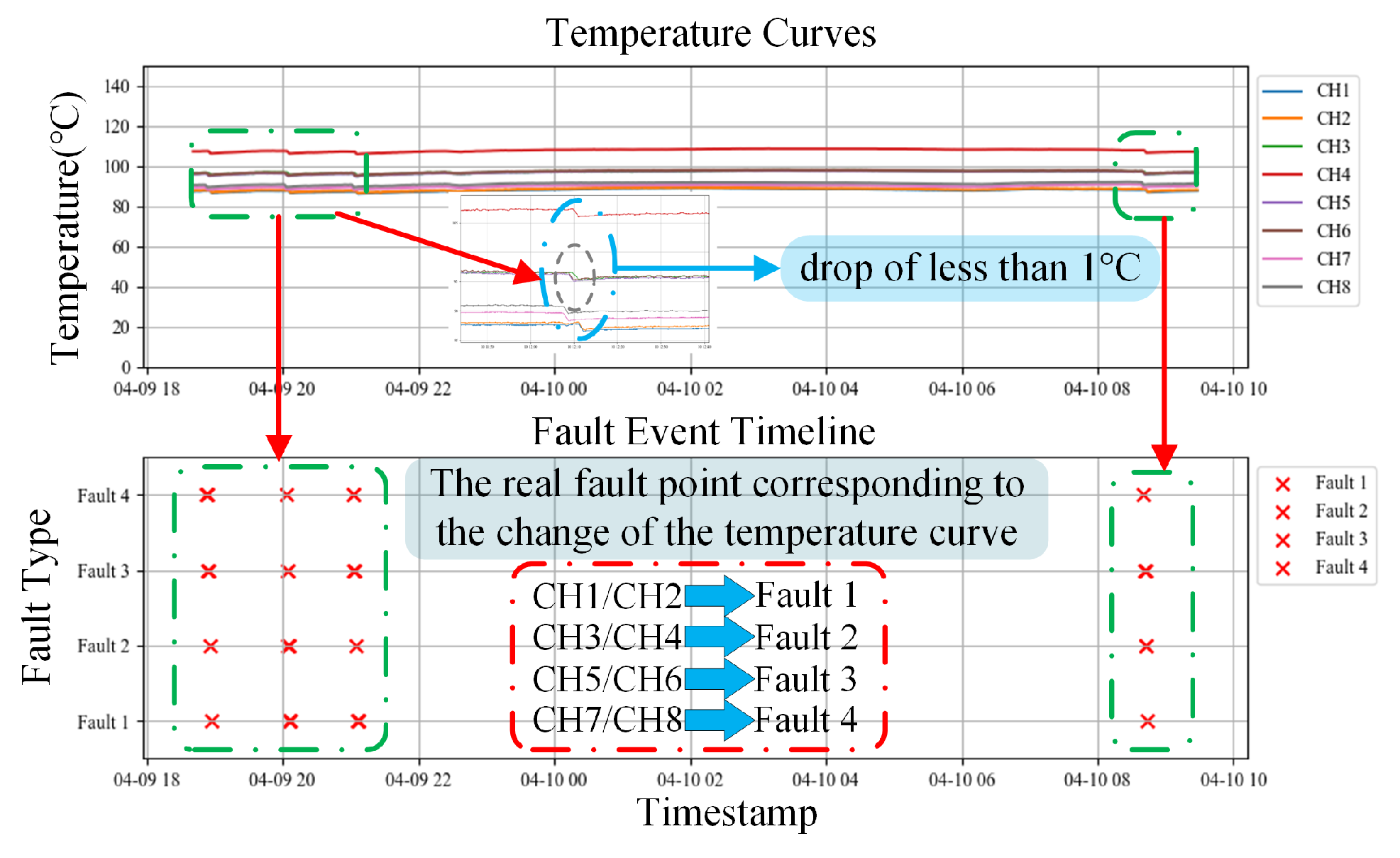

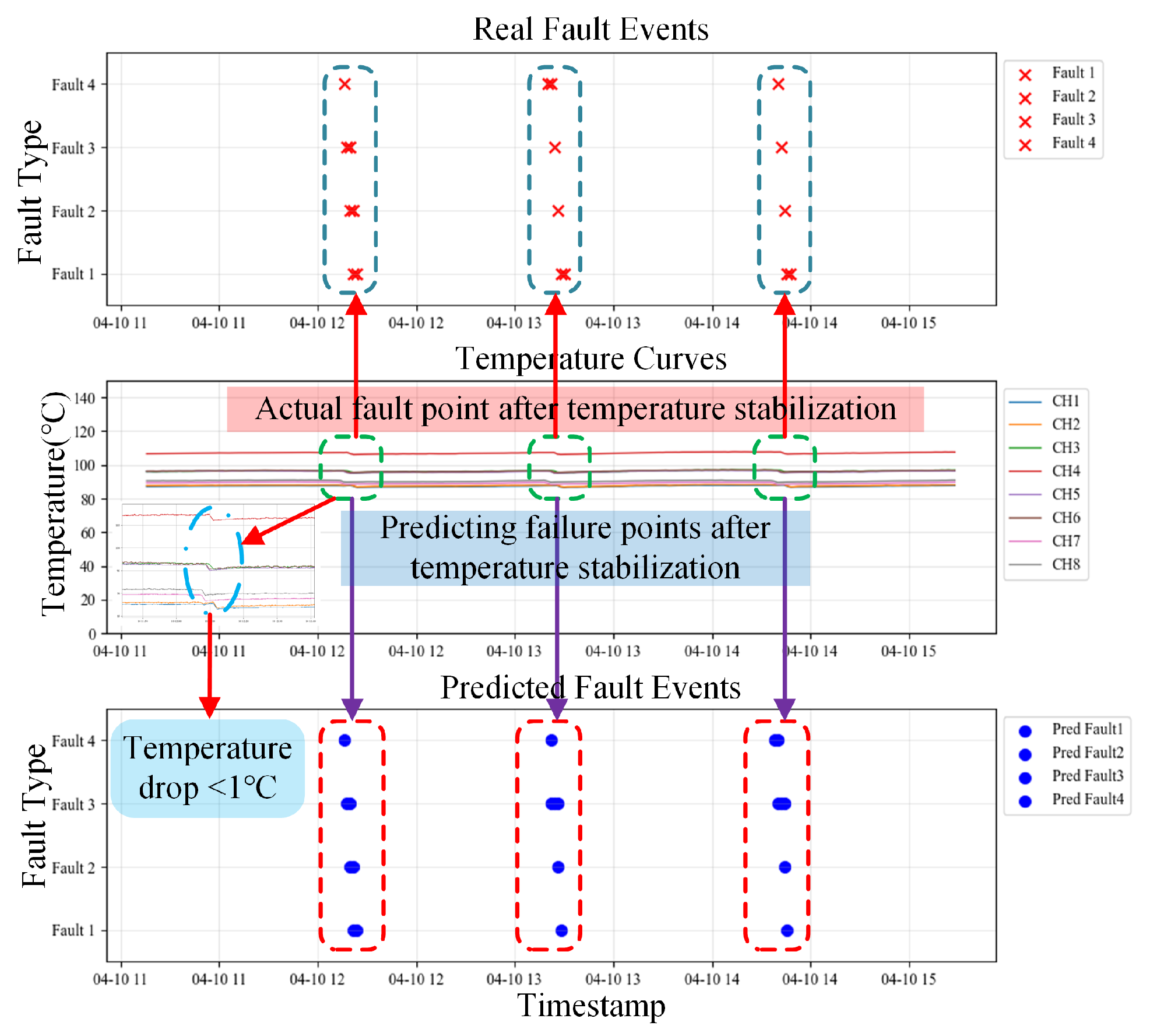

Figure 8 illustrates the complete preprocessing workflow. After preprocessing, a dataset containing temperature and current measurements under normal operating conditions and during breakpoint (open-circuit) failures is obtained.

4.2. Selection and Introduction of Fault Diagnosis Models

To assess the accuracy and stability of the selected model, this paper employs multiple loss functions. The losses considered are mean squared error (MSE), binary cross-entropy (BCE), and binary cross-entropy with logits (BCEWithLogits).
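The three training criteria can be compared in a short NumPy sketch that uses mean reduction over all elements (the default reduction in PyTorch's `MSELoss`, `BCELoss`, and `BCEWithLogitsLoss`). The key difference is that BCEWithLogits folds the sigmoid into the loss and evaluates it in a numerically stable form, whereas BCE expects probabilities that have already passed through a sigmoid. The function names and example values are illustrative.

```python
import numpy as np


def mse_loss(pred, target):
    pred, target = np.asarray(pred, float), np.asarray(target, float)
    return float(np.mean((pred - target) ** 2))


def bce_loss(prob, target, eps=1e-12):
    """Binary cross-entropy on probabilities (clipped to avoid log(0))."""
    p = np.clip(np.asarray(prob, float), eps, 1.0 - eps)
    y = np.asarray(target, float)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def bce_with_logits_loss(logits, target):
    """BCE applied directly to logits z: max(z, 0) - z*y + log(1 + exp(-|z|))."""
    z = np.asarray(logits, float)
    y = np.asarray(target, float)
    return float(np.mean(np.maximum(z, 0.0) - z * y + np.log1p(np.exp(-np.abs(z)))))


z = np.array([0.3, -1.2, 2.0])        # hypothetical logits
y = np.array([1.0, 0.0, 1.0])         # hypothetical fault indicators
p = 1.0 / (1.0 + np.exp(-z))
print(mse_loss(p, y), bce_loss(p, y), bce_with_logits_loss(z, y))
```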

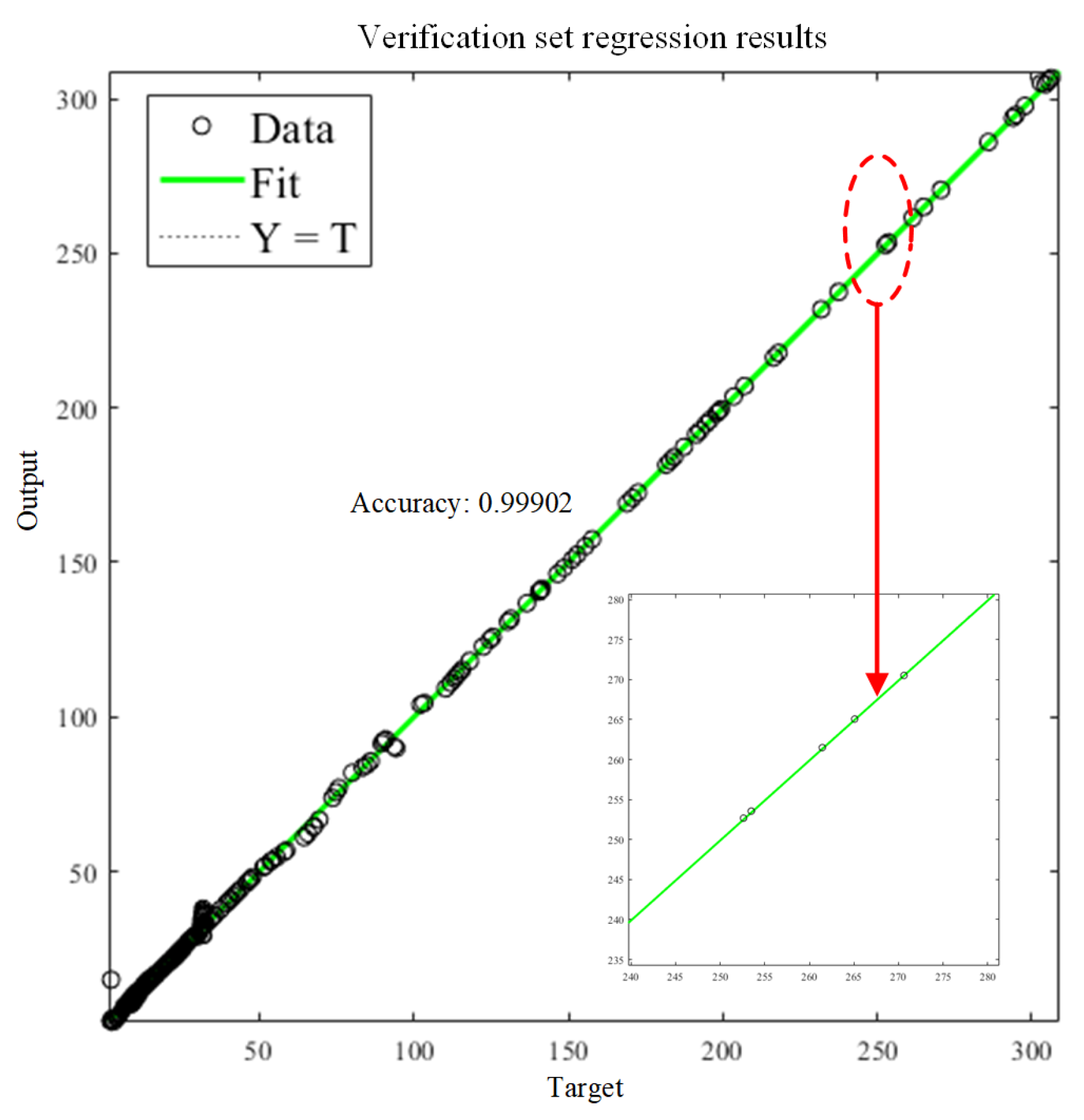

This study applies a 1D-CNN to perform fault diagnosis on the preprocessed and resampled data collected by the digital twin monitoring platform. To demonstrate the model's effectiveness for fault diagnosis of the aluminum-block heating platform, the 1D-CNN is compared with two other classification algorithms, and the relative merits of the three models are assessed using standard evaluation metrics. After preprocessing, the dataset is split chronologically into training, validation, and test sets to prevent temporal leakage: the first 70% of the time-ordered samples are used for training, the next 10% for validation, and the final 20% for testing.

Table 2 reports the split statistics. The key implementation settings and hyperparameters for the three models, namely the 1D-CNN, the BP neural network, and XGBoost, are summarized in Table 3.
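The 70/10/20 chronological split amounts to index slicing over time-ordered samples. The sketch below assumes 1000 samples already sorted by timestamp, and the function name is hypothetical. Because no shuffling is applied, every validation sample is later than every training sample, and every test sample is later than every validation sample.

```python
import numpy as np


def chronological_split(n_samples, train_frac=0.7, val_frac=0.1):
    """Split time-ordered sample indices into train/val/test without shuffling."""
    n_train = int(round(n_samples * train_frac))
    n_val = int(round(n_samples * val_frac))
    idx = np.arange(n_samples)                 # samples assumed sorted by timestamp
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train_idx, val_idx, test_idx = chronological_split(1000)
print(len(train_idx), len(val_idx), len(test_idx))   # 700 100 200
```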

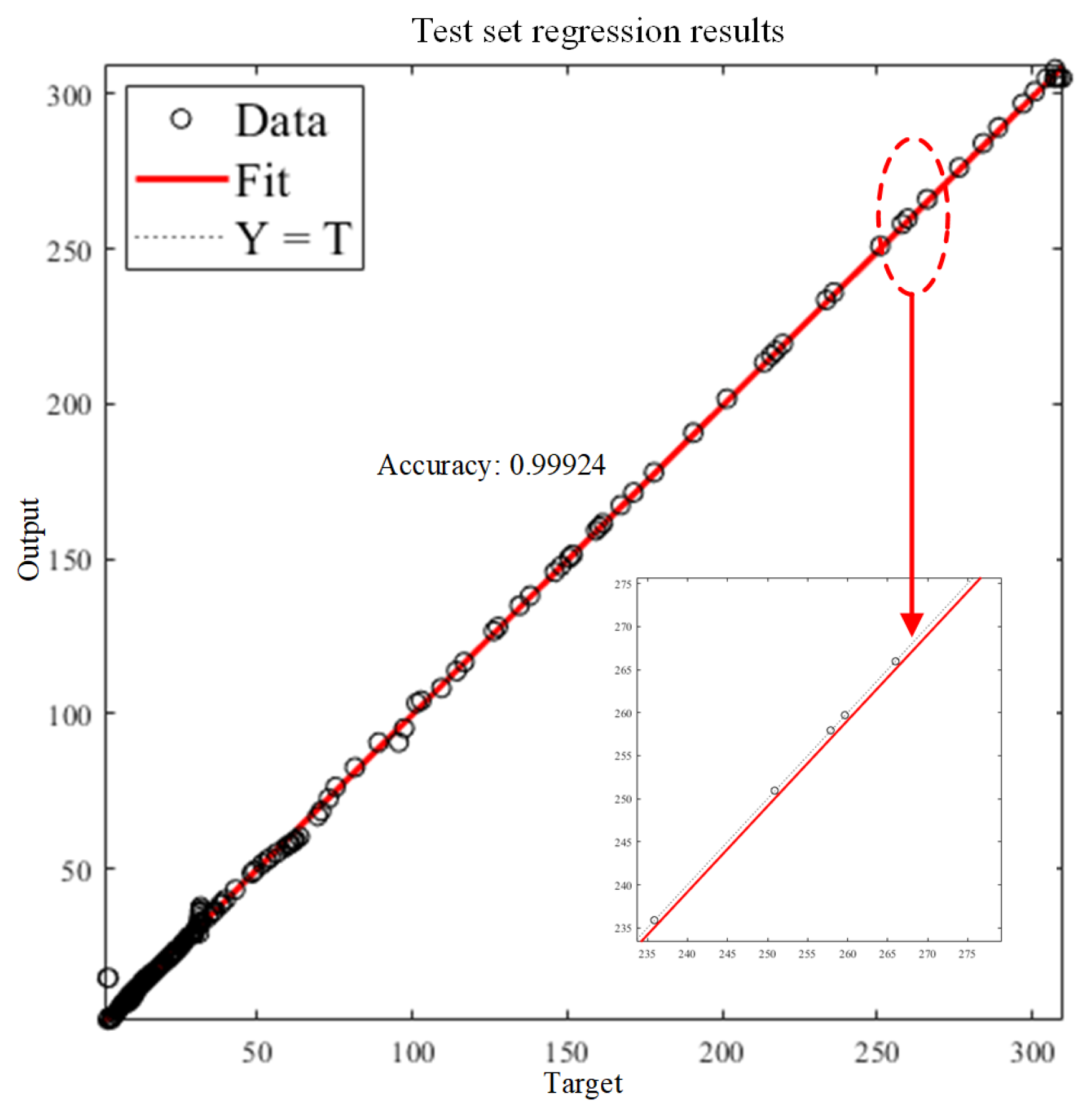

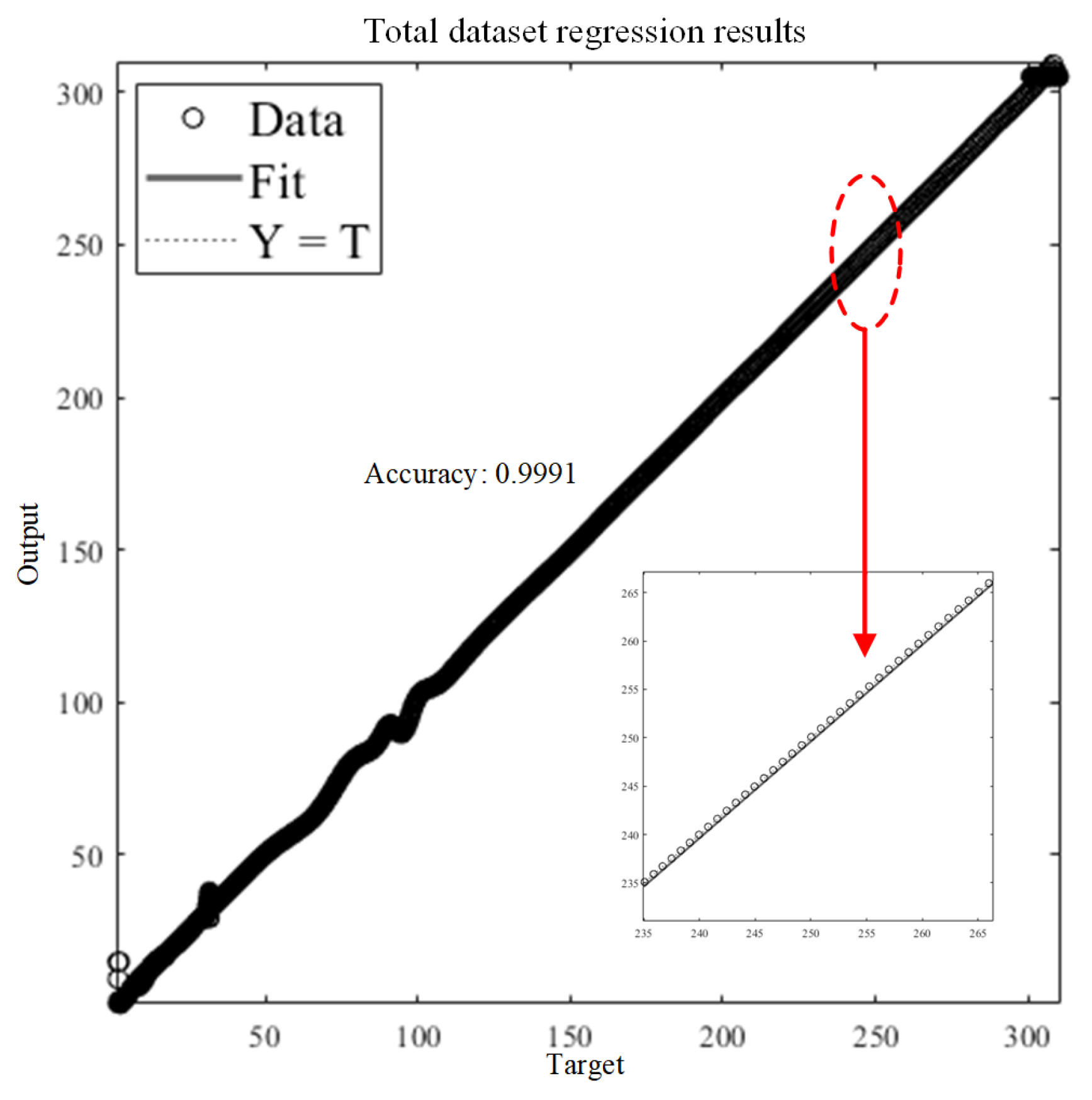

The comparison indicates that the 1D-CNN is more computationally efficient, achieves lower loss values, and exhibits better responsiveness and stability than the other two models.

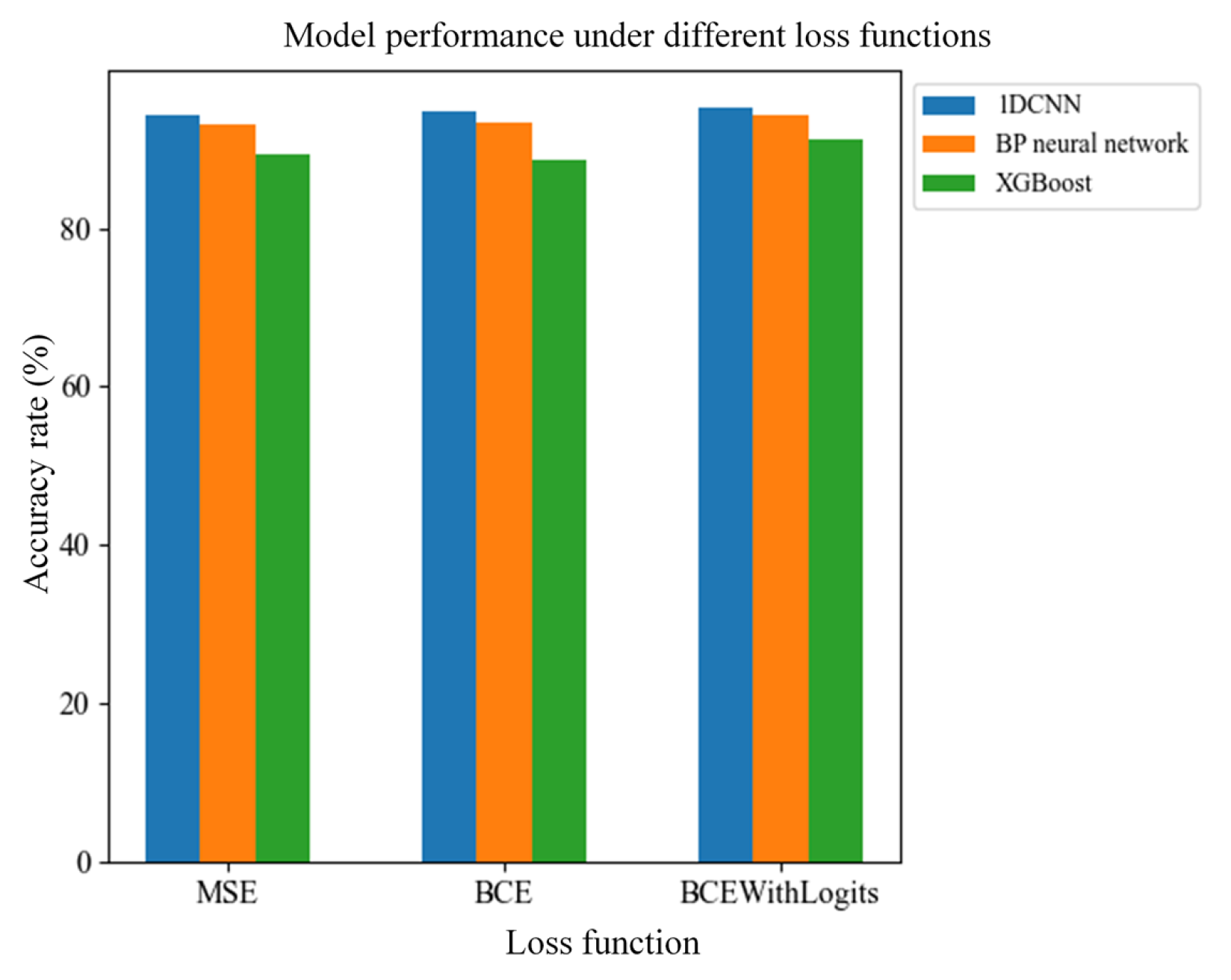

Table 4 reports the validation accuracy of each model trained with MSE, BCE, and BCEWithLogits.

Figure 9 compares the performance metrics of the three algorithms based on the data in Table 4, highlighting the strengths and weaknesses of each. Averaged across the loss functions, the 1D-CNN achieves an accuracy 2.7% higher than that of the traditional BP neural network and 6.75% higher than that of XGBoost. The 1D-CNN architecture was therefore selected for the temperature anomaly diagnosis task because of its superior performance in identifying temperature anomalies. The confusion matrix of the 1D-CNN is presented in Table 5.

4.3. 1D-CNN Architecture and Training Configuration

After preprocessing, the raw measurement data are resampled at a 1 min interval using mean aggregation. Each sample is represented as an 8-dimensional temperature feature vector corresponding to the eight temperature channels. The input features are standardized via z-score normalization using the mean and standard deviation computed from the training set, and the same statistics are applied to normalize the test set.
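The 1 min mean resampling and the training-set z-score normalization can be sketched with NumPy alone. The timestamps in seconds, the synthetic eight-channel signal, and the helper names are assumptions for illustration; in practice a time-indexed resampling utility would typically be used instead.

```python
import numpy as np


def resample_mean(t_seconds, X, period=60.0):
    """Mean-aggregate samples into consecutive `period`-second bins (empty bins are dropped)."""
    bins = np.floor(np.asarray(t_seconds, dtype=float) / period).astype(int)
    uniq, inverse = np.unique(bins, return_inverse=True)
    inverse = inverse.ravel()
    sums = np.zeros((uniq.size, X.shape[1]))
    np.add.at(sums, inverse, X)                  # per-bin sums for every channel
    counts = np.bincount(inverse, minlength=uniq.size).astype(float)
    return uniq * period, sums / counts[:, None]


def zscore_fit(X_train):
    """Per-channel mean and standard deviation, computed on the training set only."""
    mean = X_train.mean(axis=0)
    std = X_train.std(axis=0)
    std[std == 0.0] = 1.0                        # guard against constant channels
    return mean, std


def zscore_apply(X, mean, std):
    return (X - mean) / std


# Hypothetical 1 Hz log of eight temperature channels over three minutes
t = np.arange(0.0, 180.0, 1.0)
X_raw = np.tile(t[:, None], (1, 8)) + np.arange(8)   # channel j reads t + j
t_min, X_min = resample_mean(t, X_raw)               # three 1-min samples
mean, std = zscore_fit(X_min[:2])                    # fit on the "training" rows only
X_norm = zscore_apply(X_min, mean, std)
```

Fitting the statistics on the training rows and reusing them for later rows keeps information from the held-out period out of the normalization.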

The 1D-CNN reshapes the normalized 8-dimensional vector into a one-channel 1D sequence of length 8, enabling the convolutional layers to learn local correlations among the multi-channel temperature features. The network architecture is summarized in Table 6. No pooling or batch-normalization layers are used in this implementation. The hidden layers use ReLU activation functions, and a dropout layer with a rate of 0.2 is inserted before the fully connected classifier.

The network outputs a 4-dimensional logit vector corresponding to four fault-indicator labels. The normal condition is encoded as an all-zero indicator vector, whereas a fault condition is indicated when at least one indicator is positive. During inference, the logits are first mapped by a sigmoid function and then converted into binary indicators using a fixed threshold of 0.25. For evaluation, a sample is counted as correctly classified only when all four predicted indicators exactly match the ground-truth indicators.
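The decoding and exact-match rule can be written in a few lines of NumPy. Whether the comparison with 0.25 is inclusive is not stated, so `>=` is an assumption, as are the example logits and labels. Note that $\sigma(z) \ge 0.25$ is equivalent to $z \ge \ln(1/3) \approx -1.10$, so this low threshold makes the decoder lean towards flagging faults.

```python
import numpy as np


def decode_logits(logits, threshold=0.25):
    """Sigmoid followed by a fixed threshold; returns 0/1 fault indicators."""
    z = np.asarray(logits, dtype=float)
    probs = np.exp(-np.logaddexp(0.0, -z))       # numerically stable sigmoid(z)
    return (probs >= threshold).astype(int)


def exact_match_accuracy(y_pred, y_true):
    """A sample is correct only if all four indicators match the ground truth."""
    return float(np.mean(np.all(np.asarray(y_pred) == np.asarray(y_true), axis=1)))


logits = np.array([[-3.0, -1.0, 0.0, 2.0],
                   [-5.0, -5.0, -5.0, -5.0]])
y_true = np.array([[0, 1, 1, 1],
                   [0, 0, 0, 1]])
y_pred = decode_logits(logits)                   # [[0, 1, 1, 1], [0, 0, 0, 0]]
acc = exact_match_accuracy(y_pred, y_true)       # 0.5
```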

For training, the Adam optimizer is employed with a learning rate of 0.001 and a weight decay of

. The loss function is BCEWithLogitsLoss. The batch size is set to 128, and the model is trained for up to 100 epochs. During training, the best checkpoint is selected based on validation performance and may be used for early stopping, while the test set is strictly held out for the final unbiased evaluation and reporting. The resulting fault-versus-normal performance of the 1D-CNN on the test set is summarized in Table 7, including accuracy, precision, recall, and F1-score.
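Fault-versus-normal metrics can be obtained by collapsing each four-indicator vector to a binary label (fault if any indicator is 1, normal if all are 0) and treating "fault" as the positive class. This reduction and the positive-class choice are assumptions consistent with the label encoding described above, and the example arrays are hypothetical. Under this reduction, a fault assigned to the wrong channel still counts as a true positive, so these metrics complement rather than replace the exact-match accuracy.

```python
import numpy as np


def fault_vs_normal_metrics(y_pred, y_true):
    """Accuracy, precision, recall and F1 with 'fault' (any indicator = 1) as positive."""
    p = np.any(np.asarray(y_pred) == 1, axis=1)
    t = np.any(np.asarray(y_true) == 1, axis=1)
    tp = int(np.sum(p & t))
    fp = int(np.sum(p & ~t))
    fn = int(np.sum(~p & t))
    tn = int(np.sum(~p & ~t))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(t), "precision": precision,
            "recall": recall, "f1": f1}


y_true = np.array([[0, 0, 0, 0], [1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0]])
y_pred = np.array([[0, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0], [0, 0, 1, 0]])
print(fault_vs_normal_metrics(y_pred, y_true))
```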