5.1. Dataset Description

In actual industrial scenarios, bearings usually operate under complex variable conditions. Specifically, changes in rotational speed can significantly affect fault signal characteristics, making fault diagnosis more challenging. Therefore, conducting experiments with variable rotational speeds can better simulate real-world working conditions, test the model’s adaptability and robustness across different speeds, and improve the practicality and reliability of the fault diagnosis method. This paper uses the University of Ottawa bearing dataset (Ottawa) [

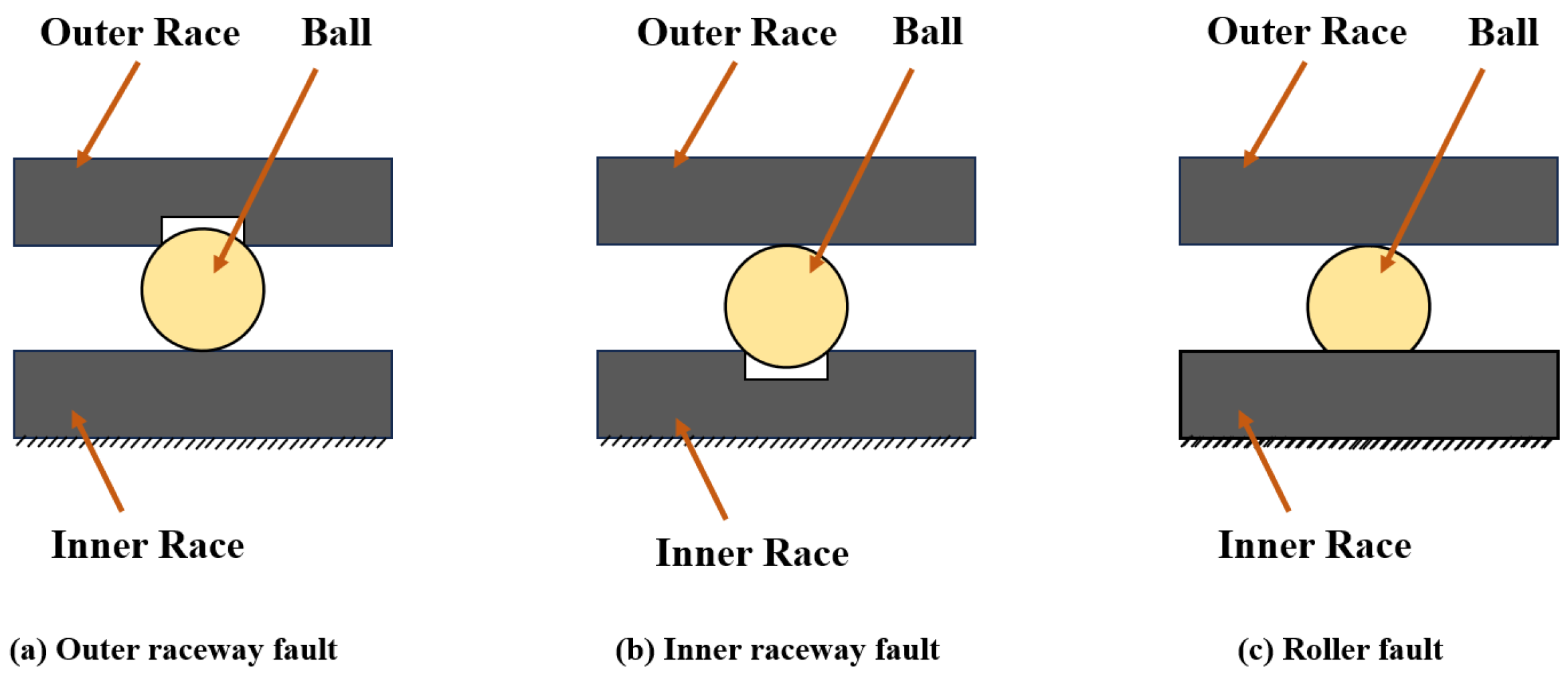

30] for testing. To evaluate model stability, all experiments are repeated five times independently, and the average results and standard deviations are reported. Considering the stochastic nature of rolling element contact on the raceway, the bearing conditions in the experiment are defined as follows: healthy, inner ring failure (IF), outer ring failure (OF), and roller failure (BF). The details of the bearings used for testing are shown in

Table 4.

The publicly available University of Ottawa (Ottawa) bearing dataset contains vibration signals for a variety of fault types at different rotational speeds. It provides data for ER16K bearings in various health states under variable operating conditions; the experimental states under these conditions are listed in Table 5.

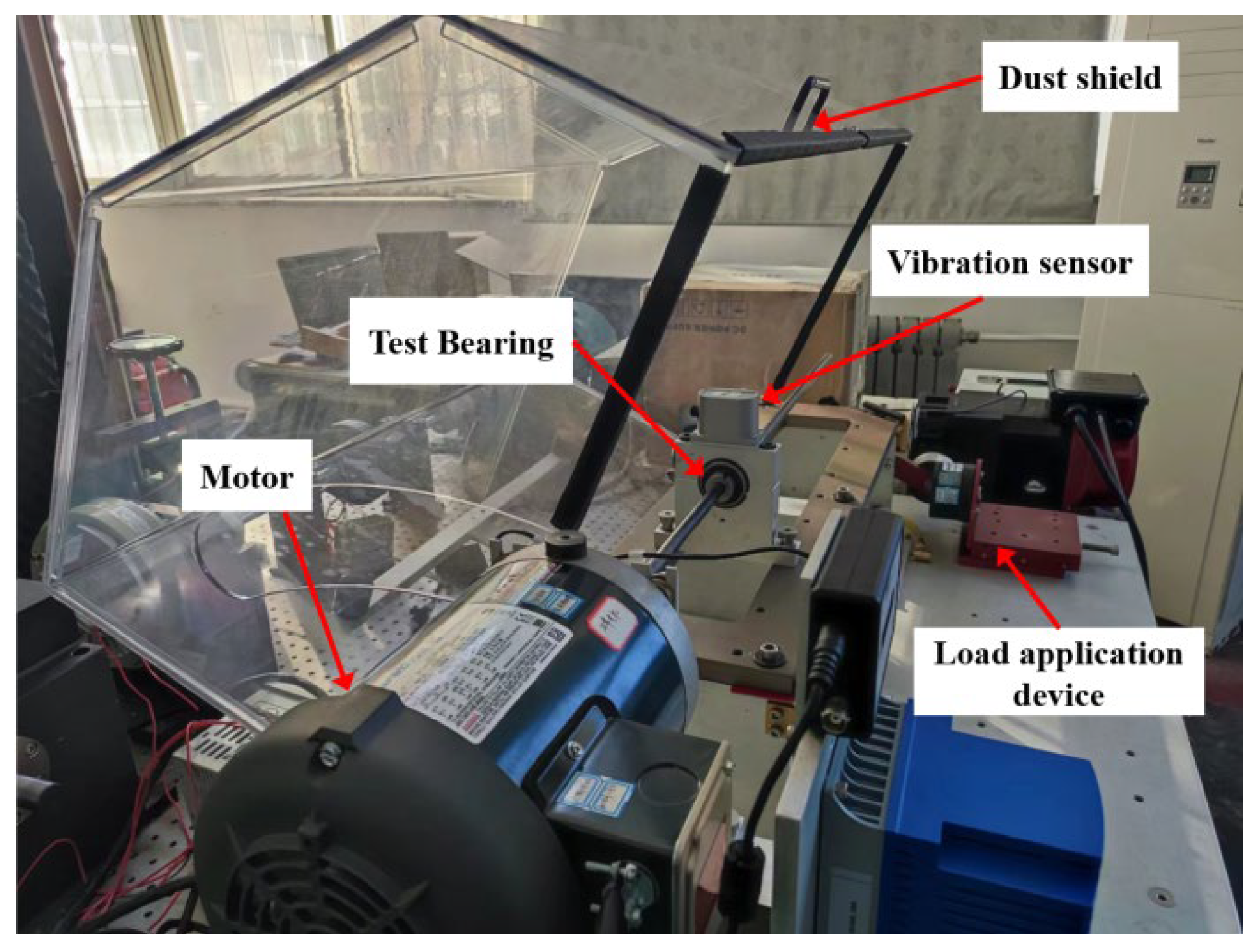

The raw time-domain vibration signals were collected with a two-channel data logger at a sampling frequency of 200 kHz for at least 10 s per fault condition. The experiments were conducted on a SpectraQuest Mechanical Failure Simulator (MFS-PK5M); the experimental setup is shown in Figure 13.
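For scale, 10 s at 200 kHz gives 2,000,000 points per channel. The section does not state how the recordings are cut into training samples, so the sketch below only illustrates the arithmetic of sliding-window segmentation; the window length (2048) and the 50% overlap are hypothetical values, not the authors' settings.

```python
# Stated in the text: fs = 200 kHz, at least 10 s per condition.
# WINDOW and STEP are hypothetical segmentation settings for illustration.
FS_HZ = 200_000
DURATION_S = 10
WINDOW = 2048   # hypothetical samples per segment
STEP = 1024     # hypothetical hop size (50% overlap)


def num_samples(fs_hz: int, duration_s: float) -> int:
    """Total points recorded in one channel."""
    return int(fs_hz * duration_s)


def num_segments(n_points: int, window: int, step: int) -> int:
    """Number of full sliding windows that fit in n_points."""
    if n_points < window:
        return 0
    return (n_points - window) // step + 1


def segment(signal, window: int, step: int):
    """Split a 1-D sequence into overlapping windows; a trailing partial window is dropped."""
    return [signal[i:i + window] for i in range(0, len(signal) - window + 1, step)]


if __name__ == "__main__":
    n = num_samples(FS_HZ, DURATION_S)
    print(n, num_segments(n, WINDOW, STEP))
```

Dropping the trailing partial window keeps every segment the same length, which a fixed-input CNN requires.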

The test rig consists of a motor, AC drive, encoder, coupling, rotor, and bearings. The Ottawa bearing dataset is used in this paper to test the diagnostic accuracy of the proposed method under variable operating conditions.

5.2. Comparative Analysis at Different RPMs

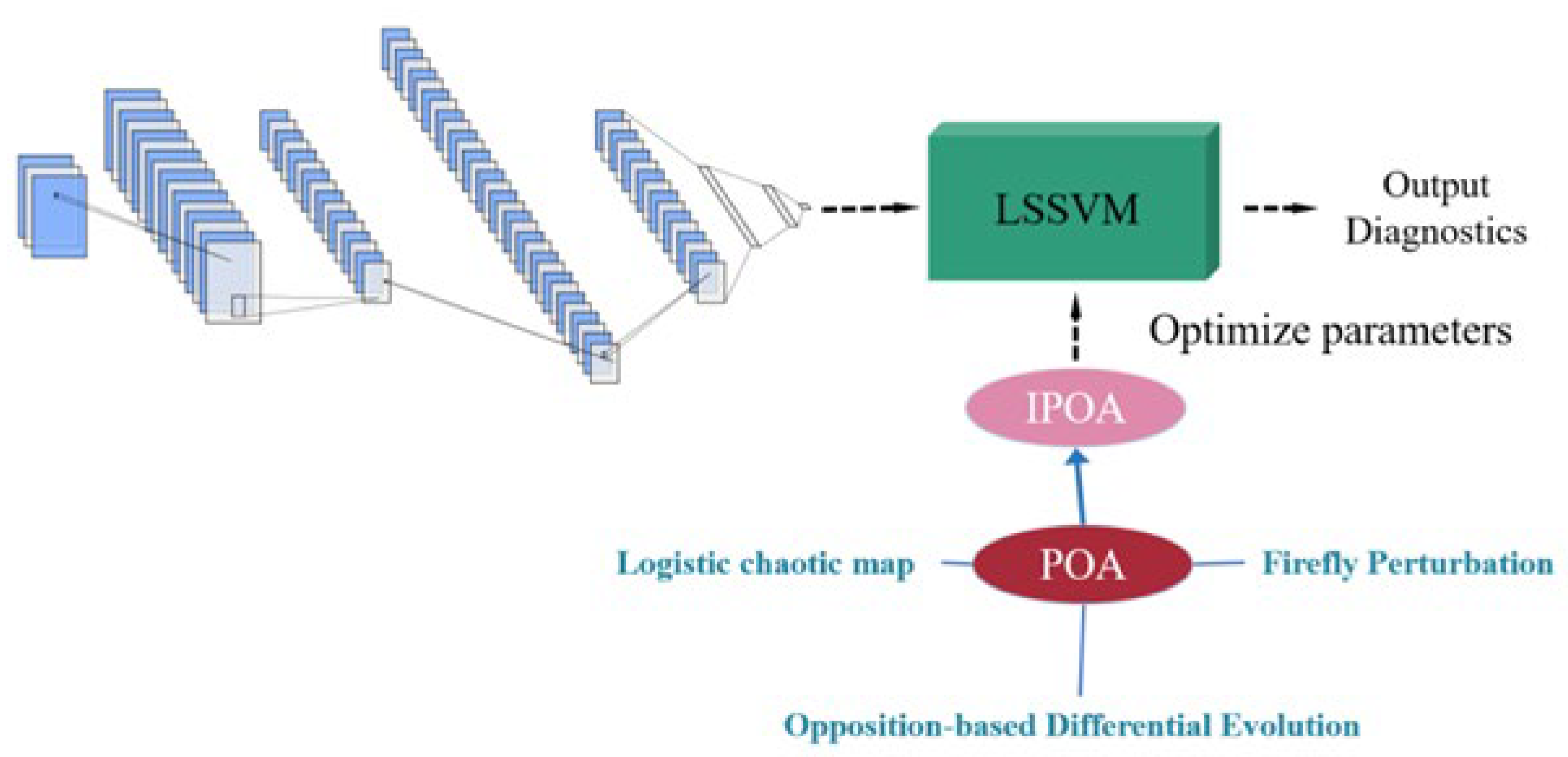

To verify the adaptability and robustness of the proposed CNN-IPOA-LSSVM model at different rotational speeds, experiments were conducted on the Ottawa dataset under four variable-speed operating conditions: A1 (speed increase), B1 (speed decrease), C1 (increase then decrease), and D1 (decrease then increase).

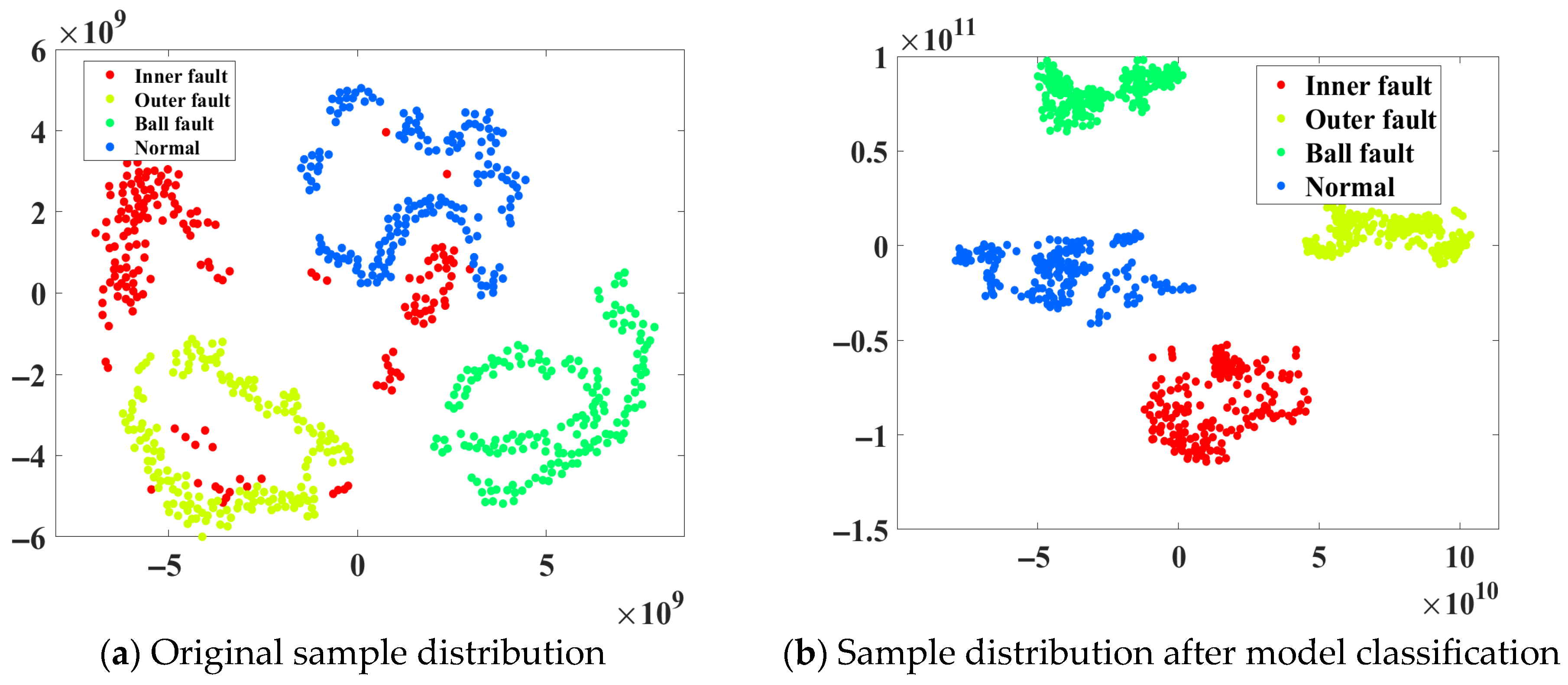

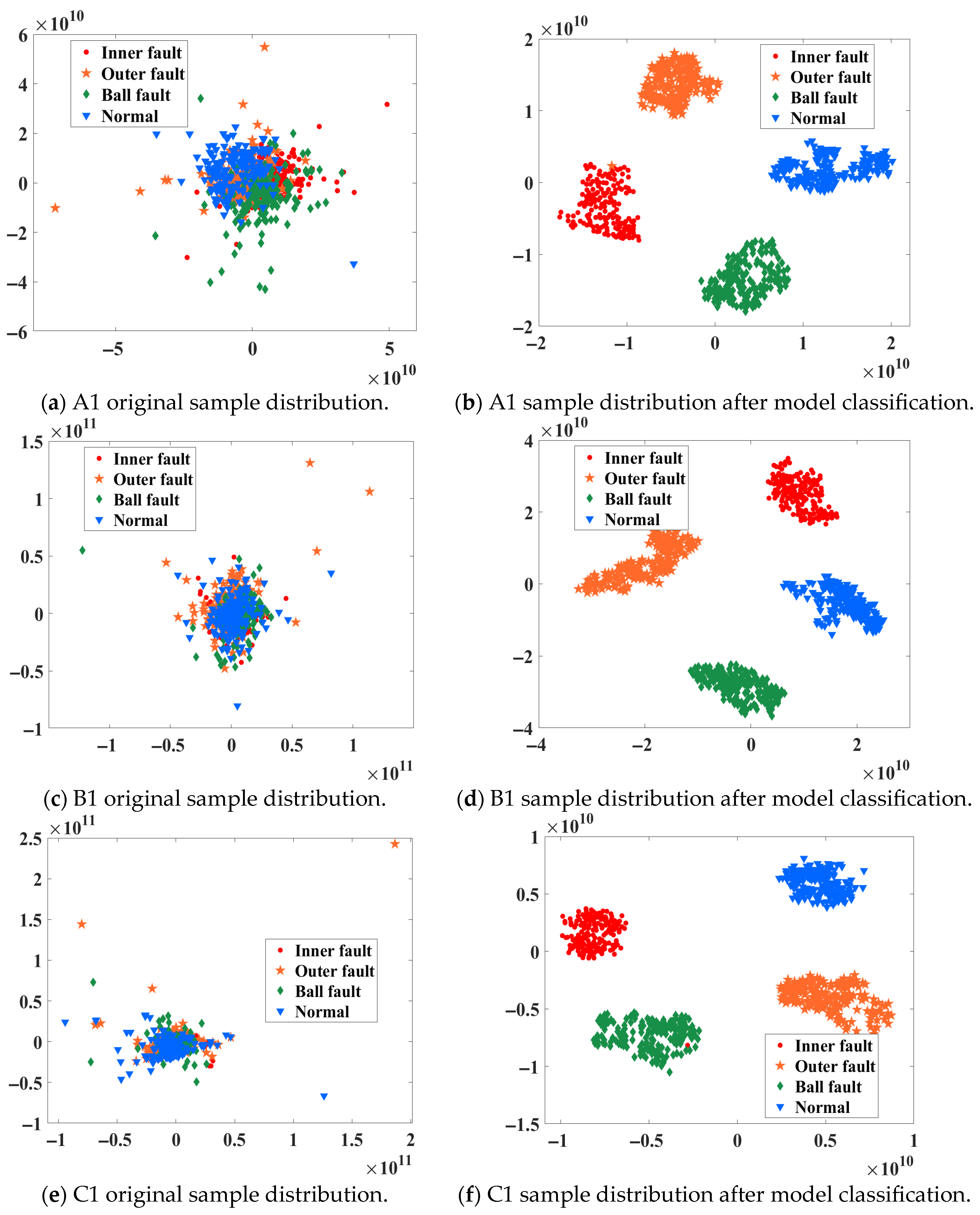

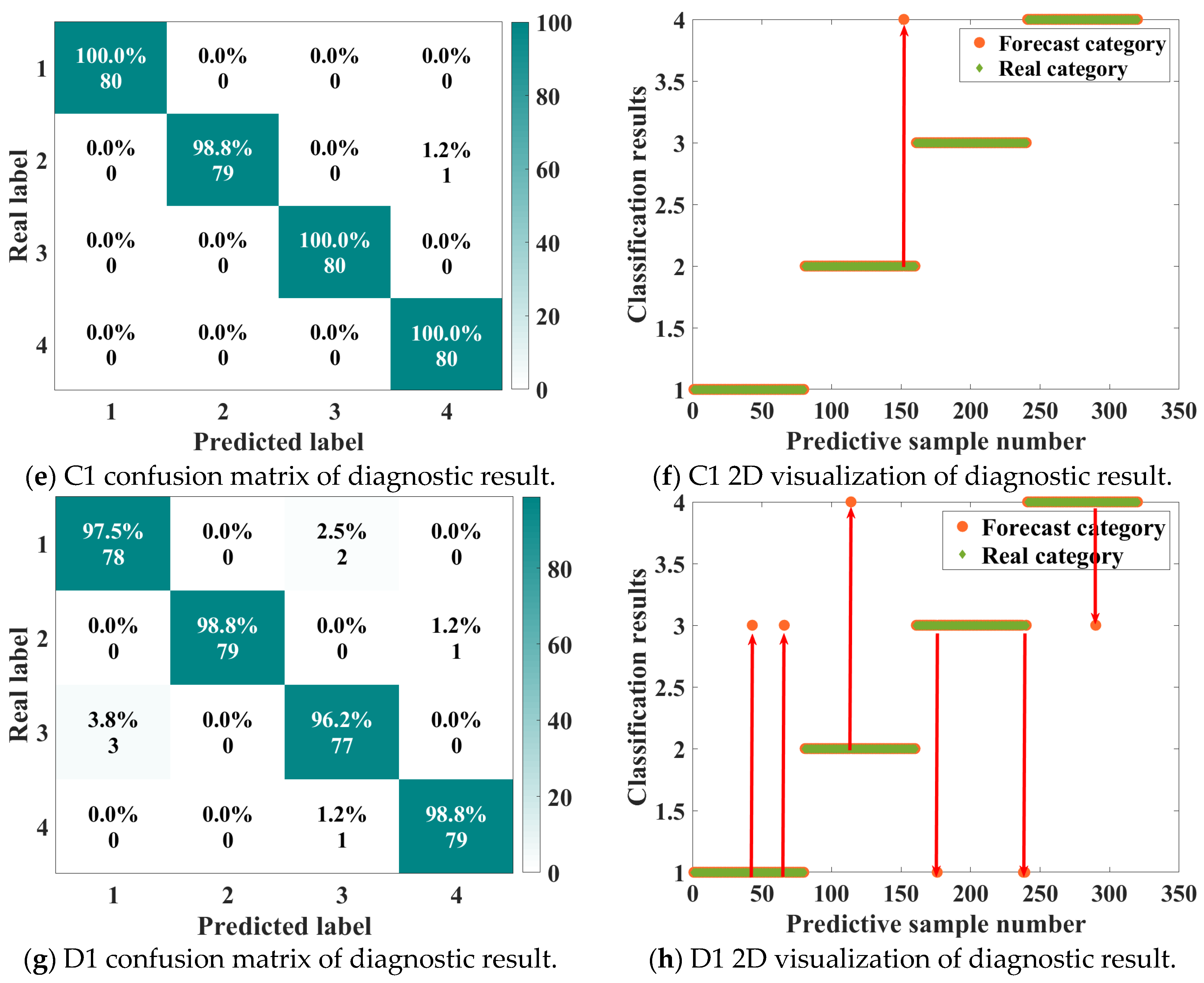

Figures 14 and 15 show the distribution of fault samples and the classification results of the model at different speeds; the t-SNE visualizations and confusion matrices give an intuitive view of its diagnostic performance under variable operating conditions.

The classification accuracies for the four working conditions (A1, B1, C1, and D1) in the Ottawa dataset are 99.69%, 100%, 99.69%, and 97.81%, respectively, giving an average accuracy of 99.30%. To evaluate the proposed classification model more fully, this study uses precision, recall, F1 score, and standard deviation (Std.) as evaluation indicators, defined as follows.

Precision is the proportion of correctly predicted positive examples among all predicted positive examples:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Recall is the proportion of correctly predicted positive examples among all actual positive examples:

$$\text{Recall} = \frac{TP}{TP + FN}$$

The F1 score is the harmonic mean of precision and recall:

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

The standard deviation (Std.) is computed from the classification results of five independent runs and reflects model stability.

(Note: TP: true positive; FP: false positive; and FN: false negative.)

Table 6 shows the detailed performance indicators of the model tested on the Ottawa dataset.
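The metric definitions above can be computed directly from a confusion matrix. The following is a minimal sketch, assuming rows are true classes and columns are predicted classes, that the "average" F1 is an unweighted (macro) mean over classes, and that Std. uses the sample (n − 1) denominator; the text specifies none of these, and the function names are hypothetical rather than the authors' code.

```python
from statistics import mean, stdev


def per_class_metrics(cm, k):
    """Return (precision, recall, F1) for class k.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    tp = cm[k][k]
    fp = sum(row[k] for row in cm) - tp  # predicted as k but actually another class
    fn = sum(cm[k]) - tp                 # actually k but predicted as another class
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


def macro_f1(cm):
    """Unweighted mean of the per-class F1 scores (assumed averaging scheme)."""
    return mean(per_class_metrics(cm, k)[2] for k in range(len(cm)))


def mean_and_std(run_scores):
    """Mean and sample standard deviation (n - 1 denominator) over repeated runs."""
    return mean(run_scores), stdev(run_scores)


if __name__ == "__main__":
    # Toy 2-class confusion matrix (illustrative numbers, not from the paper).
    cm = [[8, 2],
          [1, 9]]
    print(per_class_metrics(cm, 0))
    # Mean of the four per-condition accuracies reported in the text.
    print(mean([99.69, 100, 99.69, 97.81]))
```

The mean of the four reported accuracies is 99.2975, which rounds to the stated 99.30%.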

Based on these results, the CNN-IPOA-LSSVM model achieves near-perfect classification across all fault categories, with an average F1 score of 99.75% and a very low standard deviation (±0.11), clearly exceeding traditional methods. For outer ring fault (OF) detection in particular, the model attains 100% precision and recall. The model is also highly stable across the five independent runs, with a maximum fluctuation below 0.2%, which supports the benefit of combining digital twin data augmentation with IPOA parameter optimization.

5.3. Comparative Analysis of Different Models

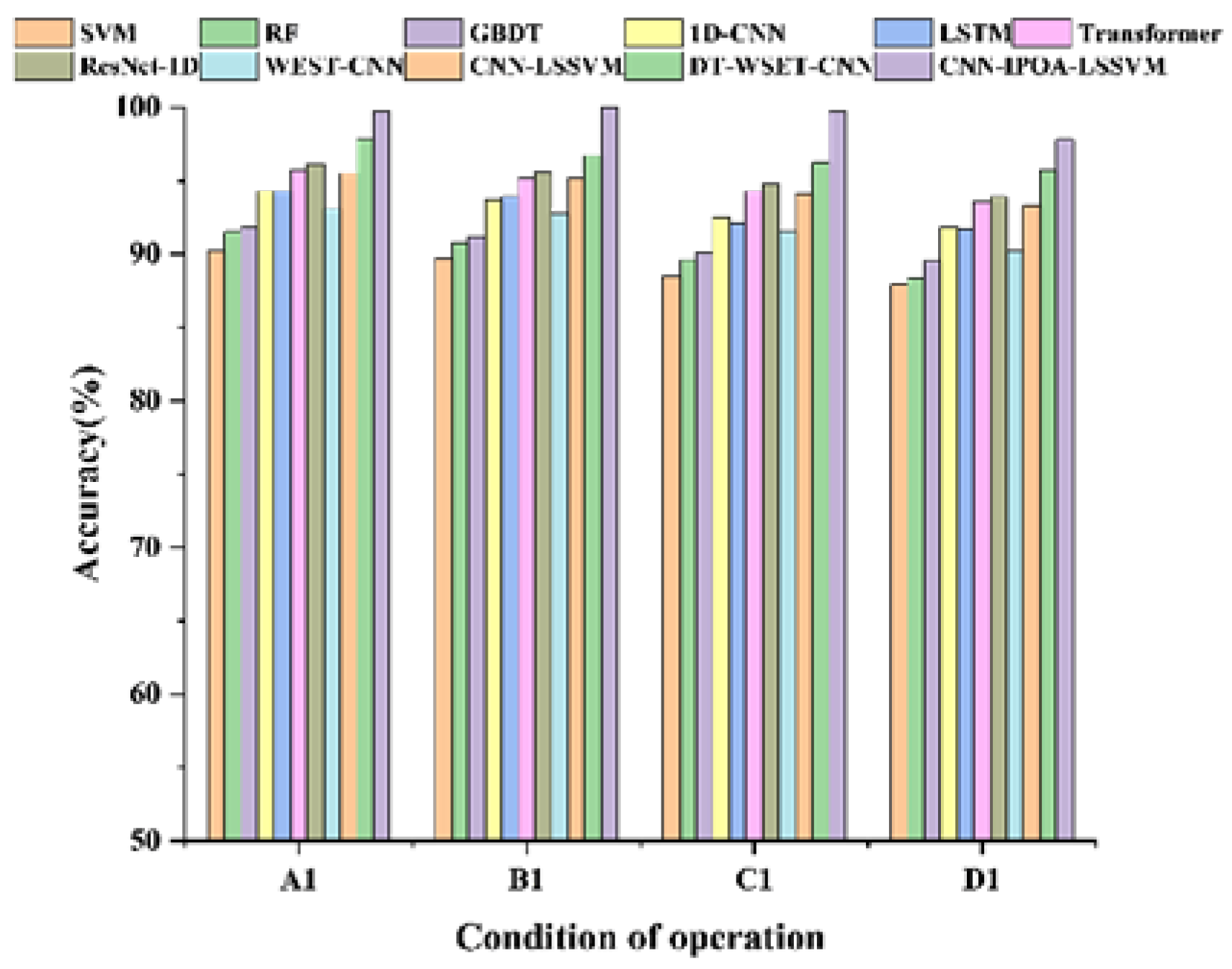

To further validate the superiority of the proposed model, it is compared with several classical fault diagnosis models: the traditional support vector machine (SVM), deep neural network (DNN), random forest (RF), one-dimensional convolutional neural network (1D-CNN), long short-term memory network (LSTM), gradient boosting decision tree (GBDT), one-dimensional residual network (1D-ResNet), and Transformer. To verify the contribution of each module, three ablation variants are also evaluated: WSET-CNN (without digital twins), CNN-LSSVM (optimized with the standard POA), and DT-WSET-CNN (without IPOA). All comparison models use the same preprocessing pipeline and the same dataset split, and their hyperparameters were tuned by grid search to keep the comparison fair. The results of the comparison experiments are shown in Figure 16, and the model training curves are shown in Figure 17.
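The text states only that the baseline hyperparameters were tuned by grid search. A minimal, library-free sketch of exhaustive grid search follows; the `evaluate` callback, the hyperparameter names `C` and `gamma`, and their candidate values are hypothetical. In practice the score would be validation accuracy on the shared data split.

```python
import itertools


def grid_search(evaluate, grid):
    """Try every combination in `grid` and keep the highest-scoring one.

    evaluate: callable mapping a dict of hyperparameters to a score (higher is better).
    grid: dict mapping each hyperparameter name to a list of candidate values.
    """
    names = sorted(grid)  # fixed order so results are reproducible
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


if __name__ == "__main__":
    # Hypothetical SVM-style grid and a toy scoring function peaking at C=10, gamma=0.1.
    grid = {"C": [1, 10, 100], "gamma": [0.01, 0.1, 1]}
    toy_score = lambda p: -(p["C"] - 10) ** 2 - (p["gamma"] - 0.1) ** 2
    print(grid_search(toy_score, grid))
```

Exhaustive search scales with the product of the grid sizes, which is why it is usually reserved for a handful of hyperparameters per baseline.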

Compared with the other methods, the CNN-IPOA-LSSVM model achieves clearly higher accuracy; all improvements below are absolute differences in percentage points.

Under the A1 speed-increase condition, it outperforms SVM (90.2%), random forest (91.5%), and GBDT (91.8%) by 9.5, 8.2, and 7.9 percentage points, respectively, and outperforms the deep learning baselines 1D-CNN (94.3%), LSTM (94.3%), Transformer (95.7%), and 1D-ResNet (96.1%) by 5.4, 5.4, 4.0, and 3.6 percentage points, respectively.

Under the B1 speed-decrease condition, the model clearly outperforms the traditional methods (SVM 89.7%, random forest 90.8%, and GBDT 91.2%), by 10.3, 9.2, and 8.8 percentage points, respectively. Against the deep learning models, the gains over 1D-CNN (93.7%), LSTM (93.9%), Transformer (95.2%), and 1D-ResNet (95.6%) are 6.3, 6.1, 4.8, and 4.4 percentage points, respectively.

Under the more complex C1 increase-then-decrease condition, the model shows stronger dynamic adaptability: it improves on the traditional models (SVM 88.5%, random forest 89.6%, and GBDT 90.1%) by 11.2, 10.1, and 9.6 percentage points and on the deep learning models (1D-CNN 92.5%, LSTM 92.1%, Transformer 94.3%, and 1D-ResNet 94.8%) by 7.2, 7.6, 5.4, and 4.9 percentage points, respectively. In the speed inflection interval (epochs 40–60) in particular, the fluctuation amplitude of the proposed model is 37.5% lower than that of 1D-ResNet, indicating that the improved optimization algorithm can effectively track the variable-speed characteristics.

Under the D1 decrease-then-increase condition, the proposed model shows the smallest performance degradation (about 1.9 percentage points relative to A1, from 99.7% to 97.8%), whereas the comparison models degrade by 3.5% on average. Specifically, it improves on SVM (87.9%), random forest (88.3%), and GBDT (89.5%) by 9.9, 9.5, and 8.3 percentage points; on 1D-CNN (91.8%) by 6.0 percentage points; and on LSTM (91.7%), Transformer (93.6%), and 1D-ResNet (93.9%) by 6.1, 4.2, and 3.9 percentage points, respectively. In this bidirectional variable-speed scenario, the augmented data generated through the digital twin cover a broader range of speed combinations, which makes the classification boundary more robust.
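The improvements quoted in this comparison are differences between reported accuracies. The short Python sketch below recomputes them from the per-condition accuracies given in the text (rounded to one decimal). The dictionary layout and function name are illustrative, not from the paper, and DNN is omitted because no per-condition accuracy is reported for it here.

```python
# Accuracies (%) per variable-speed condition, as reported in the text.
PROPOSED = {"A1": 99.7, "B1": 100.0, "C1": 99.7, "D1": 97.8}
BASELINES = {
    "SVM":          {"A1": 90.2, "B1": 89.7, "C1": 88.5, "D1": 87.9},
    "RandomForest": {"A1": 91.5, "B1": 90.8, "C1": 89.6, "D1": 88.3},
    "GBDT":         {"A1": 91.8, "B1": 91.2, "C1": 90.1, "D1": 89.5},
    "1D-CNN":       {"A1": 94.3, "B1": 93.7, "C1": 92.5, "D1": 91.8},
    "LSTM":         {"A1": 94.3, "B1": 93.9, "C1": 92.1, "D1": 91.7},
    "Transformer":  {"A1": 95.7, "B1": 95.2, "C1": 94.3, "D1": 93.6},
    "1D-ResNet":    {"A1": 96.1, "B1": 95.6, "C1": 94.8, "D1": 93.9},
}


def point_gaps(proposed, baselines):
    """Absolute improvement (percentage points) of the proposed model, per model and condition."""
    return {
        model: {cond: round(proposed[cond] - acc[cond], 1) for cond in proposed}
        for model, acc in baselines.items()
    }


if __name__ == "__main__":
    for model, row in point_gaps(PROPOSED, BASELINES).items():
        print(f"{model:>12}: {row}")
```

Rounding to one decimal removes floating-point noise such as 7.200000000000003 and matches the precision of the reported accuracies.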

Among the ablation variants, the CNN-IPOA-LSSVM model shows clear advantages in all four variable-speed conditions of the Ottawa bearing dataset: it achieves accuracies of 99.7%, 100%, 99.7%, and 97.8% in the A1 (speed increase), B1 (speed decrease), C1 (increase then decrease), and D1 (decrease then increase) conditions, respectively. Its average accuracy is 7.4 percentage points higher than that of WSET-CNN without digital twins (average 91.9%), 4.8 points higher than that of the standard POA-optimized CNN-LSSVM (average 94.5%), and 2.5 points higher than that of DT-WSET-CNN without IPOA (average 96.8%). The 100% accuracy in the B1 condition indicates that the IPOA algorithm adapts well to abrupt signal changes. In terms of training convergence, the CNN-IPOA-LSSVM model reaches 96.8% validation accuracy within 50 epochs, compared with 75 epochs for DT-WSET-CNN (without IPOA) and 85 epochs for CNN-LSSVM (standard POA), while WSET-CNN (without digital twins) had not fully converged even after 100 epochs. Its final converged accuracy reaches 99.5%, exceeding DT-WSET-CNN (96.8%), CNN-LSSVM (95.3%), and WSET-CNN (92.7%) by 2.7, 4.2, and 6.8 percentage points, respectively, with a training–validation gap of only 0.4 percentage points.
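Convergence speed in the comparison above is expressed as the number of epochs needed to reach a given validation accuracy (96.8%). A minimal sketch of that measurement follows, assuming per-epoch validation accuracies are logged as a list and assuming the training–validation gap is taken at the final epoch; the text does not say how either quantity was measured, and the function names and toy curve are hypothetical.

```python
from typing import Optional, Sequence


def epochs_to_threshold(val_acc: Sequence[float], threshold: float) -> Optional[int]:
    """1-based epoch at which validation accuracy first reaches `threshold`, else None."""
    for epoch, acc in enumerate(val_acc, start=1):
        if acc >= threshold:
            return epoch
    return None


def final_gap(train_acc: Sequence[float], val_acc: Sequence[float]) -> float:
    """Training minus validation accuracy at the last logged epoch (assumed definition)."""
    return train_acc[-1] - val_acc[-1]


if __name__ == "__main__":
    # Toy validation curve (illustrative only, not the paper's data).
    curve = [80.0, 90.0, 95.0, 96.9, 97.5]
    print(epochs_to_threshold(curve, 96.8))  # -> 4
```

A model that never reaches the threshold within the logged epochs, as reported for WSET-CNN within 100 epochs, returns `None` rather than a misleading epoch count.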

Table 7 shows the results of the significance tests for all compared models, using condition A1 as an example, where: (1) the accuracy difference (Δ%) is the average accuracy of CNN-IPOA-LSSVM minus the average accuracy of the comparison model; (2) for effect size, Cohen's d > 0.8 is considered a “large effect”; and (3) all p-values are adjusted with the Bonferroni correction to control for multiple comparisons.
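The two statistics named in the Table 7 notes can be sketched as follows, assuming each model is represented by its accuracies over the five independent runs and that Cohen's d uses the pooled standard deviation of two independent samples. The text does not name the underlying significance test, so only the effect size and the Bonferroni adjustment are shown; the p-values in the usage line are made up for illustration.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(a, b):
    """Cohen's d for independent samples a and b, using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    if pooled_var == 0:
        raise ValueError("both samples have zero variance; d is undefined")
    return (mean(a) - mean(b)) / sqrt(pooled_var)


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: each raw p multiplied by the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


if __name__ == "__main__":
    # Hypothetical raw p-values for three comparisons (illustration only).
    print(bonferroni([0.001, 0.004, 0.5]))
```

With the 11 comparisons reported here, each raw p-value would be multiplied by 11 before being compared with the 0.01 threshold.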

The statistical tests confirm that the CNN-IPOA-LSSVM model has statistically significant (p < 0.01) and practically substantial (Cohen's d > 0.9) performance advantages over all 11 comparison methods (eight benchmark models and three ablation models). At the module level, under condition A1: compared with WSET-CNN without digital twins, the improvement of 7.8 percentage points validates the critical role of virtual–physical data augmentation in feature extraction; compared with the standard POA-optimized CNN-LSSVM, the improvement of 5.2 percentage points demonstrates the value of the three IPOA enhancements (chaotic initialization, backward difference evolution, and firefly perturbation); and compared with DT-WSET-CNN without IPOA, the model still retains a 2.5-point advantage, highlighting the synergy between the parameter optimization module and the data augmentation module.